Journal:Undertaking sociotechnical evaluations of health information technologies

| Full article title | Undertaking sociotechnical evaluations of health information technologies |
|---|---|
| Journal | Informatics in Primary Care |
| Author(s) | Cresswell, Kathrin M.; Sheikh, Aziz |
| Author affiliation(s) | School of Health in Social Science, University of Edinburgh; Centre for Population Health Sciences, University of Edinburgh |
| Primary contact | Email: kathrin.beyer@ed.ac.uk |
| Year published | 2014 |
| Volume and issue | 21 (2) |
| Page(s) | 78–83 |
| DOI | 10.14236/jhi.v21i2.54 |
| ISSN | 2058-4563 |
| Distribution license | Creative Commons Attribution 2.5 Generic |
| Website | http://hijournal.bcs.org/index.php/jhi/article/view/54/80 |
| Download | http://hijournal.bcs.org/index.php/jhi/article/download/54/79 (PDF) |

Abstract

There is increasing international recognition that the evaluation of health information technologies should involve assessments of both the technology and the social/organisational contexts into which it is deployed. There is, however, a lack of agreement on definitions, a lack of published guidance on how such ‘sociotechnical evaluations’ should be undertaken, and little clarity about how they differ from other approaches. We explain what sociotechnical evaluations are, consider the contexts in which these are most usefully undertaken, explain what they entail, reflect on the potential pitfalls associated with such research, and suggest possible ways to avoid these.

Keywords: Evaluation, health information technology, sociotechnical

Introduction

Internationally, there is a growing political drive to implement ever more complex information technologies into healthcare settings, in the hope that these will help improve the quality, safety, and efficiency of healthcare.[1] There is in parallel a growing appreciation that such interventions need to be formally evaluated, as the benefits of technologies should not simply be assumed. Complex systems such as electronic health records, electronic prescribing, and telemonitoring technologies are often very costly to procure and maintain, and so, even if effectiveness in relation to the quality of care is established, cost effectiveness needs to be examined. There is now also a growing body of work indicating that technologies may inadvertently introduce new risks, largely arising from difficulties in integrating systems with existing work processes.[2]

The study of the introduction of technological innovations into healthcare settings should therefore, particularly if the technology is likely to be disruptive, offer an opportunity to understand and evaluate the changing inter-relationships between technology and human/organisational (or socio-) factors. Whilst there is a growing theoretical and empirical evidence base on this subject, there is as yet little practical guidance explaining how such sociotechnical evaluations should be undertaken.[3][4][5][6][7][8][9]

Although there is an increasing appreciation of the complex processes involved in using and implementing technology in social contexts to improve the safety and quality of healthcare[3][4][5][6][7][8][9], current sociotechnical approaches do not clearly distinguish themselves from other methodologies such as usability testing and context-sensitive methods of investigation. This may be due to the lack of agreed definitions of what constitutes a sociotechnical approach to evaluation and to the range of disciplinary backgrounds involved.

Drawing on our experience of conducting a number of recent studies of complex health information technologies[10][11][12][13][14][15][16], we aim to provide a practical guide to undertaking sociotechnical evaluations: we consider the contexts in which such approaches are most usefully employed, explain what sociotechnical evaluations involve, and reflect on the potential pitfalls associated with such work and how these might be avoided.

What are sociotechnical evaluations and when should this approach be used?

Sociotechnical perspectives assume that ‘organisational and human (socio) factors and information technology system factors (technical) are inter-related parts of one system, each shaping the other.’[12] In line with this, sociotechnical evaluations involve researching the way technical and social dimensions change and shape each other over time.[17][18][19]

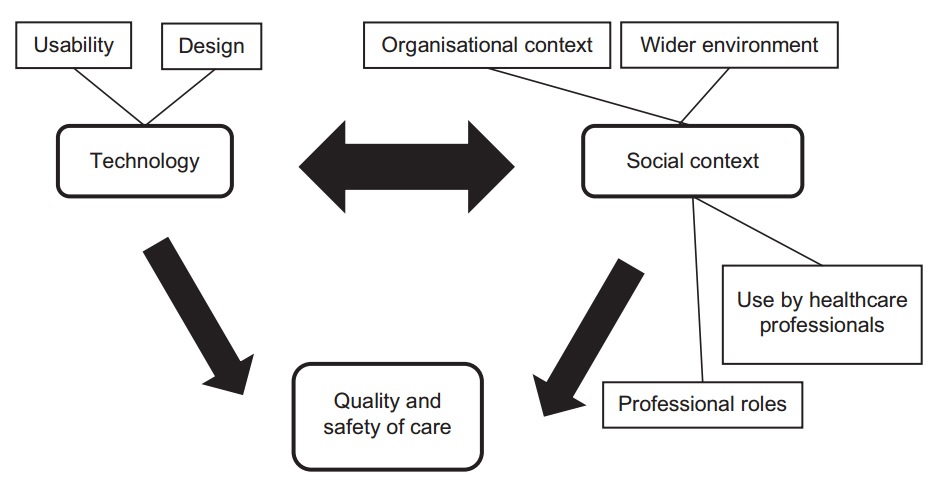

We summarise some typical components of a sociotechnical evaluation in Box 1. This approach is potentially most appropriate when there is a complex, iterative relationship between the technology and social processes in the environment into which it is introduced. Conversely, it is less useful when there is likely to be a simple, linear cause-and-effect relationship between the technology and social processes. The dimensions explored in an evaluation may encompass how technologies change social processes (e.g. how introducing electronic health records changes the way care is delivered), and how technologies themselves can change over time as a result of user/organisational requirements (e.g. ongoing customisation to improve usability) (Figure 1).[5] Such adaptation is important because, if the use of the technology results in perceived adverse consequences for the delivery of care, the technology is likely to frustrate busy clinical staff and may be abandoned altogether.[19]

A further defining component of sociotechnical evaluations is the attempt to study processes associated with the introduction of a new technology in social/organisational settings, as these mediators can offer important insights into potentially transferable lessons.[12][14][15][20] This focus on processes is important, because of the increasing number of technological functionalities and vast differences in implementation contexts. In contrast, evaluations that focus solely on investigating the impact of technology on outcomes often have limited generalisability beyond the immediate clinical setting in which the research was undertaken.


Figure 1. Dimensions commonly explored in sociotechnical evaluations of health information technologies

What study designs lend themselves to sociotechnical evaluations?

The focus on investigating and exploring processes lends itself best to a naturalistic approach, but sociotechnical evaluations may also incorporate aspects of more positivist designs, particularly in the context of undertaking quasi-experimental studies, when investigators do not have direct control over the technology that is to be implemented.[15]

Sociotechnical evaluations should ideally be undertaken using a prospective design, as this can help to map and understand the interplay between the technology and the social context, and thereby identify important insights into how the technology is received and used.[11][12][14][15][16][20][21]

Any appropriate design with a longitudinal dimension that allows mediating processes to be understood in detail is therefore potentially suitable for a sociotechnical evaluation. Mixed-methods sociotechnical evaluations are becoming more popular and are likely to represent an important expansion area for this research approach.[11]

What data to collect and how to analyse these

A range of qualitative and quantitative data may be gathered during sociotechnical evaluations (see Box 1). Qualitative data can help to shed light on social processes and perceived technical features such as individual attitudes and expectations (interviews and focus groups), planned organisational strategies and policies (documents), and use of technology in context (observations). These data may be complemented by quantitative work investigating the measurable impacts of technology on social systems. For instance, collecting health economic and cost data can provide insights into investment and maintenance costs, benefits, and returns on investment. Quantitative data collection can also help to explore the impact of systems on the safety and quality of care, e.g. by measuring reductions in errors and increased efficiencies associated with the move from paper-based to electronic systems.
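As a hedged illustration of the quantitative strand described above, the following minimal Python sketch computes the relative reduction in an error rate between a paper-based period and an electronic period. The `PeriodCounts` structure, the `relative_reduction` function, and all numbers are hypothetical and are not taken from any of the studies cited here; a real evaluation would also need to account for confounding, secular trends, and uncertainty.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PeriodCounts:
    """Counts for one observation period (hypothetical structure)."""
    errors: int         # e.g. errors identified during the period
    opportunities: int  # e.g. orders or records reviewed during the period

    @property
    def rate(self) -> float:
        """Errors per opportunity for this period."""
        if self.opportunities <= 0:
            raise ValueError("opportunities must be positive")
        return self.errors / self.opportunities


def relative_reduction(before: PeriodCounts, after: PeriodCounts) -> float:
    """Relative reduction in error rate from the 'before' to the 'after' period."""
    if before.rate == 0:
        raise ValueError("baseline error rate is zero; relative reduction is undefined")
    return (before.rate - after.rate) / before.rate


# Illustrative numbers only, not taken from any study.
paper = PeriodCounts(errors=60, opportunities=1000)
electronic = PeriodCounts(errors=45, opportunities=1000)
reduction = relative_reduction(paper, electronic)
print(f"Relative reduction in error rate: {reduction:.0%}")
```

A single before/after comparison of this kind says nothing about why the rate changed, which is why such figures are best read alongside the qualitative data on how the technology is used in context.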

A key distinctive feature in relation to analysis is the focus on exploring the dynamic relationship between technical and social factors over time. It is therefore important to obtain insights into technical characteristics and social processes before the introduction of the new technology into a new social context, changes to technical and social aspects once the technology is introduced, potential underlying relationships between technical and social dimensions, and the changes that occur over time as the technology becomes more embedded within the new social context. Learning across implementations can be promoted by identifying what mechanisms underlie observations and hypothesising if/how these may be applicable to other contexts. This may be informed by a realistic evaluation perspective, assessing contexts (existing and desirable conditions for certain outcomes to be produced), mechanisms (potential causal pathways that may lead to an outcome), and outcomes (the observable effects produced).[22] The approach may help to overcome traditional boundaries associated with the separation of technical and social spheres, as all three aspects (contexts, mechanisms, and outcomes) are neither distinctively technical nor distinctively social — they emerge out of the interplay of both.
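One possible way to organise findings along these lines is to record each observation as a context, mechanism, and outcome configuration, tagged with the site and the phase of implementation, and then look for mechanisms that recur across sites. The sketch below is an assumption about how this could be done, not a method described in the sources; the class `CMOConfiguration`, the helper functions, and the example entries are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CMOConfiguration:
    """One coded observation (hypothetical structure)."""
    site: str       # organisation where the observation was made
    phase: str      # e.g. "pre-implementation", "early use", "embedded use"
    context: str    # conditions under which the outcome was produced
    mechanism: str  # potential causal pathway leading to the outcome
    outcome: str    # observable effect


def group_by_mechanism(configs):
    """Group configurations by mechanism so recurring pathways become visible."""
    grouped = defaultdict(list)
    for config in configs:
        grouped[config.mechanism].append(config)
    return dict(grouped)


def recurring_mechanisms(configs, min_sites=2):
    """Return mechanisms observed in at least `min_sites` distinct sites."""
    return sorted(
        mechanism
        for mechanism, items in group_by_mechanism(configs).items()
        if len({item.site for item in items}) >= min_sites
    )


# Invented example entries, for illustration only.
configs = [
    CMOConfiguration("Hospital A", "early use", "busy ward rounds",
                     "workarounds to save time", "incomplete electronic records"),
    CMOConfiguration("Hospital B", "early use", "limited number of terminals",
                     "workarounds to save time", "parallel paper notes"),
    CMOConfiguration("Hospital A", "embedded use", "local customisation of screens",
                     "improved fit with workflow", "fewer workarounds"),
]
recurring = recurring_mechanisms(configs)
print(recurring)
```

Mechanisms that recur across sites are candidates for transferable lessons, while the `phase` field keeps the longitudinal dimension visible, so that changes between early and embedded use can be compared.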

Potential pitfalls and how to avoid these

There are, however, a number of challenges associated with sociotechnical evaluations of health information technologies (summarised in Table 1). These stem from a lack of existing agreement on various components of a sociotechnical system, possible study designs, and data analysis strategies. They range from practical issues surrounding the management of the work itself and coping with shifting implementation timelines, to more conceptual challenges surrounding the extraction of a ‘bottom line’, and the pragmatic use of theory.


Practical challenges associated with sociotechnical evaluations

Implementations of complex health information technologies such as electronic health records and electronic prescribing systems affect many aspects of organisational functioning and therefore tend to require complex evaluations. This complexity is compounded by increasing organisational size and/or if more than one organisation is studied. Specific expertise needed in the research team will vary depending on the research questions being studied and methods employed, but may include methodological (qualitative, statistical, epidemiological, and health economic), theoretical (organisational change, management, and human factors), managerial (applied team management), healthcare professional (doctors, nurses, and allied health professions), and technical (information technology specialists and system developers) expertise. In our experience, exploiting individual strengths whilst keeping efforts coordinated is greatly facilitated by assigning lead researchers to individual aspects of the work investigating different impacts/consequences (e.g. cost and changes to individual work practices), and to the individual organisations to be studied.

A further practical challenge that evaluators are likely to face is the impact of shifting implementation landscapes and timelines, which may mean that original methodologies have to be adapted.[12] This is a common problem in evaluations of technical and/or health policy interventions, where data collection activities depend on the planned introduction of technologies.[14] As a result of expanding timelines, the originally anticipated quantitative before, during, and after assessments of technology introduction may not be possible, and evaluators may lack opportunities to investigate the systems once they are routinely used within organisations.[11][12][13][14] Longitudinal evaluative work over extended periods of time is therefore important.[16] This should be characterised by early and close collaboration between evaluators and decision makers, so that changes in strategic direction and potential consequences for evaluation activities can be planned for in advance.[15]

Conceptual challenges associated with sociotechnical evaluations

There are also a number of conceptual challenges associated with conducting sociotechnical evaluations of complex health information technologies. The first relates to the researching of context surrounding the technology. Here, it is important to explore the use of technology by individuals, but also the wider environment in which these processes are situated (e.g. professional, organisational, and political contexts), as this can impact significantly on the way technology is adopted and changed over time.[2][14][15] With this in mind, it is, for example, often insufficient to only explore healthcare professional perspectives on technology implementation and adoption; there is also a need to gain insights into managerial and organisational perspectives to get a more rounded understanding of implementation processes and reasons underlying strategic decisions. Similarly, broader political and commercial developments may play a role in shaping user experiences and the deployment/design of technologies.[12][19]

Another common challenge facing sociotechnical evaluations is the extraction of a ‘bottom line’. Large complex processes tend to be most accurately described with the help of large complex stories, but there is also a need to ensure that messages emerging from evaluations are heard by decision makers, and this is often only possible with a limited number of straightforward key messages. In the face of this pressure, many evaluators have resorted to making bold statements surrounding the ‘success’ or ‘failure’ of evaluations of complex technologies, but in our experience, this is neither constructive in relation to future decision making nor accurate in representing reality. Evaluators need to acknowledge the existence of different notions of success as well as different temporal dimensions associated with perceived ‘failures’ (e.g. something that was initially observed as a ‘failure’ may on reflection turn out to be a ‘success’). A better way to conceptualise outputs of sociotechnical evaluations is through a summary of key lessons learned, outlining how and why these may be transferable to other settings.

The final conceptual challenge takes a more theoretical angle. It is now commonly recognised that theory can help to develop transferable lessons between settings, and there are many theories that draw on sociotechnical principles.[23] However, different existing research traditions vary significantly in the way technologies, processes, and stakeholders are conceptualised. This results in the lack of an overall framework through which implementations can be examined, although some existing approaches are summarised in Box 2.[24] In addition, theoretical lenses are often hard to understand for non-academic audiences and lack pragmatism. This inhibits learning from experience and also widens the existing gap between academic research and frontline practice. With this in mind, it is key not to ignore the issue surrounding theory as is so often done in existing evaluations, particularly those carried out by healthcare organisations that lack the necessary expertise. More usable integrative theoretically informed evaluation frameworks are currently in development, but in the meantime, we advocate drawing on those that encompass not only social and technical dimensions, but also the wider contexts in which local developments are taking place (Box 2).


Conclusions

Sociotechnical evaluations are a powerful tool to research complex technological change, particularly if the aim is to investigate non-linear relationships between technology and social processes. However, there are also challenges associated with their conduct as they involve investigating complex processes over time and within complex changing environments. Owing to a lack of agreement on the defining components of such work, the existing literature has not always fully appreciated these challenges.

We hope that our suggested definitions, experiences, and reflections will contribute to a more integrated approach to conducting sociotechnical evaluations of technological systems in healthcare settings. Given the very considerable policy interest and substantial financial resources being expended in implementing health information technologies in an attempt to achieve the triple aims of enhancing the safety/quality of care, improving health outcomes, and maximising the efficiency of care, we believe that greater use of sociotechnical evaluations will help to understand key processes in the interrelationships between technology, people, and organisations. This understanding will help to derive important and potentially transferable lessons.

Contributors and sources

Aziz Sheikh conceived this work and is the guarantor. Aziz Sheikh was the principal investigator of the project discussed and is currently leading a National Institute for Health Research-funded national evaluation of electronic prescribing and medicines administration systems. He is supported by a Harkness Health Policy and Practice fellowship from The Commonwealth Fund. Kathrin M Cresswell was employed as a researcher and led on the write-up and drafting the initial version of the paper, with Aziz Sheikh commenting on various drafts.

Acknowledgements

The authors are very grateful to all participants who kindly gave their time and to the extended project team of the work drawn on. The authors are also grateful for the helpful comments on an earlier version of this manuscript by Dr. Allison Worth.

Funding

This work has drawn on research funded by the NHS Connecting for Health Evaluation Programme (NHS CFHEP 001, NHS CFHEP 005, NHS CFHEP 009, NHS CFHEP 010) and the National Institute for Health Research (NIHR) under its Programme Grants for Applied Research scheme (RP-PG-1209-10099). The views expressed are those of the authors and not necessarily those of the NHS, the NIHR, or the Department of Health. AS is supported by The Commonwealth Fund, a private independent foundation based in New York City. The views presented here are those of the author and not necessarily those of The Commonwealth Fund, its directors, officers, or staff.

Competing interest declaration

The authors have no competing interests.

References

- [1] Coiera, E. (2009). "Building a National Health IT System from the middle out". Journal of the American Medical Informatics Association 16: 271–3. doi:10.1197/jamia.M3183. PMC2732241. PMID 19407078. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2732241.

- [2] Greenhalgh, T.; Stones, R. (2010). "Theorising big IT programmes in healthcare: strong structuration theory meets actor-network theory". Social Science and Medicine 70: 1285–94. doi:10.1016/j.socscimed.2009.12.034. PMID 20185218.

- [3] Catwell, L.; Sheikh, A. (2009). "Evaluating eHealth interventions: the need for continuous systemic evaluation". PLoS Medicine 6: e1000126. doi:10.1371/journal.pmed.1000126. PMC2719100. PMID 19688038. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2719100.

- [4] Berg, M.; Aarts, J.; van der Lei, J. (2003). "ICT in health care: socio-technical approaches". Methods of Information in Medicine 42: 297–301. PMID 14534625.

- [5] Cresswell, K.; Worth, A.; Sheikh, A. (2010). "Actor-network theory and its role in understanding the implementation of information technology developments in healthcare". BMC Medical Informatics and Decision Making 10: 67. doi:10.1186/1472-6947-10-67. PMC2988706. PMID 21040575. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2988706.

- [6] "Health IT and Patient Safety: Building Safer Systems for Better Care" (PDF). Institute of Medicine. 2011. http://www.iom.edu/~/media/Files/Report%20Files/2011/Health-IT/HIT%20and%20Patient%20Safety.pdf. Retrieved 16 July 2013.

- [7] Cherns, A. (1987). "Principles of sociotechnical design revisited". Human Relations 40: 153–61. doi:10.1177/001872678704000303.

- [8] Clegg, C.W. (2000). "Sociotechnical principles for system design". Applied Ergonomics 31: 463–77. doi:10.1016/S0003-6870(00)00009-0.

- [9] Harrison, M.I.; Koppel, R.; Bar-Lev, S. (2007). "Unintended consequences of information technologies in health care—an interactive socio-technical analysis". Journal of the American Medical Informatics Association 14: 542–9. doi:10.1197/jamia.M2384. PMC1975796. PMID 17600093. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1975796.

- [10] Black, A.D.; Car, J.; Pagliari, C.; Anandan, C.; Cresswell, K.; Bokun, T.; et al. (2011). "The impact of eHealth on the quality and safety of health care: a systematic overview". PLoS Medicine 8: e1000387.

- [11] Sheikh, A.; Cornford, T.; Barber, N.; Avery, A.; Takian, A.; Lichtner, V.; et al. (2011). "Implementation and adoption of nationwide electronic health records in secondary care in England: final qualitative results from a prospective national evaluation in “early adopter” hospitals". British Medical Journal 343: d6054. doi:10.1136/bmj.d6054. PMC3195310. PMID 22006942. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3195310.

- [12] Robertson, A.; Cresswell, K.; Takian, A.; Petrakaki, D.; Crowe, S.; Cornford, T.; et al. (2010). "Implementation and adoption of nationwide electronic health records in secondary care in England: qualitative analysis of interim results from a prospective national evaluation". British Medical Journal 341: c4564.

- [13] Takian, A.; Petrakaki, D.; Cornford, T.; Sheikh, A.; Barber, N. (2012). "Building a house on shifting sand: methodological considerations when evaluating the implementation and adoption of national electronic health record systems". BMC Health Services Research 12: 105–29. doi:10.1186/1472-6963-12-105. PMC3469374. PMID 22545646. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3469374.

- [14] Westbrook, J.; Braithwaite, J.; Georgiou, A.; Ampt, A.; Creswick, A.; Coiera, E.; et al. (2007). "Multimethod evaluation of information and communication technologies in health in the context of wicked problems and sociotechnical theory". Journal of the American Medical Informatics Association 14: 746–55. doi:10.1197/jamia.M2462. PMC2213479. PMID 17712083. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2213479.

- [15] Greenhalgh, T.; Hinder, S.; Stramer, K.; Bratan, T.; Russell, J. (2010). "Adoption, non-adoption, and abandonment of a personal electronic health record: case study of HealthSpace". British Medical Journal 341: c5814.

- [16] Barber, N.; Cornford, T.; Klecun, E. (2007). "Qualitative evaluation of an electronic prescribing and administration system". Quality and Safety in Health Care 16: 271–8. doi:10.1136/qshc.2006.019505. PMC2464937. PMID 17693675. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2464937.

- [17] Berg, M. (1999). "Patient care information systems and health care work: a sociotechnical approach". International Journal of Medical Informatics 55: 87–101. doi:10.1016/S1386-5056(99)00011-8.

- [18] Cornford, T.; Doukidis, G.; Forster, D. (1994). "Experience outcome with a structure, process and framework for evaluating an information system". Omega, International Journal of Management Science 22: 491–504. doi:10.1016/0305-0483(94)90030-2.

- [19] Cresswell, K.; Worth, A.; Sheikh, A. (2012). "Comparative case study investigating sociotechnical processes of change in the context of a national electronic health record implementation". Health Informatics Journal 18: 251–70. doi:10.1177/1460458212445399. PMID 23257056.

- [20] Crowe, C.; Cresswell, K.; Robertson, R.; Huby, G.; Avery, A.; Sheikh, A. (2011). "The case study approach". BMC Medical Research Methodology 11: 100. doi:10.1186/1471-2288-11-100. PMC3141799. PMID 21707982. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3141799.

- [21] Cresswell, K.M.; Bates, D.W.; Sheikh, A. (2013). "Ten key considerations for the successful implementation and adoption of large-scale health information technology". Journal of the American Medical Informatics Association 20: e9–13.

- [22] Pawson, R.; Tilley, N. (1997). Realistic Evaluation. Sage Publications.

- [23] Wacker, J.G. (1998). "A definition of theory: research guidelines for different theory-building research methods in operations management". Journal of Operations Management 16: 361–85. doi:10.1016/S0272-6963(98)00019-9.

- [24] Greenhalgh, T.; Potts, H.; Wong, G.; Bark, P.; Swinglehurst, D. (2009). "Tensions and paradoxes in electronic patient record research: a systematic literature review using the meta-narrative method". Milbank Quarterly 87: 729–88. doi:10.1111/j.1468-0009.2009.00578.x. PMC2888022. PMID 20021585. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2888022.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation.