Journal:Cybersecurity impacts for artificial intelligence use within Industry 4.0

| Full article title | Cybersecurity impacts for artificial intelligence use within industry 4.0 |
|---|---|
| Journal | Scientific Bulletin |
| Author(s) | Dawson, Maurice |
| Author affiliation(s) | Illinois Institute of Technology |
| Primary contact | Email: mdawson2 at iit dot edu |
| Year published | 2021 |
| Volume and issue | 26(1) |
| Page(s) | 24–31 |
| DOI | 10.2478/bsaft-2021-0003 |
| ISSN | 2451-3148 |
| Distribution license | Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International |
| Website | https://www.sciendo.com/article/10.2478/bsaft-2021-0003 |
| Download | https://www.sciendo.com/backend/api/download/file?packageId=60d501ad0849e84dbf047e44 (PDF) |

Abstract

In today’s modern digital manufacturing landscape, new and emerging technologies can shape how an organization can compete, while others will view those technologies as a necessity to survive, as manufacturing has been identified as a critical infrastructure. Universities struggle to hire university professors that are adequately trained or willing to enter academia due to competitive salary offers in the industry. Meanwhile, the demand for people knowledgeable in fields such as artificial intelligence, data science, and cybersecurity continuously rises, with no foreseeable drop in demand in the next several years. This results in organizations deploying technologies with a staff that inadequately understands what new cybersecurity risks they are introducing into the company. This work examines how organizations can potentially mitigate some of the risk associated with integrating these new technologies and developing their workforce to be better prepared for looming changes in technological skill need. With the anticipation of over 10% growth in organizations deploying artificial intelligence in the near term, the current cybersecurity workforce needs are lacking over half a million trained people. With this struggle to find a viable workforce apparent, this research paper aims to serve as a guide for information technology (IT) managers and senior management to foresee the cybersecurity risks that will result from the incorporation of these new technological advances into the organization.

Keywords: artificial intelligence, cybersecurity, workforce, risk management

Introduction

Global research and advisory firm Gartner’s 2019 Chief Information Officer (CIO) Agenda survey showed demand for artificial intelligence (AI) implementation within businesses had grown from 4% to 14% (Goasduff, 2019). This survey represented over 80 organizations and 3,000 respondents, showing the transition to a new era in information technology (IT) as digitalization quickly continues.[1] Meanwhile, in fields such as cybersecurity within the United States (U.S.), websites such as CyberSeek show over 500,000 total cybersecurity job openings, with the top three titles being "cybersecurity engineering," "cybersecurity analyst," and "network engineer / architect."[2] The same site shows that the supply of these workers is deficient. The cybersecurity workforce supply/demand ratio has a national average of 2.0, while the national average for all jobs in the U.S. is 4.0.[2]

Pinzone et al. looked into the technical skills needed, and "data science management" was one of the five organizational areas affected by Industry 4.0.[3] For data science management, some of the identified skills were data storage use, cloud computing use, and analytical application and tool development in languages such as Python.[3] For this skill, however, there is no mention of addressing security while developing these needed data science applications, all while new vulnerabilities are found within the code of numerous applications.[4] In fact, there is no mention of secure use of these tools under "data science management" and three other organizational areas described by Pinzone et al. However, cybersecurity is mentioned in relation to privacy, safety, and security management under the organizational area "IT-OT integration management."[3]

Industry 4.0

Manufacturing has undergone many industrial revolutions that have changed the ability to produce goods, as shown in Figure 1. Technological advances have made these industrial revolutions possible. The Third Industrial Revolution introduced computers and automation, whereas Industry 4.0 (often referred to as the Fourth Industrial Revolution) introduced cyber-physical systems. The need for further automation and unparalleled levels of data exchange has brought about multiple changes. These changes come in the form of internet-enabled hyperconnected devices found in the internet of things (IoT) and the internet of everything (IoE) and are changing the manufacturing industry in terms of what can be accomplished.


Manufacturing is but one of many industries looking to incorporate IoT and other internet-enabled devices. However, while the manufacturing industry has started to incorporate this new technology, related security incidents have arisen. Countries like the United Kingdom (U.K.) have reported such incidents, including eighty-five manufacturing plants falling victim to cyber-attacks in 2018.[5] In the Middle East and Africa, the attacks have ranged from the leak of online dating profiles to oil refineries in Saudi Arabia. With cyber-attacks continuing to rise from year to year, there is a push to incorporate more sophisticated security technology into manufacturing.

The promise of AI in manufacturing

In 2016, there were two reports from the U.S. White House describing the promise and the future of AI as it affects automation and the economy.[6][7] Specifically, some of the promised growth could be achieved by adopting technologies such as robots and systems that allow for massive improvements in the supply chain.[6] The use of AI in Industry 4.0-based manufacturing systems is still emerging, and lots of work remains to be done to ensure their proper development and implementation.[8] Ultimately, the goal is to produce more goods with fewer employed workers in the facility, which would enable economic growth globally. The National Institute of Standards and Technology (NIST) shows that the significant benefits of AI include a rise in productivity, more efficient resource use, and increased creativity. However, downsides include the negative impact on the job market and its failure to address inequality.

Evolving environments

With organizations pushing for more connectivity, and thereby creating more complexity amongst hyperconnected systems, organizational leadership must understand what the potential results are for their organization. As the push for a competitive edge requires data scientists within an organization to perform statistical analysis on more data to reveal how the resulting information could be harnessed, it becomes essential to consider the security implications of adopting more Industry 4.0 technologies. Truth be told, the Fourth Industrial Revolution consists of high levels of automation and unparalleled data exchange levels with unknown security risks.[9]

Additionally, with the lockdowns associated with Coronavirus disease 2019 (COVID-19), remote work has been strongly encouraged in some metropolitan regions within the U.S. An increased need for social distancing to keep employees safe brought with it growth and expansion in remote system management, virtual collaboration, and cloud computing services. With many systems already poorly protected, these added complexities have only added to the threat landscape. Researchers have already debated how the COVID-19 crisis is challenging technology and how it could accelerate the revolution.[10] At the same time, the number of cybersecurity attacks has risen since the global pandemic started, including attacks on the World Health Organization[11], with overall attacks rising by six times the usual levels for hacking and phishing attempts.[12] These attacks have been felt among all industries, from manufacturing to healthcare.

Understanding the risks

Organizational cybersecurity risks for AI fall into three areas (see Table 1). The first area is people, with one of the risks being associated with inadequately trained personnel. Inadequate training can lead to falling for deepfakes and allowing authentication to rely solely on stored credentials that another user could obtain through circumvention. Misplaced trust is yet another result of a corporate roll-out plan that is not inclusive and does not account for issues that will come up for those who are ultimately impacted by the implementation of this technology. The second area of risk involves organizational processes. Automated processes that get implemented must incorporate proper security controls. If there are poor security controls in the AI and other portions of the overall manufacturing systems, this will increase the threat landscape. The last area of risk is technology, which when implemented must undergo full system testing, which includes testing in a sandbox environment before deployment. Periodic testing needs to occur to ensure the newly released and older Common Weakness Enumerations (CWEs) do not affect the system's security posture. However, testing falls into the risk of the process, as the organization would need to have selected or designed a process that consists of frequent and in-depth testing.


When discussing risk, it is essential to note that people are also responsible for developing AI, so having competent developers means a lot to how the AI will ultimately behave. The design of complex algorithms that give life to the system is solely dependent upon the skills of the engineers. For years, people have wrestled with AI's capabilities and exactly where that would lead society.[13] However, the thing that researchers did not place enough thought into was how to equip those ultimately responsible for the AI systems to defend them. For the staff that will manage their manufacturing floors, it is imperative that they understand IoT and how AI ultimately changes the ecosystem.

After reviewing numerous current programs in managerial education, such as the Master of Business Administration (MBA) advanced degree, the majority of industrial engineering courses fail to address cybersecurity in the curriculum. A review of over 40 MBA programs shows that the technical coursework may have a course that deals with information systems (IS) and a small section dedicated to cybersecurity in a high-level, generalized scope. This is inadequate and not focused enough to have those insights necessary to tackle future challenges.

Training is another critical factor in executing organizational processes regarding technology.[14] For any selected process to work effectively and efficiently, those responsible for carrying out the process should be trained in it and aware of how it actually works. It's already challenging enough to insert new changes into the organization, but training must be conducted in a manner that still allows retention.

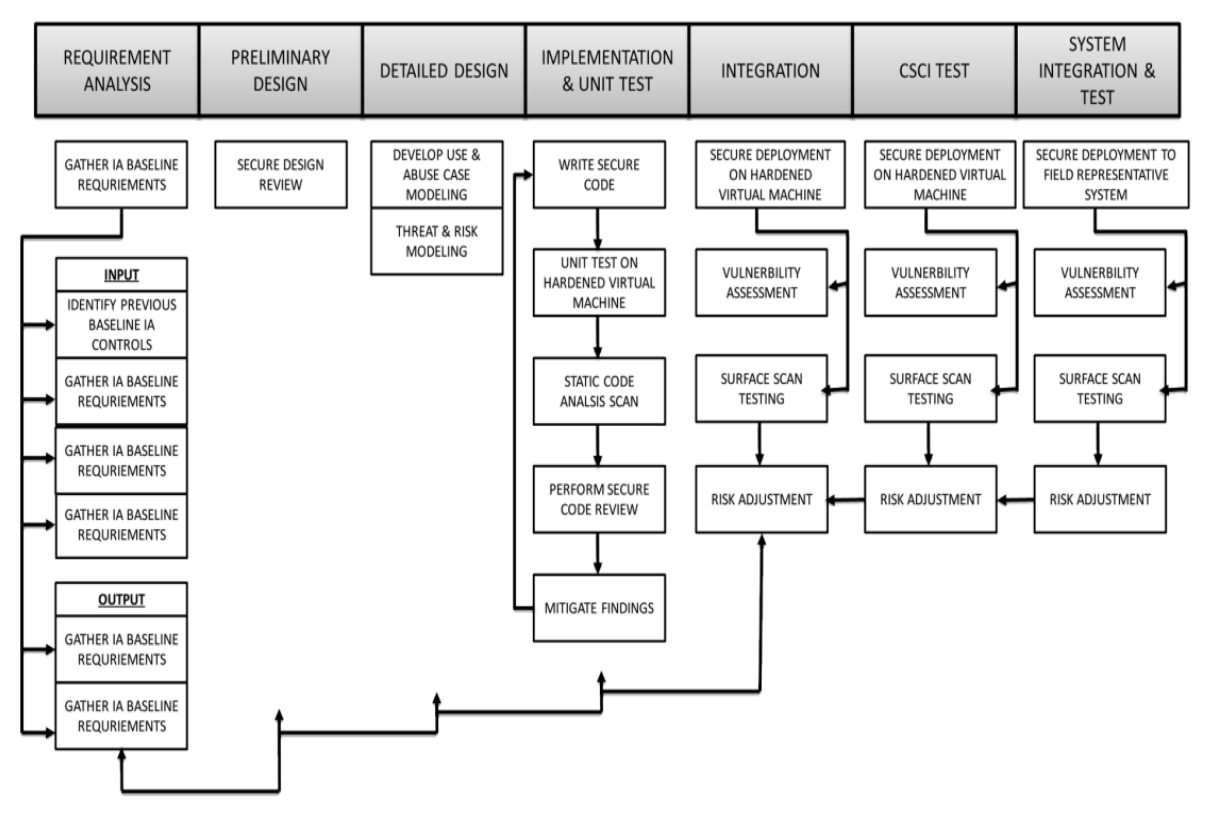

For designing the AI, developing security throughout the lifecycle is vital, as demonstrated in Figure 2. This means there needs to be well-defined requirements and interactive testing of software. Doing this allows for proper inspection of the code to ensure there are no backdoors, dead code that allows for exploitation, or hidden malicious programs.


Technology has been identified as the final area of risk to address. As managers and senior leaders wish to add these technologies, several things can be done. Managing the supply chain and the acquisition of systems is a risk within technology. Not knowing the origin of a particular piece of code or hardware poses a risk, as that specific item may harbor a quality or security vulnerability that affects the more extensive system. Imagine the case of a critical algorithm being developed by a nation-state that ends up rerouting critical data or causing a catastrophic event at a facility, such as a fire due to overheating.
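One way to act on the provenance concern above is to compare each delivered file against a digest published by its supplier before the file is allowed onto the manufacturing network. The following is a minimal Python sketch under stated assumptions, not a method described in the original paper: the function names `sha256_of_file` and `verify_component`, and the idea of a trusted digest supplied out of band, are hypothetical illustrations. Only the standard-library `hashlib`, `hmac`, and `pathlib` calls are real.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path, chunk_size=65536):
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_component(path, expected_digest):
    """Hypothetical check: True only if the file matches the trusted digest.

    `expected_digest` is assumed to be a hex string obtained from the
    supplier through a separate, trusted channel.
    """
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison of the two strings
    return hmac.compare_digest(actual, expected_digest.lower())
```

A matching digest only shows that the file is the one the supplier published; it says nothing about whether the supplier's code is itself trustworthy, so a check like this would complement, not replace, the testing discussed earlier.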

Importance of manufacturing in the U.S.

In February 2019, the Executive Office of the President issued Executive Order (EO) 13859 titled Maintaining American Leadership in Artificial Intelligence.[15] It called for promoting AI research and innovation while protecting American AI. Around the same time, Putin and Russia reportedly wanted to be the global leader for AI and use it in AI-driven asymmetric warfare.[16] However, after investigating Russia's investments in AI, the country appeared to fall far behind countries like China and the U.S.[16] Countries continue to scramble to ensure they are global players in AI with significant investments, though they arguably are not doing enough to promote cybersecurity within the AI and other computing systems of the manufacturing industry.

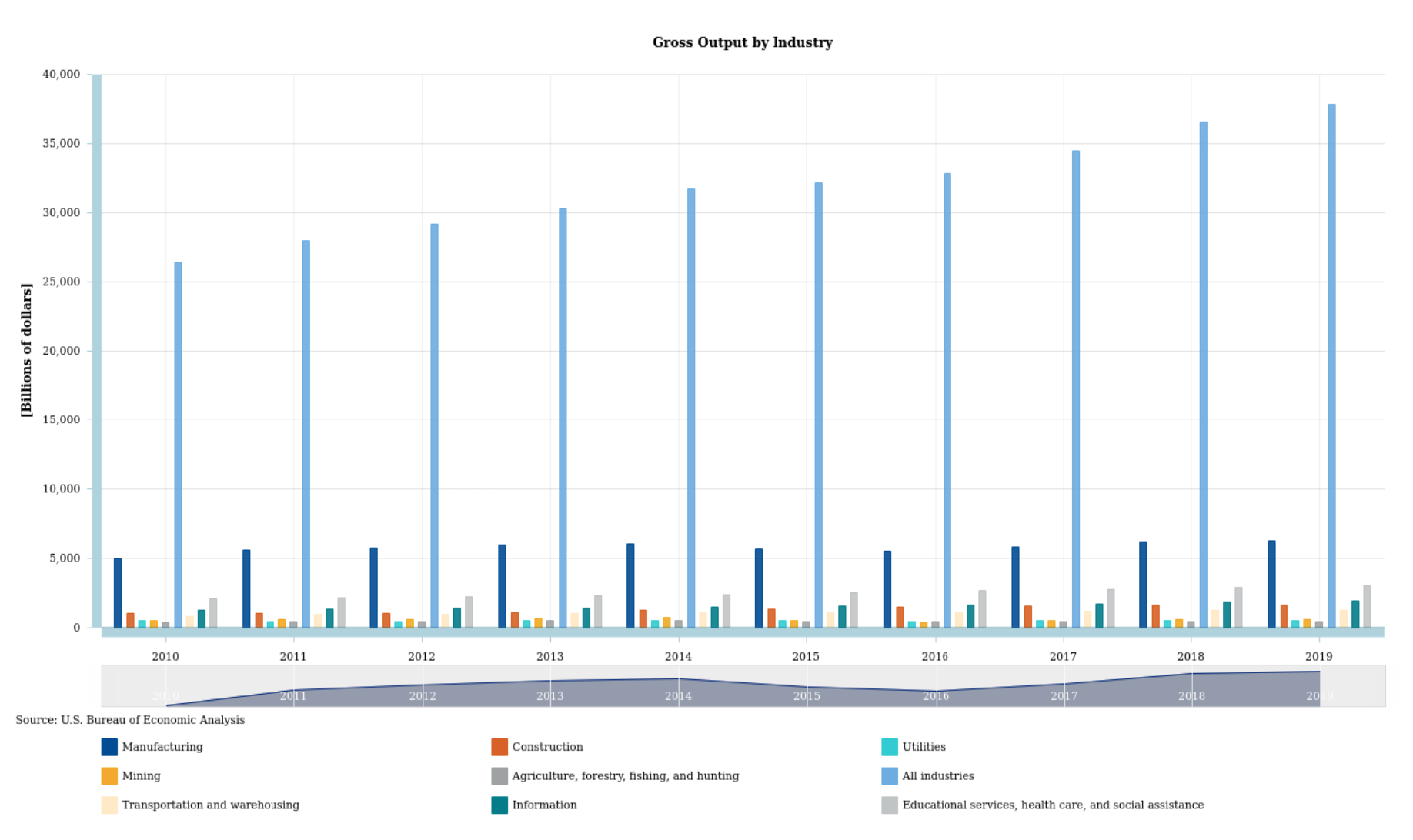

The U.S. Bureau of Economic Analysis (BEA) data from 2010 to 2019 shows the gross output for multiple industries. The industries evaluated (see Figure 3) were:

- Manufacturing

- Agriculture, forestry, fishing, and hunting

- Educational services, health care, and social assistance

- Construction

- Utilities

- Transportation and warehousing

- Mining

- Information


The year 2019 shows manufacturing being 16.57% of total gross output.[17] In 2018, that percentage was 16.98%; in 2017, 2016, and 2015, it was 16.86%, 16.9%, and 17.73%, respectively.[17] This data provided by the BEA shows that manufacturing is significant to the gross output for the U.S., meaning that if this industry finds itself under attack, it could result in catastrophic events such as loss of critical intellectual property (IP), manufacturing output, and overall lower gross output that would lower the total output by an estimated 16%.
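The percentages quoted above can be checked with a few lines of arithmetic. The sketch below uses only the five yearly shares stated in this section; the variable names and the choice to summarize them with a mean, a minimum, and a 2015-to-2019 difference are illustrative assumptions, not part of the BEA data or the author's analysis.

```python
from statistics import mean

# Manufacturing share of total U.S. gross output (%), as quoted in the text
manufacturing_share = {
    2015: 17.73,
    2016: 16.90,
    2017: 16.86,
    2018: 16.98,
    2019: 16.57,
}

average_share = mean(manufacturing_share.values())
lowest_year = min(manufacturing_share, key=manufacturing_share.get)
change_2015_to_2019 = manufacturing_share[2019] - manufacturing_share[2015]

print(f"Mean share, 2015-2019: {average_share:.2f}%")
print(f"Lowest share: {manufacturing_share[lowest_year]:.2f}% in {lowest_year}")
print(f"Change from 2015 to 2019: {change_2015_to_2019:+.2f} percentage points")
```

Across these five years the share stays between roughly 16.5% and 17.8%, which is consistent with the estimate of about 16% used in the paragraph above.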

Conclusions

Today's modern manufacturing IoT environment requires organizations to effectively manage expectations and risks as they relate to cybersecurity. Organizations need to consider how they fully utilize technologies such as data science while protecting the data that is being analyzed. This paper examined some risks associated with organizational use of AI. Understanding cyber defense and cyber offensive viewpoints may yet provide some insight into how organizations should conduct tests to review the threat landscape.[18]

Three areas of risks that need to be managed for implementation of AI into Industry 4.0 were reviewed in this work. Reviewing data from BEA proves the significance of manufacturing in the U.S. and highlights the potential impact of cybersecurity vulnerabilities that are not managed appropriately. In conclusion, as AI becomes increasingly prominent in critical industries such as manufacturing, it is essential to ensure that proper security controls are in place to thwart any possible threat, whether internal or external.[19]

References

- ↑ "Gartner Survey of More Than 3,000 CIOs Reveals That Enterprises Are Entering the Third Era of IT". Gartner, Inc. 16 October 2018. https://www.gartner.com/en/newsroom/press-releases/2018-10-16-gartner-survey-of-more-than-3000-cios-reveals-that-enterprises-are-entering-the-third-era-of-it. Retrieved 05 February 2020.

- ↑ 2.0 2.1 "Cybersecurity Supply/Demand Heat Map". CyberSeek. https://www.cyberseek.org/heatmap.html. Retrieved 15 March 2020.

- ↑ 3.0 3.1 3.2 Pinzone, M.; Fantini, P.; Perini, S. (2017). "Jobs and Skills in Industry 4.0: An Exploratory Research". APMS 2017: Advances in Production Management Systems. The Path to Intelligent, Collaborative and Sustainable Manufacturing: 282–88. doi:10.1007/978-3-319-66923-6_33.

- ↑ Murtaza, S.S.; Khreich, W.; Hamou-Lhadj, A. et al. (2017). "Mining trends and patterns of software vulnerabilities". Journal of Systems and Software 117: 218–28. doi:10.1016/j.jss.2016.02.048.

- ↑ Ambrose, J. (23 April 2018). "Half of UK manufacturers fall victim to cyber attacks". The Telegraph. https://www.telegraph.co.uk/business/2018/04/22/half-uk-manufacturers-fall-victim-cyber-attacks/. Retrieved 04 June 2020.

- ↑ 6.0 6.1 Lee, K. (20 December 2016). "Artificial Intelligence, Automation, and the Economy". The White House Blog. The Obama White House. https://obamawhitehouse.archives.gov/blog/2016/12/20/artificial-intelligence-automation-and-economy. Retrieved 07 June 2020.

- ↑ Felten, E. (3 May 2016). "Preparing for the Future of Artificial Intelligence". The White House Blog. The Obama White House. https://obamawhitehouse.archives.gov/blog/2016/05/03/preparing-future-artificial-intelligence. Retrieved 07 June 2020.

- ↑ Lee, J.; Davari, H.; Singh, J. et al. (2018). "Industrial Artificial Intelligence for industry 4.0-based manufacturing systems". Manufacturing Letters 18: 20–23. doi:10.1016/j.mfglet.2018.09.002.

- ↑ Dawson, M. (2018). "Cyber Security in Industry 4.0: The Pitfalls of Having Hyperconnected Systems". Journal of Strategic Management Studies 10 (1): 19–28. doi:10.24760/iasme.10.1_19.

- ↑ IEEE (4 June 2020). "How COVID-19 is Affecting Industry 4.0 and the Future of Innovation". IEEE Transmitter. https://transmitter.ieee.org/how-covid-19-is-affecting-industry-4-0-and-the-future-of-innovation/. Retrieved 08 June 2020.

- ↑ World Health Organization (23 April 2020). "WHO reports fivefold increase in cyber attacks, urges vigilance". World Health Organization. https://www.who.int/news/item/23-04-2020-who-reports-fivefold-increase-in-cyber-attacks-urges-vigilance. Retrieved 07 June 2020.

- ↑ Muncaster, P. (1 April 2020). "Cyber-Attacks Up 37% Over Past Month as #COVID19 Bites". InfoSecurity Magazine. https://www.infosecurity-magazine.com/news/cyberattacks-up-37-over-past-month/. Retrieved 05 June 2021.

- ↑ Minsky, M.L. (1982). "Why People Think Computers Can't". AI Magazine 3 (4): 3. doi:10.1609/aimag.v3i4.376.

- ↑ Umble, E.J.; Haft, R.R.; Umble, M.M. (2003). "Enterprise resource planning: Implementation procedures and critical success factors". European Journal of Operational Research 146 (2): 241–57. doi:10.1016/S0377-2217(02)00547-7.

- ↑ Executive Office of the President (2019). "Maintaining American Leadership in Artificial Intelligence". Federal Register: 3967–72. https://www.federalregister.gov/documents/2019/02/14/2019-02544/maintaining-american-leadership-in-artificial-intelligence.

- ↑ 16.0 16.1 Polyakova, A. (15 November 2018). "Weapons of the weak: Russia and AI-driven asymmetric warfare". Brookings Institution. https://www.brookings.edu/research/weapons-of-the-weak-russia-and-ai-driven-asymmetric-warfare/. Retrieved 07 June 2020.

- ↑ 17.0 17.1 "Interactive Access to Industry Economic Accounts Data: GDP by Industry". U.S. Bureau of Economic Analysis. 2020. Archived from the original on 18 June 2020. https://web.archive.org/web/20200618204544/https://apps.bea.gov/iTable/iTable.cfm?reqid=51&step=1.

- ↑ Dawson, M. (2020). "National Cybersecurity Education: Bridging Defense to Offense". Land Forces Academy Review 25 (1): 68–75. doi:10.2478/raft-2020-0009.

- ↑ "Artificial Intelligence and International Security: The Long View". Ethics & International Affairs 33 (2): 169–79. 2019. doi:10.1017/S0892679419000145.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, grammar, and punctuation. In some cases important information was missing from the references, and that information was added. URLs for the two White House articles were broken from the original; active URLs for the same content were found and added for this version. The URL for the U.S. Bureau of Economic Analysis data was also dead in the original; an archived URL was found and used for this version. A few words were added, updated or shifted for improved grammar and readability, but this version is otherwise unchanged in compliance with the "NoDerivatives" portion of the original's license.