Difference between revisions of "Journal:Privacy-preserving healthcare informatics: A review"

Shawndouglas (talk | contribs) (Created stub. Saving and adding more.) |

Shawndouglas (talk | contribs) |

||

| Line 38: | Line 38: | ||

==Privacy threats== | ==Privacy threats== | ||

In this section, we first discuss privacy-preserving data publishing (PPDP) and the properties of healthcare data. Then, we present the major privacy disclosures in healthcare data publication and show the relevant attack models. Finally, we present the privacy and utility objective in PPDP. | |||

===Privacy-preserving data publishing=== | |||

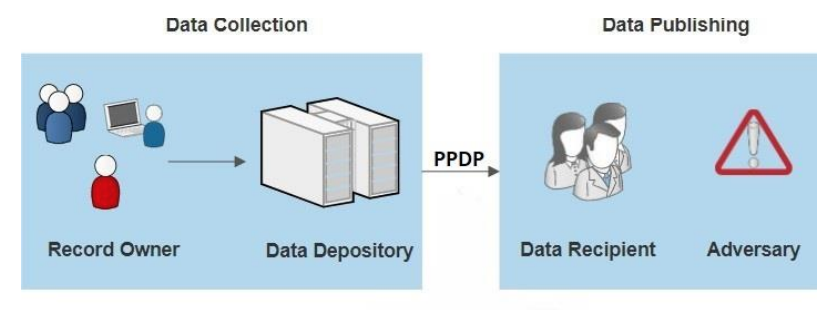

Privacy-preserving data publishing (PPDP) provides technical solutions that address the privacy and utility preservation challenges of [[data sharing]] scenarios. An overview of PPDP is shown in Figure 1, which includes a general data collection and data publishing scenario. | |||

[[File:Fig1 Chong ITMWebConf21 36.png|700px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="700px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 1.''' Overview of privacy-preserving data publishing (PPDP)</blockquote> | |||

|- | |||

|} | |||

|} | |||

During the data collection phase, data of the record owner (patient) are collected by the data holder (hospital) and stored in an EHR. In the data publishing phase, the data holder releases the collected data to the data recipient (e.g., the public or a third party, e.g., an insurance company or medical research center) for further analysis and [[data mining]]. However, some of the data recipients (adversary) are not honest and attempt to obtain more information about the record owner beyond the published data, which includes the identity and sensitive data of the record owner. Hence, PPDP serves as a vital process that sanitizes sensitive information to avoid privacy violations of one or more individuals. | |||

===Healthcare data=== | |||

Typically, stored healthcare data exists as relational data in tabular form. Each row (tuple) corresponds to one record owner, and each column corresponds to a number of distinct attributes, which can be grouped into the following four categories: | |||

* '''Explicit identifier (ID)''': a set of attributes such as name, social security number, national IDs, mobile number, and drivers license number that uniquely identifies a record owner | |||

* '''Quasi-identifier (QID)''': a set of attributes such as date of birth, gender, address, zip code, and hobby that cannot uniquely identify a record owner but can potentially identify the target if combined with some auxiliary information | |||

* '''Sensitive attribute (SA)''': sensitive personal information such as diagnosis codes, genomic information, salary, health condition, insurance information, and relationship status that the record owner intends to keep private from unauthorized parties | |||

* '''Non-sensitive attribute (NSA)''': a set of attributes such as cookie IDs, hashed email addresses, and mobile advertising IDs generated from an EHR that do not violate the privacy of the record owner if they are disclosed (Note: all attributes that are not categorized as ID, QID, and SA are classified as NSA.) | |||

Each attribute can be further classified as a numerical attribute (e.g., age, zip code, and date of birth) and non-numerical attribute (e.g., gender, job, and disease). Table 1 shows an example dataset, in which the name of patients is naively anonymized (by removing the names and social security numbers). | |||

Revision as of 19:07, 11 April 2021

| Full article title | Privacy-preserving healthcare informatics: A review |

|---|---|

| Journal | ITM Web of Conferences |

| Author(s) | Chong, Kah Meng |

| Author affiliation(s) | Universiti Tunku Abdul Rahman |

| Primary contact | kmchong at utar dot edu dot my |

| Year published | 2021 |

| Volume and issue | 36 |

| Article # | 04005 |

| DOI | 10.1051/itmconf/20213604005 |

| ISSN | 2271-2097 |

| Distribution license | Creative Commons Attribution 4.0 International |

| Website | https://www.itm-conferences.org/articles/itmconf/abs/2021/01/itmconf_icmsa2021_04005/ |

| Download | https://www.itm-conferences.org/articles/itmconf/pdf/2021/01/itmconf_icmsa2021_04005.pdf (PDF) |

This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed.

Abstract

The electronic health record (EHR) is the key to an efficient healthcare service delivery system. The publication of healthcare data is highly beneficial to healthcare industries and government institutions, supporting a variety of medical and census research. However, healthcare data contain sensitive patient information, and publishing such data could lead to unintended privacy disclosures. In this paper, we present a comprehensive survey of state-of-the-art privacy-enhancing methods that ensure a secure healthcare data sharing environment. We focus on recently proposed schemes based on data anonymization and differential privacy for protecting healthcare data privacy. We highlight the strengths and limitations of the two approaches and discuss some promising future research directions in this area.

Keywords: data privacy, data sharing, electronic health record, healthcare informatics,

Introduction

Electronic health record (EHR) systems are increasingly adopted as an important paradigm in the healthcare industry to collect and store patient data, which include sensitive information such as demographic data, medical history, diagnosis codes, medications, treatment plans, hospitalization records, insurance information, immunization dates, allergies, and laboratory and test results. The availability of such big data has provided unprecedented opportunities to improve the efficiency and quality of healthcare services, particularly by improving patient care outcomes and reducing medical costs. EHR data have been published to allow useful analysis as required by the healthcare industry[1] and government institutions.[2][3] Key examples include large-scale statistical analytics (e.g., studying correlations between diseases), clinical decision making, treatment optimization, clustering (e.g., epidemic control), and census surveys. Driven by the potential of EHR systems, a number of EHR repositories have been established, such as the National Database for Autism Research (NDAR), the U.K. Data Service, ClinicalTrials.gov, and UNC Health Care (UNCHC).

Although the publication of EHR data is enormously beneficial, it could lead to unintended privacy disclosures. Many conventional cryptographic and security methods, including access control, authentication, and encryption, have been deployed, primarily to protect the security of EHR systems. However, these technologies do not guarantee the privacy of sensitive data: an adversary could still infer a patient's sensitive information from the published data. Various regulations and guidelines have been developed to restrict publishable data types, data usage, and data storage, including the Health Insurance Portability and Accountability Act (HIPAA)[4][5], the General Data Protection Regulation (GDPR)[6][7], and the Personal Data Protection Act.[8] However, this regulatory approach has several limitations. First, the data recipient must be highly trusted to follow the rules and regulations provided by the data publisher, yet some adversaries attempt to attack the published data to reidentify a target victim. Second, sensitive data might still be carelessly published due to human error and fall into the wrong hands, eventually leading to a breach of individual privacy. As such, regulations and guidelines alone do not provide a computational guarantee of patient privacy and thus cannot fully prevent such privacy violations. The need to protect individual data privacy in a hostile environment, while still allowing accurate analysis of patient data, has driven the development of effective privacy models for protecting healthcare data.

In this paper, we present the privacy issues in healthcare data publication and elaborate on the relevant adversarial attack models. Focusing on data anonymization and differential privacy, we discuss the strengths and limitations of the proposed approaches. Finally, we conclude the paper and highlight future research directions in this area.

Privacy threats

In this section, we first discuss privacy-preserving data publishing (PPDP) and the properties of healthcare data. Then, we present the major privacy disclosures in healthcare data publication and the relevant attack models. Finally, we present the privacy and utility objectives of PPDP.

Privacy-preserving data publishing

Privacy-preserving data publishing (PPDP) provides technical solutions that address the privacy and utility preservation challenges of data sharing scenarios. An overview of PPDP is shown in Figure 1, which includes a general data collection and data publishing scenario.

Figure 1. Overview of privacy-preserving data publishing (PPDP)

During the data collection phase, the data holder (hospital) collects the record owner's (patient's) data and stores them in an EHR. In the data publishing phase, the data holder releases the collected data to a data recipient (e.g., the public, or a third party such as an insurance company or medical research center) for further analysis and data mining. However, some data recipients (adversaries) are dishonest and attempt to infer information about the record owner beyond what the published data directly show, such as the record owner's identity and sensitive data. Hence, PPDP serves as a vital process that sanitizes sensitive information to prevent privacy violations against one or more individuals.

Healthcare data

Typically, stored healthcare data exist as relational data in tabular form. Each row (tuple) corresponds to one record owner, and each column corresponds to an attribute. The attributes can be grouped into the following four categories:

- Explicit identifier (ID): a set of attributes, such as name, social security number, national ID, mobile number, and driver's license number, that uniquely identify a record owner

- Quasi-identifier (QID): a set of attributes, such as date of birth, gender, address, zip code, and hobby, that cannot uniquely identify a record owner on their own but can potentially identify the target when combined with auxiliary information

- Sensitive attribute (SA): sensitive personal information such as diagnosis codes, genomic information, salary, health condition, insurance information, and relationship status that the record owner intends to keep private from unauthorized parties

- Non-sensitive attribute (NSA): a set of attributes, such as cookie IDs, hashed email addresses, and mobile advertising IDs generated from an EHR, whose disclosure does not violate the privacy of the record owner (Note: all attributes that are not categorized as ID, QID, or SA are classified as NSA.)
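The four categories can be expressed as a simple mapping from columns to categories. The Python sketch below is a minimal illustration under assumptions of our own. The column names (`name`, `ssn`, `age`, `zip_code`, `gender`, `disease`, `cookie_id`), the `Category` enum, and the `naive_anonymize` helper are all hypothetical and do not come from the paper. The sketch applies the note's default rule, so any unlisted column is NSA. It then performs naive anonymization: explicit identifiers are removed and every other attribute is left untouched. The sample values are those of record 7 in Table 1, with placeholders standing in for the removed identifiers.

```python
from enum import Enum


class Category(Enum):
    """The four attribute categories of a relational healthcare table."""
    ID = "explicit identifier"
    QID = "quasi-identifier"
    SA = "sensitive attribute"
    NSA = "non-sensitive attribute"


# Hypothetical schema for illustration only. Columns not listed here fall
# back to NSA, following the rule that anything not ID, QID, or SA is NSA.
SCHEMA = {
    "name": Category.ID,
    "ssn": Category.ID,
    "age": Category.QID,
    "zip_code": Category.QID,
    "gender": Category.QID,
    "disease": Category.SA,
}


def category_of(column: str) -> Category:
    """Look up a column's category, defaulting to NSA."""
    return SCHEMA.get(column, Category.NSA)


def naive_anonymize(record: dict) -> dict:
    """Drop explicit identifiers only; QIDs, SAs, and NSAs are kept as-is."""
    return {col: val for col, val in record.items()
            if category_of(col) is not Category.ID}


# Placeholders stand in for the identifiers that would be removed.
raw = {"name": "<name>", "ssn": "<ssn>", "age": 43, "zip_code": "96583",
       "gender": "Male", "disease": "Diabetes", "cookie_id": "<cookie>"}
print(naive_anonymize(raw))
# {'age': 43, 'zip_code': '96583', 'gender': 'Male', 'disease': 'Diabetes', 'cookie_id': '<cookie>'}
```

The output still contains age, zip code, and gender. Because those QIDs survive, a record stripped in this way can still be linked to auxiliary data.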

Each attribute can be further classified as either numerical (e.g., age, zip code, and date of birth) or non-numerical (e.g., gender, job, and disease). Table 1 shows an example dataset in which the patients have been naively anonymized by removing their names and social security numbers.

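Table 1. An example of different types of attributes in a relational table

| Name | Age (QID) | Zip code (QID) | Gender (QID) | Disease (SA) |
|---|---|---|---|---|
| 1 | 23 | 96038 | Male | Diabetes |
| 2 | 28 | 96070 | Female | Diabetes |
| 3 | 26 | 96073 | Male | Diabetes |
| 4 | 37 | 96328 | Male | Cancer |
| 5 | 33 | 96319 | Female | Mental Illness |
| 6 | 33 | 96388 | Female | Diabetes |
| 7 | 43 | 96583 | Male | Diabetes |
| 8 | 49 | 96512 | Female | Cancer |
| 9 | 45 | 96590 | Male | Cancer |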

Privacy disclosures

A privacy disclosure is the disclosure of personal information, which users intend to keep private, to an entity that is not authorized to access or hold that information. There are three types of privacy disclosure:

- Identity disclosure: Also known as reidentification, identity disclosure is the major privacy threat in publishing healthcare data. It occurs when an adversary reveals the true identity of a targeted victim from the published data. In other words, an individual is reidentified when an adversary is able to map a record in the published data to its corresponding patient with high probability (record linkage). For example, if an adversary knows that A is 43 years old, then A is reidentified as record 7 in Table 1, the only record with that age.

- Attribute disclosure: This disclosure occurs when an adversary successfully links a victim to their SA information in the published data with high probability (attribute linkage). This SA information could be an SA value (e.g., the victim's "Disease" value in Table 1) or a range that contains the SA value (e.g., a medical cost range).

- Membership disclosure: This disclosure occurs when an adversary successfully infers, with high probability, that a targeted victim is present in the published data. For example, inferring that an individual is included in a database of COVID-19-positive patients poses a privacy threat to that individual, because membership alone implies a positive result.
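The record-linkage and attribute-linkage examples can be checked directly against Table 1. The Python sketch below is one possible model, not the paper's own formalization. It assumes the adversary's knowledge can be written as a predicate over the published rows, and it reads "high probability" as the fraction of matching records. The names `Row`, `candidates`, `record_linkage`, `attribute_linkage`, and `young_male` are hypothetical. Membership disclosure is not modelled.

```python
from collections import Counter
from typing import NamedTuple


class Row(NamedTuple):
    record: int
    age: int
    zip_code: str
    gender: str
    disease: str


# Table 1 as published: names removed, QIDs (age, zip code, gender) and the
# SA (disease) left intact.
TABLE_1 = [
    Row(1, 23, "96038", "Male", "Diabetes"),
    Row(2, 28, "96070", "Female", "Diabetes"),
    Row(3, 26, "96073", "Male", "Diabetes"),
    Row(4, 37, "96328", "Male", "Cancer"),
    Row(5, 33, "96319", "Female", "Mental Illness"),
    Row(6, 33, "96388", "Female", "Diabetes"),
    Row(7, 43, "96583", "Male", "Diabetes"),
    Row(8, 49, "96512", "Female", "Cancer"),
    Row(9, 45, "96590", "Male", "Cancer"),
]


def candidates(knowledge):
    """Rows consistent with what the adversary knows about the victim."""
    return [row for row in TABLE_1 if knowledge(row)]


def record_linkage(knowledge):
    """Identity disclosure: the record number if exactly one row matches."""
    rows = candidates(knowledge)
    return rows[0].record if len(rows) == 1 else None


def attribute_linkage(knowledge):
    """Attribute disclosure: fraction of matching rows per SA value."""
    rows = candidates(knowledge)
    counts = Counter(row.disease for row in rows)
    return {disease: n / len(rows) for disease, n in counts.items()}


def young_male(row):
    """Hypothetical partial knowledge: the victim is male and under 30."""
    return row.gender == "Male" and row.age < 30


# The example from the text: the adversary knows that A is 43 years old.
print(record_linkage(lambda r: r.age == 43))  # 7
# "Male, under 30" matches records 1 and 3, so no one is reidentified.
print(record_linkage(young_male))             # None
print(attribute_linkage(young_male))          # {'Diabetes': 1.0}
```

The last query shows that attribute disclosure does not require reidentification. The adversary cannot tell record 1 from record 3, but both records carry the same diagnosis.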

Attack models

Privacy attacks can be launched by matching a published table containing sensitive information about the target victim against external resources that model the attacker's background knowledge. For a successful attack, an adversary may require the following prior knowledge:

- The published table, T′: An adversary has access to the published table T′ (which is often an open resource) and knows that T′ is an anonymized version of some table T.

- QID of a targeted victim: An adversary possesses accurate partial or complete QID values of a target, obtained from any external resource. This assumption is realistic because QID information is easy to acquire from different sources, including real-life inspection data, external demographic data, and voter list data.

- Knowledge about the distribution of the SA and NSA in table T: For example, an adversary may know P(disease = diabetes, age > 50) and may use this knowledge to make additional inferences about records in the published table T′.
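Under the first two assumptions, a linking attack amounts to a join on whichever QIDs the adversary knows. Those assumptions are access to T′ and accurate, possibly partial, QID values. The Python sketch below is illustrative only. The victim labels "A" and "B", B's QID values, the `external` dictionary, and the `link` function are hypothetical. The third kind of knowledge, the distribution of SA and NSA values, is not modelled.

```python
from collections import Counter

# The published table T′ (Table 1 with names removed).
T_PRIME = [
    {"record": 1, "age": 23, "zip_code": "96038", "gender": "Male", "disease": "Diabetes"},
    {"record": 2, "age": 28, "zip_code": "96070", "gender": "Female", "disease": "Diabetes"},
    {"record": 3, "age": 26, "zip_code": "96073", "gender": "Male", "disease": "Diabetes"},
    {"record": 4, "age": 37, "zip_code": "96328", "gender": "Male", "disease": "Cancer"},
    {"record": 5, "age": 33, "zip_code": "96319", "gender": "Female", "disease": "Mental Illness"},
    {"record": 6, "age": 33, "zip_code": "96388", "gender": "Female", "disease": "Diabetes"},
    {"record": 7, "age": 43, "zip_code": "96583", "gender": "Male", "disease": "Diabetes"},
    {"record": 8, "age": 49, "zip_code": "96512", "gender": "Female", "disease": "Cancer"},
    {"record": 9, "age": 45, "zip_code": "96590", "gender": "Male", "disease": "Cancer"},
]
QIDS = ("age", "zip_code", "gender")


def link(external, published, qids=QIDS, sa="disease"):
    """Join external QID knowledge against the published table.

    Each victim maps to the QID values the adversary knows. QIDs missing from
    that dict are unknown and are not used as join conditions. Returns, per
    victim, the candidate record numbers and the counts of their SA values.
    """
    results = {}
    for victim, known in external.items():
        matches = [row for row in published
                   if all(row[q] == known[q] for q in qids if q in known)]
        results[victim] = ([row["record"] for row in matches],
                           dict(Counter(row[sa] for row in matches)))
    return results


# Hypothetical external source, e.g., an excerpt of a voter list.
external = {
    "A": {"age": 43},
    "B": {"age": 33, "gender": "Female"},
}
for victim, (records, sa_counts) in link(external, T_PRIME).items():
    print(victim, records, sa_counts)
# A [7] {'Diabetes': 1}
# B [5, 6] {'Mental Illness': 1, 'Diabetes': 1}
```

Victim A is matched to a single record, which is identity disclosure. Victim B's partial QIDs leave two candidates with different diseases, so the adversary can guess B's diagnosis with only 50% confidence.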

References

1. Senthilkumar, S.A.; Rai, B.K.; Meshram, A.A. et al. (2018). "Big Data in Healthcare Management: A Review of Literature". American Journal of Theoretical and Applied Business 4 (2): 57–69. doi:10.11648/j.ajtab.20180402.14.

2. Dudeck, M.A.; Horan, T.C.; Peterson, K.D. et al. (2011). "National Healthcare Safety Network (NHSN) Report, data summary for 2010, device-associated module". American Journal of Infection Control 39 (10): 798–816. doi:10.1016/j.ajic.2011.10.001. PMID 22133532.

3. Powell, K.M.; Li, Q.; Gross, C. et al. (2019). "Ventilator-Associated Events Reported by U.S. Hospitals to the National Healthcare Safety Network, 2015-2017". Proceedings of the American Thoracic Society 2019 International Conference. doi:10.1164/ajrccm-conference.2019.199.1_MeetingAbstracts.A3419.

4. Cohen, I.G.; Mello, M.M. (2018). "HIPAA and Protecting Health Information in the 21st Century". JAMA 320 (3): 231–32. doi:10.1001/jama.2018.5630. PMID 29800120.

5. Obeng, O.; Paul, S. (2019). "Understanding HIPAA Compliance Practice in Healthcare Organizations in a Cultural Context". AMCIS 2019 Proceedings: 1–5. https://aisel.aisnet.org/amcis2019/info_security_privacy/info_security_privacy/1/.

6. Voigt, P.; von dem Bussche, A. (2017). The EU General Data Protection Regulation (GDPR): A Practical Guide. Springer. ISBN 9783319579580.

7. Tikkinen-Piri, C.; Rohunen, A.; Markkula, J. (2018). "EU General Data Protection Regulation: Changes and implications for personal data collecting companies". Computer Law & Security Review 34 (1): 134–53. doi:10.1016/j.clsr.2017.05.015.

8. Carey, P. (2018). Data Protection: A Practical Guide to UK and EU Law. Oxford University Press. ISBN 9780198815419.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation and grammar for readability. In some cases important information was missing from the references, and that information was added.