LII:Elements of Laboratory Technology Management

Title: Elements of Laboratory Technology Management

Author for citation: Joe Liscouski, with editorial modifications by Shawn Douglas

License for content: Creative Commons Attribution 4.0 International

Publication date: 2014

|

|

This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed. |

Introduction

This discussion is less about specific technologies than it is about the ability to use advanced laboratory technologies effectively. “Effectively” means that products and technologies are used successfully to address needs in your lab, and that they improve the lab’s ability to function. If they don't do that, you’ve wasted your money. Additionally, if the technology in question hasn’t been deployed according to a deliberate plan, your funded projects may not achieve everything they could. Optimally, when applied thoughtfully, the available technologies should result in the transformation of lab work from a labor-intensive effort to one that is intellectually intensive, making the most effective use of people and resources.

People come to the subject of laboratory automation from widely differing perspectives. To some it’s about robotics, to others it’s about laboratory informatics, and even others view it as simply data acquisition and analysis. It all depends on what your interests are, and more importantly what your immediate needs are.

People began working in this field in the 1940s and 1950s, with the work focused on analog electronics to improve instrumentation; this was the first phase of lab automation. Most notably were the development of scanning spectrophotometers and process chromatographs. Those who first encountered this equipment didn’t think much of it and considered it the world as it’s always been. Others who had to deal with products like the Spectronic 20[a] (a single-beam manual spectrophotometer), and use it to develop visible spectra one wavelength measurement at a time, appreciated the automation of scanning instruments.

Mercury switches and timers triggered by cams on a rotating shaft provided chromatographs with the ability to automatically take samples, actuate back flush valves, and take care of other functions without operator intervention. This left the analyst with the task of measuring peaks, developing calibration curves, and performing calculations, at least until data systems became available.

The direction of laboratory automation changed significantly when computer chips became available. In the 1960s, companies such as PerkinElmer were experimenting with the use of computer systems for data acquisition as precursors to commercial products. The availability of general-purpose computers such as the PDP-8 and PDP-12 series (along with the Lab 8e) from Digital Equipment, with other models available from other vendors, made it possible for researchers to connect their instruments to computers and carry out experiments. The development of microprocessors from Intel (4004, 8008) led to the evolution of “intelligent” laboratory equipment ranging from processor-controlled stirring hot-plates to chromatographic integrators.

As researchers learned to use these systems, their application rapidly progressed from data acquisition to interactive control of the experiments, including data storage, analysis, and reporting. Today, the product set available for laboratory applications includes data acquisition systems, laboratory information management systems (LIMS), electronic laboratory notebooks (ELNs), laboratory robotics, and specialized components to help researchers, scientists, and technicians apply modern technologies to their work.

While there is a lot of technology available, the question remains "how do you go about using it?" Not only do we need to know how to use it, but we also must do so while avoiding our own biases about how computer systems operate. Our familiarity with using computer systems in our daily lives may cause us to assume they are doing what we need them to do, without questioning how it actually gets done. “The vendor knows what they are doing” is a poor reason for not testing and evaluating control parameters to ensure they are suitable and appropriate for your work.

Moving from lab functions and requirements to practical solutions

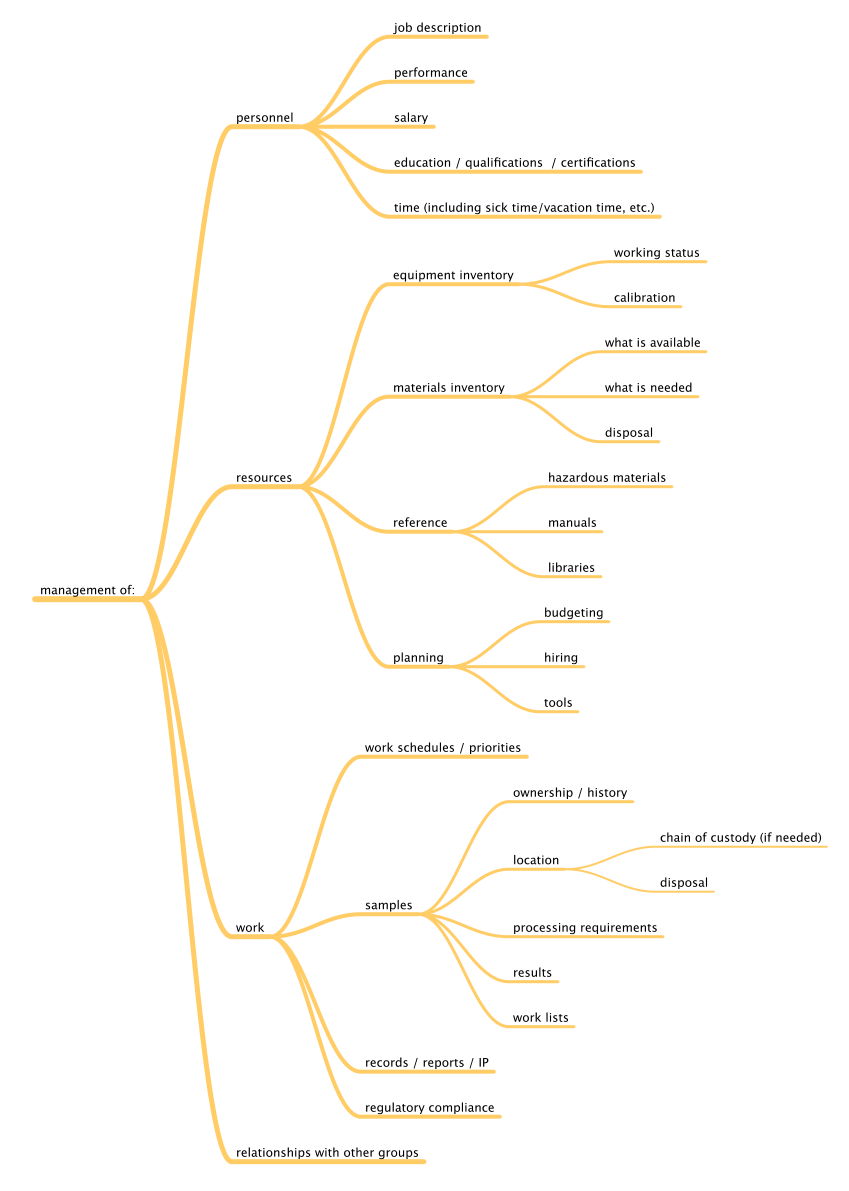

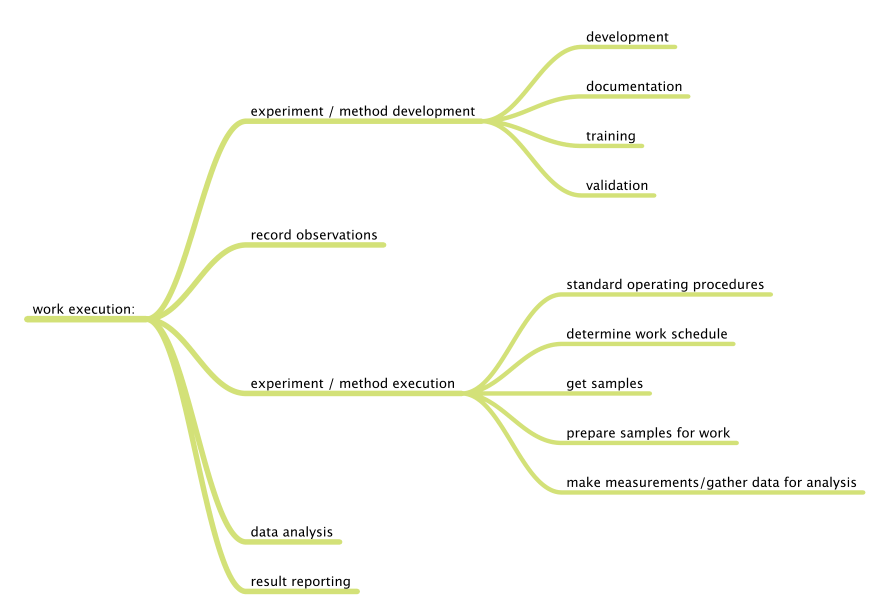

Before we can begin to understand the application of the tools and technologies that are available, we have to know what we want to accomplish, specifically what problems we want to solve. We can divide laboratory functions into two broad classes: management and work execution. Figure 1 addresses management functions, wheras Figure 2 addresses work execution functions, all common to laboratories. You can add to them based on your own experience.

|

|

Vendors have been developing products to address these work areas, and there are a lot of products available. Many of them are "point" solutions: products that are focused on one aspect of work without an effort to integrate them with others. That isn’t surprising since there isn’t an architectural basis for integration aside from specific hardware systems (e.g., Firewire, USB) or vendor-specific software systems (e.g., office product suites). Another issue in scientific work is that the vendor may only be interested in solving a particular problem, with most of the emphasis on an instrument or technique. They may provide the software needed to support their hardware, with data transfer and integration left to the user.

As you work through this document, you’ll find a map of management responsibilities and technologies. How do you connect the above map of functions to the technologies? Applying software and hardware solutions to your lab's needs requires deliberate planning. The days of purchasing point solutions to problems have passed. Today's lab managers need to think more broadly about product usage and how components of lab software systems work together. The point of this document is to help you understand what you need to think about in that regard.

Given those summaries of lab activities, how do we apply available technologies to improve lab operations? Most of the answers fall under the heading of "laboratory automation," so we’ll begin by looking at what that is.

What is laboratory automation?

This isn’t a trivial question; your answer may depend on the field you are working in, your experience, and your current interests. To some it means robotics, to others it is a LIMS (or their clinical counterpart, the laboratory information systems or LIS). The ELN and instrument data systems (IDS) are additional elements worth noting. These are examples of product classes and technologies used in lab automation, but they don’t define the field. Wikipedia provides the following as a definition[1]:

Footnotes

- ↑ The Spectronic 20 was developed by Bausch & Lomb in 1954 and is currently owned and marketed in updated versions by ThermoFisher.

About the author

Initially educated as a chemist, author Joe Liscouski (joe dot liscouski at gmail dot com) is an experienced laboratory automation/computing professional with over forty years of experience in the field, including the design and development of automation systems (both custom and commercial systems), LIMS, robotics and data interchange standards. He also consults on the use of computing in laboratory work. He has held symposia on validation and presented technical material and short courses on laboratory automation and computing in the U.S., Europe, and Japan. He has worked/consulted in pharmaceutical, biotech, polymer, medical, and government laboratories. His current work centers on working with companies to establish planning programs for lab systems, developing effective support groups, and helping people with the application of automation and information technologies in research and quality control environments.

References

- ↑ "Laboratory automation". Wikipedia. Archived from the original on 05 December 2014. https://en.wikipedia.org/w/index.php?title=Laboratory_automation&oldid=636846823.