Difference between revisions of "Template:Article of the week"

Shawndouglas (talk | contribs) (Updated article of the week text.)

Shawndouglas (talk | contribs) (Updated article of the week text (corrected).)


<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig3 Eivazzadeh JMIRMedInformatics2016 4-2.png|240px]]</div>

'''"[[Journal:Evaluating health information systems using ontologies|Evaluating health information systems using ontologies]]"'''

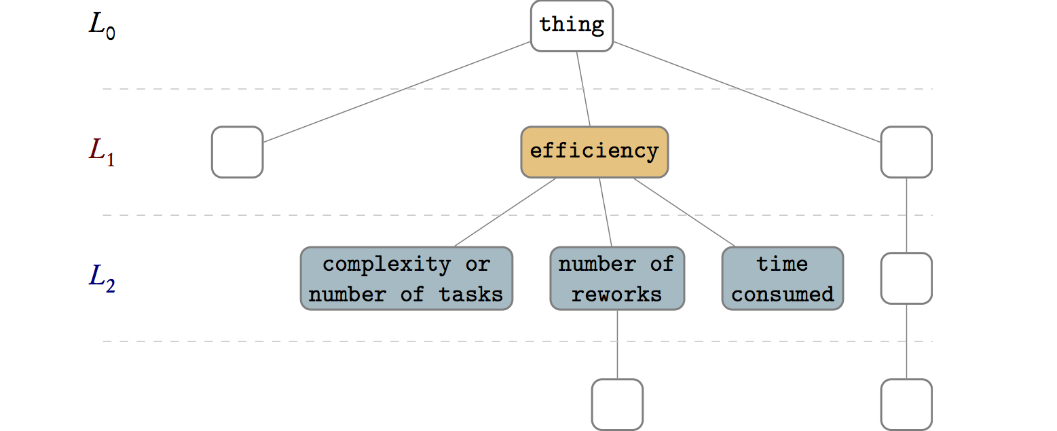

There are several frameworks that attempt to address the challenges of evaluation of [[Health information technology|health information systems]] by offering models, methods, and guidelines about what to evaluate, how to evaluate, and how to report the evaluation results. Model-based evaluation frameworks usually suggest universally applicable evaluation aspects but do not consider case-specific aspects. On the other hand, evaluation frameworks that are case-specific, by eliciting user requirements, limit their output to the evaluation aspects suggested by the users in the early phases of system development. In addition, these case-specific approaches extract different sets of evaluation aspects from each case, making it challenging to collectively compare, unify, or aggregate the evaluation of a set of heterogeneous health information systems.

The aim of this paper is to find a method capable of suggesting evaluation aspects for a set of one or more health information systems, whether similar or heterogeneous, by organizing, unifying, and aggregating the quality attributes extracted from those systems and from an external evaluation framework. ('''[[Journal:Evaluating health information systems using ontologies|Full article...]]''')<br />

<br />

''Recently featured'':

Revision as of 15:05, 22 August 2016
