Difference between revisions of "Main Page/Featured article of the week/2015"

Shawndouglas (talk | contribs) (Added last week's article of the week.)

Shawndouglas (talk | contribs) m (Red link fixes)


| (24 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

{{ombox | {{ombox | ||

| type = notice | | type = notice | ||

| text = If you're looking for the 2014 | | text = If you're looking for other "Article of the Week" archives: [[Main Page/Featured article of the week/2014|2014]] - 2015 - [[Main Page/Featured article of the week/2016|2016]] - [[Main Page/Featured article of the week/2017|2017]] - [[Main Page/Featured article of the week/2018|2018]] - [[Main Page/Featured article of the week/2019|2019]] - [[Main Page/Featured article of the week/2020|2020]] - [[Main Page/Featured article of the week/2021|2021]] - [[Main Page/Featured article of the week/2022|2022]] - [[Main Page/Featured article of the week/2023|2023]] - [[Main Page/Featured article of the week/2024|2024]] | ||

}} | }} | ||

| Line 17: | Line 17: | ||

<!-- Below this line begin pasting previous news --> | <!-- Below this line begin pasting previous news --> | ||

<h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: August 31–September 6:</h2> | <h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: December 28–January 3:</h2> | ||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig3 Dander BMCBioinformatics2014 15.jpg|220px]]</div> | |||

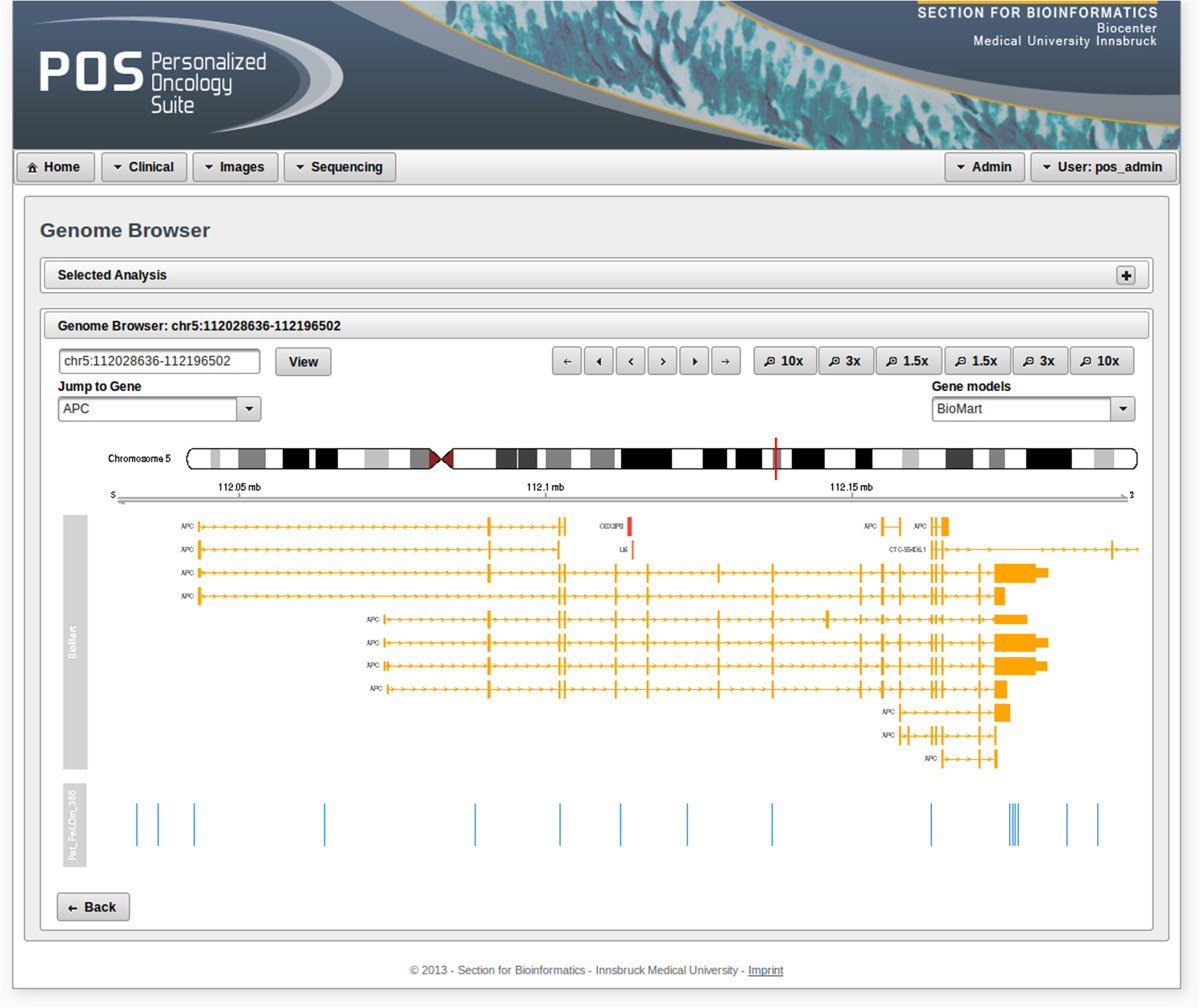

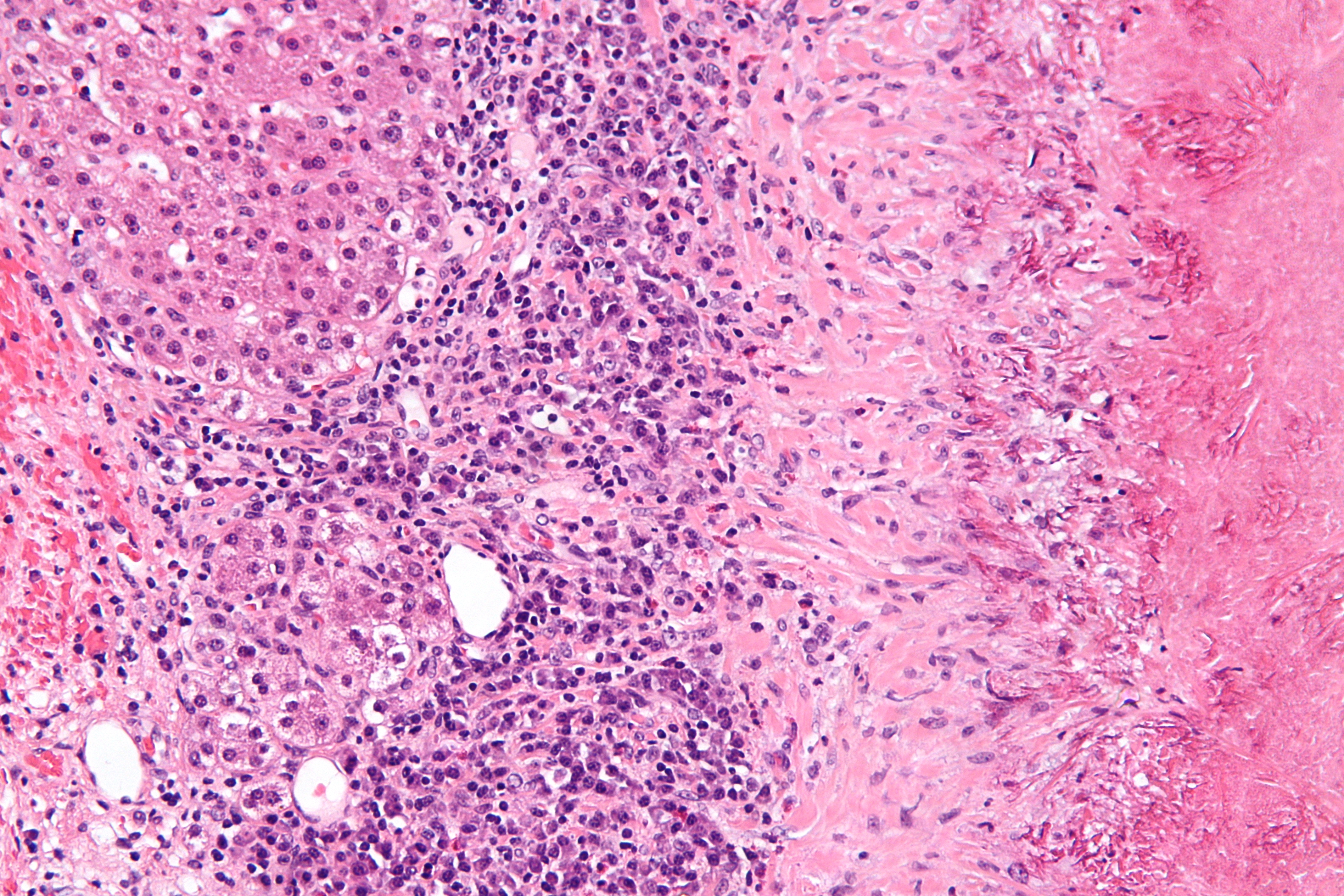

'''"[[Journal:Personalized Oncology Suite: Integrating next-generation sequencing data and whole-slide bioimages|Personalized Oncology Suite: Integrating next-generation sequencing data and whole-slide bioimages]]"''' | |||

Cancer immunotherapy has recently entered a remarkable renaissance phase with the approval of several agents for treatment. [[Cancer informatics|Cancer treatment platforms]] have demonstrated profound tumor regressions including complete cure in patients with metastatic cancer. Moreover, technological advances in next-generation sequencing (NGS) as well as the development of devices for scanning whole-slide bioimages from tissue sections and [[Bioimage informatics|image analysis software]] for quantitation of tumor-infiltrating lymphocytes (TILs) allow, for the first time, the development of personalized cancer immunotherapies that target patient-specific mutations. However, there is currently no [[bioinformatics]] solution that supports the integration of these heterogeneous datasets. | |||

We have developed a bioinformatics platform – Personalized Oncology Suite (POS) – that integrates clinical data, NGS data and whole-slide bioimages from tissue sections. POS is a web-based platform that is scalable, flexible and expandable. The underlying database is based on a data warehouse schema, which is used to integrate [[information]] from different sources. POS stores clinical data, [[Genomics|genomic]] data (SNPs and INDELs identified from NGS analysis), and scanned whole-slide images. ('''[[Journal:Personalized Oncology Suite: Integrating next-generation sequencing data and whole-slide bioimages|Full article...]]''')<br /> | |||
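The data-warehouse approach described above links heterogeneous records (clinical, genomic, imaging) through shared keys. The following is a minimal sketch of that general idea using Python's built-in `sqlite3` module; the table and column names (`patient_dim`, `slide_dim`, `variant_fact`) and the sample rows are hypothetical and are not taken from the actual POS schema.

```python
import sqlite3


def build_demo_warehouse() -> sqlite3.Connection:
    """Create an in-memory, star-style schema (hypothetical, not the POS schema)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        -- Clinical data: one row per patient
        CREATE TABLE patient_dim (
            patient_id INTEGER PRIMARY KEY,
            diagnosis  TEXT
        );
        -- Scanned whole-slide images, linked to a patient
        CREATE TABLE slide_dim (
            slide_id   INTEGER PRIMARY KEY,
            patient_id INTEGER REFERENCES patient_dim(patient_id),
            file_path  TEXT
        );
        -- Variants (SNPs and INDELs) called from NGS data
        CREATE TABLE variant_fact (
            variant_id   INTEGER PRIMARY KEY,
            patient_id   INTEGER REFERENCES patient_dim(patient_id),
            gene         TEXT,
            variant_type TEXT CHECK (variant_type IN ('SNP', 'INDEL'))
        );
        """
    )
    return conn


def variants_with_slides(conn: sqlite3.Connection, gene: str):
    """Join variants in one gene with the patient's diagnosis and slide files."""
    return conn.execute(
        """
        SELECT p.patient_id, p.diagnosis, v.variant_type, s.file_path
        FROM variant_fact v
        JOIN patient_dim p ON p.patient_id = v.patient_id
        JOIN slide_dim  s ON s.patient_id = v.patient_id
        WHERE v.gene = ?
        ORDER BY p.patient_id
        """,
        (gene,),
    ).fetchall()


if __name__ == "__main__":
    conn = build_demo_warehouse()
    conn.executemany("INSERT INTO patient_dim VALUES (?, ?)",
                     [(1, "melanoma"), (2, "colorectal carcinoma")])
    conn.executemany("INSERT INTO slide_dim VALUES (?, ?, ?)",
                     [(10, 1, "slides/p1.ndpi"), (20, 2, "slides/p2.ndpi")])
    conn.executemany("INSERT INTO variant_fact VALUES (?, ?, ?, ?)",
                     [(100, 1, "BRAF", "SNP"), (101, 2, "KRAS", "SNP")])
    print(variants_with_slides(conn, "BRAF"))
```

A single query can then answer cross-source questions (here: which patients carry a variant in a given gene, and where their slide images are stored), which is the main payoff of integrating the sources in one warehouse.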

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: December 21–27:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Munkhdalai JCheminformatics2015 7-1.jpg|220px]]</div> | |||

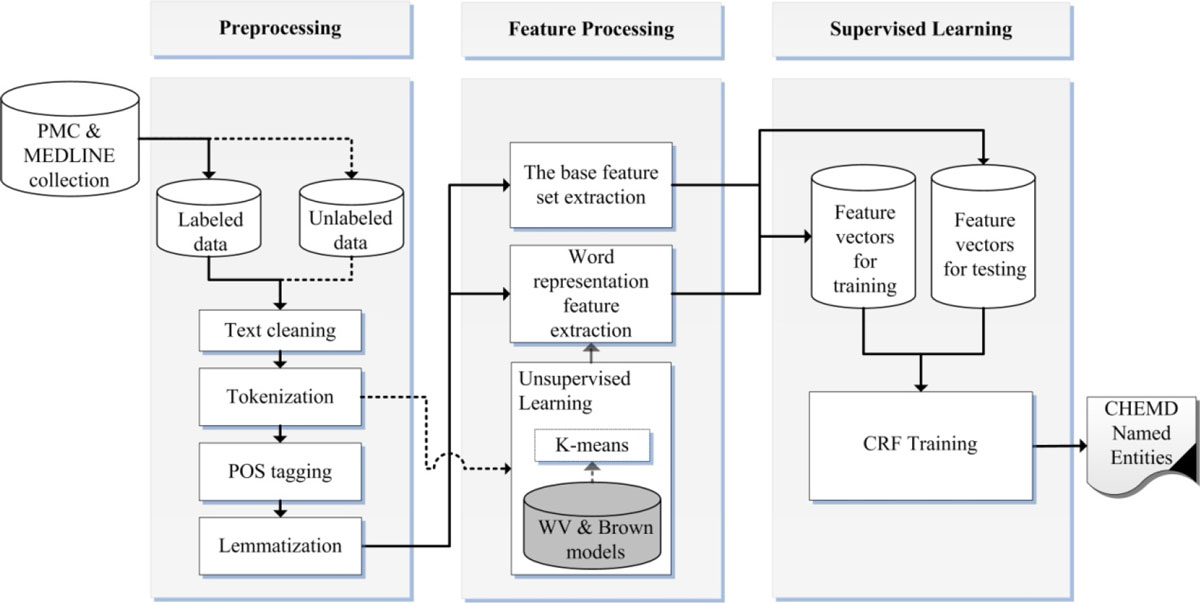

'''"[[Journal:Incorporating domain knowledge in chemical and biomedical named entity recognition with word representations|Incorporating domain knowledge in chemical and biomedical named entity recognition with word representations]]"''' | |||

Chemical and biomedical Named Entity Recognition (NER) is an essential prerequisite for effective text mining of biochemical text data. Exploiting unlabeled text data to improve system performance has been an active and challenging research topic in text mining due to the recent growth in the amount of biomedical literature. | |||

We present a semi-supervised learning method that efficiently exploits unlabeled data in order to incorporate domain knowledge into a named entity recognition model and to improve system performance. The proposed method includes Natural Language Processing (NLP) tasks for text preprocessing, learning word representation features from a large amount of text data for feature extraction, and conditional random fields for token classification. Other than the free text in the domain, the proposed method does not rely on any lexicon or dictionary, in order to keep the system applicable to other NER tasks in bio-text data. ('''[[Journal:Incorporating domain knowledge in chemical and biomedical named entity recognition with word representations|Full article...]]''')<br /> | |||
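Word-representation features of the kind described above are usually handed to a conditional random field as one feature dictionary per token. The sketch below shows such a feature function in plain Python; the cluster bit-strings, the prefix lengths, and the feature names are illustrative assumptions rather than the paper's actual feature set, and CRF training itself is omitted.

```python
from typing import Dict, List, Tuple


def token_features(tokens: List[str], i: int,
                   clusters: Dict[str, str],
                   prefix_lengths: Tuple[int, ...] = (4, 6)) -> Dict[str, object]:
    """Build a CRF-style feature dict for tokens[i].

    `clusters` is a hypothetical mapping from a lowercased word to a
    hierarchical cluster bit-string learned from unlabeled text.
    """
    word = tokens[i]
    feats: Dict[str, object] = {
        "bias": 1.0,
        "word.lower": word.lower(),
        "word.isupper": word.isupper(),
        "word.has_digit": any(ch.isdigit() for ch in word),
        "word.suffix3": word[-3:],
    }
    # Word-representation features: shared bit-string prefixes let words that
    # occur in similar contexts in unlabeled text share feature values.
    path = clusters.get(word.lower())
    if path is not None:
        for n in prefix_lengths:
            feats[f"cluster.p{n}"] = path[:n]
    # Context features from the neighbouring tokens.
    feats["prev.lower"] = tokens[i - 1].lower() if i > 0 else "<BOS>"
    feats["next.lower"] = tokens[i + 1].lower() if i + 1 < len(tokens) else "<EOS>"
    return feats
```

A list of such dictionaries per sentence is the kind of input that dictionary-based CRF wrappers (for example sklearn-crfsuite) accept; because the cluster features come only from unlabeled text, no lexicon or dictionary is needed, which matches the design goal stated above.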

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: December 14–20:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 GanzingerPeerJCS2015 3.png|220px]]</div> | |||

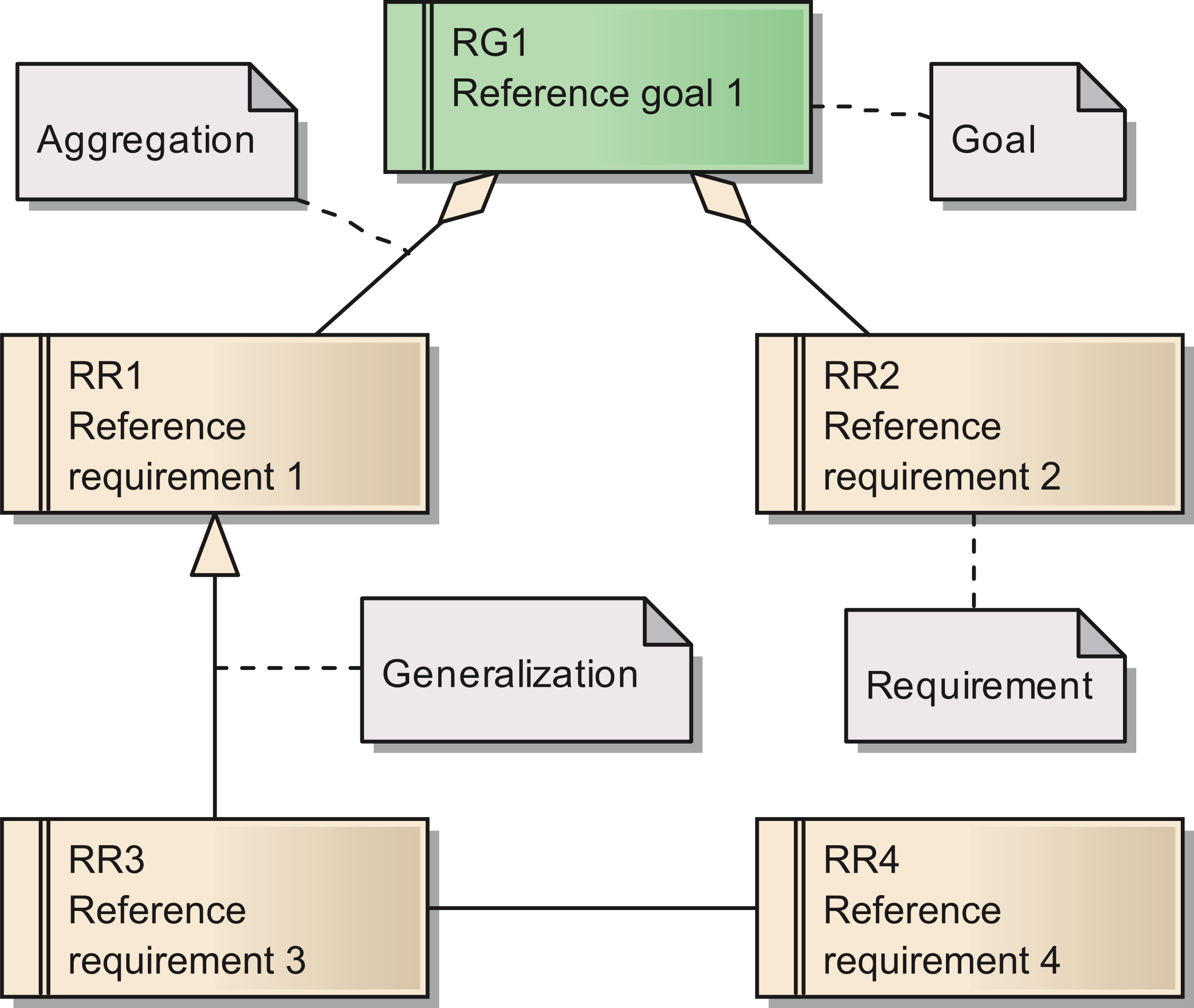

'''"[[Journal:Requirements for data integration platforms in biomedical research networks: A reference model|Requirements for data integration platforms in biomedical research networks: A reference model]]"''' | |||

Biomedical research networks need to integrate research data among their members and with external partners. To support such data sharing activities, an adequate information technology infrastructure is necessary. To facilitate the establishment of such an infrastructure, we developed a reference model for the requirements. The reference model consists of five reference goals and 15 reference requirements. Using the Unified Modeling Language, the goals and requirements are related to each other. In addition, all goals and requirements are described textually in tables. This reference model can be used by research networks as a basis for the resource-efficient acquisition of their project-specific requirements. Furthermore, a concrete instance of the reference model is described for a research network on liver cancer. The reference model is transferred into a requirements model of the specific network. Based on this concrete requirements model, a service-oriented information technology architecture is derived and also described in this paper. ('''[[Journal:Requirements for data integration platforms in biomedical research networks: A reference model|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: December 7–13:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Barker BMCBio2013 14.jpg|220px]]</div> | |||

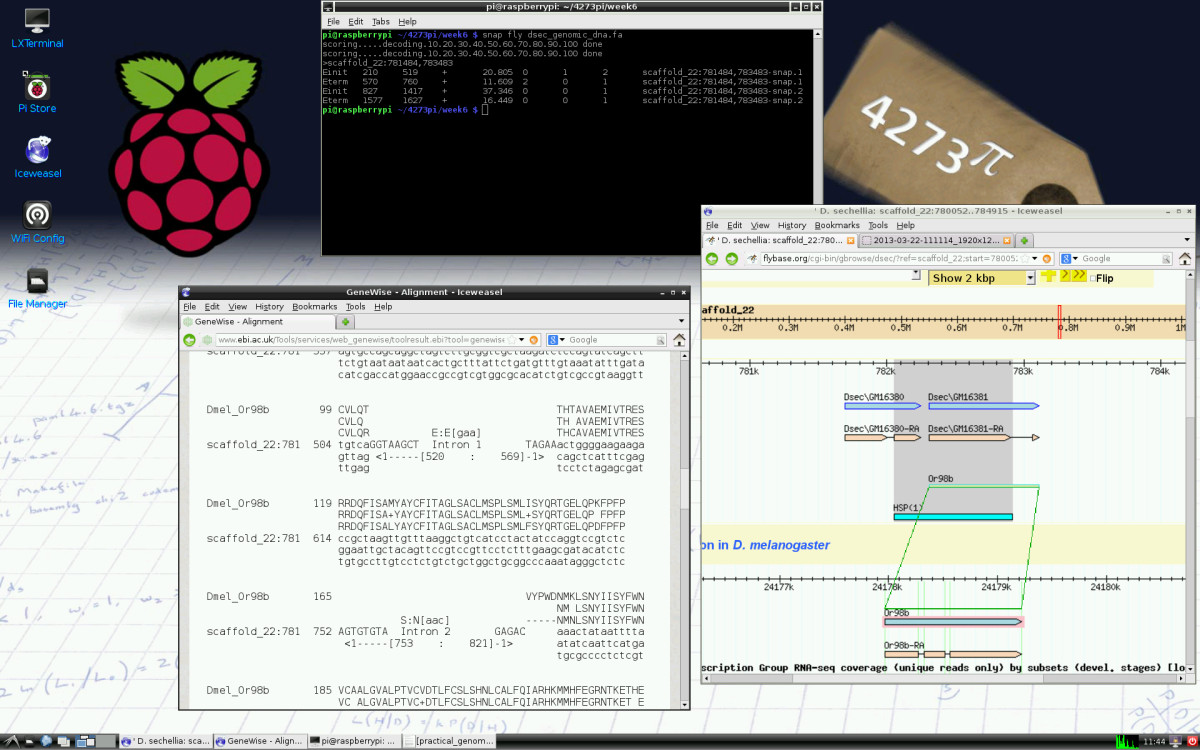

'''"[[Journal:4273π: Bioinformatics education on low cost ARM hardware|4273π: Bioinformatics education on low cost ARM hardware]]"''' | |||

Teaching [[bioinformatics]] at universities is complicated by typical computer classroom settings. As well as running software locally and online, students should gain experience of systems administration. For a future career in biology or bioinformatics, the installation of software is a useful skill. We propose that this may be taught by running the course on GNU/Linux on inexpensive Raspberry Pi computer hardware, for which students may be granted full administrator access. | |||

We release 4273''π'', an operating system image for Raspberry Pi based on Raspbian Linux. It includes minor customisations for classroom use, as well as our Open Access bioinformatics course, ''4273π Bioinformatics for Biologists''. This is based on the final-year undergraduate module BL4273, run on Raspberry Pi computers at the University of St Andrews, Semester 1, academic year 2012–2013. 4273''π'' is a means to teach bioinformatics, including systems administration tasks, to undergraduates at low cost. ('''[[Journal:4273π: Bioinformatics education on low cost ARM hardware|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: November 30–December 6:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

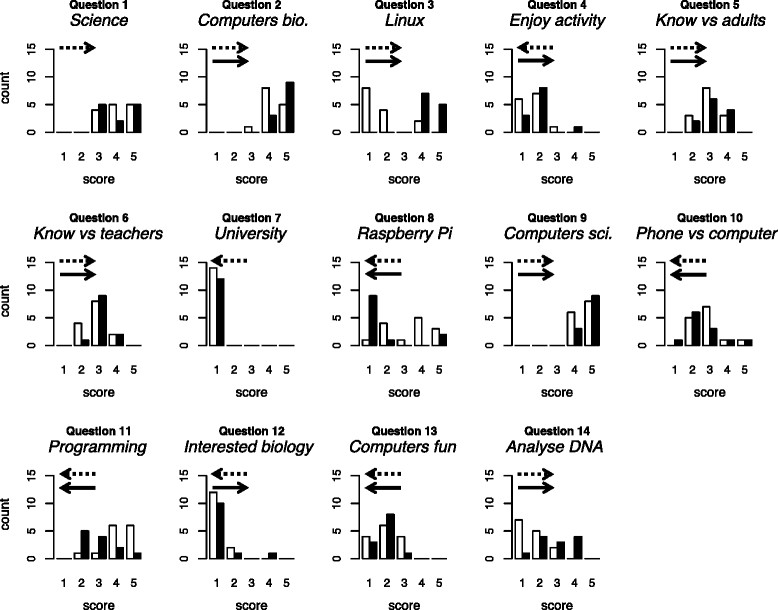

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Barker IntJourSTEMEd2015 2.jpg|220px]]</div> | |||

'''"[[Journal:University-level practical activities in bioinformatics benefit voluntary groups of pupils in the last 2 years of school|University-level practical activities in bioinformatics benefit voluntary groups of pupils in the last 2 years of school]]"''' | |||

[[Bioinformatics]] — the use of computers in biology — is of major and increasing importance to biological sciences and medicine. We conducted a preliminary investigation of the value of bringing practical, university-level bioinformatics education to the school level. We conducted voluntary activities for pupils at two schools in Scotland (years S5 and S6; pupils aged 15–17). We used material originally developed for an optional final-year undergraduate module and now incorporated into 4273''π'', a resource for teaching and learning bioinformatics on the low-cost Raspberry Pi computer. | |||

Pupils’ feedback forms suggested that our activities were beneficial. They provide strong evidence that, during the course of the activity, the following increased: pupils’ perception of the value of computers within biology; their knowledge of the Linux operating system and the Raspberry Pi; their willingness to use computers rather than phones or tablets; their ability to program a computer; and their ability to analyse DNA sequences with a computer. We found no strong evidence of negative effects. ('''[[Journal:University-level practical activities in bioinformatics benefit voluntary groups of pupils in the last 2 years of school|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: November 23–29:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

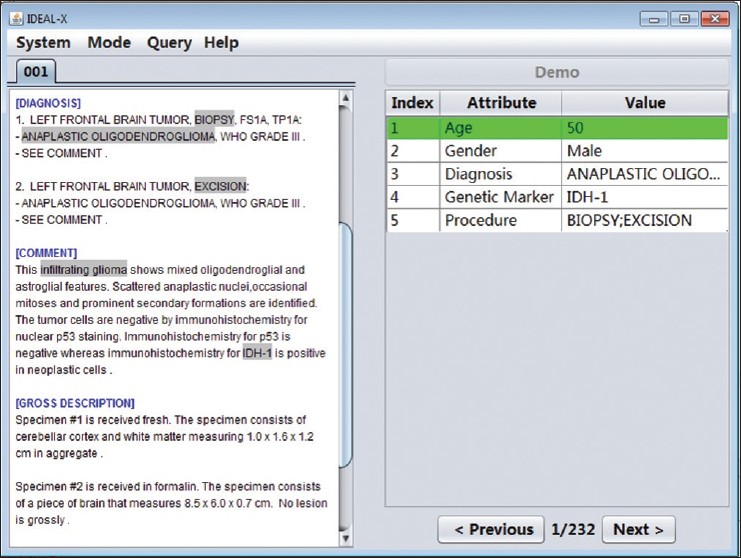

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig2 Zheng JPathInfo2015 6.jpg|220px]]</div> | |||

'''"[[Journal:Support patient search on pathology reports with interactive online learning based data extraction|Support patient search on pathology reports with interactive online learning based data extraction]]"''' | |||

Structural reporting enables semantic understanding and prompt retrieval of clinical findings about patients. While [[LIS feature#Synoptic reporting|synoptic pathology reporting]] provides templates for data entries, information in [[Clinical pathology|pathology]] reports remains primarily in narrative free text form. Extracting data of interest from narrative pathology reports could significantly improve the representation of the information and enable complex structured queries. However, manual extraction is tedious and error-prone, and automated tools are often constructed with a fixed training dataset and not easily adaptable. Our goal is to extract data from pathology reports to support advanced patient search with a highly adaptable semi-automated data extraction system, which can adjust and self-improve by learning from a user's interaction with minimal human effort. | |||

We have developed an online machine learning based information extraction system called IDEAL-X. With its graphical user interface, the system's data extraction engine automatically annotates values for users to review upon loading each report text. The system analyzes users' corrections regarding these annotations with online machine learning, and incrementally enhances and refines the learning model as reports are processed. ('''[[Journal:Support patient search on pathology reports with interactive online learning based data extraction|Full article...]]''')<br /> | |||
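The review-and-correct loop described above is a form of online learning: the model is updated after each report rather than retrained on a fixed dataset. The sketch below illustrates that idea with a simple online perceptron over hypothetical token features (the current token and the previous token); it is not IDEAL-X's actual learning algorithm or feature set.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


class OnlineTokenTagger:
    """Online perceptron deciding whether a token is the value of a target field."""

    def __init__(self) -> None:
        self.weights: Dict[str, float] = defaultdict(float)

    @staticmethod
    def features(tokens: List[str], i: int) -> List[str]:
        # Hypothetical features: the token itself and the token before it.
        prev = tokens[i - 1].lower() if i > 0 else "<BOS>"
        return [f"w={tokens[i].lower()}", f"prev={prev}"]

    def score(self, feats: Iterable[str]) -> float:
        return sum(self.weights.get(f, 0.0) for f in feats)

    def annotate(self, tokens: List[str]) -> List[int]:
        """Indices of tokens to pre-annotate for the user to review."""
        return [i for i in range(len(tokens))
                if self.score(self.features(tokens, i)) > 0]

    def learn_from_review(self, tokens: List[str], accepted: List[int]) -> None:
        """Update weights from the user's corrected annotations for one report."""
        gold = set(accepted)
        for i in range(len(tokens)):
            feats = self.features(tokens, i)
            predicted = self.score(feats) > 0
            actual = i in gold
            if predicted != actual:  # perceptron rule: update only on mistakes
                delta = 1.0 if actual else -1.0
                for f in feats:
                    self.weights[f] += delta
```

For example, after the user marks `"2.5"` as the tumor-size value in `["Tumor", "size", ":", "2.5", "cm"]`, the learned weight on the feature `prev=:` causes the tagger to pre-annotate `"3.1"` in the next report `["Tumor", "size", ":", "3.1", "cm"]`, so each correction reduces the reviewing effort for later reports.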

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: November 16–22:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

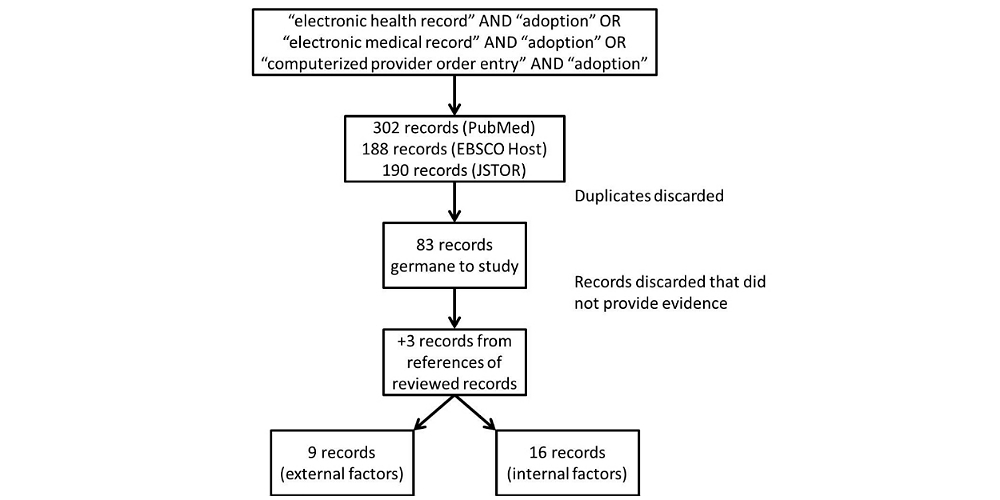

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Kruse JMIRMedInfo2014 2-1.jpg|220px]]</div> | |||

'''"[[Journal:Factors associated with adoption of health information technology: A conceptual model based on a systematic review|Factors associated with adoption of health information technology: A conceptual model based on a systematic review]]"''' | |||

The purpose of this systematic review is to identify the full spectrum of both internal organizational and external environmental factors associated with the adoption of [[health information technology]] (HIT), specifically the EHR. The result is a conceptual model that is commensurate with the complexity of the health care sector. We performed a systematic literature search in PubMed (restricted to English), EBSCO Host, and Google Scholar for both empirical studies and theory-based writing from 1993 to 2013 that demonstrated an association between influential factors and three modes of HIT: EHR, [[electronic medical record]] (EMR), and computerized provider order entry (CPOE). We also looked at published books on organizational theories. We took notes and recorded trends in adoption factors. These factors were grouped as adoption factors associated with various versions of EHR adoption. The resulting conceptual model summarizes the diversity of independent variables (IVs) and dependent variables (DVs) used in articles, editorials, and books, as well as in quantitative and qualitative studies (n=83). ('''[[Journal:Factors associated with adoption of health information technology: A conceptual model based on a systematic review|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: November 2–15:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

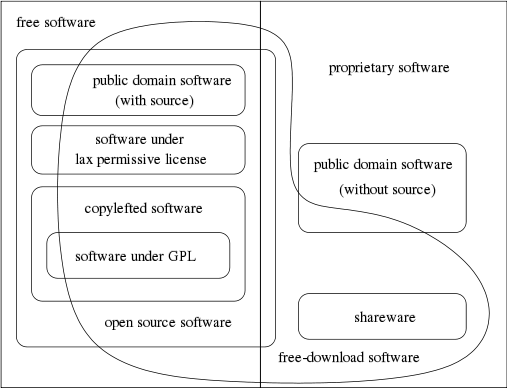

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1_Joyce_2015.png|220px]]</div> | |||

'''"[[Journal:Generalized procedure for screening free software and open-source software applications|Generalized procedure for screening free software and open-source software applications]]"''' | |||

Free software and [[:Category:Open-source software|open-source software projects]] have become a popular alternative tool in both scientific research and other fields. However, selecting the optimal application for use in a project can be a major task in itself, as the list of potential applications must first be identified and screened to determine promising candidates before an in-depth analysis of systems can be performed. To simplify this process, we have initiated a project to generate a library of in-depth reviews of free software and open-source software applications. Before beginning this project, we reviewed the evaluation methods available in the literature. As no single method stood out, we synthesized a general procedure, using a variety of available sources, for screening a designated class of applications to determine which ones to evaluate in more depth. In this paper, we examine a number of currently published processes to identify their strengths and weaknesses. By selecting from these processes, we synthesize a proposed screening procedure to triage available systems and identify those most promising for pursuit. ('''[[Journal:Generalized procedure for screening free software and open-source software applications|Full article...]]''')<br /> | |||
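A screening step of this kind amounts to discarding candidates that fail mandatory criteria and then ranking the survivors to choose which ones merit an in-depth review. The sketch below illustrates that triage in Python; the criteria names, weights, rating scale, and candidate data are hypothetical and are not the paper's actual procedure.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Candidate:
    name: str
    features: Set[str]  # capabilities the application offers
    scores: Dict[str, float] = field(default_factory=dict)  # ratings, e.g. 0 to 5


def screen(candidates: List[Candidate],
           required: Set[str],
           weights: Dict[str, float],
           top_n: int = 3) -> List[str]:
    """Drop candidates missing any required feature, then rank by weighted score."""
    survivors = [c for c in candidates if required <= c.features]

    def weighted(c: Candidate) -> float:
        # Criteria absent from a candidate's scores count as 0.
        return sum(w * c.scores.get(k, 0.0) for k, w in weights.items())

    ranked = sorted(survivors, key=weighted, reverse=True)
    return [c.name for c in ranked[:top_n]]
```

Separating hard requirements from weighted preferences keeps the cheap elimination step first, so only the shortlist returned by `screen` would go on to the more expensive in-depth evaluation.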

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: October 26–November 1:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

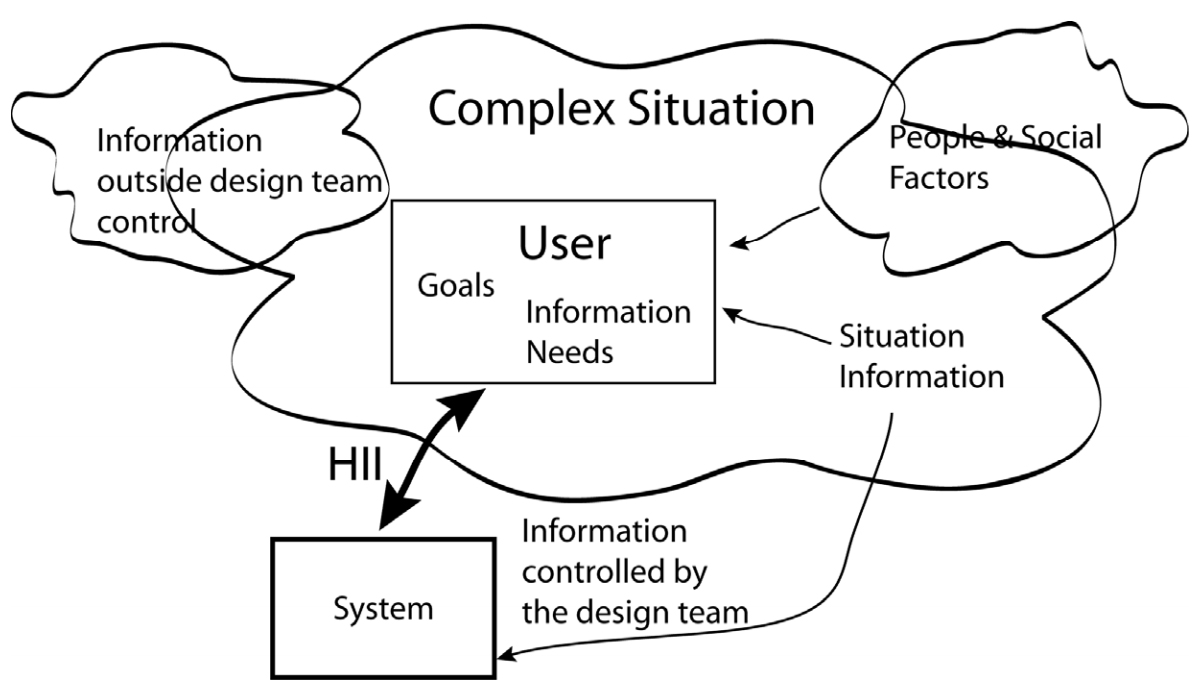

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Albers Informatics2015 2-2.jpg|220px]]</div> | |||

'''"[[Journal:Human–information interaction with complex information for decision-making|Human–information interaction with complex information for decision-making]]"''' | |||

Human–information interaction (HII) for simple [[information]] and for complex information is different because people's goals and information needs differ between the two cases. With complex information, comprehension comes from understanding the relationships and interactions within the information and factors outside of a design team's control. Yet a design team must consider all of these within an HII design in order to maximize the communication potential. This paper considers how simple and complex information require different design strategies and how those strategies differ. ('''[[Journal:Human–information interaction with complex information for decision-making|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: October 19–25:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig2 Celi JMIRMedInformatics2014 2-2.jpg|220px]]</div> | |||

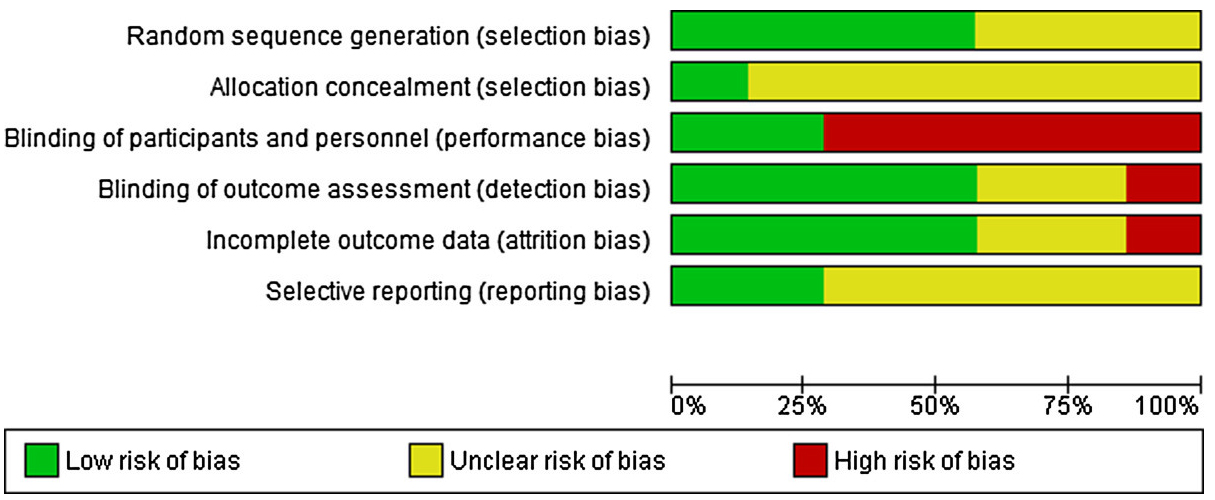

'''"[[Journal:Making big data useful for health care: A summary of the inaugural MIT Critical Data Conference|Making big data useful for health care: A summary of the inaugural MIT Critical Data Conference]]"''' | |||

With growing concerns that big data will only augment the problem of unreliable research, the Laboratory of Computational Physiology at the Massachusetts Institute of Technology organized the Critical Data Conference in January 2014. Thought leaders from academia, government, and industry across disciplines (including clinical medicine, computer science, public health, [[informatics]], biomedical research, health technology, statistics, and epidemiology) gathered and discussed the pitfalls and challenges of big data in health care. The key message from the conference is that the value of large amounts of data hinges on the ability of researchers to share data, methodologies, and findings in an open setting. If empirical value is to be derived from the analysis of retrospective data, groups must continuously work together on similar problems to create more effective peer review. This will lead to improvement in methodology and quality, with each iteration of analysis yielding more reliable results. ('''[[Journal:Making big data useful for health care: A summary of the inaugural MIT Critical Data Conference|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: October 12–18:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

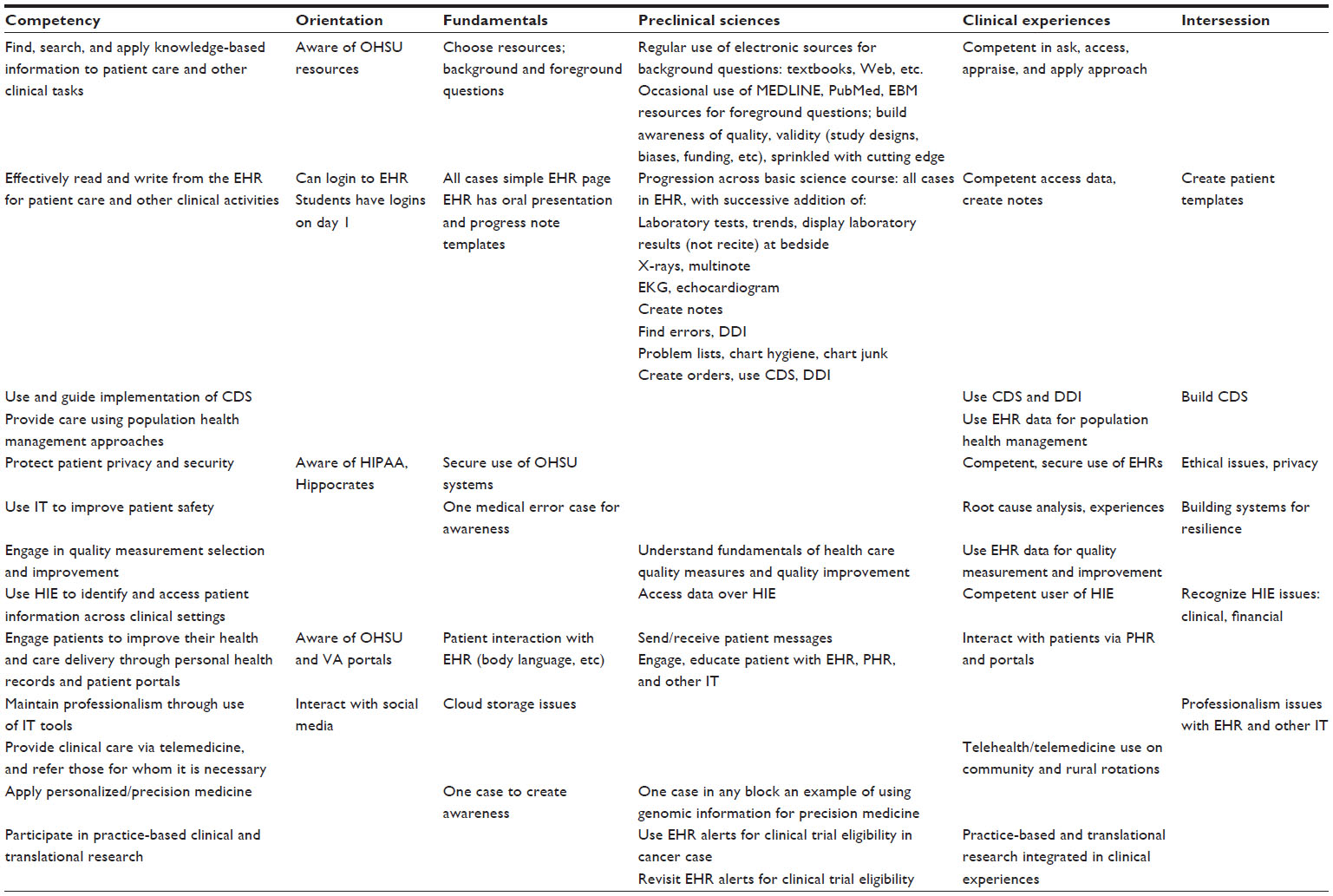

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Tab2 Hersh AdvancesMedEdPrac2014 2014-5.jpg|220px]]</div> | |||

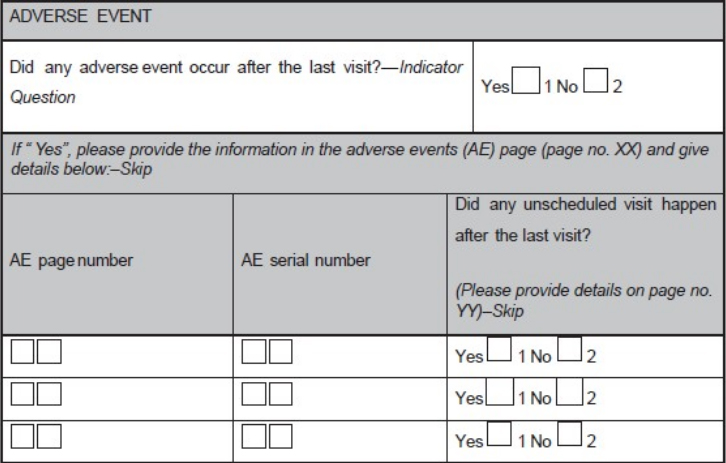

'''"[[Journal:Beyond information retrieval and electronic health record use: Competencies in clinical informatics for medical education|Beyond information retrieval and electronic health record use: Competencies in clinical informatics for medical education]]"''' | |||

Physicians in the 21st century will increasingly interact in diverse ways with information systems, requiring competence in many aspects of [[clinical informatics]]. In recent years, many medical school curricula have added content in [[information]] retrieval (search) and basic use of the [[electronic health record]]. However, this omits the growing number of other ways that physicians interact with information, including activities such as [[Clinical decision support system|clinical decision support]], quality measurement and improvement, personal health records, telemedicine, and personalized medicine. We describe a process whereby six faculty members representing different perspectives came together to define competencies in clinical informatics for a curriculum transformation process occurring at Oregon Health & Science University. From the broad competencies, we also developed specific learning objectives and milestones, an implementation schedule, and mapping to general competency domains. We present our work to encourage debate and refinement as well as facilitate evaluation in this area. ('''[[Journal:Beyond information retrieval and electronic health record use: Competencies in clinical informatics for medical education|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: October 5–11:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

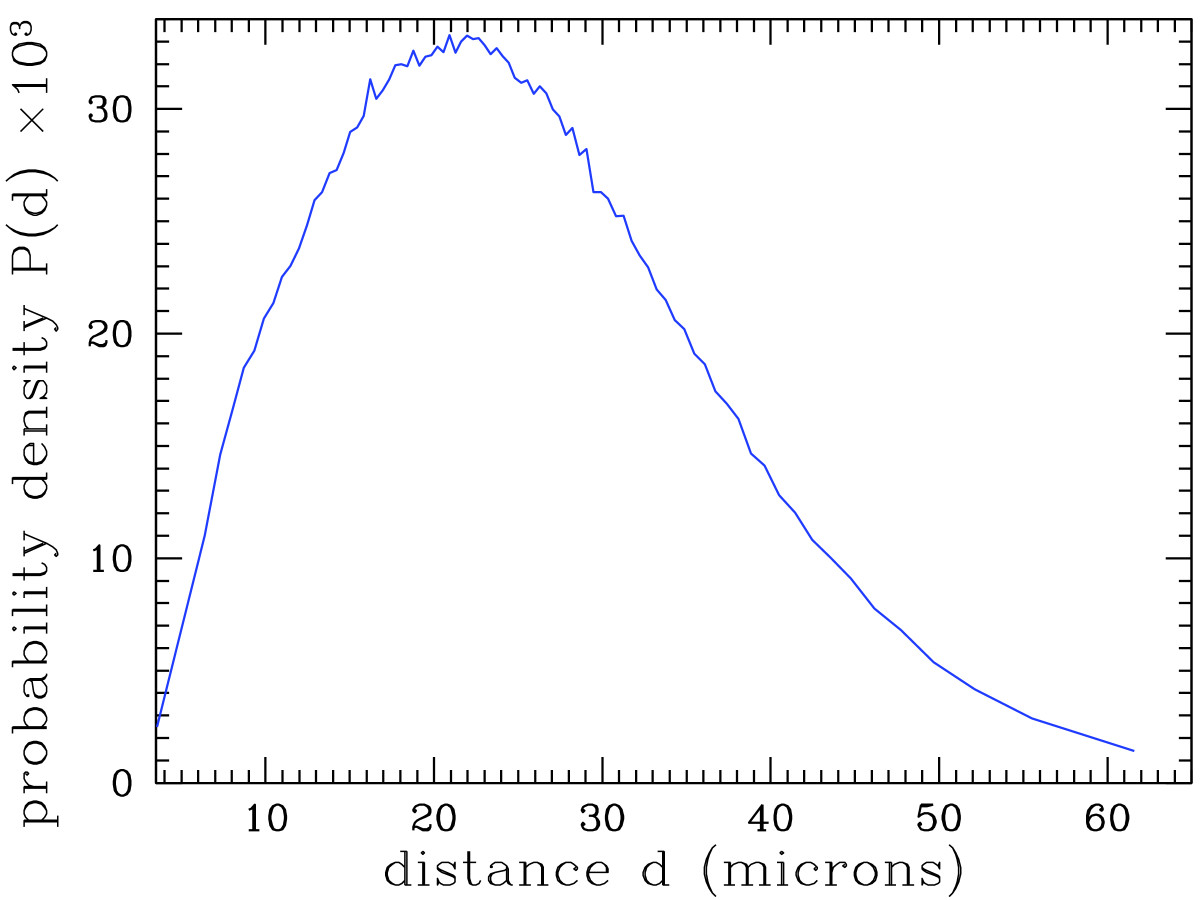

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig5 Deroulers DiagnosticPath2013 8.jpg|220px]]</div> | |||

'''"[[Journal:Analyzing huge pathology images with open source software|Analyzing huge pathology images with open source software]]"''' | |||

Background: Digital pathology images are increasingly used both for diagnosis and research, because slide scanners are nowadays broadly available and because the quantitative study of these images yields new insights in systems biology. However, such virtual slides pose a technical challenge, since the images often occupy several gigabytes and cannot be fully opened in a computer’s memory. Moreover, there is no standard format. Therefore, most common open source tools such as ImageJ fail to handle them, and the others require expensive hardware while still being prohibitively slow. | |||

Results: We have developed several cross-platform open source software tools to overcome these limitations. The NDPITools provide a way to transform microscopy images initially in the loosely supported NDPI format into one or several standard TIFF files, and to create mosaics (division of huge images into small ones, with or without overlap) in various TIFF and JPEG formats. They can be driven through ImageJ plugins. The LargeTIFFTools achieve similar functionality for huge TIFF images which do not fit into RAM. We test the performance of these tools on several digital slides and compare them, when applicable, to standard software. A statistical study of the cells in a tissue sample from an oligodendroglioma was performed on an average laptop computer to demonstrate the efficiency of the tools. ('''[[Journal:Analyzing huge pathology images with open source software|Full article...]]''')<br /> | |||
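The mosaic operation described above (dividing a huge image into small ones, with or without overlap) comes down to computing tile rectangles that cover the whole image. A minimal sketch in Python follows; the function names and parameters are illustrative and are not the NDPITools or LargeTIFFTools command-line interface.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def _starts(length: int, step: int, overlap: int) -> range:
    # A new tile starts only while it still reaches past the previous tile's
    # end (x + overlap < length); this avoids tiles that add no new pixels.
    return range(0, max(length - overlap, 1), step)


def tile_boxes(width: int, height: int, tile: int, overlap: int = 0) -> List[Box]:
    """Split a width x height image into tile x tile boxes.

    Neighbouring tiles share `overlap` pixels; tiles on the right and bottom
    edges are clipped to the image, so they may be smaller than `tile`.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    if tile <= 0 or not 0 <= overlap < tile:
        raise ValueError("need tile > 0 and 0 <= overlap < tile")
    step = tile - overlap
    return [
        (x, y, min(tile, width - x), min(tile, height - y))
        for y in _starts(height, step, overlap)
        for x in _starts(width, step, overlap)
    ]
```

For example, a 100,000 × 80,000-pixel slide cut into 1024-pixel tiles without overlap yields 98 × 79 = 7,742 tiles, each small enough to be read and processed independently on an ordinary laptop. Overlap is useful when objects such as cells may straddle a tile boundary.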

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: September 28–October 4:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig2 Stocker BMCBioinformatics2009 10.jpg|220px]]</div> | |||

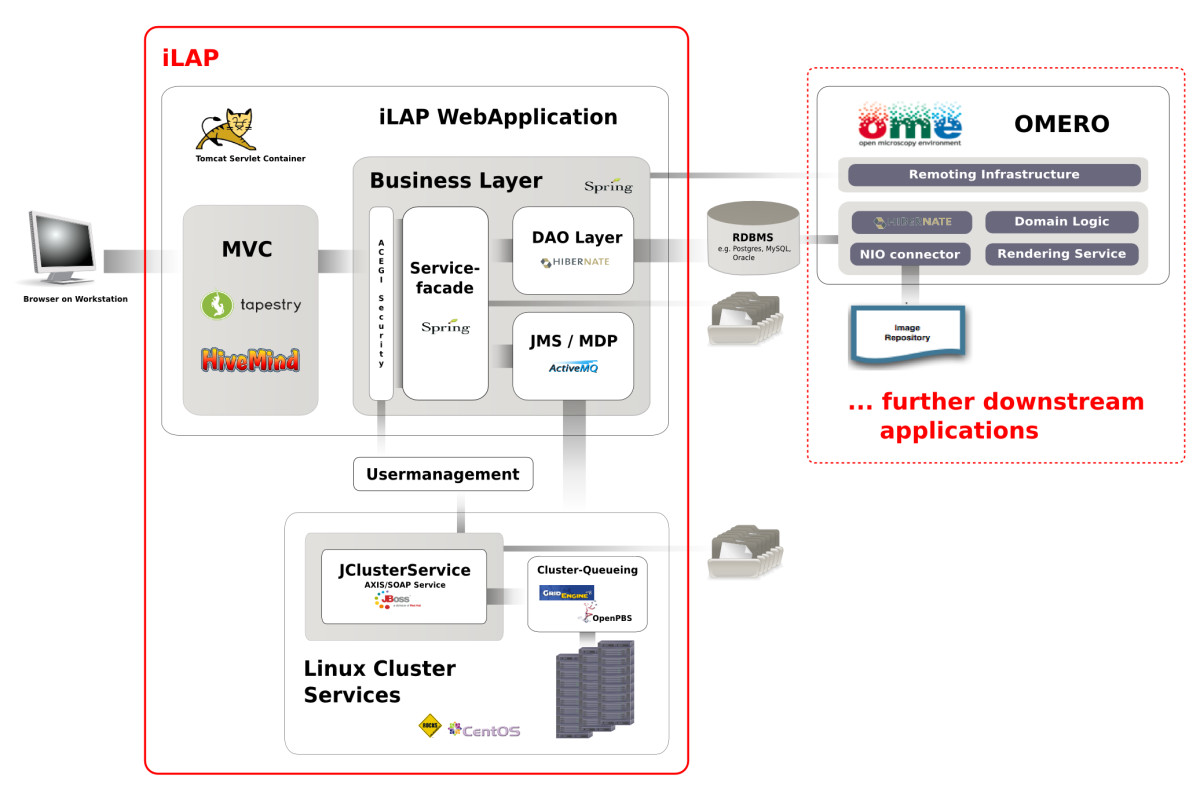

'''"[[Journal:iLAP: A workflow-driven software for experimental protocol development, data acquisition and analysis|iLAP: A workflow-driven software for experimental protocol development, data acquisition and analysis]]"''' | |||

Background: In recent years, the genome biology community has expended considerable effort to confront the challenges of managing heterogeneous data in a structured and organized way and developed [[laboratory information management system]]s (LIMS) for both raw and processed data. On the other hand, [[Electronic laboratory notebook|electronic notebooks]] were developed to record and manage scientific data and to facilitate data sharing. Software that enables both the management of large datasets and the digital recording of [[laboratory]] procedures would serve a real need in laboratories using medium- and high-throughput techniques. | |||

Results: We have developed iLAP (Laboratory data management, Analysis, and Protocol development), a workflow-driven information management system specifically designed to create and manage experimental protocols, and to analyze and share laboratory data. The system combines experimental protocol development, wizard-based data acquisition, and high-throughput data analysis into a single, integrated system. We demonstrate the power and the flexibility of the platform using a microscopy case study based on a combinatorial multiple fluorescence in situ hybridization (m-FISH) protocol and 3D-image reconstruction. ('''[[Journal:iLAP: A workflow-driven software for experimental protocol development, data acquisition and analysis|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: September 21–27:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Pic1 Zieth electronic2014 8-2.jpg|220px]]</div> | |||

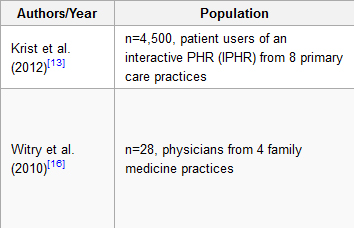

'''"[[Journal:The evolution, use, and effects of integrated personal health records: A narrative review|The evolution, use, and effects of integrated personal health records: A narrative review]]"''' | |||

Objective: To present a summarized literature review of the evolution, use, and effects of Personal Health Records (PHRs). | |||

Methods: Medline and PubMed were searched for ‘personal health records’. Seven hundred thirty-three references were initially screened, resulting in 230 studies selected as relevant based on initial title and abstract review. After further review, a total of 52 articles provided relevant information and were included in this paper. These articles were reviewed by one author and grouped into the following categories: PHR evolution and adoption, patient user attitudes toward PHRs, patient reported barriers to use, and the role of PHRs in self-management. | |||

Results: Eleven papers described evolution and adoption, 17 papers described PHR user attitudes, 10 papers described barriers to use, and 11 papers described PHR use in self-management. Three papers were not grouped into a category but were used to inform the Discussion. PHRs have evolved from patient-maintained paper health records to provider-linked [[electronic health record]]s. Patients report enthusiasm for the potential of modern PHRs, yet few patients actually use an electronic PHR. Low patient adoption of PHRs is associated with poor interface design and low health and computer literacy on the part of patient users. ('''[[Journal:The evolution, use, and effects of integrated personal health records: A narrative review|Full article...]]''')<br /> | |||
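As a consistency check, the counts reported in the Results account for all 52 included articles: the four category counts plus the three uncategorized papers give

<math>11 + 17 + 10 + 11 + 3 = 52</math>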

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: August 31–September 6:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | <div style="padding:0.4em 1em 0.3em 1em;"> | ||

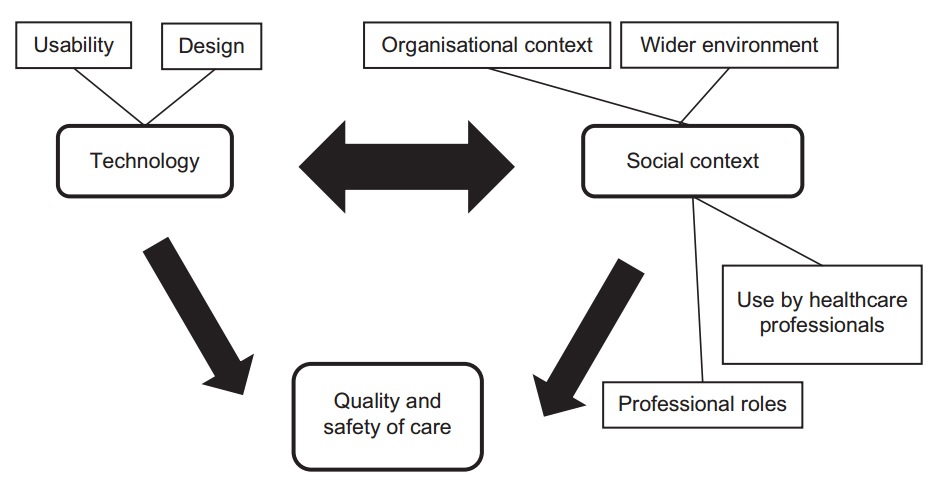

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Cresswell InformaticsPC2014 21-2.jpg|220px]]</div> | <div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Cresswell InformaticsPC2014 21-2.jpg|220px]]</div> | ||

| Line 92: | Line 222: | ||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: July 6–12:</h2> | |<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: July 6–12:</h2> | ||

<div style="padding:0.4em 1em 0.3em 1em;"> | <div style="padding:0.4em 1em 0.3em 1em;"> | ||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File: | <div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Healthcare Apps for Android Tablets.jpg|220px]]</div> | ||

'''[[Health information technology]] (HIT)''' is the application of "hardware and software in an effort to manage and manipulate health data and information." HIT acts as a framework for the comprehensive management of health information originating from consumers, providers, governments, and insurers in order to improve the overall state of health care. Among those improvements, the Congressional Budget Office (CBO) of the United States believes HIT can reduce or eliminate errors from medical transcription, reduce the number of diagnostic tests that get duplicated, and improve patient outcomes and service efficiency among other things. | '''[[Health information technology]] (HIT)''' is the application of "hardware and software in an effort to manage and manipulate health data and information." HIT acts as a framework for the comprehensive management of health information originating from consumers, providers, governments, and insurers in order to improve the overall state of health care. Among those improvements, the Congressional Budget Office (CBO) of the United States believes HIT can reduce or eliminate errors from medical transcription, reduce the number of diagnostic tests that get duplicated, and improve patient outcomes and service efficiency among other things. | ||

| Line 111: | Line 241: | ||

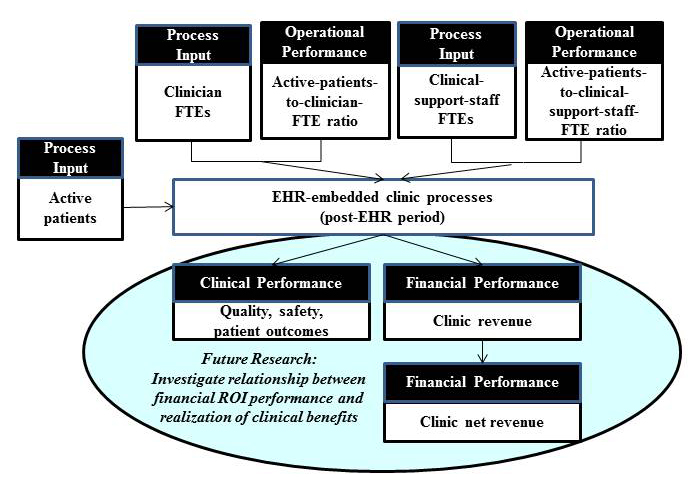

A '''[[medical practice management system]]''' (also '''practice management system''' or '''PMS''') is a software-based information and enterprise management tool for physician offices that offers a set of key features that support an individual or group medical practice's operations. Those key features include — but are not limited to — appointment scheduling, patient registration, procedure posting, insurance billing, patient billing, payment posting, data and file maintenance, and reporting. | A '''[[medical practice management system]]''' (also '''practice management system''' or '''PMS''') is a software-based information and enterprise management tool for physician offices that offers a set of key features that support an individual or group medical practice's operations. Those key features include — but are not limited to — appointment scheduling, patient registration, procedure posting, insurance billing, patient billing, payment posting, data and file maintenance, and reporting. | ||

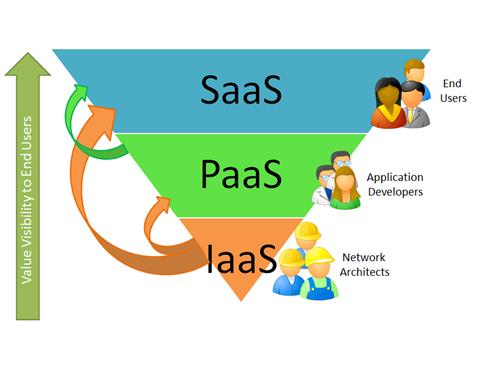

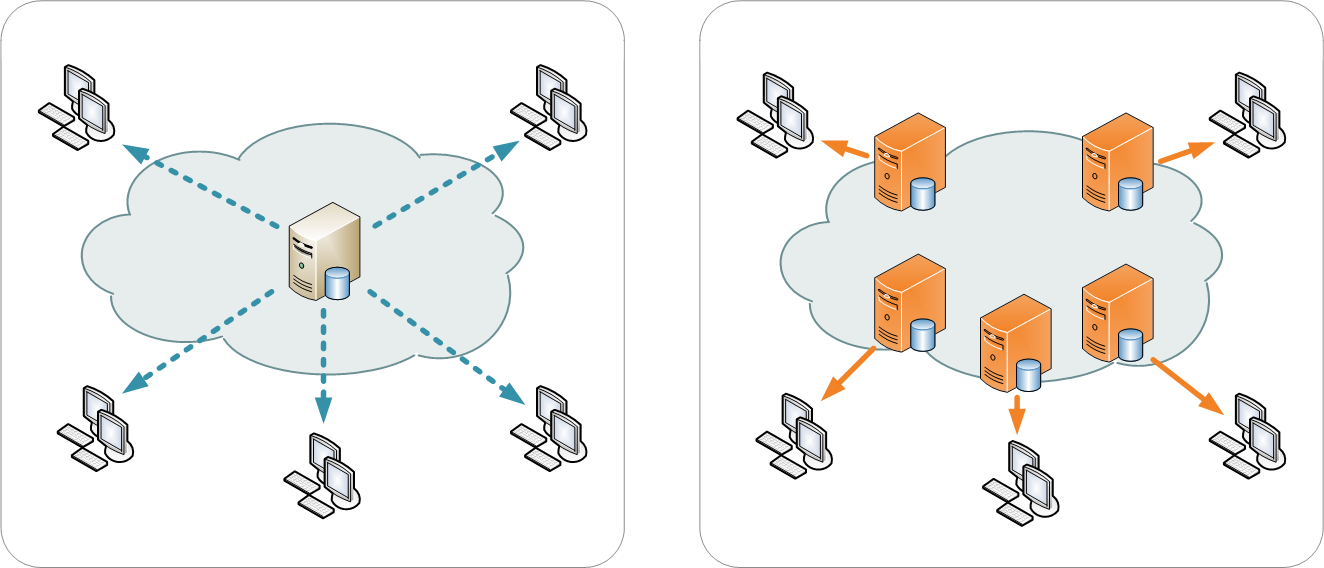

The PMS has traditionally been a stand-alone application, installed on computers in the physician office. But like [[laboratory information management | The PMS has traditionally been a stand-alone application, installed on computers in the physician office. But like [[laboratory information management system]]s, [[hospital information system]]s, and other informatics software, trends have shifted to both web-based and cloud-based access to PMS applications. Cloud-based PMSs have been around at least since 2011, and they have become more attractive for several reasons, including the ease of letting the vendor maintain and update the technology from their end, the need for less hardware, and the convenience of accessing the system from anywhere. ('''[[Medical practice management system|Full article...]]''')<br /> | ||

</div> | </div> | ||

|- | |- | ||

Latest revision as of 18:55, 12 April 2024


If you're looking for other "Article of the Week" archives: 2014 - 2015 - 2016 - 2017 - 2018 - 2019 - 2020 - 2021 - 2022 - 2023 - 2024

Featured article of the week archive - 2015

Welcome to the LIMSwiki 2015 archive for the Featured Article of the Week.

Featured article of the week: December 28–January 3:

"Personalized Oncology Suite: Integrating next-generation sequencing data and whole-slide bioimages"

Cancer immunotherapy has recently entered a remarkable renaissance phase with the approval of several agents for treatment. Cancer treatment platforms have demonstrated profound tumor regressions including complete cure in patients with metastatic cancer. Moreover, technological advances in next-generation sequencing (NGS) as well as the development of devices for scanning whole-slide bioimages from tissue sections and image analysis software for quantitation of tumor-infiltrating lymphocytes (TILs) allow, for the first time, the development of personalized cancer immunotherapies that target patient-specific mutations. However, there is currently no bioinformatics solution that supports the integration of these heterogeneous datasets.

We have developed a bioinformatics platform – Personalized Oncology Suite (POS) – that integrates clinical data, NGS data and whole-slide bioimages from tissue sections. POS is a web-based platform that is scalable, flexible and expandable. The underlying database is based on a data warehouse schema, which is used to integrate information from different sources. POS stores clinical data, genomic data (SNPs and INDELs identified from NGS analysis), and scanned whole-slide images. (Full article...)
