Journal:Requirements for data integration platforms in biomedical research networks: A reference model

| Full article title | Requirements for data integration platforms in biomedical research networks: A reference model |

|---|---|

| Journal | PeerJ Computer Science |

| Author(s) | Ganzinger, Matthias; Knaup, Petra |

| Author affiliation(s) | Heidelberg University |

| Primary contact | Email: matthias.ganzinger@med.uni-heidelberg.de |

| Editors | Juan, Hsueh-Fen |

| Year published | 2015 |

| Volume and issue | 3 |

| Page(s) | e755 |

| DOI | 10.7717/peerj.755 |

| ISSN | 2376-5992 |

| Distribution license | Creative Commons Attribution 4.0 International |

| Website | https://peerj.com/articles/755/ |

| Download | https://peerj.com/articles/755.pdf (PDF) |

Abstract

Biomedical research networks need to integrate research data among their members and with external partners. To support such data sharing activities, an adequate information technology infrastructure is necessary. To facilitate the establishment of such an infrastructure, we developed a reference model for the requirements. The reference model consists of five reference goals and 15 reference requirements. Using the Unified Modeling Language, the goals and requirements are set into relation to each other. In addition, all goals and requirements are described textually in tables. This reference model can be used by research networks as a basis for a resource efficient acquisition of their project specific requirements. Furthermore, a concrete instance of the reference model is described for a research network on liver cancer. The reference model is transferred into a requirements model of the specific network. Based on this concrete requirements model, a service-oriented information technology architecture is derived and also described in this paper.

Keywords: Research network, Reference model, Data integration, Biomedical informatics, Service-oriented architecture

Introduction

Current biomedical research is supported by modern biotechnological methods producing vast amounts of data (Frey, Maojo & Mitchell, 2007[1]; Baker, 2010[2]). In order to get a comprehensive picture of the physiology and pathogenic processes of diseases, many facets of biological mechanisms need to be examined. Contemporary research, e.g., investigating cancer, is a complex endeavor that can be conducted most successfully when researchers of multiple disciplines cooperate and draw conclusions from comprehensive scientific data sets (Welsh, Jirotka & Gavaghan, 2006[3]; Mathew et al., 2007[4]). As a frequent measure to support cooperation, research networks sharing common resources are established.

To generate added value from such a network, all available scientific and clinical data should be combined to facilitate a new, comprehensive perspective. This requires provision of adequate information technology (IT), which is a challenge on all levels of biomedical research. For example, research networks inevitably depend on an IT infrastructure for sharing data and findings in order to enable joint analyses. Data generated by biotechnological devices can only be evaluated thoroughly by applying biostatistical methods with IT tools.

However, data structures are often heterogeneous, resulting in the need for a data integration process. This process involves the harmonization of data structures by defining appropriate metadata (Cimino, 1998[5]). Depending on the specific needs and data structures of the research network, often a non-standard IT platform needs to be developed to meet the specific requirements. An important requirement might be the protection of data in terms of security and privacy, especially when patient data are involved.

In the German research network SFB/TRR77 — Liver Cancer: From Molecular Pathogenesis to Targeted Therapies it was our task to explore the most appropriate IT-architecture for supporting networked research (Woll, Manns & Schirmacher, 2013[6]). The research network consists of 22 projects sharing common resources and research data. To provide this network with a data integration platform we implemented a service-oriented architecture (SOA) (Taylor et al., 2004[7]; Papazoglou et al., 2008[8]; Wei & Blake, 2010[9]; Bosin, Dessì & Pes, 2011[10]). The IT system is based on the cancer Common Ontologic Representation Environment Software Development Kit (caCORE SDK) components of the cancer Biomedical Informatics Grid (caBIG) (Komatsoulis et al., 2008[11]; Kunz, Lin & Frey, 2009[12]). The resulting system is called pelican (platform enabling liver cancer networked research) (Ganzinger et al., 2011[13]). Transfer of these data sharing concepts to other networks investigating different disease areas is possible.

We consider our research network a typical example of a whole class of biomedical research networks. To support this kind of project, we provide a framework for the development of data integration platforms. Specifically, we strive for the following two objectives:

Objective 1: Provide a reference model of requirements of biomedical research networks regarding an IT platform for sharing and analyzing data.

Objective 2: Design a SOA of an IT platform for our research network on liver cancer. It should implement the reference model for requirements. While this SOA is specific to this project, parts can be reused for similar projects.

Methods

For the design of a data integration platform it is important to first assess the requirements of the system’s intended users. To support this task, we developed a reference model for requirements. A reference model is a generic model which is valid not only for a specific research network but for a class of such organizations. For the development of the reference model, we used the research network on liver cancer as primary source. These requirements were consolidated and abstracted to get a generic model that can be applied to other research networks.

In the first step, a general understanding of the network’s aims and tasks was acquired by analyzing written descriptions of the participating projects. In addition, questionnaires were sent to the principal investigators to capture the data types and data formats used within the projects. In the second step, projects were visited and their research subjects and processes were captured by interviewing project members.

For the reference model, we use the term goal to describe the highest level of requirements. This is in accordance with ISO/IEC/IEEE 24765, where goal is defined as “an intended outcome” (ISO/IEC/IEEE, 2011[14]). In contrast, requirement is defined as “a condition or capability needed by a user to solve a problem or achieve an objective” (ISO/IEC/IEEE, 2011[14]). In our reference model, each requirement was related to a goal, either directly or indirectly. Requirements were then mapped to concrete functions in the resulting data integration system. On the other hand, goals were used to structure requirements and usually do not lead to a specific function of the system.

To provide a more detailed characterization of the goals, we provide a standardized table for each of them which covers the reference number, name, description and weight of the goal. Table 1 shows the structure of such a table. The complete set of tables for all the goals is available in Table S1.
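The four documented fields can be pictured as a small record type. The following is only a sketch: the class name `ModelEntry`, the free-text type of `weight`, and the sample weight value are assumptions made for illustration, since the actual weighting scale is given in Table 1 and Table S1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEntry:
    """One documented goal or requirement with the four fields named in the text."""
    reference_number: str  # e.g. "RG1" for a goal, "RR8" for a requirement
    name: str
    description: str
    weight: str            # assumption: free text; the real scale is not reproduced here

    @property
    def is_goal(self) -> bool:
        # Assumption: the "RG" prefix marks reference goals, "RR" reference requirements.
        return self.reference_number.startswith("RG")


# Placeholder weight value, chosen only to make the example complete.
rg1 = ModelEntry("RG1", "Conduct research project",
                 "Fulfill the intended research tasks of the network.", "placeholder")
rr1 = ModelEntry("RR1", "Create data",
                 "Generate data needed by the research network.", "placeholder")
print(rg1.is_goal, rr1.is_goal)
```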


Table 1. Schema for documenting reference requirements and goals;

Cite this object using this DOI: 10.7717/peerj.755/table-1

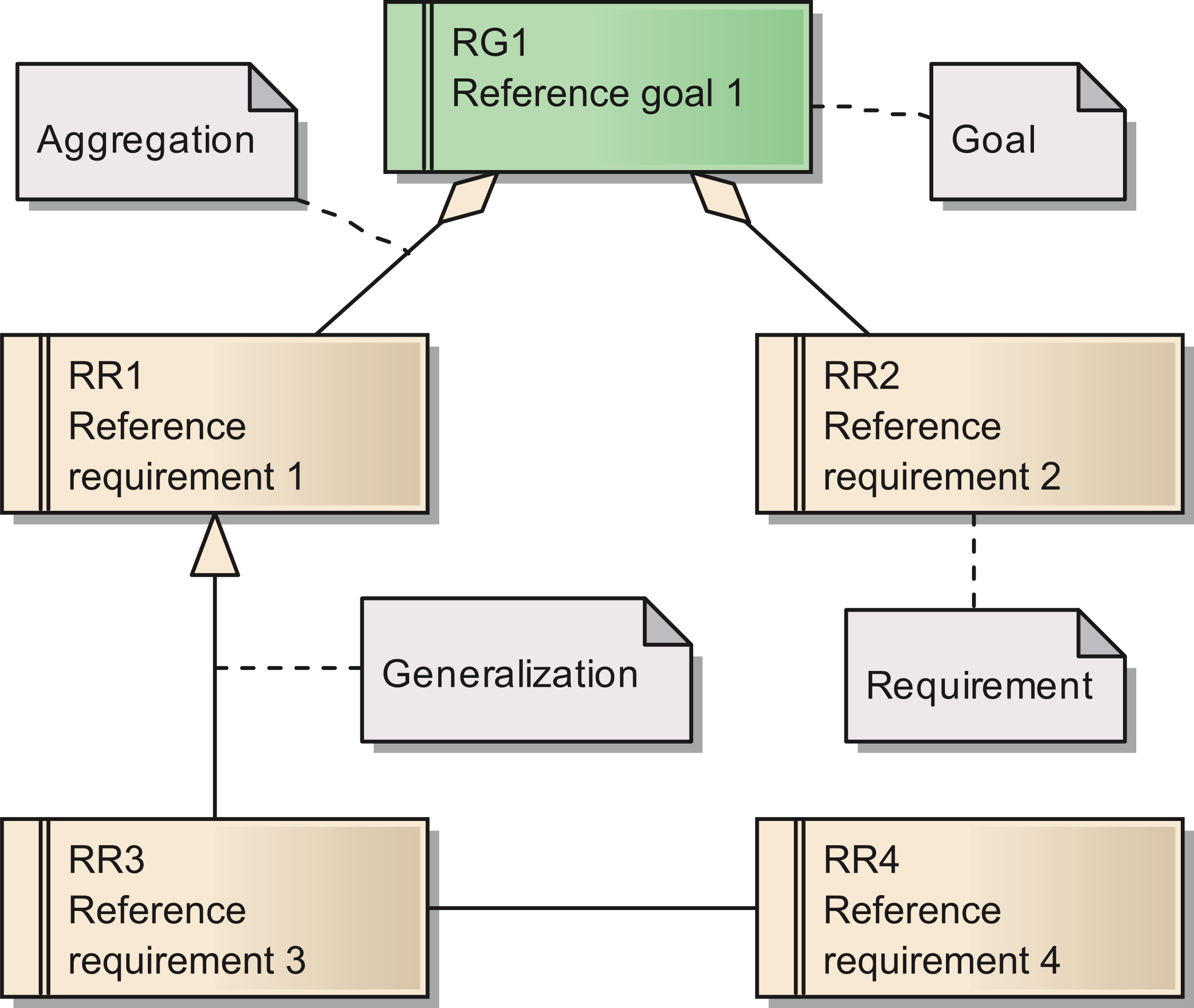

The requirements are documented in the same way as the goals. Figure 1 shows a Unified Modeling Language (UML) diagram with all elements used for describing both goals and their subordinated requirements (Object Management Group, 2012[15]). As for the goals, we provide a set of tables with more detailed descriptions for all requirements in Table S1. In total, we identified 15 requirements for the reference model.

Figure 1: Overview of the UML elements used in requirements diagrams;

Cite this object using this DOI: 10.7717/peerj.755/fig-1

The instantiation of the reference model for requirements to meet the needs of a specific research network provides the basis for the architecture of the desired data integration and its subsequent implementation. We provide a concrete instance of a reference model as well as the resulting IT-architecture in this manuscript.

For the research described in this paper, ethics approval was not deemed necessary. This work involved no human subjects in the sense of medical research, as e.g., covered by the Declaration of Helsinki (World Medical Association, 2013[16]). At no time were patients included for survey or interview. Data were only acquired from scientists regarding their work and data, but no personal or patient related data were gathered. Participants were not required to participate in this study; they consented by returning the questionnaire. No research was conducted outside Germany, the authors’ country of residence. However, in other countries the approval of an institutional review board or other authority might be necessary to apply the reference model.

Results

In this section we describe the reference model for requirements. Then, we show how a concrete model for requirements and an IT-architecture is derived from this reference model. The reference model for requirements is an abstract model and thus a universally usable artefact. It is mapped in several steps to the network specific system architecture.

Reference model

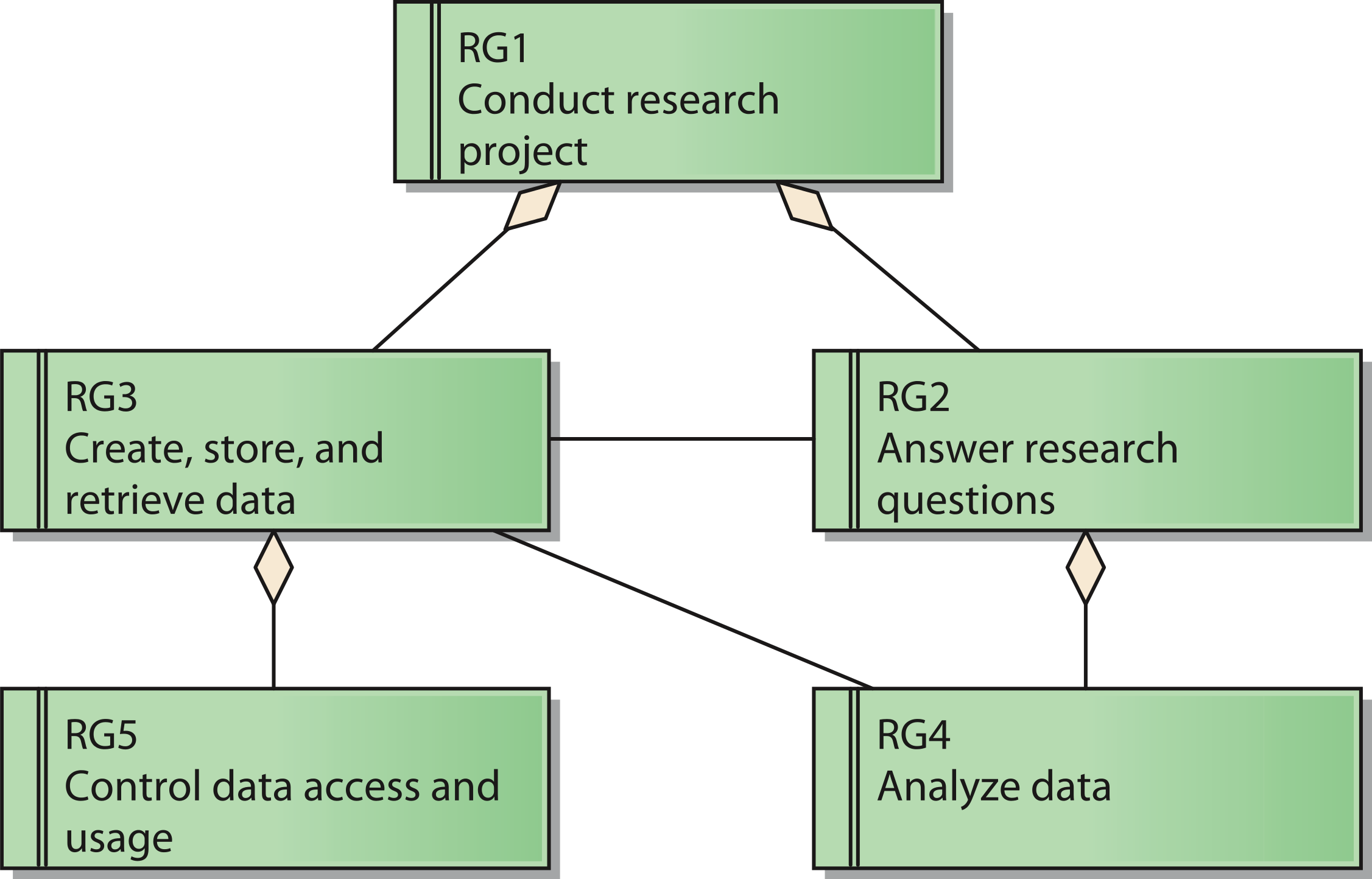

The reference model for requirements covers five reference goals (RG). An overview of the goals and their relations is shown in Fig. 2 by means of a UML requirements diagram. The reference goals are:

- Conduct research project (reference goal RG1): The ultimate goal of a research network is to fulfill the intended research tasks. This usually corresponds to the project specification of the funding organization.

- Answer research questions (reference goal RG2): Each research network has specific research questions it seeks to answer. These questions frame the core of the network and led to its establishment in the first place.

- Create, store, and retrieve data (reference goal RG3): Research networks need data to conduct the project. Thus, it is necessary to generate and handle them.

- Analyze data (reference goal RG4): To generate knowledge out of the data it is necessary to analyze them.

- Control data access and usage (reference goal RG5): Research networks need to protect their data. This includes the prevention of unauthorized access to protected data like patient data as well as aspects of intellectual property rights that need to be respected by authorized users as well.

These goals are ordered in a hierarchical structure: Goal RG1 acts as the root node, which has the two sub goals RG2 and RG3. Goal RG4 is subordinated to Goal RG2, whereas Goal RG5 is a sub goal of Goal RG3.
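The hierarchy described above can be written down as a parent map. This is a minimal sketch; the names `GOAL_PARENT` and `path_to_root` are illustrative and not part of the reference model itself.

```python
# Parent of each reference goal as stated in the text; RG1 is the root.
GOAL_PARENT = {
    "RG1": None,
    "RG2": "RG1",
    "RG3": "RG1",
    "RG4": "RG2",
    "RG5": "RG3",
}


def path_to_root(goal: str) -> list[str]:
    """Return the chain of goals from the given goal up to the root goal."""
    chain = [goal]
    while GOAL_PARENT[chain[-1]] is not None:
        chain.append(GOAL_PARENT[chain[-1]])
    return chain


print(path_to_root("RG4"))  # ['RG4', 'RG2', 'RG1']
print(path_to_root("RG5"))  # ['RG5', 'RG3', 'RG1']
```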

Figure 2: Reference model for goals of a research network;

Cite this object using this DOI: 10.7717/peerj.755/fig-2

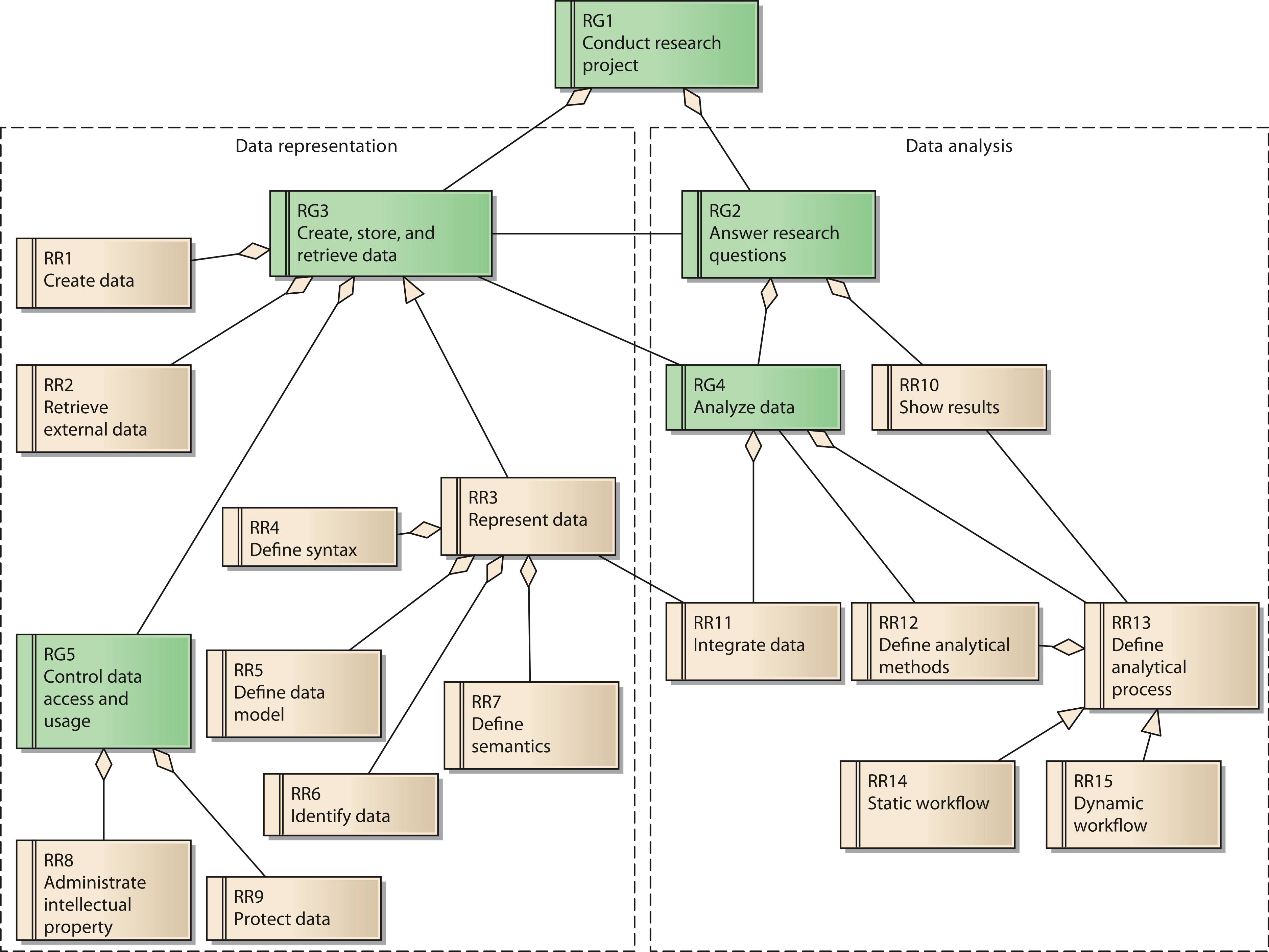

Each goal has several requirements. In total, the reference model contains 15 reference requirements (RR). A UML diagram with all reference goals and reference requirements is shown in Fig. 3. Reference requirements are associated with the reference goals as follows:

Figure 3: Reference model for goals and requirements of a research network;

Cite this object using this DOI: 10.7717/peerj.755/fig-3

Goal RG3 is associated with the reference requirements to create data (RR1) and to retrieve external data (RR2). These two requirements respect possible sources of data necessary for the research network. Reference requirement RR3, represent data, is further defined by its subordinate requirements define syntax (RR4), define data model (RR5), identify data (RR6), and define semantics (RR7).

Goal RG5, control data access and usage, has two aspects, which are represented by reference requirements RR8 and RR9. RR8 requires the creator’s contribution in the generation of data for the research network to be recognized when data are used by others. As a consequence, even users with legitimate access to the system have to adhere to usage regulations. These regulations should be checked and enforced by the system as far as possible. In contrast, RR9 covers the requirement to protect data from unauthorized access.

The second group of reference requirements covers data analysis. At the highest level we identify goal RG2, answer research questions. It is associated downstream with goal RG4, analyze data. Goal RG4 is composed of two reference requirements: RR11 integrate data and RR13 define analytical process. RR11 is associated with RR3, since the technical provision of data within the research network is of great relevance for the integration of data. RR13 has subordinate requirement RR12, define analytical methods, which covers the low-level data analysis methods. RR14 and RR15 cover two distinct instances of RR13: RR14 describes static workflows with all process steps being fixed. In this case, the order of analytical steps and data sources used cannot be changed by the users of the system. In contrast, RR15 considers dynamic workflows, allowing users to compose analytical steps and data sources as needed. Since the type of data involved in a dynamic workflow is not known upfront, this reference requirement is more demanding in terms of the semantic description of data sources. Precise annotation of data sources is necessary in order to perform automated transformations for matching different data fields.
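The difference between static (RR14) and dynamic (RR15) workflows, and the reason dynamic workflows depend on precise annotation, can be illustrated with a small sketch. The `Step` type, the semantic labels such as `raw_intensity`, and the two example steps are hypothetical; they only show how annotations allow a system to check a user-composed chain before running it.

```python
import math
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Step:
    name: str
    consumes: str  # semantic label of the expected input (hypothetical vocabulary)
    produces: str  # semantic label of the output
    run: Callable[[list[float]], list[float]]


def compose(steps: list[Step]) -> Callable[[list[float]], list[float]]:
    """Chain steps after checking that each output label matches the next input label."""
    for left, right in zip(steps, steps[1:]):
        if left.produces != right.consumes:
            raise ValueError(f"{left.name} -> {right.name}: annotations do not match")

    def workflow(data: list[float]) -> list[float]:
        for step in steps:
            data = step.run(data)
        return data

    return workflow


log2 = Step("log2", "raw_intensity", "log2_intensity",
            lambda xs: [math.log2(x) for x in xs])
mean = Step("mean", "log2_intensity", "summary",
            lambda xs: [sum(xs) / len(xs)])

# Static workflow (RR14): the chain is fixed by the system and cannot be changed by users.
STATIC_WORKFLOW = compose([log2, mean])
print(STATIC_WORKFLOW([2.0, 8.0]))  # [2.0]

# Dynamic workflow (RR15): users pick the chain; the annotations catch invalid orders.
try:
    compose([mean, log2])
except ValueError as err:
    print("rejected:", err)
```

In the static case the check runs once when the system is built; in the dynamic case it has to run for every chain a user assembles, which is why the annotations need to be complete.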

RR13 is further associated with RR10, show results. RR10 covers the requirement to present the results of the analysis adequately. Thus, it partially fulfills goal RG2, answer research questions.
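The associations described in the preceding paragraphs can be collected in a single lookup table to check that every reference requirement relates to a goal, directly or indirectly. This is a sketch under one stated assumption: the text does not name the parent of RR3 explicitly, so it is attached to RG3 here. The additional cross-link between RR11 and RR3 is left out to keep the structure a tree.

```python
# Element each reference requirement is attached to, following the text.
ATTACHED_TO = {
    "RR1": "RG3", "RR2": "RG3",
    "RR3": "RG3",  # assumption: parent of RR3 is not stated explicitly in the text
    "RR4": "RR3", "RR5": "RR3", "RR6": "RR3", "RR7": "RR3",
    "RR8": "RG5", "RR9": "RG5",
    "RR10": "RR13",
    "RR11": "RG4", "RR13": "RG4",
    "RR12": "RR13", "RR14": "RR13", "RR15": "RR13",
}


def owning_goal(requirement: str) -> str:
    """Follow the attachments upwards until a reference goal is reached."""
    node = requirement
    while node.startswith("RR"):
        node = ATTACHED_TO[node]
    return node


print(len(ATTACHED_TO))     # 15
print(owning_goal("RR7"))   # RG3
print(owning_goal("RR15"))  # RG4
```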

The reference model for requirements is the basis for a network specific requirements model. We present an example for creating such a model and all the following steps in the next section. All goals and requirements from the reference model are mapped to network specific instances. In this process, elements of the reference model are checked for their applicability to the specific research network. Further special requirements of the network are considered at this point as well.

The network specific requirements model is then mapped to system properties. These are qualities contributed to the system by different components. At first, we consider abstract components instead of specific products. For example, in a research network, reference requirement RR1 create data might be mapped to a system property automated creation of data services. This property is then mapped to the specific component responsible for the implementation of this property.

In a second step, the abstract components are mapped to specific components in accordance with the research network’s requirements. Specific components can be preexisting modules with a product character, software development frameworks providing specific functionality, or newly developed components.
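The two mapping steps can be sketched as a chain of records. In the example below, the first row uses the property named in the text and the caCORE SDK component of pelican; the abstract component names, the second row's property, and its pairing with RR12 are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mapping:
    requirement: str         # requirement identifier
    system_property: str     # quality contributed to the system
    abstract_component: str  # product-independent component (hypothetical names)
    specific_component: str  # concrete product, framework, or new module


MAPPINGS = [
    # Property taken from the text; abstract component name is an assumption.
    Mapping("RR1", "automated creation of data services",
            "data service generator", "caCORE SDK"),
    # Entire row is an illustrative assumption, not taken from Table 2.
    Mapping("RR12", "statistical computing",
            "analytical service", "R via Rserve"),
]


def components_for(requirement: str) -> list[str]:
    """Return the specific components that implement a given requirement."""
    return [m.specific_component for m in MAPPINGS if m.requirement == requirement]


print(components_for("RR1"))  # ['caCORE SDK']
```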

In a final modelling step a distribution model of the components is created. All components need to be mapped to system resources down to the hardware level. Among others, the following aspects have to be considered in this step:

- Security: Components with high security requirements should be isolated against other, less sensitive components and thus be run on a separate system node.

- Performance: All components must be distributed in a way that availability of sufficient system resources is ensured.

- Maintainability: To ensure that the possibly complex distributed system can be managed efficiently, components should be grouped together in a sensible way.
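A very reduced sketch of such a distribution step is shown below. It only covers the security and maintainability aspects (sensitive components get their own node, all others are grouped); performance is not modelled. The function name, node names, and example components are hypothetical.

```python
def distribute(components: dict[str, bool]) -> dict[str, list[str]]:
    """Assign components to nodes.

    `components` maps a component name to a flag that marks high security requirements.
    Sensitive components are isolated on their own node; the rest share one node.
    """
    nodes: dict[str, list[str]] = {"shared-node": []}
    for name, sensitive in sorted(components.items()):
        if sensitive:
            nodes[f"isolated-{name}"] = [name]
        else:
            nodes["shared-node"].append(name)
    return nodes


plan = distribute({"portal": False, "directory": True, "vocabulary": False})
print(plan)
```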

Sample application: pelican

We now describe a sample application of our reference model within the research network SFB/TRR77 on liver cancer. Further we describe two specifications for metadata we developed for the research network.

Specific model for requirements

In this section we summarize key requirements specific to our research network. The complete list of requirements is shown in Table S2.

The first goal of the research network, an instance of reference goal RG1, is defined by its research assignment of gaining a deeper understanding of the molecular basis of liver cancer development. This spans research from chronic liver disease to the progression of metastatic cancer. Further, the research network aims to identify novel preventive, diagnostic and therapeutic approaches to liver cancer. Subordinated to goal G1 is G3, the instance of reference goal RG3 regarding the data necessary for the network. Since molecular processes play a major role within the research network, genomic microarray data are of central importance. They are complemented by imaging data like tissue microarray (TMA) data and clinical data.

Goal G2, answering research questions, is characterized by the following two questions:

- Which generic or specific mechanisms of chronic liver diseases, especially of chronic virus infections and inflammation mediated processes, predispose to or initiate liver cancer?

- Which molecular key events promoting or keeping up liver cancer could act as tumor markers or are promising targets for future therapeutic interventions?

Goal G5 requires making the data available for cross project analysis within the network while protecting them against unauthorized access at the same time. Especially important to the members of the project is the requirement R8, subordinated to goal G5: The projects contributing data to the network require keeping control over the data in order to ensure proper crediting of their intellectual property. Thus, they require fine-grained rules for data access control. Depending on the type of data, they should be available only to specific members of the network, to all members of the project or to the general public.
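The three levels of availability named above can be sketched as a simple access check. The enumeration names, the function `may_read`, and the user names are hypothetical; the sketch is not the access control mechanism implemented in pelican.

```python
from enum import Enum
from typing import Optional


class Access(Enum):
    SPECIFIC_MEMBERS = 1  # only named members may read
    ALL_MEMBERS = 2       # every member may read
    PUBLIC = 3            # anyone, including anonymous users, may read


def may_read(level: Access, user: Optional[str], members: set[str],
             allowed: frozenset[str] = frozenset()) -> bool:
    """Decide whether `user` (None for anonymous) may read data with the given level."""
    if level is Access.PUBLIC:
        return True
    if level is Access.ALL_MEMBERS:
        return user in members
    return user in members and user in allowed


members = {"alice", "bob"}
print(may_read(Access.PUBLIC, None, members))                                  # True
print(may_read(Access.ALL_MEMBERS, "carol", members))                          # False
print(may_read(Access.SPECIFIC_MEMBERS, "bob", members, frozenset({"alice"})))  # False
```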

System architecture

To acknowledge the projects’ requirement R8, to keep ownership of their data, a federation was used as the underlying concept of the system architecture. Technically, pelican implements a SOA. All data sources of the projects are transformed into data services and made available to the research network. The data services stay under the control of the contributing project. This can even go as far as running the service on computer hardware on the projects’ premises. Data services are complemented by analytical services. All services are described by standardized metadata to help find appropriate services and allow for automated access to the services’ interfaces. Using a web-based user interface, researchers can chain data services and analytical services to answer specific research questions.
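The idea of a federation of metadata-described services that can be chained is illustrated by the following sketch. It runs entirely in memory; the service names, owners, keywords, and returned values are made-up placeholders, and the real pelican services are web services rather than Python callables.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Service:
    name: str
    kind: str                  # "data" or "analysis"
    owner: str                 # contributing project that keeps control of the service
    keywords: frozenset[str]   # stand-in for the standardized metadata
    call: Callable[[Any], Any]


REGISTRY = [
    Service("expression-data", "data", "project-A", frozenset({"gene", "microarray"}),
            lambda symbol: {"symbol": symbol, "values": [1.0, 2.0, 3.0]}),
    Service("mean-expression", "analysis", "core", frozenset({"statistics"}),
            lambda record: sum(record["values"]) / len(record["values"])),
]


def find(kind: str, keyword: str) -> list[Service]:
    """Look up services by kind and metadata keyword."""
    return [s for s in REGISTRY if s.kind == kind and keyword in s.keywords]


def chain(data_service: Service, analysis_service: Service, query: Any) -> Any:
    """Feed the result of a data service into an analytical service."""
    return analysis_service.call(data_service.call(query))


result = chain(find("data", "gene")[0], find("analysis", "statistics")[0], "BRCA1")
print(result)  # 2.0
```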

Component model

The requirements are mapped to system properties first. In the next step, components are identified to provide these properties as modules of the new system. In Table 2 we show the complete chain of mappings from requirements via system features to components. Each component is realized either by a readily available product or by a newly developed module. In Table 3 we give an overview of our components.


Table 2. Mapping of requirements to corresponding system features and components;

Cite this object using this DOI: 10.7717/peerj.755/table-2


Table 3. Specification of concrete implementation components for the elements of the component model;

Cite this object using this DOI: 10.7717/peerj.755/table-3

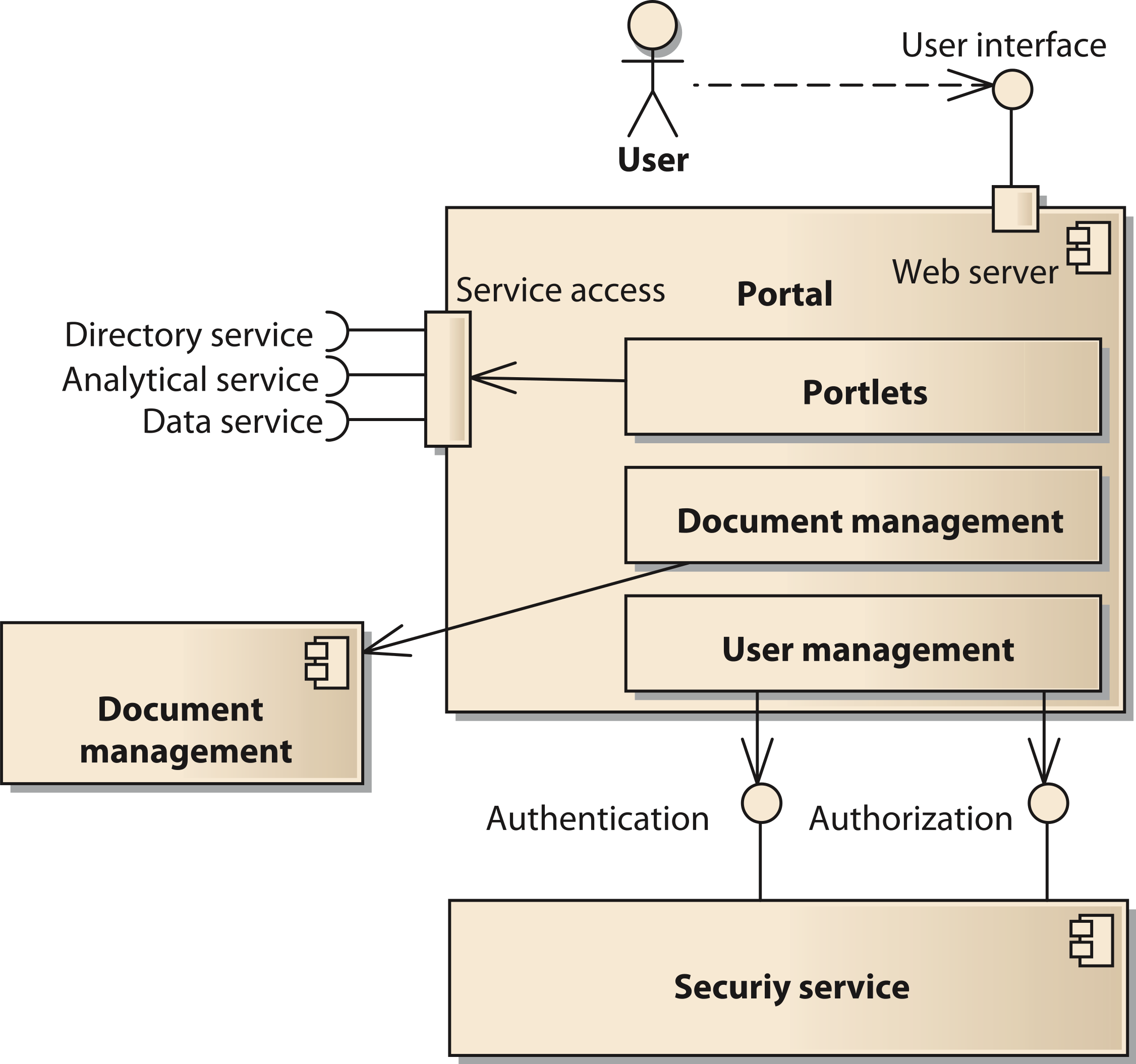

The portal component provides the user interface to the system. It is implemented using the open source software Liferay (http://www.liferay.com, accessed: 2014-07-03) (Sezov, Jr, 2011[17]). Liferay provides a number of functions affecting several components of our model. Thus, we provide a decomposition of the portal components in Fig. 4. One important subcomponent of the portal is the document management system. It is realized by the Alfresco component (http://www.alfresco.com, accessed: 2014-07-03) (Berman, Barnett & Mooney, 2012[18]). The user interface of Alfresco can be integrated into the Liferay portal or be accessed with a separate uniform resource locator (URL). The portal provides user management functionality to control access to portal pages and components like portlets (Java Community Process, 2008[19]). However, the user account information including username, passwords, and others is stored in a separate component using the Lightweight Directory Access Protocol (LDAP). Thus, it is possible for all components of the SOA-network to commonly access the users’ identity information.

Figure 4: Structure of the component portal;

Cite this object using this DOI: 10.7717/peerj.755/fig-4

Data services are generated by using caCORE SDK (Wiley & Gagne, 2012[20]). With caCORE SDK it is not necessary to program the software for the service in a traditional way. Instead, a UML data model in Extensible Markup Language Metadata Interchange (XMI) notation has to be prepared (Object Management Group, 2002[15]; Bray et al., 2006[21]). From this model, caCORE SDK generates several artefacts resulting in deployment packages for Java application servers like Apache Tomcat (The Apache Software Foundation, 2014[22]). To simplify this process for spreadsheet based microarray data, we developed a software tool to generate the XMI file as well. As a result, a service conforming to the web services specification ready for deployment is generated. For the provision of network specific metadata we chose TemaTres to serve our controlled vocabulary in standard formats like SKOS or Dublin Core (Weibel, 1997[23]; Miles & Bechhofer, 2009[24]; Gonzales-Aguilar, Ramírez-Posada & Ferreyra, 2012[25]). Our analytical services are backed by the open source language and environment for statistical computing called R (R Core Team, 2014[26]). R is integrated into the services using the Rserve component (Urbanek, 2003[27]).
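The principle of deriving a data model from a spreadsheet header can be sketched as follows. This is not the tool described above and the output is not valid XMI: the element names `Model`, `Class`, and `Attribute`, the fixed `String` type, and the sample columns are simplifications chosen for illustration, and a file for caCORE SDK would have to follow the XMI specification.

```python
import csv
import io
import xml.etree.ElementTree as ET


def header_to_model_xml(csv_text: str, class_name: str) -> str:
    """Turn the header row of a CSV export into a simplified, XMI-like class description."""
    header = next(csv.reader(io.StringIO(csv_text)))
    model = ET.Element("Model")
    cls = ET.SubElement(model, "Class", name=class_name)
    for column in header:
        # Assumption: every column becomes a string attribute; real tools would infer types.
        ET.SubElement(cls, "Attribute", name=column.strip(), type="String")
    return ET.tostring(model, encoding="unicode")


sample = "gene_symbol,sample_1,sample_2\nBRCA1,0.5,0.7\n"
model_xml = header_to_model_xml(sample, "ExpressionRow")
print(model_xml)
```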

Deployment model

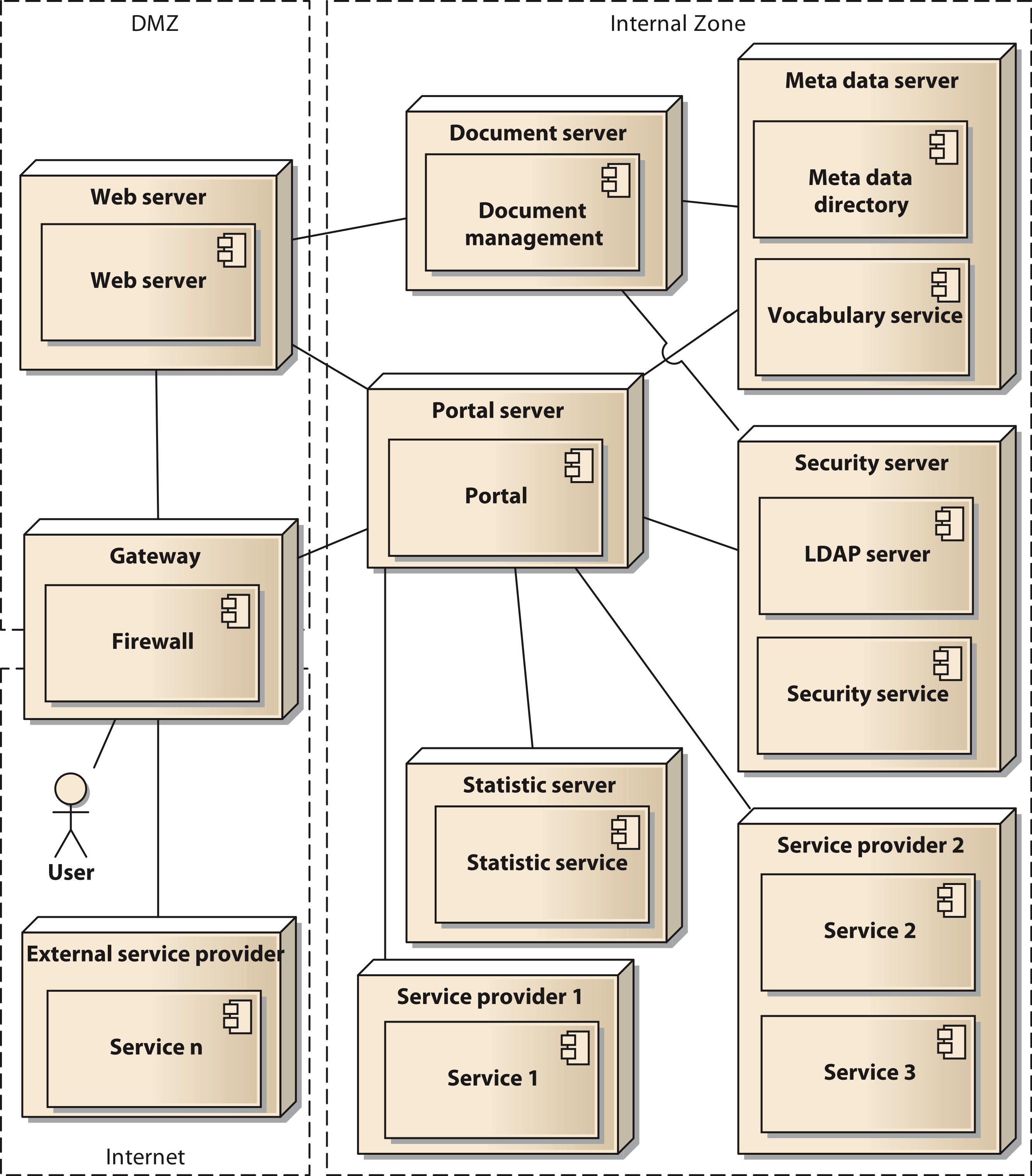

In a final modelling step the components are distributed to the physical resources available for the system. In our case we used two servers with a common virtualization layer based on VMware vSphere. Thus, all nodes in our deployment model represent virtual machines (VM). Using virtual switches, routers, and firewall appliances we were able to implement our Internet Protocol (IP) network infrastructure. To enhance security, we implemented a network zoning model comprising an internet zone, a demilitarized zone (DMZ) and an internal zone. Figure 5 shows the deployment model in UML notation. The services shown in the model (service 1 to service n) are to be considered as examples, since the concrete number of services is permanently changing. The deployment model also reflects the different levels of control that can be exercised by the owners of the data. They range from shared nodes on the common servers over a dedicated VM to deployment on external hardware controlled by the respective projects.

Figure 5: Deployment diagram of the components of the architecture in UML notation;

Cite this object using this DOI: 10.7717/peerj.755/fig-5

Discussion

In this manuscript, we describe a reference model for the requirements of research networks towards an IT platform. For many funding programs, including research grants of the European Commission, the collaboration of several research organizations at different sites is mandatory. This leads to a structural similarity to our research network on liver cancer. Even though other research networks will have different research aims, there are still requirements that are common to most networks. Since the reference model already covers a basic set of requirements, it allows future research networks to focus on defining specific requirements distinguishing them from other networks.

Users of the reference model are responsible for assessing the reference model’s applicability to their project-specific needs. The reference model is based on data of a real research network that were generalized. To avoid bias in the model that might hinder transferability, we incorporated different views in the process of constructing the model. However, the transferability of the model to another context is, as for any model, limited. As a consequence, future research networks will have to derive a project specific instance of the reference model to reflect the corresponding characteristics of the project. The reference model is a tool intended to help its users to create a concrete model covering the requirements of a research network with a high degree of completeness. The reference model provides guidance for this task. We expect that it will help in reducing the effort to acquire all requirements.

A common technique used in requirements engineering for software is use case modelling (Jacobson, 1992[28]; Bittner & Spence, 2002[29]). A use case is a compact scenario describing certain aspects of how a technical system behaves and how it interacts with other actors like its users. A use case model is a way of capturing requirements in an interactive way. Thus, a use case model can be developed further into a requirements model. In our context we found it hard to apply the use case approach since the researchers in our network mostly have biomedical backgrounds and thus are not familiar with software development. Nevertheless, use case modelling might be a helpful tool for other research networks when applying our reference model.

We applied the reference model successfully to a research network on liver cancer. Some specific requirements in this network led to the decision to set up a federated system allowing for a maximum of control of the individual projects over their respective data. The system was implemented as a service-oriented architecture using, among others, components of the caBIG project. A public version of our system with limited functionality is available at https://livercancer.imbi.uni-heidelberg.de/data. There, a gene symbol like BRCA1 can be entered into the search field and services are invoked for data retrieval as discussed in this paper. Other projects can benefit from this architecture as well, but the architecture is tailored to research networks with the requirements of federating data as data services. With this architecture, we try to acknowledge the data protection requirements of the participating projects. Still, further research regarding the use of data and crediting creatorship of data is necessary. First steps were made as part of this project (He et al., 2013[30]).

In case the requirements regarding data control are more relaxed, an alternative would be to keep the data in a central data warehouse instead of the federation. In that case, i2b2 might be a suitable component to provide the data warehouse component (Murphy et al., 2007[31]). Such a centralized approach also affects how and when data are harmonized: In a central research data warehouse data are harmonized at the time of loading the database, which ideally leads to a completely and consistently harmonized database. In a service-oriented approach data are provided by means of data services as they are. All services are described by corresponding metadata, enabling automated transformation of the data at the time of access.
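The difference in when harmonization happens can be made concrete with a small sketch. The field mappings, source names, and records are invented for illustration; neither i2b2 nor the pelican services are used here.

```python
# Hypothetical metadata: maps each source's local field names to shared names.
FIELD_MAP = {
    "project_a": {"GeneSymbol": "gene_symbol", "expr": "expression"},
    "project_b": {"symbol": "gene_symbol", "value": "expression"},
}

RAW = [
    ("project_a", {"GeneSymbol": "BRCA1", "expr": 1.5}),
    ("project_b", {"symbol": "TP53", "value": 0.3}),
]


def harmonize(source: str, record: dict) -> dict:
    """Rename the fields of one record according to the metadata of its source."""
    mapping = FIELD_MAP[source]
    return {mapping[key]: value for key, value in record.items()}


def load_warehouse(raw):
    """Central warehouse: harmonize every record once, at load time, and store the result."""
    return [harmonize(source, record) for source, record in raw]


def query_federated(raw, gene: str):
    """Federation: sources stay as they are; records are harmonized when accessed."""
    for source, record in raw:
        harmonized = harmonize(source, record)
        if harmonized["gene_symbol"] == gene:
            yield harmonized


warehouse = load_warehouse(RAW)
print([row for row in warehouse if row["gene_symbol"] == "BRCA1"])
print(list(query_federated(RAW, "BRCA1")))
```

Both paths return the same harmonized record; they differ in whether the transformation cost is paid once at load time or on every access.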

Our sample research network concentrates more on basic research than clinical application. In the future, we plan to apply our reference model to further projects with a stronger translational component. By doing so, we will be able to reevaluate the framework in a more clinical context.

Supplemental information


Additional information and declarations

Competing interests

The authors declare there are no competing interests.

Author contributions

Matthias Ganzinger conceived and designed the experiments, performed the experiments, analyzed the data, wrote the paper, prepared figures and/or tables, reviewed drafts of the paper.

Petra Knaup wrote the paper, reviewed drafts of the paper.

Human ethics

The following information was supplied relating to ethical approvals (i.e., approving body and any reference numbers):

For the research described in this paper, ethics approval was not deemed necessary. This work involved no human subjects in the sense of medical research, as e.g., covered by the Declaration of Helsinki (World Medical Association, 2013[16]). At no time were patients included for survey or interview. Data were only acquired from scientists regarding their work and data, but no personal or patient related data were gathered. Participants were not required to participate in this study; they consented by returning the questionnaire. No research was conducted outside Germany, the authors’ country of residence. However, in other countries the approval of an institutional review board or other authority might be necessary to apply the reference model.

Funding

This work was funded by the SFB/TRR 77 “Liver Cancer. From Molecular Pathogenesis to Targeted Therapies” of the Deutsche Forschungsgemeinschaft (DFG, http://www.dfg.de). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- ↑ Frey, L.J.; Maojo, V.; Mitchell, J.A. (2007). "Bioinformatics linkage of heterogeneous clinical and genomic information in support of personalized medicine". IMIA Yearbook of Medical Informatics 2007: 98-105. ISSN 0943-4747. PMID 17700912.

- ↑ Baker, M. (2010). "Next-generation sequencing: adjusting to data overload". Nature Methods 7 (7): 495-499. doi:10.1038/nmeth0710-495.

- ↑ Welsh, E.; Jirotka, M.; Gavaghan, D. (2006). "Post-genomic science: cross-disciplinary and large-scale collaborative research and its organizational and technological challenges for the scientific research process". Philosophical Transactions of the Royal Society A 364 (1843): 1533-1549. doi:10.1098/rsta.2006.1785. PMID 16766359.

- ↑ Mathew, J.P.; Taylor, B.S.; Bader, G.D.; Pyarajan, S.; Antoniotti, M.; Chinnaiyan, A.M.; Sander, C.; Burakoff, S.J.; Mishra, B. (2007). "From bytes to bedside: data integration and computational biology for translational cancer research". PLOS Computational Biology 3 (2): e12. doi:10.1371/journal.pcbi.0030012. PMC PMC1808026. PMID 17319736. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1808026.

- ↑ Cimino, J.J. (1998). "Desiderata for controlled medical vocabularies in the twenty-first century". Methods of Information in Medicine 37 (4–5): 394-403. PMC PMC3415631. PMID 9865037. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3415631.

- ↑ Woll, K.; Manns, M.; Schirmacher, P. (2013). "Sonderforschungsbereich SFB/TRR77: Leberkrebs: Von der molekularen Pathogenese zur zielgerichteten Therapie". Der Pathologe 34 (2): 232-234. doi:10.1007/s00292-013-1820-z.

- ↑ Taylor, K.L.; O’Keefe, C.M.; Colton, J.; Baxter, R.; Sparks, R.; Srinivasan, U.; Cameron, M.A.; Lefort, L. (2004). "A service oriented architecture for a health research data network". Proceedings of the 16th International Conference on Scientific and Statistical Database Management 2004: 443-444. doi:10.1109/SSDM.2004.1311251.

- ↑ Papazoglou, M.P.; Traverso, P.; Dustdar, S.; Leymann, F. (2008). "Service-oriented computing: A research roadmap". International Journal of Cooperative Information Systems 17: 223. doi:10.1142/S0218843008001816.

- ↑ Wei, Y.; Blake, M.B. (2010). "Service-oriented computing and cloud computing: Challenges and opportunities". IEEE Internet Computing 14 (6): 72–75. doi:10.1109/MIC.2010.147.

- ↑ Bosin, A.; Dessì, N.; Pes, B. (2011). "Extending the SOA paradigm to e-Science environments". Future Generation Computer Systems 27 (1): 20–31. doi:10.1016/j.future.2010.07.003.

- ↑ Komatsoulis, G.A.; Warzel, D.B.; Hartel, F.W.; Shanbhag, K.; Chilukuri, R.; Fragoso, G.; de Coronado, S.; Reeves, D.M.; Hadfield, J.B.; Ludet, C.; Covitz, P.A. (2008). "caCORE version 3: Implementation of a model driven, service-oriented architecture for semantic interoperability". Journal of Biomedical Informatics 41 (1): 106–123. doi:10.1016/j.jbi.2007.03.009. PMC PMC2254758. PMID 17512259. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2254758.

- ↑ Kunz, I.; Lin, M.; Frey, L. (2009). "Metadata mapping and reuse in caBIG". BMC Bioinformatics 10 (Suppl 2): S4. doi:10.1186/1471-2105-10-S2-S4. PMC PMC2646244. PMID 19208192. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2646244.

- ↑ Ganzinger, M.; Noack, T.; Diederichs, S.; Longerich, T.; Knaup, P. (2011). "Service oriented data integration for a biomedical research network". Studies in Health Technology and Informatics 169: 867–71. doi:10.3233/978-1-60750-806-9-867. PMID 21893870.

- ↑ ISO/IEC/IEEE (2011). ISO/IEC/IEEE 24765: Systems and software engineering — Vocabulary. Geneva, Switzerland: ISO.

- ↑ "OMG XML Metadata Interchange (XMI) Specification (Version 1.2)" (PDF). Object Management Group, Inc. January 2002. http://www.omg.org/cgi-bin/doc?formal/02-01-01.pdf. Retrieved 09 December 2014.

- ↑ World Medical Association (2013). "World Medical Association Declaration of Helsinki: Ethical Principles for Medical Research Involving Human Subjects". JAMA 310 (20): 2191–2194. doi:10.1001/jama.2013.281053. PMID 12432198.

- ↑ Sezov, Rich (2011). Liferay in Action: The Official Guide to Liferay Portal Development. Shelter Island, NY: Manning Publications. ISBN 9781935182825.

- ↑ Berman, A.E.; Barnett, W.K.; Mooney, S.D. (2012). "Collaborative software for traditional and translational research". Human Genomics 6: 21. doi:10.1186/1479-7364-6-21. PMC PMC3500217. PMID 23157911. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3500217.

- ↑ "JSR 286: Portlet Specification 2.0". Oracle Corporation. 2008. https://www.jcp.org/en/jsr/detail?id=286. Retrieved 09 December 2014.

- ↑ Wiley, A.; Gagne, B. (18 April 2012). "caCORE SDK Version 4.3 Object Relational Mapping Guide". National Cancer Institute. https://wiki.nci.nih.gov/display/caCORE/caCORE+SDK+Version+4.3+Object+Relational+Mapping+Guide. Retrieved 09 December 2014.

- ↑ Bray, T.; Paoli, J.; Sperberg-McQueen, C.M.; Maler, E.; Yergeau, F.; Cowan, J. (29 September 2006). "Extensible Markup Language (XML) 1.1 (Second Edition)". World Wide Web Consortium. http://www.w3.org/TR/xml11/. Retrieved 09 December 2014.

- ↑ "Apache Tomcat". The Apache Software Foundation. 2014. http://tomcat.apache.org/. Retrieved 02 December 2014.

- ↑ Weibel, S. (October/November 1997). "The Dublin Core: A Simple Content Description Model for Electronic Resources". Bulletin of the American Society for Information Science and Technology 24 (1): 9–11. doi:10.1002/bult.70.

- ↑ Miles, A.; Bechhofer, S. (18 August 2009). "SKOS Simple Knowledge Organization System Reference". World Wide Web Consortium. http://www.w3.org/TR/skos-reference/. Retrieved 09 December 2014.

- ↑ Gonzales-Aguilar, A.; Ramírez-Posada, M.; Ferreyra, D. (2012). "Tematres: software para gestionar tesauros". El Profesional de la Información 21 (3): 319–325. doi:10.3145/epi.2012.may.14.

- ↑ R Development Core Team (2015). "R: A language and environment for statistical computing". The R Foundation for Statistical Computing. https://www.r-project.org/. Retrieved 09 December 2014.

- ↑ Urbanek, S. (2003). "Rserve: A Fast Way to Provide R Functionality to Applications". In Hornik, K.; Leisch, F.; Zeileis, A. Proceedings of the 3rd international workshop on distributed statistical computing (DSC 2003).

- ↑ Jacobson, I. (1992). Object Oriented Software Engineering: A Use Case Driven Approach. Wokingham, England: Addison-Wesley Professional.

- ↑ Bittner, K.; Spence, I. (2002). Use Case Modeling. Boston, MA: Addison-Wesley Professional.

- ↑ He, S.; Ganzinger, M.; Hurdle, J.F.; Knaup, P. (2013). "Proposal for a data publication and citation framework when sharing biomedical research resources". Studies in Health Technology and Informatics 192: 1201. doi:10.3233/978-1-61499-289-9-1201. PMID 23920975.

- ↑ Murphy, S.N.; Mendis, M.; Hackett, K.; Kuttan, R.; Pan, W.; Phillips, L.C.; Gainer, V.; Berkowicz, D.; Glaser, J.P.; Kohane, I.; Chueh, H.C. (2007). "Architecture of the open-source clinical research chart from Informatics for Integrating Biology and the Bedside". AMIA Annual Symposium Proceedings 2007: 548–552. PMC PMC2655844. PMID 18693896. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2655844.

Notes

This presentation is faithful to the original, with only a few minor changes to formatting. References were listed alphabetically in the original but are listed in order of appearance on this wiki. Most of the article's references lacked DOIs and PubMed IDs; these have been added to make the references more useful. Where important information was missing from a reference, it has been added.