Journal:A view of programming scalable data analysis: From clouds to exascale

| Full article title | A view of programming scalable data analysis: From clouds to exascale |
|---|---|
| Journal | Journal of Cloud Computing |
| Author(s) | Talia, Domenico |
| Author affiliation(s) | DIMES at Università della Calabria |
| Primary contact | Email: talia at dimes dot unical dot it |
| Year published | 2019 |
| Volume and issue | 8 |
| Page(s) | 4 |
| DOI | 10.1186/s13677-019-0127-x |
| ISSN | 2192-113X |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://link.springer.com/article/10.1186/s13677-019-0127-x |
| Download | https://link.springer.com/content/pdf/10.1186%2Fs13677-019-0127-x.pdf (PDF) |


Abstract

Scalability is a key feature for big data analysis and machine learning frameworks and for applications that need to analyze very large and real-time data available from data repositories, social media, sensor networks, smartphones, and the internet. Scalable big data analysis today can be achieved by parallel implementations that are able to exploit the computing and storage facilities of high-performance computing (HPC) systems and cloud computing systems, whereas in the near future exascale systems will be used to implement extreme-scale data analysis. This article discusses how cloud computing currently supports the development of scalable data mining solutions and what main challenges must be addressed and solved to implement innovative data analysis applications on exascale systems.

Keywords: big data analysis, cloud computing, exascale computing, data mining, parallel programming, scalability

Introduction

Solving problems in science and engineering was the first motivation for inventing computers. Much later, computer science is still the main area in which innovative solutions and technologies are being developed and applied. Partly due to the extraordinary advancement of computer technology, data are now generated as never before. In fact, the amount of structured and unstructured digital data is increasing beyond any estimate. Databases, file systems, data streams, social media, and data repositories are increasingly pervasive and decentralized.

As the data scale increases, we must address new challenges and attack ever-larger problems. New discoveries will be achieved and more accurate investigations can be carried out due to the increasingly widespread availability of large amounts of data. Scientific sectors that fail to make full use of the volume of digital data available today risk losing out on the significant opportunities that big data can offer.

To benefit from big data availability, specialists and researchers need advanced data analysis tools and applications running on scalable architectures allowing for the extraction of useful knowledge from such huge data sources. High-performance computing (HPC) systems and cloud computing systems today are capable platforms for addressing both the computational and data storage needs of big data mining and parallel knowledge discovery applications. These computing architectures are needed to run data analysis because complex data mining tasks involve data- and compute-intensive algorithms that require large, reliable, and effective storage facilities together with high-performance processors to obtain results in a timely fashion.

Now that data sources have become pervasive and huge, reliable and effective programming tools and applications for data analysis are needed to extract value and find useful insights in them. New ways to correctly and proficiently compose different distributed models and paradigms are required, and the interaction between hardware resources and programming levels must be addressed. Users, professionals, and scientists working in the area of big data need advanced data analysis programming models and tools coupled with scalable architectures to support the extraction of useful information from such massive repositories. The scalability of a parallel computing system is a measure of its capacity to reduce program execution time in proportion to the number of its processing elements. (The appendix of this article introduces and discusses in detail scalability in parallel systems.) According to this definition, scalable data analysis refers to the ability of a hardware/software parallel system to exploit increasing computing resources effectively in the analysis of (very) large datasets.
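As a rough illustration of this definition, scalability is commonly quantified with speedup S(p) = T(1)/T(p) and parallel efficiency E(p) = S(p)/p, where T(p) is the execution time on p processing elements; a perfectly scalable system keeps E(p) close to 1. The minimal Python sketch below assumes these textbook formulas (the appendix may use different notation) and uses invented timings.

```python
def speedup(t_serial: float, t_parallel: float) -> float:
    """S(p) = T(1) / T(p): how many times faster the parallel run is."""
    return t_serial / t_parallel


def efficiency(t_serial: float, t_parallel: float, p: int) -> float:
    """E(p) = S(p) / p: 1.0 means time shrinks exactly in proportion to p."""
    return speedup(t_serial, t_parallel) / p


# Hypothetical execution times (seconds) of one analysis job on p processing elements.
timings = {1: 1200.0, 4: 320.0, 16: 100.0}
for p, t in timings.items():
    print(f"p={p:2d}  speedup={speedup(timings[1], t):5.2f}  "
          f"efficiency={efficiency(timings[1], t, p):.2f}")
```

With these made-up numbers efficiency falls from about 0.94 at p = 4 to 0.75 at p = 16, i.e., the job scales sublinearly.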

Today, complex analysis of real-world massive data sources requires using high-performance computing systems such as massively parallel machines or clouds. However, in the coming years, as parallel technologies advance, exascale computing systems will be exploited for implementing scalable big data analysis in all areas of science and engineering.[1] To reach this goal, new design and programming challenges must be addressed and solved. The focus of this paper is therefore on discussing current cloud-based design and programming solutions for data analysis and on suggesting new programming requirements and approaches for meeting big data analysis challenges on future exascale platforms.

Current cloud computing platforms and parallel computing systems represent two different technological solutions for addressing the computational and data storage needs of big data mining and parallel knowledge discovery applications. Indeed, parallel machines offer high-end processors with the main goal of supporting HPC applications, whereas cloud systems implement a computing model in which dynamically scalable virtualized resources are provided to users and developers as a service over the internet. In fact, clouds do not mainly target HPC applications; they are scalable computing and storage delivery platforms that can be adapted to the needs of different classes of people and organizations by exploiting a service-oriented architecture (SOA) approach. Clouds offer large facilities to many users who were unable to own parallel/distributed computing systems to run applications and services. In particular, big data analysis applications that access and manipulate very large datasets with complex mining algorithms will significantly benefit from the use of cloud platforms.

Although not many cloud-based data analysis frameworks are available today for end users, within a few years they will become common.[2] Some current solutions are based on open-source systems, such as Apache Hadoop and Mahout, Spark, and SciDB, while others are proprietary solutions provided by companies such as Google, Microsoft, EMC, Amazon, BigML, Splunk Hunk, and InsightsOne. As more such platforms emerge, researchers and professionals will port increasingly powerful data mining programming tools and frameworks to the cloud to exploit complex and flexible software models such as the distributed workflow paradigm. The growing utilization of the service-oriented computing model could accelerate this trend.

From the definition of the term "big data," which refers to datasets so large and complex that traditional hardware and software data processing solutions are inadequate to manage and analyze them, we can infer that conventional computer systems are not powerful enough to process and mine big data[3] and are not able to scale with the size of the problems to be solved. As mentioned before, to overcome the limits of sequential machines, advanced systems such as HPC and cloud computing platforms, and even more scalable architectures, are used today to analyze big data. Starting from this scenario, exascale computing systems will represent the next computing step.[4][5] Exascale systems are high-performance computing systems capable of at least one exaFLOPS, so their implementation represents a significant research and technology challenge. Their design and development are currently under investigation, with the goal of building by 2020 high-performance computers composed of a very large number of multi-core processors and expected to deliver a performance of 10^18 operations per second. Cloud computing systems used today are able to store very large amounts of data; however, they do not provide the high performance expected from massively parallel exascale systems. This is the main motivation for developing exascale systems. Exascale technology will represent the most advanced model of supercomputers. Exascale systems have been conceived for single-site supercomputing centers, not for distributed infrastructures; such infrastructures could use multi-clouds or fog computing systems for decentralized computing and pervasive data management, and could later be interconnected with exascale systems used as a backbone for very large scale data analysis.
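To make the 10^18 figure concrete, the sketch below estimates how many cores are needed to reach one exaFLOPS at a given per-core peak rate, and how long an ideal exascale run of a fixed job would take. The 10 GFLOPS per core and the 10^21-operation job are hypothetical values chosen only for illustration, not figures from this article, and peak rates ignore real-world efficiency losses.

```python
EXAFLOPS = 10**18  # one exaFLOPS: 10^18 floating-point operations per second


def cores_needed(target_flops: int, flops_per_core: int) -> int:
    """Smallest number of cores whose combined peak rate reaches target_flops."""
    return -(-target_flops // flops_per_core)  # integer ceiling division


def seconds_to_run(total_ops: int, flops: int) -> float:
    """Ideal runtime of a job at a sustained rate of `flops` operations per second."""
    return total_ops / flops


per_core = 10 * 10**9  # hypothetical 10 GFLOPS per core, for illustration only
print(cores_needed(EXAFLOPS, per_core))   # 100000000 cores
print(seconds_to_run(10**21, EXAFLOPS))  # 1000.0 seconds for 10^21 operations
```

Even under these optimistic assumptions the machine needs on the order of a hundred million cores, which is why parallelism management dominates the challenges discussed next.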

The development of exascale systems spurs a need to address and solve issues and challenges at both the hardware and the software level. Indeed, it requires the design and implementation of novel software tools and runtime systems able to manage a high degree of parallelism, reliability, and data locality in extreme-scale computers.[6] New programming constructs and runtime mechanisms are needed that can adapt to the most appropriate degree of parallelism and communication decomposition to make data analysis tasks scalable and reliable. Their dependence on parallelism grain size and on data analysis task decomposition must be studied in depth, because the exploitation of parallelism depends on several factors, such as parallel operations, communication overhead, input data size, I/O speed, problem size, and hardware configuration. Moreover, reliability and reproducibility are two additional key challenges to be addressed. At the programming level, constructs for handling and recovering from communication, data access, and computing failures must be designed. At the same time, reproducibility in scalable data analysis requires rich information to ensure similar results in environments that may change dynamically. All these factors must be taken into account in designing data analysis applications and tools that will be scalable on exascale systems.
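The trade-off between grain size and communication overhead can be sketched with a deliberately simple, hypothetical cost model: T(p) = W/p + c*p, where W is the total computation and c is a per-task communication cost. This model is an assumption for illustration only; real exascale runtimes must also account for I/O, data size, and hardware configuration as listed above. Under it, the best degree of parallelism is about sqrt(W/c), and adding tasks beyond that point makes the analysis slower.

```python
def modeled_time(p: int, work: float, comm_cost: float) -> float:
    """Hypothetical cost model: perfectly divisible work W/p plus a
    communication overhead that grows linearly with the number of tasks."""
    return work / p + comm_cost * p


def best_degree(work: float, comm_cost: float, max_p: int) -> int:
    """Exhaustively pick the parallelism degree in 1..max_p with the lowest modeled time."""
    return min(range(1, max_p + 1), key=lambda p: modeled_time(p, work, comm_cost))


# Finer grain pays off while W/p dominates; past sqrt(W/c) the overhead wins.
print(best_degree(work=10_000.0, comm_cost=1.0, max_p=1_000))  # 100
print(best_degree(work=10_000.0, comm_cost=4.0, max_p=1_000))  # 50
```

Quadrupling the communication cost halves the optimal number of tasks, which illustrates why runtimes should adapt the parallelism degree instead of fixing it.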

Moreover, reliable and effective methods for storing, accessing, and communicating data; intelligent techniques for massive data analysis; and software architectures enabling the scalable extraction of knowledge from data are needed.[3] To reach this goal, models and technologies enabling cloud computing systems and HPC architectures must be extended, adapted, or completely changed to be reliable and scalable on the very large number of processors/cores that compose extreme-scale platforms, and to support the implementation of clever data analysis algorithms that are scalable and dynamic in resource usage. Exascale computing infrastructures will play the role of an extraordinary platform for addressing both the computational and data storage needs of big data analysis applications. However, as mentioned before, to complete this scenario, efforts must be made to implement big data analytics algorithms, architectures, programming tools, and applications on exascale systems.[7]

If this objective is pursued, within a few years scalable data access and analysis systems will become the most used platforms for big data analytics on large-scale clouds. In the longer term, over the next decades, new exascale computing infrastructures will appear as viable platforms for big data analytics, and data mining algorithms, tools, and applications will be ported to such platforms to implement extreme data discovery solutions.

In this paper we first discuss cloud-based scalable data mining and machine learning solutions; then we examine the main research issues that must be addressed for implementing massively parallel data mining applications on exascale computing systems. Data-related issues are discussed together with communication, multi-processing, and programming issues. Specifically, we introduce issues and systems for scalable data analysis on clouds and then discuss design and programming issues for big data analysis in exascale systems. We close by outlining some open design challenges.

Data analysis on cloud computing platforms

Cloud computing platforms implement elastic services, scalable performance, and scalable data storage used by a large and ever-increasing number of users and applications.[8][9] In fact, cloud platforms have enlarged the arena of distributed computing systems by providing advanced internet services that complement and complete the functionalities of distributed computing provided by the internet, grid systems, and peer-to-peer networks. In particular, most cloud computing applications use big data repositories stored within the cloud itself, so in those cases large datasets can be analyzed with low latency to effectively extract data analysis models.

"Big data" is a new and overused term that refers to massive, heterogeneous, and often unstructured digital content that is difficult to process using traditional data management tools and techniques. The term includes the complexity and variety of data and data types, real-time data collection and processing needs, and the value that can be obtained by smart analytics. However, we should recognize that data are not necessarily important per se; they become very important if we are able to extract value from them, that is, if we can exploit them to make discoveries. The extraction of useful knowledge from big digital datasets requires smart and scalable analytics algorithms, services, programming tools, and applications. All these tools require insights into big data to make them more useful for people.

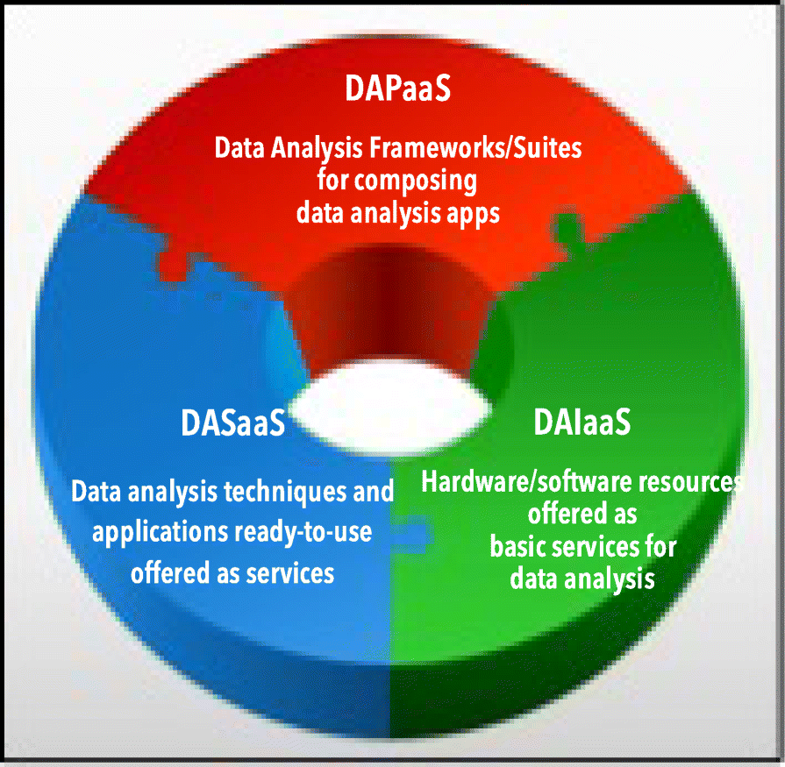

The growing use of service-oriented computing is accelerating the use of cloud-based systems for scalable big data analysis. Developers and researchers are adopting the three main cloud models, software as a service (SaaS), platform as a service (PaaS), and infrastructure as a service (IaaS), to implement big data analytics solutions in the cloud.[10][11] Following a specialization of these three models, data analysis tasks and applications can be offered as services at the software, platform, or infrastructure level and made available anytime and from anywhere. A methodology for implementing them defines a new model stack for delivering data analysis solutions; this stack is a specialization of the XaaS (everything as a service) stack and is called "data analysis as a service" (DAaaS). It adapts and specifies the three general service models (SaaS, PaaS, and IaaS) to support the structured development of big data analysis systems, tools, and applications according to a service-oriented approach. The DAaaS methodology is thus based on three basic models for delivering data analysis services at different levels, as described here (see also Fig. 1):

- Data analysis infrastructure as a service (DAIaaS): This model provides a set of hardware/software virtualized resources that developers can assemble and use as an integrated infrastructure for storing large datasets, running data mining applications, and/or implementing data analytics systems from scratch;

- Data analysis platform as a service (DAPaaS): This model defines a supporting software platform that developers can use for programming and running their data analytics applications or extending existing ones without worrying about the underlying infrastructure or specific distributed architecture issues; and

- Data analysis software as a service (DASaaS): This is a higher-level model that offers to end users data mining algorithms, data analysis suites, or ready-to-use knowledge discovery applications as internet services that can be accessed and used directly through a web browser. According to this approach, all data analysis software is provided as a service, so end users do not have to worry about implementation and execution details.


Cloud-based data analysis tools

Using the DASaaS methodology, we designed a cloud-based system, the Data Mining Cloud Framework (DMCF),[12] which supports three main classes of data analysis and knowledge discovery applications:

- Single-task applications, in which a single data mining task such as classification, clustering, or association rules discovery is performed on a given dataset;

- Parameter-sweeping applications, in which a dataset is analyzed by multiple instances of the same data mining algorithm with different parameters; and

- Workflow-based applications, in which knowledge discovery applications are specified as graphs linking together data sources, data mining tools, and data mining models.
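A parameter-sweeping application can be sketched in a few lines of Python: the same algorithm is run concurrently with different parameter values, and the best configuration is kept. The "algorithm" here is a hypothetical one-feature threshold classifier on a toy dataset, and local threads stand in for the cloud virtual machines DMCF would use; this is not DMCF's API.

```python
from concurrent.futures import ThreadPoolExecutor

# Toy labelled dataset: (feature value, class label). Purely illustrative.
DATASET = [(0.1, 0), (0.4, 0), (0.35, 0), (0.8, 1),
           (0.65, 1), (0.9, 1), (0.5, 1), (0.2, 0)]


def threshold_classifier_accuracy(threshold: float) -> float:
    """One instance of a (trivial) mining algorithm: predict class 1 when x >= threshold."""
    correct = sum((x >= threshold) == bool(y) for x, y in DATASET)
    return correct / len(DATASET)


def parameter_sweep(params):
    # Each parameter value is evaluated by an independent task.
    with ThreadPoolExecutor() as pool:
        return dict(zip(params, pool.map(threshold_classifier_accuracy, params)))


results = parameter_sweep([0.3, 0.45, 0.6, 0.75])
best = max(results, key=results.get)
print(results, "best threshold:", best)  # best threshold: 0.45
```

Because the instances share nothing but the read-only input, they scale out trivially; only the final selection of the best result is sequential.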

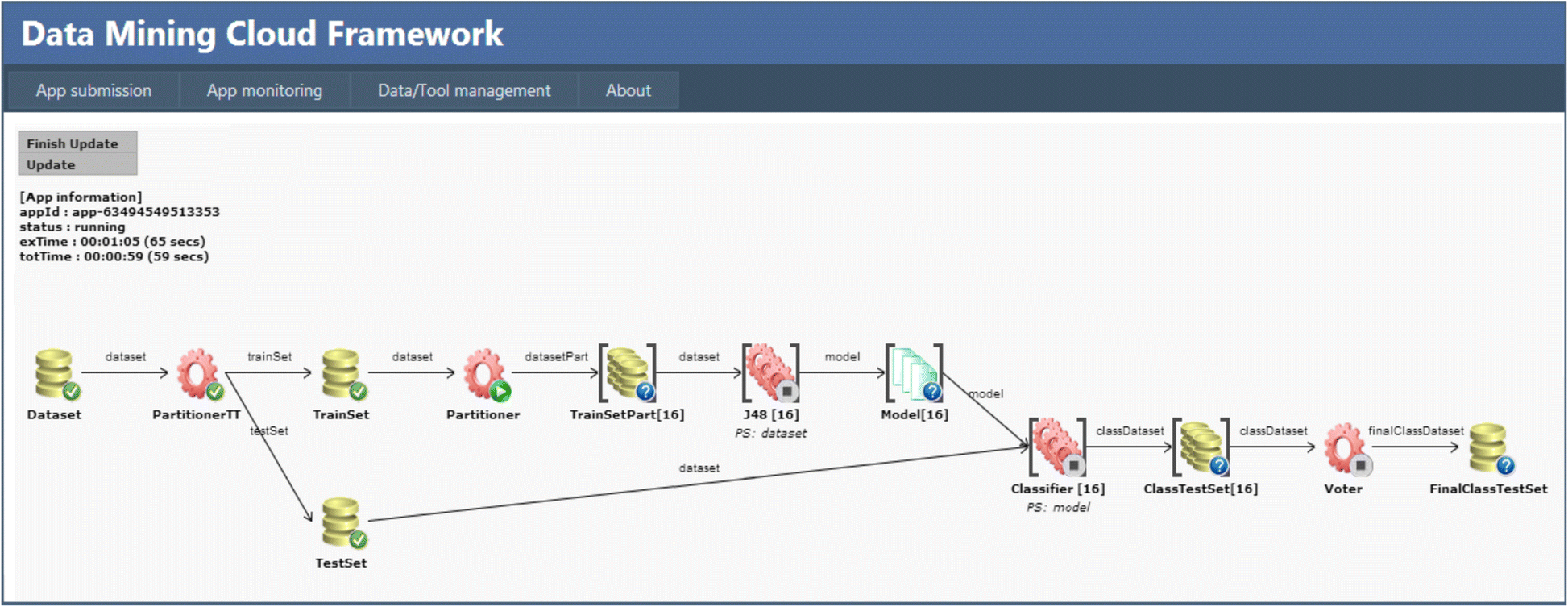

DMCF includes a large variety of processing patterns to express knowledge discovery workflows as graphs whose nodes denote resources (datasets, data analysis tools, mining models) and whose edges denote dependencies among resources. A web-based user interface allows users to compose their applications and submit them for execution to the cloud platform, following the data analysis software as a service approach. Visual workflows can be programmed in DMCF through a language called VL4Cloud (Visual Language for Cloud), whereas script-based workflows can be programmed by JS4Cloud (JavaScript for Cloud), a JavaScript-based language for data analysis programming.
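The graph-based execution model can be illustrated with a small, hypothetical Python executor (it is not DMCF or VL4Cloud code). For brevity, each node is a tool whose output is stored under the node's own name, and edges are dependencies: a node runs only after all its predecessors have finished, and nodes that become ready at the same time run concurrently. It relies on `graphlib.TopologicalSorter` from the Python 3.9+ standard library.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


def run_workflow(graph, tasks):
    """Execute a workflow DAG. `graph` maps each node to the set of nodes it
    depends on; `tasks` maps each node to a function that receives its
    dependencies' results as a dict and returns the node's own result."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    results = {}
    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            ready = sorter.get_ready()  # nodes whose dependencies are all satisfied
            futures = {n: pool.submit(tasks[n], {d: results[d] for d in graph.get(n, ())})
                       for n in ready}
            for node, fut in futures.items():  # wait for this batch, then unlock successors
                results[node] = fut.result()
                sorter.done(node)
    return results


# Hypothetical workflow: load -> clean -> {stats, cluster} (in parallel) -> report
graph = {"clean": {"load"}, "stats": {"clean"}, "cluster": {"clean"},
         "report": {"stats", "cluster"}}
tasks = {
    "load": lambda deps: [3, 1, 4, 1, 5, 9, 2, 6],
    "clean": lambda deps: sorted(set(deps["load"])),
    "stats": lambda deps: sum(deps["clean"]) / len(deps["clean"]),
    "cluster": lambda deps: [v for v in deps["clean"] if v > 4],
    "report": lambda deps: (deps["stats"], deps["cluster"]),
}
print(run_workflow(graph, tasks)["report"])
```

A production engine would launch each node as soon as its own inputs are ready rather than waiting for the whole batch, and would move datasets between cloud storage and virtual machines.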

Figure 2 shows a sample data mining workflow composed of several sequential and parallel steps. It is just an example for presenting the main features of the VL4Cloud programming interface.[12] The example workflow analyses a dataset by using n instances of a classification algorithm, which work on n portions of the training set and generate the same number of knowledge models. By using the n generated models and the test set, n classifiers produce in parallel n classified datasets (n classifications). In the final step of the workflow, a voter generates the final classification by assigning a class to each data item, by choosing the class predicted by the majority of the models.
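The Fig. 2 pattern (split the training set into n portions, train n models, classify the test set n times, then vote) can be sketched as follows. A nearest-centroid classifier on one numeric feature is assumed as a stand-in, since the figure does not fix the classification algorithm; the per-model steps, which DMCF would run in parallel, are written sequentially here for clarity.

```python
from collections import Counter


def train_centroids(rows):
    """Training step: mean feature value per class (a nearest-centroid model)."""
    sums, counts = {}, Counter()
    for x, y in rows:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] += 1
    return {y: sums[y] / counts[y] for y in sums}


def classify(model, x):
    # Predict the class whose centroid is closest to x.
    return min(model, key=lambda y: abs(x - model[y]))


def ensemble_workflow(train, test, n):
    partitions = [train[i::n] for i in range(n)]                   # n portions of the training set
    models = [train_centroids(p) for p in partitions]              # n knowledge models
    classified = [[classify(m, x) for x in test] for m in models]  # n classified datasets
    # Voter: for each test item, choose the class predicted by most models.
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*classified)]


train = [(1.0, "a"), (1.2, "a"), (0.8, "a"), (5.0, "b"), (5.5, "b"),
         (4.8, "b"), (1.1, "a"), (5.2, "b"), (0.9, "a")]
print(ensemble_workflow(train, [1.0, 5.1, 3.0], n=3))  # ['a', 'b', 'a']
```

For the ambiguous item 3.0 the three models disagree (two vote "a", one votes "b"), so the voter resolves it. With two classes an odd n avoids ties; otherwise `Counter.most_common` returns the first class encountered.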


Although DMCF has been mainly designed to coordinate coarse-grain data and task parallelism in big data analysis applications by exploiting the workflow paradigm, the DMCF script-based programming interface (JS4Cloud) also allows fine-grain operations in data mining algorithms to be parallelized, since any data mining algorithm, such as classification or clustering, can be programmed in a JavaScript style. This is possible because loops and data-parallel methods are run in parallel on the virtual machines of a cloud.[13][14]

Besides DMCF, other innovative cloud-based systems designed for programming data analysis applications include Apache Spark, Sphere, Swift, Mahout, and CloudFlows. Most of them are open-source. Apache Spark is an open-source framework developed at the University of California, Berkeley for in-memory data analysis and machine learning.[5] Spark has been designed to run both batch processing and dynamic applications like streaming, interactive queries, and graph analysis. Spark provides developers with a programming interface centered on a data structure called the "resilient distributed dataset" (RDD), a read-only multi-set of data items distributed over a cluster of machines and maintained in a fault-tolerant way. Unlike Hadoop and other systems, Spark keeps data in memory and can query it repeatedly, which yields better performance. This feature can be useful for a future implementation of Spark on exascale systems.
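The idea behind RDD fault tolerance can be illustrated with a toy, single-process Python class: partitions are immutable, transformations such as map and filter only record lineage (parent dataset plus function), and a lost in-memory partition is recomputed from that lineage. The class and method names are hypothetical and do not reproduce Spark's API or scheduler.

```python
from typing import Callable, List, Optional, Tuple


class ToyRDD:
    """Toy analogue of an RDD: read-only partitions plus the lineage
    (parent + transformation) needed to rebuild a lost one. Not Spark's API."""

    def __init__(self, partitions: Optional[List[Tuple]] = None,
                 parent: Optional["ToyRDD"] = None,
                 fn: Optional[Callable[[Tuple], Tuple]] = None):
        self.parent, self.fn = parent, fn
        # Source datasets hold their partitions; derived ones start uncomputed.
        self.cache: Optional[List[Optional[Tuple]]] = (
            [tuple(p) for p in partitions] if partitions is not None else None)

    # Transformations are lazy: they only record lineage.
    def map(self, f: Callable) -> "ToyRDD":
        return ToyRDD(parent=self, fn=lambda part: tuple(f(x) for x in part))

    def filter(self, pred: Callable) -> "ToyRDD":
        return ToyRDD(parent=self, fn=lambda part: tuple(x for x in part if pred(x)))

    def num_partitions(self) -> int:
        return len(self.cache) if self.cache is not None else self.parent.num_partitions()

    def partition(self, i: int) -> Tuple:
        if self.cache is not None and self.cache[i] is not None:
            return self.cache[i]                  # served from memory
        return self.fn(self.parent.partition(i))  # recomputed from lineage

    def persist(self) -> "ToyRDD":
        # Materialize every partition in memory (cf. Spark's cache/persist).
        self.cache = [self.partition(i) for i in range(self.num_partitions())]
        return self

    def lose_partition(self, i: int) -> None:
        self.cache[i] = None                      # simulate a failed worker

    def collect(self) -> list:
        return [x for i in range(self.num_partitions()) for x in self.partition(i)]


source = ToyRDD(partitions=[(1, 2, 3), (4, 5, 6)])
even_squares = source.map(lambda x: x * x).filter(lambda x: x % 2 == 0).persist()
even_squares.lose_partition(1)
print(even_squares.collect())  # [4, 16, 36]; partition 1 is rebuilt from lineage
```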

Swift is a workflow-based framework for implementing functional data-driven task parallelism in data-intensive applications. The Swift language provides a functional programming paradigm in which workflows are designed as a set of calls with associated command-line arguments and input and output files. Swift uses implicit data-driven task parallelism.[15] It looks like a sequential language, but because it is a dataflow language, all variables are futures, and execution is therefore driven by data availability. Parallelism can also be exploited through the foreach statement. Swift/T is a new implementation of the Swift language for high-performance computing. In this implementation, a Swift program is translated into an MPI program that uses the Turbine and ADLB runtime libraries for scalable dataflow processing over MPI. Recently, porting Swift/T to large cloud systems for the execution of numerous tasks has been investigated.
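Swift's "every variable is a future" semantics can be imitated in Python with `concurrent.futures`: calling a wrapped function returns a future at once, and its body runs when all future arguments have values. The `dataflow` decorator is a hypothetical helper, not Swift syntax; it simply blocks a worker thread while waiting for inputs, whereas a dataflow runtime such as Swift/T's schedules a task only when its inputs are ready.

```python
from concurrent.futures import Future, ThreadPoolExecutor

pool = ThreadPoolExecutor(max_workers=4)


def dataflow(fn):
    """Hypothetical decorator: calling the wrapped function returns a Future
    immediately; its body runs once every Future argument has a value."""
    def call(*args) -> Future:
        def run():
            # Wait for inputs (data availability), then compute.
            values = [a.result() if isinstance(a, Future) else a for a in args]
            return fn(*values)
        # Inputs are always submitted before their consumers, so FIFO
        # scheduling cannot deadlock here even though waiting blocks a thread.
        return pool.submit(run)
    return call


@dataflow
def square(x):
    return x * x


@dataflow
def total(*xs):
    return sum(xs)


squares = [square(i) for i in range(5)]  # like Swift's foreach: independent tasks
result = total(*squares)                 # reads like sequential code, runs when inputs are ready
print(result.result())                   # 30
pool.shutdown()
```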

Unlike the other frameworks discussed here, DMCF offers both a visual and a script-based programming interface. Visual programming is a very convenient design approach for high-level users, like domain-expert analysts having a limited understanding of programming. On the other hand, script-based workflows are a useful paradigm for expert programmers who can code complex applications rapidly, in a more concise way and with greater flexibility. Finally, the workflow-based model exploited in DMCF and Swift makes these frameworks more general-purpose than Spark, which offers a very restricted set of programming patterns (e.g., map, filter, and reduce), thus limiting the variety of data analysis applications that can be implemented with it.

These and other related systems are currently used to develop big data analysis applications on HPC and cloud platforms. However, additional research in this field must be done, and new models, solutions, and tools need to be developed.[7][16] A few active and promising research topics are listed here, in order of importance:

1. Programming models for big data analytics: New abstract programming models and constructs hiding the system complexity are needed for big data analytics tools. The MapReduce model and workflow models are often used on HPC and cloud implementations, but more research effort is needed to develop other scalable, adaptive, general-purpose higher-level models and tools. Research in this area is even more important for exascale systems; in the next section we will discuss some of these topics in exascale computing.

2. Reliability in scalable data analysis: As the number of processing elements increases, the reliability of systems and applications decreases, and therefore mechanisms for detecting and handling hardware and software faults are needed. Although Fekete et al.[17] have proven that no reliable communication protocol can tolerate crashes of the processors on which the protocol runs, systems can cope with this impossibility result in several ways. Among them, at the programming level it is necessary to design constructs for handling communication, data access, and computing failures and for recovering from them. Programming models, languages, and APIs must provide general and data-oriented mechanisms for failure detection and isolation, preventing an entire application from failing and assuring its completion. Reliability is an even more important issue in the exascale domain, where the number of processing elements is massive and faults occur more frequently, making detection and recovery vital.

3. Application reproducibility: Reproducibility is another open research issue for designers of complex applications running on parallel systems. Reproducibility in scalable data analysis must, for example, deal with data communication, data-parallel manipulation, and dynamic computing environments. Reproducibility demands that current data analysis frameworks (like those based on MapReduce and on workflows) and future ones, especially those implemented on exascale systems, provide additional information and knowledge on how data are managed, on algorithm characteristics, and on the configuration of software and execution environments.

4. Data and tool integration and openness: Code coordination and data integration are major issues in large-scale applications that use data and computing resources. Standard formats, data exchange models, and common application programming interfaces (APIs) are needed to support interoperability and ease cooperation among design teams using different data formats and tools.

5. Interoperability of big data analytics frameworks: The service-oriented paradigm allows running large-scale distributed applications on heterogeneous cloud platforms along with software components developed using different programming languages or tools. Cloud service paradigms must be designed to allow worldwide integration of multiple data analytics frameworks.
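A minimal Python sketch of the failure-handling constructs called for in item 2: each task is retried a bounded number of times, and tasks that still fail are isolated and reported instead of aborting the whole run. It assumes tasks are idempotent (safe to re-execute), and the helper and task names are hypothetical; it addresses transient faults only and does not circumvent the impossibility result cited in item 2.

```python
import time


def run_with_retries(task, *args, retries=3, backoff=0.0):
    """Run task(*args), retrying up to `retries` times with exponential backoff.
    Returns (True, result) or (False, last_exception) instead of raising."""
    last_exc = None
    for attempt in range(retries + 1):
        try:
            return True, task(*args)
        except Exception as exc:  # a real system would retry only transient errors
            last_exc = exc
            if attempt < retries:
                time.sleep(backoff * 2 ** attempt)
    return False, last_exc


def run_all(tasks):
    """Failure isolation: a task that keeps failing is reported, not fatal."""
    succeeded, failed = {}, {}
    for name, (fn, args) in tasks.items():
        ok, value = run_with_retries(fn, *args)
        (succeeded if ok else failed)[name] = value
    return succeeded, failed


calls = {"flaky": 0}


def flaky_read(x):
    # Hypothetical task that fails twice with a transient I/O error, then succeeds.
    calls["flaky"] += 1
    if calls["flaky"] < 3:
        raise IOError("transient read error")
    return x * 2


def corrupt_partition(_):
    raise ValueError("corrupt partition")


succeeded, failed = run_all({"partition-1": (flaky_read, (21,)),
                             "partition-2": (corrupt_partition, (0,))})
print(succeeded, list(failed))  # {'partition-1': 42} ['partition-2']
```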

Exascale and big data analysis

As we discussed in the previous sections, data analysis has gained a primary role because of the wide availability of very large datasets and the continuous advancement of methods and algorithms for finding knowledge in them. Data analysis solutions advance by exploiting the power of data mining and machine learning techniques and are changing several scientific and industrial areas. For example, the amount of data that social media generate daily is impressive and continuous. Several hundred terabytes of data, including several hundred million photos, are uploaded daily to Facebook and Twitter.

It is therefore central to design scalable solutions for processing and analyzing such massive datasets. As a general forecast, IDC experts estimate that the data generated worldwide will reach about 45 zettabytes by 2020.[18] This impressive amount of digital data calls for scalable high-performance data analysis solutions. However, today only one-quarter of the available digital data would be a candidate for analysis, and only about five percent of that is actually analyzed. By 2020, the useful percentage could grow to about 35 percent, thanks to data mining technologies.

Extreme data sources and scientific computing

Scalability and performance requirements are challenging conventional data storage, file systems, and database management systems. The architectures of such systems have reached their limits in handling extremely large processing tasks involving petabytes of data, because they were not built to scale beyond a given threshold. New architectures and analytics platform solutions able to process big data for extracting complex predictive and descriptive models have become necessary.[19] Exascale systems, both from the hardware and the software side, can play a key role in supporting solutions to these problems.[1]

An IBM study reports that we are generating around 2.5 exabytes of data per day.[20] Because of this continuous and explosive growth of data, many applications require scalable data analysis platforms. A well-known example is the ATLAS detector at the Large Hadron Collider at CERN in Geneva. The ATLAS infrastructure has a capacity of 200 PB of disk space and 300,000 processor cores, with more than 100 computing centers connected via 10 Gbps links. The data collection rate is massive, and only a portion of the data produced by the collider is stored. Several teams of scientists run complex applications to analyze subsets of those huge volumes of data. This analysis would be impossible without a high-performance infrastructure that supports data storage, communication, and processing. Computational astronomers, too, are collecting and producing increasingly large datasets each year that cannot be stored and processed without scalable infrastructures. Another significant case is the Energy Sciences Network (ESnet), the U.S. Department of Energy’s high-performance network managed by Berkeley Lab, which in late 2012 rolled out a 100 gigabits-per-second national network to accommodate the growing scale of scientific data.

If we go from science to society, social data and eHealth are good examples to discuss. Social networks, such as Facebook and Twitter, have become very popular and are receiving increasing attention from the research community because of the huge amount of user-generated data, which provide valuable information concerning human behavior, habits, and travel. When the volume of data to be analyzed is on the order of terabytes or petabytes (billions of tweets or posts), scalable storage and computing solutions must be used, but no clear solutions exist today for the analysis of exascale datasets. The same occurs in the eHealth domain, where huge amounts of patient data are available and can be used for improving therapies, for forecasting and tracking health data, and for managing hospitals and health centers. Very complex data analysis in this area will need novel hardware/software solutions; exascale computing is also promising in other scientific fields where scalable storage and databases are not used or required. Examples of scientific disciplines where future exascale computing will be extensively used are quantum chromodynamics, materials simulation, molecular dynamics, materials design, earthquake simulations, subsurface geophysics, climate forecasting, nuclear energy, and combustion. All those applications require sophisticated models and algorithms to solve complex equation systems and will benefit from the exploitation of exascale systems.

Programming model features for exascale data analysis

Implementing scalable data analysis applications in exascale computing systems is a complex job requiring high-level fine-grain parallel models, appropriate programming constructs, and skills in parallel and distributed programming. In particular, mechanisms and expertise are needed for expressing task dependencies and inter-task parallelism, for designing synchronization and load balancing mechanisms, handling failures, and properly managing distributed memory and concurrent communication among a very large number of tasks. Moreover, when the target computing infrastructures are heterogeneous and require different libraries and tools to program applications on them, the programming issues are even more complex. To cope with some of these issues in data-intensive applications, different scalable programming models have been proposed.[21]

Scalable programming models may be categorized by:

- i. Their level of abstraction, expressing high-level and low-level programming mechanisms, and

- ii. How they allow programmers to develop applications, using visual or script-based formalisms.

Using high-level scalable models, a programmer defines only the high-level logic of an application while hiding the low-level details that are not essential for application design, including infrastructure-dependent execution details. A programmer is assisted in application definition, and application performance depends on the compiler that analyzes the application code and optimizes its execution on the underlying infrastructure. On the other hand, low-level scalable models allow programmers to interact directly with computing and storage elements composing the underlying infrastructure and thus define the application's parallelism directly.

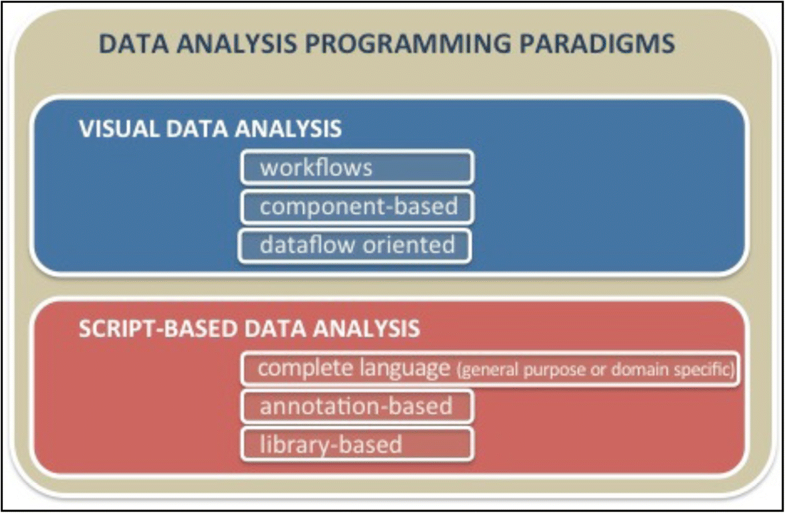

Data analysis applications implemented by some frameworks can be programmed through a visual interface, which is a convenient design approach for high-level users, for instance domain-expert analysts having a limited understanding of programming. In addition, a visual representation of workflows or components intrinsically captures parallelism at the task level, without the need to make parallelism explicit through control structures.[6] Visual-based data analysis is typically implemented by providing workflow-based languages or component-based paradigms (Fig. 3). Dataflow-based approaches that share the same application structure with workflows are also used. However, in dataflow models, the grain of parallelism and the size of data items are generally smaller than in workflows. In general, visual programming tools are not very flexible because they often implement a limited set of visual patterns and provide restricted ways to configure them. To address this issue, some visual languages allow users to customize the behavior of patterns by adding code that specifies the operations a pattern executes when a specific event occurs.


On the other hand, code-based (or script-based) formalism allows users to program complex applications more rapidly, in a more concise way, and with higher flexibility.[13] Script-based applications can be designed in different ways (see Fig. 3):

- Use a complete language or a language extension that makes it possible to express parallelism in applications, following either a general-purpose or a domain-specific approach. This approach requires designing and implementing a new parallel programming language, or a complete set of data types and parallel constructs to be fully integrated into an existing language.

- Use annotations in the application code that allow the compiler to identify which instructions will be executed in parallel. According to this approach, parallel statements are separated from sequential constructs, and they are clearly identified in the program code because they are denoted by special symbols or keywords.

- Use a library in the application code that adds parallelism to the data analysis application. Currently this is the most-used approach since it is orthogonal to host languages. MPI and MapReduce are two well-known examples of this approach.
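To make the library-based option concrete, here is a minimal Python sketch built around a hypothetical `map_reduce` helper; it is not the API of Hadoop or of any real MapReduce library. Parallelism lives entirely in the library call, while the application supplies only a sequential mapper and reducer. The thread pool stands in for a cluster: under CPython's global interpreter lock it shows the structure of the approach, not a real speed-up.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable


def map_reduce(records: Iterable, mapper: Callable, reducer: Callable, workers: int = 4) -> dict:
    """Hypothetical library call: parallel map, shuffle by key, parallel reduce."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Map phase: each record yields (key, value) pairs, processed concurrently.
        mapped = list(pool.map(lambda r: list(mapper(r)), records))
    # Shuffle phase: group all values emitted for the same key.
    groups = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    # Reduce phase: one reducer call per key, also run through the pool.
    keys = list(groups)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        reduced = list(pool.map(lambda k: reducer(k, groups[k]), keys))
    return dict(zip(keys, reduced))


# Application code stays sequential: it only says what to do per record and per key.
def word_mapper(line: str):
    for word in line.lower().split():
        yield word, 1


def count_reducer(word: str, counts: list) -> int:
    return sum(counts)


lines = ["big data analysis", "scalable data mining", "big data on exascale"]
counts = map_reduce(lines, word_mapper, count_reducer)
print(counts)
```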

Given the variety of data analysis applications and classes of users (from skilled programmers to end users) that can be envisioned for future exascale systems, there is a need for scalable programming models with different levels of abstractions (high-level and low-level) and different design formalisms (visual and script-based), according to the classification outlined above.

As we discussed, data-intensive applications are software programs that have a significant need to process large volumes of data.[22] Such applications devote most of their processing time to running I/O operations and exchanging and moving data among the processing elements of a parallel computing infrastructure. Parallel processing in data analysis applications typically involves accessing, pre-processing, partitioning, distributing, aggregating, querying, mining, and visualizing data that can be processed independently.

The main challenges for programming data analysis applications on exascale computing systems concern scalability; network latency and reliability; reproducibility of data analysis; and resilience of the mechanisms and operations offered to developers for accessing, exchanging, and managing data. Indeed, processing extremely large data volumes requires operations and new algorithms able to scale in loading, storing, and processing massive amounts of data that generally must be partitioned into very small data grains, on which thousands to millions of simple parallel operations perform the analysis.

Exascale programming systems

Exascale systems impose new requirements on programming systems, which must target platforms with hundreds of homogeneous and heterogeneous cores. Evolutionary models have recently been proposed for exascale programming that extend or adapt traditional parallel programming models like MPI (e.g., EPiGRAM[23], which uses a library-based approach, and Open MPI for exascale in the ECP initiative), OpenMP (e.g., OmpSs[24], which exploits an annotation-based approach, and the SOLLVE project), and MapReduce (e.g., Pig Latin[25], which implements a domain-specific complete language). These new frameworks reduce the communication overhead in message-passing paradigms, or limit synchronization overhead when a shared-memory model is used.[26]

As exascale systems are likely to be based on large distributed-memory hardware, MPI is one of the most natural programming systems for them. MPI is currently used on over one million cores, and therefore it is reasonable to expect MPI to be one of the programming paradigms used on exascale systems. The same holds for MapReduce-based libraries, which today run on very large HPC and cloud systems. Both paradigms are widely used for implementing big data analysis applications. As expected, general MPI all-to-all communication does not scale well in exascale environments; to address this issue, recent MPI releases introduced neighbor collectives that support sparse “all-to-some” communication patterns, restricting data exchange to limited regions of processors.[26]
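The difference in traffic can be sketched in Python. The sketch below only counts messages and does not call MPI; it assumes ranks laid out on a periodic 2D grid where each rank talks to its four neighbors, the kind of pattern that MPI-3 neighborhood collectives (for example `MPI_Neighbor_alltoall` over a Cartesian topology) are meant to express. The helper names are hypothetical.

```python
def torus_neighbors(rank: int, rows: int, cols: int) -> list[int]:
    """Up, down, left, right neighbors of a rank on a periodic rows x cols grid."""
    r, c = divmod(rank, cols)
    return [
        ((r - 1) % rows) * cols + c,   # up
        ((r + 1) % rows) * cols + c,   # down
        r * cols + (c - 1) % cols,     # left
        r * cols + (c + 1) % cols,     # right
    ]


def message_counts(rows: int, cols: int) -> tuple[int, int]:
    """Messages in one exchange step: dense all-to-all vs. 4-neighbor exchange."""
    p = rows * cols
    all_to_all = p * (p - 1)
    neighbor = sum(len(torus_neighbors(rank, rows, cols)) for rank in range(p))
    return all_to_all, neighbor


def neighbor_exchange(values: list, rows: int, cols: int) -> list[list]:
    """Sparse 'all-to-some' step: each rank receives only its neighbors' values."""
    return [[values[n] for n in torus_neighbors(rank, rows, cols)]
            for rank in range(rows * cols)]


for side in (4, 32, 256):
    dense, sparse = message_counts(side, side)
    print(f"{side * side:>6} ranks: all-to-all={dense:,}  neighbor={sparse:,}")
```

The dense count grows with the square of the number of ranks, while the neighbor count grows linearly (four messages per rank).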

Ensuring the reliability of exascale systems requires a holistic approach, including several hardware and software technologies both for predicting crashes and for keeping systems stable despite failures. In the runtime of parallel APIs (like MPI and MapReduce-based libraries such as Hadoop), a reliable communication layer must be provided if incorrect behavior in case of processor failure is to be mitigated. Such a layer is built on top of the lower, unreliable layer by implementing a correct protocol that works safely with every implementation of the unreliable layer, which by itself cannot tolerate crashes of the processors on which it runs. Concerning MapReduce frameworks, reference[27] reports on an adaptive MapReduce framework called P2P-MapReduce, developed to manage node churn, master node failures, and job recovery in a decentralized way, which provides a more reliable MapReduce middleware that can be effectively exploited in dynamic large-scale infrastructures.
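One classic way to layer reliable delivery over an unreliable transport is acknowledgment plus retransmission. The sketch below is a hypothetical stop-and-wait protocol in Python over a simulated lossy channel. It tolerates lost messages and lost acknowledgments, and it reports a peer that never answers as an error that a higher recovery layer would have to handle. It is not the protocol of any specific MPI or Hadoop runtime.

```python
import random


class LossyChannel:
    """Unreliable layer: drops each transmission with probability `loss`."""

    def __init__(self, loss: float, seed: int = 0):
        self.loss = loss
        self.rng = random.Random(seed)

    def transmit(self, item):
        return None if self.rng.random() < self.loss else item


class Receiver:
    """Delivers each sequence number exactly once, in order."""

    def __init__(self):
        self.expected = 0
        self.delivered = []

    def on_packet(self, packet):
        seq, payload = packet
        if seq == self.expected:       # new packet: deliver it
            self.delivered.append(payload)
            self.expected += 1
        return seq                     # ack duplicates too, so a lost ack is repaired on resend


def reliable_send(messages, channel: LossyChannel, receiver: Receiver, max_tries: int = 100):
    """Stop-and-wait ARQ: resend each packet until its acknowledgment arrives."""
    for seq, payload in enumerate(messages):
        for _ in range(max_tries):
            packet = channel.transmit((seq, payload))
            if packet is None:
                continue               # data lost: treat as a timeout and resend
            ack = channel.transmit(receiver.on_packet(packet))
            if ack == seq:
                break                  # acknowledged: move to the next message
        else:
            # Persistent silence: hand the failure to the recovery layer above.
            raise TimeoutError(f"no acknowledgment for message {seq}")
    return receiver.delivered


msgs = [f"partial-model-{i}" for i in range(20)]
delivered = reliable_send(msgs, LossyChannel(loss=0.3, seed=42), Receiver())
print(delivered == msgs)
```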

On the other hand, new complete languages such as X10[28], ECL[29], UPC[30], Legion[31], and Chapel[32] have been defined following a data-centric approach. Furthermore, new APIs based on a revolutionary approach, such as GA[33] and SHMEM[34], have been implemented according to a library-based model. These novel parallel paradigms are designed to address the requirements of data processing with massive parallelism. In particular, languages such as X10, UPC, and Chapel and the GA library are based on a partitioned global address space (PGAS) memory model, which is suited to implementing data-intensive exascale applications because it uses private data structures and limits the amount of data shared among parallel threads.

Together with different approaches, such as Pig Latin and ECL, those programming models, languages, and APIs must be further investigated, designed, and adapted to provide data-centric scalable programming models that support the reliable and effective implementation of exascale data analysis applications composed of up to millions of computing units, which process small data elements and exchange them with a very limited set of processing elements. PGAS-based models, dataflow and data-driven paradigms, and local-data approaches today represent promising solutions for exascale data analysis programming. The APGAS model, for example, is implemented in the X10 language, based on the notions of places and asynchrony. A place is an abstraction of shared, mutable data and of the worker threads operating on that data. A single APGAS computation can consist of hundreds or potentially tens of thousands of places. Asynchrony is implemented by a single block-structured control construct, async: given a statement ST, the construct async ST executes ST in a separate thread of control. Memory locations in one place can contain references to locations at other places. To compute upon data at another place p, the statement at (p) ST must be used.

This allows the task to change its place of execution to p, execute ST at p and return, leaving behind tasks that may have been spawned during the execution of ST.
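As an illustration of these semantics only, here is a hypothetical Python analogue of places, async, and at, in which each place owns local data and a small thread pool. It is not X10 code, and Python threads do not provide X10's distributed execution; the point is that each task touches only the data of the place where it runs.

```python
from concurrent.futures import Future, ThreadPoolExecutor, wait


class Place:
    """Hypothetical stand-in for an X10 place: local mutable data plus worker threads."""

    def __init__(self, pid: int, workers: int = 2):
        self.id = pid
        self.data: dict = {}
        self.pool = ThreadPoolExecutor(max_workers=workers)

    def at(self, fn, *args):
        """Like `at (p) ST`: run fn at this place and block until it returns."""
        return self.pool.submit(fn, self, *args).result()

    def async_(self, fn, *args) -> Future:
        """Like `async ST` at this place: spawn fn without waiting for it."""
        return self.pool.submit(fn, self, *args)


def local_sum(place: Place) -> int:
    # Runs at `place` and reads only that place's data.
    return sum(place.data["chunk"])


places = [Place(i) for i in range(4)]
for p in places:
    p.data["chunk"] = list(range(p.id * 10, p.id * 10 + 10))   # partitioned data

# One asynchronous task per place, then wait for all of them
# (X10 groups such waits with its `finish` construct).
futures = [p.async_(local_sum) for p in places]
wait(futures)
print(sum(f.result() for f in futures))   # global result from local partial sums
print(places[2].at(local_sum))            # synchronous computation at place 2
```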

Another interesting language based on the PGAS model is Chapel.[32] Its locality mechanisms can be effectively used for scalable data analysis, where light data mining (sub-)tasks run on local processing elements and partial results must be exchanged. Chapel's data locality provides control over where data values are stored and where tasks execute, so that developers can ensure that parallel data analysis computations execute near the variables they access, or vice versa, minimizing communication and synchronization costs. For example, Chapel programmers can specify how domains and arrays are distributed among the system nodes. Another appealing feature of Chapel is the expression of synchronization in a data-centric style. By associating synchronization constructs with data (variables), locality is enforced and data-driven parallelism can be expressed easily, even at large scale. In Chapel, "locales" and "domains" are abstractions for referring to machine resources and for mapping tasks and data to them. Locales are language abstractions for naming a portion of a target architecture (e.g., a GPU, a single core, or a multicore node) that has processing and storage capabilities. A locale specifies where (on which processing node) tasks, statements, and operations execute. For example, in a system composed of four locales:

we can use the following for executing the method Filter (D) on the first locale:


And to execute the K-means() algorithm on the four locales, we can use:


Whereas locales are used to map tasks to machine nodes, domain maps are used for mapping data to a target architecture. Here is a simple example of a declaration of a rectangular domain:

Domains can also be mapped to locales. Similar concepts (logical regions and mapping interfaces) are used in the Legion programming model.[31][21]
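The idea of a domain map can be sketched in Python, assuming a block distribution of a 2D rectangular domain over four locales arranged as a 2 x 2 grid, in the spirit of Chapel's Block distribution. This is not Chapel code, and the helper names are hypothetical. Each index has exactly one owning locale, so a task placed on that locale works on local data.

```python
def block_owner(i: int, j: int, n: int, grid: tuple[int, int]) -> int:
    """Locale id owning index (i, j) of an n x n domain, locales in a row-major grid."""
    grid_rows, grid_cols = grid
    # Each dimension is cut into contiguous, nearly equal blocks.
    bi = i * grid_rows // n
    bj = j * grid_cols // n
    return bi * grid_cols + bj


def local_indices(locale: int, n: int, grid: tuple[int, int]) -> list[tuple[int, int]]:
    """Indices a locale owns: the data its tasks should operate on locally."""
    return [(i, j) for i in range(n) for j in range(n)
            if block_owner(i, j, n, grid) == locale]


n, grid = 6, (2, 2)            # a 6 x 6 domain over four locales
for loc in range(4):
    owned = local_indices(loc, n, grid)
    print(f"locale {loc}: {len(owned)} indices, from {owned[0]} to {owned[-1]}")
```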

Exascale programming is a strongly evolving research field, and it is not possible to discuss in detail all programming models, languages, and libraries that are contributing to provide features and mechanisms useful for exascale data analysis application programming. However, the next section introduces, discusses, and classifies current programming systems for exascale computing according to the most used programming and data management models.

Exascale programming systems comparison

As mentioned, several parallel programming models, languages, and libraries are under development to provide high-level programming interfaces and tools for implementing high-performance applications on future exascale computers. Here we introduce the most significant proposals and discuss their main features. Table 1 lists and classifies the considered systems and summarizes some pros and cons of the different classes.


Since exascale systems will be composed of millions of processing nodes, distributed-memory paradigms, and message-passing systems in particular, are candidate tools for programming this class of systems. In this area, MPI is currently the most used and studied system, and different adaptations of this well-known model are under development, such as Open MPI for Exascale. Other systems based on distributed-memory programming are Pig Latin, Charm++, Legion, PaRSEC, Bulk Synchronous Parallel (BSP), AllScale API, and Enterprise Control Language (ECL). Considering Pig Latin alone, some of its parallel operators, such as FILTER, which selects a set of tuples from a relation based on a condition, and SPLIT, which partitions a relation into two or more relations, can be very useful in many highly parallel big data analysis applications.
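The semantics of these two operators can be sketched in Python on a relation represented as a list of tuples. In Pig Latin the example would read roughly `active = FILTER posts BY n_posts > 0;` and `SPLIT active INTO rome IF city == 'Rome', heavy IF n_posts >= 5;`; the functions below are hypothetical stand-ins, not Pig's implementation. Because each tuple is tested independently, both operators parallelize trivially over partitions of the relation.

```python
from typing import Callable

Relation = list[tuple]
Condition = Callable[[tuple], bool]


def pig_filter(relation: Relation, cond: Condition) -> Relation:
    """FILTER: keep only the tuples that satisfy cond."""
    return [t for t in relation if cond(t)]


def pig_split(relation: Relation, **conds: Condition) -> dict[str, Relation]:
    """SPLIT: send each tuple to every output whose condition it satisfies;
    as in Pig, a tuple may go to several outputs or to none."""
    return {name: pig_filter(relation, cond) for name, cond in conds.items()}


# Relation `posts` with schema (user, city, n_posts).
posts = [("ann", "Rome", 12), ("bob", "Milan", 3), ("eve", "Rome", 0), ("dan", "Turin", 7)]
active = pig_filter(posts, lambda t: t[2] > 0)
parts = pig_split(active, rome=lambda t: t[1] == "Rome", heavy=lambda t: t[2] >= 5)
print(active)
print(parts)
```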

On the other side are shared-memory models, where the major system is OpenMP. OpenMP offers a simple parallel programming model, but it does not provide mechanisms to explicitly map and control data distribution, and it includes non-scalable synchronization operations that make its implementation on massively parallel systems challenging. Other programming systems in this area are Threading Building Blocks (TBB), OmpSs, and Cilk++. The OpenMP synchronization model, based on locks and on atomic and sequential sections that limit parallelism exploitation in exascale systems, is being modified and integrated in recent OpenMP implementations with new techniques and routines that increase asynchronous operations and parallelism exploitation. A similar approach is used in Cilk++, which supports parallel loops and hyperobjects, a construct designed to solve the data race problems created by parallel accesses to global variables. In fact, a hyperobject allows multiple tasks to share state without race conditions and without using explicit locks.
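The idea behind a reducer hyperobject can be sketched in Python with a fork-join split: each parallel strand updates its own private view, so there are no shared writes and no locks, and the views are merged left to right with an associative operation. This is a hypothetical helper, not the Cilk++ API. It mirrors the property that the result matches a serial execution even when the merge is not commutative, as with list appends.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, TypeVar

T = TypeVar("T")
V = TypeVar("V")


def reduce_with_views(items: list, identity: Callable[[], V],
                      update: Callable[[V, T], V], merge: Callable[[V, V], V],
                      strands: int = 4) -> V:
    """Each strand updates a private view (no shared writes, no locks); views are
    merged left to right, so an associative merge reproduces the serial result."""
    if not items:
        return identity()
    size = -(-len(items) // strands)                 # ceiling division
    parts = [items[k:k + size] for k in range(0, len(items), size)]

    def run_strand(part: list) -> V:
        view = identity()                            # this strand's private view
        for x in part:
            view = update(view, x)
        return view

    with ThreadPoolExecutor(max_workers=strands) as pool:
        views = list(pool.map(run_strand, parts))    # kept in strand order
    result = views[0]
    for view in views[1:]:
        result = merge(result, view)
    return result


# A sum reducer, and an order-sensitive list-append reducer.
total = reduce_with_views(list(range(1, 101)), lambda: 0,
                          lambda acc, x: acc + x, lambda a, b: a + b)
evens = reduce_with_views(list(range(20)), list,
                          lambda acc, x: acc + [x] if x % 2 == 0 else acc,
                          lambda a, b: a + b)
print(total, evens)
```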

As a tradeoff between distributed and shared memory organizations, the Partitioned Global Address Space (PGAS) model implements a global memory address space that is logically partitioned, with portions of it local to single processes. The main goal of the PGAS model is to limit data exchange and isolate failures in very large-scale systems. Languages and libraries based on PGAS include Unified Parallel C (UPC), Chapel, X10, Global Arrays (GA), Co-Array Fortran (CAF), DASH, and SHMEM. PGAS appears suited to implementing data-intensive exascale applications because it uses private data structures and limits the amount of data shared among parallel threads. Its memory-partitioning model also facilitates failure detection and resilience. Another programming mechanism useful for decentralized data analysis is data synchronization. In the SHMEM library it is implemented through the shmem_barrier operation, which performs a barrier on a subset of processing elements and then lets them proceed with synchronized shared data.
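A minimal Python sketch of a barrier over a subset of processing elements, assuming threads stand in for PEs and a dictionary stands in for symmetric memory. The active set is computed from the same three parameters as the classic OpenSHMEM call `shmem_barrier(PE_start, logPE_stride, PE_size, pSync)`, whose members are `PE_start + k * 2**logPE_stride`; the Python functions themselves are hypothetical.

```python
import threading


def active_set(pe_start: int, log_pe_stride: int, pe_size: int) -> list[int]:
    """Members of a classic SHMEM active set: pe_start + k * 2**log_pe_stride."""
    return [pe_start + k * (1 << log_pe_stride) for k in range(pe_size)]


def subset_barrier_demo(num_pes: int, pe_start: int, log_pe_stride: int,
                        pe_size: int) -> dict[int, int]:
    members = active_set(pe_start, log_pe_stride, pe_size)
    barrier = threading.Barrier(len(members))   # synchronizes the subset only
    shared = {}                                 # stand-in for symmetric data
    seen = {}

    def pe(rank: int) -> None:
        if rank not in members:
            return                              # PEs outside the set never block
        shared[rank] = rank * rank              # publish own contribution
        barrier.wait()                          # every member has published
        seen[rank] = sum(shared[m] for m in members)   # all values now visible

    threads = [threading.Thread(target=pe, args=(r,)) for r in range(num_pes)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return seen


print(active_set(1, 1, 4))   # [1, 3, 5, 7]
print(subset_barrier_demo(8, 1, 1, 4))
```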

Starting from those three main programming approaches, hybrid systems have been proposed and developed to better map application tasks and data onto hardware architectures of exascale systems. In hybrid systems that combine distributed and shared memory, message-passing routines are used for data communication and inter-node processing, whereas shared-memory operations are used for exploiting intranode parallelism. A major example in this area is given by the different MPI + OpenMP systems recently implemented. Hybrid systems have been also designed by combining message passing models, like MPI, with PGAS models for restricting data communication overhead and improving MPI efficiency in execution time and memory consumption. The PGAS-based MPI implementation EMPI4Re, developed in the EPiGRAM project, is an example of this class of hybrid system.
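The two levels of a hybrid MPI + OpenMP program can be sketched in Python with hypothetical helpers: an outer scatter/reduce plays the message-passing role between nodes, and a thread pool plays the shared-memory role inside each node. Nodes are simulated one after another here; on a real cluster they would run concurrently, and the final combination would be an `MPI_Reduce`.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data: list, parts: int) -> list[list]:
    """Contiguous, nearly equal chunks (no empty chunks)."""
    size = max(1, -(-len(data) // parts))
    return [data[k:k + size] for k in range(0, len(data), size)]


def node_compute(node_data: list, threads: int = 4) -> float:
    """Intra-node level (the OpenMP role): threads share the node's data,
    each summing one slice of it."""
    with ThreadPoolExecutor(max_workers=threads) as pool:
        return sum(pool.map(sum, split(node_data, threads)))


def hybrid_sum(data: list, nodes: int = 3) -> float:
    """Inter-node level (the MPI role): scatter chunks to nodes, compute locally,
    then reduce one partial value per node."""
    scattered = split(data, nodes)                           # like MPI_Scatterv
    partials = [node_compute(chunk) for chunk in scattered]  # one entry per node
    return sum(partials)                                     # like MPI_Reduce to rank 0


values = [float(i) for i in range(1000)]
print(hybrid_sum(values))
```

Only one value per node crosses the inter-node level, which is the point of restricting message passing to coarse-grained exchanges.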

Associated with the programming model issues, a set of challenges concern the design of runtime systems that in exascale computing systems must be tightly integrated with the programming tools level. The main challenges for runtime systems obviously include parallelism exploitation, limited data communication, data dependence management, data-aware task scheduling, processor heterogeneity, and energy efficiency. However, together with those main issues, other aspects are addressed in runtime systems like storage/memory hierarchies, storage and processor heterogeneity, performance adaptability, resource allocation, performance analysis, and performance portability. In addressing those issues, the currently used approaches aim at providing simplified abstractions and machine models that allow algorithm developers and application programmers to generate code that can run and scale on a wide range of exascale computing systems.

This is a complex task that can be achieved with techniques that allow the runtime system to cooperate with the compiler, the libraries, and the operating system to find integrated solutions and make smarter use of hardware resources through efficient mapping of the application code onto the exascale hardware. Finally, due to the specific features of exascale hardware, runtime systems need methods and techniques that bring the computing system closer to the application requirements. Research work in this area is carried out in projects like XPRESS, StarPU, Corvette, DEGAS, libWater[35], Traleika Glacier, OmpSs[24], SnuCL, D-TEC, SLEEC, PIPER, and X-TUNE, which are proposing innovative solutions for large-scale parallel computing systems that can be used in exascale machines. For instance, OmpSs is a system that aims at integrating the runtime with the language level: it provides mechanisms for data dependence management (based on DAG analysis, as in libWater) and for mapping tasks to computing nodes and handling processor heterogeneity (the target construct). Another issue to take into account in the interaction between the programming level and the runtime is performance and scalability monitoring. The StarPU project, for example, provides performance feedback through task profiling and trace analysis.
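Data-dependence management of this kind can be sketched in Python: tasks declare the data they read and write (OmpSs expresses this with `in`, `out`, and `inout` clauses on task directives), and the runtime derives a DAG of read-after-write, write-after-read, and write-after-write dependences. The `TaskGraph` class is hypothetical and runs the DAG in simple waves, whereas a real runtime schedules each task as soon as its own predecessors complete.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


class TaskGraph:
    """Hypothetical runtime: tasks declare read (ins) and written (outs) data,
    and dependences are derived from those declarations."""

    def __init__(self):
        self.tasks = []                     # (name, fn, set of predecessor ids)
        self.last_writer = {}               # datum -> id of the last writing task
        self.readers = defaultdict(list)    # datum -> readers since that write

    def submit(self, name, fn, ins=(), outs=()):
        tid = len(self.tasks)
        deps = set()
        for d in ins:                       # read-after-write
            if d in self.last_writer:
                deps.add(self.last_writer[d])
        for d in outs:                      # write-after-write and write-after-read
            if d in self.last_writer:
                deps.add(self.last_writer[d])
            deps.update(self.readers[d])
        for d in ins:
            self.readers[d].append(tid)
        for d in outs:
            self.last_writer[d] = tid
            self.readers[d] = []
        self.tasks.append((name, fn, deps))

    def run(self, workers: int = 4) -> list[list[str]]:
        """Execute in waves: every task whose predecessors are done runs concurrently."""
        done, waves = set(), []
        with ThreadPoolExecutor(max_workers=workers) as pool:
            while len(done) < len(self.tasks):
                ready = [i for i, (_, _, deps) in enumerate(self.tasks)
                         if i not in done and deps <= done]
                list(pool.map(lambda i: self.tasks[i][1](), ready))
                done.update(ready)
                waves.append([self.tasks[i][0] for i in ready])
        return waves


store = {}
g = TaskGraph()
g.submit("load_a", lambda: store.update(a=list(range(10))), outs=["a"])
g.submit("load_b", lambda: store.update(b=list(range(10, 20))), outs=["b"])
g.submit("filter_a", lambda: store.update(fa=[x for x in store["a"] if x % 2]),
         ins=["a"], outs=["fa"])
g.submit("merge", lambda: store.update(m=store["fa"] + store["b"]),
         ins=["fa", "b"], outs=["m"])
waves = g.run()
print(waves)
```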

In large-scale high-performance machines and in exascale systems, the runtime systems are more complex than in traditional parallel computers. In fact, performance and scalability issues must be addressed at the inter-node runtime level, and they must be appropriately integrated with intra-node runtime mechanisms.[36] All these issues relate to system and application scalability. In fact, vertical scaling of systems with multicore parallelism within a single node must be addressed. Scalability is still an open issue in exascale systems also because speed-up requirements for system software and runtimes are much higher than in traditional HPC systems, and different portions of code in applications or runtimes can generate performance bottlenecks.

Concerning application resiliency, the runtime of exascale systems must include mechanisms for restarting tasks and accessing data in case of software or hardware faults without requiring developer involvement. Traditional approaches for providing reliability in HPC include checkpointing and restart (see for instance MPI_Checkpoint), reliable data storage (through file and in-memory replication or double buffering), and message logging for minimizing the checkpointing overhead. Whereas global checkpointing/restart is the most used technique to limit system/application faults, in the exascale scenario new mechanisms with low overhead and high scalability must be designed. These mechanisms should limit task and data duplication through smart approaches for selective replication. Silent data corruption (SDC), for example, is recognized as a critical problem in exascale computing, and although replication is useful against it, its inherent inefficiency must be limited. Research work is being carried out in this area to define techniques that limit replication costs while offering protection from SDC. For application/task checkpointing, instead of checkpointing the entire address space of the application, as occurs in OpenMP and MPI, the minimal state of the tasks that needs to be checkpointed for fault recovery must be identified, thus limiting data size and recovery overhead.
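The minimal-state idea can be sketched in Python with a hypothetical iterative task that saves only the state needed to resume (a step counter and an accumulator) rather than its whole memory, which includes a large scratch buffer. A simulated fault stops the first run, and the second run resumes from the last checkpoint instead of from the beginning. The file format and checkpoint interval are arbitrary assumptions.

```python
import json
import os
import tempfile
from typing import Optional


def iterative_task(steps: int, ckpt_path: str, ckpt_every: int = 10,
                   fail_at: Optional[int] = None) -> int:
    """Accumulate i*i for i in range(steps). Only (step, acc), the minimal state
    needed to resume, is checkpointed; the scratch buffer is never saved."""
    step, acc = 0, 0
    if os.path.exists(ckpt_path):                 # restart: reload minimal state
        with open(ckpt_path) as f:
            step, acc = json.load(f)
    while step < steps:
        scratch = [step] * 10_000                 # large transient data
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated fault at step {step}")
        acc += scratch[0] * scratch[-1]
        step += 1
        if step % ckpt_every == 0:
            tmp = ckpt_path + ".tmp"
            with open(tmp, "w") as f:
                json.dump([step, acc], f)
            os.replace(tmp, ckpt_path)            # rename over old file: no torn checkpoint
    return acc


with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "task.ckpt")
    try:
        iterative_task(100, path, fail_at=57)     # last checkpoint was at step 50
    except RuntimeError as err:
        print("fault:", err)
    resumed = iterative_task(100, path)           # continues from step 50
print(resumed == sum(i * i for i in range(100)))
```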

Requirements of exascale runtime for data analysis

One of the most important aspects to consider in applications that run on exascale systems and analyze big datasets is the tradeoff between sharing data among processing elements and computing locally to reduce communication and energy costs, while maintaining performance and fault-tolerance levels. A scalable programming model founded on basic operations for data-intensive/data-driven applications must include mechanisms and operations for:

- parallel data access that allows increasing data access bandwidth by partitioning data into multiple chunks, according to different methods, and accessing several data elements in parallel to meet high throughput requirements;

- fault resiliency, a major issue as machines grow in size and complexity; on exascale systems with huge numbers of processes, non-local communication must be prepared for a potential failure of one of the communication sides, and runtimes must feature failure-handling mechanisms for recovering from node and communication faults;

- data-driven local communication that is useful for limiting the data exchange overhead in massively parallel systems composed of many cores; in this case, data availability among neighbor nodes dictates the operations taken by those nodes;

- data processing on limited groups of cores, which concentrates data analysis operations involving limited sets of cores and large amounts of data on localities of exascale machines, facilitating a type of data affinity that co-locates related data and computation;

- near-data synchronization to limit the overhead generated by synchronization mechanisms and protocols that involve several far away cores in keeping data up-to-date;

- in-memory querying and analytics, needed to reduce query response times and execution of analytics operations by caching large volumes of data in the computing node RAMs and issuing queries and other operations in parallel on the main memory of computing nodes;

- group-level data aggregation in parallel systems, which is useful for efficient summarization, graph traversal, and matrix operations, making it of great importance in programming models for data analysis on massively parallel systems; and

- locality-based data selection and classification, for limiting the latency of basic data analysis operations running in parallel on large-scale machines, so that the subset of data needed together in a given phase is locally available (in a subset of nearby cores).
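Two of these operations, parallel data access over partitioned data and group-level aggregation, can be sketched together in Python. The helpers are hypothetical: data is split into contiguous chunks processed concurrently, each producing a partial aggregate, and partials are then combined within small groups of neighboring workers, level by level, instead of all being sent to a single root.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data: list, parts: int) -> list[list]:
    """Block partitioning: contiguous chunks that can be read in parallel."""
    size = max(1, -(-len(data) // parts))
    return [data[k:k + size] for k in range(0, len(data), size)]


def local_stats(chunk: list) -> tuple:
    """Partial aggregate computed where the chunk lives: (count, sum, max)."""
    return len(chunk), sum(chunk), max(chunk)


def combine(a: tuple, b: tuple) -> tuple:
    return a[0] + b[0], a[1] + b[1], max(a[2], b[2])


def group_aggregate(partials: list, group_size: int) -> tuple:
    """Combine partials inside groups of neighboring workers, level by level;
    only one value per group moves up to the next level."""
    if group_size < 2:
        raise ValueError("group_size must be at least 2")
    level = partials
    while len(level) > 1:
        nxt = []
        for k in range(0, len(level), group_size):
            acc = level[k]
            for p in level[k + 1:k + group_size]:
                acc = combine(acc, p)
            nxt.append(acc)
        level = nxt
    return level[0]


data = [float(x % 97) for x in range(100_000)]
chunks = partition(data, 64)                          # one chunk per simulated core
with ThreadPoolExecutor(max_workers=8) as pool:
    partials = list(pool.map(local_stats, chunks))
count, total, peak = group_aggregate(partials, group_size=4)
print(count, total / count, peak)
```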

A reliable and high-level programming model and its associated runtime must be able to manage and provide implementation solutions for those operations, together with the reliable exploitation of a very large amount of parallelism.

Real-world big data analysis applications cannot be practically solved on sequential machines. If we refer to real-world applications, each large-scale data mining and machine learning software platform that today is under development in the areas of social data analysis and bioinformatics will certainly benefit from the availability of exascale computing systems. They will also benefit from the use of exascale programming environments that will offer massive and adaptive-grain parallelism, data locality, local communication, and synchronization mechanisms, together with the other features discussed in the previous sections that are needed for reducing execution time and making feasible the solution of new problems and challenges. For example, in bioinformatics applications, parallel data partitioning is a key feature for running statistical analysis or machine learning algorithms on high-performance computing systems. After that, clever and complex data mining algorithms must be run on each single core/node of an exascale machine on subsets of data to produce data models in parallel. When partial models are produced, they can be checked locally and must be merged among nearby processors to obtain, for example, a general model of gene expression correlations or of drug-gene interactions. Therefore for those applications, data locality, highly parallel correlation algorithms, and limited communication structures are very important to reduce execution time from several days to a few minutes. Moreover, fault tolerance software mechanisms are also useful in long-running bioinformatics applications to avoid restarting them from the beginning when a software/hardware failure occurs.
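Merging partial models can be illustrated with Pearson correlation, assuming (hypothetically) pairs of expression values for two genes. Each node reduces its subset to a few sufficient statistics, and these merge by simple addition, so nearby processors can combine them in any grouping without exchanging raw data. The data below is synthetic and the helper names are illustrative.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def partial_model(pairs: list) -> tuple:
    """Sufficient statistics of one subset: (n, sum x, sum y, sum x^2, sum y^2, sum xy)."""
    n = len(pairs)
    sx = sum(x for x, _ in pairs)
    sy = sum(y for _, y in pairs)
    sxx = sum(x * x for x, _ in pairs)
    syy = sum(y * y for _, y in pairs)
    sxy = sum(x * y for x, y in pairs)
    return n, sx, sy, sxx, syy, sxy


def merge(a: tuple, b: tuple) -> tuple:
    """Partial models combine by element-wise addition (associative and commutative)."""
    return tuple(u + v for u, v in zip(a, b))


def correlation(model: tuple) -> float:
    """Pearson correlation from merged statistics."""
    n, sx, sy, sxx, syy, sxy = model
    cov = n * sxy - sx * sy
    return cov / math.sqrt((n * sxx - sx * sx) * (n * syy - sy * sy))


# Synthetic, hypothetical expression values for two genes.
pairs = [(float(i % 50), 2.0 * (i % 50) + (i % 7)) for i in range(2000)]
subsets = [pairs[k:k + 250] for k in range(0, len(pairs), 250)]   # 8 nodes
with ThreadPoolExecutor(max_workers=4) as pool:
    models = list(pool.map(partial_model, subsets))
global_model = models[0]
for m in models[1:]:
    global_model = merge(global_model, m)   # could equally be merged pairwise among neighbors
print(round(correlation(global_model), 4))
```

The textbook formula used here can lose precision through cancellation on large or poorly scaled data; a numerically stable pairwise update of means and co-moments would be preferable in practice, with the same merge-by-combination structure.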

Moving to social media applications, the huge volume of user-generated data on social media platforms such as Facebook, Twitter, and Instagram is nowadays a very valuable source of data from which to extract insights concerning human dynamics and behaviors. In fact, social media analysis is a fast-growing research area that will benefit from the use of exascale computing systems. For example, social media users moving through a sequence of places in a city or a region may create a huge amount of geo-referenced data that include extensive knowledge about human dynamics and mobility behaviors. A methodology for discovering behavior and mobility patterns of users from social media posts and tweets includes a set of steps such as collection and pre-processing of geotagged items, organization of the input dataset, data analysis and trajectory mining algorithm execution, and results visualization. In all these data analysis steps, the use of scalable programming techniques and tools is vital to obtain practical results in feasible time when massive datasets are analyzed. The exascale programming features and requirements discussed here and in the previous sections will be very useful in social data analysis, particularly for executing parallel tasks like concurrent data acquisition (where data items are collected through parallel queries to different data sources), parallel data filtering, and data partitioning through local and in-memory algorithms, as well as classification, clustering, and association mining algorithms that are computing intensive and need a large number of processing elements working asynchronously to produce learning models from billions of posts containing text, photos, and videos.
The management and processing of terabytes of data that are involved in those applications cannot be done efficiently without solving issues like data locality, near-data processing, large asynchronous execution, and the other similar issues addressed in exascale computing systems.
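The steps of such a methodology can be sketched in Python on a toy dataset with hypothetical helpers: pre-processing drops posts without a geotag, organization groups posts by user and orders them in time, and mining counts consecutive place-to-place transitions. Since users are independent, the mining step runs in parallel per user. Counting transitions is a deliberately simple stand-in for a real trajectory mining algorithm.

```python
from collections import Counter, defaultdict
from concurrent.futures import ThreadPoolExecutor

# Toy posts: (user, timestamp, place), with None when the post has no geotag.
posts = [
    ("u1", 3, "Station"), ("u1", 1, "Museum"), ("u1", 2, "Park"),
    ("u2", 5, "Museum"), ("u2", 6, "Park"), ("u2", 7, None),
    ("u3", 1, "Park"), ("u3", 2, "Station"), ("u3", 4, "Museum"),
]


def preprocess(items: list) -> list:
    """Filtering step: drop posts without a geotag."""
    return [p for p in items if p[2] is not None]


def organize(items: list) -> dict:
    """Group geotagged posts by user and order each user's places in time."""
    by_user = defaultdict(list)
    for user, ts, place in items:
        by_user[user].append((ts, place))
    return {u: [place for _, place in sorted(v)] for u, v in by_user.items()}


def transitions(trajectory: list) -> Counter:
    """Mining step for one user: consecutive place-to-place moves."""
    return Counter(zip(trajectory, trajectory[1:]))


trajectories = organize(preprocess(posts))
with ThreadPoolExecutor(max_workers=3) as pool:         # users are independent
    per_user = list(pool.map(transitions, trajectories.values()))
patterns = sum(per_user, Counter())                     # merge per-user counts
print(trajectories)
print(patterns.most_common(2))
```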

Together with an accurate modeling of basic operations and of the programming languages/APIs that include them, supporting correct and effective data-intensive applications on exascale systems will also require significant programming effort from developers when they need to implement complex algorithms and data-driven applications such as those used, for example, in big data analysis and distributed data mining. Parallel and distributed data mining strategies, like collective learning, meta-learning, and ensemble learning, must be devised using fine-grain parallel approaches to be adapted to exascale computers. Programmers must be able to design and implement scalable algorithms using the operations sketched above, specifically adapted to these new systems. To reach this goal, a coordinated effort between operation/language designers and application developers would be fruitful.

In exascale systems, the cost of accessing, moving, and processing data across a parallel system is enormous.[19][7] This requires mechanisms, techniques, and operations for efficient data access, placement, and querying. In addition, scalable operations must be designed to avoid global synchronizations, centralized control, and global communications. Many data scientists want to be abstracted away from these tricky, lower-level aspects of HPC at least until they have their code working, and only afterwards potentially tweak communication and distribution choices in a high-level manner to further tune their code. Interoperability and integration with the MapReduce model and MPI must be investigated, with the main goal of achieving scalability in large-scale data processing.

Different data-driven abstractions can be combined for providing a programming model and an API that allow the reliable and productive programming of very large-scale heterogeneous and distributed memory systems. In order to simplify the development of applications in heterogeneous distributed memory environments, large-scale data-parallelism can be exploited on top of the abstraction of n-dimensional arrays subdivided in partitions, so that different array partitions are placed on different cores/nodes that will process in parallel the array partitions. This approach can allow the computing nodes to process in parallel data partitions at each core/node using a set of statements/library calls that hide the complexity of the underlying process. Data dependency in this scenario limits scalability, so it should be avoided or limited to a local scale.
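A minimal Python sketch of this approach, assuming a 1D array split into contiguous partitions and a 3-point moving average as the per-partition operation (both hypothetical choices). The only dependence is local: each partition needs one boundary value from each neighbor (a halo), so partitions are processed independently and the result equals the sequential one.

```python
from concurrent.futures import ThreadPoolExecutor


def smooth_seq(a: list) -> list:
    """Reference: 3-point average with clamped borders."""
    n = len(a)
    return [(a[max(i - 1, 0)] + a[i] + a[min(i + 1, n - 1)]) / 3 for i in range(n)]


def smooth_partitioned(a: list, parts: int = 4) -> list:
    """Same operation, computed independently on each partition plus its halo."""
    n = len(a)
    size = max(1, -(-n // parts))
    bounds = [(lo, min(lo + size, n)) for lo in range(0, n, size)]

    def work(bound):
        lo, hi = bound
        # Halo: one cell from each neighboring partition (clamped at the array ends).
        left = a[lo - 1] if lo > 0 else a[lo]
        right = a[hi] if hi < n else a[hi - 1]
        local = [left] + a[lo:hi] + [right]
        return [(local[j - 1] + local[j] + local[j + 1]) / 3
                for j in range(1, len(local) - 1)]

    with ThreadPoolExecutor(max_workers=parts) as pool:
        return [x for piece in pool.map(work, bounds) for x in piece]


data = [float((7 * i) % 13) for i in range(30)]
print(smooth_partitioned(data) == smooth_seq(data))
```

A dependence spanning the whole array (for example, each element needing a global prefix) would break this independence, which is why such dependences should be avoided or kept local.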

Abstract data types provided by libraries, so that they can be easily integrated in existing applications, should support this abstraction. As we mentioned above, another issue is the gap between users with HPC needs and experts with the skills to make the most of these technologies. An appropriate directive-based approach can be to design, implement, and evaluate a compiler framework that allows generic translations from high-level languages to exascale heterogeneous platforms. A programming model should be designed at a level that is higher than that of standards, such as OpenCL, including also checkpointing and fault resiliency. Efforts must be carried out to show the feasibility of transparent checkpointing of exascale programs and quantitatively evaluate the runtime overhead. Approaches like CheCL show that it is also possible to enable transparent checkpoint and restart in high-performance and dependable GPU computing, including support for process migration among different processors such as a CPU and a GPU.

The model should enable rapid development with reduced effort for different heterogeneous platforms. These heterogeneous platforms need to include low-energy architectures and mobile devices. The new model should allow a preliminary evaluation of results on the target architectures.

Concluding remarks and future work

Cloud-based solutions for big data analysis tools and systems are in an advanced phase both on the research and the commercial sides. On the other hand, new exascale hardware/software solutions must be studied and designed to allow the mining of very large-scale datasets on those new platforms.

Exascale systems raise new requirements on application developers and programming systems to target architectures composed of a significantly large number of homogeneous and heterogeneous cores. General issues like energy consumption, multitasking, scheduling, reproducibility, and resiliency must be addressed together with other data-oriented issues like data distribution and mapping, data access, data communication, and synchronization. Programming constructs and runtime systems will play a crucial role in enabling future data analysis programming models, runtime models, and hardware platforms to address these challenges, supporting the scalable implementation of real big data analysis applications.

In particular, here we summarize a set of open design challenges that are critical for designing exascale programming systems and for their scalable implementation. The following design choices, among others, must be taken into account:

- Application reliability: Data analysis programming models must include constructs and/or mechanisms for handling task and data access failures as well as system recoveries. As data analysis platforms grow ever larger, the assumption that operations are fully reliable becomes less credible, so reliability cannot be left implicit and explicit solutions must be proposed.

- Reproducibility requirements: Big data analysis running on massively parallel systems demands reproducibility. New data analysis programming frameworks must collect and generate metadata and provenance information about algorithm characteristics, software configuration, and execution environment for supporting application reproducibility on large-scale computing platforms.

- Communication mechanisms: Novel approaches must be devised for facing network unreliability[17] and network latency, for example by expressing asynchronous data communications and locality-based data exchange/sharing.

- Communication patterns: A correct paradigm design should include communication patterns allowing application-dependent features and data access models, limiting data movement, and simplifying the burden on exascale runtimes and interconnection.

- Data handling and sharing patterns: Data locality mechanisms/constructs like near-data computing must be designed and evaluated on big data applications where subsets of data are stored in nearby processors, while avoiding imposing locality when data must be moved. Other challenges concern data affinity control, data querying (NoSQL approach), global data distribution, and sharing patterns.

- Data-parallel constructs: Useful models like data-driven/data-centric constructs, dataflow parallel operations, independent data parallelism, and SPMD patterns must be deeply considered and studied.

- Grain of parallelism: Every grain, from fine-grain to process-grain parallelism, must be analyzed, also in combination with the different degrees of parallelism that exascale hardware supports. Different grain sizes may need to be combined in a single model to address hardware needs and heterogeneity.
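The application reliability requirement in the list above asks for explicit, rather than implicit, handling of task and data access failures. The following is a minimal sketch of one such mechanism, a bounded retry with backoff around a single task. The names `run_with_retries`, `TaskFailedError`, and `FlakyTask` are hypothetical and do not come from any specific exascale framework; real systems would combine retries with checkpointing and rescheduling on healthy nodes.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TaskFailedError(Exception):
    """Raised when a task keeps failing after every allowed attempt."""


def run_with_retries(task: Callable[[], T], max_attempts: int = 3,
                     backoff_s: float = 0.0) -> T:
    """Run `task`, explicitly handling failures instead of assuming success.

    Hypothetical helper for illustration only: the name and parameters
    are not taken from any existing framework.
    """
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as err:  # any exception counts as a task failure
            last_error = err
            if attempt < max_attempts:
                time.sleep(backoff_s * attempt)  # linear backoff before retrying
    raise TaskFailedError(
        f"task failed after {max_attempts} attempts") from last_error


class FlakyTask:
    """Simulated task whose data access fails a fixed number of times."""

    def __init__(self, failures: int) -> None:
        self.remaining = failures
        self.calls = 0

    def __call__(self) -> str:
        self.calls += 1
        if self.remaining > 0:
            self.remaining -= 1
            raise IOError("simulated data access failure")
        return "partial result"


if __name__ == "__main__":
    print(run_with_retries(FlakyTask(failures=2)))  # recovers on the third attempt
```

Bounding the number of attempts keeps a permanently failing task from stalling the whole computation: after the last attempt the failure is surfaced to the caller, which can then trigger a higher-level recovery.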

Finally, since big data mining algorithms often require the exchange of raw data or, better, of mining parameters and partial models, achieving scalability and reliability on thousands of processing elements requires that metadata-based information, limited-communication programming mechanisms, and partition-based data structures with associated parallel operations be proposed and implemented.
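The idea of exchanging partial models instead of raw data can be illustrated with a minimal sketch, assuming a frequent-item counting task in which each partition's item counts serve as its partial model. The function names and the toy partitions are hypothetical; the point is that each processing element mines its own partition locally and only the compact counts are merged.

```python
from collections import Counter
from typing import Iterable, List, Set


def local_model(partition: Iterable[str]) -> Counter:
    """Mine one partition where it is stored; the item counts are the partial model."""
    return Counter(partition)


def merge_models(partials: List[Counter]) -> Counter:
    """Combine partial models; only these compact summaries need to be exchanged."""
    merged: Counter = Counter()
    for partial in partials:
        merged.update(partial)  # Counter.update adds counts rather than replacing them
    return merged


def frequent_items(model: Counter, min_support: int) -> Set[str]:
    """Items whose global count reaches the support threshold."""
    return {item for item, count in model.items() if count >= min_support}


# Hypothetical toy data: three partitions held by three processing elements.
partitions = [
    ["a", "b", "a", "a", "c", "a"],
    ["a", "c", "c", "a", "c"],
    ["b", "a", "a", "d"],
]
partials = [local_model(p) for p in partitions]
global_model = merge_models(partials)

raw_items_moved = sum(len(p) for p in partitions)    # if raw data were shipped
model_entries_moved = sum(len(m) for m in partials)  # entries in partial models

print(frequent_items(global_model, min_support=3))
print(raw_items_moved, model_entries_moved)
```

Here 15 raw items shrink to 8 model entries; the gap widens as partitions grow and items repeat, because the size of a partial model depends on the number of distinct items rather than on the number of records.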

Abbreviations

APGAS: asynchronous partitioned global address space

BSP: bulk synchronous parallel

CAF: Co-Array Fortran

DAaaS: data analysis as a service

DAIaaS: data analysis infrastructure as a service

DAPaaS: data analysis platform as a service

DASaaS: data analysis software as a service

DMCF: Data Mining Cloud Framework

ECL: Enterprise Control Language

ESnet: Energy Sciences Network

GA: global array

HPC: high-performance computing

IaaS: infrastructure as a service

JS4Cloud: JavaScript for Cloud

PaaS: platform as a service

PGAS: partitioned global address space

RDD: resilient distributed dataset

SaaS: software as a service

SOA: service-oriented architecture

TBB: threading building blocks

VL4Cloud: Visual Language for Cloud

XaaS: everything as a service

Appendix

Scalability in parallel systems

Parallel computing systems aim to usefully employ all their processing elements during application execution. However, only an ideal parallel system can do so fully; real systems cannot, because of program portions that must run sequentially (as Amdahl’s law suggests[37]) and because of several sources of overhead, such as sequential operations, communication, synchronization, I/O and memory access, network speed, I/O system speed, hardware and software failures, problem size, and program input. All these issues related to the ability of parallel systems to fully exploit their resources are referred to as system or program scalability.[38]
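The limit that sequential portions place on a parallel system can be made concrete with Amdahl's law in its usual form: if a fraction f of the sequential run time can be parallelized, then S(p) ≤ 1 / ((1 − f) + f/p), which approaches 1 / (1 − f) as p grows. The sketch below evaluates this bound; the value f = 0.95 is a hypothetical example, not a figure from the article.

```python
def amdahl_speedup(parallel_fraction: float, p: int) -> float:
    """Upper bound on S(p) when only `parallel_fraction` of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if p < 1:
        raise ValueError("p must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / p)


def amdahl_limit(parallel_fraction: float) -> float:
    """Speedup bound as p grows without limit: 1 / serial fraction."""
    serial_fraction = 1.0 - parallel_fraction
    return float("inf") if serial_fraction == 0.0 else 1.0 / serial_fraction


if __name__ == "__main__":
    f = 0.95  # hypothetical: 95% of the sequential run time is parallelizable
    for p in (1, 16, 256, 4096, 65536):
        print(f"p={p:>6}  S(p) <= {amdahl_speedup(f, p):6.2f}")
    print(f"limit: {amdahl_limit(f):.2f}")
```

With f = 0.95 the bound never exceeds 20, no matter how many processing elements are added, which is why reducing sequential time matters so much at exascale.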

The scalability of a parallel computing system is a measure of its capacity to reduce program execution time in proportion to the number of its processing elements. According to this definition, scalable computing refers to the ability of a hardware/software parallel system to exploit increasing computing resources effectively in the execution of a software application.[39]

Despite the difficulties that can be faced in the parallel implementation of an application, a framework, or a programming system, a scalable parallel computation can always be made cost-optimal if the number of processing elements, the size of memory, the network bandwidth, and the size of the problem are chosen appropriately.
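Writing T(p) for the parallel runtime on p processing elements, the cost of a parallel computation is p·T(p), and the computation is cost-optimal when this cost grows no faster than the sequential time T(1). The sketch below uses the classic textbook example of adding n numbers on p processing elements, under the simplifying and assumed model T(p) = n/p + 2·log2(p) (unit time per addition and per communication step, so T(1) = n). It shows how choosing the problem size appropriately keeps the computation cost-optimal.

```python
import math


def parallel_time(n: float, p: int) -> float:
    """Assumed model T(p) = n/p + 2*log2(p) for adding n numbers on p PEs."""
    return n / p + 2 * math.log2(p)


def cost(n: float, p: int) -> float:
    """Cost of the parallel computation: p * T(p)."""
    return p * parallel_time(n, p)


def efficiency(n: float, p: int) -> float:
    """E(p) = T(1) / (p * T(p)), with T(1) = n under the same model."""
    return n / cost(n, p)


if __name__ == "__main__":
    for p in (2, 64, 1024):
        fixed_n = 1024                      # problem size kept constant
        scaled_n = 8 * p * math.log2(p)     # problem size grown as p*log2(p)
        print(f"p={p:>5}  E(fixed n)={efficiency(fixed_n, p):.3f}  "
              f"E(scaled n)={efficiency(scaled_n, p):.3f}")
```

With the problem size fixed, efficiency falls as p grows; letting n grow proportionally to p·log2(p) keeps it at 0.8 in this model, so the cost stays proportional to T(1).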

For evaluating and measuring the scalability of a parallel program, several metrics have been defined and are widely used: parallel runtime T(p), speedup S(p), and efficiency E(p). Parallel runtime is the total processing time of the program using p processors (with p > 1). Speedup is the ratio between the total processing time of the program on one processor and the total processing time on p processors: S(p) = T(1)/T(p). Efficiency is the ratio between speedup and the total number of processors used: E(p) = S(p)/p.
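The two ratios defined above translate directly into code. This minimal sketch computes S(p) and E(p) from a table of runtimes; the function names are illustrative and the runtimes in the example are hypothetical values, not measurements reported in the article.

```python
from typing import Dict, Tuple


def speedup(t1: float, tp: float) -> float:
    """S(p) = T(1) / T(p)."""
    return t1 / tp


def efficiency(t1: float, tp: float, p: int) -> float:
    """E(p) = S(p) / p."""
    return speedup(t1, tp) / p


def scalability_table(runtimes: Dict[int, float]) -> Dict[int, Tuple[float, float]]:
    """Map each processor count p to (S(p), E(p)); `runtimes` must include p = 1."""
    t1 = runtimes[1]
    return {p: (speedup(t1, tp), efficiency(t1, tp, p))
            for p, tp in sorted(runtimes.items())}


if __name__ == "__main__":
    # Hypothetical runtimes in seconds, not measurements from the article.
    runtimes = {1: 120.0, 2: 62.0, 4: 33.0, 8: 18.0}
    for p, (s, e) in scalability_table(runtimes).items():
        print(f"p={p}  S={s:5.2f}  E={e:4.2f}")
```

An efficiency close to 1 means the processors are used almost fully; a steadily decreasing E(p) as p grows is the usual sign that overheads are starting to dominate.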

Application scalability is influenced by the available hardware and software resources, their performance and reliability, and the sources of overhead discussed before. In particular, the scalability of data analysis applications is tightly related to the exploitation of parallelism in data-driven operations and to the overhead generated by data management mechanisms and techniques. Moreover, application scalability also depends on the programmer's ability to design algorithms that reduce sequential time and exploit parallel operations. Finally, instruction designers and runtime implementers also contribute to the exploitation of scalability.[40] All these arguments mean that, to realize exascale computing in practice, many issues and aspects must be taken into account by considering all the layers of the hardware/software stack involved in the execution of exascale programs.

When addressing parallel system scalability, system dependability must also be tackled. As the number of processors and network interconnections increases (and as tasks, threads, and message exchanges increase), the rate of failures and faults increases too.[41] As discussed in reference[42], the design of scalable parallel systems requires assuring system dependability. Therefore, understanding failure characteristics is key to combining high performance and reliability in massively parallel systems at exascale size.
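Why the failure rate grows with system size can be shown with a simple back-of-the-envelope model. The sketch assumes, as a common simplification, that node failures are independent and exponentially distributed and that any single node failure interrupts the run; under these assumptions the system failure rate is N times the per-node rate. Real systems often deviate from these assumptions, and the per-node MTBF of five years used here is hypothetical.

```python
import math


def system_mtbf(node_mtbf_hours: float, nodes: int) -> float:
    """System MTBF when any single node failure interrupts the run.

    Assumes independent, exponentially distributed node failures, so the
    system failure rate is `nodes` times the per-node rate.
    """
    return node_mtbf_hours / nodes


def survival_probability(node_mtbf_hours: float, nodes: int,
                         job_hours: float) -> float:
    """Probability that a job of `job_hours` sees no node failure."""
    return math.exp(-nodes * job_hours / node_mtbf_hours)


if __name__ == "__main__":
    node_mtbf = 5 * 365 * 24.0  # hypothetical per-node MTBF of five years (43,800 h)
    for nodes in (100, 10_000, 100_000):
        print(f"{nodes:>7} nodes: system MTBF {system_mtbf(node_mtbf, nodes):9.2f} h, "
              f"P(1-hour job survives) = {survival_probability(node_mtbf, nodes, 1.0):.3f}")
```

Even with very reliable individual nodes, a system with 100,000 of them would, in this model, fail on average less than every half hour, which is why fault handling must be designed in rather than assumed away.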

Acknowledgements

Funding

This work has been partially funded by the ASPIDE Project funded by the European Union’s Horizon 2020 research and innovation programme under grant agreement No 801091.

Availability of data and materials

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Authors’ contributions

DT carried out all the work presented in the paper. The author read and approved the final manuscript.

Competing interests

The author declares no competing interests.

References

- [1] Petcu, D.; Iuhasz, G.; Pop, D. et al. (2015). "On Processing Extreme Data". Scalable Computing: Practice and Experience 16 (4). doi:10.12694/scpe.v16i4.1134.

- [2] Tardieu, O.; Herta, B.; Cunningham, D. et al. (2016). "X10 and APGAS at Petascale". ACM Transactions on Parallel Computing (TOPC) 2 (4): 25. doi:10.1145/2894746.

- [3] Talia, D. (2015). "Making knowledge discovery services scalable on clouds for big data mining". Proceedings from the Second IEEE International Conference on Spatial Data Mining and Geographical Knowledge Services (ICSDM): 1–4. doi:10.1109/ICSDM.2015.7298015.

- [4] Amarasinghe, S.; Campbell, D.; Carlson, W. et al. (14 September 2009). "ExaScale Software Study: Software Challenges in Extreme Scale Systems". DARPA IPTO. pp. 153. CiteSeerX 10.1.1.205.3944. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.205.3944.

- [5] Zaharia, M.; Xin, R.S.; Wendell, P. et al. (2016). "Apache Spark: A unified engine for big data processing". Communications of the ACM 59 (11): 56–65. doi:10.1145/2934664.

- [6] Maheshwari, K.; Montagnat, J. (2010). "Scientific Workflow Development Using Both Visual and Script-Based Representation". 6th World Congress on Services: 328–35. doi:10.1109/SERVICES.2010.14.

- [7] Reed, D.A.; Dongarra, J. (2015). "Exascale computing and big data". Communications of the ACM 58 (7): 56–68. doi:10.1145/2699414.

- [8] Armbrust, M.; Fox, A.; Griffith, R. et al. (2010). "A view of cloud computing". Communications of the ACM 53 (4): 50–58. doi:10.1145/1721654.1721672.

- [9] Gu, Y.; Grossman, R.L. (2009). "Sector and Sphere: The design and implementation of a high-performance data cloud". Philosophical Transactions, Series A: Mathematical, Physical, and Engineering Sciences 367 (1897): 2429–45. doi:10.1098/rsta.2009.0053. PMC3391065. PMID 19451100. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3391065.

- [10] Talia, D.; Trunfio, P.; Marozzo, F. (2015). Data Analysis in the Cloud. Elsevier. pp. 150. ISBN 9780128029145.

- [11] Hwang, K. (2017). Cloud Computing for Machine Learning and Cognitive Applications. MIT Press. pp. 624. ISBN 9780262036412.

- [12] Marozzo, F.; Talia, D.; Trunfio, P. (2013). "A Cloud Framework for Big Data Analytics Workflows on Azure". In Catlett, C.; Gentzsch, W.; Grandinetti, L. et al. (eds.). Cloud Computing and Big Data. Advances in Parallel Computing. 23. pp. 182–91. doi:10.3233/978-1-61499-322-3-182. ISBN 9781614993223.

- [13] Marozzo, F.; Talia, D.; Trunfio, P. (2015). "JS4Cloud: script‐based workflow programming for scalable data analysis on cloud platforms". Concurrency and Computation: Practice and Experience 27 (17): 5214–37. doi:10.1002/cpe.3563.

- [14] Talia, D. (2013). "Clouds for Scalable Big Data Analytics". Computer 46 (5): 98–101. doi:10.1109/MC.2013.162.

- [15] Wozniak, J.M.; Wilde, M.; Foster, I.T. (2014). "Language Features for Scalable Distributed-Memory Dataflow Computing". Fourth Workshop on Data-Flow Execution Models for Extreme Scale Computing: 50–53. doi:10.1109/DFM.2014.17.

- [16] Lucas, R.; Ang, J.; Bergman, K. et al. (10 February 2014). "Top Ten Exascale Research Challenges" (PDF). U.S. Department of Energy. pp. 80. https://science.energy.gov/~/media/ascr/ascac/pdf/meetings/20140210/Top10reportFEB14.pdf.

- [17] Fekete, A.; Lynch, N.; Mansour, Y.; Spinelli, J. (1993). "The impossibility of implementing reliable communication in the face of crashes". Journal of the ACM 40 (5): 1087–1107. doi:10.1145/174147.169676.

- [18] IDC (April 2014). "The Digital Universe of Opportunities: Rich Data and the Increasing Value of the Internet of Things". Dell EMC. https://www.emc.com/leadership/digital-universe/2014iview/executive-summary.htm.

- [19] Chen, J.; Choudhary, A.; Feldman, S. et al. (March 2013). "Synergistic Challenges in Data-Intensive Science and Exascale Computing: DOE ASCAC Data Subcommittee Report". Department of Energy, Office of Science. https://www.scholars.northwestern.edu/en/publications/synergistic-challenges-in-data-intensive-science-and-exascale-com.

- [20] "What will we make of this moment?" (PDF). IBM. 2013. pp. 151. https://www.ibm.com/annualreport/2013/bin/assets/2013_ibm_annual.pdf.

- [21] Diaz, J.; Muñoz-Caro, C.; Niño, A. (2012). "A Survey of Parallel Programming Models and Tools in the Multi and Many-Core Era". IEEE Transactions on Parallel and Distributed Systems 23 (8): 1369–86. doi:10.1109/TPDS.2011.308.

- [22] Gorton, I.; Greenfield, P.; Szalay, A.; Williams, R. (2008). "Data-Intensive Computing in the 21st Century". Computer 41 (4): 30–32. doi:10.1109/MC.2008.122.

- [23] Markidis, S.; Peng, I.B.; Larsson, J. et al. (2016). "The EPiGRAM Project: Preparing Parallel Programming Models for Exascale". High Performance Computing - ISC High Performance 2016: 56–68. doi:10.1007/978-3-319-46079-6_5.

- [24] Fernández, A.; Beltran, V.; Martorell, X. et al. (2014). "Task-Based Programming with OmpSs and Its Application". Euro-Par 2014: Parallel Processing Workshops: 601–12. doi:10.1007/978-3-319-14313-2_51.

- [25] Olston, C.; Reed, B.; Srivastava, U. et al. (2008). "Pig Latin: A not-so-foreign language for data processing". Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data: 1099–1110. doi:10.1145/1376616.1376726.

- [26] Gropp, W.; Snir, M. (2013). "Programming for Exascale Computers". Computing in Science & Engineering 15 (6): 27–35. doi:10.1109/MCSE.2013.96.

- [27] Marozzo, F.; Talia, D.; Trunfio, P. (2012). "P2P-MapReduce: Parallel data processing in dynamic cloud environments". Journal of Computer and System Sciences 78 (5): 1382–1402. doi:10.1016/j.jcss.2011.12.021.

- [28] Tardieu, O.; Herta, B.; Cunningham, D. et al. (2014). "X10 and APGAS at Petascale". Proceedings of the 19th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming: 53–66. doi:10.1145/2555243.2555245.

- [29] Yoo, A.; Kaplan, I. (2009). "Evaluating use of data flow systems for large graph analysis". Proceedings of the 2nd Workshop on Many-Task Computing on Grids and Supercomputers: 5. doi:10.1145/1646468.1646473.

- [30] Nishtala, R.; Zheng, Y.; Hargrove, P.H. et al. (2011). "Tuning collective communication for Partitioned Global Address Space programming models". Parallel Computing 37 (9): 576–91. doi:10.1016/j.parco.2011.05.006.

- [31] Bauer, M.; Treichler, S.; Slaughter, E.; Aiken, A. (2012). "Legion: Expressing locality and independence with logical regions". Proceedings of the International Conference on High Performance Computing, Networking, Storage and Analysis: 66. https://dl.acm.org/citation.cfm?id=2389086.

- [32] Chamberlain, B.L.; Callahan, D.; Zima, H.P. (2007). "Parallel Programmability and the Chapel Language". The International Journal of High Performance Computing Applications 21 (3): 291–312. doi:10.1177/1094342007078442.

- [33] Nieplocha, J.; Palmer, B.; Tipparaju, V. et al. (2006). "Advances, Applications and Performance of the Global Arrays Shared Memory Programming Toolkit". The International Journal of High Performance Computing Applications 20 (2): 203–31. doi:10.1177/1094342006064503.

- [34] Meswani, M.R.; Carrington, L.; Snavely, A.; Poole, S. (2012). "Tools for Benchmarking, Tracing, and Simulating SHMEM Applications". CUG2012 Final Proceedings: 1–6. https://cug.org/proceedings/attendee_program_cug2012/by_auth.html.

- [35] Grasso, I.; Pellegrini, S.; Cosenza, B.; Fahringer, T. (2013). "LibWater: Heterogeneous distributed computing made easy". Proceedings of the 27th International ACM conference on Supercomputing: 161–72. doi:10.1145/2464996.2465008.

- [36] Sarkar, V.; Budimlic, Z.; Kulkarni, M. (19 September 2016). "2014 Runtime Systems Summit. Runtime Systems Report". U.S. Department of Energy. doi:10.2172/1341724. https://www.osti.gov/biblio/1341724-runtime-systems-summit-runtime-systems-report.

- [37] Amdahl, G.M. (1967). "Validity of the single processor approach to achieving large scale computing capabilities". Proceedings of AFIPS Conference: 483–85.

- [38] Bailey, D.H. (1991). "Twelve Ways to Fool the Masses When Giving Performance Results on Parallel Computers" (PDF). Supercomputing Review: 54–55. https://crd-legacy.lbl.gov/~dhbailey/dhbpapers/twelve-ways.pdf.

- [39] Grama, A.; Karypis, G.; Kumar, V.; Gupta, A. (2003). Introduction to Parallel Computing (2nd ed.). Pearson. pp. 656. ISBN 9780201648652.

- [40] Gustafson, J.L. (1988). "Reevaluating Amdahl's law". Communications of the ACM 31 (5): 532–33. doi:10.1145/42411.42415.

- [41] Shi, J.Y.; Taifi, M.; Pradeep, A. et al. (2012). "Program Scalability Analysis for HPC Cloud: Applying Amdahl's Law to NAS Benchmarks". 2012 SC Companion: High Performance Computing, Networking Storage and Analysis: 1215–1225. doi:10.1109/SC.Companion.2012.147.

- [42] Schroeder, B.; Gibson, G. (2010). "A Large-Scale Study of Failures in High-Performance Computing Systems". IEEE Transactions on Dependable and Secure Computing 7 (4): 337–50. doi:10.1109/TDSC.2009.4.

Notes