Journal:Guideline for software life cycle in health informatics

| Full article title | Guideline for software life cycle in health informatics |

|---|---|

| Journal | iScience |

| Author(s) | Hauschild, Anne-Christin; Martin, Roman; Holst, Sabrina C.; Wienbeck, Joachim; Heider, Dominik |

| Author affiliation(s) | Philipps University of Marburg, University Medical Center Göttingen |

| Primary contact | Email: dominik dot heider at uni-marburg dot de |

| Year published | 2022 |

| Volume and issue | 25(12) |

| Article # | 105534 |

| DOI | 10.1016/j.isci.2022.105534 |

| ISSN | 2589-0042 |

| Distribution license | Creative Commons Attribution 4.0 International |

| Website | https://www.sciencedirect.com/science/article/pii/S2589004222018065 |

| Download | https://www.sciencedirect.com/science/article/pii/S2589004222018065/pdfft (PDF) |

Abstract

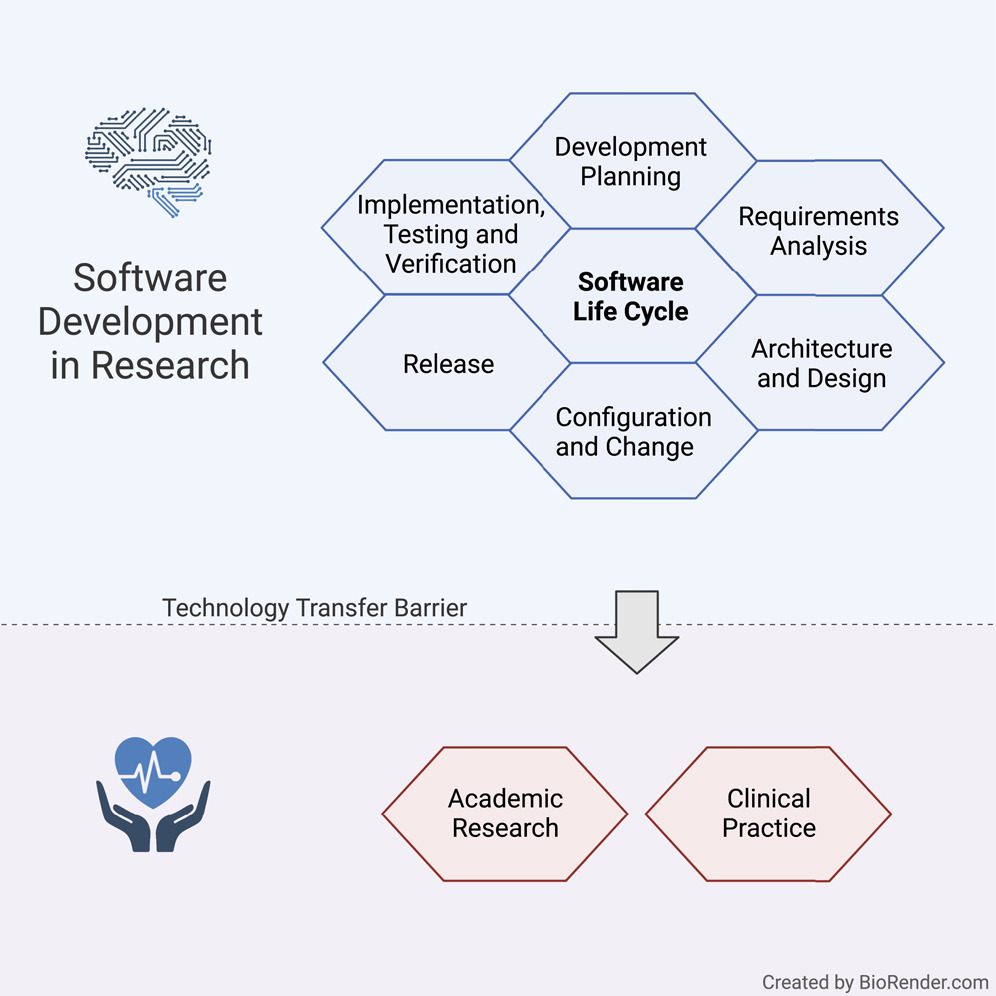

The long-lasting trend of medical informatics is to adapt novel technologies in the medical context. In particular, incorporating artificial intelligence (AI) to support clinical decision-making can significantly improve monitoring, diagnostics, and prognostics for the patient’s and medic’s sake. However, obstacles hinder a timely technology transfer from the medical research setting to the actual clinical setting. Due to the pressure for novelty in the research context, projects rarely implement quality standards.

Here, we propose a guideline for academic software life cycle (SLC) processes tailored to the needs and capabilities of research organizations. While the complete implementation of an SLC according to commercial industry standards is largely not feasible in scientific research work, we propose a subset of elements that we are convinced will provide a significant benefit to research settings while keeping the development effort within a feasible range.

Ultimately, the emerging quality checks for academic research software development can pave the way for a more accelerated deployment of academic software into clinical practice.

Keywords: health informatics, bioinformatics, software engineering

Introduction

Today, medical informatics is an integral part of health care systems that ensure the smooth operation of processes in medical care. Moreover, standard procedures ensure the transfer of knowledge from medical research to clinical practice, for instance, via regularly updated guidelines and regulations. In contrast, newly developed software innovations—such as systems based on artificial intelligence (AI) that could support clinical decisions and have already evolved to be the state-of-the-art in medical informatics research—rarely transfer to application in practice.

Modern methods such as AI and machine learning (ML) increasingly unfold their potential in healthcare to help patients and clinicians.[1] Clinical decision support systems (CDSSs) can effectively improve diagnostics, patient safety, and cost containment.[2] Easily accessible AI-based applications can improve diagnosis and treatment of patients, e.g., by precisely detecting symptoms[3], evaluating biomarkers[4], or detecting pathogenic resistance or subtypes.[5][6] Furthermore, upcoming concepts such as federated learning[7][8] and swarm learning[9] allow the cross-clinical creation of data-driven models without compromising patients’ privacy[10][11], opening the gate for more powerful data-driven development.

However, software has its risks, especially within medical devices. To protect patients from any risk of injury, disability, or other harmful interventions, medical device software (MDSW), which is intended to serve specific medical purposes such as diagnosis, monitoring, prognosis, or treatment, is subject to strict regulations. Examples include the European Medical Devices Regulation (MDR)[12], the In Vitro Diagnostic Medical Devices Regulation (IVDR)[13], and the guidance of the International Medical Device Regulators Forum (IMDRF).[14]

MDSW can be an integral part of a medical product or standalone software as an independent medical device.[15] MDSW can run in the cloud, as part of a software platform, or on a server, with both healthcare professionals and laypersons using it. Exceptions are software tools used for documentation or that solely control medical device hardware, or that serve no medical purpose.[13]

An integral part of all regulations for MDSW is development according to the software life cycle process, as defined by IEC 62304[16], a harmonized international standard that regulates MDSW life cycle development, requiring documentation and processes, such as software development planning, requirement analysis, architectural design, testing, verification, and maintenance.[12][17]

These regulations focus on minimizing patient risk, for example, harmful follow-up analysis, a wrong or missing treatment where needed, as a result of software failures, incorrect predictions, or other malfunctions. Several challenges arise in the attempt to eliminate these risks and allow for a smoother transfer of technology and greater reproducibility.

Challenges of scientific software development for health care

The primary goal of scientists remains conducting scientific activities rather than developing software. However, many scientists aim to make their findings and methodologies available to a broader audience and to be used for the greater good. Thus, many data science and AI methodologies, as well as corresponding implementations and software packages, exist that would, in theory, allow the development of efficacious AI-driven CDSSs and MDSW. However, scientists of different backgrounds tend to have very different knowledge of software engineering practices, often acquired through self-study.[18] Moreover, academic groups often consist of small teams that undergo frequent change or researchers that work on a "one person-one project" basis.[19][20] Thus, a lack of attention to relevant software development processes and engineering practices defined by the software life cycle negatively affects the usefulness of developed packages, particularly for developing software as a medical device.[21]

Pinning down the most critical requirements, along with accurately describing and documenting them, is a significant challenge for all software projects, regardless of whether they are conducted in research or industry.[18][22] It necessitates a detailed analysis of the non-functional requirements as determined, for instance, by regulatory entities, addressing aspects such as security, privacy, or infrastructural limitations, as well as functional requirements like user-friendly interfaces and specific results. This is very time-consuming and relies on a close interaction of developers, stakeholders, and potential users, which is often difficult to achieve under academic circumstances.[20][22] Moreover, researchers are enticed by academic hiring procedures and driven by funders to focus on “novelty” rather than software quality and practical usefulness.[19][20] Thus, implementations often fail to fulfill the requirements that ensure long-term sustainability, such as documentation, usability, appropriate performance for practical application, user-friendliness that optimally supports potential users, and minimization of risks.[19][23]

The most critical aspects of ensuring sustainability in academic software are reproducibility, reusability, and traceability.[21][24] However, it has been shown that not only public accessibility but also documentation and portability are essential to ensure reproducibility and underpin trust in the scientific record of scientific software, enabling the re-use of research and code. Moreover, the prototype-centered development procedures often lack quality checks, such as systematic testing, that would ensure reusability.[21] Recently, scientific journals such as GigaScience or Biostatistics have promoted reproducibility and reusability by mandating the FAIR Principles (i.e., findability, accessibility, interoperability, and reusability). FAIR establishes a guideline for scientific data management and documentation.[19][25] Implementing the FAIR principles in academic software development has the potential to lower the barriers to a successful industrial transition.

Additionally, for long-term software maintenance, well-structured development planning and processes can ensure the traceability of modifications via change management and version control. These aspects ultimately determine scientific rigor, transparency, and reproducibility.[19]

Software life cycle

Proper implementation of the software life cycle (SLC) guarantees high-quality planning, development, and maintenance of MDSW. The SLC deals with the planning and specification, development, maintenance, and configuration of the software. IEC 62304 defines the SLC as a conceptual structure across its lifetime, from the requirements’ definition to final release. It describes processes, tasks, and activities involved in developing a software product and their order and interdependencies. Furthermore, it defines milestones verifying the completeness of the results to be delivered.[16]

However, a complete SLC described in standards like IEC 62304 is not feasible for most research projects.[26] Academic research is often subject to tight schedules and focuses on proof-of-concept development, neglecting formal documentation or procedures. Here we provide recommendations for an SLC in academia, lowering the boundary for many research organizations to implement an SLC and fostering the transfer of technology to industrial development.[13][26]

Our goal: An academia-tailored software life cycle

Until now, there has been little guidance on supporting a structured software development culture for academic institutions according to standard SLC processes. In this article, we present a synopsis of all requirements in official standards that are relevant to academia. We adjusted these toward the specific demands of software development in research and established a limited SLC process for research organizations, which has the potential to greatly facilitate and speed up such technology transfer and reproducibility in a controlled and predictable way. Being aware that a complete SLC is not feasible for most academic settings, we propose a subset of elements that we are convinced will provide a significant benefit without creating an excessive organizational burden for researchers and developers, keeping activities in a manageable range.

Our proposal is centered on procedures for software development planning, software requirement analysis, software architectural design, software unit implementation, integration, testing, verification, and configuration management. Depending on the specific needs, the elements of an SLC process that work best for an organization may differ from what we propose. The fact, however, that a life cycle process is set up at all and that the elements are deliberately chosen is probably a key factor for facilitating technology transfer. However, any medical software development for clinical use must strictly follow the regulations relevant to the specific country or region. Thus, our guideline can only provide a starting point intended to be adapted to institute- or project-specific requirements, considering only relevant aspects. Nevertheless, the ideas presented here are not meant to provide a shortcut for medical software. An overview of our suggested SLC activities in tandem with regulations is provided in Table S1 of the Supplemental information.

Software life cycle for medical software research guideline

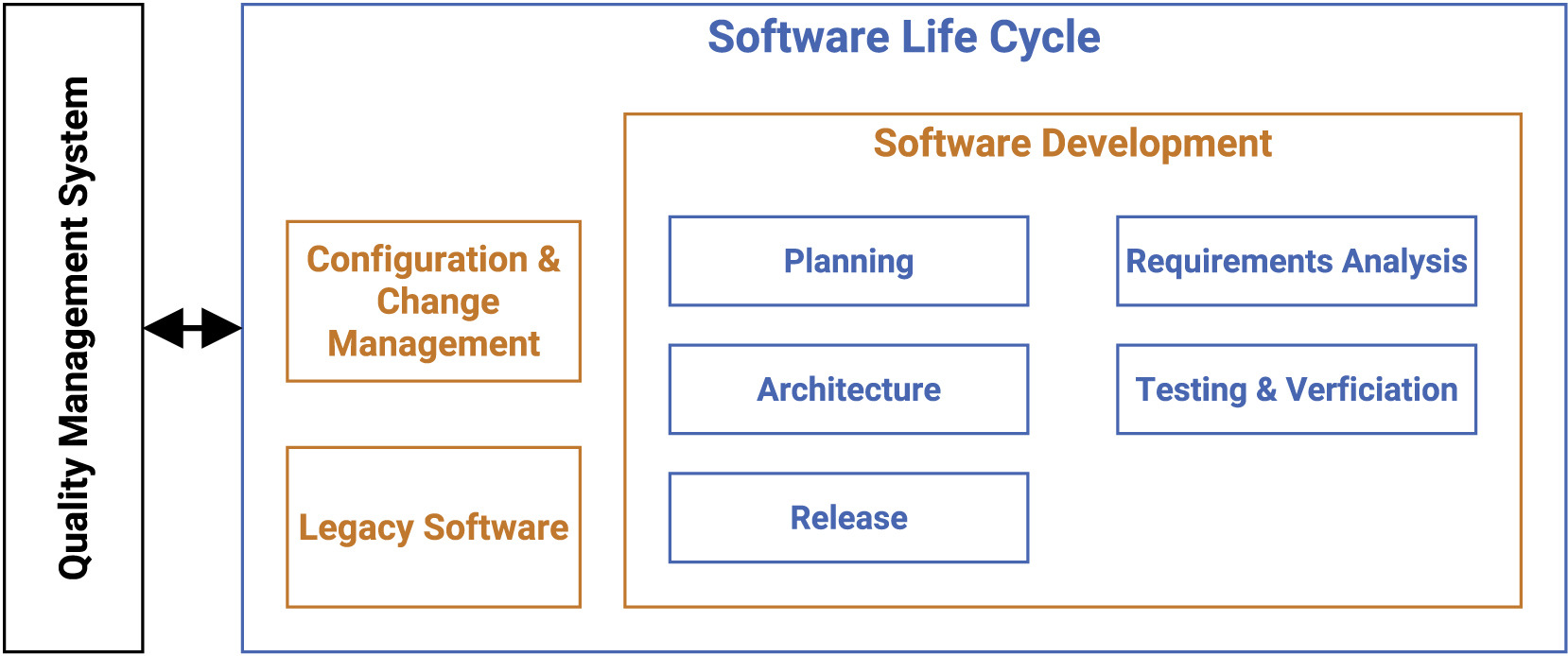

Our guideline covers multiple processes such as development planning, requirement analysis, software architecture, software design, implementation, software and integration testing, verification, and release. In the following, we present the most vital points for the SLC, as depicted in Figure 1.


The main focus of the SLC, in compliance with the quality management system (QMS), lies in the software development arena, while also tapping into configuration and change management, as well as legacy software.

Software development: Planning

Defining an accurate software development plan is the first key component and must be updated regularly during the project, getting referenced and refined throughout the entire software life cycle model. It defines norms, methods, used processes, deliverable results, traceability between requirements, software testing, implemented risk control measures, configuration and change management, and verification of configuration elements, including software of unknown provenance (SOUP). (SOUP is a commonly used basic software library or set of packages that have not been developed for medical purposes.)

Two documents should be provided for the academic-tailored implementation: the process description and the development plan. Each document produced during software development has to contain a title, purpose, and responsible person.[16] In the case of multiple involved developers, the role and responsibility assignments should be noted down in the single process description.

First, the process description is provided by the definition of standard operating procedures (SOPs) for repetitive application problems.[27] The description contains each activity within the processes, when it will be completed, by whom, how, and with which input and output. The developed process description can be applied to multiple projects and has to be implemented by the developers.[12] Since the life cycle model must be completely defined or referenced by the development plan, a software engineering model must be selected to provide a general structure for the development phase, such as the V-model. As a common approach in medical device development, the V-model is successfully used to achieve regulatory compliance.[28] Generally, the selected model should match the project’s characteristics and thus can host agile practices or elements of SCRUM to support and conform to life cycle development practices.[29][30]

Second, the development plan describes the general documentation required for a product or project. The development plan defines concrete milestones to corresponding deadlines, assigns designated staff to the pre-defined roles, and refines measures or tools adjusted to the project. It is challenging to meet the regulatory requirement of defining tools, testing, and configuration management in advance, particularly in a volatile academic setting. Due to external factors and unforeseeable changes, the development plan must be updated over the lifetime of the project, while the pre-defined process descriptions remain. Additionally, the process should recommend defining a coding guideline or convention, including code style, nomenclature, and naming in the development plan, to increase software quality. For example, using pre-defined rules in git hooks or continuous integration (CI), combined with linting tools, can enforce coding compliance.
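As an illustration of how a coding convention could be checked automatically, the following minimal sketch scans Python source for function and class names that break a naming rule. The two regular expressions and the function name `find_naming_violations` are hypothetical choices made for this example, not part of any standard. A real project would more likely rely on an established linter and call it from a git hook or a CI job.

```python
import ast
import re

# Hypothetical project conventions; a real development plan would define its own.
SNAKE_CASE = re.compile(r"^_{0,2}[a-z][a-z0-9_]*$")
PASCAL_CASE = re.compile(r"^_?[A-Z][A-Za-z0-9]*$")


def find_naming_violations(source: str) -> list[str]:
    """Return one message per function or class name that breaks the convention."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if not SNAKE_CASE.match(node.name):
                found.append((node.lineno,
                              f"line {node.lineno}: function '{node.name}' is not snake_case"))
        elif isinstance(node, ast.ClassDef):
            if not PASCAL_CASE.match(node.name):
                found.append((node.lineno,
                              f"line {node.lineno}: class '{node.name}' is not PascalCase"))
    # Sort by line number so the report follows the order of the file.
    return [message for _, message in sorted(found)]


example_source = '''
def ComputeScore(values):
    return sum(values)

class patient_record:
    pass

def load_data():
    return []
'''

for message in find_naming_violations(example_source):
    print(message)
```

A hook or CI step built on such a check would typically fail the commit or pipeline whenever the returned list is not empty.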

The development process gets more transparent and well-structured through the provision of these two documents.

Software development: Requirements analysis

A software requirement is a detailed statement about a property that a software product, system, or process should fulfill and is defined during software requirements analysis. Software requirements should cover functional requirements such as inputs, outputs, functions, processes, interface reactions, and thresholds, as well as non-functional requirements such as physical characteristics, computing environments, performance, cybersecurity, privacy, maintenance, installation, networking, and so forth. Precisely defined requirements are a vital element for the success of a project, particularly given that studies have concluded that half of all software errors are derived from mistakes in the requirement phase.[31][32] High-level requirements should be defined within the specifications, including the desired properties. These specifications mainly describe the project’s overall goal under defined restrictions. For practical consideration, it is beneficial to begin with general natural language requirements and refine them into graphical notations, such as Unified Modeling Language (UML) diagrams.[33][34]

However, the original requirement within the specification must always be bidirectionally linked to allow traceability. It should be possible to describe and follow the life of requirements in all directions. This means that traceability implies the comprehension of a design, starting with the source of a requirement, its implementation, testing, and maintenance. Moreover, it facilitates a high level of software quality, a critical concern for medical devices. Therefore, the derivation and documentation of the requirements should be updated and verified during the project.[16] Finally, a critical aspect is the definition of requirements for maintenance, which is often neglected in academia since the focus is on publishing new technologies in contrast to maintaining existing software.
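Bidirectional traceability can be pictured as a small data structure that stores links in both directions. The sketch below is only one possible representation. The class names, the requirement IDs, and the artifact names are invented for illustration. In practice such links are usually maintained in a ticket or requirements management system.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    text: str
    kind: str  # "functional" or "non-functional"
    design_elements: set = field(default_factory=set)
    tests: set = field(default_factory=set)


class TraceabilityMatrix:
    """Keeps forward links (requirement -> artifacts) and backward links (artifact -> requirements)."""

    def __init__(self):
        self.requirements = {}
        self._backward = {}

    def add(self, requirement):
        self.requirements[requirement.req_id] = requirement

    def link(self, req_id, artifact, kind):
        """Link a requirement to a design element (kind='design') or a test (kind='test')."""
        requirement = self.requirements[req_id]
        if kind == "design":
            requirement.design_elements.add(artifact)
        elif kind == "test":
            requirement.tests.add(artifact)
        else:
            raise ValueError(f"unknown artifact kind: {kind}")
        self._backward.setdefault(artifact, set()).add(req_id)

    def requirements_for(self, artifact):
        """Backward direction: which requirements does this artifact trace to?"""
        return set(self._backward.get(artifact, set()))

    def untraced(self):
        """Requirements that lack a design element or a test."""
        return sorted(r.req_id for r in self.requirements.values()
                      if not r.design_elements or not r.tests)


matrix = TraceabilityMatrix()
matrix.add(Requirement("REQ-1", "The system reports a risk score between 0 and 1.", "functional"))
matrix.add(Requirement("REQ-2", "Patient identifiers are never written to log files.", "non-functional"))
matrix.link("REQ-1", "RiskScoreCalculator", "design")
matrix.link("REQ-1", "test_risk_score_range", "test")
matrix.link("REQ-2", "AuditLogger", "design")

print(matrix.untraced())
print(matrix.requirements_for("test_risk_score_range"))
```

Listing the untraced requirements at each milestone gives a simple completeness check before a requirement is considered verified.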

Nevertheless, maintenance must be considered at the beginning of the software life cycle to ensure that post-delivery support is possible.[35] Therefore, a maintenance plan in academia does not have to be complete but must define all factors influencing either the development or architecture. Table 1, which examines ISO/IEC 25010[36], can be used to evaluate the completeness of a software requirement analysis. Further, an implementation example is provided in the Supplemental information.


Software development: Architecture and design

The regulatory authorities demand the definition of essential structural software components, identification of their primary responsibilities, visible features, and their interrelations.[16] The architecture as an overarching structure conceptually defines data storage, interfaces, and logical servers. At the same time, modularization is described within the detailed software design, specifying how the single elements of the architecture and the requirements are explicitly implemented.

Software architecture consists of the system’s structure in combination with architecture characteristics the system must support (e.g., availability, scalability, and security), architecture decisions (formulation of rules and constraints), and design principles. An architecture categorizes into monolithic and distributed architecture types consisting of single packages or separable sub-systems.[37] It is recommended to specify an appropriate architecture prior to implementation. However, the choice is not regulated.[16] These architectural design decisions will guide the developers throughout the development process.

The system has to be divided for the software design until it is represented through software units. These are sets of procedures or functions encapsulated in a package or class that cannot be further divided. Each software unit and interface needs a verified detailed design to ensure correct implementation.[16] The design principles cover every option or state of all system components in detail, such as a preferred method or protocol.[38] In order to have well testable and maintainable code, it is recommended to have software with low coupling (dependencies between the sub-systems) and high cohesion (internal dependencies).[39] These associations can be well described using widely accepted notation standards such as UML, including class and activity diagrams to document the architectural decisions, which is highly recommendable to facilitate understanding the architecture.[40]

In academia, two documents should be provided: the software architecture description and the detailed design. It is unlikely that the whole codebase can be captured in a detailed design. However, it must contain the most vital components, such as elements of design patterns, classes with crucial functionality, or specific interfaces. For example, a strict logical separation between the internal logic and the interface, e.g., for user interaction, is critical. Design patterns such as the model view controller (MVC) are favorable.[41]
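The separation between internal logic and interface can be sketched with a very small MVC arrangement. All class names and the reference range below are hypothetical and carry no clinical meaning. The point is only that the model knows nothing about input parsing or output formatting.

```python
class ThresholdModel:
    """Model: internal logic only, no input parsing and no output formatting."""

    def __init__(self, lower, upper):
        if lower > upper:
            raise ValueError("lower bound must not exceed upper bound")
        self.lower = lower
        self.upper = upper

    def classify(self, value):
        if value < self.lower:
            return "below"
        if value > self.upper:
            return "above"
        return "within"


class TextView:
    """View: turns results into text and contains no decision logic."""

    def render(self, value, label):
        return f"value={value:g} is {label} the reference range"

    def render_error(self, raw_input):
        return f"cannot interpret input: {raw_input!r}"


class Controller:
    """Controller: parses user input, calls the model, and hands the result to the view."""

    def __init__(self, model, view):
        self.model = model
        self.view = view

    def handle(self, raw_input):
        try:
            value = float(raw_input)
        except ValueError:
            return self.view.render_error(raw_input)
        return self.view.render(value, self.model.classify(value))


controller = Controller(ThresholdModel(lower=5.0, upper=10.0), TextView())
print(controller.handle("7.5"))
print(controller.handle("abc"))
```

Because the model has no dependency on the view, it can be unit tested in isolation, and the text view could later be replaced by a graphical one without touching the logic.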

Software development: Implementation, testing, and verification

Generally, each software unit must be implemented, tested, and verified. The IEEE defines implementation as translating a design into hardware or software components, or both.[42] In particular, the detailed design has to be translated into source code. Following a specific coding style and documentation standards is advisable during the implementation. Subsequently, every software unit has to be tested and verified separately, ensuring it works as specified in the detailed design and complies with the coding style. After that, it has to be integrated, verified, and tested dependently and independently in the following integration tests. These evaluate the software unit’s functionality combined with other components into an overall system.

The software is usually tested on different abstraction levels within the software’s life cycle, differentiating between unit, integration, regression, and system testing. While isolated unit tests verify the functionality of a separately testable software element, integration tests verify the interaction between the software units, as described by the software architecture. Along with different test strategies, such as top-down or bottom-up, integration tests must be conducted during several stages of the development process and are tailored to each integration level.[43]

Integration and system tests can be combined with routine activities but must cover all software requirements. Especially, software components affecting safety require extensive tests. Appropriate evaluations of the testing procedure, verification, and integration strategy concerning the previously determined requirements are necessary. Tests and results must be recorded with acceptance criteria, providing repeatability and traceability between requirements and their verifications.
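The difference between unit and integration tests, and the recording of results with a link back to requirements, can be sketched with Python's standard `unittest` module. The `verifies` decorator, the requirement IDs, and the two toy functions are assumptions made for this example. They are not prescribed by IEC 62304 or by the guideline.

```python
import io
import unittest


def verifies(*requirement_ids):
    """Attach requirement IDs to a test method (hypothetical convention)."""
    def decorator(test_func):
        test_func.requirements = requirement_ids
        return test_func
    return decorator


# Two software units (illustrative only).
def normalize(value, minimum, maximum):
    """Scale a value linearly so that [minimum, maximum] maps to [0, 1]."""
    return (value - minimum) / (maximum - minimum)


def classify(score, threshold=0.5):
    return "positive" if score >= threshold else "negative"


def predict(value):
    """Integration of both units into one pipeline."""
    return classify(normalize(value, 0.0, 10.0))


class UnitTests(unittest.TestCase):
    @verifies("REQ-1")
    def test_normalize_maps_midpoint_to_half(self):
        self.assertEqual(normalize(5.0, 0.0, 10.0), 0.5)

    @verifies("REQ-2")
    def test_classify_threshold_is_inclusive(self):
        self.assertEqual(classify(0.5), "positive")


class IntegrationTests(unittest.TestCase):
    @verifies("REQ-1", "REQ-2")
    def test_pipeline(self):
        self.assertEqual(predict(2.0), "negative")
        self.assertEqual(predict(8.0), "positive")


def run_and_record(*test_cases):
    """Run the given test cases and return one record per test."""
    loader = unittest.TestLoader()
    # Keep our own list: a TestSuite drops its test references after running.
    tests = [t for case in test_cases for t in loader.loadTestsFromTestCase(case)]
    result = unittest.TextTestRunner(stream=io.StringIO()).run(unittest.TestSuite(tests))
    failed = {t.id() for t, _ in result.failures + result.errors}
    records = []
    for test in tests:
        method = getattr(test, test.id().rsplit(".", 1)[-1])
        records.append({
            "test": test.id(),
            "requirements": getattr(method, "requirements", ()),
            "status": "failed" if test.id() in failed else "passed",
        })
    return records


for record in run_and_record(UnitTests, IntegrationTests):
    print(record)
```

The resulting records could be stored with the release documentation, so that each requirement can be followed to the tests that verified it.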

Tests can be performed either as white-box testing[44], including the knowledge of the underlying architecture, or as black-box testing[45], which does not take into account the internal structure. Besides automated tests, non-automated tests should be conducted between program coding and the beginning of computer-based testing. Moreover, the three fundamental human testing methods are inspections, walkthroughs, and usability testing.[46] As demonstrated in our example in the supplemental information, SCRUM supports software integration and system testing since, after each sprint, an increment of potentially shippable functionality consisting of tested, well-written, and executable code is required (Supplemental information, Figure S2). Consequently, verification and testing are automatically included in the process of SCRUM. Project-specific regular, complete tests which are documented and traceable to requirements, software architecture, and detailed design are critical for software development within the law. A test is successful if it passes the acceptance criteria, defined through the requirements specification, the interface design within the detailed design, and the coding guideline. Ultimately, the verification evaluates whether all specified requirements are fulfilled by validating objective proof.[16]

To ensure adequate software verification, it has to be well-planned and integrated into several stages of the SLC: requirements analysis, software architecture, software design, and software units, as well as their integration, changes, and problem resolutions, have to be verified. The management of verification documents can also be partially automated through CI, for example with the Jira API or GitLab CI/CD. Table 2 lists the suggested aspects to verify the different stages of the software development process. In particular, problem resolutions and other changes have to be re-verified and documented. Overall, verification is an activity of high importance throughout the whole development process. To verify more mature artifacts, one must verify their foundation as well. This hierarchy should always be kept in mind, as the Supplemental information example demonstrates.


Software development: Release

In contrast to industry, academic software is released to other researchers via public repositories and journal publications. Before software release, testing and verification need to be completed and evaluated. First, all known residual anomalies have to be documented and evaluated. Second, the release procedure and the software development environment have to be documented together with the released software version. Third, all activities and tasks of the software development plan must be completed and documented. Fourth, the medical device software, all configuration elements, and the documentation must be archived for the whole lifetime of the medical device software as defined by the development team, or for as long as the relevant regulatory requirements demand it. Fifth, procedures to ensure a reliable delivery without damage or unauthorized modification have to be defined.[16]
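Several of these release points can be captured in a machine-readable release record. The following sketch is a simplified illustration under stated assumptions: the manifest layout, the function names, and the example anomaly are invented here, and a SHA-256 digest only helps to detect modified artifacts if the manifest itself is stored in a trustworthy place.

```python
import hashlib
import json
import platform


def build_release_manifest(version, artifacts, known_anomalies):
    """Describe a release: environment, artifact digests, and documented residual anomalies.

    `artifacts` maps a file name to its content as bytes.
    """
    return {
        "version": version,
        "environment": {
            "python": platform.python_version(),
            "platform": platform.platform(),
        },
        "artifacts": {
            name: hashlib.sha256(data).hexdigest()
            for name, data in sorted(artifacts.items())
        },
        "known_anomalies": list(known_anomalies),
    }


def artifact_is_unmodified(manifest, name, data):
    """Compare the digest of delivered content with the digest recorded at release time."""
    return manifest["artifacts"].get(name) == hashlib.sha256(data).hexdigest()


released_code = b"print('hello')\n"
manifest = build_release_manifest(
    version="1.0.0",
    artifacts={"model.py": released_code},
    known_anomalies=["Slow start-up on first run (hypothetical example)"],
)
print(json.dumps(manifest, indent=2))
```

Archiving such a manifest next to the tagged software version keeps the environment description and the list of residual anomalies tied to the exact release they describe.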

After the software is released, all changes and updates are implemented within the software maintenance process, following the same steps as the software development process. The post-delivery maintenance decisions that must be made are included in software development planning.

If the development process is well-defined and followed, and the previous sections of this guideline are considered, the complete verification and the required documents are delivered by default. Well-implemented traceability is essential to ensure the development process can be archived transparently. Regarding SCRUM, one could include the required documentation within the definition of "done" to ensure everything is documented, since the development takes place in a regulatory context.

Legacy software

According to IEC 62304, legacy software is defined as software that was not developed to be used within software as a medical device, such as general software packages and libraries. It, therefore, lacks sufficient verification that it was developed in compliance with the current version of the norm.

Thus, it is sufficient to prove it conforms to the norm, and shortcomings to the norm’s requirements need assessment.[16] Hence risks of using the legacy software, as well as the risks of missing documentation, need to be identified and mitigated, if possible, as defined by the risk management process of the IEC 62366-1 standard.[47]

In academia, it is essential to choose legacy software carefully and to document its usage. Ideally, the legacy software in use has to fulfill the requirements of the IEC concerning risks as well, but the scope of action only demands closing gaps if doing so reduces the risk of usage.
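For Python-based projects, one way to start documenting third-party software in use is to list the installed distributions with the standard `importlib.metadata` module. This is a minimal sketch. The function name `soup_inventory` and the record layout are assumptions, and the output only covers Python packages, not system libraries or other tools.

```python
from importlib import metadata


def soup_inventory():
    """List installed distributions with name, version, and declared license."""
    entries = []
    for dist in metadata.distributions():
        try:
            meta = dist.metadata
            name = meta.get("Name")
            version = meta.get("Version")
        except Exception:
            # Defensive: skip distributions whose metadata cannot be read.
            continue
        if not name:
            continue
        entries.append({
            "name": name,
            "version": version or "unknown",
            "license": meta.get("License") or "not declared",
        })
    return sorted(entries, key=lambda entry: entry["name"].lower())


for entry in soup_inventory()[:5]:
    print(entry["name"], entry["version"])
```

Such a list is only a starting point. The intended use and the risk assessment of each package still have to be documented by the developers.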

Configuration and change management

Configuration and change management is crucial in ensuring usability, reproducibility, reusability, and traceability of software in the industry and academia. In academic research, automated change management systems, such as GitHub or GitLab, exist for software code and data and are regularly used.[25] However, implementing adequate configuration and change management documentation for the entire SLC, as required by the IEC 62304 standard, is particularly challenging in academia, where the pressure to publish urges researchers to focus on novelty rather than maintenance.

In order to mitigate the ongoing replication crisis[48][49], academic research institutions and projects should establish technical and administrative procedures to identify and define configuration items and SOUP, as well as their documentation within a system. This should include the documentation of problem reports, change requests, changes, and releases necessary to restore an item, determine its components, and provide the history of its changes. In particular, configuration change requests need to be documented, approved, and verified in projects with multiple developers and stakeholders.[16] Thus, it is advised to assign a representative person, ideally permanent technical academic staff, in charge of the change management to support the correct implementation of necessary processes for groups and projects in advance.[23] In academia, ticket systems such as those provided by most repository hosting platforms can easily be used alongside version control for all code and documents to facilitate tracking changes. Moreover, tools such as Jira for project management and Confluence for project documentation are advisable to ensure traceability and good configuration management.

These tools can be utilized to establish a change management strategy or process that includes steps like:

- Create a problem report (including criticality).

- Conduct problem analysis, including software risk.

- Create a change request, if required.

- Implement and verify the change.
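The steps above can be sketched as a small state machine that rejects skipped steps and keeps a history of every transition. The status names, the allowed transitions, and the `ProblemReport` class are hypothetical. A ticket system such as those mentioned above would normally provide this workflow.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    REPORTED = "reported"
    ANALYZED = "analyzed"
    CHANGE_REQUESTED = "change requested"
    IMPLEMENTED = "implemented"
    VERIFIED = "verified"
    CLOSED_NO_CHANGE = "closed without change"


# A change request is only created "if required", so analysis has two possible outcomes.
ALLOWED = {
    Status.REPORTED: {Status.ANALYZED},
    Status.ANALYZED: {Status.CHANGE_REQUESTED, Status.CLOSED_NO_CHANGE},
    Status.CHANGE_REQUESTED: {Status.IMPLEMENTED},
    Status.IMPLEMENTED: {Status.VERIFIED},
    Status.VERIFIED: set(),
    Status.CLOSED_NO_CHANGE: set(),
}


@dataclass
class ProblemReport:
    title: str
    criticality: str
    status: Status = Status.REPORTED
    history: list = field(default_factory=list)

    def advance(self, new_status, note):
        """Move to the next step and record the transition for later traceability."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"cannot go from {self.status.value} to {new_status.value}")
        self.history.append((self.status, new_status, note))
        self.status = new_status


report = ProblemReport("Score exceeds 1.0 for empty input", criticality="high")
report.advance(Status.ANALYZED, "Division by zero in normalization; software risk assessed")
report.advance(Status.CHANGE_REQUESTED, "Guard against empty input")
report.advance(Status.IMPLEMENTED, "Fix merged")
report.advance(Status.VERIFIED, "Regression test added and passed")
print(report.status.value, len(report.history))
```

Keeping the notes with each transition preserves the reasoning behind a change, which is what makes the history useful for later audits.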

Discussion

The current trend toward using new technologies in the medical context[1], such as establishing AI- or ML-related software, paves the way to significantly improve monitoring, diagnostics, and prognostics for the patient’s and medical team’s sakes. The underlying development, realized mainly by specialized research organizations and institutes, is time-consuming. Additionally, bringing these software achievements to the medical market requires enormous efforts to cover all requirements of international standards.[19][20][21] This article reveals several challenges in transferring knowledge from industrial standards to academia, focusing on the software life cycle in a biomedical context. These challenges mainly concern the software development and engineering processes regarding reproducibility, reusability, and traceability.

In order to better establish an academia-tailored SLC, here we propose a comprehensive guideline for research facilities derived from the requirements of IEC 62304.[16] Complementary to our quality management guideline[50], we propose to address these challenges by following our guideline, which lowers the barriers to a potential technology transfer toward the medical industry. Furthermore, in the Supplemental information, we provide a comprehensive checklist for a successful SLC and demonstrate the feasibility of our guideline with our implementation example.

Since realizing industry-based regulatory requirements is mostly not feasible in an academic context, we focus on the most vital aspects of the SLC, covering software planning, development, architecture, maintenance, and legacy software. The implementation of our guidelines will not only improve the quality of medical software and avoid engineering errors but also mitigate potential risks that might arise from the introduction of AI in healthcare. An integration of SLC as standard procedure in academic programming could increase the overall quality of processing pipelines and thus the quality of data. Since evaluation strategies are well planned, this lends itself to a closer examination of the potential risks of false positives and false negatives. Ultimately, this supports a smooth transfer with potential manufacturers by delivering all demanded documents of a certain quality related to the software. Although some research organizations indeed have the resources to realize industrial standards such as quality management[51], we encourage scientists to further introduce SLC as a usual practice for software in research institutes. We envision that such a focus on SLC, in addition to a focus on the FAIR principles for scientific data management and documentation, could become the standard for scientific health software publishing. Finally, this may pave the way for a smoother transition from research toward clinical practice.

Supplemental information

- Document S1 (PDF): Figures S1–S8 and Tables S1–S7.

Abbreviations, acronyms, and initialisms

- AI: artificial intelligence

- CDSS: clinical decision support system

- CI: continuous integration

- FAIR: findable, accessible, interoperable, and reusable

- IMDRF: International Medical Device Regulators Forum

- IVDR: In Vitro Diagnostic Medical Devices Regulation

- MDR: European Medical Devices Regulation

- MDSW: medical device software

- ML: machine learning

- MVC: model view controller

- QMS: quality management system

- SLC: software life cycle

- SOP: standard operating procedure

- SOUP: software of unknown provenance

Acknowledgements

This project has received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No 826078. This publication reflects only the authors’ view, and the European Commission is not responsible for any use that may be made of the information it contains.

Author contributions

Conceptualization, A.-C.H., R.M., S.C.H., J.W. and D.H.; Methodology, A.-C.H., R.M., S.C.H. and J.W.; Software, A.-C.H., S.C.H. and J.W.; Investigation, A.-C.H., R.M., S.C.H. and J.W.; Writing - Original Draft, A.-C.H., R.M., S.C.H. and J.W.; Writing - Review & Editing, A.-C.H., R.M., S.C.H., J.W. and D.H.; Supervision, D.H.

Conflict of interest

The authors declare no competing interests.

References

- ↑ 1.0 1.1 Muehlematter, Urs J; Daniore, Paola; Vokinger, Kerstin N (1 March 2021). "Approval of artificial intelligence and machine learning-based medical devices in the USA and Europe (2015–20): a comparative analysis" (in en). The Lancet Digital Health 3 (3): e195–e203. doi:10.1016/S2589-7500(20)30292-2. https://linkinghub.elsevier.com/retrieve/pii/S2589750020302922.

- ↑ Sutton, Reed T.; Pincock, David; Baumgart, Daniel C.; Sadowski, Daniel C.; Fedorak, Richard N.; Kroeker, Karen I. (6 February 2020). "An overview of clinical decision support systems: benefits, risks, and strategies for success" (in en). npj Digital Medicine 3 (1): 17. doi:10.1038/s41746-020-0221-y. ISSN 2398-6352. PMC PMC7005290. PMID 32047862. https://www.nature.com/articles/s41746-020-0221-y.

- ↑ Ceney, Adam; Tolond, Stephanie; Glowinski, Andrzej; Marks, Ben; Swift, Simon; Palser, Tom (15 July 2021). Wilson, Fernando A.. ed. "Accuracy of online symptom checkers and the potential impact on service utilisation" (in en). PLOS ONE 16 (7): e0254088. doi:10.1371/journal.pone.0254088. ISSN 1932-6203. PMC PMC8282353. PMID 34265845. https://dx.plos.org/10.1371/journal.pone.0254088.

- ↑ Anastasiou, Olympia E.; Kälsch, Julia; Hakmouni, Mahdi; Kucukoglu, Ozlem; Heider, Dominik; Korth, Johannes; Manka, Paul; Sowa, Jan-Peter et al. (1 July 2017). "Low transferrin and high ferritin concentrations are associated with worse outcome in acute liver failure" (in en). Liver International 37 (7): 1032–1041. doi:10.1111/liv.13369. https://onlinelibrary.wiley.com/doi/10.1111/liv.13369.

- ↑ Riemenschneider, Mona; Hummel, Thomas; Heider, Dominik (1 December 2016). "SHIVA - a web application for drug resistance and tropism testing in HIV" (in en). BMC Bioinformatics 17 (1): 314. doi:10.1186/s12859-016-1179-2. ISSN 1471-2105. PMC PMC4994198. PMID 27549230. http://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-016-1179-2.

- ↑ Riemenschneider, Mona; Cashin, Kieran Y.; Budeus, Bettina; Sierra, Saleta; Shirvani-Dastgerdi, Elham; Bayanolhagh, Saeed; Kaiser, Rolf; Gorry, Paul R. et al. (29 April 2016). "Genotypic Prediction of Co-receptor Tropism of HIV-1 Subtypes A and C" (in en). Scientific Reports 6 (1): 24883. doi:10.1038/srep24883. ISSN 2045-2322. PMC PMC4850382. PMID 27126912. https://www.nature.com/articles/srep24883.

- ↑ Rieke, Nicola; Hancox, Jonny; Li, Wenqi; Milletarì, Fausto; Roth, Holger R.; Albarqouni, Shadi; Bakas, Spyridon; Galtier, Mathieu N. et al. (14 September 2020). "The future of digital health with federated learning" (in en). npj Digital Medicine 3 (1): 119. doi:10.1038/s41746-020-00323-1. ISSN 2398-6352. PMC PMC7490367. PMID 33015372. https://www.nature.com/articles/s41746-020-00323-1.

- ↑ Hauschild, Anne-Christin; Lemanczyk, Marta; Matschinske, Julian; Frisch, Tobias; Zolotareva, Olga; Holzinger, Andreas; Baumbach, Jan; Heider, Dominik (12 April 2022). Wren, Jonathan. ed. "Federated Random Forests can improve local performance of predictive models for various healthcare applications" (in en). Bioinformatics 38 (8): 2278–2286. doi:10.1093/bioinformatics/btac065. ISSN 1367-4803. https://academic.oup.com/bioinformatics/article/38/8/2278/6525214.

- ↑ Warnat-Herresthal, Stefanie; Schultze, Hartmut; Shastry, Krishnaprasad Lingadahalli; Manamohan, Sathyanarayanan; Mukherjee, Saikat; Garg, Vishesh; Sarveswara, Ravi; Händler, Kristian et al. (10 June 2021). "Swarm Learning for decentralized and confidential clinical machine learning" (in en). Nature 594 (7862): 265–270. doi:10.1038/s41586-021-03583-3. ISSN 0028-0836. PMC PMC8189907. PMID 34040261. https://www.nature.com/articles/s41586-021-03583-3.

- ↑ Torkzadehmahani, Reihaneh; Nasirigerdeh, Reza; Blumenthal, David B.; Kacprowski, Tim; List, Markus; Matschinske, Julian; Spaeth, Julian; Wenke, Nina Kerstin et al. (1 June 2022). "Privacy-Preserving Artificial Intelligence Techniques in Biomedicine" (in en). Methods of Information in Medicine 61 (S 01): e12–e27. doi:10.1055/s-0041-1740630. ISSN 0026-1270. PMC PMC9246509. PMID 35062032. http://www.thieme-connect.de/DOI/DOI?10.1055/s-0041-1740630.

- ↑ Nasirigerdeh, Reza; Torkzadehmahani, Reihaneh; Matschinske, Julian; Frisch, Tobias; List, Markus; Späth, Julian; Weiss, Stefan; Völker, Uwe et al. (24 January 2022). "sPLINK: a hybrid federated tool as a robust alternative to meta-analysis in genome-wide association studies" (in en). Genome Biology 23 (1): 32. doi:10.1186/s13059-021-02562-1. ISSN 1474-760X. PMC PMC8785575. PMID 35073941. https://genomebiology.biomedcentral.com/articles/10.1186/s13059-021-02562-1.

- ↑ 12.0 12.1 12.2 "Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on medical devices". EUR-Lex. Publications Office of the European Union. 5 April 2017. http://data.europa.eu/eli/reg/2017/745/oj.

- ↑ 13.0 13.1 13.2 Medical Device Coordination Group (October 2019). "MDCG 2019-11 Guidance on Qualification and Classification of Software in Regulation (EU) 2017/745 – MDR and Regulation (EU) 2017/746 – IVDR" (PDF). https://health.ec.europa.eu/system/files/2020-09/md_mdcg_2019_11_guidance_qualification_classification_software_en_0.pdf.

- ↑ International Medical Device Regulators Forum SaMD Working Group (9 December 2013). "Software as a Medical Device (SaMD): Key Definitions" (PDF). International Medical Device Regulators Forum. https://www.imdrf.org/sites/default/files/docs/imdrf/final/technical/imdrf-tech-131209-samd-key-definitions-140901.pdf.

- ↑ Oen, R.D.R. (2009). "Software als medizinprodukt". Medizin Produkte Recht 2: 55–7.

- ↑ 16.00 16.01 16.02 16.03 16.04 16.05 16.06 16.07 16.08 16.09 16.10 16.11 "IEC 62304:2006/Amd 1:2015 Medical device software — Software life cycle processes — Amendment 1". International Organization for Standardization. June 2015. https://www.iso.org/standard/64686.html.

- ↑ "IEC 62304 Edition 1.1 2015-06 CONSOLIDATED VERSION Medical device software - Software life cycle processes". Recognized Consensus Standards. U.S. Food and Drug Administration. 14 January 2019. https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfstandards/detail.cfm?standard__identification_no=38829.

- ↑ 18.0 18.1 Pinto, Gustavo; Wiese, Igor; Dias, Luiz Felipe (1 March 2018). "How do scientists develop scientific software? An external replication". 2018 IEEE 25th International Conference on Software Analysis, Evolution and Reengineering (SANER) (Campobasso: IEEE): 582–591. doi:10.1109/SANER.2018.8330263. ISBN 978-1-5386-4969-5. http://ieeexplore.ieee.org/document/8330263/.

- ↑ 19.0 19.1 19.2 19.3 19.4 19.5 Brito, Jaqueline J; Li, Jun; Moore, Jason H; Greene, Casey S; Nogoy, Nicole A; Garmire, Lana X; Mangul, Serghei (1 June 2020). "Recommendations to enhance rigor and reproducibility in biomedical research" (in en). GigaScience 9 (6): giaa056. doi:10.1093/gigascience/giaa056. ISSN 2047-217X. PMC PMC7263079. PMID 32479592. https://academic.oup.com/gigascience/article/doi/10.1093/gigascience/giaa056/5849489.

- ↑ 20.0 20.1 20.2 20.3 Mangul, Serghei; Mosqueiro, Thiago; Abdill, Richard J.; Duong, Dat; Mitchell, Keith; Sarwal, Varuni; Hill, Brian; Brito, Jaqueline et al. (20 June 2019). "Challenges and recommendations to improve the installability and archival stability of omics computational tools" (in en). PLOS Biology 17 (6): e3000333. doi:10.1371/journal.pbio.3000333. ISSN 1545-7885. PMC PMC6605654. PMID 31220077. https://dx.plos.org/10.1371/journal.pbio.3000333.

- ↑ 21.0 21.1 21.2 21.3 Lee, Graham; Bacon, Sebastian; Bush, Ian; Fortunato, Laura; Gavaghan, David; Lestang, Thibault; Morton, Caroline; Robinson, Martin et al. (1 February 2021). "Barely sufficient practices in scientific computing" (in en). Patterns 2 (2): 100206. doi:10.1016/j.patter.2021.100206. PMC PMC7892476. PMID 33659915. https://linkinghub.elsevier.com/retrieve/pii/S2666389921000167.

- ↑ 22.0 22.1 Wiese, Igor; Polato, Ivanilton; Pinto, Gustavo (1 July 2020). "Naming the Pain in Developing Scientific Software". IEEE Software 37 (4): 75–82. doi:10.1109/MS.2019.2899838. ISSN 0740-7459. https://ieeexplore.ieee.org/document/8664473/.

- ↑ 23.0 23.1 Riemenschneider, Mona; Wienbeck, Joachim; Scherag, André; Heider, Dominik (1 June 2018). "Data Science for Molecular Diagnostics Applications: From Academia to Clinic to Industry" (in en). Systems Medicine 1 (1): 13–17. doi:10.1089/sysm.2018.0002. ISSN 2573-3370. http://www.liebertpub.com/doi/10.1089/sysm.2018.0002.

- ↑ Coiera, Enrico; Ammenwerth, Elske; Georgiou, Andrew; Magrabi, Farah (1 August 2018). "Does health informatics have a replication crisis?" (in en). Journal of the American Medical Informatics Association 25 (8): 963–968. doi:10.1093/jamia/ocy028. ISSN 1067-5027. PMC PMC6077781. PMID 29669066. https://academic.oup.com/jamia/article/25/8/963/4970161.

- ↑ 25.0 25.1 Wilkinson, Mark D.; Dumontier, Michel; Aalbersberg, IJsbrand Jan; Appleton, Gabrielle; Axton, Myles; Baak, Arie; Blomberg, Niklas; Boiten, Jan-Willem et al. (15 March 2016). "The FAIR Guiding Principles for scientific data management and stewardship" (in en). Scientific Data 3 (1): 160018. doi:10.1038/sdata.2016.18. ISSN 2052-4463. PMC PMC4792175. PMID 26978244. https://www.nature.com/articles/sdata201618.

- ↑ 26.0 26.1 Sharma, Arjun; Blank, Anthony; Patel, Parashar; Stein, Kenneth (1 March 2013). "Health care policy and regulatory implications on medical device innovations: a cardiac rhythm medical device industry perspective" (in en). Journal of Interventional Cardiac Electrophysiology 36 (2): 107–117. doi:10.1007/s10840-013-9781-y. ISSN 1383-875X. PMC PMC3606523. PMID 23474980. http://link.springer.com/10.1007/s10840-013-9781-y.

- ↑ Manghani, Kishu (2011). "Quality assurance: Importance of systems and standard operating procedures" (in en). Perspectives in Clinical Research 2 (1): 34. doi:10.4103/2229-3485.76288. ISSN 2229-3485. PMC PMC3088954. PMID 21584180. http://www.picronline.org/text.asp?2011/2/1/34/76288.

- ↑ McHugh, Martin; Ali, Abder-Rahman; McCaffery, Fergal (2013). The Significance of Requirements in Medical Device Software Development. doi:10.21427/7ADY-5269. https://arrow.tudublin.ie/scschcomcon/126/.

- ↑ Memon, M.; Jalbani, A.A.; Menghwar, G.D. et al. (2016). "I2A: An Interoperability & Integration Architecture for Medical Device Software and eHealth Systems" (PDF). Science International 28 (4): 3783–87. ISSN 1013-5316. http://www.sci-int.com/pdf/637305175103306413.pdf.

- ↑ McHugh, Martin; Cawley, Oisin; McCaffery, Fergal; Richardson, Ita; Wang, Xiaofeng (1 May 2013). "An agile V-model for medical device software development to overcome the challenges with plan-driven software development lifecycles". 2013 5th International Workshop on Software Engineering in Health Care (SEHC) (San Francisco, CA, USA: IEEE): 12–19. doi:10.1109/SEHC.2013.6602471. ISBN 978-1-4673-6282-5. https://ieeexplore.ieee.org/document/6602471/.

- ↑ Davis, Alan Mark (1993). Software requirements: objects, functions, and states (Rev ed.). Englewood Cliffs, N.J: PTR Prentice Hall. ISBN 978-0-13-805763-3.

- ↑ Leffingwell, D. (1997). "Calculating your return on investment from more effective requirements management". American Programmer 10 (4): 13–16.

- ↑ Booch, Grady; Rumbaugh, James; Jacobson, Ivar (1999). The unified modeling language user guide. The Addison-Wesley object technology series. Reading Mass: Addison-Wesley. ISBN 978-0-201-57168-4.

- ↑ Booch, G.; Jacobson, I.; Rumbaugh, J. et al. (4 January 2005). "Unified Modeling Language Specification Version 1.4.2" (PDF). Object Management Group. https://www.omg.org/spec/UML/ISO/19501/PDF.

- ↑ Fairley, Richard E. Dick; Bourque, Pierre; Keppler, John (1 April 2014). "The impact of SWEBOK Version 3 on software engineering education and training". 2014 IEEE 27th Conference on Software Engineering Education and Training (CSEE&T): 192–200. doi:10.1109/CSEET.2014.6816804. https://ieeexplore.ieee.org/document/6816804/.

- ↑ "ISO/IEC 25010:2011 Systems and software engineering — Systems and software Quality Requirements and Evaluation (SQuaRE) — System and software quality models". International Organization for Standardization. March 2011. https://www.iso.org/standard/35733.html.

- ↑ Mosleh, Mohsen; Dalili, Kia; Heydari, Babak (1 March 2018). "Distributed or Monolithic? A Computational Architecture Decision Framework". IEEE Systems Journal 12 (1): 125–136. doi:10.1109/JSYST.2016.2594290. ISSN 1932-8184. http://ieeexplore.ieee.org/document/7539535/.

- ↑ Richards, Mark; Ford, Neal (2020). Fundamentals of software architecture: an engineering approach (1st ed.). Sebastopol, CA: O'Reilly Media, Inc. ISBN 978-1-4920-4345-4. OCLC on1089438191. https://www.worldcat.org/title/mediawiki/oclc/on1089438191.

- ↑ Jiang, Zhen Ming; Hassan, Ahmed E.; Holt, Richard C. (1 December 2006). "Visualizing Clone Cohesion and Coupling". 2006 13th Asia Pacific Software Engineering Conference (APSEC'06): 467–476. doi:10.1109/APSEC.2006.63. https://ieeexplore.ieee.org/document/4137451/.

- ↑ Hofmeister, C.; Nord, R. L.; Soni, D. (1999), Donohoe, Patrick, ed., "Describing Software Architecture with UML", Software Architecture (Boston, MA: Springer US) 12: 145–159, doi:10.1007/978-0-387-35563-4_9, ISBN 978-1-4757-6536-6, http://link.springer.com/10.1007/978-0-387-35563-4_9. Retrieved 2023-07-04

- ↑ Späth, Peter (2021), "About MVC: Model, View, Controller" (in en), Beginning Java MVC 1.0 (Berkeley, CA: Apress): 1–18, doi:10.1007/978-1-4842-6280-1_1, ISBN 978-1-4842-6279-5, http://link.springer.com/10.1007/978-1-4842-6280-1_1. Retrieved 2023-07-04

- ↑ IEEE Standard Glossary of Software Engineering Terminology. IEEE. 31 December 1990. doi:10.1109/ieeestd.1990.101064. ISBN 978-0-7381-0391-4. https://ieeexplore.ieee.org/servlet/opac?punumber=2238.

- ↑ Abran, Alain; Moore, James W., eds. (2001). Guide to the software engineering body of knowledge: trial version [version 0.95]; SWEBOK*; a project of the Software Engineering Coordinating Committee. Los Alamitos, Calif.: IEEE Computer Society. ISBN 978-0-7695-1000-2.

- ↑ Nidhra, Srinivas (30 June 2012). "Black Box and White Box Testing Techniques - A Literature Review". International Journal of Embedded Systems and Applications 2 (2): 29–50. doi:10.5121/ijesa.2012.2204. http://www.airccse.org/journal/ijesa/papers/2212ijesa04.pdf.

- ↑ Murnane, T.; Reed, K. (2001). "On the effectiveness of mutation analysis as a black box testing technique". Proceedings 2001 Australian Software Engineering Conference (Canberra, ACT, Australia: IEEE Comput. Soc): 12–20. doi:10.1109/ASWEC.2001.948492. ISBN 978-0-7695-1254-9. http://ieeexplore.ieee.org/document/948492/.

- ↑ Myers, Glenford J.; Sandler, Corey; Badgett, Tom (2012). The art of software testing (3rd ed.). Hoboken, N.J: John Wiley & Sons. ISBN 978-1-118-03196-4. OCLC 728656684. https://www.worldcat.org/title/mediawiki/oclc/728656684.

- ↑ "IEC 62366-1:2015 Medical devices — Part 1: Application of usability engineering to medical devices". International Organization for Standardization. February 2015. https://www.iso.org/standard/63179.html.

- ↑ Hunter, Philip (1 September 2017). "The reproducibility “crisis”: Reaction to replication crisis should not stifle innovation" (in en). EMBO reports 18 (9): 1493–1496. doi:10.15252/embr.201744876. ISSN 1469-221X. PMC PMC5579390. PMID 28794201. https://www.embopress.org/doi/10.15252/embr.201744876.

- ↑ Boulesteix, Anne-Laure; Hoffmann, Sabine; Charlton, Alethea; Seibold, Heidi (1 October 2020). "A Replication Crisis in Methodological Research?" (in en). Significance 17 (5): 18–21. doi:10.1111/1740-9713.01444. ISSN 1740-9705. https://academic.oup.com/jrssig/article/17/5/18/7038554.

- ↑ Hauschild, Anne-Christin; Eick, Lisa; Wienbeck, Joachim; Heider, Dominik (1 July 2021). "Fostering reproducibility, reusability, and technology transfer in health informatics" (in en). iScience 24 (7): 102803. doi:10.1016/j.isci.2021.102803. PMC PMC8282945. PMID 34296072. https://linkinghub.elsevier.com/retrieve/pii/S2589004221007719.

- ↑ Sapunar, Damir (28 May 2016). "The business process management software for successful quality management and organization: case study from the University of Split School of medicine". Acta Medica Academica 45 (1): 26–33. doi:10.5644/ama2006-124.153. http://ama.ba/index.php/ama/article/view/268/pdf.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, though grammar and word usage were substantially updated for improved readability. In some cases, important information was missing from the references, and that information was added.