Journal:Launching genomics into the cloud: Deployment of Mercury, a next generation sequence analysis pipeline

Revision as of 19:30, 18 December 2015

| Full article title | Launching genomics into the cloud: Deployment of Mercury, a next generation sequence analysis pipeline |
|---|---|
| Journal | BMC Bioinformatics |
| Author(s) | Reid, Jeffrey G.; Carroll, Andrew; Veeraraghavan, Narayanan; Dahdouli, Mahmoud; Sundquist, Andreas; English, Adam; Bainbridge, Matthew; White, Simon; Salerno, William; Buhay, Christian; Yu, Fuli; Muzny, Donna; Daly, Richard; Duyk, Geoff; Gibbs, Richard A.; Boerwinkle, Eric |
| Author affiliation(s) | Baylor College of Medicine; DNAnexus |
| Primary contact | Email: jgreid@bcm.edu |
| Year published | 2014 |
| Volume and issue | 15 |
| Page(s) | 30 |
| DOI | 10.1186/1471-2105-15-30 |
| ISSN | 1471-2105 |
| Distribution license | Creative Commons Attribution 2.0 Generic |
| Website | http://bmcbioinformatics.biomedcentral.com/articles/10.1186/1471-2105-15-30 |
| Download | http://bmcbioinformatics.biomedcentral.com/track/pdf/10.1186/1471-2105-15-30 (PDF) |

This article should not be considered complete until this message box has been removed. This is a work in progress.

Abstract

Background: Massively parallel DNA sequencing generates staggering amounts of data. Decreasing cost, increasing throughput, and improved annotation have expanded the diversity of genomics applications in research and clinical practice. This expanding scale creates analytical challenges: accommodating peak compute demand, coordinating secure access for multiple analysts, and sharing validated tools and results.

Results: To address these challenges, we have developed the Mercury analysis pipeline and deployed it in local hardware and the Amazon Web Services cloud via the DNAnexus platform. Mercury is an automated, flexible, and extensible analysis workflow that provides accurate and reproducible genomic results at scales ranging from individuals to large cohorts.

Conclusions: By taking advantage of cloud computing and with Mercury implemented on the DNAnexus platform, we have demonstrated a powerful combination of a robust and fully validated software pipeline and a scalable computational resource that, to date, we have applied to more than 10,000 whole genome and whole exome samples.

Keywords: NGS data, Variant calling, Annotation, Clinical sequencing, Cloud computing

Background

Whole exome capture sequencing (WES) and whole genome sequencing (WGS) using next generation sequencing (NGS) technologies[1] have emerged as compelling paradigms for routine clinical diagnosis, genetic risk prediction, and patient management.[2] Large numbers of laboratories and hospitals routinely generate terabytes of NGS data, shifting the bottleneck in clinical genetics from DNA sequence production to DNA sequence analysis. Such analysis is most prevalent in three common settings: first, in a clinical diagnostics laboratory (e.g. Baylor’s Whole Genome Laboratory http://www.bcm.edu/geneticlabs/) testing single patients or families with presumed heritable disease; second, in a cancer-analysis setting where the unit of interest is either a normal-tumor tissue pair or normal-primary tumor-recurrence trio[3]; and third, in biomedical research studies sequencing a sample of well-phenotyped individuals. In each case, the input is a DNA sample of appropriate quality having a unique identification number, appropriate informed consent, and relevant clinical and phenotypic information.

As these new samples are sequenced, the resulting data is most effectively examined in the context of petabases of existing DNA sequence and the associated meta-data. Such large-scale comparative genomics requires new sequence data to be robustly characterized, consistently reproducible, and easily shared among large collaborations in a secure manner. And while data-management and information technologies have adapted to the processing and storage requirements of emerging sequencing technologies (e.g., the CRAM specification[4]), it is less certain that appropriate informative software interfaces will be made available to the genomics and clinical genetics communities. One element bridging the technology gap between the sequencing instrument and the scientist or clinician is a validated data processing pipeline that takes raw sequencing reads and produces an annotated personal genome ready for further analysis and clinical interpretation.

To address this need, we have designed and implemented Mercury, an automated approach that integrates multiple sequence analysis components across many computational steps, from obtaining patient samples to providing a fully annotated list of variant sites for clinical applications. Mercury fully integrates new software with existing routines (e.g., Atlas2[5]) and provides the flexibility necessary to respond to changing sequencing technologies and the rapidly increasing volume of relevant data. Mercury has been implemented both on local infrastructure and in a cloud computing platform provided by DNAnexus using Amazon Web Services (AWS). While there are other NGS analysis pipelines, some of which have even been implemented in the cloud[6], the combination of Mercury and DNAnexus provides for the first time a fully integrated genomic analysis resource that can serve the full spectrum of users.

Results and discussion

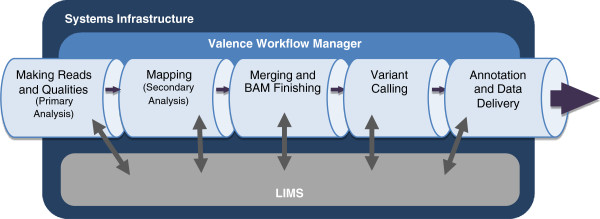

Figure 1 provides an overview of the Mercury data processing pipeline. Source information includes sample and project management data and the characteristics of library preparation and sequencing. This information enters the pipeline either directly from the user or from a laboratory information management system (LIMS). The first step, generating sequencing reads, is based on the vendor’s primary analysis software, which generates sequence reads and base-call confidence values (qualities). The second step maps the reads and qualities to the reference genome using a standard mapping tool, such as BWA[7][8], producing a BAM[9] (binary alignment/map) file. The third step produces a “finished” BAM by sorting, marking duplicates, realigning indels, recalibrating base qualities, and indexing (using a combination of tools including SAMtools[9], Picard (http://picard.sourceforge.net), and GATK[10]). The fourth step in Mercury uses the Atlas2 suite[5][11] (Atlas-SNP and Atlas-indel) to call variants and produce a variant file (VCF). The fifth step adds biological and functional annotation and formats the variant lists for delivery. Each step is described in detail in the Methods section, as is the flow of information between steps.
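The five steps can be summarized as a chain in which each stage consumes what the previous stage produces. The following is a minimal illustrative sketch, not Mercury code: the `Stage` class, the stage names, and the format labels are assumptions that paraphrase the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One pipeline step; all field values below are illustrative labels."""
    name: str
    tools: tuple
    consumes: str
    produces: str


# Stage names and format labels paraphrase the text; they are not Mercury identifiers.
PIPELINE = (
    Stage("generate reads", ("vendor primary analysis software",),
          "raw instrument data", "reads and qualities"),
    Stage("map reads", ("BWA",), "reads and qualities", "BAM"),
    Stage("finish BAM", ("SAMtools", "Picard", "GATK"), "BAM", "finished BAM"),
    Stage("call variants", ("Atlas-SNP", "Atlas-indel"), "finished BAM", "VCF"),
    Stage("annotate", ("annotation tools",), "VCF", "annotated VCF"),
)


def check_chain(stages):
    """Return True if every stage consumes exactly what its predecessor produces."""
    return all(a.produces == b.consumes for a, b in zip(stages, stages[1:]))
```

Calling `check_chain(PIPELINE)` confirms that the stages line up end to end, which is the property a workflow manager has to preserve when steps are added or swapped.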


Mercury has been optimized for the Illumina HiSeq (Illumina, Inc.; San Diego, CA) platform, but the generalized workflow framework is adaptable to other settings. The entire pipeline has been implemented both locally and in a cloud computing environment. All relevant code and documentation are freely available online (https://www.hgsc.bcm.edu/content/mercury) and the scalable cloud solution is available within the DNAnexus library (http://www.dnanexus.com/). Sensible default parameters have already been determined so that researchers and clinicians can reliably analyze their data with Mercury without needing to configure any of the constituent programs or obtain access to large computational resources, and they can do so in a manner compliant with multiple regulatory frameworks.

Local workflow management

Implementing a robust analysis framework that incorporates a heterogeneous collection of software tools presents many challenges. Running disparate software modules with varying inputs and outputs that depend on each other’s results requires appropriate error checking and centralized output logging. We therefore developed a simple yet extensible workflow management framework, Valence (http://sourceforge.net/projects/valence/), that manages the various steps and dependencies within Mercury and handles internal and external pipeline communication. This formal approach to workflow management helps maximize computational resource utilization and seamlessly guides the data from the sequencing instrument to an annotated variant file ready for clinical interpretation and downstream analysis.

Valence parses and executes an analysis protocol described in XML format with each step treated as either an action or a procedure. An action is defined as a direct call to the system to submit a program or script to the job scheduler for execution; a procedure is defined as a collection of actions, which is itself a workflow. This design allows the user to easily add, remove, and modify the steps of any analysis protocol. A protocol description for a specific step must include the required cluster resources, any dependencies on other steps, and a command element that describes how to execute the program or script. To ensure that the XML wrappers are applicable to any run, the command is treated as a string template that allows XML variables to be substituted into the command prior to execution. Thus, a single XML wrapper describing how to run a program can be applied to different inputs. Valence can be deployed on any cluster with a job scheduler (e.g., PBS, LSF, SGE), implementing a database to track both the job (the collection of all the steps in a protocol to be executed) and the status (“Not Started,” “Running,” “Finished,” “Failed”) of any action associated with the job.
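To make the template idea concrete, the sketch below parses a small XML protocol and substitutes variables into each command before execution. It is only an illustration under stated assumptions: the element and attribute names (`protocol`, `action`, `cores`, `memory_gb`, `depends`), the `${...}` placeholder syntax, and the script names are hypothetical and are not taken from the actual Valence schema.

```python
import xml.etree.ElementTree as ET
from string import Template

# Hypothetical protocol: the schema and script names are invented for illustration.
PROTOCOL_XML = """
<protocol>
  <action name="map" cores="8" memory_gb="16">
    <command>bwa_wrapper.sh ${fastq} ${reference}</command>
  </action>
  <action name="call" cores="1" memory_gb="8" depends="map">
    <command>call_variants.sh ${sample}.bam</command>
  </action>
</protocol>
"""


def render_actions(xml_text, variables):
    """Parse the protocol and fill each command template with run-specific values."""
    root = ET.fromstring(xml_text)
    actions = []
    for node in root.findall("action"):
        template = Template(node.findtext("command").strip())
        actions.append({
            "name": node.get("name"),
            # Comma-separated names of the steps this action must wait for.
            "depends": [d for d in node.get("depends", "").split(",") if d],
            "command": template.substitute(variables),
            # Initial state, using the status labels listed in the text.
            "status": "Not Started",
        })
    return actions
```

Because the command is a template, the same wrapper can be rendered for a different sample simply by passing a different `variables` mapping, which mirrors the reuse described above.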

Mercury users can easily incorporate new analysis tools into an existing pipeline. For example, we recently expanded the scope of our pipeline to include Tiresias (https://sourceforge.net/projects/tiresias/), a structural variant caller focused on mobile elements, and ExCID (https://github.com/cbuhay/ExCID), an exome coverage analysis tool designed to provide clinical reports on under-covered regions. To incorporate Tiresias and ExCID into the Mercury pipeline, we needed only to specify the compute requirements and add the appropriate command to the existing XML workflow definition; Valence then automatically handles all data passing, logging, and error reporting.

Cloud workflow management

Mercury has been instantiated in the cloud via the DNAnexus platform (utilizing AWS’s EC2 and S3). DNAnexus provides a layer of abstraction that simplifies development, execution, monitoring, and collaboration on a cloud infrastructure. The constituent steps of the Mercury pipeline take the form of discrete “applets,” which are then linked to form a workflow within the DNAnexus platform infrastructure. Using the workflow construction GUI, one can add applets (representing each step) to the workflow and create a dependency graph by linking the inputs and outputs of subsequent applets. Inputs are provided to an instance of the workflow, and the entire workflow is run within the cloud infrastructure. The various steps within the workflow are then executed based on the dependency graph. As with Valence, individual applets can be configured to run with a specific set of computational resource requirements such as memory, local disk space, and number of cores and processors. We are currently working to merge the local and cloud infrastructure elements by integrating the upload agent into Valence, allowing Valence to trigger a DNAnexus workflow once all the data is successfully uploaded. Such coordination would serve to transparently support analysis bursts.
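Executing steps "based on the dependency graph" amounts to a topological ordering in which independent steps may run at the same time. The sketch below illustrates that idea with Python's standard `graphlib` module; it does not use the DNAnexus API, and the applet names are hypothetical.

```python
from graphlib import TopologicalSorter

# Keys are (hypothetical) applets; values are the applets whose outputs they consume.
DEPENDENCIES = {
    "map_reads": set(),
    "finish_bam": {"map_reads"},
    "call_snps": {"finish_bam"},
    "call_indels": {"finish_bam"},
    "annotate": {"call_snps", "call_indels"},
}


def execution_waves(dependencies):
    """Group applets into waves; applets in the same wave can run in parallel."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every applet whose inputs are complete
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished so dependents become ready
    return waves
```

In this toy graph the two variant-calling applets land in the same wave because both depend only on the finished BAM, while annotation waits for both of them.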

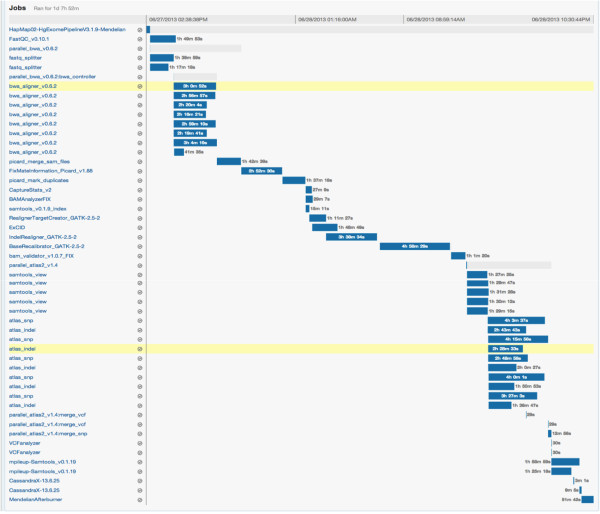

The Mercury pipeline within DNAnexus comprises code that uses the DNAnexus command-line interface to instantiate the pipeline in the cloud. The Mercury code for DNAnexus is executed on a local terminal. For example, one may provide a list of sample FASTQ files and sample meta-data locations to Mercury, at which point Mercury uploads the data and instantiates the predefined workflow within DNAnexus. On average, on a 100 Mbps connection, we were able to upload at a rate of ~14 MB/sec. We were able to parallelize this uploading process, yielding an effective upload rate of ~90 MB/sec. A typical FASTQ file from WES with 150X coverage has a compressed (bzip2) size of approximately 3 GB. Uploading such a file from a local server took less than five minutes. After sample data are uploaded to the DNAnexus environment, the workflow is instantiated in the cloud. Progress can be monitored online using the DNAnexus GUI (Figure 2) or locally through the Mercury monitoring code. To achieve full automation, the monitoring code can be made a part of a local process that polls for analysis status at regular intervals and starts analysis of new sequences automatically upon completion of sequencing. When the Mercury monitoring code detects successful completion of analysis, an email notification is sent out. The results can either be downloaded to the local server or viewed as tracks and data in a native genome browser.
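The upload figures quoted above can be checked with simple arithmetic. The sketch below assumes decimal units (1 GB = 1000 MB) and a constant transfer rate; the function name is illustrative.

```python
def upload_seconds(size_gb, rate_mb_per_s):
    """Time to transfer a file at a constant rate, assuming 1 GB = 1000 MB."""
    return size_gb * 1000 / rate_mb_per_s


# A ~3 GB compressed FASTQ at the single-stream rate of ~14 MB/sec.
single_stream = upload_seconds(3, 14)   # about 214 seconds, roughly 3.6 minutes

# The same file at the parallelized effective rate of ~90 MB/sec.
parallel = upload_seconds(3, 90)        # about 33 seconds
```

Under these assumptions a single-stream upload of a typical exome takes about three and a half minutes, consistent with the "less than five minutes" reported in the text.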


Performance

Turn-around time for raw data generation on most NGS platforms is already considered long for many clinical applications, so minimizing analysis time is a primary goal of the Mercury pipeline. By maintaining a high-performance computing cluster consisting of hundreds of 8-core, 48 GB RAM nodes and introducing Mercury into the sequencing pipeline, we can minimize wait times by ensuring that compute resources are always available for all sequence events as the instrument produces the data. To match compute resources to production requirements, we carefully monitor the run times (and RAM and CPU requirements) of each step in the Mercury pipeline. Table 1 describes the pipeline for each compute-intensive step, from the generation of reads and qualities from raw data (bcl to FASTQ) to generation of post-processed annotated variant files (VCFs). Resource requirements for each step are given in terms of fraction of an 8-core node (CPU) and RAM allocated. Note that some steps under-utilize available CPUs because they require most (or all) of the RAM available on a given node. For data generated via WES from human samples, the Mercury pipeline requires less than 36 hours of wall-clock time and 15 node-hours (i.e., the equivalent of one whole node fully dedicated to processing for 15 hours). Run times and resource requirements will vary with data type, reference genome, and computing hardware configuration.
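As a rough planning aid, the 15 node-hour figure can be converted into core-hours and into an upper bound on daily throughput. The sketch below is an assumption-laden estimate: the cluster size of 200 nodes is purely illustrative (the text says only "hundreds"), and the bound assumes perfect packing of jobs, ignoring the RAM-limited steps noted above.

```python
CORES_PER_NODE = 8          # from the text: 8-core nodes
NODE_HOURS_PER_EXOME = 15   # from the text: 15 node-hours per WES sample


def core_hours(node_hours, cores_per_node=CORES_PER_NODE):
    """Convert node-hours into core-hours."""
    return node_hours * cores_per_node


def max_exomes_per_day(nodes, node_hours_per_exome=NODE_HOURS_PER_EXOME):
    """Upper bound on daily throughput if every node is busy for 24 hours."""
    return nodes * 24 / node_hours_per_exome


per_exome_core_hours = core_hours(NODE_HOURS_PER_EXOME)   # 120 core-hours
illustrative_capacity = max_exomes_per_day(200)           # 320 exomes per day
```

Real throughput will be lower than this bound, since wall-clock time per sample (less than 36 hours) exceeds the node-hours consumed and some steps leave cores idle.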


After porting each element of the Mercury pipeline into the DNAnexus environment, the tools (i.e., “apps”) can be run on the cloud in environments with different CPU, RAM, disk, and bandwidth resources to optimize wall-clock time and cost-efficiency. Parallelization within a pipeline reduces the time for a single run, which is useful for quick development cycles or time-sensitive applications. In addition, many parallel pipelines can be run simultaneously. The current peak usage for the Mercury pipeline on DNAnexus is approximately 12,000 cores. This throughput is a small fraction of the theoretical maximum that could be achieved in AWS. For the standard implementation of Mercury in the cloud analyzing a validation exome (Hapmap sample NA12878), the total wall time to produce an annotated variant call VCF (starting with paired-end FASTQ files) is approximately one day.

Data management, sharing, reliability, and compliance

Large projects present a substantial data management challenge. For example, the Baylor-Hopkins Center for Mendelian Genomics has generated WES data from approximately 2,000 samples, and the Human Genome Sequencing Center at Baylor College of Medicine has generated more than 10,000 WES and 3,500 WGS data sets from research samples from the CHARGE consortium.

As a pilot study, we processed 1,000 WES data sets (approximately 80 terabytes of genomic data) from the Center for Mendelian Genomics using Mercury in DNAnexus. Using multi-threaded uploads, we were able to deliver data into the cloud at an average rate of ~960 exomes per day. Once uploaded, the data are analyzed with Mercury, and the resulting variants can be accessed for further analysis via a web GUI. Data can also be tagged, and these tags can be filtered or retrieved. Runs of individual pipelines and tools can be queried in a similar way.[12] As datasets become larger, multi-site collaborative consortia play an increasingly important role in contemporary biomedical research. A major advantage of cloud computing over local computing is that cloud storage can be shared across multiple organizations. Instead of each collaborator maintaining a local copy of the data and working in isolation, cloud users can be given appropriate access permissions so some researchers can view and download the results, others can run analyses on the data and build tools, and those with administrative privileges can determine access to the project. This data paradigm is the only feasible approach to giving patients meaningful access to their own genomic data.

Comparison to existing methods

A number of other tools and services provide similar functionality to Mercury on DNAnexus, with differing approaches to extensibility, ease of use for non-programmers, support for local or cloud infrastructures, and software available by default (Table 2). For example, the academic service Galaxy primarily focuses on extensibility and building a developer community.[13][14][15] Seven Bridges is a commercial service that combines a few fixed pipelines with a visually distinctive workflow editor. Chipster is an academic service that packages a variety of NGS tools in addition to a variety of microarray tools and combines these with visualization of data summaries and QC metrics.[16] Anduril is designed to manage a local cluster and contains packages for a variety of tasks, including alignment and variant calling as well as image analysis and flow cytometry, which are not addressed by the other cloud services surveyed.[17] With respect to the software used in sequence production pipelines, Mercury is most distinguished by its Atlas variant caller and the extensive annotations provided by its Cassandra annotation tool (https://www.hgsc.bcm.edu/software/cassandra).

References

- ↑ Metzker, Michael L. (2010). "Sequencing technologies — The next generation". Nature Reviews Genetics 11 (1): 31–46. doi:10.1038/nrg2626. PMID 19997069.

- ↑ Bainbridge, Matthew N.; Wiszniewski, Wojciech; Murdock, David R. et al. (2011). "Whole-genome sequencing for optimized patient management". Science Translational Medicine 3 (87): 87re3. doi:10.1126/scitranslmed.3002243. PMC PMC3314311. PMID 21677200. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3314311.

- ↑ Cancer Genome Atlas Research Network (2011). "Integrated genomic analyses of ovarian carcinoma". Nature 474 (7353): 609–615. doi:10.1038/nature10166. PMC PMC3163504. PMID 21720365. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3163504.

- ↑ Wheeler, David A.; Srinivasan, Maithreyan; Egholm, Michael et al. (2008). "The complete genome of an individual by massively parallel DNA sequencing". Nature 452 (7189): 872–876. doi:10.1038/nature06884. PMID 18421352.

- ↑ 5.0 5.1 Challis, D.; Yu, J.; Evani, U.S. et al. (2012). "An integrative variant analysis suite for whole exome next-generation sequencing data". BMC Bioinformatics 13: 8. doi:10.1186/1471-2105-13-8. PMC PMC3292476. PMID 22239737. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3292476.

- ↑ O’Driscoll, A.; Daugelaite, J.; Sleator, R.D. (2013). "'Big data', Hadoop and cloud computing in genomics". Journal of Biomedical Informatics 46 (5): 774–81. doi:10.1016/j.jbi.2013.07.001. PMID 23872175.

- ↑ Li, H.; Durbin, R. (2009). "Fast and accurate short read alignment with Burrows–Wheeler transform". Bioinformatics 25 (14): 1754–60. doi:10.1093/bioinformatics/btp324. PMC PMC2705234. PMID 19451168. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2705234.

- ↑ Li, H.; Durbin, R. (2010). "Fast and accurate long-read alignment with Burrows–Wheeler transform". Bioinformatics 26 (5): 589–95. doi:10.1093/bioinformatics/btp698. PMC PMC2828108. PMID 20080505. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2828108.

- ↑ 9.0 9.1 Li, H.; Handsaker, B.; Wysoker, A. et al. (2009). "The Sequence Alignment/Map format and SAMtools". Bioinformatics 25 (16): 2078–9. doi:10.1093/bioinformatics/btp352. PMC PMC2723002. PMID 19505943. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2723002.

- ↑ DePristo, M.A.; Banks, E.; Poplin, R. et al. (2011). "A framework for variation discovery and genotyping using next-generation DNA sequencing data". Nature Genetics 43 (5): 491–8. doi:10.1038/ng.806. PMC PMC3083463. PMID 21478889. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3083463.

- ↑ Shen, Y.; Wan, Z.; Coarfa, C. et al. (2010). "A SNP discovery method to assess variant allele probability from next-generation resequencing data". Genome Research 20 (2): 273–80. doi:10.1101/gr.096388.109. PMC PMC2813483. PMID 20019143. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2813483.

- ↑ Morrison, A.C.; Voorman, A.; Johnson, A.D. et al. (2013). "Whole-genome sequence–based analysis of high-density lipoprotein cholesterol". Nature Genetics 45 (8): 899–901. doi:10.1038/ng.2671. PMC PMC4030301. PMID 23770607. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4030301.

- ↑ Goecks, J.; Nekrutenko, A.; Taylor, J.; The Galaxy Team (2010). "Galaxy: a comprehensive approach for supporting accessible, reproducible, and transparent computational research in the life sciences". Genome Biology 11 (8): R86. doi:10.1186/gb-2010-11-8-r86. PMC PMC2945788. PMID 20738864. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2945788.

- ↑ Blankenberg, D.; Von Kuster, G.; Coraor, N. et al. (2010). "Galaxy: a web-based genome analysis tool for experimentalists". Current Protocols in Molecular Biology 19 (Unit 19.10.1–21). doi:10.1002/0471142727.mb1910s89. PMC PMC4264107. PMID 20069535. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4264107.

- ↑ Giardine, B.; Riemer, C.; Hardison, R.C. et al. (2005). "Galaxy: A platform for interactive large-scale genome analysis". Genome Research 15 (10): 1451–1455. doi:10.1101/gr.4086505. PMC PMC1240089. PMID 16169926. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1240089.

- ↑ Kallio, M.A.; Tuimala, J.T.; Hupponen, T. et al. (2011). "Chipster: user-friendly analysis software for microarray and other high-throughput data". BMC Genomics 12: 507. doi:10.1186/1471-2164-12-507. PMC PMC3215701. PMID 21999641. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3215701.

- ↑ Ovaska, K.; Laakso, M.; Haapa-Paananen, S. et al. (2010). "Large-scale data integration framework provides a comprehensive view on glioblastoma multiforme". Genome Medicine 2 (9): 65. doi:10.1186/gm186. PMC PMC3092116. PMID 20822536. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3092116.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added.