Difference between revisions of "Journal:Principles of metadata organization at the ENCODE data coordination center"

Shawndouglas (talk | contribs) (Saving and adding more.) |

Shawndouglas (talk | contribs) (Saving and adding more content.) |

||

| Line 33: | Line 33: | ||

A description of these datasets, collectively known as metadata, encompasses, but is not limited to, the identification of the experimental method used to generate the data, the sex and age of the donor from whom a skin biopsy was taken, and the software used to align the sequencing reads to a reference genome. Defining and organizing the set of metadata that is relevant, informative and applicable to diverse experimental techniques is challenging. These challenges are not unique to the ENCODE DCC. Several major experimental consortia similar in scale to the ENCODE project exist, as well as public database projects that collect and distribute high-throughput genomic data. Analogous to the ENCODE project, the modENCODE project was begun in 2007 to identify functional elements in the model organisms ''Caenorhabditis elegans'' and ''Drosophila melanogaster''. The modENCODE DCC faced similar challenges in trying to integrate diverse data types using a variety of experimental techniques.<ref name="WashingtonTheModENC11">{{cite journal |title=The modENCODE Data Coordination Center: Lessons in harvesting comprehensive experimental details |journal=Database |author=Washington, N.L.; Stinson, E.O.; Perry, M.D. et al. |volume=2011 |pages=bar023 |year=2011 |doi=10.1093/database/bar023 |pmid=21856757 |pmc=PMC3170170}}</ref> Other consortia, such as the Roadmap Epigenomics Mapping Centers, also have been tasked with defining the metadata.<ref name="BernsteinTheNIH10">{{cite journal |title=The NIH Roadmap Epigenomics Mapping Consortium |journal=Nature Biotechnology |author=Bernstein, B.E.; Stamatoyannopoulos, J.A.; Costello, J.F. et al. 
|volume=28 |issue=10 |pages=1045-8 |year=2010 |doi=10.1038/nbt1010-1045 |pmid=20944595 |pmc=PMC3607281}}</ref> In addition, databases such as ArrayExpress at the EBI, GEO and SRA at the NCBI, Data Dryad (http://datadryad.org/) and FigShare (http://figshare.com/) serve as data repositories, accepting diverse data types from large consortia as well as from individual research laboratories.<ref name="KolesnikovArrray15">{{cite journal |title=ArrayExpress update -- Simplifying data submissions |journal=Nucleic Acids Research |author=Kolesnikov, N.; Hastings, E.; Keays, M. et al. |volume=43 |issue=D1 |pages=D1113-6 |year=2015 |doi=10.1093/nar/gku1057 |pmid=25361974 |pmc=PMC4383899}}</ref><ref name="BarrettNCBI13">{{cite journal |title=NCBI GEO: Archive for functional genomics data sets -- Update |journal=Nucleic Acids Research |author=Barrett, T.; Wilhite, S.E.; Ledoux, P. et al. |volume=41 |issue=D1 |pages=D991-5 |year=2013 |doi=10.1093/nar/gks1193 |pmid=23193258 |pmc=PMC3531084}}</ref><ref name="NCBIDatabase15">{{cite journal |title=Database resources of the National Center for Biotechnology Information |journal=Nucleic Acids Research |author=NCBI Resource Coordinators |volume=43 |issue=D1 |pages=D6–17 |year=2015 |doi=10.1093/nar/gku1130 |pmid=25398906 |pmc=PMC4383943}}</ref> | A description of these datasets, collectively known as metadata, encompasses, but is not limited to, the identification of the experimental method used to generate the data, the sex and age of the donor from whom a skin biopsy was taken, and the software used to align the sequencing reads to a reference genome. Defining and organizing the set of metadata that is relevant, informative and applicable to diverse experimental techniques is challenging. These challenges are not unique to the ENCODE DCC. Several major experimental consortia similar in scale to the ENCODE project exist, as well as public database projects that collect and distribute high-throughput genomic data. 
Analogous to the ENCODE project, the modENCODE project was begun in 2007 to identify functional elements in the model organisms ''Caenorhabditis elegans'' and ''Drosophila melanogaster''. The modENCODE DCC faced similar challenges in trying to integrate diverse data types using a variety of experimental techniques.<ref name="WashingtonTheModENC11">{{cite journal |title=The modENCODE Data Coordination Center: Lessons in harvesting comprehensive experimental details |journal=Database |author=Washington, N.L.; Stinson, E.O.; Perry, M.D. et al. |volume=2011 |pages=bar023 |year=2011 |doi=10.1093/database/bar023 |pmid=21856757 |pmc=PMC3170170}}</ref> Other consortia, such as the Roadmap Epigenomics Mapping Centers, also have been tasked with defining the metadata.<ref name="BernsteinTheNIH10">{{cite journal |title=The NIH Roadmap Epigenomics Mapping Consortium |journal=Nature Biotechnology |author=Bernstein, B.E.; Stamatoyannopoulos, J.A.; Costello, J.F. et al. |volume=28 |issue=10 |pages=1045-8 |year=2010 |doi=10.1038/nbt1010-1045 |pmid=20944595 |pmc=PMC3607281}}</ref> In addition, databases such as ArrayExpress at the EBI, GEO and SRA at the NCBI, Data Dryad (http://datadryad.org/) and FigShare (http://figshare.com/) serve as data repositories, accepting diverse data types from large consortia as well as from individual research laboratories.<ref name="KolesnikovArrray15">{{cite journal |title=ArrayExpress update -- Simplifying data submissions |journal=Nucleic Acids Research |author=Kolesnikov, N.; Hastings, E.; Keays, M. et al. |volume=43 |issue=D1 |pages=D1113-6 |year=2015 |doi=10.1093/nar/gku1057 |pmid=25361974 |pmc=PMC4383899}}</ref><ref name="BarrettNCBI13">{{cite journal |title=NCBI GEO: Archive for functional genomics data sets -- Update |journal=Nucleic Acids Research |author=Barrett, T.; Wilhite, S.E.; Ledoux, P. et al. 
|volume=41 |issue=D1 |pages=D991-5 |year=2013 |doi=10.1093/nar/gks1193 |pmid=23193258 |pmc=PMC3531084}}</ref><ref name="NCBIDatabase15">{{cite journal |title=Database resources of the National Center for Biotechnology Information |journal=Nucleic Acids Research |author=NCBI Resource Coordinators |volume=43 |issue=D1 |pages=D6–17 |year=2015 |doi=10.1093/nar/gku1130 |pmid=25398906 |pmc=PMC4383943}}</ref> | ||

The challenges of capturing metadata and organizing high-throughput genomic datasets are not unique to NIH-funded consortia and data repositories. Since many researchers submit their high-throughput data to data repositories and scientific data publications, tools and [[Informatics|data management software]], such as the Investigation Assay Study tools (ISA-tools) and [[laboratory information management system]]s (LIMS), provide resources aimed to help [[Laboratory|laboratories]] organize their data for better compatibility with these data repositories.<ref name="Rocca-SerraISA10">{{cite journal |title=ISA software suite: Supporting standards-compliant experimental annotation and enabling curation at the community level |journal=Bioinformatics |author=Rocca-Serra, P.; Brandizi, M.; Maquire, E. et al. |volume=26 |issue=18 |pages=2354-6 |year=2010 |doi=10.1093/bioinformatics/btq415 |pmid=20679334 |pmc=PMC2935443}}</ref> In addition, there have been multiple efforts to define a minimal set of metadata for genomic assays, including standards proposed by the Functional Genomics Data society (FGED; http://fged.org/projects/minseqe/) and the Global Alliance for Genomics and Health (GA4GH; https://github.com/ga4gh/schemas), to improve interoperability among data generated by diverse groups. | |||

Here, we describe how metadata are organized at the ENCODE DCC and define the metadata standard that is used to describe the experimental assays and computational analyses generated by the ENCODE project. The metadata standard includes the principles driving the selection of metadata as well as how these metadata are validated and used by the DCC. Understanding the principles and data organization will help improve the accessibility of the ENCODE datasets as well as provide transparency to the data generation processes. This understanding will allow integration of the diverse data within the ENCODE consortium as well as integration with related assays from other large-scale consortium projects and individual labs. | |||

==Metadata describing ENCODE assays== | |||

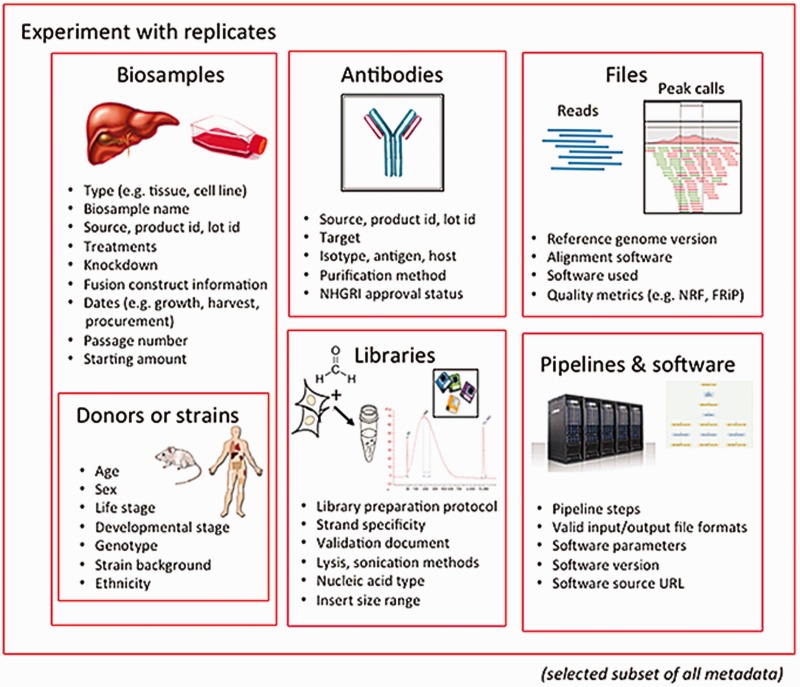

The categories of metadata currently being collected by the ENCODE DCC builds on the set collected during the previous phases of the project. During the earlier phases, a core set of metadata describing the assays, cell types and antibodies were submitted to the ENCODE DCC.<ref name="RosenbloomENCODE12">{{cite journal |title=ENCODE whole-genome data in the UCSC Genome Browser: Update 2012 |journal=Nucleic Acids Research |author=Rosenbloom, K.R.; Dreszer, T.R.; Long, J.C. et al. |volume=40 |issue=D1 |pages=D912-7 |year=2012 |doi=10.1093/nar/gkr1012 |pmid=22075998 |pmc=PMC3245183}}</ref> The current metadata set expands the number of categories into the following major organizational units: biosamples, libraries, antibodies, experiments, data files and pipelines (Figure 1). Only a selected set of metadata are included below as examples, to give a sense of the breadth and depth of our approach. | |||

[[File:Fig1 Hong Database2016 2016.jpg|800px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="800px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 1.''' Major categories of metadata. The metadata captured for ENCODE can be grouped into the following major areas: biosamples and donors/strains (formerly ‘cell types’), libraries, antibodies, data files and pipelines and software. These categories are then grouped into an experiment with replicates. Only a subset of metadata is listed in the figure to provide an overview of the breadth and depth of metadata collected for an assay. The full set of metadata can be viewed at https://github.com/ENCODE-DCC/encoded/tree/master/src/encoded/schemas.</blockquote> | |||

|- | |||

|} | |||

|} | |||

The biological material used as input material for an experimental assay is called a biosample. This category of metadata is an expansion of the ‘cell types’ captured in previous phases of the ENCODE project.<ref name="RosenbloomENCODE12" /> Biosample metadata includes non-identifying information about the donors (if the sample is from a human) and details of strain backgrounds (if the sample is from model organisms) (Figure 1). Metadata for the biosample includes the source of the material (such as a company name or a lab), how it was handled in the lab (such as number of passages or starting amounts) and any modifications to the biological material (such as the integration of a fusion gene or the application of a treatment). | |||

The library refers to the nucleic acid material that is extracted from the biosample and contains details of the experimental methods used to prepare that nucleic acid for sequencing. Details of the specific population or sub-population of nucleic acid (e.g. DNA, rRNA, nuclear RNA, etc.) and how this material is prepared for sequencing libraries is captured as metadata. | |||

The metadata recorded for antibodies include the source of the antibody, as well as the product number and the specific lot of the antibody if acquired commercially. Capturing the antibody lot id is critical because there is potential for lot-to-lot variation in the specificity and sensitivity of an antibody. Antibody metadata include characterizations of the antibody performed by the labs, which examines this specificity and sensitivity of an antibody, as defined by the ENCODE consortium.<ref name="LandtChIP12">{{cite journal |title=ChIP-seq guidelines and practices of the ENCODE and modENCODE consortia |journal=Genome Research |author=Landt, S.G.; Marinov, G.K.; Kundaje, A. et al. |volume=22 |issue=9 |pages=1813-31 |year=2012 |doi=10.1101/gr.136184.111 |pmid=22955991 |pmc=PMC3431496}}</ref> | |||

The experiment refers to one or more replicates that are grouped together along with the raw data files and processed data files. Each replicate that is part of an experiment will be performed using the same experimental method or assay (e.g. ChIP-seq). A single replicate, which can be designated as a biological or technical replicate, is linked to a specific library and an antibody used in immunoprecipitation-based assay (e.g. ChIP-seq). Since the library is derived from the biosample, the details of the biosample are affiliated with the replicate through the library used. | |||

A single experiment can include multiple files. These files include, but are not limited to, the raw data (typically sequence reads), the mapping of these sequence reads against the reference genome, and genomic features that are represented by these reads (often called ‘signals’ or ‘peaks’). Metadata pertaining to files include the file format and a short description, known as an output type, of the contents of the file. | |||

In addition to capturing the format of the files generated (e.g. fastq, BAM, bigWig), metadata regarding quality control metrics, the software, the version of the software and pipelines used to generate the file are included as part of the pipeline-related set of metadata. The metadata for a given file also consist of other files that are connected through input and output relationships. | |||

==References== | ==References== | ||

Revision as of 21:02, 6 September 2016

| Full article title | Principles of metadata organization at the ENCODE data coordination center |
|---|---|
| Journal | Database |
| Author(s) | Hong, Eurie L.; Sloan, Cricket A.; Chan, Esther T.; Davidson, Jean M.; Malladi, Venkat S.; Strattan, J. Seth; Hitz, Benjamin C.; Gabdank, Idan; Narayanan, Aditi K.; Ho, Marcus; Lee, Brian T.; Rowe, Laurence D.; Dreszer, Timothy R.; Roe, Greg R.; Podduturi, Nikhil R.; Tanaka, Forrest; Hilton, Jason A.; Cherry, J. Michael |
| Author affiliation(s) | Stanford University, University of California - Santa Cruz |
| Primary contact | Email: cherry at stanford dot edu |
| Year published | 2016 |
| Page(s) | baw001 |
| DOI | 10.1093/database/baw001 |
| ISSN | 1758-0463 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | http://database.oxfordjournals.org/content/2016/baw001 |
| Download | http://database.oxfordjournals.org/content/2016/baw001.full.pdf+html (PDF) |

This article should not be considered complete until this message box has been removed. This is a work in progress.

Abstract

The Encyclopedia of DNA Elements (ENCODE) Data Coordinating Center (DCC) is responsible for organizing, describing and providing access to the diverse data generated by the ENCODE project. The description of these data, known as metadata, includes the biological sample used as input, the protocols and assays performed on these samples, the data files generated from the results and the computational methods used to analyze the data. Here, we outline the principles and philosophy used to define the ENCODE metadata in order to create a metadata standard that can be applied to diverse assays and multiple genomic projects. In addition, we present how the data are validated and used by the ENCODE DCC in creating the ENCODE Portal (https://www.encodeproject.org/).

Database URL: www.encodeproject.org

Introduction

The goal of the Encyclopedia of DNA Elements (ENCODE) project is to annotate functional regions in the human and mouse genomes. Functional regions include those that encode protein or non-coding RNA gene products as well as regions that could have a regulatory role.[1][2] To this end, the project has surveyed the landscape of the human genome using over 35 high-throughput experimental methods in more than 250 different cell and tissue types, resulting in over 4000 experiments.[1][3] These datasets are submitted to a Data Coordinating Center (DCC), whose role is to describe, organize and provide access to these diverse datasets.[4]

A description of these datasets, collectively known as metadata, encompasses, but is not limited to, the identification of the experimental method used to generate the data, the sex and age of the donor from whom a skin biopsy was taken, and the software used to align the sequencing reads to a reference genome. Defining and organizing the set of metadata that is relevant, informative and applicable to diverse experimental techniques is challenging. These challenges are not unique to the ENCODE DCC. Several major experimental consortia similar in scale to the ENCODE project exist, as well as public database projects that collect and distribute high-throughput genomic data. Analogous to the ENCODE project, the modENCODE project began in 2007 to identify functional elements in the model organisms Caenorhabditis elegans and Drosophila melanogaster. The modENCODE DCC faced similar challenges in trying to integrate diverse data types generated with a variety of experimental techniques.[5] Other consortia, such as the Roadmap Epigenomics Mapping Centers, have also been tasked with defining their metadata.[6] In addition, databases such as ArrayExpress at the EBI, GEO and SRA at the NCBI, Data Dryad (http://datadryad.org/) and FigShare (http://figshare.com/) serve as data repositories, accepting diverse data types from large consortia as well as from individual research laboratories.[7][8][9]

The challenges of capturing metadata and organizing high-throughput genomic datasets are not unique to NIH-funded consortia and data repositories. Since many researchers submit their high-throughput data to data repositories and scientific data publications, tools and data management software, such as the Investigation Study Assay tools (ISA-tools) and laboratory information management systems (LIMS), help laboratories organize their data for better compatibility with these data repositories.[10] In addition, there have been multiple efforts to define a minimal set of metadata for genomic assays, including standards proposed by the Functional Genomics Data Society (FGED; http://fged.org/projects/minseqe/) and the Global Alliance for Genomics and Health (GA4GH; https://github.com/ga4gh/schemas), to improve interoperability among data generated by diverse groups.

Here, we describe how metadata are organized at the ENCODE DCC and define the metadata standard that is used to describe the experimental assays and computational analyses generated by the ENCODE project. The metadata standard includes the principles driving the selection of metadata as well as how these metadata are validated and used by the DCC. Understanding the principles and data organization will help improve the accessibility of the ENCODE datasets as well as provide transparency to the data generation processes. This understanding will allow integration of the diverse data within the ENCODE consortium as well as integration with related assays from other large-scale consortium projects and individual labs.

Metadata describing ENCODE assays

The categories of metadata currently being collected by the ENCODE DCC build on the set collected during the previous phases of the project. During the earlier phases, a core set of metadata describing the assays, cell types and antibodies was submitted to the ENCODE DCC.[11] The current metadata set expands the number of categories into the following major organizational units: biosamples, libraries, antibodies, experiments, data files and pipelines (Figure 1). Only a selected set of metadata is included below as examples, to give a sense of the breadth and depth of our approach.

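Figure 1. Major categories of metadata. The metadata captured for ENCODE can be grouped into the following major areas: biosamples and donors/strains (formerly ‘cell types’), libraries, antibodies, data files and pipelines and software. These categories are then grouped into an experiment with replicates. Only a subset of metadata is listed in the figure to provide an overview of the breadth and depth of metadata collected for an assay. The full set of metadata can be viewed at https://github.com/ENCODE-DCC/encoded/tree/master/src/encoded/schemas.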

The biological material used as input for an experimental assay is called a biosample. This category of metadata is an expansion of the ‘cell types’ captured in previous phases of the ENCODE project.[11] Biosample metadata include non-identifying information about the donors (if the sample is from a human) and details of strain backgrounds (if the sample is from a model organism) (Figure 1). They also include the source of the material (such as a company name or a lab), how it was handled in the lab (such as number of passages or starting amounts) and any modifications to the biological material (such as the integration of a fusion gene or the application of a treatment).

The library refers to the nucleic acid material that is extracted from the biosample; its metadata contain details of the experimental methods used to prepare that nucleic acid for sequencing. Details of the specific population or sub-population of nucleic acid (e.g. DNA, rRNA, nuclear RNA, etc.) and how this material is prepared for sequencing libraries are captured as metadata.

The metadata recorded for antibodies include the source of the antibody, as well as the product number and the specific lot of the antibody if acquired commercially. Capturing the antibody lot ID is critical because there is potential for lot-to-lot variation in the specificity and sensitivity of an antibody. Antibody metadata also include characterizations of the antibody performed by the labs, which examine the specificity and sensitivity of the antibody, as defined by the ENCODE consortium.[12]

The experiment refers to one or more replicates that are grouped together along with the raw data files and processed data files. Each replicate that is part of an experiment is performed using the same experimental method or assay (e.g. ChIP-seq). A single replicate, which can be designated as a biological or technical replicate, is linked to a specific library and, for immunoprecipitation-based assays (e.g. ChIP-seq), to the antibody used. Since the library is derived from the biosample, the details of the biosample are affiliated with the replicate through the library used.
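The linkage between experiment, replicate, library and biosample can be sketched as a small data model. This is a minimal illustration rather than the ENCODE schema itself: the class names, field names and sample values below are hypothetical and were chosen only to mirror the prose.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Biosample:
    name: str    # e.g. a cell line or tissue
    source: str  # company or lab that supplied the material


@dataclass(frozen=True)
class Library:
    nucleic_acid: str     # e.g. "DNA" or "nuclear RNA"
    biosample: Biosample  # the material the library was extracted from


@dataclass(frozen=True)
class Replicate:
    library: Library
    biological_replicate: int
    technical_replicate: int
    antibody_lot: Optional[str] = None  # only set for immunoprecipitation-based assays


@dataclass
class Experiment:
    assay: str  # every replicate in the experiment shares this assay
    replicates: List[Replicate] = field(default_factory=list)

    def biosamples(self) -> List[Biosample]:
        """Biosamples reached through each replicate's library, without duplicates."""
        seen: List[Biosample] = []
        for rep in self.replicates:
            sample = rep.library.biosample
            if sample not in seen:
                seen.append(sample)
        return seen


# Illustrative values only; these are not real ENCODE records.
sample = Biosample(name="example cell line", source="example lab")
library = Library(nucleic_acid="DNA", biosample=sample)
experiment = Experiment(
    assay="ChIP-seq",
    replicates=[
        Replicate(library, 1, 1, antibody_lot="LOT-001"),
        Replicate(library, 2, 1, antibody_lot="LOT-001"),
    ],
)
```

In this sketch the replicate never stores biosample details directly; `experiment.biosamples()` recovers them by following the library, which is the indirection the paragraph above describes.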

A single experiment can include multiple files. These files include, but are not limited to, the raw data (typically sequence reads), the mapping of these sequence reads against the reference genome, and genomic features that are represented by these reads (often called ‘signals’ or ‘peaks’). Metadata pertaining to files include the file format and a short description, known as an output type, of the contents of the file.

In addition to capturing the format of the files generated (e.g. fastq, BAM, bigWig), metadata regarding quality control metrics, the software, the version of the software and the pipelines used to generate the file are included as part of the pipeline-related set of metadata. The metadata for a given file also record the other files to which it is connected through input and output relationships.
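Input and output relationships between files form a provenance chain that can be walked back to the raw data. The following is a minimal sketch under stated assumptions: the file names, the `derived_from` key and the software name are hypothetical placeholders, not identifiers taken from the ENCODE schemas.

```python
from typing import Dict, List

# Hypothetical file records keyed by file name.
# "derived_from" lists the files that were used as input to produce each file.
files: Dict[str, dict] = {
    "reads.fastq": {
        "format": "fastq",
        "output_type": "reads",
        "derived_from": [],
    },
    "aligned.bam": {
        "format": "bam",
        "output_type": "alignments",
        "derived_from": ["reads.fastq"],
        "software": ("example-aligner", "1.0"),  # name and version, illustrative
    },
    "signal.bigWig": {
        "format": "bigWig",
        "output_type": "signal",
        "derived_from": ["aligned.bam"],
    },
}


def upstream(name: str, records: Dict[str, dict]) -> List[str]:
    """Return every file that `name` was derived from, directly or indirectly."""
    found: List[str] = []
    seen = set()
    stack = list(records[name]["derived_from"])
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # a file can be reached through more than one path
        seen.add(current)
        found.append(current)
        stack.extend(records[current]["derived_from"])
    return found
```

Calling `upstream("signal.bigWig", files)` walks from the signal file through the alignment to the raw reads, so a user starting from a processed file can find the inputs and the software recorded at each step.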

References

1. ENCODE Project Consortium (2012). "An integrated encyclopedia of DNA elements in the human genome". Nature 489 (7414): 57-74. doi:10.1038/nature11247. PMC3439153. PMID 22955616. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3439153.

2. Yue, F.; Cheng, Y.; Breschi, A. et al. (2014). "A comparative encyclopedia of DNA elements in the mouse genome". Nature 515 (7527): 355-64. doi:10.1038/nature13992. PMC4266106. PMID 25409824. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4266106.

3. ENCODE Project Consortium et al. (2007). "Identification and analysis of functional elements in 1% of the human genome by the ENCODE pilot project". Nature 447 (7146): 799-816. doi:10.1038/nature05874. PMC2212820. PMID 17571346. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2212820.

4. Sloan, C.A.; Chan, E.T.; Davidson, J.M. et al. (2016). "ENCODE data at the ENCODE portal". Nucleic Acids Research 44 (D1): D726-32. doi:10.1093/nar/gkv1160. PMC4702836. PMID 26527727. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4702836.

5. Washington, N.L.; Stinson, E.O.; Perry, M.D. et al. (2011). "The modENCODE Data Coordination Center: Lessons in harvesting comprehensive experimental details". Database 2011: bar023. doi:10.1093/database/bar023. PMC3170170. PMID 21856757. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3170170.

6. Bernstein, B.E.; Stamatoyannopoulos, J.A.; Costello, J.F. et al. (2010). "The NIH Roadmap Epigenomics Mapping Consortium". Nature Biotechnology 28 (10): 1045-8. doi:10.1038/nbt1010-1045. PMC3607281. PMID 20944595. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3607281.

7. Kolesnikov, N.; Hastings, E.; Keays, M. et al. (2015). "ArrayExpress update -- Simplifying data submissions". Nucleic Acids Research 43 (D1): D1113-6. doi:10.1093/nar/gku1057. PMC4383899. PMID 25361974. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4383899.

8. Barrett, T.; Wilhite, S.E.; Ledoux, P. et al. (2013). "NCBI GEO: Archive for functional genomics data sets -- Update". Nucleic Acids Research 41 (D1): D991-5. doi:10.1093/nar/gks1193. PMC3531084. PMID 23193258. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3531084.

9. NCBI Resource Coordinators (2015). "Database resources of the National Center for Biotechnology Information". Nucleic Acids Research 43 (D1): D6-17. doi:10.1093/nar/gku1130. PMC4383943. PMID 25398906. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4383943.

10. Rocca-Serra, P.; Brandizi, M.; Maguire, E. et al. (2010). "ISA software suite: Supporting standards-compliant experimental annotation and enabling curation at the community level". Bioinformatics 26 (18): 2354-6. doi:10.1093/bioinformatics/btq415. PMC2935443. PMID 20679334. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2935443.

11. Rosenbloom, K.R.; Dreszer, T.R.; Long, J.C. et al. (2012). "ENCODE whole-genome data in the UCSC Genome Browser: Update 2012". Nucleic Acids Research 40 (D1): D912-7. doi:10.1093/nar/gkr1012. PMC3245183. PMID 22075998. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3245183.

12. Landt, S.G.; Marinov, G.K.; Kundaje, A. et al. (2012). "ChIP-seq guidelines and practices of the ENCODE and modENCODE consortia". Genome Research 22 (9): 1813-31. doi:10.1101/gr.136184.111. PMC3431496. PMID 22955991. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3431496.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added.