Journal:Semantics for an integrative and immersive pipeline combining visualization and analysis of molecular data


[[File:Fig1 Trellet JOfIntegBioinfo2018 15-2.jpg|600px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="600px" | |||

|- | |||

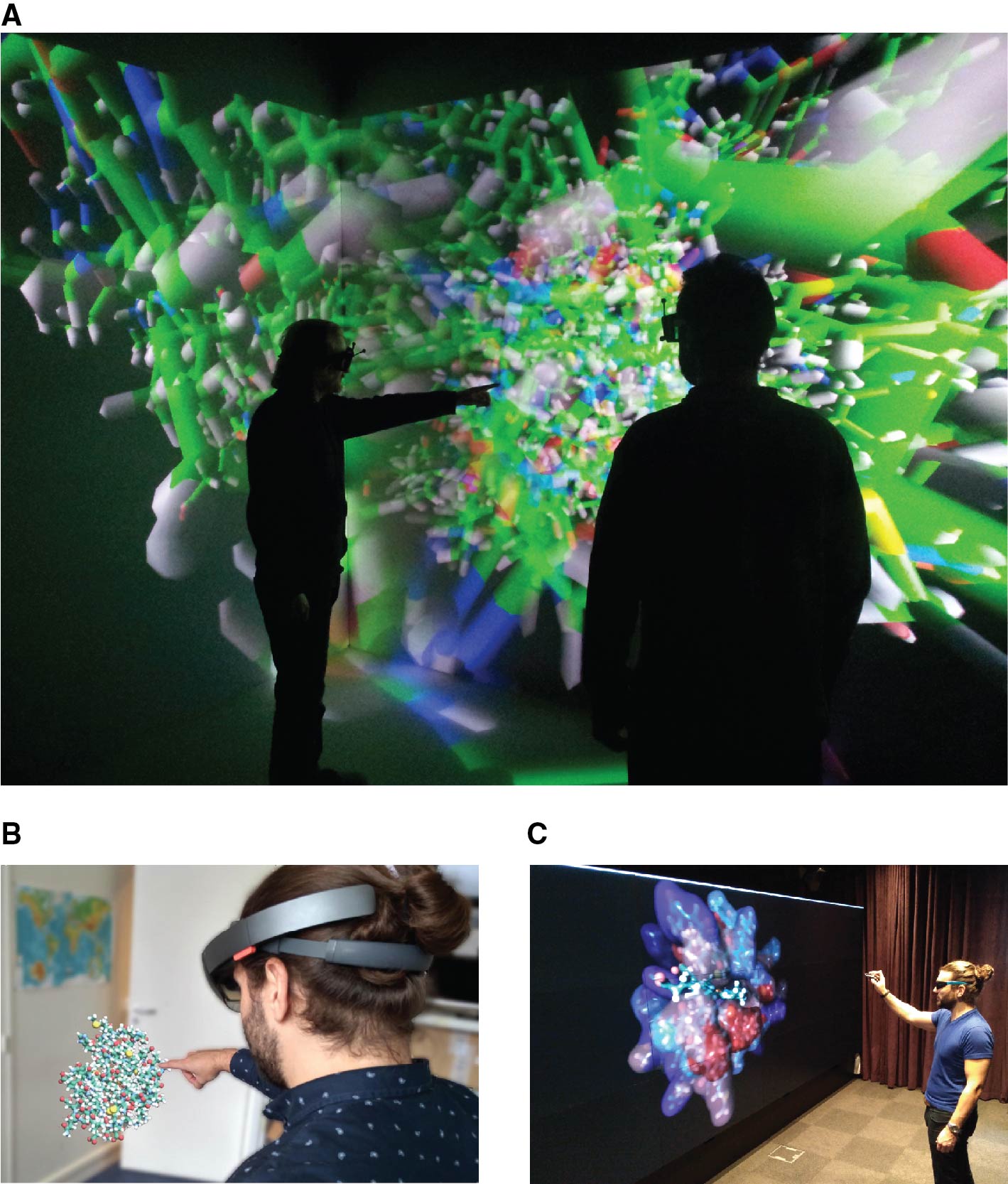

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 1.''' Immersive, augmented reality, and screen wall environments used for molecular visualization: (A) EVE platform, a multi-user CAVE-system composed of 4 screens (LIMSI-CNRS/VENISE team, Orsay), (B) Microsoft Hololens and (C) screen wall of 8.3 m<sup>2</sup> composed of 12 screens at full HD resolution with 120 Hz refresh rate in stereoscopy (IBPC-CNRS/LBT, Paris).</blockquote> | |||

|- | |||

|} | |||

|} | |||


Revision as of 02:42, 5 March 2019

| Full article title | Semantics for an integrative and immersive pipeline combining visualization and analysis of molecular data |
|---|---|
| Journal | Journal of Integrative Bioinformatics |
| Author(s) | Trellet, Mikael; Férey, Nicolas; Flotyński, Jakub; Baaden, Marc; Bourdot, Patrick |
| Author affiliation(s) | Bijvoet Center for Biomolecular Research, Université Paris Sud, Poznań Univ. of Economics and Business, Laboratoire de Biochimie Théorique |
| Primary contact | Email: m dot e dot trellet at uu dot nl |
| Year published | 2018 |
| Volume and issue | 15(2) |
| Page(s) | 20180004 |
| DOI | 10.1515/jib-2018-0004 |
| ISSN | 1613-4516 |
| Distribution license | Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International |
| Website | https://www.degruyter.com/view/j/jib.2018.15.issue-2/jib-2018-0004/jib-2018-0004.xml |
| Download | https://www.degruyter.com/downloadpdf/j/jib.2018.15.issue-2/jib-2018-0004/jib-2018-0004.xml (PDF) |

Abstract

Recent advances in structural biology have significantly increased the throughput and complexity of the data that scientists must handle. Combining and analyzing such heterogeneous data has become a major drain on their time. However, few efforts have been made to offer an alternative to the standard compartmentalized tools that scientists use to explore their data, which require constant switching back and forth between tools. We propose here an integrated pipeline, designed specifically for immersive environments, that promotes direct interaction with semantically linked 2D and 3D heterogeneous data displayed in a common working space. The creation of a semantic definition describing the content and the context of a molecular scene leads to an intelligent system where data are (1) combined through pre-existing or inferred links present in our hierarchical definition of the concepts, (2) enriched with suitable and adaptive analyses proposed to the user with respect to the current task, and (3) interactively presented in a single working environment for exploration.

Keywords: virtual reality, semantics for interaction, structural biology

Introduction

Recent years have seen a profound change in the way structural biologists interact with their data. New techniques that capture the structure and dynamics of biomolecules have reached an extraordinarily high throughput of structural data.[1][2] Scientists must combine and analyze data flows from different sources to draw their hypotheses and conclusions. However, despite this increasing complexity, they still rely mainly on compartmentalized tools that visualize or analyze only limited portions of their data. This situation leads to a constant back and forth between the different tools and their associated environments. Consequently, a significant amount of time is spent transforming data to match the heterogeneous input types that each tool accepts.

There is therefore a strong need for platforms capable of handling this intricate data flow. In structural biology, numerical simulations can now deal with very large and heterogeneous molecular structures. These molecular assemblies may be composed of several million particles and consist of many different types of molecules, including a biologically realistic environment. This overall complexity raises the need to go beyond common visualization solutions and move towards integrated exploration systems where visualization and analysis can be merged.

Immersive environments play an important role in this context, both providing a better comprehension of the three-dimensional structure of molecules and offering new interaction techniques that reduce the number of data manipulations experts must perform (see Figure 1). A few studies have taken advantage of recent developments in virtual reality to enhance some structural biology tasks. Visualization is the first and most obvious task to have been improved through new adaptive stereoscopic screens and immersive environments, plunging experts into the very center of their molecules.[3][4][5][6][7] Structure manipulations during specific docking experiments have been improved through the use of haptic devices and audio feedback to drive a simulation.[8] However, although 3D objects can be represented and manipulated fairly easily in such environments, integrating analytical values (energies, distance to reference, etc.), which are 2D by nature, adds complexity and remains an unsolved problem. As a consequence, no specific development has been made to set up an immersive platform where the expert could manipulate data coming from different sources to accelerate and improve the development of new hypotheses.


This lack of development can also be partly explained by the significant differences between the data handled by 3D visualization software packages and by analytical tools. On the one hand, 3D visualization solutions such as PyMOL[9], VMD[10], and UnityMol[11] explore and manipulate the 3D structure coordinates composing the molecular complex to be displayed. The scene seen by the user is composed of 3D objects representing the overall shape of a particular molecule and its environment in a particular state. This scene is static if we are interested in only one state of a given molecule, but is often dynamic when a whole simulated trajectory of conformational changes over time is considered. Analysis tools, on the other hand, handle raw numbers, vectors, and matrices in various formats and dimensions, from various input sources depending on the analysis pipeline used to generate them. Their outputs are graphical representations of trends or comparisons between parameters or properties in 1 to N dimensions, formatted so that experts can quickly understand the information and use it to guide their hypotheses.
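
To make the contrast concrete, the following is a minimal sketch of how an analysis tool might reduce a dynamic 3D scene to a 1D series. It assumes a trajectory stored as a list of frames, each frame being a list of (x, y, z) atom coordinates; the function name `distance_series` and the toy data are hypothetical and are not taken from any of the packages cited above.

```python
import math


def distance_series(trajectory, i, j):
    """Return the distance between atoms i and j for every frame.

    `trajectory` is assumed to be a list of frames, each frame a list of
    (x, y, z) tuples. This layout is an illustrative assumption.
    """
    return [math.dist(frame[i], frame[j]) for frame in trajectory]


# Toy two-frame trajectory with two atoms (made-up coordinates).
trajectory = [
    [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0)],
    [(0.0, 0.0, 0.0), (6.0, 8.0, 0.0)],
]
series = distance_series(trajectory, 0, 1)
```

The 3D viewer works on `trajectory` itself, while a plotting tool would only ever see `series`, which illustrates why the two kinds of software tend to exchange data through separate formats.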

Some of the aforementioned software packages do provide tools to display analyses as static plots alongside the 3D visualization space. However, interactivity is limited, and flexibility depends mainly on the user's ability to create and tune scripts to improve the information displayed. We believe that a major improvement over the tools available today would be a scenario where the 3D visualization of a molecular event is coupled, in a single working environment, with monitoring of the evolution of analytical properties such as distance variations and the progression of simulation parameters. Any action the expert performs in one space (either 3D visualization or analysis) would have a coherent graphical impact on the other space, filtering or highlighting the parameter or subset of objects targeted by the expert.
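
The coupling described above can be sketched with a shared selection that notifies both spaces. This is only an illustration under stated assumptions: the class names `SharedSelection`, `StructureView`, and `PlotView` are hypothetical stand-ins, not components of the authors' platform, and the distance values are made up.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class SharedSelection:
    """Holds the selected residue ids and notifies every subscribed view."""
    selected: Set[int] = field(default_factory=set)
    listeners: List[Callable[[Set[int]], None]] = field(default_factory=list)

    def subscribe(self, callback):
        self.listeners.append(callback)

    def select(self, residue_ids):
        self.selected = set(residue_ids)
        for callback in self.listeners:
            callback(self.selected)


class StructureView:
    """Stand-in for the 3D space: records which residues are highlighted."""

    def __init__(self, selection):
        self.highlighted = set()
        selection.subscribe(self.on_selection)

    def on_selection(self, residue_ids):
        self.highlighted = set(residue_ids)


class PlotView:
    """Stand-in for the analysis space: shows only the selected data points."""

    def __init__(self, selection, distances):
        self.distances = distances  # residue id -> distance to reference
        self.visible = dict(distances)
        selection.subscribe(self.on_selection)

    def on_selection(self, residue_ids):
        self.visible = {r: d for r, d in self.distances.items() if r in residue_ids}


selection = SharedSelection()
structure = StructureView(selection)
plot = PlotView(selection, {10: 1.2, 11: 3.4, 12: 0.8})

# A selection made in either space updates both views.
selection.select([10, 12])
```

Routing every selection through one shared object is one simple way to keep the two spaces consistent; a real immersive system would also have to map device input to `select` calls.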

We have developed a pipeline that aims to bring the visualization and analysis of heterogeneous data from molecular simulations into the same immersive environment. This pipeline addresses the lack of integrated tools that efficiently combine the stereoscopic visualization of 3D objects with the representation of, and interaction with, their associated physicochemical and geometric properties (both 2D and 3D) generated by standard analysis tools. These properties are either mapped onto the 3D objects (shape, colour, etc.) or displayed in a dedicated space integrated in the working environment (second mobile screen, 2D integration in the virtual scene, etc.).

In this pipeline, we systematically combine structural and analytical data by using a semantic definition of the content (scientific data) and the context (immersive environments and interfaces). Such a high-level definition can be translated into an ontology from which instances or individuals of ontological concepts can then be created from real data to build a database of linked data for a defined phenomenon. On top of the data collection, an extensive list of possible interactions and actions defined in the ontology and based on the provided data can be computed and presented to the user.
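
As a rough illustration of this idea, the sketch below stores individuals as subject-predicate-object triples and derives the actions available for an individual from the concepts it belongs to. The concept names, predicates, and action lists are hypothetical placeholders rather than the ontology used by the authors, and a real implementation would more likely rely on an RDF/OWL toolkit than on this plain-Python store.

```python
# Hypothetical mini-ontology: concept name -> actions allowed on its individuals.
ONTOLOGY_ACTIONS = {
    "Residue": ["highlight", "plot_distance"],
    "Chain": ["highlight", "hide"],
}


class LinkedData:
    """Tiny in-memory store of (subject, predicate, object) triples."""

    def __init__(self):
        self.triples = set()

    def add(self, subject, predicate, obj):
        self.triples.add((subject, predicate, obj))

    def objects(self, subject, predicate):
        """Return all objects linked to `subject` through `predicate`."""
        return sorted(o for s, p, o in self.triples if s == subject and p == predicate)

    def actions_for(self, individual):
        """List the actions the ontology allows for an individual's concepts."""
        actions = []
        for concept in self.objects(individual, "is_a"):
            actions.extend(ONTOLOGY_ACTIONS.get(concept, []))
        return actions


# Individuals created from (made-up) simulation data.
store = LinkedData()
store.add("res_42", "is_a", "Residue")
store.add("res_42", "part_of", "chain_A")
store.add("chain_A", "is_a", "Chain")
store.add("res_42", "has_distance_to_reference", 2.7)

available = store.actions_for("res_42")
```

Here `available` lists only the actions defined for residues, which mirrors how a list of possible interactions could be computed from the data and then presented to the user.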

The creation of a semantic definition describing the content and the context of an immersive molecular scene leads to an intelligent system where data and 3D molecular representations are (1) combined through pre-existing or inferred links present in our hierarchical definition of the concepts, (2) enriched with suitable and adaptive analyses proposed to the user with respect to the current task, and (3) manipulated by direct interaction, allowing 3D visualization, exploration, and analysis to be performed in a single immersive environment.

Our method reduces the need for complex interactions by considering which actions the user can perform on the data currently being manipulated and which means of interaction the immersive environment provides.

We present our developments and the first outcomes of our work in three main sections. The first section aims to provide a complete background on the use of semantics in VR/AR systems and structural biology. The second section describes and justifies our implementation choices and how we linked the different technologies highlighted in the first section. Finally, the third section shows several applications of our platform and its capabilities to address the issues raised previously.

References

1. Zhao, G.; Perilla, J.R.; Yufenyuy, E.L. et al. (2013). "Mature HIV-1 capsid structure by cryo-electron microscopy and all-atom molecular dynamics". Nature 497 (7451): 643–6. doi:10.1038/nature12162. PMC3729984. PMID 23719463. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3729984.

2. Zhang, J.; Ma, J.; Liu, D. et al. (2017). "Structure of phycobilisome from the red alga Griffithsia pacifica". Nature 551 (7678): 57–63. doi:10.1038/nature24278. PMID 29045394.

3. van Dam, A.; Forsberg, A.S.; Laidlaw, D.H. et al. (2000). "Immersive VR for scientific visualization: A progress report". IEEE Computer Graphics and Applications 20 (6): 26–52. doi:10.1109/38.888006.

4. Stone, J.E.; Kohlmeyer, A.; Vandivort, K.L.; Schulten, K. (2010). "Immersive molecular visualization and interactive modeling with commodity hardware". Proceedings of the 6th International Conference on Advances in Visual Computing: 382–93. doi:10.1007/978-3-642-17274-8_38.

5. O'Donoghue, S.I.; Goodsell, D.S.; Frangakis, A.S. et al. (2010). "Visualization of macromolecular structures". Nature Methods 7 (3 Suppl.): S42–55. doi:10.1038/nmeth.1427. PMID 20195256.

6. Hirst, J.D.; Glowacki, D.R.; Baaden, M. et al. (2014). "Molecular simulations and visualization: Introduction and overview". Faraday Discussions 169: 9–22. doi:10.1039/c4fd90024c. PMID 25285906.

7. Goddard, T.D.; Huang, C.C.; Meng, E.C. et al. (2018). "UCSF ChimeraX: Meeting modern challenges in visualization and analysis". Protein Science 27 (1): 14–25. doi:10.1002/pro.3235. PMC5734306. PMID 28710774. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5734306.

8. Férey, N.; Nelson, J.; Martin, C. et al. (2009). "Multisensory VR interaction for protein-docking in the CoRSAIRe project". Virtual Reality 13: 273. doi:10.1007/s10055-009-0136-z.

9. DeLano, W. (4 September 2000). "The PyMOL Molecular Graphics System". http://pymol.sourceforge.net/overview/index.htm.

10. Humphrey, W.; Dalke, A.; Schulten, K. et al. (1996). "VMD: Visual molecular dynamics". Journal of Molecular Graphics 14 (1): 33–8. doi:10.1016/0263-7855(96)00018-5.

11. Lv, Z.; Tek, A.; Da Silva, F. et al. (2013). "Game on, science - How video game technology may help biologists tackle visualization challenges". PLoS One 8 (3): e57990. doi:10.1371/journal.pone.0057990. PMC3590297. PMID 23483961. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3590297.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. Some grammar and punctuation were cleaned up to improve readability. In some cases important information was missing from the references, and that information was added. Nothing else was changed, in accordance with the NoDerivatives portion of the license.