Journal:An overview of data warehouse and data lake in modern enterprise data management

| Full article title | An overview of data warehouse and data lake in modern enterprise data management |
|---|---|
| Journal | Big Data and Cognitive Computing |
| Author(s) | Nambiar, Athira; Mundra, Divyansh |
| Author affiliation(s) | SRM Institute of Science and Technology |
| Primary contact | Email: athiram at srmist dot edu dot in |
| Year published | 2022 |
| Volume and issue | 6(4) |
| Article # | 132 |
| DOI | 10.3390/bdcc6040132 |
| ISSN | 2504-2289 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.mdpi.com/2504-2289/6/4/132 |
| Download | https://www.mdpi.com/2504-2289/6/4/132/pdf (PDF) |



Abstract

Data is the lifeblood of any organization. In today’s world, organizations recognize the vital role of data in modern business intelligence systems for making meaningful decisions and staying competitive in the field. Efficient and optimal data analytics gives an organization a competitive edge in its performance and services. Major organizations generate, collect, and process vast amounts of data, falling under the category of "big data." Managing and analyzing the sheer volume and variety of big data is a cumbersome process. At the same time, proper utilization of the vast collection of an organization’s information can generate meaningful insights into business tactics. In this regard, two of the more popular data management systems in the area of big data analytics, the data warehouse and the data lake, act as platforms to accumulate the big data generated and used by organizations. Although seemingly similar, the two differ in terms of their characteristics and applications.

This article presents a detailed overview of the roles of data warehouses and data lakes in modern enterprise data management. We detail the definitions, characteristics, and related works for the respective data management frameworks. Furthermore, we explain the architecture and design considerations of the current state of the art. Finally, we provide a perspective on the challenges and promising research directions for the future.

Keywords: big data, data warehousing, data lake, enterprise data management, OLAP, ETL tools, metadata, cloud computing, internet of things

Introduction

"Big data analytics" is one of the buzzwords in today’s digital world. It entails examining big data and uncovering any hidden patterns, correlations, etc. available in the data. [1] Big data analytics extracts and analyzes random data sets, forming them into meaningful information. According to statistics, the overall amount of data generated in the world in 2021 was approximately 79 zettabytes, and this is expected to double by 2025. [2] This unprecedented amount of data was the result of a data explosion that occurred during the last decade, wherein data interactions increased by 5,000 percent. [3]

Big data deals with the volume, variety, and velocity of data, while seeking veracity (insightfulness) and value in the data. These are known, in part, as the "Vs" of big data. [4] An unprecedented amount of diverse data is acquired, stored, and processed with high data quality for various application domains. These data originate from business transactions, real-time streaming, social media, video analytics, and text mining, creating a huge amount of semi-structured or unstructured data to be stored in different information silos. [5] The efficient integration and analysis of these data across silos are required to gain complete insight into them. This is an open research topic of interest.

Big data and its related emerging technologies have been changing the way e-commerce and e-services operate and have been opening new frontiers in business analytics and related research. [6] Big data analytics systems play a big role in the modern enterprise management domain, from product distribution to sales and marketing, as well as in the analysis of hidden trends, similarities, and other insights, giving companies the chance to optimize their data and find new opportunities within it. [7] Since organizations with better and more accurate data can make more informed business decisions by looking at market trends and customer preferences, they can gain competitive advantages over others. Hence, organizations invest heavily in artificial intelligence (AI) and big data technologies to strive toward digital transformation and data-driven decision making, which ultimately leads to advanced business intelligence (BI). [6] According to reports, the worldwide big data analytics and BI software applications markets are projected to increase by USD 68 billion and USD 17.6 billion, respectively, by 2024–2025. [8]

Big data repositories exist in many forms, as per the requirements of corporations. [9] An effective data repository needs to unify, regulate, evaluate, and deploy a huge amount of data resources to enhance analytics and query performance. Based on the nature and the application scenario, there are many different types of data repositories outside of traditional relational databases. Two of the more popular data repositories among them are enterprise data warehouses and data lakes. [10,11,12]

A data warehouse (DW) is a data repository which stores structured, filtered, and processed data that has been treated for a specific purpose, whereas a data lake (DL) is a vast pool of data for which the purpose is not or has not yet been defined. [9] In detail, DWs store large amounts of data collected from different sources, typically using predefined schemas. A DW is usually a purpose-built relational database running on specialized hardware, either on the premises of an organization or in the cloud. [13] DWs have been used widely for storing enterprise data and fueling BI and analytics applications. [14,15,16]

Data lakes (DLs) have emerged as big data repositories that store raw data and provide a rich list of functionalities with the help of metadata descriptions. [10] Although the DL is also a form of enterprise data storage, it does not inherently include the analytics features commonly associated with DWs. Instead, DLs are repositories that store raw data in their original formats and provide a common access interface. From the lake, data may flow downstream to a DW to be processed, packaged, and made ready for consumption. Because the DL is a relatively new concept, research discussing its various aspects remains very limited, with much of the discussion appearing in internet articles or blogs.

Although DWs and DLs share some overlapping features and use cases, there are fundamental differences in the data management philosophies, design characteristics, and ideal use conditions for each of these technologies. In this context, this survey paper provides a detailed overview of the DW and DL data management schemes and the differences between them. Furthermore, we consolidate the concepts and give a detailed analysis of different design aspects, various tools and utilities, etc., along with recent developments that have come into existence.

The remainder of this paper is organized as follows. In the next section, the terminology and basic definitions of big data analytics and the data management schemes are analyzed. Furthermore, the related works in the field are also summarized. After this summation, the architectures of both the DW and DL are presented, followed by their key design and practical aspects, presented at length. Afterwards, the various popular tools and services available for enterprise data management are summarized, followed by explanations of the open challenges and promising directions for these technologies. In particular, the pros and cons of various methods are critically discussed, and the observations are presented. Finally, conclusions are drawn from this research effort.

Definitions of big data analytics, data warehouse, and data lake

The definitions and fundamental notions of various data management schemes are provided in this section. Furthermore, related works and review papers on this topic are also summarized.

Big data analytics

With significant advancements in technology has come the unprecedented usage of computer networks, multimedia, the internet of things (IoT), social media, and cloud computing. [17] As a result, a huge amount of data, known as “big data,” has been generated. Effective data processing is required to collect, manage, and analyze these data efficiently. Big data processing is aimed at data mining (i.e., extracting knowledge from large amounts of data), leveraging data management, machine learning (ML), high-performance computing, statistical charting, pattern recognition, etc. The important characteristics of big data (known as the seven "Vs" of big data) are as follows [1]:

- Volume, or the available amount of data;

- Velocity, or the speed of data processing;

- Variety, or the different types of big data;

- Volatility, or the variability of the data;

- Veracity, or the accuracy of the data;

- Visualization, or the depiction of big data-generated insights through visual representation; and

- Value, or the benefits organizations derive from the data.

There are three main kinds of big data processing: batch processing, stream processing, and hybrid processing. [18] In batch processing, data stored in non-volatile memory are processed, and the probability and temporal characteristics of the data conversion processes are decided by the requirements of the problem. In stream processing, the collected data are processed without being stored in non-volatile media, and the temporal characteristics of the data conversion processes are mainly determined by the incoming data rate. This is suitable for domains that require low response times. Hybrid processing combines both the batch and stream processing techniques to achieve high accuracy and a low processing time. [19] Two examples of hybrid big data processing are the Lambda and Kappa architectures. [20] Lambda Architecture processes huge quantities of data by combining batch processing and stream processing methods in a hybrid approach. Kappa Architecture is a simpler alternative to Lambda Architecture, since it leverages the same technology stack to handle both real-time stream processing and historical batch processing, and therefore avoids maintaining two different code bases for the batch and speed layers. The main idea is to facilitate real-time data processing using a single stream-processing engine, thus bypassing the multi-layered Lambda Architecture without compromising the standard quality of service.
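The contrast between the Lambda and Kappa approaches can be illustrated with a minimal Python sketch. It is a toy model under stated assumptions, not an implementation of any particular framework: the event data, the `batch_view` and `serve` functions, and the `SpeedLayer` class are all hypothetical names introduced here for illustration.

```python
from collections import defaultdict

# Hypothetical event log of (user, amount) pairs; the data are illustrative only.
historical_events = [("alice", 10), ("bob", 5), ("alice", 7)]
recent_events = [("bob", 3), ("carol", 4)]


def batch_view(events):
    """Batch layer: recompute totals from all data already persisted."""
    totals = defaultdict(int)
    for user, amount in events:
        totals[user] += amount
    return dict(totals)


class SpeedLayer:
    """Speed layer: update totals incrementally as each event arrives."""

    def __init__(self):
        self.totals = defaultdict(int)

    def on_event(self, user, amount):
        self.totals[user] += amount


def serve(batch_totals, speed_totals):
    """Serving layer: merge the batch view with the real-time view."""
    merged = dict(batch_totals)
    for user, amount in speed_totals.items():
        merged[user] = merged.get(user, 0) + amount
    return merged


# Lambda-style: two code paths (batch and speed) whose results are merged.
batch_totals = batch_view(historical_events)
speed = SpeedLayer()
for user, amount in recent_events:
    speed.on_event(user, amount)
lambda_result = serve(batch_totals, speed.totals)

# Kappa-style: replay the whole log through the single streaming code path.
kappa = SpeedLayer()
for user, amount in historical_events + recent_events:
    kappa.on_event(user, amount)
kappa_result = dict(kappa.totals)
```

Both approaches arrive at the same totals in this sketch. The difference is that the Lambda-style path maintains separate batch and incremental logic, whereas the Kappa-style path reuses one streaming routine for historical and new data alike.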

Data warehouse

The concept of DWs was introduced in the late 1980s by IBM researchers Barry Devlin and Paul Murphy, with the aim to deliver an architectural model to solve the flow of data to decision support environments. [21] According to the definition by Inmon, “a data warehouse is a subject-oriented, nonvolatile, integrated, time-variant collection of data in support of management decisions.” [22] Formally, a DW is a large data repository wherein data can be stored and integrated from various sources in a well-structured manner and help in the decision-making process via proper data analytics. [23] The process of compiling information into a DW is known as data warehousing.

In enterprise data management, data warehousing refers to a set of decision-making systems targeted toward empowering the information specialist (leader, administrator, or analyst) to make better and quicker decisions. Hence, DW systems act as an important tool of BI, being used in enterprise data management by most medium and large organizations. [24,25] The past decade has seen unprecedented development both in the number of products and services offered and in the wide-scale adoption of these advancements by the industry. According to a comprehensive research report by Market Research Future (MRFR) titled “Data Warehouse as a Service Market information by Usage, by Deployment, by Application and Organization Size—forecast to 2028,” the market size will reach USD 7.69 billion by 2028, growing at a compound annual growth rate of 24.5%. [26]

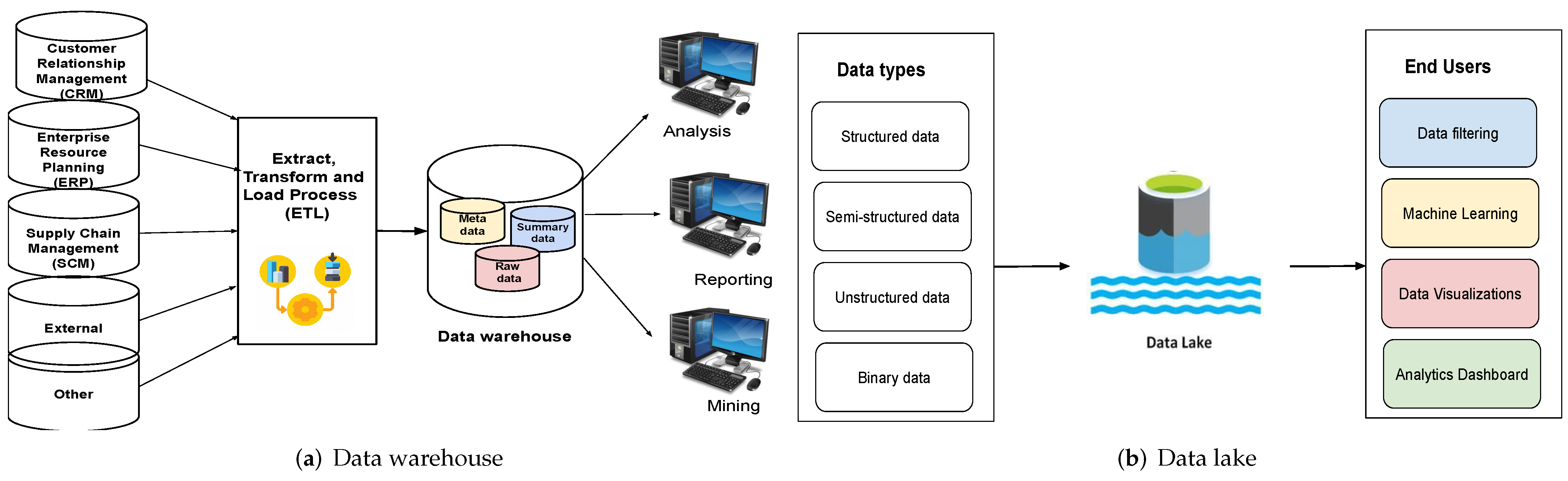

In the DW framework, data are periodically extracted from programs that aid in business operations and duplicated onto specialized processing units. They may then be approved, converted, reconstructed, and augmented with input from various options. The developed DW then becomes a primary origin of data for the production, analysis, and presentation of reports via instantaneous reports, e-portals, and digital readouts. It employs “online analytical processing” (OLAP), whose utility and execution needs differ from those of the “online transaction processing” (OLTP) implementations typically backed up by functional databases. [27,28] OLTP programs often computerize the handling of administrative data processes, such as order entry and banking transactions, which are an organization’s necessary activities. DWs, on the other hand, are primarily concerned with decision assistance. As shown in Figure 1a, a DW integrates data from various sources and helps with analysis, data mining, and reporting. A detailed description of a DW’s architecture is presented in the next major section.
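The difference between OLTP-style and OLAP-style workloads can be sketched with Python's built-in `sqlite3` module. This is only an illustration under simplifying assumptions: the `orders` table and its columns are hypothetical, and a single in-memory database stands in for what would normally be separate operational and warehouse systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
)

# OLTP-style work: short transactions that each write a single order.
new_orders = [("north", 120.0), ("south", 80.0), ("north", 50.0)]
for region, amount in new_orders:
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO orders (region, amount) VALUES (?, ?)", (region, amount)
        )

# OLAP-style work: a read-only query that aggregates many rows
# to support a decision (here, order count and sales per region).
sales_by_region = conn.execute(
    "SELECT region, COUNT(*), SUM(amount) "
    "FROM orders GROUP BY region ORDER BY region"
).fetchall()
conn.close()
```

In a real deployment, the aggregate query would typically run against a warehouse populated by periodic extraction, so that analytical scans do not compete with the transactional workload.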


DW advancements have benefited various sectors, including production (for supply shipment and client assistance), business (for profiling of clients and stock governance), monetary administration (for claims investigation, risk assessment, billing examination, and detecting fraud), logistics (for vehicle administration), broadcast communications (in order to analyze calls), utility companies (in order to analyze power use), and medical services. [29] The field of data warehousing has seen immense research and development over the last two decades in various research categories such as DW architecture, design, and evolution.

Data lake

By the beginning of the twenty-first century, new types of diverse data were emerging in ever-increasing volumes on the internet and at its interface to the enterprise (e.g., web-based business transactions, real-time streaming, sensor data, and social media). With the huge amount of data around, the need to have better solutions for storing and analyzing large amounts of semi-structured and unstructured data to gain relevant information and valuable insight became apparent. Traditional schema-on-write approaches such as the extract, transform, and load (ETL) process are too inefficient for such data management requirements. This gave rise to another popular modern enterprise data management scheme, the DL. [30,31,32]

DLs are centralized storage repositories that enable users to store raw, unprocessed data in their original format, including unstructured, semi-structured, or structured data, at scale. These help enterprises to make better business decisions via visualizations or dashboards from big data analysis, ML, and real-time analytics. A pictorial representation of a DL is given in Figure 1b, above.

According to Dixon, “whilst a data warehouse seems to be a bottle of water cleaned and ready for consumption, then 'Data Lake' is considered as a whole lake of data in a more natural state.” [33] Another definition for the DL is provided by King [34], as a mechanism that “stores disparate information while ignoring almost everything.” An explanation of DLs from an architectural viewpoint is given by Alrehamy and Walker [35]: “A data lake uses a flat architecture to store data in its raw format. Each data entity in the lake is associated with a unique identifier and a set of extended metadata, and consumers can use purpose-built schemas to query relevant data, which will result in a smaller set of data that can be analyzed to help answer a consumer’s question.” A DL houses data in its original raw form. The data in DLs can vary drastically in size and structure, and they lack any specific organizational structure. A DL can accommodate either very small or huge amounts of data as required. All of these features provide flexibility and scalability to DLs. At the same time, challenges related to the implementation and data analytics associated with DLs also arise.
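The architectural description quoted above (a flat store, a unique identifier and extended metadata per entity, and schemas applied by the consumer) can be sketched in a few lines of Python. The `TinyDataLake` class, its method names, and the metadata keys are hypothetical and exist only to illustrate the idea. A production DL would sit on scalable object or file storage rather than in-memory dictionaries.

```python
import json
import uuid
from datetime import datetime, timezone


class TinyDataLake:
    """Hypothetical in-memory stand-in for a flat data lake."""

    def __init__(self):
        self._objects = {}   # object_id -> raw bytes, stored as-is
        self._metadata = {}  # object_id -> descriptive metadata

    def ingest(self, raw: bytes, **metadata) -> str:
        """Store raw bytes unchanged and attach an id plus metadata."""
        object_id = str(uuid.uuid4())
        self._objects[object_id] = raw
        self._metadata[object_id] = {
            "ingested_at": datetime.now(timezone.utc).isoformat(),
            "size_bytes": len(raw),
            **metadata,
        }
        return object_id

    def find(self, **criteria):
        """Return ids of objects whose metadata matches all criteria."""
        return [
            oid
            for oid, meta in self._metadata.items()
            if all(meta.get(key) == value for key, value in criteria.items())
        ]

    def read(self, object_id: str) -> bytes:
        return self._objects[object_id]


lake = TinyDataLake()
log_id = lake.ingest(b"2022-09-25 GET /index.html 200", source="web", fmt="text")
json_id = lake.ingest(b'{"sensor": "t1", "temp_c": 21.5}', source="iot", fmt="json")

# A consumer selects objects via metadata and applies its own schema on read.
iot_readings = [json.loads(lake.read(oid)) for oid in lake.find(source="iot")]
```

The lake itself never interprets the stored bytes. Only the consumer, guided by the metadata, decides how to parse them.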

DLs are becoming increasingly popular among organizations as a way to store their data in a centralized manner. A DL may contain unstructured or multi-structured data, much of which may have unrealized value for the enterprise. This allows organizations to store their data from different sources without any overhead related to the transformation of the data. [30] It also allows ad hoc analyses to be performed on these data, which organizations can then use to derive key insights and support data-driven decision making. DLs replace the previous way of organizing and processing data from various sources with a centralized, efficient, and flexible repository that allows organizations to maximize their gains from a data-driven ecosystem. DLs can also be scaled to an organization’s needs, which is achieved by separating storage from computation. The complex transformation and preprocessing of data required by DWs is eliminated, and the upfront financial overhead of data ingestion is reduced. Once data are collated in the lake or hub, they are available for analysis by the organization.

The differences between data warehouses and data lakes

Although the terms DW and DL are often used interchangeably, they are not the same. [21] One of the major differences between them is the structure of the data they hold (i.e., processed vs. raw data). A DW stores data in processed and filtered form, whereas a DL stores raw or unprocessed data. Specifically, data are processed and organized into a single schema before being put into the warehouse, whereas raw and unstructured data are fed into a DL. Analysis is performed on the cleansed data in the warehouse. In a DL, by contrast, data are selected and organized as and when needed.
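The processed-versus-raw distinction is often described as schema-on-write versus schema-on-read. The following Python sketch illustrates it under simplifying assumptions: the sample records, the two-column warehouse schema, and the function names are hypothetical, and plain lists stand in for the actual storage systems.

```python
import json

# Hypothetical raw input: two JSON records with differing fields, one bad line.
raw_records = [
    '{"customer": "alice", "amount": "19.90", "channel": "web"}',
    '{"customer": "bob", "amount": "5", "note": "gift"}',
    "not valid json",
]


def to_warehouse_row(line):
    """Schema-on-write: validate and convert before loading; fail on bad input."""
    record = json.loads(line)
    return {"customer": str(record["customer"]), "amount": float(record["amount"])}


warehouse = []  # holds only rows that fit the fixed schema
rejected = []   # input that could not be made to fit
for line in raw_records:
    try:
        warehouse.append(to_warehouse_row(line))
    except (ValueError, KeyError):
        rejected.append(line)

# Schema-on-read: the lake keeps every record exactly as it arrived.
lake = list(raw_records)


def read_notes(lines):
    """Each consumer imposes only the structure it needs at query time."""
    notes = []
    for line in lines:
        try:
            record = json.loads(line)
        except ValueError:
            continue  # this consumer skips records it cannot parse
        if "note" in record:
            notes.append((record["customer"], record["note"]))
    return notes


notes = read_notes(lake)
```

The warehouse path discards the `channel` and `note` fields and the malformed line at load time, while the lake path retains everything, so a later consumer can still extract the notes that the warehouse schema never anticipated.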

A DW is economical for storing processed data. DLs, by contrast, have a comparatively larger capacity than DWs and are ideal for analyzing raw and unprocessed data and for employing ML. Another key difference is the objective or purpose of use. Typically, processed data that flow into DWs are used for specific purposes, and hence storage space is not wasted, whereas the purpose of the data in a DL is not defined in advance, so the data can ideally be used for any purpose. No specialized expertise is required to use processed or filtered data, as familiarity with the presentation of data (e.g., charts, sheets, tables, and presentations) suffices. Hence, DWs can be used by any business or individual. On the contrary, it is comparatively difficult to analyze DLs without familiarity with unprocessed data, hence requiring data scientists with appropriate skills or tools to comprehend them for specific business use. Accessibility or ease of use is yet another aspect that differentiates DWs and DLs. Since the architecture of a DL has no rigid structure, it is flexible to use. The structure of a DW, by contrast, ensures that no nonconforming data enter it, which makes it very secure but also very costly to manipulate. A detailed analysis of the differences between DWs and DLs is given in Table 1.

[Table 1: differences between DWs and DLs]

References

- Moore, M. (7 April 2016). "The 7 V’s of Big Data". Impact.com. https://impact.com/marketing-intelligence/7-vs-big-data/. Retrieved 25 September 2022.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added. Inline URLs from the original were turned into full citations for this version.