Journal:Named data networking for genomics data management and integrated workflows

| Full article title | Named data networking for genomics data management and integrated workflows |
|---|---|
| Journal | Frontiers in Big Data |
| Author(s) | Ogle, Cameron; Reddick, David; McKnight, Coleman; Biggs, Tyler; Pauly, Rini; Ficklin, Stephen P.; Feltus, F. Alex; Shannigrahi, Susmit |
| Author affiliation(s) | Clemson University, Tennessee Tech University, Washington State University |
| Primary contact | Email: sshannigrahi at tntech dot edu |
| Year published | 2021 |
| Volume and issue | 4 |
| Article # | 582468 |
| DOI | 10.3389/fdata.2021.582468 |
| ISSN | 2624-909X |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.frontiersin.org/articles/10.3389/fdata.2021.582468/full |
| Download | https://www.frontiersin.org/articles/10.3389/fdata.2021.582468/pdf (PDF) |

This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed.

Abstract

Advanced imaging and DNA sequencing technologies now enable the diverse biology community to routinely generate and analyze terabytes of high-resolution biological data. The community is rapidly heading toward the petascale in single-investigator laboratory settings. As evidence, the National Center for Biotechnology Information (NCBI) Sequence Read Archive (SRA) central DNA sequence repository alone contains over 45 petabytes of biological data. Given the geometric growth of this and other genomics repositories, an exabyte of mineable biological data is imminent. The challenges of effectively utilizing these datasets are enormous, as they are not only large in size but also stored in various geographically distributed repositories such as those hosted by the NCBI, as well as in the DNA Data Bank of Japan (DDBJ), European Bioinformatics Institute (EBI), and NASA’s GeneLab.

In this work, we first systematically point out the data management challenges of the genomics community. We then introduce named data networking (NDN), a novel but well-researched internet architecture capable of solving these challenges at the network layer. NDN performs all operations such as forwarding requests to data sources, content discovery, access, and retrieval using content names (that are similar to traditional filenames or filepaths), all while eliminating the need for a location layer (the IP address) for data management. Utilizing NDN for genomics workflows simplifies data discovery, speeds up data retrieval using in-network caching of popular datasets, and allows the community to create infrastructure that supports operations such as creating federations of content repositories, retrieval from multiple sources, remote data subsetting, and others. Using name-based operations also streamlines deployment and integration of workflows with various cloud platforms.

We make four significant contributions with this work. First, we enumerate the cyberinfrastructure challenges of the genomics community that NDN can alleviate. Second, we describe our efforts in applying NDN for a contemporary genomics workflow (GEMmaker) and quantify the improvements. The preliminary evaluation shows a sixfold speed up in data insertion into the workflow. Third, as a pilot, we have used an NDN naming scheme (agreed upon by the community and discussed in the "Method" section) to publish data from broadly used data repositories, including the NCBI SRA. We have loaded the NDN testbed with these pre-processed genomes that can be accessed over NDN and used by anyone interested in those datasets. Finally, we discuss our continued effort in integrating NDN with cloud computing platforms, such as the Pacific Research Platform (PRP).

The reader should note that the goal of this paper is to introduce NDN to the genomics community and discuss NDN’s properties that can benefit the genomics community. We do not present an extensive performance evaluation of NDN; we are working on extending and evaluating our pilot deployment and will present systematic results in a future work.

Keywords: genomics data, genomics workflows, large science data, cloud computing, named data networking

Introduction

Scientific communities are entering a new era of exploration and discovery in many fields, driven by high-density data accumulation. Examples include climate science[1], high-energy particle physics (HEP)[2], astrophysics[3][4], genomics[5], seismology[6], and biomedical research[7]. Often referred to as “data-intensive” science, these communities utilize and generate extremely large volumes of data, often reaching into the petabytes[8] and soon projected to reach into the exabytes.

Data-intensive science has created radically new opportunities. Take for example high-throughput DNA sequencing (HTDS). Until recently, HTDS was slow and expensive, and only a few institutes were capable of performing it at scale.[9] With the advances in supercomputers, specialized DNA sequencers, and better bioinformatics algorithms, sequencing has become more effective, and its cost has dropped considerably and continues to drop. For example, sequencing the first reference human genome cost around $2.7 billion over 15 years, while currently it costs under $1,000 to resequence a human genome.[10] With commercial incentives, several companies are offering fragmented genome re-sequencing for under $100, performed in only a few days. This massive drop in cost and improvement in speed supports more advanced scientific discovery. For example, scientists could previously test their hypotheses on only a small number of genomes or gene expression conditions within or between species. With more publicly available datasets[5], scientists can test their hypotheses against a larger number of genomes, potentially enabling them to identify rare mutations, precisely classify diseases based on a specific patient, and thus more accurately treat the disease.[11]

While the growth of DNA sequencing is encouraging, it has also created difficulty in genomics data management. For example, the National Center for Biotechnology Information’s (NCBI) Sequence Read Archive (SRA) database hosts 42 petabytes of publicly accessible DNA sequence data.[12] Scientists desiring to use public data must discover (or locate) the data and move it from globally distributed sites to on-premise clusters and distributed computing platforms, including public and commercial clouds. Public repositories such as the NCBI SRA contain a subset of all available genomics data.[13] Similar repositories are hosted by NASA, the National Institutes of Health (NIH), and other organizations. Even though these datasets are highly curated, each public repository uses its own standards for data naming, retrieval, and discovery, which makes locating and utilizing these datasets difficult.

Moreover, data management problems require the community to build complex infrastructures (e.g., cloud platforms, grids) and require scientists to spend time learning them and creating tools, scripts, and workflows that can (semi-) automate their research. The current trend of moving from localized institutional storage and computing to an on-demand cloud computing model adds another layer of complexity to the workflows. The next generation of scientific breakthroughs may require massive data. Our ability to manage, distribute, and utilize these types of extreme-scale datasets and securely integrate them with computational platforms may dictate our success (or failure) in future scientific research.

Our experience in designing and deploying protocols for "big data" science[8][14][15][16][17][18] suggests that using hierarchical and community-developed names for storing, discovering, and accessing data can dramatically simplify scientific data management systems (SDMSs), and that the network is the ideal place for integrating domain workflows with distributed services. In this work, we propose a named ecosystem over an evolving but well-researched future internet architecture: named data networking (NDN). NDN utilizes content names for all data management operations such as content addressing, content discovery, and retrieval. Utilizing content names for all network operations massively simplifies data management infrastructure. Users simply ask for the content by name (e.g., “/ncbi/homo/sapiens/hg38”) and the network delivers the content to the user.

Using content names that are understood by the end-user over an NDN network provides multiple advantages: natural caching of popular content near the users, unified access mechanisms, and location-agnostic publication of data and services. For example, a properly named dataset can be downloaded from either NCBI or GeneLab at NASA, whichever is closer to the researcher. Additionally, the derived data (results, annotations, publications) are easily publishable into the network (possibly after vetting and quality control by NCBI or NASA) and immediately discoverable if appropriate naming conventions are agreed upon and followed. Finally, NDN shifts the trust to content itself; each piece of content is cryptographically signed by the data producer and verifiable by anyone for provenance.

In this work, we first introduce NDN and the architectural constructs that make it attractive for the genomics community. We then discuss the data management and cyberinfrastructure challenges faced by the genomics community and how NDN can help alleviate them. We then present our pilot study applying NDN to a contemporary genomics workflow, GEMmaker[19], and evaluate the integration. Finally, we discuss future research directions and an integration roadmap with cloud computing services.

Named data networking

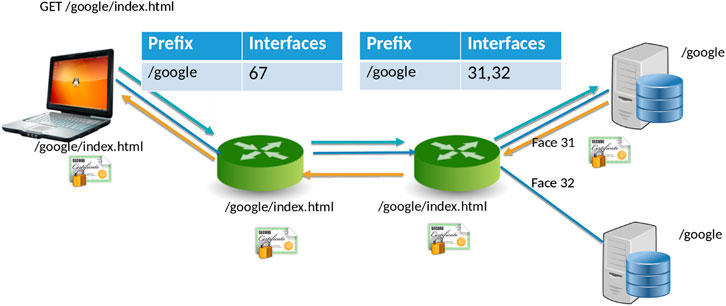

NDN[20] is a new networking paradigm that adopts a drastically different communication model than the current IP model. In NDN, data is accessed by content names (e.g., “/Human/DNA/Genome/hg38”) rather than through the host where it resides (e.g., ftp://ftp.ncbi.nlm.nih.gov/refseq/H_sapiens/annotation/GRCh38_latest/refseq_identifiers/GRCh38_latest_genomic.fna.gz). Naming the data allows the network to participate in operations that were not feasible before. Specifically, the network can take part in discovering and locally caching the data, merging similar requests, retrieving data from multiple distributed sources, and more. In NDN, the communication primitive is straightforward (Figure 1): the consumer asks for the content by content name (an “Interest” in NDN terminology), and the network forwards the request toward the publisher.


For communication, NDN uses two types of packets: Interest and Data. The content consumer initiates communication in NDN. To retrieve data, a consumer sends out an Interest packet into the network, which carries a name that identifies the desired data. One such content name (similar to a resource identifier) might be “/google/index.html”. A network router maintains a name-based forwarding table, the Forwarding Information Base (FIB) (Figure 1). The router remembers the interface from which the request arrives and then forwards the Interest packet by looking up the name in its FIB. FIBs are populated using a name-based routing protocol such as Named-data Link State Routing Protocol (NLSR).[21]

NDN routes and forwards packets based on content names[22], which eliminates various problems that addresses pose in the IP architecture, such as address space exhaustion, Network Address Translation (NAT) traversal, mobility, and address management. Routing in NDN is similar to IP routing. Instead of announcing IP prefixes, an NDN router announces name prefixes that it is willing to serve (e.g., “/google”). The announcement is propagated through the network and eventually populates the FIB of every router. Routers then match incoming Interests against the FIB using a component-based longest prefix match. For example, “/google/videos/movie1.mpg” might match “/google” or “/google/videos”, and the longer prefix is preferred. Though an unbounded namespace raises the question of how to maintain control over the routing table sizes and whether looking up variable-length, hierarchical names can be done at line rate, previous works have shown that it is indeed possible to forward packets at 100 Gbps or more.[23][24]
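The component-based longest prefix match described above can be sketched in a few lines of Python. This is a minimal illustration, not the data structure used by any real NDN forwarder; the FIB contents and the face labels (`face-1`, etc.) are hypothetical.

```python
def split_name(name):
    """Split a name such as '/google/videos' into a tuple of components."""
    return tuple(c for c in name.split("/") if c)


def longest_prefix_match(fib, interest_name):
    """Return (prefix, next_hop) for the longest matching FIB prefix, or None.

    Matching is done on whole name components, not on characters.
    """
    components = split_name(interest_name)
    # Try the full name first, then drop one trailing component at a time.
    for length in range(len(components), 0, -1):
        prefix = components[:length]
        if prefix in fib:
            return "/" + "/".join(prefix), fib[prefix]
    return None


# Hypothetical FIB: name prefix (as components) -> outgoing face label.
fib = {
    split_name("/google"): "face-1",
    split_name("/google/videos"): "face-2",
    split_name("/NCBI"): "face-3",
}

print(longest_prefix_match(fib, "/google/videos/movie1.mpg"))
print(longest_prefix_match(fib, "/NCBI/Human/DNA/Genome/hg38"))
```

Because the comparison works on components, a name such as “/google/video” falls back to the “/google” entry here rather than matching “/google/videos” by string prefix.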

When the Interest reaches a node or router with the requested data, it packages the content under the same name (i.e., the request name), signs it with the producer’s signature, and returns it. For example, a request for “/google/index.html” brings back data under the same name “/google/index.html” that contains a payload with the actual data and the data producer’s (i.e., Google) signature. This Data packet follows the reverse path taken by the Interest. Note that Interest or Data packets do not carry any host information or IP addresses; they are simply forwarded based on names (for Interest packets) or state in the routers (for Data packets). Since every NDN Data packet is signed, the router can store it locally in a cache to satisfy future requests.
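The Interest and Data exchange can be illustrated with a toy router. The sketch below assumes a simplified model: the per-Interest state is kept in a dictionary (NDN calls this state the Pending Interest Table, or PIT), faces are plain strings, and packets are recorded in a log instead of being sent. The class and attribute names are hypothetical.

```python
class Router:
    """Toy NDN router: forwards Interests by name, returns Data on the reverse path."""

    def __init__(self, fib):
        self.fib = fib    # name prefix -> outgoing face
        self.pit = {}     # pending Interest name -> set of faces that asked for it
        self.sent = []    # log of (face, packet type, name), for inspection

    def _lookup(self, name):
        # Component-based longest prefix match against the FIB.
        parts = [c for c in name.split("/") if c]
        for n in range(len(parts), 0, -1):
            prefix = "/" + "/".join(parts[:n])
            if prefix in self.fib:
                return self.fib[prefix]
        return None

    def on_interest(self, name, in_face):
        if name in self.pit:
            # An identical request is already pending: merge it, do not forward again.
            self.pit[name].add(in_face)
            return
        out_face = self._lookup(name)
        if out_face is None:
            return  # no route for this name
        self.pit[name] = {in_face}
        self.sent.append((out_face, "Interest", name))

    def on_data(self, name, payload):
        # Data carries no address; it follows the state left by the Interest.
        for face in sorted(self.pit.pop(name, set())):
            self.sent.append((face, "Data", name))


router = Router({"/google": "upstream"})
router.on_interest("/google/index.html", "consumer-a")
router.on_interest("/google/index.html", "consumer-b")
router.on_data("/google/index.html", b"<html>...</html>")
for entry in router.sent:
    print(entry)
```

In this run, only one Interest goes upstream even though two consumers asked, and the single returning Data packet is delivered to both.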

Hierarchical naming

There is no restriction on how content is named in NDN, except that names must be human-readable, hierarchical, and globally unique. The scientific communities develop the naming schemes as they see fit, and the uniqueness of names can be ensured by name registrars (similar to existing DNS registrars).

The NDN design assumes hierarchically structured names, e.g., a genome sequence published by NCBI may have the name “/NCBI/Human/DNA/Genome/hg38”, where “/” indicates a separator between name components. The whole sequence may not fit in a single Data packet, so the segments (or chunks) of the sequence will have the names “/NCBI/Human/DNA/Genome/hg38/{1..n}”. Data that is routed and retrieved globally must have a globally unique name. This is achieved by creating a hierarchy of naming components, just like the Domain Name System (DNS). In the example above, all sequences under NCBI will potentially reside under “/NCBI”; “/NCBI” is the name prefix that will be announced into the network. This hierarchical structure of names is useful both for applications and the network. For applications, it provides an opportunity to create structured, organized names. The network, on the other hand, does not need to know all the possible content names, only a prefix. For example, “/NCBI” is sufficient for forwarding.
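As a rough illustration of segment naming, the following Python sketch splits a payload into chunks named with the “{1..n}” pattern and reassembles them. It uses plain decimal numbers as the last name component for readability; real NDN libraries encode segment numbers differently, and the function names and tiny segment size here are assumptions made for the example.

```python
def segment_names(base_name, payload, segment_size):
    """Yield (name, chunk) pairs: base_name/1, base_name/2, ..., base_name/n."""
    offsets = range(0, len(payload), segment_size)
    for index, offset in enumerate(offsets, start=1):
        yield f"{base_name}/{index}", payload[offset:offset + segment_size]


def reassemble(segments):
    """Rebuild the payload from (name, chunk) pairs received in any order."""
    ordered = sorted(segments, key=lambda item: int(item[0].rsplit("/", 1)[1]))
    return b"".join(chunk for _, chunk in ordered)


sequence = b"ACGT" * 5  # stand-in for a much larger genome sequence
segments = list(segment_names("/NCBI/Human/DNA/Genome/hg38", sequence, 8))
for name, chunk in segments:
    print(name, chunk)
```

Every segment shares the “/NCBI” prefix, so a router that only knows that prefix can still forward requests for any individual segment.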

Data-centric security

In NDN, security is built into the content. Each piece of data is signed by the data producer, and the signature is carried with the content. Data signatures are mandatory; on receiving the data, applications can decide if they trust the publisher or not. The signature, coupled with data publisher information, enables the determination of data provenance. For example, users can verify that content with names that begin with “/NCBI” is digitally signed by NCBI’s key.

NDN’s data-centric security decouples content from its original publisher and enables in-network caching; it is no longer critical where the data comes from since the client can verify the authenticity of the data. Unsigned data is rejected either in the network or at the receiving client. The receiver can get content from anyone, such as a repository, a router cache, or a neighbor—as well as the original publisher—and verify that the data is authentic.
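The idea of verifying content regardless of where it came from can be sketched as follows. Note the important simplification: NDN producers use public-key signatures so that anyone can verify, whereas this example uses an HMAC from the Python standard library as a stand-in, which requires the verifier to hold the same secret key. The packet layout, function names, and key are all hypothetical.

```python
import hashlib
import hmac


def make_data_packet(name, payload, key):
    """Build a packet whose signature covers both the name and the payload."""
    message = name.encode("utf-8") + b"\x00" + payload
    signature = hmac.new(key, message, hashlib.sha256).digest()
    return {"name": name, "payload": payload, "signature": signature}


def verify_data_packet(packet, trusted_keys):
    """Check the packet against the key trusted for its top-level prefix."""
    components = [c for c in packet["name"].split("/") if c]
    if not components:
        return False
    key = trusted_keys.get("/" + components[0])
    signature = packet.get("signature")
    if key is None or signature is None:
        return False  # unknown publisher or unsigned data is rejected
    message = packet["name"].encode("utf-8") + b"\x00" + packet["payload"]
    expected = hmac.new(key, message, hashlib.sha256).digest()
    return hmac.compare_digest(expected, signature)


trusted_keys = {"/NCBI": b"demo-key-not-a-real-secret"}  # assumption for the demo
packet = make_data_packet("/NCBI/Human/DNA/Genome/hg38/1", b"ACGTACGT", trusted_keys["/NCBI"])

# The same check works whether the packet came from a cache, a repo, or the publisher.
print(verify_data_packet(packet, trusted_keys))
tampered = dict(packet, payload=b"TTTTTTTT")
print(verify_data_packet(tampered, trusted_keys))
```

Because the signature covers the name as well as the payload, a cached copy cannot be re-served under a different name without failing verification.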

In-network caching

Automatic in-network caching is enabled by naming data because a router can cache data packets in its content store to satisfy future requests. Unlike today’s Internet, NDN routers can reuse the cached data packets since they have persistent names and the producer’s signature. The cache (or Content Store, CS) is an in-memory buffer that keeps packets temporarily for future requests. Data such as reference genomes can benefit from caching since caching the content near the user speeds up content delivery and reduces the load on the data servers. In addition to the CS, NDN supports persistent, disk-based repositories (repos).[25] These storage devices can support caching at a larger scale and CDN-like functionality without additional application-layer engineering.
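A content store keyed by name can be sketched as a small in-memory cache. The example below assumes a least-recently-used (LRU) eviction policy and a capacity counted in packets; both are illustrative choices for this sketch rather than details given above, and the class name is hypothetical.

```python
from collections import OrderedDict


class ContentStore:
    """In-memory cache of Data packets keyed by name, with LRU eviction."""

    def __init__(self, capacity):
        self.capacity = capacity        # maximum number of packets kept
        self._packets = OrderedDict()   # name -> payload, oldest first

    def insert(self, name, payload):
        self._packets[name] = payload
        self._packets.move_to_end(name)          # mark as most recently used
        if len(self._packets) > self.capacity:
            self._packets.popitem(last=False)    # evict the least recently used

    def lookup(self, name):
        if name not in self._packets:
            return None                          # cache miss: forward the Interest
        self._packets.move_to_end(name)          # a hit keeps popular content cached
        return self._packets[name]


cs = ContentStore(capacity=2)
cs.insert("/NCBI/Human/DNA/Genome/hg38/1", b"ACGT")
cs.insert("/NCBI/Human/DNA/Genome/hg38/2", b"TTGA")
cs.lookup("/NCBI/Human/DNA/Genome/hg38/1")          # segment 1 is requested again
cs.insert("/NCBI/Human/DNA/Genome/hg38/3", b"CCAT") # evicts segment 2, not segment 1
print(cs.lookup("/NCBI/Human/DNA/Genome/hg38/1"))
print(cs.lookup("/NCBI/Human/DNA/Genome/hg38/2"))
```

With this policy, frequently requested names stay cached without any operator configuration, which is the behavior a popular reference genome would benefit from.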

In our previous work with the climate science and high-energy physics communities, we saw that even though scientific data is large, a strong locality of reference exists. We found that for climate data, even a 1 GB cache in the network speeds up data distribution significantly.[17] We observe similar patterns in the genomics community, where some of the reference genomes are very popular. These caches do not have to be at the core of the network. We anticipate most of the benefits will come from caching at the edge. For example, a large cache provisioned at the network gateway of a lab will benefit the scientists at that lab. In this case, the lab will provision and maintain its own caches. If data is popular across many organizations, it is in the operators' best interest to cache the data at the core since this will reduce latency and network traffic. Given that the price of data storage has gone down significantly (an 8 TB (8000 GB) hard drive costs around $150 at the time of writing this paper), caching does not significantly add to the operating costs of the labs. Additionally, new routers and switches are increasingly being shipped with storage, reducing the need for additional capital expenditure. Finally, caching and cache maintenance is automated in NDN (it follows content popularity), eliminating the need to configure and maintain such storage.

Having introduced NDN in this section, we now enumerate the genomics data management problems and how NDN can solve them in the following section.

Genomics cyberinfrastructure challenges and solutions using NDN

The genomics community has made significant progress in recent decades. However, this progress has not been without challenges. A core challenge, as in many other science domains, is data volume. Due to low-cost sequencing instruments, the genomics community is rapidly approaching petascale data production at sequencing facilities housed in universities, research, and commercial centers. For example, the SRA repository at NCBI in Maryland, United States contains over 45 petabytes of high-throughput DNA sequence data, and there are other similar genomic data repositories around the world.[26][27] These data are complemented with metadata (though not always present or complete) representing evolutionary relationships, biological sample sources, measurement techniques, and biological conditions.[12]

Furthermore, while a large amount of data is accessible from large repositories such as the NCBI repository, a significant amount of genomics data resides in thousands of institutional repositories.

The current (preferred) way to publish data is to upload it to a central repository, e.g., NCBI, which is time-consuming and often requires effort from the scientists. The massively distributed nature of the data makes the genomics community unique. In other scientific communities, such as high-energy physics (HEP), climate, and astronomy, only a few large-scale repositories serve most of the data (Group and for Nuclear Research, 1991). For example, the Large Hadron Collider (LHC) at CERN produces most of the data for the HEP community, telescopes (such as LSST and the to-be-built SKA) produce most of the data for astrophysics, and the supercomputers at various national labs produce climate simulation outputs (Taylor and Doutriaux 2010).

References

- ↑ Cinquini, L.; Crichton, D.; Mattmann, C. et al. (2014). "The Earth System Grid Federation: An open infrastructure for access to distributed geospatial data". Future Generation Computer Systems 36: 400–17. doi:10.1016/j.future.2013.07.002.

- ↑ ATLAS Collaboration; Aad, G.; Abat, E. et al. (2008). "The ATLAS Experiment at the CERN Large Hadron Collider". Journal of Instrumentation 3: S08003. doi:10.1088/1748-0221/3/08/S08003.

- ↑ Dewdney, P.E.; Hall, P.J.; Schilizzi, R.T. et al. (2009). "The Square Kilometre Array". Proceedings of the IEEE 97 (8): 1482–96. doi:10.1109/JPROC.2009.2021005.

- ↑ LSST Dark Energy Science Collaboration; Abate, A.; Aldering, G. et al. (2012). "Large Synoptic Survey Telescope Dark Energy Science Collaboration". arXiv: 1–133. https://arxiv.org/abs/1211.0310v1.

- ↑ 5.0 5.1 Sayers, E.W.; Beck, J.; Brister, J.R. et al. (2020). "Database resources of the National Center for Biotechnology Information". Nucleic Acids Research 48 (D1): D9–D16. doi:10.1093/nar/gkz899. PMC PMC6943063. PMID 31602479. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6943063.

- ↑ Tsuchiya, S.; Sakamoto, Y.; Tsuchimoto, Y. et al. (2012). "Big Data Processing in Cloud Environments" (PDF). Fujitsu Scientific & Technical Journal 48 (2): 159–68. https://www.fujitsu.com/downloads/MAG/vol48-2/paper09.pdf.

- ↑ Luo, J.; Wu, M.; Gopukumar, D. et al. (2016). "Big Data Application in Biomedical Research and Health Care: A Literature Review". Biomedical Informatics Insights 8: 1–10. doi:10.4137/BII.S31559. PMC PMC4720168. PMID 26843812. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4720168.

- ↑ 8.0 8.1 Shannigrahi, S.; Fan, C.; Papadopoulos, C. et al. (2018). "NDN-SCI for managing large scale genomics data". Proceedings of the 5th ACM Conference on Information-Centric Networking: 204–05. doi:10.1145/3267955.3269022.

- ↑ McCombie, W.R.; McPherson, J.D.; Mardis, E.R. (2019). "Next-Generation Sequencing Technologies". Cold Spring Harbor Perspectives in Medicine 9 (11): a036798. doi:10.1101/cshperspect.a036798.

- ↑ National Human Genome Research Institute (2020). "The Cost of Sequencing a Human Genome". National Institutes of Health. https://www.genome.gov/about-genomics/fact-sheets/Sequencing-Human-Genome-cost. Retrieved 16 March 2020.

- ↑ Lowy-Gallego, E.; Fairley, S.; Zheng-Bradley, X. et al. (2019). "Variant calling on the GRCh38 assembly with the data from phase three of the 1000 Genomes Project". Wellcome Open Research 4: 50. doi:10.12688/wellcomeopenres.15126.2. PMC PMC7059836. PMID 32175479. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7059836.

- ↑ 12.0 12.1 NCBI (2020). "Sequence Read Archive". NCBI. https://trace.ncbi.nlm.nih.gov/Traces/sra/. Retrieved 04 February 2020.

- ↑ Stephens, Z.D.; Lee, S.Y.; Faghri, F. et al. (2015). "Big Data: Astronomical or Genomical?". PLoS Biology 13 (7): e1002195. doi:10.1371/journal.pbio.1002195. PMC PMC4494865. PMID 26151137. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4494865.

- ↑ Olschanowsky, C.; Shannigrahi, S.; Papadopoulos, C. et al. (2014). "Supporting climate research using named data networking". Proceedings of the IEEE 20th International Workshop on Local & Metropolitan Area Networks: 1–6. doi:10.1109/LANMAN.2014.7028640.

- ↑ Fan, C.; Shannigrahi, S.; DiBenedetto, S. et al. (2015). "Managing scientific data with named data networking". Proceedings of the Fifth International Workshop on Network-Aware Data Management: 1–7. doi:10.1145/2832099.2832100.

- ↑ Shannigrahi, S.; Papadopoulos, C.; Yeh, E. et al. (2015). "Named Data Networking in Climate Research and HEP Applications". Journal of Physics: Conference Series 664: 052033. doi:10.1088/1742-6596/664/5/052033.

- ↑ 17.0 17.1 Shannigrahi, S.; Fan, C.; Papadopoulos, C. (2017). "Request aggregation, caching, and forwarding strategies for improving large climate data distribution with NDN: a case study". Proceedings of the 4th ACM Conference on Information-Centric Networking: 54–65. doi:10.1145/3125719.3125722.

- ↑ Shannigrahi, S.; Fan, C.; Papadopoulos, C. et al. (2018). "Named Data Networking Strategies for Improving Large Scientific Data Transfers". Proceedings of the 2018 IEEE International Conference on Communications Workshops. doi:10.1109/ICCW.2018.8403576.

- ↑ Hadish, J.; Biggs, T.; Shealy, B. et al. (22 January 2020). "SystemsGenetics/GEMmaker: Release v1.1". Zenodo. doi:10.5281/zenodo.3620945. https://zenodo.org/record/3620945.

- ↑ 20.0 20.1 Zhang, L.; Afanasyev, A.; Burke, J. et al. (2014). "Named data networking". ACM SIGCOMM Computer Communication Review 44 (3): 66–73. doi:10.1145/2656877.2656887.

- ↑ Hoque, A.K.M.M.; Amin, S.O.; Alyyan, A. et al. (2013). "NLSR: Named-data link state routing protocol". Proceedings of the 3rd ACM SIGCOMM workshop on Information-centric networking: 15–20. doi:10.1145/2491224.2491231.

- ↑ Afanasyev, A.; Shi, J.; Zhang, B. et al. (October 2016). "NFD Developer’s Guide - Revision 7". NDN, Technical Report NDN-0021. https://named-data.net/publications/techreports/ndn-0021-7-nfd-developer-guide/.

- ↑ So, W.; Narayanan, A.; Oran, D. et al. (2013). "Named data networking on a router: Forwarding at 20gbps and beyond". ACM SIGCOMM Computer Communication Review 43 (4): 495–6. doi:10.1145/2534169.2491699.

- ↑ Khoussi, S.; Nouri, A.; Shi, J. et al. (2019). "Performance Evaluation of the NDN Data Plane Using Statistical Model Checking". Proceedings of the International Symposium on Automated Technology for Verification and Analysis: 534–50. doi:10.1007/978-3-030-31784-3_31.

- ↑ Chen, S.; Shi, W.; Cao, A. et al. (2014). "NDN Repo: An NDN Persistent Storage Model" (PDF). Tsinghua University. http://learn.tsinghua.edu.cn:8080/2011011088/WeiqiShi/content/NDNRepo.pdf.

- ↑ "DDBJ". Research Organization of Information and Systems. 2019. https://www.ddbj.nig.ac.jp/index-e.html. Retrieved 21 October 2019.

- ↑ "EMBL-EBI". Wellcome Genome Campus. 2021. https://www.ebi.ac.uk/. Retrieved 02 February 2021.

- ↑ Lathe, W.; Williams, J.; Mangan, M. et al. (2008). "Genomic Data Resources: Challenges and Promises". Nature Education 1 (3): 2. https://www.nature.com/scitable/topicpage/genomic-data-resources-challenges-and-promises-743721/.

- ↑ Dankar, F.K.; Ptitsyn, A.; Dankar, S.K. (2018). "The development of large-scale de-identified biomedical databases in the age of genomics—principles and challenges". Human Genomics 12: 19. doi:10.1186/s40246-018-0147-5. PMC PMC5894154. PMID 29636096. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5894154.

- ↑ National Genomics Data Center Members and Partners (2020). "Database Resources of the National Genomics Data Center in 2020". Nucleic Acids Research 48 (D1): D24–33. doi:10.1093/nar/gkz913. PMC PMC7145560. PMID 31702008. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7145560.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added. The original paper listed references alphabetically; this wiki lists them by order of appearance, by design. The two footnotes were turned into inline references for convenience.