Journal:Quality control in the clinical biochemistry laboratory: A glance

| Full article title | Quality control in the clinical biochemistry laboratory: A glance |
|---|---|
| Journal | Journal of Clinical and Diagnostic Research |
| Author(s) | Naphade, Manoj; Ankur, Panchbudhe S.; Rajendra, Shivkar R. |
| Author affiliation(s) | Kiran Medical College, Smt. Kashibai Navale Medical College and General Hospital |
| Primary contact | drnmanoj at gmail dot com |
| Year published | 2023 |
| Volume and issue | 17(2) |
| Page(s) | BE01 - BE04 |
| DOI | 10.7860/JCDR/2023/58635.17447 |
| ISSN | 0973-709X |
| Distribution license | Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International |
| Website | https://www.jcdr.net//article_fulltext.asp |
| Download | https://www.jcdr.net//articles/PDF/17447/58635_CE(AD)_F(IS)_PF1(AKA_SS)_PFA(SS)_PN(SS).pdf (PDF) |


Abstract

Quality control (QC) is a process designed to ensure reliable test results. It is part of overall laboratory quality management in terms of accuracy, reliability, and timeliness of reported test results. Two types of QC are exercised in clinical biochemistry: internal QC (IQC) and external quality assurance service (EQAS). IQC represents the quality methods performed every day by laboratory personnel with the laboratory’s materials and equipment. It primarily checks the precision (i.e., repeatability or reproducibility) of the test method. EQAS is performed periodically (e.g., every month, every two months, or twice a year) by the laboratory personnel, primarily to check the accuracy of the laboratory’s analytical methods. Consequences of inaccurate results can include unnecessary treatment, treatment complications, failure to provide the proper treatment, delay in correct diagnosis, and additional and unnecessary diagnostic testing, leading to increased healthcare costs (in terms of time and personnel effort) and often to poor patient outcomes. By running QC, a laboratory self-monitors its testing process and substantiates that the results produced are accurate and precise. The lab's quality management system (QMS), looking at every aspect of the laboratory from sample collection to result dispatch, is very important for achieving and maintaining good laboratory performance. A QC program allows the laboratory to differentiate between normal variation and error. This review article outlines the indispensable role of QC in the clinical biochemistry laboratory, which ensures patient satisfaction, ensures the credibility of the laboratory, generates confidence in laboratory results, and reduces unnecessary financial burden.

Keywords: accuracy, error, precision, quality control, quality management

Introduction

Laboratory investigations are indispensable tools in the healthcare delivery system. Patient-based laboratory testing is used for confirmation of diagnosis, as well as to monitor the progress of the patient and response to the treatment. [1] In today’s era of evidence-based medicine, a clinical laboratory acts like a platform on which all departments rely for timely delivery of patient care. [1] Upwards of 70 percent of clinical decisions are made with the help of diagnostic tests. [2]

A clinical sample (i.e., specimen) goes through three phases before a report confirming results is generated: pre-analytical, analytical, and post-analytical. [3] The pre-analytical phase involves all the steps before a sample is acknowledged by the laboratory, from ordering of the test through patient preparation, sample collection, transportation, accession, and specimen preparation. The analytical phase refers to the processing or analysis of the sample using an autoanalyzer or standardized method. Finally, the post-analytical phase starts after a result/signal is received from an instrument and covers the result being recorded (either automatically via a laboratory information system [LIS] or manually by transcribing the report), interpreted by the physician, and followed up with the patient. [3]

Sources of laboratory errors

Within these three phases, there are a number of possible errors that can affect the quality of the clinical laboratory output. To improve quality, all three phases can be targeted individually, although it is well documented that most errors occur in the pre- and post-analytical phases. [4–6] We examine each phase to see which errors are most common to each.

Pre-analytical errors

Common problems encountered and their possible resolutions in the pre-analytical phase are as follows [1]:

- Ordering investigations: Physicians should make an informed and conscious choice before ordering a test, keeping in mind the relevance for correct diagnosis, as well as the irrelevance of tests that may not add to the already available clinical knowledge concerning the patient. [1]

- Incomplete laboratory request forms: Legibility and completeness of the form are important to ensure the correct analyses are performed. For example, the age or gender of the person may not be mentioned on the form, leading to a test that is inappropriate for that age or gender being performed. [1]

- Patient preparation: Certain tests require a few precautions or steps of preparation be followed by the patient. For example, some tests require that the patient be fasting, such as blood glucose and lipid profile analyses. There may also be special timing issues for tests such as drug levels and hormone tests. This needs to be addressed to ensure that a reliable result is generated in the end since an error at this phase makes the following steps of analysis and interpretation irrelevant, even if performed to perfection. [1]

- Specimen collection (potential outcomes of collection errors): Incorrect phlebotomy practices and patient information can lead to an inadequate quantity of sample being collected. Lipemic or hemolyzed samples, for example, are difficult or impossible to process further and yield unreliable results. Blood tests might require serum, plasma, or whole blood. Other tests might require urine or saliva. [7]

- Wrong patient-specimen identification/wrong labelling of the containers: Patient identification errors before sample collection account for up to 25 percent of all pre-analytical errors. [8] This can lead to patients being diagnosed and treated based on a sample from another patient. If not identified or correlated, outcomes can be catastrophic.

- Transportation: Transport conditions and the time between sample collection and analysis, if not properly controlled, are enough to affect the values of certain analytes.

- Errors in specimen preparation: Processing time, centrifugation speed and temperature, light exposure, and aliquot preparation are critical considerations that must be weighed before sample processing is carried out. Not properly processing a specimen before the test, or the introduction of substances which interfere with test performance, may affect analytical results. [9]

- Limitations in reducing pre-analytical errors: A more significant source of pre-analytical error is biological variance, which is not linked to human error and cannot be controlled. [1]

Pre-analytical errors can be minimized by checking the test requisition form, the name of the patient on the vacutainer, and the requested tests. The implementation of barcode technology for specimen identification has been the major advance in the automation of the laboratory. A barcode label generated by a LIS can be read by one or more barcode readers placed in key positions in the analytical system. Barcode technology has the advantages of not requiring work lists for the workflow and preventing the mix-up of tubes in analyzers during sampling. [10] Pre-analytical errors can also be kept in check by working with the patient regarding food intake, alcohol intake, any drug usage, smoking, etc., as these factors may influence the result. Aside from instructing patients properly for the collection of the sample, the clinical lab can [7]:

- confirm the use of correct anticoagulant and the adequate amount of sample;

- inspect the serum for hemolysis or lipemic index;

- record the time at which the sample is received and when the report is ready and dispatched; and

- observe standard operating procedures (SOPs) for sample processing, as even time of separation, centrifuge speed, and the temperature at which a sample gets separated are important.

Analytical errors

Reliable test results can be achieved by careful selection, evaluation, and implementation of analytical methods for investigations. Equipment, reagents, and consumables make up integral parts of the analytical process. A good equipment management system helps to maintain a high level of laboratory performance; reduces variation in test results, while improving the technologist’s confidence in the accuracy of testing results; and lowers repair costs, while reducing interruption of services due to breakdowns and failures. Improved quality and reproducibility of test results is also one of the biggest advantages of using automation in the analytical phase, as it minimizes errors due to carry-over of samples and inadequate sample mixing. [11] One of the goals of QC is to differentiate between normal variation and errors.

Common problems encountered in the analytical phase include:

- Reference ranges: While reporting results, laboratories should have well-established reference ranges based on physiological parameters such as age, the period of gestation in case of pregnancy, and gender (rather than ambiguous and general ones), as the interpreting physician will be treating the patient based on the ranges provided. [7]

- Participation without action: Although the lab may participate in quality programs, mere participation will not help reduce analytical errors unless the lab uses the information received through that participation.

- Verify test performance: For any laboratory test parameter, its performance should be evaluated and verified with respect to its sensitivity, specificity, linearity, and precision.

- Total allowable error (TEa): Errors that occur during the analytical phase could be either random or systematic. TEa sets a limit for combined imprecision (random error) and bias (inaccuracy, or systematic error) that are tolerable in a single measurement or single test result to ensure clinical usefulness of that particular result. Defining the allowable error is important for high accuracy and precision of the analytical process. [12–13]
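The relationship between imprecision, bias, and TEa can be illustrated with a short calculation. The sketch below assumes one commonly used estimate of total error, |bias| + 1.65 × CV; the article itself does not state a formula, and some laboratories use a different multiplier. The function names and the figures are hypothetical and chosen only for illustration.

```python
def total_error(bias_pct: float, cv_pct: float, z: float = 1.65) -> float:
    """Estimate total analytical error (%) as |bias| + z * CV.

    z = 1.65 is an assumed, commonly used multiplier; it is not taken
    from the article.
    """
    return abs(bias_pct) + z * cv_pct


def within_allowable_error(bias_pct: float, cv_pct: float, tea_pct: float) -> bool:
    """Check whether the estimated total error stays within the TEa limit."""
    return total_error(bias_pct, cv_pct) <= tea_pct


# Hypothetical method performance: 2% bias, 3% CV, judged against a 10% TEa.
estimated = total_error(bias_pct=2.0, cv_pct=3.0)
print(f"Estimated total error: {estimated:.2f}%")
print("Within TEa:", within_allowable_error(2.0, 3.0, tea_pct=10.0))
```

A method that fails this check would need its bias, its imprecision, or both reduced before its results could be considered clinically useful under that limit.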

Analytical errors can be kept down by use of auto-analyzers for analysis of test parameters, ensuring the validity and acceptability of a new program, instrument, and technique for a particular test. They can also be minimized by verifying the reportable range, precision, analytical sensitivity, interferences, and accuracy, as provided by the test-specific kit insert. Analytical errors can also be held in check by using reference ranges specific to the physiological conditions of the patients, such as age, gender, and gestation, in case of pregnancy. These reference ranges should be verified by running samples of healthy individuals. Other things the lab can do include [7]:

- scheduling daily and monthly preventive maintenance for each instrument;

- checking water quality, power supply, calibration of electrical balance, and calibration of glassware and pipettes;

- maintaining records of the dates when reagents and kits are received and when they are opened;

- running new lots of the reagents with the old lot in parallel before being used for analysis; and

- monitoring QC using internal QC (IQC) and external quality assurance service (EQAS) programs.

Post-analytical errors

This stage refers to transmission of data from analyzers to the LIS, validation of results that have been produced, and posting of the results to physicians or patients in a timely fashion in order for the analytical work to be of diagnostic and therapeutic utility. A late result is just as bad as a wrong one, especially for critical values that, if not reported at the right time to the right physician, would delay lifesaving intervention. Common post-analytical errors include incorrect calculation or unit of measurement, transcription error, delay in delivering the results to the physician or clinic, patient results being sent to the wrong patient, and loss of the results. [7,13]

Automation has helped reduce these errors, for example by directly transferring results from an instrument to an LIS. Linking the availability of critical values directly to the mobile patient portal of the healthcare provider has further reduced time to notification. [14]

Consequences of errors at any level can lead to delayed results, repeated visits, avoidable multiple sample collection actions, and incorrect results, and thereby incorrect or delayed diagnosis and treatment. The quality of patient care is compromised, adding to the overall cost and patient dissatisfaction, and at worst having lethal repercussions. [15]

Quality control

Irrespective of the phase during which an error occurs, defending the authenticity of the analytical result lies with the laboratory. [16] Reducing laboratory errors not only increases the confidence of reporting physicians but also of the patients in the system and helps curb any unnecessary expenditure of the hospital and lab. Thus it is of interest to check laboratory errors occurring in each laboratory and formulate corrective measures to avoid them. [1] This is where QC comes in, as do the elements of accuracy and precision. These elements can be defined as such:

- Accuracy: Accuracy refers to the closeness of a result to the actual value (i.e., true value). It is generally measured by direct comparison to a reference by using QC serum, with an accurate value assigned to it by the manufacturer. [13]

- Precision: Precision refers to the reproducibility or closeness of values to each other. [13]

Ideally, a laboratory should be trying for both high accuracy and precision. These can be gauged using statistical tools.

Statistical tools and terminology used in the laboratory for QC

It is well known that treatment of patients depends to a great extent on the reports generated by the clinical laboratory. As such, the results generated by the laboratory should be accurate. The laboratory data that is generated needs to be summarized in order to monitor test performance, i.e., quality control. [17] Statistical analysis that considers normal or Gaussian distribution comes into play here. These distribution models form the basis of statistical QC theory. A Gaussian curve indicates that about 68 percent of all values would fall within one standard deviation of the mean, while about 95 percent would be expected to fall within two standard deviations and 99.7 percent would be expected to fall within three standard deviations. If a control value falls within one standard deviation of the mean, it indicates good control. [13]

Terms used in statistical analysis include [13],[18]:
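The coverage figures quoted for the Gaussian curve can be checked directly from the normal distribution. The following is a minimal sketch using only the Python standard library; the function name `coverage_within` is a hypothetical helper introduced for illustration.

```python
import math


def coverage_within(k: float) -> float:
    """Fraction of a normal distribution lying within k SDs of the mean."""
    return math.erf(k / math.sqrt(2))


for k in (1, 2, 3):
    print(f"Within {k} SD of the mean: {coverage_within(k) * 100:.1f}%")
```

The printed values come out at roughly 68.3, 95.4, and 99.7 percent, in line with the approximate figures given above.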

- Mean: This is the most commonly used term. It is the sum of data divided by the number of observations.

- Mode: This is the value that occurs most frequently in a list of observations. It is not affected by extreme values.

- Median: This is the number that occupies the central position when the data is arranged in ascending or descending manner.

- Standard deviation (SD): This is a measure of how much the data varies around the mean. It is a primary indicator of precision. It is very useful to the laboratory in analyzing QC results.

- Coefficient of variation (CV): This is the ratio of standard deviation to the mean and is expressed as a percentage.

- Standard deviation index (SDI): This is the difference between the individual value and the group mean, divided by the SD of the group. It is also known as the Z-statistic and is used for peer-group comparison.

Quality control in the laboratory
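The terms above can be computed with the Python standard library. This is a sketch under stated assumptions: the control results are made-up numbers, the SD uses the sample (n - 1) form, and `summarize` and `sdi` are hypothetical helper names rather than anything defined in the article.

```python
import statistics


def summarize(values):
    """Return the basic QC summary statistics for a list of control results."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD, n - 1 denominator (assumption)
    return {
        "mean": mean,
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "sd": sd,
        "cv_pct": sd / mean * 100,  # CV expressed as a percentage
    }


def sdi(lab_value, group_mean, group_sd):
    """Standard deviation index: (individual value - group mean) / group SD."""
    return (lab_value - group_mean) / group_sd


# Hypothetical control results for one analyte (e.g., in mg/dL)
results = [98, 100, 102, 100, 101, 99, 100]
summary = summarize(results)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")

# Hypothetical peer-group comparison: lab mean 102 against group mean 100, SD 2
print("SDI:", sdi(102.0, 100.0, 2.0))
```

An SDI near zero means the laboratory's value sits close to the peer-group mean; the sign shows the direction of the difference.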

The QC process monitors and evaluates the analytical process that produces patient results. The aim of QC is to evaluate the errors in the pre-analytical, analytical, and post-analytical phases before test results are reported. The question of reliability for most testing can be resolved by regular use of QC materials. Further, reliability of test results is ensured by regular testing of QC products and statistical process control [18] before running patient samples. QC results are acceptable when they are in the acceptable range of the error limit and are unacceptable when results show excessive errors and are out-of-range. [18] We now take a look at QC materials and other methods of QC in the lab.

QC materials

QC materials resemble human serum, plasma, blood, urine, and cerebrospinal fluid and contain analytes of known concentration that are measured by the laboratory, ideally at concentrations close to the limits where a medical decision is required. These QC materials can be liquid (ready-to-use) or freeze-dried (lyophilized). They need to be stable for prolonged periods without any interfering preservatives; easy to store and dispense; free from infectious agents such as bacteria, viruses, and fungi; and affordable. Control samples with the same analytes but different concentrations are called levels. A normal level control contains normal levels of the analyte being tested. An abnormal level control contains the analyte at a concentration above or below the normal range for the analyte. Different levels check the performance of laboratory methods across their entire measuring range. In most cases, control samples are manufactured by analyzer or reagent manufacturers, but they can also be made by laboratory personnel. [7],[13],[18]

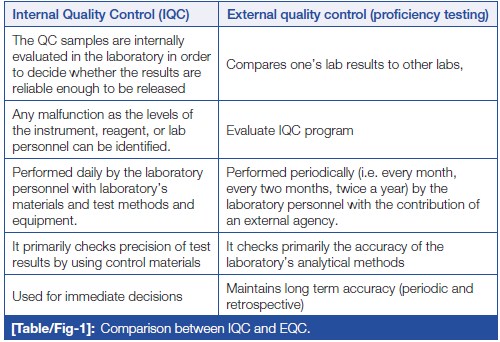

Irrespective of the size of the laboratory, a minimum of two levels of QC should be run once on the day the test is performed. If the laboratory is operational round the clock, two levels of control should be run in the peak hour, followed by one level every eight hours. [19] In addition, QC material should be run after an instrument’s servicing, after a change in reagent lots, after calibration, and whenever patient results seem inappropriate, to assure the results. This can be done with the help of IQC and EQAS, which are complementary to each other. [20] IQC includes all QC methods which are performed every day by the laboratory personnel, with the laboratory’s materials and equipment, primarily to check the precision (repeatability or reproducibility) of the method. EQAS comprises all QC methods which are performed periodically (e.g., every month, every two months, or twice a year) by the laboratory personnel with the contribution of an external center (e.g., referral laboratory, scientific association, or diagnostic industry), primarily reflecting the accuracy of the laboratory’s analytical methods. IQC and EQAS are compared in Table/Fig 1.

QC charts

QC is a statistical process. QC charts are used to represent the values of control material within the defined upper and lower limit. The following are important charting methods.

Levey-Jennings chart

The Levey-Jennings chart is the most important control chart in laboratory QC. It can be used in both IQC and EQAS. It detects all kinds of analytical errors (random and systematic) and is used for the estimation of their magnitude. [21] This graphical method displays control values and evaluates whether a procedure is in-control or out-of-control. Daily control values are plotted versus time. Lines are drawn from point to point to better reveal any systematic or random errors. [21]
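The limit lines behind a Levey-Jennings chart can be sketched without any plotting library. The example below assumes limit lines at 1, 2, and 3 SD either side of an established mean, which is the usual layout but is not spelled out in the text; the mean, SD, and daily values are hypothetical, as are the helper names.

```python
def control_limits(mean, sd):
    """Return the (lower, upper) limit lines at 1, 2, and 3 SD from the mean."""
    return {k: (mean - k * sd, mean + k * sd) for k in (1, 2, 3)}


def zone(value, mean, sd):
    """Describe where a control value sits relative to the limit lines."""
    z = abs(value - mean) / sd
    if z <= 1:
        return "within 1 SD"
    if z <= 2:
        return "between 1 and 2 SD"
    if z <= 3:
        return "between 2 and 3 SD"
    return "beyond 3 SD"


# Hypothetical established mean and SD for one control level
mean, sd = 100.0, 2.0
daily_values = [101.0, 99.0, 104.5, 100.0, 107.0]

print(control_limits(mean, sd))
for day, value in enumerate(daily_values, start=1):
    # Each line corresponds to one plotted point on the chart
    print(f"Day {day}: {value} -> {zone(value, mean, sd)}")
```

Plotting the same values against time, with horizontal lines at these limits, gives the chart itself.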

Westgard rules

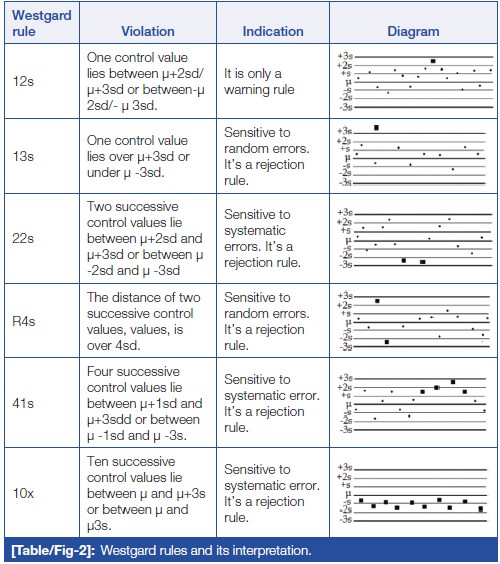

Error detection in the analytical phase of sample processing can be done with the help of Westgard rules. [22] Westgard devised a shorthand notation for expressing QC rules, written as "N_L" (with L as a subscript), where N represents the number of control observations to be evaluated and L represents the statistical limit for evaluating the control observations. These rules can be applied as single rules and as multi-rules. They also help to decide whether the analytical run is in-control or out-of-control. Table/Fig 2 shows Westgard rules and their interpretation.
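A multi-rule check can be sketched in a few lines. The rule set below (1_2s, 1_3s, 2_2s, R_4s, 4_1s, 10_x) is the commonly cited one and is an assumption here, since the contents of Table/Fig 2 are not reproduced in the text. The function name is hypothetical, and applying R_4s to consecutive observations of one control level is a simplification; it is classically evaluated across control levels within a run.

```python
def westgard_violations(z_scores):
    """Return the rules triggered by the most recent control observation.

    z_scores: control results expressed in SD units from the mean
    ((value - mean) / SD), oldest first.
    """
    violated = set()
    if not z_scores:
        return violated

    last = z_scores[-1]
    if abs(last) > 2:
        violated.add("1_2s")  # warning: one observation beyond 2 SD
    if abs(last) > 3:
        violated.add("1_3s")  # one observation beyond 3 SD

    if len(z_scores) >= 2:
        a, b = z_scores[-2], z_scores[-1]
        if (a > 2 and b > 2) or (a < -2 and b < -2):
            violated.add("2_2s")  # two in a row beyond 2 SD, same side
        if (a > 2 and b < -2) or (a < -2 and b > 2):
            violated.add("R_4s")  # one beyond +2 SD and one beyond -2 SD

    if len(z_scores) >= 4:
        window = z_scores[-4:]
        if all(z > 1 for z in window) or all(z < -1 for z in window):
            violated.add("4_1s")  # four in a row beyond 1 SD, same side

    if len(z_scores) >= 10:
        window = z_scores[-10:]
        if all(z > 0 for z in window) or all(z < 0 for z in window):
            violated.add("10_x")  # ten in a row on one side of the mean

    return violated


# Hypothetical sequences of control results in SD units
print(westgard_violations([0.5, 3.2]))
print(westgard_violations([2.1, 2.3]))
print(westgard_violations([1.2, 1.5, 1.1, 1.3]))
```

In a typical multi-rule scheme, 1_2s alone is treated as a warning that prompts inspection of the other rules rather than as grounds for rejecting the run.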

Important steps to follow in case of QC failure are stopping the testing of samples and the release of reports, searching for recent events that could have caused the changes, examining environmental conditions such as a change in room temperature or humidity, following the manufacturer’s troubleshooting guide, performing root cause analysis (RCA), and taking corrective and preventive actions (CAPA). Corrective action is taken when a problem has occurred and stops non-conformities from recurring. Preventive action gives the opportunity to prevent potential non-conformities and determine the type of error. [17]

QC errors (errors in the analytical process) are classified into systematic errors and random errors. Some errors present as both. Random error affects individual samples in a random and unpredictable manner, with a lack of reproducibility. It may be due to air bubbles in the reagents, inadequately mixed reagents, unstable temperature and incubation, unstable power supply, a fibrin clot in the sample probe, poor operator technique, or sudden failure or change in the light source. Systematic error displaces the mean value in one direction, either up or down, and affects every test in a constant, predictable manner. [17]

Shifts and trends

A shift is when the QC values move suddenly upwards or downwards from the mean and continue the same way, mathematically changing the mean. It may be due to a change in reagent lot and/or calibrator lot, a change in temperature of incubators and reaction blocks, inaccurate calibration, etc. A trend is when the QC values slowly move up or down from the mean and continue moving in the same direction over time. It may be due to deterioration of reagents, calibrators, or control material; deterioration of the instrument light source; gradual accumulation of debris in sample and/or reagent tubing; or failing calibration. [17]
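Shifts and trends can be flagged from a series of control values with two simple checks. The sketch below is one possible approach, not a method given in the article: the run length of six consecutive points is an assumed threshold that each laboratory would set for itself, and the function names and data are hypothetical.

```python
def detect_shift(values, mean, run_length=6):
    """True if the last run_length values all lie on the same side of the mean."""
    if len(values) < run_length:
        return False
    recent = values[-run_length:]
    return all(v > mean for v in recent) or all(v < mean for v in recent)


def detect_trend(values, run_length=6):
    """True if the last run_length values are strictly rising or strictly falling."""
    if len(values) < run_length:
        return False
    recent = values[-run_length:]
    pairs = list(zip(recent, recent[1:]))
    return all(b > a for a, b in pairs) or all(b < a for a, b in pairs)


# Hypothetical control values around an established mean of 100
shifted = [100.0, 99.0, 103.0, 102.0, 104.0, 103.0, 102.0, 103.0]
drifting = [100.0, 100.5, 101.0, 101.6, 102.1, 102.9]

print("Shift detected:", detect_shift(shifted, mean=100.0))
print("Trend detected:", detect_trend(drifting))
```

Note that a sustained trend will eventually also satisfy the shift check, so the two flags are best read together with the chart.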

Random and systematic errors must be detected at an early stage, and every effort should be taken in order to minimize them. These can be avoided by having well-trained staff; well-designed SOPs; regular maintenance of instruments, temperature controls, and electrical supplies; and thorough checking of the results. [17]

Conclusion

Laboratory investigations are a major contributor to most clinical decisions and one of the indispensable tools in modern healthcare. QC is one of the components of quality management and is a statistical way to monitor and evaluate the analytical process. QC not only ensures the credibility of the laboratory but also generates customer confidence in the laboratory's results. Reliable laboratory testing avoids misdiagnosis, delayed treatment, and the unnecessary cost of repeat testing. As such, each laboratory should assess and analyze its own QC process to find the possible root cause of any aberrant test results that do not correlate with the patient's clinical presentation or expected response to treatment. Vigorous QC processes that observe the latest technical advancements will contribute to the reduction of resource wastage and the minimization of errors in patient management.

Acknowledgements

Competing interests

None declared.

References

Notes

This presentation is faithful to the original, with changes to presentation, spelling, and grammar as needed. The PMCID and DOI were added when they were missing from the original reference. No other changes were made in accordance with the "NoDerivatives" portion of the content license.