Journal:The challenges of data quality and data quality assessment in the big data era

| Full article title | The challenges of data quality and data quality assessment in the big data era |
|---|---|
| Journal | Data Science Journal |
| Author(s) | Cai, Li; Zhu, Yangyong |
| Author affiliation(s) | Fudan University and Yunnan University |
| Primary contact | Email: lcai at fudan dot edu dot cn |
| Year published | 2015 |
| Volume and issue | 14 |
| Page(s) | 2 |
| DOI | 10.5334/dsj-2015-002 |
| ISSN | 1683-1470 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | http://datascience.codata.org/articles/10.5334/dsj-2015-002/ |
| Download | http://datascience.codata.org/articles/10.5334/dsj-2015-002/galley/550/download/ (PDF) |


Abstract

High-quality data are the precondition for analyzing and using big data and for guaranteeing the value of the data. Currently, comprehensive analysis and research of quality standards and quality assessment methods for big data are lacking. First, this paper reviews existing data quality research. Second, it analyzes the characteristics of data in the big data environment, presents the quality challenges faced by big data, and formulates a hierarchical data quality framework from the perspective of data users. This framework consists of big data quality dimensions, quality characteristics, and quality indexes. Finally, on the basis of this framework, the paper constructs a dynamic assessment process for data quality. This process has good extensibility and adaptability and can meet the needs of big data quality assessment. The research results enrich the theoretical scope of big data and lay a solid foundation for future work on establishing an assessment model and studying evaluation algorithms.

Introduction

Many significant technological changes have occurred in the information technology industry since the beginning of the 21st century, such as cloud computing, the Internet of Things, and social networking. These technologies have caused the amount of data to grow continuously and to accumulate at an unprecedented speed; together, they herald the arrival of big data.[1] The amount of global data is currently growing exponentially. Data volumes are no longer measured in gigabytes (GB) and terabytes (TB), but in petabytes (PB; 1 PB = $2^{10}$ TB), exabytes (EB; 1 EB = $2^{10}$ PB), and zettabytes (ZB; 1 ZB = $2^{10}$ EB). According to IDC’s “Digital Universe” forecast,[2] 40 ZB of data will be generated by 2020.
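The unit ladder above is a geometric progression, so converting between units is just multiplying by a power of $2^{10}$. The sketch below assumes the binary (1024-based) convention the text uses; with decimal SI prefixes (1 PB = 1000 TB) the factor would be $10^3$ instead. The list and function names are illustrative.

```python
# Binary storage units as used in the text: each step up is a factor of 2**10.
UNITS = ["GB", "TB", "PB", "EB", "ZB"]


def convert(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a quantity between binary storage units (each unit = 2**10 of the next smaller one)."""
    steps = UNITS.index(from_unit) - UNITS.index(to_unit)
    return value * (2 ** 10) ** steps


print(convert(1, "ZB", "TB"))   # 1073741824, i.e. 2**30
print(convert(40, "ZB", "TB"))  # the 40 ZB forecast expressed in TB
```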

The emergence of the big data era has attracted the attention of industry, academia, and government. For example, in 2012 the U.S. government invested $200 million to launch the "Big Data Research and Development Initiative."[3] Nature published a special issue on big data,[4] and Science published a special issue, "Dealing with Data," which illustrated the importance of big data for scientific research.[5] In addition, the development and use of big data have spread widely across medicine, retail, finance, manufacturing, logistics, telecommunications, and other industries, generating great social value and industrial potential.[6]

As they rapidly acquire and analyze big data from various sources and for various uses, researchers and decision-makers have gradually realized that this massive amount of information can help them understand customer needs, improve service quality, and predict and prevent risks. However, the use and analysis of big data must be based on accurate, high-quality data, which are a necessary condition for generating value from big data. Therefore, we analyzed the challenges faced by big data and proposed a quality assessment framework and assessment process for it.

Literature review on data quality

In the 1950s, researchers began to study quality issues, especially the quality of products, and published a series of definitions of quality (for example, "the degree to which a set of inherent characteristics fulfils requirements"[7]; "fitness for use"[8]; "conformance to requirements"[9]). Later, with the rapid development of information technology, research turned to data quality.

Research on data quality outside China started in the 1990s, and many scholars proposed different definitions of data quality and different ways of dividing it into quality dimensions. The Total Data Quality Management group at MIT, led by Professor Richard Y. Wang, has done in-depth research in this area. They defined data quality as "fitness for use"[8] and proposed that judgments of data quality depend on data consumers. They also defined a "data quality dimension" as a set of data quality attributes that represent a single aspect or construct of data quality. Using a two-stage survey, they identified 15 data quality dimensions grouped into four categories.
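A two-level grouping like this maps naturally onto a category-to-dimensions dictionary. The 15 dimension names are not listed in this article. The grouping below reflects Wang and Strong's 1996 paper as we understand it and should be checked against the original. The lowercase spellings and the `category_of` helper are illustrative choices.

```python
# Category -> dimensions, following Wang and Strong (1996) as we understand it.
WANG_STRONG = {
    "intrinsic": ["believability", "accuracy", "objectivity", "reputation"],
    "contextual": ["value-added", "relevancy", "timeliness",
                   "completeness", "appropriate amount of data"],
    "representational": ["interpretability", "ease of understanding",
                         "representational consistency", "concise representation"],
    "accessibility": ["accessibility", "access security"],
}


def category_of(dimension: str) -> str:
    """Return the category that contains the given dimension."""
    for category, dims in WANG_STRONG.items():
        if dimension in dims:
            return category
    raise KeyError(dimension)


total = sum(len(dims) for dims in WANG_STRONG.values())
print(len(WANG_STRONG), total)    # 4 15
print(category_of("timeliness"))  # contextual
```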

Some studies have focused on web data and proposed specific data quality standards and measures. Alexander and Tate[10] described six evaluation criteria for web data: authority, accuracy, objectivity, currency, coverage/intended audience, and interaction/transaction features. Katerattanakul and Siau[11] developed four categories for the information quality of an individual website. They also built a questionnaire to test how important each category is and how web users judge the information quality of individual sites. For information retrieval, Zhu and Gauch[12] proposed six quality metrics: currency, availability, information-to-noise ratio, authority, popularity, and cohesiveness.
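Most of these metrics are qualitative, but information-to-noise ratio can be computed directly. As we understand Zhu and Gauch's definition, it is the total length of the tokens that remain after preprocessing, divided by the size of the raw document. The sketch below assumes a particular preprocessing: strip HTML tags, extract alphabetic tokens, lowercase them, and drop stopwords. The stopword list and the function name are hypothetical.

```python
import re

# Hypothetical, deliberately tiny stopword list for illustration only.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is"}


def information_to_noise(raw_html: str) -> float:
    """Length of retained tokens divided by raw document length.

    Assumed preprocessing: strip HTML tags, extract alphabetic tokens,
    lowercase them, and drop stopwords.
    """
    if not raw_html:
        return 0.0
    text = re.sub(r"<[^>]+>", " ", raw_html)          # remove markup
    tokens = [t.lower() for t in re.findall(r"[A-Za-z]+", text)]
    kept = [t for t in tokens if t not in STOPWORDS]   # remove stopwords
    return sum(len(t) for t in kept) / len(raw_html)


print(information_to_noise("<p>The cat</p>"))  # 3 / 14, about 0.214
```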

Shanks and Corbitt[13] studied data quality from a social and cultural perspective and set up a semiotic-based framework for data quality with four levels and a total of 11 quality dimensions. Knight and Burn[14] summarized the most common dimensions and how often each appears in different data quality/information quality frameworks. They then presented the IQIP (Identify, Quantify, Implement, and Perfect) model as an approach to managing the choice and implementation of quality-related algorithms in an Internet-crawling search engine.
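A Knight and Burn style frequency summary amounts to counting how many frameworks include each dimension. The sketch below uses only the two criteria lists quoted earlier in this section (Alexander and Tate; Zhu and Gauch) as input. Each framework is counted at most once per dimension. The helper name is illustrative.

```python
from collections import Counter

# Criteria named earlier in this section, normalized to lowercase.
FRAMEWORKS = {
    "Alexander and Tate": ["authority", "accuracy", "objectivity", "currency",
                           "coverage/intended audience",
                           "interaction/transaction features"],
    "Zhu and Gauch": ["currency", "availability", "information-to-noise ratio",
                      "authority", "popularity", "cohesiveness"],
}


def dimension_frequency(frameworks: dict[str, list[str]]) -> Counter:
    """Count how many frameworks include each dimension (once per framework)."""
    counts = Counter()
    for dims in frameworks.values():
        counts.update(set(dims))
    return counts


freq = dimension_frequency(FRAMEWORKS)
print(freq["authority"], freq["currency"], freq["popularity"])  # 2 2 1
```

With more frameworks added to the dictionary, `freq.most_common()` gives the ranked list of shared dimensions.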

According to the U.S. National Institute of Statistical Sciences (NISS),[15] the principles of data quality are:

1. Data are a product, with customers, to whom they have both cost and value.
2. As a product, data have quality, resulting from the process by which the data are generated.
3. Data quality depends on multiple factors, including (at least) the purpose for which the data are used, the user, and the time.

Research on data quality in China began later than research elsewhere. The 63rd Research Institute of the PLA General Staff Headquarters created a data quality research group in 2008. The group discussed basic problems of data quality such as its definition, sources of error, and approaches to improvement.[16] In 2011, Xi’an Jiaotong University set up an information quality research group. It analyzed the challenges and importance of assuring the quality of big data and proposed countermeasures in terms of process, technology, and management.[17] The Computer Network Information Center of the Chinese Academy of Sciences proposed a data quality assessment method and index system.[18] It divides data quality into three categories: external form quality, content quality, and utility quality. Each category is subdivided into quality characteristics and evaluation indexes.

In summary, existing studies focus on two areas: web data quality, and data quality in specific domains such as biology, medicine, geophysics, telecommunications, and scientific data. Big data, as an emerging technology, is attracting more and more attention. However, research on big data quality standards and assessment methods for multi-source, multi-modal environments is still lacking.[19]

The challenges of data quality in the big data era

Features of big data

Because big data has new features, its data quality also faces many challenges. The characteristics of big data can be summarized as the 4Vs: Volume, Velocity, Variety, and Value.[20] Volume refers to the tremendous size of the data, which is usually measured in terabytes or larger units. Velocity means that data are being generated at an unprecedented speed and must be handled in a timely manner. Variety indicates that big data includes all kinds of data types, which can be broadly divided into structured and unstructured data. Such heterogeneous data demand greater processing capabilities. Finally, Value refers to low value density. Value density is inversely proportional to total data size: the larger the data set, the smaller the proportion of valuable data it contains.
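As a formula, value density is the share of valuable data in the whole data set. The symbols here are chosen for illustration and are not the paper's notation:

$$\rho = \frac{V_{\text{valuable}}}{V_{\text{total}}}$$

The inverse proportionality stated above holds under the assumption that $V_{\text{valuable}}$ stays roughly constant as $V_{\text{total}}$ grows. For example, 1 TB of valuable data inside 1 PB ($2^{10}$ TB) gives $\rho = 2^{-10} \approx 0.1\%$. The same 1 TB inside 1 EB gives $\rho = 2^{-20}$.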

The challenges of data quality

Because of the 4V characteristics, extracting high-quality, genuine data from massive, variable, and complicated data sets has become an urgent issue for enterprises that use and process big data. At present, big data quality faces the following challenges:

1. The diversity of data sources brings abundant data types and complex data structures and increases the difficulty of data integration.

In the past, enterprises used only the data generated by their own business systems, such as sales and inventory data. Now, the data that enterprises collect and analyze go well beyond this scope. Big data sources are very diverse, including: 1) data sets from the Internet and mobile Internet;[21] 2) data from the Internet of Things; 3) data collected by various industries; and 4) scientific experimental and observational data,[22] such as high-energy physics experimental data, biological data, and space observation data. These sources produce rich data types. The first type is unstructured data, for example, documents, video, and audio. The second type is semi-structured data, including software packages/modules, spreadsheets, and financial reports. The third type is structured data. Unstructured data account for more than 80% of all existing data.
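The three data types can be made concrete with a rough heuristic. This is not the paper's classification and would misjudge many real inputs, such as spreadsheets or binary media. The sketch assumes each item arrives as text. It treats a JSON object whose keys exactly match a fixed schema as structured, any other JSON object or array as semi-structured, and everything else as unstructured. The function name and schema are hypothetical.

```python
import json


def classify(payload: str, schema: set[str]) -> str:
    """Hypothetical heuristic mapping a text payload to one of three data types."""
    try:
        parsed = json.loads(payload)
    except json.JSONDecodeError:
        return "unstructured"                 # free text, logs, transcripts, ...
    if isinstance(parsed, dict) and set(parsed) == schema:
        return "structured"                   # fits the fixed schema exactly
    if isinstance(parsed, (dict, list)):
        return "semi-structured"              # self-describing, but no fixed schema
    return "unstructured"


ORDER_SCHEMA = {"id", "qty"}
print(classify('{"id": 1, "qty": 5}', ORDER_SCHEMA))                  # structured
print(classify('{"id": 1, "note": "rush"}', ORDER_SCHEMA))            # semi-structured
print(classify("Customer called about a late order.", ORDER_SCHEMA))  # unstructured
```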

For enterprises, obtaining big data with complex structures from different sources and integrating them effectively is a daunting task.[23] Data from different sources often conflict with, or are inconsistent with, one another. When the data volume is small, the data can be checked by manual search or by programs, or even by ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) processes. However, these methods are of little use for petabyte-level, or even exabyte-level, data volumes.
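At small scale, the kind of programmatic consistency check described above can be sketched as follows. It compares records that two sources hold under the same ID and reports every field whose values disagree. The source names, field names, and values are hypothetical. The comparison runs in memory, so at petabyte scale the same logic would have to run as a distributed keyed join, which this sketch does not attempt.

```python
from typing import Any


def find_conflicts(source_a: dict[str, dict[str, Any]],
                   source_b: dict[str, dict[str, Any]]) -> list[tuple[str, str, Any, Any]]:
    """Report (record_id, field, value_in_a, value_in_b) for every disagreeing field.

    Only records and fields present in both sources are compared.
    """
    conflicts = []
    for rid in source_a.keys() & source_b.keys():
        rec_a, rec_b = source_a[rid], source_b[rid]
        for field in rec_a.keys() & rec_b.keys():
            if rec_a[field] != rec_b[field]:
                conflicts.append((rid, field, rec_a[field], rec_b[field]))
    # (record_id, field) pairs are unique, so sorting on them is deterministic.
    return sorted(conflicts, key=lambda c: (c[0], c[1]))


# Hypothetical customer records from two business systems.
crm = {"c1": {"city": "Shanghai", "phone": "555-0100"}, "c2": {"city": "Kunming"}}
erp = {"c1": {"city": "Beijing", "phone": "555-0100"}, "c3": {"city": "Xi'an"}}
print(find_conflicts(crm, erp))  # [('c1', 'city', 'Shanghai', 'Beijing')]
```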

2. Data volume is tremendous, and it is difficult to judge data quality within a reasonable amount of time.

After the Industrial Revolution, the amount of information, dominated by text, doubled every ten years. After 1970, it doubled every three years. Today, the global amount of information can double every two years. In 2011, the amount of global data created and copied reached 1.8 ZB. It is difficult to collect, clean, and integrate such data and obtain the necessary high-quality data within a reasonable time frame. Because unstructured data make up a very high proportion of big data, converting them into structured types and processing them further takes a lot of time. This poses a great challenge to existing data processing techniques.
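A fixed doubling period means exponential growth: $V(t) = V_0 \cdot 2^{(t - t_0)/T}$. The sketch below projects the 2011 figure of 1.8 ZB forward with the two-year doubling period given above. Assuming a constant doubling period is a simplification, but the result for 2020, about 40.7 ZB, is close to the IDC forecast of 40 ZB cited in the Introduction. The function name is illustrative.

```python
def project_volume(start_zb: float, start_year: int, target_year: int,
                   doubling_years: float) -> float:
    """Project data volume (ZB), assuming it doubles every `doubling_years` years."""
    return start_zb * 2 ** ((target_year - start_year) / doubling_years)


print(round(project_volume(1.8, 2011, 2020, 2), 2))  # 40.73
```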

3. Data change very fast and the “timeliness” of data is very short, which places higher demands on processing technology.

Because big data change rapidly, the “timeliness” of some data is very short. If companies cannot collect the required data in real time, or if processing the data takes a very long time, they may end up with outdated and invalid information. Processing and analysis based on such data will produce useless or misleading conclusions, eventually leading governments or enterprises to make poor decisions. At present, real-time processing and analysis software for big data is still being developed or improved, and truly effective commercial products are few.
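One simple way to act on the timeliness concern is to check each record's collection time against a maximum acceptable age before it enters an analysis. The 30-minute window, the record IDs, and the function name below are hypothetical. The right window depends on the use case.

```python
from datetime import datetime, timedelta


def split_by_freshness(records: list[tuple[str, datetime]], now: datetime,
                       max_age: timedelta) -> tuple[list[str], list[str]]:
    """Split (record_id, collected_at) pairs into fresh and stale record IDs."""
    fresh, stale = [], []
    for record_id, collected_at in records:
        if now - collected_at <= max_age:
            fresh.append(record_id)
        else:
            stale.append(record_id)
    return fresh, stale


now = datetime(2015, 1, 1, 12, 0)
records = [("r1", datetime(2015, 1, 1, 11, 50)),  # 10 minutes old
           ("r2", datetime(2015, 1, 1, 9, 0))]    # 3 hours old
print(split_by_freshness(records, now, timedelta(minutes=30)))  # (['r1'], ['r2'])
```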

4. No unified, widely accepted data quality standards have yet been formed, either in China or internationally, and research on the quality of big data has only just begun.

To guarantee product quality and improve benefits to enterprises, the International Organization for Standardization (ISO) published the ISO 9000 standards in 1987. Today, more than 100 countries and regions around the world actively implement these standards. Their implementation promotes mutual understanding among enterprises in domestic and international trade and helps eliminate trade barriers. By contrast, although the study of data quality standards began in the 1990s, ISO did not publish the ISO 8000 data quality standards until 2011.[24] At present, more than 20 countries have participated in this standard, but it remains the subject of many disputes, and the standards still need to mature. At the same time, research on big data quality has only just begun, both in China and internationally, and there are as yet few results.

Quality criteria of big data

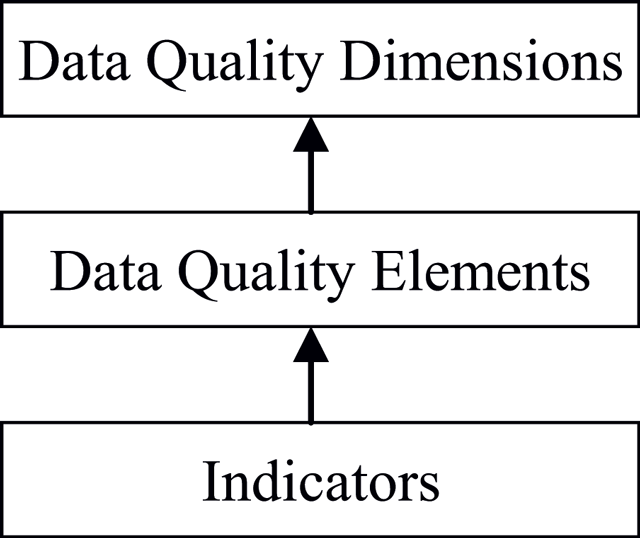

Big data is a new concept, and academia has not yet agreed on a uniform definition of big data quality or its quality criteria. Definitions of data quality differ across the literature, but one thing is certain. Data quality depends not only on the data's own features but also on the business environment in which the data are used, including business processes and business users. Only data that suit the relevant uses and meet requirements can be considered qualified (or good-quality) data. Data quality standards are usually developed from the perspective of data producers. In the past, data consumers were either direct or indirect data producers, which helped ensure data quality. In the big data era, however, data come from many diverse sources, and data users are not necessarily data producers, so data quality is very difficult to measure. Therefore, we propose a hierarchical data quality standard from the perspective of data users, as shown in Figure 1.
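The user-centered view means that the same data set can pass for one user and fail for another. The sketch below illustrates this with two hypothetical requirements, a minimum completeness and a maximum age. All class names, fields, and thresholds are invented for the example and are not part of the paper's framework.

```python
from dataclasses import dataclass


@dataclass
class DatasetProfile:
    completeness: float  # share of non-missing values, 0..1
    age_hours: float     # time since the data were last refreshed


@dataclass
class UserRequirement:
    min_completeness: float
    max_age_hours: float


def fit_for_use(profile: DatasetProfile, req: UserRequirement) -> bool:
    """Judge quality relative to one user's requirements, not in the abstract."""
    return (profile.completeness >= req.min_completeness
            and profile.age_hours <= req.max_age_hours)


sales = DatasetProfile(completeness=0.97, age_hours=20)
monthly_report = UserRequirement(min_completeness=0.95, max_age_hours=72)
fraud_alerts = UserRequirement(min_completeness=0.90, max_age_hours=1)
print(fit_for_use(sales, monthly_report), fit_for_use(sales, fraud_alerts))  # True False
```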


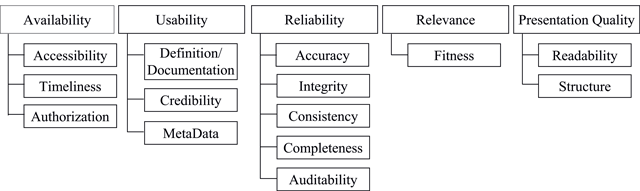

We chose commonly accepted, widely used data quality dimensions as big data quality standards and redefined their basic concepts based on actual business needs. Each dimension was then divided into typical elements associated with it, and each element has its own corresponding quality indicators. Together, these form hierarchical quality standards that can be used to evaluate big data. Figure 2 shows a universal two-layer data quality standard. Some detailed data quality indicators are given in Table 1.
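The dimension, element, and indicator hierarchy can be represented as nested records and scored bottom-up. This section does not specify how scores are aggregated. The sketch therefore assumes plain averages within each level and a user-chosen weighted average across dimensions. The dimension and element names and all scores in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Element:
    name: str
    indicator_scores: list[float]  # each indicator scored on 0..1

    def score(self) -> float:
        return sum(self.indicator_scores) / len(self.indicator_scores)


@dataclass
class Dimension:
    name: str
    elements: list[Element]

    def score(self) -> float:
        return sum(e.score() for e in self.elements) / len(self.elements)


def overall(dimensions: list[Dimension], weights: dict[str, float]) -> float:
    """Weighted average of dimension scores; the weights reflect one user's priorities."""
    total_weight = sum(weights[d.name] for d in dimensions)
    return sum(weights[d.name] * d.score() for d in dimensions) / total_weight


# Hypothetical two-layer standard with made-up indicator scores.
dims = [
    Dimension("reliability", [Element("accuracy", [1.0, 0.5]),
                              Element("completeness", [1.0])]),
    Dimension("availability", [Element("timeliness", [0.4])]),
]
weights = {"reliability": 3.0, "availability": 1.0}
print(round(overall(dims, weights), 5))  # 0.75625
```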


References

1. Meng, X.; Ci, X. (2013). "Big Data Management: Concepts, Techniques and Challenges". Journal of Computer Research and Development 50 (1): 146–169. http://crad.ict.ac.cn/EN/abstract/abstract715.shtml.

2. Gantz, J.; Reinsel, D.; Arend, C. (February 2013). "The Digital Universe in 2020: Big Data, Bigger Digital Shadows, and Biggest Growth in the Far East – Western Europe" (PDF). IDC. http://www.emc.com/collateral/analyst-reports/idc-digital-universe-western-europe.pdf. Retrieved February 2013.

3. Li, G.J.; Chen, X.Q. (2012). "Research Status and Scientific Thinking of Big Data". Bulletin of Chinese Academy of Sciences 27 (6): 648–657.

4. "Big Data". Nature. Macmillan Publishers Limited. 3 September 2008. http://www.nature.com/news/specials/bigdata/index.html. Retrieved 5 November 2013.

5. "Dealing with Data". Science. American Association for the Advancement of Science. 11 February 2011. http://www.sciencemag.org/site/special/data/. Retrieved 5 November 2013.

6. Feng, Z.Y.; Guo, X.H.; Zeng, D.J. et al. (2013). "On the research frontiers of business management in the context of Big Data". Journal of Management Sciences in China 16 (1): 1–9.

7. "Quality management system - Fundamentals and vocabulary (GB/T 19000-2008/ISO 9000:2005)" (PDF). General Administration of Quality Supervision. 29 October 2008. http://sc.ccic.com/uploadfile/2014/0811/20140811053122619.pdf.

8. Wang, R.Y.; Strong, D.M. (1996). "Beyond accuracy: What data quality means to data consumers". Journal of Management Information Systems 12 (4): 5–33. doi:10.1080/07421222.1996.11518099.

9. Crosby, P.B. (1979). Quality Is Free: The Art of Making Quality Certain. McGraw-Hill Companies. pp. 309. ISBN 978-0070145122.

10. Alexander, J.E.; Tate, M.A. (1999). Web Wisdom: How to Evaluate and Create Information Quality on the Web. Hillsdale, NJ: L. Erlbaum Associates, Inc. ISBN 0805831231.

11. Katerattanakul, P.; Siau, K. (1999). "Measuring information quality of web sites: Development of an instrument". ICIS '99: Proceedings of the 20th International Conference on Information Systems: 279–285. http://aisel.aisnet.org/icis1999/25/.

12. Zhu, X.; Gauch, S. (2000). "Incorporating quality metrics in centralized/distributed information retrieval on the World Wide Web". SIGIR '00: Proceedings of the 23rd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval: 288–295. doi:10.1145/345508.345602.

13. Shanks, G.; Corbitt, B. (1999). "Understanding data quality: Social and cultural aspects" (PDF). Proceedings of the 10th Australasian Conference on Information Systems: 785–797. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.5.4092&rep=rep1&type=pdf.

14. Knight, S.; Burn, J. (2005). "Developing a Framework for Assessing Information Quality on the World Wide Web" (PDF). Information Science Journal 8: 159–171. http://inform.nu/Articles/Vol8/v8p159-172Knig.pdf.

15. Karr, A.F.; Sanil, A.; Sacks, J.; Elmagarmid, A. (2001). "Workshop Report: Affiliates Workshop on Data Quality". National Institute of Statistical Sciences. http://www.niss.org/publications/workshop-report-affiliates-workshop-data-quality.

16. Cao, J.J.; Diao, X.C.; Wang, T. et al. (2005). "Research on Some Basic Problems in Data Quality Control". Control and Automation 26 (9): 12–14. doi:10.3969/j.issn.2095-6835.2010.09.005.

17. Zong, W.; Wu, F. (2013). "The Challenge of Data Quality in the Big Data Age". Journal of Xi’an Jiaotong University (Social Sciences) 33 (5): 38–43. http://caod.oriprobe.com/articles/39632886/The_Challenge_of_Data_Quality_in_the_Big_Data_Age.htm.

18. "Data Quality Evaluation Method and Index System" (PDF). Data Application Environment Construction and Service of Chinese Academy of Sciences. 2009. http://www.csdb.cn/upload/101205/1012052021536150.pdf. Retrieved 30 October 2013.

19. Song, M.; Qin, Z. (2007). "Reviews of Foreign Studies on Data Quality Management". Journal of Information 2: 7–9.

20. Katal, A.; Wazid, M.; Goudar, R.H. (2013). "Big Data: Issues, Challenges, Tools and Good Practices". 2013 Sixth International Conference on Contemporary Computing: 404–409. doi:10.1109/IC3.2013.6612229.

21. Li, J.Z.; Liu, X.M. (2013). "An Important Aspect of Big Data: Data Usability". Journal of Computer Research and Development 50 (6): 1147–1162. http://crad.ict.ac.cn/EN/abstract/abstract1252.shtml.

22. Demchenko, Y.; Grosso, P.; de Laat, C.; Membrey, P. (2013). "Addressing Big Data Issues in Scientific Data Infrastructure". 2013 International Conference on Collaboration Technologies and Systems: 48–55. doi:10.1109/CTS.2013.6567203.

23. McGilvray, D. (2008). Executing Data Quality Projects: Ten Steps to Quality Data and Trusted Information. Morgan Kaufmann. pp. 352. ISBN 9780123743695.

24. Wang, J.L.; Li, H.; Wang, Q. (2010). "Research on ISO 8000 Series Standards for Data Quality". Standard Science 12: 44–46.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added. One reference to the Data Quality Evaluation Method and Index System (18) no longer exists on the web and couldn't be found on the Internet Archive.