Journal:Judgements of research co-created by generative AI: Experimental evidence

| Full article title | Judgements of research co-created by generative AI: Experimental evidence |
|---|---|
| Journal | Economics and Business Review |
| Author(s) | Niszczota, Paweł; Conway, Paul |
| Author affiliation(s) | Poznań University of Economics and Business, University of Southampton |
| Primary contact | Email: pawel dot niszczota at ue dot poznan dot pl |
| Year published | 2023 |
| Volume and issue | 9(2) |
| Page(s) | 101–114 |
| DOI | 10.18559/ebr.2023.2.744 |
| ISSN | 2450-0097 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://journals.ue.poznan.pl/ebr/article/view/744 |
| Download | https://journals.ue.poznan.pl/ebr/article/view/744/569 (PDF) |

Abstract

The introduction of ChatGPT has fuelled a public debate on the appropriateness of using generative artificial intelligence (AI) (large language models or LLMs) in work, including a debate on how they might be used (and abused) by researchers. In the current work, we test whether delegating parts of the research process to LLMs leads people to distrust researchers and to devalue their scientific work. Participants (N = 402) considered a researcher who delegates elements of the research process to a PhD student or an LLM and rated three aspects of such delegation. First, they rated whether it is morally appropriate to do so. Second, they judged whether, once the scientist had decided to delegate the research process, they would trust that scientist to oversee future projects. Third, they rated the expected accuracy and quality of the output from the delegated research process. Our results show that people judged delegating to an LLM as less morally acceptable than delegating to a human (d = –0.78). Delegation to an LLM also decreased trust in the scientist to oversee future research projects (d = –0.80), and people thought the results would be less accurate and of lower quality (d = –0.85). We discuss how this devaluation might translate into the underreporting of generative AI use.

Keywords: trust in science, metascience, ChatGPT, GPT, large language models, generative AI, experiment

Introduction

The introduction of ChatGPT appears to have been a tipping point for large language models (LLMs). LLMs, such as those released by OpenAI (e.g., ChatGPT and GPT-4)[1][2] but also by major technology firms such as Google and Meta, are expected to affect the work of many white-collar professions.[3][4][5] This impact extends to science: top academic journals such as Nature and Science have already acknowledged the impact artificial intelligence (AI) has on the scientific profession and have started setting out guidelines on how to use LLMs.[6][7] For example, listing ChatGPT as a co-author was deemed inappropriate.[6][8] However, the use of such models is not explicitly forbidden; rather, it is suggested that researchers report which parts of the research process ChatGPT assisted them with.

Important questions remain regarding how scientists employing LLMs in their work are perceived by society.[9] Do people view the use of LLMs as diminishing the importance, value, and worth of scientific efforts, and if so, which elements of the scientific process does LLM usage most impact? We examine these questions with a study on the perceptions of scientists who rely on an LLM for various aspects of the scientific process.

We anticipated that, overall, people would view delegating aspects of the research process to an LLM as morally worse than delegating them to a human, and that doing so would reduce trust in the delegating scientist. Moreover, people may view creativity as a core human trait, especially in comparison to AI,[10] and some aspects of the research process, such as idea generation and prior literature synthesis,[11] may entail more creativity than others, such as data identification and preparation, testing framework determination and implementation, or results analysis. We therefore tested the exploratory prediction that the effect of delegating to AI versus a human on moral ratings and trust might differ across these aspects.

We contribute to an emerging literature exploring how large language models can assist research in economics and financial economics. Valuable discussions of the use of LLMs in economic research can be found in Korinek[12] and Wach et al.[13] A noteworthy empirical study is that of Dowling and Lucey,[14] who asked financial academics to rate research ideas on cryptocurrency produced with ChatGPT; the academics judged the output to be of fair quality.

Research questions

We ask two research questions concerning laypeople’s perception of the use of LLMs in science. First, we tested the hypothesis that people will perceive research assistance from LLMs less favorably than the very same assistance from a junior human researcher. In both cases, we assume that the assistance is minor enough not to warrant co-authorship. This levels the playing field for human and AI assistance, as prominent journals have already stated that LLMs cannot be listed as co-authors,[6] a practice some papers had already adopted.[15]

Second, we examined in which aspects of the research process the prospective human-AI disparities are strongest. If, as we hypothesize, delegating to AI is perceived less favorably, then delegating those aspects to AI would have the greatest potential to devalue the work done by scientists.

Participants

To assess the consequences of delegating research processes to LLMs, we recruited 441 participants from Prolific.[16] Prolific is an online crowdsourcing platform used to collect primary data from humans, including experimental data.[17] For a long time, Amazon Mechanical Turk was the dominant online labor market, i.e., a marketplace where individuals can complete tasks, such as participating in a research study, for compensation.[18] However, our experience, as well as some research, indicates that data gathered using Prolific are of higher quality,[19] and thus we decided to use this platform. To further ensure high-quality data and a relatively homogeneous sample, we recruited participants who had an approval rating of 98% or higher, were located and born in the United States, and whose first language was English. As preregistered, 39 participants who did not correctly answer both attention check questions were excluded, leaving a final sample of 402 (48.3% female, 49.8% male, and 1.9% non-binary or undisclosed). The mean age of participants was 42.0 years (SD = 13.9). Of the final sample, 97.5% had heard of ChatGPT, and 38.1% had interacted with it.

The study was pre-registered at https://aspredicted.org/GVL_MR5. Data and materials are available at https://osf.io/fsavc/. The data file includes a short description of all variables used in the analysis.

Experimental design

We conducted a mixed-design experiment. The delegation target was manipulated between subjects: participants were randomly allocated to one of two conditions, in which they rated a distinguished senior researcher who delegated a part of the research process either to another person, specifically a PhD student with two years’ experience in the area (human condition), or to an LLM such as ChatGPT (LLM condition). The research process was varied within subjects: each participant rated the effect of such delegation for each of the five parts of the research process discussed in Cargill and O’Connor:[20] idea generation, prior literature synthesis, data identification and preparation, testing framework determination and implementation, and results analysis. Notably, Dowling and Lucey[14] used all of these except results analysis to assess the quality of ChatGPT’s output. We rephrased the last two research processes for clarity.

For each research process, the participants rated the extent to which they agreed with three items, on a Likert scale of 1 (strongly disagree) to 7 (strongly agree):

- I think that it is morally acceptable for a scientist to delegate—in such a scenario—the following part of the research process (after giving credit in the acknowledgments);

- I think that a scientist that delegated the part of the research process shown below should be trusted to oversee future research projects; and

- I think that delegating this part of the research process will produce correct output and stand up to scientific scrutiny (e.g., results would be robust, reliable, and correctly interpreted).

We expected the first two items to correlate with one another but not necessarily with the third. Although people might acknowledge that AI can outperform humans at some tasks, they often exhibit an aversion toward the use of algorithms,[21] so moral and trust judgments need not track perceived accuracy.

Given that each participant rated three different items for five different research processes, we obtained fifteen data points per participant. The main analysis (see Table 2 in the next section) is performed at several levels: on the pooled dataset (15 data points per participant), and separately for (1) each of the three items and (2) each of the five research processes.
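The 3 × 5 within-subject grid described above can be pictured as a long-format table with one row per participant × item × process. The sketch below builds those rows for a single participant; the column names and short item labels are hypothetical shorthand, not the variable names in the authors' OSF data file.

```python
from itertools import product

# Hypothetical short labels for the three rated items (1-7 Likert scale).
ITEMS = ["moral", "trust", "accuracy"]

# The five research processes, following Cargill and O'Connor's split.
PROCESSES = [
    "idea generation",
    "prior literature synthesis",
    "data identification and preparation",
    "testing framework determination and implementation",
    "results analysis",
]


def design_rows(participant_id, condition):
    """Build one long-format row per item x process for a single participant.

    `condition` is the between-subjects factor ("human" or "llm"); the
    rating is left as None until the 1-7 response is filled in.
    """
    if condition not in ("human", "llm"):
        raise ValueError(f"unknown condition: {condition!r}")
    return [
        {
            "participant": participant_id,
            "condition": condition,  # constant within a participant
            "item": item,            # within-subjects factor 1
            "process": process,      # within-subjects factor 2
            "rating": None,
        }
        for item, process in product(ITEMS, PROCESSES)
    ]


rows = design_rows(participant_id=1, condition="llm")
print(len(rows))  # 15
```

Stacking such rows for all participants would give 402 × 15 = 6,030 rows if no responses were missing; the item-level and process-level analyses are then subsets of the same table.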

Results

Preliminary analysis

Before presenting the regression results, we examined how the answers correlated with each other. As expected, moral acceptability ratings (with correlations based on each participant’s mean ratings across the five research processes) correlated highly with trust to oversee future projects, r = 0.81, p < 0.001. However, moral acceptability ratings also correlated highly with accuracy ratings, r = 0.81, p < 0.001. Similarly, trust ratings correlated highly with accuracy, r = 0.80, p < 0.001.

However, it remains possible that the relationship between these perceptions was weaker when the scientist delegated to an LLM instead of a human. To test this, we conducted regression analyses treating one item as the dependent variable and another as the independent variable, adding an interaction between the independent variable and a dummy variable coding the delegation condition. The results, presented in Table 1, suggest that the relationships between moral acceptability, trust, and accuracy either become stronger when delegating to an LLM (rather than a human) or do not differ statistically. Therefore, people’s evaluations of moral acceptability, trust, and accuracy were interrelated in a similar manner in each condition.

Pre-registered analysis

We present the results of the pre-registered analysis in Table 2. Consistent with the hypothesis, people rated delegating the research process to an LLM as less morally acceptable and reported lower trust towards this scientist to oversee future research projects. Moreover, people also rated delegating to an LLM as producing less correct output. The effect of delegating to an LLM (relative to delegating the same to a PhD student) was similar for all three items, and thus results from the combined dataset (“All items and processes”) can serve as a benchmark for future studies.

For readers accustomed to Cohen’s d[22], the effect sizes (and 95% confidence intervals) of delegating to an LLM instead of a human were large: d = –0.78 [–0.99, –0.58] for moral acceptability, d = –0.80 [–1.00, –0.60] for trust, and d = –0.85 [–1.06, –0.65] for accuracy.
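For reference, Cohen’s d divides the mean difference between conditions by the pooled standard deviation, and a common large-sample approximation gives its standard error as sqrt((n1 + n2)/(n1·n2) + d²/(2(n1 + n2))). The sketch below implements both. This section does not report the per-condition group sizes, so the usage line assumes a hypothetical even split of 201 per condition; under that assumption the formula gives intervals close to, though not always identical with, those reported above (for trust, d = –0.80 gives about [–1.00, –0.60]). The authors’ intervals may come from their regression models rather than from this formula.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def cohens_d(treatment, control):
    """Cohen's d: mean difference (treatment - control) over the pooled SD."""
    n1, n2 = len(treatment), len(control)
    pooled_var = ((n1 - 1) * variance(treatment)
                  + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


def d_confidence_interval(d, n1, n2, level=0.95):
    """Large-sample normal-approximation confidence interval for d."""
    se = sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    return d - z * se, d + z * se


# Hypothetical even split of N = 402; actual group sizes may differ.
low, high = d_confidence_interval(-0.80, 201, 201)
print(f"[{low:.2f}, {high:.2f}]")  # [-1.00, -0.60]
```

Passing the LLM condition as `treatment` gives negative values when ratings are lower after delegation to an LLM, matching the sign convention used above.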

Exploratory analysis

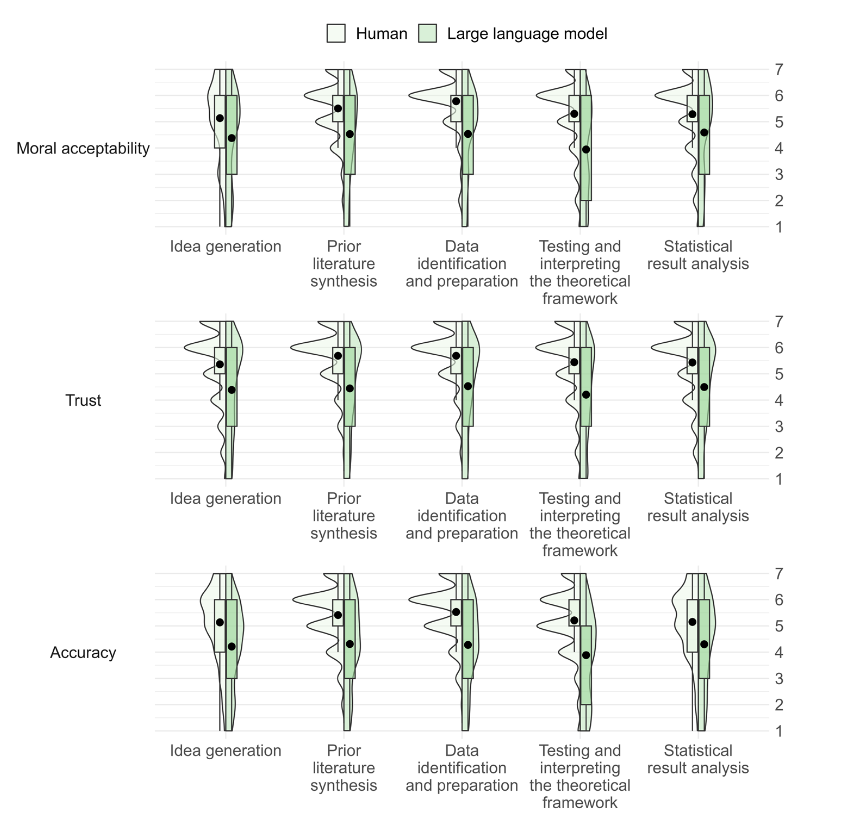

Table 2 (above) and Figure 1 present how ratings varied across the five research processes and conditions. The adverse effect of delegating to an LLM was strongest for the “Testing and interpreting the theoretical framework” process and weakest for the “Statistical result analysis” process. However, the pattern held for each of the five research processes, with effect sizes ranging from d = –0.81 (a large effect) to d = –0.51 (a medium effect). Therefore, despite some variation across research processes, people judged delegating any process to an LLM as worse than delegating it to a human.
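The “large” and “medium” labels follow Cohen’s conventional benchmarks for |d| of roughly 0.2 (small), 0.5 (medium), and 0.8 (large). A minimal sketch, treating those rules of thumb as hard cut-offs (Cohen offered them as rough guides), with “negligible” below 0.2 as an illustrative label of our own:

```python
def cohen_label(d):
    """Label an effect size with Cohen's rule-of-thumb cut-offs on |d|."""
    size = abs(d)  # the sign only gives the direction of the effect
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


# Endpoints of the range across the five research processes.
for d in (-0.81, -0.51):
    print(d, cohen_label(d))  # -0.81 large, then -0.51 medium
```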

Discussion

Overall, these results suggest that people hold clear, strongly negative views of scientists delegating any aspect of the research process to ChatGPT or similar LLMs, compared to delegating it to a PhD student. They rated delegation to an LLM as less morally acceptable, judged a scientist choosing such delegation as less trustworthy to oversee future projects, and rated the output of such delegation as less accurate and of lower quality. These ratings held across all five aspects of the research process identified in past work: idea generation, prior literature synthesis, data identification and preparation, testing framework determination and implementation, and results analysis.[20] Although people differentiated most strongly between LLMs and human researchers for testing and interpreting the theoretical framework, and least strongly for statistical result analysis, the effect sizes for all five were substantial, with Cohen’s d values that would conventionally be described as medium to large,[22] but that can be considered large to very large[25] relative to the effect sizes typically observed in psychological research.

Note that, as expected, moral ratings and trust in scientists were highly correlated, but both also correlated highly with perceptions of accuracy and scientific quality. One possible explanation for this pattern is that people think delegating to LLMs is immoral and untrustworthy precisely because they view the output of such programs as scientifically questionable. This leaves open the possibility that, as AI advances and the perceived scientific quality of LLMs increases, people may come to view delegation to such programs as less problematic.

Nonetheless, these results have clear implications for researchers considering the use of ChatGPT or other LLMs. At least with LLMs in their current state, people view such delegation as seriously problematic: immoral, untrustworthy, and scientifically unsound. This view extends to all aspects of the research process. Therefore, there does not appear to be a widely approved way for researchers to incorporate LLMs into the research process without compromising their work’s perceived quality and integrity.

It is worth noting that the current work examined the case where the researcher honestly reports the use of the LLM in the acknowledgments section, as recommended by leading journals such as Science.[6] Moreover, in the human condition, which essentially served as the control condition, the researcher who delegated to a PhD student credited the student only in the acknowledgments section rather than as a co-author. Arguably, people may view this as ethically questionable, as the graduate student would have earned authorship under common ethical guidelines, such as those published by the American Psychological Association.[26] Therefore, the current findings represent a plausible best-case scenario: people would plausibly react even more negatively to a researcher who used LLMs without disclosing it, essentially taking credit for the output of an algorithm, when compared with a researcher who gives their PhD student colleague full authorship credit. These findings underscore the depth of the antipathy toward researchers using LLMs at this time.

Limitations

As with all studies, the current work has some limitations. First, we compared delegation to LLMs with delegation to a second-year PhD student, a human with presumably sufficient competence to normally warrant authorship in scientific publications. Naturally, the choice of comparison target should affect responses. For example, people may think that LLMs will produce more accurate output than, say, a four-year-old or someone who is illiterate. Future work could test how people perceive LLMs compared to a wide range of targets. However, we elected to begin by comparing LLMs against someone who would likely otherwise participate in the scientific process.

Second, we examined the perceptions of laypeople who may have only a vague familiarity with or understanding of the scientific process. It remains to be seen whether journal editors, reviewers, senior university officials, and others who intimately understand the research process and evaluate scientists share the same views. It may be that, with such familiarity, people consider it more permissible to use LLMs for specific aspects such as data analysis. Findings might also differ under a different split of the research process, perhaps one that includes more fine-grained elements such as generating figures based on data computed by humans.[14][20]

Likewise, we examined only perceptions of a scientist operating in a particular area, namely a researcher specializing in economics, finance, and psychology. It remains possible that people hold less-negative views of LLM usage in other branches of science, e.g., perhaps for papers in astrophysics requiring complex calculations. Along the same lines, results may be moderated by the perceived goals of the scientist; for example, it seems likely that people would not hold the same negative impression of research specifically designed to illustrate the uses and limitations of ChatGPT itself.[15]

Moreover, we asked people about a hypothetical scientist. It remains possible that, when asked about a specific (e.g., famous, eminent, trusted) scientist, people would show a lower aversion to LLM use, perhaps because they may infer that this trusted scientist would only use LLMs if they had specialist knowledge that doing so was worthwhile and unlikely to corrupt the research process. In other words, people’s inferences about the use of LLMs may depend on their prior knowledge and evaluations of a specific scientist.

Finally, the current work examined American participants. Results may vary in other populations; for example, Americans tend to view AI more critically than people in China.[27] Furthermore, not all scientists have equal access to state-of-the-art language models. For example, people in China cannot access these models,[28] and Italy banned access to ChatGPT, at least temporarily.[29] As such, perceptions of research delegated to LLMs may vary with access to such models or with which models are popular or available.

Conclusions

Overall, the current findings suggest that people hold strongly negative views of delegating any aspect of the research process to LLMs such as ChatGPT, compared to delegating it to a junior human scientist: people rated doing so as more immoral and less trustworthy, and rated the results as less accurate and of lower quality. These findings held for all five aspects of the research process, from idea generation to data analysis. Therefore, researchers should exercise caution when considering whether to incorporate ChatGPT or other LLMs into their research. It appears that, even when such practices are disclosed according to current standards, they may substantially reduce perceptions of scientific quality and integrity.

Acknowledgements

Funding

This research was supported by grant 2021/42/E/HS4/00289 from the National Science Centre, Poland.

Conflict of interest

None stated.

References

1. Schulman, J.; Zoph, B.; Kim, C. et al. (30 November 2022). "Introducing ChatGPT". OpenAI. https://openai.com/blog/chatgpt.

2. OpenAI; Achiam, Josh; Adler, Steven; Agarwal, Sandhini; Ahmad, Lama; Akkaya, Ilge; Aleman, Florencia Leoni; Almeida, Diogo et al. (18 December 2023). "GPT-4 Technical Report". arXiv:2303.08774 [cs]. doi:10.48550/arxiv.2303.08774. http://arxiv.org/abs/2303.08774.

3. Alper, Sinan; Yilmaz, Onurcan (1 April 2020). "Does an Abstract Mind-Set Increase the Internal Consistency of Moral Attitudes and Strengthen Individualizing Foundations?". Social Psychological and Personality Science 11 (3): 326–335. doi:10.1177/1948550619856309. ISSN 1948-5506. http://journals.sagepub.com/doi/10.1177/1948550619856309.

4. Eloundou, Tyna; Manning, Sam; Mishkin, Pamela; Rock, Daniel (21 August 2023). "GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models". arXiv:2303.10130 [cs, econ, q-fin]. doi:10.48550/arxiv.2303.10130. http://arxiv.org/abs/2303.10130.

5. Korzynski, Pawel; Mazurek, Grzegorz; Altmann, Andreas; Ejdys, Joanna; Kazlauskaite, Ruta; Paliszkiewicz, Joanna; Wach, Krzysztof; Ziemba, Ewa (30 May 2023). "Generative artificial intelligence as a new context for management theories: analysis of ChatGPT". Central European Management Journal 31 (1): 3–13. doi:10.1108/CEMJ-02-2023-0091. ISSN 2658-2430. https://www.emerald.com/insight/content/doi/10.1108/CEMJ-02-2023-0091/full/html.

6. Thorp, H. Holden (27 January 2023). "ChatGPT is fun, but not an author". Science 379 (6630): 313. doi:10.1126/science.adg7879. ISSN 0036-8075. https://www.science.org/doi/10.1126/science.adg7879.

7. "Tools such as ChatGPT threaten transparent science; here are our ground rules for their use". Nature 613 (7945): 612. 26 January 2023. doi:10.1038/d41586-023-00191-1. ISSN 0028-0836. https://www.nature.com/articles/d41586-023-00191-1.

8. Stokel-Walker, Chris (26 January 2023). "ChatGPT listed as author on research papers: many scientists disapprove". Nature 613 (7945): 620–621. doi:10.1038/d41586-023-00107-z. ISSN 0028-0836. https://www.nature.com/articles/d41586-023-00107-z.

9. Dwivedi, Yogesh K.; Kshetri, Nir; Hughes, Laurie; Slade, Emma Louise; Jeyaraj, Anand; Kar, Arpan Kumar; Baabdullah, Abdullah M.; Koohang, Alex et al. (1 August 2023). "Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy". International Journal of Information Management 71: 102642. doi:10.1016/j.ijinfomgt.2023.102642. https://linkinghub.elsevier.com/retrieve/pii/S0268401223000233.

10. Cha, Young-Jae; Baek, Sojung; Ahn, Grace; Lee, Hyoungsuk; Lee, Boyun; Shin, Ji-eun; Jang, Dayk (1 February 2020). "Compensating for the loss of human distinctiveness: The use of social creativity under Human–Machine comparisons". Computers in Human Behavior 103: 80–90. doi:10.1016/j.chb.2019.08.027. https://linkinghub.elsevier.com/retrieve/pii/S0747563219303140.

11. King, Michael (7 April 2023). "Can GPT-4 formulate and test a novel hypothesis? Yes and no". TechRxiv. doi:10.36227/techrxiv.22517278.v1. https://www.techrxiv.org/doi/full/10.36227/techrxiv.22517278.v1.

12. Korinek, Anton (1 February 2023). Language Models and Cognitive Automation for Economic Research. NBER Working Paper w30957. Cambridge, MA: National Bureau of Economic Research. doi:10.3386/w30957. http://www.nber.org/papers/w30957.pdf.

13. Wach, Krzysztof; Duong, Cong Doanh; Ejdys, Joanna; Kazlauskaitė, Rūta; Korzynski, Pawel; Mazurek, Grzegorz; Paliszkiewicz, Joanna; Ziemba, Ewa (2023). "The dark side of generative artificial intelligence: A critical analysis of controversies and risks of ChatGPT". Entrepreneurial Business and Economics Review 11 (2): 7–30. doi:10.15678/EBER.2023.110201. https://eber.uek.krakow.pl/index.php/eber/article/view/2113.

14. Dowling, Michael; Lucey, Brian (1 May 2023). "ChatGPT for (Finance) research: The Bananarama Conjecture". Finance Research Letters 53: 103662. doi:10.1016/j.frl.2023.103662. https://linkinghub.elsevier.com/retrieve/pii/S1544612323000363.

15. Kung, Tiffany H.; Cheatham, Morgan; ChatGPT; Medenilla, Arielle; Sillos, Czarina; De Leon, Lorie; Elepaño, Camille; Madriaga, Maria et al. (20 December 2022). "Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models". medRxiv. doi:10.1101/2022.12.19.22283643. http://medrxiv.org/lookup/doi/10.1101/2022.12.19.22283643.

16. Palan, Stefan; Schitter, Christian (1 March 2018). "Prolific.ac—A subject pool for online experiments". Journal of Behavioral and Experimental Finance 17: 22–27. doi:10.1016/j.jbef.2017.12.004. https://linkinghub.elsevier.com/retrieve/pii/S2214635017300989.

17. Peer, Eyal; Brandimarte, Laura; Samat, Sonam; Acquisti, Alessandro (1 May 2017). "Beyond the Turk: Alternative platforms for crowdsourcing behavioral research". Journal of Experimental Social Psychology 70: 153–163. doi:10.1016/j.jesp.2017.01.006. https://linkinghub.elsevier.com/retrieve/pii/S0022103116303201.

18. Buhrmester, Michael; Kwang, Tracy; Gosling, Samuel D. (1 January 2011). "Amazon's Mechanical Turk: A New Source of Inexpensive, Yet High-Quality, Data?". Perspectives on Psychological Science 6 (1): 3–5. doi:10.1177/1745691610393980. ISSN 1745-6916. http://journals.sagepub.com/doi/10.1177/1745691610393980.

19. Peer, Eyal; Rothschild, David; Gordon, Andrew; Evernden, Zak; Damer, Ekaterina (29 September 2021). "Data quality of platforms and panels for online behavioral research". Behavior Research Methods 54 (4): 1643–1662. doi:10.3758/s13428-021-01694-3. ISSN 1554-3528. PMC8480459. PMID 34590289. https://link.springer.com/10.3758/s13428-021-01694-3.

20. Cargill, Margaret; O'Connor, Patrick (2021). Writing scientific research articles: strategy and steps (3rd ed.). Hoboken, NJ: Wiley-Blackwell. ISBN 978-1-119-71727-0.

21. Dietvorst, Berkeley J.; Simmons, Joseph P.; Massey, Cade (2015). "Algorithm aversion: People erroneously avoid algorithms after seeing them err". Journal of Experimental Psychology: General 144 (1): 114–126. doi:10.1037/xge0000033. ISSN 1939-2222. https://doi.apa.org/doi/10.1037/xge0000033.

22. Cohen, Jacob (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: L. Erlbaum Associates. ISBN 978-0-8058-0283-2.

23. Bates, Douglas; Mächler, Martin; Bolker, Ben; Walker, Steve (2015). "Fitting Linear Mixed-Effects Models Using lme4". Journal of Statistical Software 67 (1). doi:10.18637/jss.v067.i01. ISSN 1548-7660. http://www.jstatsoft.org/v67/i01/.

24. Kuznetsova, Alexandra; Brockhoff, Per B.; Christensen, Rune H. B. (2017). "lmerTest Package: Tests in Linear Mixed Effects Models". Journal of Statistical Software 82 (13). doi:10.18637/jss.v082.i13. ISSN 1548-7660. http://www.jstatsoft.org/v82/i13/.

25. Funder, David C.; Ozer, Daniel J. (1 June 2019). "Evaluating Effect Size in Psychological Research: Sense and Nonsense". Advances in Methods and Practices in Psychological Science 2 (2): 156–168. doi:10.1177/2515245919847202. ISSN 2515-2459. http://journals.sagepub.com/doi/10.1177/2515245919847202.

26. American Psychological Association (2020). Publication manual of the American Psychological Association (7th ed.). Washington, DC: American Psychological Association. ISBN 978-1-4338-3215-4.

27. Wu, Yuheng; Mou, Yi; Li, Zhipeng; Xu, Kun (1 March 2020). "Investigating American and Chinese Subjects’ explicit and implicit perceptions of AI-Generated artistic work". Computers in Human Behavior 104: 106186. doi:10.1016/j.chb.2019.106186. https://linkinghub.elsevier.com/retrieve/pii/S074756321930398X.

28. Wang, Simon H. (2 March 2023). "OpenAI — explain why some countries are excluded from ChatGPT". Nature 615 (7950): 34. doi:10.1038/d41586-023-00553-9. ISSN 0028-0836. https://www.nature.com/articles/d41586-023-00553-9.

29. Satariano, A. (31 March 2023). "ChatGPT Is Banned in Italy Over Privacy Concerns". The New York Times. https://www.nytimes.com/2023/03/31/technology/chatgpt-italy-ban.html.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, grammar, and punctuation. In some cases important information was missing from the references, and that information was added. The original lists references in alphabetical order; this version lists them in order of appearance, by design.