Journal:Learning health systems need to bridge the "two cultures" of clinical informatics and data science

| Full article title | Learning health systems need to bridge the "two cultures" of clinical informatics and data science |
|---|---|
| Journal | Journal of Innovation in Health Informatics |
| Author(s) | Scott, Philip J.; Dunscombe, Rachel; Evans, David; Mukherjee, Mome; Wyatt, Jeremy C. |
| Author affiliation(s) | University of Portsmouth, Salford Royal NHS Foundation Trust, British Computer Society, University of Edinburgh, University of Southampton |
| Primary contact | Email: Philip dot scott at port dot ac dot uk |
| Year published | 2018 |
| Volume and issue | 25(2) |
| Page(s) | 126–31 |
| DOI | 10.14236/jhi.v25i2.1062 |
| ISSN | 1687-8035 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.hindawi.com/journals/abi/2018/4059018/ |
| Download | http://downloads.hindawi.com/journals/abi/2018/4059018.pdf (PDF) |

Abstract

Background: United Kingdom (U.K.) health research policy and plans for population health management are predicated upon transformative knowledge discovery from operational "big data." Learning health systems require not only data but also feedback loops of knowledge into changed practice. This depends on knowledge management and application, which in turn depends upon effective system design and implementation. Biomedical informatics is the interdisciplinary field at the intersection of health science, social science, and information science and technology that spans this entire scope.

Issues: In the U.K., the separate worlds of health data science (bioinformatics, big data) and effective healthcare system design and implementation (clinical informatics, "digital health") have operated as "two cultures." Much National Health Service and social care data is of very poor quality. Substantial research funding is wasted on data cleansing or by producing very weak evidence. There is not yet a sufficiently powerful professional community or evidence base of best practice to influence the practitioner community or the digital health industry.

Recommendation: The U.K. needs increased clinical informatics research and education capacity and capability at much greater scale and ambition to be able to meet policy expectations, address the fundamental gaps in the discipline’s evidence base, and mitigate the absence of regulation. Independent evaluation of digital health interventions should be the norm, not the exception.

Conclusions: Policy makers and research funders need to acknowledge the existing gap between the two cultures and recognize that the full social and economic benefits of digital health and data science can only be realized by accepting the interdisciplinary nature of biomedical informatics and supporting a significant expansion of clinical informatics capacity and capability.

Keywords: big data, health informatics, bioinformatics, evidence-based practice, health policy, program evaluation, education, learning health systems

Introduction

Novelist and English physical chemist C.P. Snow famously characterized the gulf between what he called the "two cultures" of science and the humanities as a serious barrier to progress.[1] In our field, at least in the U.K., there appears to be an analogous gap between the policy and funding programs of data science (bioinformatics, "big data") and effective system design and implementation (clinical informatics, "digital health").

Data science in healthcare is subject to strong regulatory and ethical controls, minimum educational qualifications, well-established methodologies, mandatory professional accreditation, and evidence-based independent scrutiny. By contrast, digital health has minimal substantive regulation or ethical foundation, no specified educational requirements, weak methodologies, a contested evidence base, and negligible peer scrutiny. Yet the vision of big data is to base science on the data routinely produced by digital health systems.

This paper is focused on the U.K. context. We bring together experience from the frontline National Health Service (NHS) clinical informatics and epidemiological research to present the operational realities of health data quality and the implications for data science. We argue that to build a successful learning health system, data science and clinical informatics should be seen as two parts of the same discipline with a common mission. We commend the work in progress to bridge this cultural divide but propose that the U.K. needs to expand its clinical informatics research and education capacity and capability at much greater scale to address the substantial gaps in the evidence base and to realize the anticipated societal aims.

Routine clinical data is highly problematic

Data quality in frontline healthcare systems faces a dual challenge in our current environment. The first is the lack of standard data sets and adoption of reference values, though work is progressing in this area.[2] The second is poor data quality caused by unreliable adherence to processes[3] and poor system usability.[4] Experience of implementing clinical terminologies, including the Systematized Nomenclature of Medicine Clinical Terms (SNOMED CT) and Logical Observation Identifiers Names and Codes (LOINC), shows that our historical environment and the complexity of these standards consistently lead to long debate and significant implementation effort. So far, little progress has been made even by the "Global Digital Exemplars"[5] in implementing SNOMED CT in any depth. Further complexity is introduced when interoperating with other care settings such as social care and mental health. General practitioner (GP) data is far from consistent: different practices use different fields in different ways, and usage varies from clinician to clinician. Historically, the system has not forced users to standardize their recording or practice. This results in varying data quality between GP practices, which affects not just epidemiological studies but also operational processes. Failure to enter accurate data into healthcare systems occurs for a number of reasons, including poor usability, overly complex systems, lack of data input logic to check errors, and poor business change leadership.

Most epidemiological research with routine clinical data uses coded data rather than free text. Thus, there is an overreliance on the codes used during clinical consultations. A national evaluation of code usage in primary care in Scotland, taking allergy as an example, found that 50% of usage across more than two million consultations over seven years came from eight codes used to report for a GP incentive program, that 95% of usage came from 10% of the 352 allergy codes (n = 36), and that 21% of codes were never used.[6] A systematic review found variations in completeness (66%–96%) and correctness of morbidity recording across disease areas.[7] For instance, in primary care the quality of recording for diabetes is better than that for asthma.
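The kind of concentration reported above can be checked on any extract of coded consultations. The following is a minimal sketch, not the method of the cited evaluation: the function name `usage_concentration`, the `CODE00`-style identifiers, and the event counts are all hypothetical and purely illustrative.

```python
from collections import Counter


def usage_concentration(code_events, all_codes, top_fraction=0.10):
    """Summarise how concentrated usage is across a code set.

    code_events: iterable of codes, one entry per recorded use (hypothetical input).
    all_codes:   the full list of codes available to clinicians.
    """
    counts = Counter(code_events)
    total = sum(counts.values())
    # Rank every available code by usage, including codes never used (count 0).
    ranked = sorted((counts.get(code, 0) for code in all_codes), reverse=True)
    top_n = max(1, round(len(all_codes) * top_fraction))
    return {
        "top_n": top_n,
        "top_share": sum(ranked[:top_n]) / total if total else 0.0,
        "never_used_share": sum(1 for n in ranked if n == 0) / len(all_codes),
    }


# Synthetic data: 20 made-up codes, with usage dominated by two of them.
all_codes = [f"CODE{i:02d}" for i in range(20)]
events = ["CODE00"] * 60 + ["CODE01"] * 30 + ["CODE02"] * 6 + ["CODE03"] * 4
summary = usage_concentration(events, all_codes)
print(summary)
```

A summary like this only describes what was coded; it says nothing about information that clinicians recorded in free text instead.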

There are also changes in case definition and diagnostic criteria across disease areas over time, which are seldom mentioned in the databases. A recent primary care study found that the choice of codes can make a difference to outcome measures; for example, the incidence rate was found to be higher when non-diagnostic codes were used than when only diagnostic codes were used.[8] Because coding varies across GP practices, including practices with poor quality of recording in the analysis produced a significant difference in incidence rate and trends, with a lower incidence rate and decreasing trends when they were included. This study highlights the effect of miscoding and misclassification. It also shows that when data are missing, they might not be missing at random. Furthermore, codes needed during a consultation may have been unavailable, so that the information was recorded in free text instead. All these salient features of coded data are often ignored when interrogating patient databases for research and thus could lead to erroneous conclusions. No amount of data cleansing can resolve the inherent discrepancies in coded data.
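To make the sensitivity to code choice concrete, here is a small illustrative sketch. It is not the analysis used in the cited study: the code names (`DX_A`, `MONITOR_B`), the records, and the person-years figure are invented, and the function `incidence_per_1000` is a hypothetical helper.

```python
def incidence_per_1000(records, person_years, code_set):
    """Rate per 1,000 person-years of patients with at least one code in code_set."""
    # A set comprehension counts each patient once, however many matching codes they have.
    cases = {patient for patient, code in records if code in code_set}
    return 1000 * len(cases) / person_years


# Hypothetical code sets: a strict case definition and a broader one.
DIAGNOSTIC = {"DX_A"}
NON_DIAGNOSTIC = {"MONITOR_B"}

# Synthetic (patient_id, code) pairs; patient 4 has both kinds of code.
records = [
    (1, "DX_A"),
    (2, "DX_A"),
    (3, "MONITOR_B"),
    (4, "MONITOR_B"),
    (4, "DX_A"),
    (5, "OTHER"),
]
person_years = 500.0

strict = incidence_per_1000(records, person_years, DIAGNOSTIC)
broad = incidence_per_1000(records, person_years, DIAGNOSTIC | NON_DIAGNOSTIC)
print(strict, broad)  # 6.0 8.0
```

On the same records, the broader definition yields a higher rate, which is why a study's code list needs to be reported alongside its results.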

Additionally, confounding by indication or severity can be problematic, e.g., when severely ill patients receive more intensive treatment, resulting in poorer outcomes compared to other patients.[9] Clinical databases only comprise patients who attended healthcare services. A U.K. study showed the difference in asthma prevalence when asthma was reported from population surveys compared to clinical databases.[10] Besides quality of coded data, there could also be lack of key variables in clinical databases, since their primary purpose was not designed for research, as seen in, for example, the absence of diagnoses in outpatient hospital attendances.

Furthermore, significant variance is seen in the success of electronic patient record deployments from the same commercial vendor in different localities. For example, the Arch Collaborative from KLAS Research[11] shows variance in all aspects of success, including data quality of the deployments by Cerner, Epic, and Allscripts. Experience in the United States (U.S.) has shown a particular risk from "copy and paste" errors.[12]

Biomedical informatics is an interdisciplinary field with a common mission

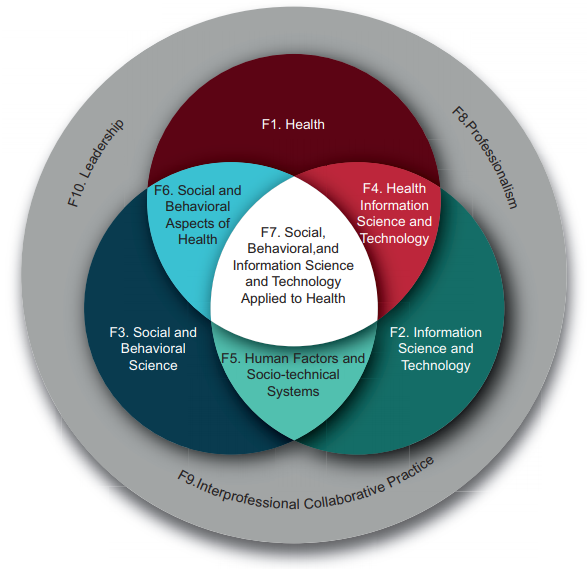

The two cultures are both embraced by the widely adopted American Medical Informatics Association definition of biomedical informatics: "the interdisciplinary field that studies and pursues the effective uses of biomedical data, information, and knowledge for scientific inquiry, problem solving and decision making, motivated by efforts to improve human health."[13] Biomedical informatics can be visualized as the intersection of health science, social science, and information science and technology. (See Figure 1, reproduced with permission from AMIA[14])


In this definition, biomedical informatics has sub-fields such as health informatics (comprising clinical and public health informatics) and bioinformatics (also called computational biology). Whereas bioinformatics deals with data science, clinical informatics "covers the practice of informatics in healthcare" (emphasis added). Therefore, getting clinical informatics right is more about people than it is about technology or data. As professor and author Enrico Coiera said, informatics is "as much about computers as cardiology is about stethoscopes."[15]

Of course, biomedical informatics must be aimed at a grand outcome–the betterment of health–rather than a contained body of knowledge or an abstract philosophy. The sole axis of interest is whether or not health is ultimately improved.

This has a number of implications. In pursuit of a better health outcome, a clinician may employ nuclear physics or big data analytics. Similarly, an informatician needs to be multi-disciplinary and citizen-centred as they play their part in a shared mission. Maintaining a system-wide view of outcomes is an ethical imperative for everyone involved, from research to application.[16]

Treating the two cultures within biomedical informatics as separate disciplines, rather than as a shared mission, may be professionally attractive and tractable for funders and policymakers, but this approach risks maintaining silos and working against the public interest. Instead, biomedical informatics researchers and practitioners–including clinicians–need to be part of a single professional organism made of interlocking professional communities; able to work together in a single systemic view of citizen benefit and harm, and able to implement the best scientific, engineering, and medical disciplines available. To do otherwise is simply unethical.

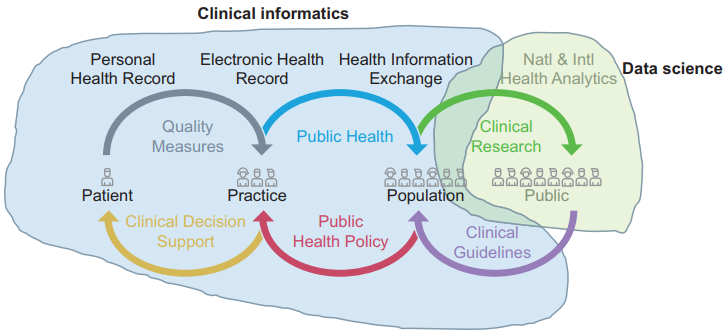

This ethical perspective opens up an exciting vista of fruitful, high-impact, applied research and professional practice. Global health public policy is united in its view that digital systems, data, and digital transformation are vital tools for the advancement of both health and care. Learning health systems[17] require not only the big data engine but also the feedback loop of knowledge into changed practice. This crucially depends on knowledge management and application, which in turn depends on effective system design and implementation: clinical informatics. Figure 2 (adapted from Rouse et al.[18], originally based on work by the Office of the National Coordinator[19]) illustrates how much of the learning health system depends on clinical informatics and how much on data science.


Steps towards convergence

There are several encouraging steps towards convergence. We highlight and commend several excellent initiatives that are taking a collaborative and aligned approach:

- the NHS Digital Academy[20]

- Health Education England’s "Building a digital ready workforce" program[21]

- The UK Faculty of Clinical Informatics[22]

- The Federation of Informatics Professionals[23]

In addition, some of the Academic Health Science Networks[24] are helping to bring together the practitioner and research communities in both data science and clinical informatics initiatives, and the Global Digital Exemplars[5] are to participate in a national evaluation program.[25] The invitation to participate in the recently launched "Local Health and Care Record Exemplars" program[26] includes several references to "research," but unfortunately this seems to be solely the big data aspect without the clinical informatics research needed to improve frontline usage and data quality.

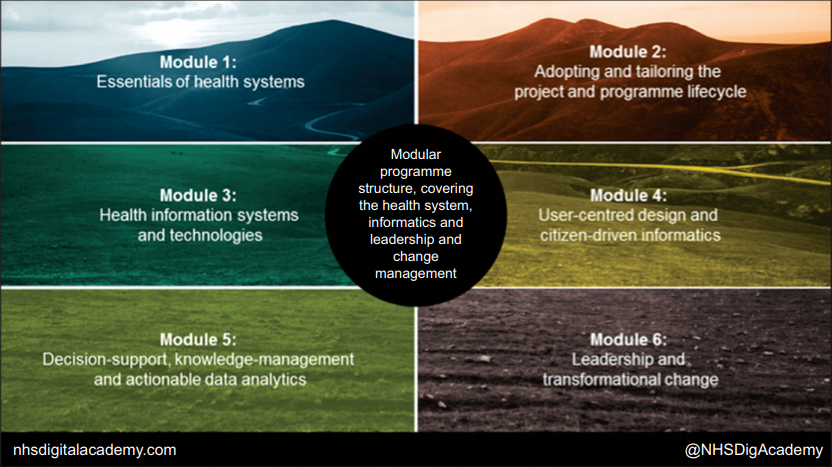

One focus of the NHS Digital Academy (Figure 3) will be to unpick the currently secret recipe for deriving user satisfaction, productivity, and good quality data from clinical systems. There is a significant focus on user-centered design, interoperability, and healthcare system standards within the modules. The aim is to ensure that the cohort of digital leaders understand the role of the end-to-end technology from data standards to usability in achieving good data for direct care and research.


Expanding clinical informatics research and education capacity and capability

However, we suggest that the U.K. needs increased clinical informatics research and education capacity and capability at much greater scale and ambition to be able to address the fundamental gaps in the discipline’s evidence base and mitigate the absence of regulation.[4] Numerous basic clinical informatics research questions remain to be satisfactorily addressed[27], including in the fields of:

- Cost effectiveness[28][29]

- Efficiency/productivity[30][31][32]

- Impact on service utilization[33]

- Patient empowerment/outcomes[34]

- Decision support[35]

- Usability and human factors[36][37]

- Unintended consequences[38][39][40][41]

- Application of safety-critical software engineering methods[42]

This realization has led to the "evidence-based health informatics" movement, which is well described in an open-access textbook of the same name.[43] The way to build our discipline’s evidence base is to identify and test relevant theories using rigorous evaluation studies.[44] A key measure that would bring the two cultures of data science and clinical informatics closer is to make independent evaluation of digital health interventions the norm, not the exception.[45][46] These studies need to be carried out by independent evaluators, not system developers, because there is clear systematic review evidence that even randomized controlled trials (RCTs) carried out by system developers are three times as likely to generate positive results as RCTs carried out by independent evaluators.[47]

Conclusions

We have highlighted serious issues with the quality of routine data and how that can be addressed beyond nugatory data cleansing. We submit that policy makers and research funders need to acknowledge the existing gap between the "two cultures" and recognize that the full social and economic benefits of digital health and data science can only be realized by accepting the interdisciplinary nature of biomedical informatics and supporting a significant expansion of clinical informatics capacity and capability.

References

- ↑ Snow, C.P. (1959). The Two Cultures and the Scientific Revolution: The Rede Lecture. Cambridge University Press. pp. 58.

- ↑ Scott, P.; Bentley, S.; Carpenter, I. et al. (2015). "Developing a conformance methodology for clinically-defined medical record headings: A preliminary report". European Journal for Biomedical Informatics 11 (2): en23–en30. https://www.ejbi.org/abstract/developing-a-conformance-methodology-for-clinicallydefinedrnmedical-record-headings-a-preliminary-report-3384.html.

- ↑ Burnett, S.; Franklin, B.D.; Moorthy, K. et al. (2012). "How reliable are clinical systems in the UK NHS? A study of seven NHS organisations". BMJ Quality and Safety 21 (6): 466–72. doi:10.1136/bmjqs-2011-000442. PMC PMC3355340. PMID 22495099. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3355340.

- ↑ 4.0 4.1 Koppel, R. (2016). "The health information technology safety framework: Building great structures on vast voids". BMJ Quality and Safety 25 (4): 218–20. doi:10.1136/bmjqs-2015-004746. PMID 26584580.

- ↑ 5.0 5.1 "Global Digital Exemplars". NHS England. 2018. https://www.england.nhs.uk/digitaltechnology/connecteddigitalsystems/exemplars/. Retrieved 26 March 2018.

- ↑ Mukherjee, M.; Wyatt, J.C.; Simpson, C.R.; Sheikh, A. (2016). "Usage of allergy codes in primary care electronic health records: A national evaluation in Scotland". Allergy 71 (11): 1594–1602. doi:10.1111/all.12928. PMID 27146325.

- ↑ Jordan, K.; Porcheret, M.; Croft, P. (2004). "Quality of morbidity coding in general practice computerized medical records: A systematic review". Family Practice 21 (4): 396–412. doi:10.1093/fampra/cmh409. PMID 15249528.

- ↑ Tate, A.R.; Dungey, S.; Glew, S. et al. (2017). "Quality of recording of diabetes in the UK: how does the GP's method of coding clinical data affect incidence estimates? Cross-sectional study using the CPRD database". BMJ Open 7 (1): e012905. doi:10.1136/bmjopen-2016-012905. PMC PMC5278252. PMID 28122831. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5278252.

- ↑ Kyriacou, D.N.; Lewis, R.J. (2016). "Confounding by Indication in Clinical Research". JAMA 316 (17): 1818–1819. doi:10.1001/jama.2016.16435. PMID 27802529.

- ↑ Mukherjee, M.; Stoddart, A.; Gupta, R.P. et al. (2016). "The epidemiology, healthcare and societal burden and costs of asthma in the UK and its member nations: Analyses of standalone and linked national databases". BMC Medicine 14 (1): 113. doi:10.1186/s12916-016-0657-8. PMC PMC5002970. PMID 27568881. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5002970.

- ↑ "The Arch Collaborative". KLAS Research. 2018. https://klasresearch.com/arch-collaborative.

- ↑ Koppel, R. (2014). "Illusions and delusions of cut, pasted, and cloned notes: Ephemeral reality and pixel prevarications". Chest 145 (3): 444–5. doi:10.1378/chest.13-1846. PMID 24590015.

- ↑ Kulikowski, C.A.; Shortliffe, E.H.; Currie, L.M. et al. (2012). "AMIA Board white paper: Definition of biomedical informatics and specification of core competencies for graduate education in the discipline". JAMIA 19 (6): 931–8. doi:10.1136/amiajnl-2012-001053. PMC PMC3534470. PMID 22683918. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3534470.

- ↑ "Health Informatics Core Competencies for CAHIIM" (PDF). AMIA. 2017. https://www.amia.org/sites/default/files/AMIA-Health-Informatics-Core-Competencies-for-CAHIIM.PDF. Retrieved 26 March 2018.

- ↑ Coiera, E. (2003). Guide to Health Informatics (2nd ed.). CRC Press. doi:10.1201/b13618. ISBN 9781444114003.

- ↑ Heathfield, H.A.; Wyatt, J. (1995). "The road to professionalism in medical informatics: A proposal for debate". Methods of Information in Medicine 34 (5): 426–33. PMID 8713756.

- ↑ Friedman, C.P.; Rubin, J.C.; Sullivan, K.J. (2017). "Toward an Information Infrastructure for Global Health Improvement". Yearbook of Medical Informatics 26 (1): 16–23. doi:10.15265/IY-2017-004. PMC PMC6239237. PMID 28480469. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6239237.

- ↑ Rouse, W.B.; Johns, M.M.E.; Pepe, K.M. (2017). "Learning in the health care enterprise". Learning Health Systems 1 (4): e10024. doi:10.1002/lrh2.10024.

- ↑ "Connecting Health and Care for the Nation: A 10-Year Vision to Achieve an Interoperable Health IT Infrastructure" (PDF). Office of the National Coordinator. 5 June 2014. pp. 13. https://www.healthit.gov/sites/default/files/ONC10yearInteroperabilityConceptPaper.pdf. Retrieved 17 April 2018.

- ↑ "NHS Digital Academy". NHS England. 2018. https://www.england.nhs.uk/digitaltechnology/nhs-digital-academy/. Retrieved 26 March 2018.

- ↑ "Building a digital ready workforce". NHS England, Health Education England. 2018. https://www.hee.nhs.uk/our-work/building-digital-ready-workforce. Retrieved 26 March 2018.

- ↑ "Faculty of Clinical Informatics". Royal College of General Practitioners. 2018. https://www.facultyofclinicalinformatics.org.uk/. Retrieved 26 March 2018.

- ↑ De Lusignan, S.; Barlow, J.; Scott, P.J. (2018). "Genesis of a UK Faculty of Clinical Informatics at a time of anticipation for some, and ruby, golden and diamond celebrations for others". Journal of Innovation in Health Informatics 24 (4): 344–46. doi:10.14236/jhi.v24i4.1003. PMID 29334353.

- ↑ "Academic Health Science Networks". NHS England. 2018. https://www.england.nhs.uk/ourwork/part-rel/ahsn/. Retrieved 26 March 2018.

- ↑ "Global Digital Exemplar Programme Evaluation". The University of Edinburgh. 2018. https://www.ed.ac.uk/usher/digital-exemplars. Retrieved 26 March 2018.

- ↑ "Local Health and Care Record Exemplars" (PDF). NHS England. 18 May 2018. https://www.england.nhs.uk/wp-content/uploads/2018/05/local-health-and-care-record-exemplars-summary.pdf.

- ↑ Haux, R.; Kulikowski, C.A.; Bakken, S. et al. (2017). "Research Strategies for Biomedical and Health Informatics. Some Thought-provoking and Critical Proposals to Encourage Scientific Debate on the Nature of Good Research in Medical Informatics". Methods of Information in Medicine 56 (Open): e1–e10. doi:10.3414/ME16-01-0125. PMC PMC5388922. PMID 28119991. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5388922.

- ↑ Reis, Z.S.N.; Maia, T.A.; Marcolino, M.S. et al. (2017). "Is There Evidence of Cost Benefits of Electronic Medical Records, Standards, or Interoperability in Hospital Information Systems? Overview of Systematic Reviews". JMIR Medical Informatics 5 (3): e26. doi:10.2196/medinform.7400. PMC PMC5596299. PMID 28851681. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5596299.

- ↑ Dranove, D.; Forman, C.; Goldfarb, A. et al. (August 2012). "The Trillion Dollar Conundrum: Complementarities and Health Information Technology". The National Bureau of Economic Research. https://www.nber.org/papers/w18281.

- ↑ Friedberg, M.W.; Chen, P.G.; Van Busum, K.R. et al. (2014). "Factors Affecting Physician Professional Satisfaction and Their Implications for Patient Care, Health Systems, and Health Policy". Rand Health Quarterly 3 (4): 1. PMC PMC5051918. PMID 28083306. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5051918.

- ↑ Hill Jr., R.G.; Sears, L.M.; Melanson, S.W. (2013). "4000 clicks: A productivity analysis of electronic medical records in a community hospital ED". American Journal of Emergency Medicine 31 (11): 1591–4. doi:10.1016/j.ajem.2013.06.028. PMID 24060331.

- ↑ Heponiemi, T.; Hyppönen, H.; Vehko, T. et al. (2017). "Finnish physicians' stress related to information systems keeps increasing: A longitudinal three-wave survey study". BMC Medical Informatics and Decision Making 17 (1): 147. doi:10.1186/s12911-017-0545-y. PMC PMC5646125. PMID 29041971. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5646125.

- ↑ Kash, B.A.; Baek, J.; Davis, E. et al. (2017). "Review of successful hospital readmission reduction strategies and the role of health information exchange". International Journal of Medical Informatics 104: 97–104. doi:10.1016/j.ijmedinf.2017.05.012. PMID 28599821.

- ↑ Rigby, M.; Georgiou, A.; Hyppönen, H. et al. (2015). "Patient Portals as a Means of Information and Communication Technology Support to Patient- Centric Care Coordination - the Missing Evidence and the Challenges of Evaluation. A joint contribution of IMIA WG EVAL and EFMI WG EVAL". Yearbook of Medical Informatics 10 (1): 148–59. doi:10.15265/IY-2015-007. PMC PMC4587055. PMID 26123909. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4587055.

- ↑ Ammenwerth, E.; Nykänen, P.; Rigby, M. et al. (2013). "Clinical decision support systems: Need for evidence, need for evaluation". Artificial Intelligence in Medicine 59 (1): 1–3. doi:10.1016/j.artmed.2013.05.001. PMID 23810731.

- ↑ Marcilly, R.; Peute, L.; Beuscart-Zephir, M.C. (2016). "From Usability Engineering to Evidence-based Usability in Health IT". Studies in Health Technology and Informatics 222: 126–38. PMID 27198098.

- ↑ Turner, P.; Kushniruk, A.; Nohr, C. (2017). "Are We There Yet? Human Factors Knowledge and Health Information Technology - The Challenges of Implementation and Impact". Yearbook of Medical Informatics 26 (1): 84–91. doi:10.15265/IY-2017-014. PMC PMC6239238. PMID 29063542. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6239238.

- ↑ Coiera, E.; Ash, J.; Berg, M. (2016). "The Unintended Consequences of Health Information Technology Revisited". Yearbook of Medical Informatics 10 (1): 163–9. doi:10.15265/IY-2016-014. PMC PMC5171576. PMID 27830246. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5171576.

- ↑ Schiff, G.D.; Amato, M.G.; Eguale, T. et al. (2015). "Computerised physician order entry-related medication errors: Analysis of reported errors and vulnerability testing of current systems". BMJ Quality and Safety 24 (4): 264–71. doi:10.1136/bmjqs-2014-003555. PMC PMC4392214. PMID 25595599. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4392214.

- ↑ Amato, M.G.; Salazar, A.; Hickman, T.T. et al. (2017). "Computerized prescriber order entry-related patient safety reports: analysis of 2522 medication errors". JAMIA 24 (2): 316–322. doi:10.1093/jamia/ocw125. PMC 27678459. PMID 25595599. https://www.ncbi.nlm.nih.gov/pmc/articles/27678459.

- ↑ Cresswell, K.M.; Bates, D.W.; Williams, R. et al. (2014). "Evaluation of medium-term consequences of implementing commercial computerized physician order entry and clinical decision support prescribing systems in two 'early adopter' hospitals". JAMIA 21 (e2): e194–202. doi:10.1136/amiajnl-2013-002252. PMC PMC4173168. PMID 24431334. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4173168.

- ↑ Thomas, M.T. (2 May 2017). "Making Software 'Correct by Construction'". Lecture at Museum of London. Gresham College. https://www.gresham.ac.uk/lectures-and-events/making-software-correct-by-construction.

- ↑ Ammenwerth, E.; Rigby, M., ed. (2016). Evidence-Based Health Informatics. IOS Press. ISBN 9781614996354.

- ↑ Wyatt, J.C. (2016). "Evidence-based Health Informatics and the Scientific Development of the Field". Studies in Health Technology and Informatics 222: 14–24. PMID 27198088.

- ↑ Sheikh, A.; Atun, R.; Bates, D.W. (2014). "The need for independent evaluations of government-led health information technology initiatives". BMJ Quality and Safety 23 (8): 611–3. doi:10.1136/bmjqs-2014-003273. PMID 24950693.

- ↑ Scott, P. (2015). "Exploiting the information revolution: call for independent evaluation of the latest English national experiment". Journal of Innovation in Health Informatics 22 (1): 244–9. doi:10.14236/jhi.v22i1.139. PMID 25924557.

- ↑ Garg, A.X.; Adhikari, N.K.; McDonald, H. et al. (2005). "Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: A systematic review". JAMA 293 (10): 1223–38. doi:10.1001/jama.293.10.1223. PMID 15755945.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. Grammar and punctuation were edited to American English, and in some cases additional context was added to the text when necessary. In some cases important information was missing from the references, and that information was added.