Difference between revisions of "Journal:An extract-transform-load process design for the incremental loading of German real-world data based on FHIR and OMOP CDM: Algorithm development and validation"


|website = [https://medinform.jmir.org/2023/1/e47310 https://medinform.jmir.org/2023/1/e47310]

|download = [https://medinform.jmir.org/2023/1/e47310/PDF https://medinform.jmir.org/2023/1/e47310/PDF] (PDF)

}}

==Abstract==

'''Background''': In the Medical Informatics in Research and Care in University Medicine (MIRACUM) consortium, an IT-based [[Clinical trial management system|clinical trial recruitment support system]] was developed based on the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM). Currently, OMOP CDM is populated with German [[Fast Healthcare Interoperability Resources]] (FHIR) data using an [[Extract, transform, load|extract-transform-load]] (ETL) process, which was designed as a bulk load. However, the computational effort that comes with an everyday full load is not efficient for daily recruitment.

'''Objective''': The aim of this study is to extend our existing ETL process with the option of incremental loading to efficiently support daily updated data.

==Introduction==

===Background and significance===

Randomized controlled clinical trials are the gold standard to “measure the effectiveness of a new intervention or treatment.”<ref>{{Cite journal |last=Hariton |first=Eduardo |last2=Locascio |first2=Joseph J |date=2018-12 |title=Randomised controlled trials – the gold standard for effectiveness research: Study design: randomised controlled trials |url=https://obgyn.onlinelibrary.wiley.com/doi/10.1111/1471-0528.15199 |journal=BJOG: An International Journal of Obstetrics & Gynaecology |language=en |volume=125 |issue=13 |pages=1716–1716 |doi=10.1111/1471-0528.15199 |issn=1470-0328 |pmc=PMC6235704 |pmid=29916205}}</ref> However, randomized controlled clinical trials are limited regarding the representative number of persons included and, therefore, are restricted in their external generalizability. To gain more unbiased evidence, observational studies focus on real-world data from large heterogeneous populations. | |||

To support observational research, we at the Institute for Medical Informatics and Biometry at Technische Universität Dresden already provide a transferable [[Extract, transform, load|extract-transform-load]] (ETL) process<ref name=":0">{{Cite journal |last=Peng |first=Yuan |last2=Henke |first2=Elisa |last3=Reinecke |first3=Ines |last4=Zoch |first4=Michéle |last5=Sedlmayr |first5=Martin |last6=Bathelt |first6=Franziska |date=2023-01 |title=An ETL-process design for data harmonization to participate in international research with German real-world data based on FHIR and OMOP CDM |url=https://linkinghub.elsevier.com/retrieve/pii/S1386505622002398 |journal=International Journal of Medical Informatics |language=en |volume=169 |pages=104925 |doi=10.1016/j.ijmedinf.2022.104925}}</ref> to transform German real-world data to the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM)<ref>{{Cite web |date=2023 |title=Standardized Data: The OMOP Common Data Model |url=https://www.ohdsi.org/data-standardization/ |publisher=Observational Health Data Sciences and Informatics}}</ref> provided by Observational Health Data Sciences and Informatics (OHDSI).<ref>{{Cite journal |last=Hripcsak |first=George |last2=Duke |first2=Jon D. |last3=Shah |first3=Nigam H. |last4=Reich |first4=Christian G. |last5=Huser |first5=Vojtech |last6=Schuemie |first6=Martijn J. |last7=Suchard |first7=Marc A. |last8=Park |first8=Rae Woong |last9=Wong |first9=Ian Chi Kei |last10=Rijnbeek |first10=Peter R. |last11=van der Lei |first11=Johan |date=2015 |title=Observational Health Data Sciences and Informatics (OHDSI): Opportunities for Observational Researchers |url=https://pubmed.ncbi.nlm.nih.gov/26262116 |journal=Studies in Health Technology and Informatics |volume=216 |pages=574–578 |issn=1879-8365 |pmc=4815923 |pmid=26262116}}</ref> This transformation effort supports the possibilities for multicentric and even international studies. 
Due to the heterogeneity of the structure and content of the data from the data integration centers within the Medical Informatics Initiative Germany (MI-I)<ref>{{Cite journal |last=Semler |first=Sebastian |last2=Wissing |first2=Frank |last3=Heyder |first3=Ralf |date=2018-07 |title=German Medical Informatics Initiative: A National Approach to Integrating Health Data from Patient Care and Medical Research |url=http://www.thieme-connect.de/DOI/DOI?10.3414/ME18-03-0003 |journal=Methods of Information in Medicine |language=en |volume=57 |issue=S 01 |pages=e50–e56 |doi=10.3414/ME18-03-0003 |issn=0026-1270 |pmc=PMC6178199 |pmid=30016818}}</ref>, the [[Health Level 7]] (HL7)<ref>{{Cite journal |last=Kabachinski |first=Jeff |date=2006-09 |title=What is Health Level 7? |url=http://array.aami.org/doi/10.2345/i0899-8205-40-5-375.1 |journal=Biomedical Instrumentation & Technology |language=en |volume=40 |issue=5 |pages=375–379 |doi=10.2345/i0899-8205-40-5-375.1 |issn=0899-8205}}</ref> [[Fast Healthcare Interoperability Resources]] (FHIR) communication standard was specified among all German university [[hospital]]s. Consequently, we used FHIR as the source for our ETL process. 
The FHIR specification is given by the core data set of the MI-I.<ref>{{Cite web |title=The Medical Informatics Initiative’s core data set |work=Medical Informatics Initiative Germany |url=https://www.medizininformatik-initiative.de/en/medical-informatics-initiatives-core-data-set |publisher=Technologie- und Methodenplattform für die vernetzte medizinische Forschung e.V |accessdate=07 November 2022}}</ref> FHIR resources can be read from an FHIR Gateway<ref>{{Cite web |last=MIRACUM |date=2023 |title=miracum / fhir-gateway |work=GitHub |url=https://github.com/miracum/fhir-gateway |accessdate=15 March 2023}}</ref> ([[PostgreSQL]] database) or FHIR Server (e.g., HAPI<ref>{{Cite web |last=HAPI FHIR |date=2023 |title=hapifhir / hapi-fhir |work=GitHub |url=https://github.com/hapifhir/hapi-fhir |accessdate=15 March 2023}}</ref> or Blaze<ref>{{Cite web |last=Samply |date=2023 |title=samply / blaze |work=GitHub |url=https://github.com/samply/blaze |accessdate=15 March 2023}}</ref>). As the target of our ETL process, we used OMOP CDM v5.3.1.<ref>{{Cite web |last=OHDSI |title=OMOP CDM v5.3.1 |work=GitHub |url=https://ohdsi.github.io/CommonDataModel/cdm531.html |accessdate=07 November 2022}}</ref> The implementation of the ETL process was done using the open-source framework Java SpringBatch.<ref>{{Cite web |last=Spring |title=Spring Batch - Reference Documentation |url=https://docs.spring.io/spring-batch/docs/current/reference/html/index.html |publisher=Pivotal, Inc |archiveurl=https://web.archive.org/web/20220815163728/https://docs.spring.io/spring-batch/docs/current/reference/html/index.html |archivedate=15 August 2022 |accessdate=07 November 2022}}</ref> Our ETL process has been implemented in accordance with the default assumption as described in ''The Book of OHDSI''<ref>{{Cite web |date=11 January 2021 |title=The Book of OHDSI |work=GitHub |url=https://ohdsi.github.io/TheBookOfOhdsi/ |publisher=OHDSI |accessdate=19 April 2022}}</ref>, where the OHDSI community defines 
the ETL process as a full load to transfer data from source to target systems. | |||

This approach is efficient for a dedicated study where data gets loaded once without any update afterward; however, it is inefficient when it comes to the need for updated data on a daily basis. The latter is the case for the developments around the improvement and support of the recruitment process for clinical trials, which the Medical Informatics in Research and Care in University Medicine (MIRACUM)<ref>{{Cite journal |last=Prokosch |first=Hans-Ulrich |last2=Acker |first2=Till |last3=Bernarding |first3=Johannes |last4=Binder |first4=Harald |last5=Boeker |first5=Martin |last6=Boerries |first6=Melanie |last7=Daumke |first7=Philipp |last8=Ganslandt |first8=Thomas |last9=Hesser |first9=Jürgen |last10=Höning |first10=Gunther |last11=Neumaier |first11=Michael |date=2018-07 |title=MIRACUM: Medical Informatics in Research and Care in University Medicine: A Large Data Sharing Network to Enhance Translational Research and Medical Care |url=http://www.thieme-connect.de/DOI/DOI?10.3414/ME17-02-0025 |journal=Methods of Information in Medicine |language=en |volume=57 |issue=S 01 |pages=e82–e91 |doi=10.3414/ME17-02-0025 |issn=0026-1270 |pmc=PMC6178200 |pmid=30016814}}</ref> consortium, as part of the MI-I funded by the German Federal Ministry of Education and Research, is working on. 
In this context, an IT-based [[Clinical trial management system|clinical trial recruitment support system]] (CTRSS) based on OMOP CDM was implemented.<ref>{{Cite journal |last=Reinecke |first=Ines |last2=Gulden |first2=Christian |last3=Kümmel |first3=Michéle |last4=Nassirian |first4=Azadeh |last5=Blasini |first5=Romina |last6=Sedlmayr |first6=Martin |date=2020 |title=Design for a Modular Clinical Trial Recruitment Support System Based on FHIR and OMOP |url=https://ebooks.iospress.nl/doi/10.3233/SHTI200142 |journal=Digital Personalized Health and Medicine |pages=158–162 |doi=10.3233/SHTI200142}}</ref> The CTRSS consists of a screening list for recruitment teams that provides potential candidates for clinical trials and is updated on a daily basis. To enable the CTRSS to provide recruitment proposals, it is necessary to transform the data in FHIR format at each site from the 10 MIRACUM data integration centers into the standardized format of OMOP CDM. The processing of FHIR resources to OMOP CDM through our ETL process has already been successfully tested and integrated at all 10 German university hospitals of the MIRACUM consortium.

So far, our ETL process is restricted to a bulk load of FHIR resources to OMOP CDM, which means that all FHIR resources are read from the source on every run. To enable the CTRSS to provide daily recruitment proposals, our ETL process has to be executed every day as a full load. However, a daily full load is not efficient because often only a small amount of source data has changed between loads, which results in unnecessarily long execution times. Consequently, the computational effort that comes with the daily execution of the bulk load is not efficient in the context of the CTRSS.

Thus, a new approach is needed to only process FHIR resources that were created, updated, or deleted (CUD) since the last execution of the ETL process once an initial load has been executed. This loading option is known as "incremental loading." | |||

===Objective=== | |||

To keep the bulk load option for dedicated studies and still be performant toward daily changes in the source data, a combination of bulk load and incremental load is needed. To reduce the additional effort in implementing a second independent ETL process for incremental loading, it is our aim to extend our existing ETL process with the option of incremental loading. During our research, we focused on the following four research questions: | |||

#What requirements need to be considered when integrating incremental loading into our existing ETL process design? | |||

#What approaches already exist for incremental ETL processes? | |||

#How can the identified requirements from research question one be implemented in our existing ETL process design? | |||

#Does incremental loading provide an advantage over daily bulk loading? | |||

==Methods== | |||

===Analysis of the existing ETL FHIR-to-OMOP process===

To determine the requirements for integrating incremental loading into our existing ETL process design, we performed an impact analysis of the whole ETL process and, in more detail, of its three main components (Reader, Processor, and Writer) as presented by Peng ''et al.''<ref name=":0" /> For the ETL process as a whole, we identified the following three requirements:

*Requirement A: It is necessary to provide the user with the ability to distinguish between bulk loading and incremental loading. | |||

*Requirement B: For incremental loading, it is further essential that the Reader of the ETL process is able to detect changes in the source system and read only CUD-FHIR resources on a daily basis.

*Requirement C: During the processing of updated and deleted FHIR resources, duplicates and obsolete data should be avoided in OMOP CDM to guarantee data correctness. | |||

Incremental loading has no impact on either the semantic mapping from the FHIR MI-I Core Data Set (CDS) to OMOP CDM or the Writer of the ETL process, as described by Peng ''et al.''<ref name=":0" /> In summary, incremental loading requires an adjustment of the implementation of the Reader and Processor.

===Literature review=== | |||

To identify approaches that might be adaptable to our existing ETL design and fulfill the three requirements in the previous section, we conducted a first literature review on July 14, 2021; a second one on November 28, 2022; and a third one on February 22, 2023 (Multimedia Appendices 1, 2, and 3). Table 1 includes the search strings and the number of results for three literature databases. | |||

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | |||

|- | |||

| colspan="3" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 1.''' Literature review: database, search string, and number of results. | |||

|- | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Database | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Search string | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Results (''n'') | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |PubMed | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |All fields: (incremental) AND ((etl) OR (extract transform load)) | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |7 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |IEEE Xplore | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |((“All Metadata”: incremental) AND (“All Metadata”: etl OR “All Metadata”: extract transform load)) | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |15 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Web of Science | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |ALL=(incremental) AND (ALL=(etl) OR ALL=(extract transform load)) | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |46 | |||

|- | |||

|} | |||

|} | |||

We included only articles from 2011 to 2022 in English. After removing duplicates, 51 items were left. These were screened independently by two authors (EH and MZ). Through the title and abstract screening, we identified 12 relevant articles. After the screening of the full texts, we included eight articles within our research. Reasons for excluding the other articles were other meanings of the abbreviation “ETL,” ETL tools without regard to theoretical approaches of incremental loads, focus on application instead of ETL process and theoretical approach, and quality and error handling without focus on a theoretical approach. | |||

Only two of the eight articles addressed ETL processes for loading patient data into OMOP CDM. Lynch ''et al.''<ref name=":1">{{Cite journal |last=Lynch |first=Kristine E. |last2=Deppen |first2=Stephen A. |last3=DuVall |first3=Scott L. |last4=Viernes |first4=Benjamin |last5=Cao |first5=Aize |last6=Park |first6=Daniel |last7=Hanchrow |first7=Elizabeth |last8=Hewa |first8=Kushan |last9=Greaves |first9=Peter |last10=Matheny |first10=Michael E. |date=2019-10 |title=Incrementally Transforming Electronic Medical Records into the Observational Medical Outcomes Partnership Common Data Model: A Multidimensional Quality Assurance Approach |url=http://www.thieme-connect.de/DOI/DOI?10.1055/s-0039-1697598 |journal=Applied Clinical Informatics |language=en |volume=10 |issue=05 |pages=794–803 |doi=10.1055/s-0039-1697598 |issn=1869-0327 |pmc=PMC6811349 |pmid=31645076}}</ref> introduced an approach for incremental transformation from the data warehouse to OMOP CDM to prevent incremental load errors. They suggest basing the development on a [[quality assurance]] (QA) process dependent on the [[data quality]] framework by Kahn ''et al.''<ref>{{Cite journal |last=Kahn |first=Michael G. |last2=Callahan |first2=Tiffany J. |last3=Barnard |first3=Juliana |last4=Bauck |first4=Alan E. |last5=Brown |first5=Jeff |last6=Davidson |first6=Bruce N. |last7=Estiri |first7=Hossein |last8=Goerg |first8=Carsten |last9=Holve |first9=Erin |last10=Johnson |first10=Steven G. |last11=Liaw |first11=Siaw-Teng |date=2016-09-11 |title=A Harmonized Data Quality Assessment Terminology and Framework for the Secondary Use of Electronic Health Record Data |url=https://up-j-gemgem.ubiquityjournal.website/articles/141 |journal=eGEMs (Generating Evidence & Methods to improve patient outcomes) |volume=4 |issue=1 |pages=18 |doi=10.13063/2327-9214.1244 |issn=2327-9214 |pmc=PMC5051581 |pmid=27713905}}</ref> Furthermore, they generated ETL batch tracking ids for each record of data during the transformation to OMOP CDM. 
For 1:1 mappings, they created custom columns in the standardized OMOP CDM tables, and for 1:''n'' or ''n'':1 mappings, they used a parallel mapping table to store the ETL batch id and a link to the corresponding record in OMOP CDM. Secondly, Lenert ''et al.''<ref name=":2">{{Cite journal |last=Lenert |first=Leslie A |last2=Ilatovskiy |first2=Andrey V |last3=Agnew |first3=James |last4=Rudisill |first4=Patricia |last5=Jacobs |first5=Jeff |last6=Weatherston |first6=Duncan |last7=Deans Jr |first7=Kenneth R |date=2021-07-30 |title=Automated production of research data marts from a canonical fast healthcare interoperability resource data repository: applications to COVID-19 research |url=https://academic.oup.com/jamia/article/28/8/1605/6276433 |journal=Journal of the American Medical Informatics Association |language=en |volume=28 |issue=8 |pages=1605–1611 |doi=10.1093/jamia/ocab108 |issn=1527-974X |pmc=PMC8243354 |pmid=33993254}}</ref> described an automated transformation of clinical data into two CDMs (OMOP and PCORnet database) by using FHIR. For this purpose, they used FHIR subscriptions. Whenever a FHIR resource is created or updated, these subscriptions trigger a function that creates a copy of the FHIR resource and transmits it to another system.

Independent of OMOP CDM as the target database, the literature search revealed different concepts for incremental ETL itself. Kathiravelu ''et al.''<ref>{{Cite journal |last=Kathiravelu |first=Pradeeban |last2=Sharma |first2=Ashish |last3=Galhardas |first3=Helena |last4=Van Roy |first4=Peter |last5=Veiga |first5=Luís |date=2019-06 |title=On-demand big data integration: A hybrid ETL approach for reproducible scientific research |url=http://link.springer.com/10.1007/s10619-018-7248-y |journal=Distributed and Parallel Databases |language=en |volume=37 |issue=2 |pages=273–295 |doi=10.1007/s10619-018-7248-y |issn=0926-8782}}</ref> described the caching of new or updated data in a temporary table. Of the eight articles, seven described various methods for incremental updates, particularly focusing on change data capture (CDC). All describe different categories of CDC, like timestamp-based, audit column–based, trigger-based, log-based, [[application programming interface]]–based, and data-based snapshots<ref name=":1" /><ref name=":2" /><ref name=":3">{{Cite journal |last=Wen |first=Wei Jun |date=2014-10 |title=Research on the Incremental Updating Mechanism of Marine Environmental Data Warehouse |url=https://www.scientific.net/AMM.668-669.1378 |journal=Applied Mechanics and Materials |volume=668-669 |pages=1378–1381 |doi=10.4028/www.scientific.net/AMM.668-669.1378 |issn=1662-7482}}</ref><ref name=":4">{{Cite book |date=2020 |editor-last=Smys |editor-first=S. 
|editor2-last=Senjyu |editor2-first=Tomonobu |editor3-last=Lafata |editor3-first=Pavel |title=Second International Conference on Computer Networks and Communication Technologies: ICCNCT 2019 |url=http://link.springer.com/10.1007/978-3-030-37051-0 |series=Lecture Notes on Data Engineering and Communications Technologies |language=en |publisher=Springer International Publishing |place=Cham |volume=44 |doi=10.1007/978-3-030-37051-0 |isbn=978-3-030-37050-3}}</ref><ref name=":5">{{Cite journal |last=Sun |first=Yue-yue |date=2022-05 |title=Research and implementation of an efficient incremental synchronization method based on Timestamp |url=https://ieeexplore.ieee.org/document/9814725/ |journal=2022 3rd International Conference on Computing, Networks and Internet of Things (CNIOT) |publisher=IEEE |place=Qingdao, China |pages=158–162 |doi=10.1109/CNIOT55862.2022.00035 |isbn=978-1-6654-6910-4}}</ref><ref name=":6">{{Cite journal |last=Hu |first=Yong |last2=Dessloch |first2=Stefan |date=2014-09 |title=Extracting deltas from column oriented NoSQL databases for different incremental applications and diverse data targets |url=https://linkinghub.elsevier.com/retrieve/pii/S0169023X14000615 |journal=Data & Knowledge Engineering |language=en |volume=93 |pages=42–59 |doi=10.1016/j.datak.2014.07.002}}</ref><ref name=":7">{{Cite journal |last=Wei Du |last2=Zou |first2=Xianxia |date=2015-08 |title=Differential snapshot algorithms based on Hadoop MapReduce |url=http://ieeexplore.ieee.org/document/7382113/ |journal=2015 12th International Conference on Fuzzy Systems and Knowledge Discovery (FSKD) |publisher=IEEE |place=Zhangjiajie, China |pages=1203–1208 |doi=10.1109/FSKD.2015.7382113 |isbn=978-1-4673-7682-2}}</ref>: (1) Lynch ''et al.''<ref name=":1" /> and (2) Lenert ''et al.''<ref name=":2" /> focused on triggers; (3) Wen<ref name=":3" /> focused on timestamps and triggers; (4) Thulasiram and Ramaiah<ref name=":4" /> and (5) Sun<ref name=":5" /> focused on timestamps; (6) Hu and 
Dessloch<ref name=":6" /> focused on timestamps, audit columns, logs, triggers, and snapshots; and (7) Wei Du and Zou<ref name=":7" /> focused on snapshots and MapReduce. | |||

In summary, the literature review revealed adaptable approaches, which can be applied for the implementation of requirements B and C. However, no approaches could be found in the literature for requirement A. For this reason, we have to define a new method to enable both bulk and incremental loading in one ETL process. The concrete integration of the approaches into our existing ETL design is described in more detail in the following sections. | |||

===Incremental ETL process design=== | |||

====Enabling both bulk and incremental loading==== | |||

To specify whether the ETL process should be executed as a bulk or an incremental load, we added a new Boolean parameter called <tt>APP_BULKLOAD_ENABLED</tt> to the configuration file of the ETL process. The parameter has to be set according to the desired loading option before executing the ETL process: “true” results in a bulk load and “false” results in an incremental load. During the execution of the ETL process, this parameter is further taken into account by the Reader and Processor of the ETL process<ref name=":0" /> to distinguish between the needs of bulk and incremental load (e.g., to ensure that the OMOP CDM database is not emptied at the beginning of the ETL process execution during an incremental load).
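As an illustration only, the following Python sketch shows how such a Boolean switch could steer a run. The actual ETL process is implemented with Java Spring Batch; the function names, the step names, and the default value used when the parameter is missing are assumptions made for this example and are not taken from the original implementation.

```python
import os


def bulkload_enabled(env=None):
    """Return True for a bulk load and False for an incremental load."""
    env = os.environ if env is None else env
    # Assumption: the value is read as text and "true" (any case) selects bulk load.
    # Assumption: a missing parameter falls back to bulk load.
    return str(env.get("APP_BULKLOAD_ENABLED", "true")).strip().lower() == "true"


def plan_run(env=None):
    """Return the (hypothetical) ordered steps of one ETL run."""
    if bulkload_enabled(env):
        # Bulk load: the target is emptied first and every resource is read.
        return ["truncate_target", "read_all", "process", "write"]
    # Incremental load: the target is kept and only changed resources are read.
    return ["read_changed", "process", "write"]
```

Calling <tt>plan_run({"APP_BULKLOAD_ENABLED": "false"})</tt> yields a plan without the step that empties the target, which mirrors the example given in the paragraph above.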

====Focusing on CUD-FHIR resources since the last ETL execution==== | |||

The purpose of incremental loading is to focus only on CUD-FHIR resources since the last time the ETL process was executed (whether as bulk or incremental load). Consequently, the ETL process for incremental load has to select only CUD-FHIR resources from the source. The literature research showed that there are various CDC approaches to detect changes in the source. In our case, FHIR resources in the FHIR Gateway and FHIR Server contain [[metadata]], such as a timestamp indicating when an FHIR resource was created, updated, or deleted in the source (FHIR Gateway: column <tt>last_updated_at</tt>; FHIR Server: <tt>meta.lastUpdated</tt>). We therefore used the timestamp-based CDC approach to select the FHIR resources whose timestamp is later than the last ETL execution time.

To ensure filtering for the incremental load, we added two new parameters to the configuration file of the ETL process: <tt>DATA_BEGINDATE</tt> and <tt>DATA_ENDDATE</tt>. Both parameters have to be set before executing the ETL process as an incremental load. During the execution, the ETL process takes these two parameters into account and only reads FHIR resources from the source whose metadata timestamp lies in the closed interval [<tt>DATA_BEGINDATE</tt>, <tt>DATA_ENDDATE</tt>].
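The timestamp-based selection can be sketched as follows. This is a minimal Python illustration, not the Reader of the ETL process: it assumes that the resources are already available as dictionaries carrying <tt>meta.lastUpdated</tt>, and that both interval bounds are given as full ISO 8601 timestamps with a UTC offset or a trailing "Z". The function names are hypothetical.

```python
from datetime import datetime


def parse_ts(value):
    """Parse an ISO 8601 timestamp; a trailing 'Z' is read as UTC."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def changed_in_window(resources, begin, end):
    """Keep resources whose meta.lastUpdated lies in the closed interval [begin, end]."""
    begin_ts, end_ts = parse_ts(begin), parse_ts(end)
    return [
        r for r in resources
        if begin_ts <= parse_ts(r["meta"]["lastUpdated"]) <= end_ts
    ]
```

Because the interval is closed on both sides, a resource stamped exactly at one of the bounds is included. When consecutive runs share a bound, such a resource is read twice, so the downstream processing has to tolerate repeated input.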

====Guaranteeing data correctness in OMOP CDM====

To avoid duplicates in OMOP CDM when processing updated and deleted FHIR resources, their existence in OMOP CDM has to be checked during processing. The FHIR resources themselves do not have a flag that indicates whether they are new or have been changed. Only deleted FHIR resources can be identified by a specific flag in the metadata. To assess the existence of FHIR resources in OMOP CDM, a comparison of the data of the read FHIR resources with the data already available in OMOP CDM has to be done. | |||

The literature research showed an approach to generate a unique tracking id per source data during the transformation process and its storage in OMOP CDM.<ref name=":1" /> We decided against the approach of generating an additional id because FHIR resources already contain two identifying FHIR elements themselves: id and identifier. The id represents the logical id of the resource per resource type, while the identifier specifies an identifier that is part of the source data. Both FHIR elements allow the unique identification of an FHIR resource per resource type. However, the standardized OMOP CDM tables do not provide the possibility to store this information from FHIR. Furthermore, OMOP CDM has its own primary keys for each record in a table independent of the id and identifier used in FHIR. Consequently, after transforming FHIR resources to OMOP CDM, the identifying data from FHIR resources will be lost. | |||

To solve this problem, we need to store the mapping between the id and identifier used in FHIR with the id used in OMOP CDM. Due to the fact that the id of an FHIR resource is only unique per resource type and one FHIR resource can be stored in OMOP CDM in multiple tables, we additionally have to specify the resource type. As mentioned above, Lynch ''et al.''<ref name=":1" /> presented an approach to store the mapping between tracking ids for source records and ids used in OMOP CDM by using a mapping table and custom columns in OMOP CDM. We have slightly customized this approach and adapted it into our ETL design. Contrary to the use of both mapping tables and custom columns, we considered each approach separately. | |||

Our first approach uses mapping tables for each FHIR resource type in a separate schema in OMOP CDM. With this approach, the Writer of the ETL process has to fill additional mapping tables in addition to the standardized tables in OMOP CDM. Our second approach focuses on two new columns in the standardized tables in OMOP CDM called “fhir_logical_id” and “fhir_identifier.” These columns store the id and identifier of the FHIR resource. Furthermore, we prepended an abbreviation of the resource type as a prefix to the id and identifier of FHIR (e.g., “med-” for Medication, “mea-” for MedicationAdministration, or “mes-” for MedicationStatement FHIR resources). Consequently, the combination of the prefix with the id and identifier and its storage in OMOP CDM enables the unique identification of FHIR resources in OMOP CDM. Since the mapping tables and two new columns are required exclusively for the ETL process, the analysis of data across multiple OMOP CDM databases is not affected.
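To make the prefixing concrete, the following Python sketch derives the two column values for a resource. The three prefixes are the ones named above; the function name is hypothetical, and using the <tt>value</tt> of the first entry of the <tt>identifier</tt> array is a simplifying assumption of this example, not a statement about the original implementation.

```python
# Prefixes per resource type, as given in the text for the three medication resources.
PREFIXES = {
    "Medication": "med-",
    "MedicationAdministration": "mea-",
    "MedicationStatement": "mes-",
}


def tracking_values(resource):
    """Build the values for the fhir_logical_id and fhir_identifier columns."""
    prefix = PREFIXES[resource["resourceType"]]
    identifiers = resource.get("identifier") or []
    # Assumption: only the first identifier of the resource is used.
    value = identifiers[0].get("value") if identifiers else None
    return {
        "fhir_logical_id": prefix + resource["id"],
        "fhir_identifier": prefix + value if value else None,
    }
```

Two resources of different types that share the logical id "123" then yield "med-123" and "mes-123", so they remain distinguishable even if their records end up in the same OMOP CDM table.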

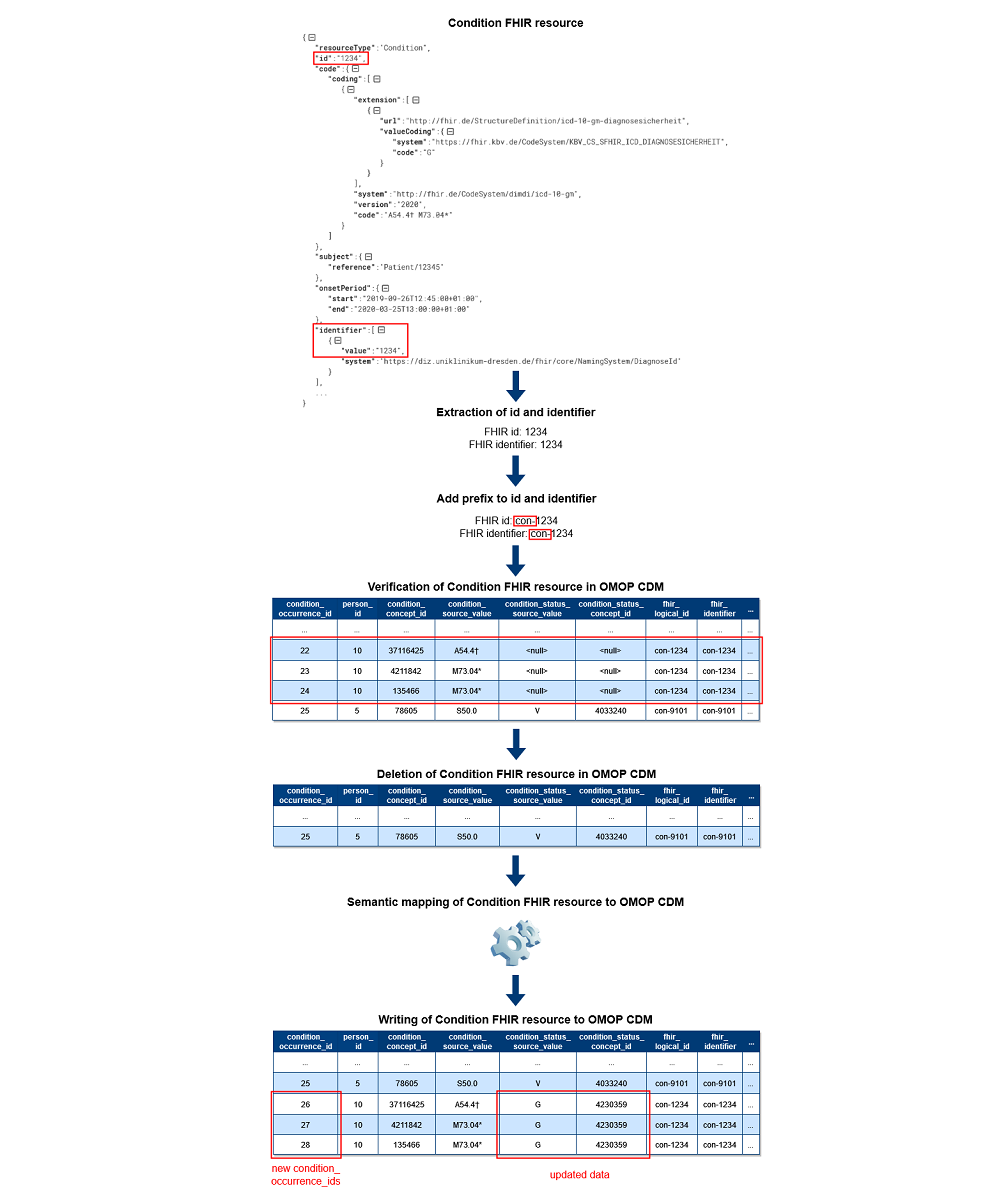

Based on the unique identification of FHIR resources in OMOP CDM, it is now possible to guarantee data correctness in OMOP CDM during incremental loading. Figure 1 shows the exemplary data flow for Condition FHIR resources for the second approach with two new columns. First, the Processor extracts the id and identifier used in FHIR. After that, the prefix is added to both values. Regardless of whether the data was created, updated, or deleted in the source, the ETL process next verifies each processed FHIR resource’s existence in OMOP CDM using the mapping tables or two new columns. If matching records are found during this verification, they are deleted from OMOP CDM. This approach is also used for updated FHIR resources to avoid incomplete updates for cross-domain mappings in OMOP CDM. Consequently, we do not perform updates on the existing records in OMOP CDM, except for records from Patient and Encounter FHIR resources, which are updated in place to ensure referential integrity in OMOP CDM. In case FHIR resources are marked as deleted in the source, the processing is completed at this point. Otherwise, the same semantic mapping logic as for bulk loading<ref name=":0" /> applies afterward, and the data of the FHIR resources are written to OMOP CDM as new records with new OMOP ids.

[[File:Fig1 Henke JMIRMedInfo2023 11.png|1000px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="1000px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' Excerpt of the data flow of the Condition Processor. CDM: Common Data Model; FHIR: Fast Healthcare Interoperability Resources; OMOP: Observational Medical Outcomes Partnership.</blockquote> | |||

|- | |||

|} | |||

|} | |||

===Evaluation of the incremental load process=== | |||

For the evaluation of the incremental load process, we defined and executed two ETL test designs. First, we tested which approach for storing the mapping between the id and identifier used in FHIR and the id used in OMOP CDM was more performant. For this purpose, we implemented a separate ETL process version for each approach. We then executed the ETL process first as a bulk load and then as an incremental load, and compared the execution times of the mapping table approach and the column approach. For further evaluation of the incremental load process, we chose the more performant approach, resulting in a new optimized ETL process version for the second ETL test design.

To test whether the three requirements identified during the initial analysis of our ETL process were met, we defined and executed a second ETL test design (Table 2) that compares the results of bulk loading with those of incremental loading regarding performance and data correctness. Our hypotheses are that the execution time of incremental loading alone is less than that of bulk loading including daily updates, and that the amount of data per table in OMOP CDM is identical after incremental loading and after bulk loading including daily updates.

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | |||

|- | |||

| colspan="2" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 2.''' Extract-transform-load test design regarding performance and data correctness. | |||

|- | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Test focus | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Hypothesis | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Performance | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |t(bulk loading (3 mon)) + t(incremental loading (1 d)) < t(bulk loading (3 mon)) + t(bulk loading (3 mon + 1 d)) | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Data correctness | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |#((bulk loading (3 mon)) + (incremental loading (1 d))) = #(bulk loading (3 mon + 1 d)) | |||

|- | |||

|} | |||

|} | |||

For both ETL test designs, we used a total of 3,802,121 synthetic FHIR resources version R4 based on the MI-I CDS version 1.0, which were generated using random values. Furthermore, we simulated CUD-FHIR resources for testing incremental loading for one day. For the simulation, we checked the frequency distribution of CUD data per domain in our source system with real-world data for eight days and calculated the average value (see Multimedia Appendix 4). In addition, we set up one OMOP CDM v5.3.1 database as the target and executed the ETL process according to our test designs. For both ETL tests, we tracked the execution times based on the time stamps in the logging file of the ETL process until the corresponding job finished successfully. In a second step, we recorded the data quantity for each filled table in OMOP CDM and compared the results between the two ETL loading options for the second ETL test. | |||

==Results== | |||

===Architecture of the ETL process=== | |||

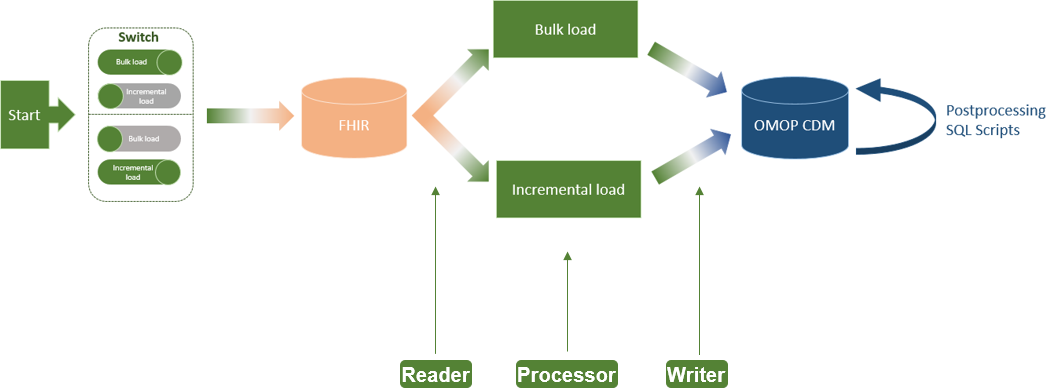

The implemented ETL process extension for incremental loading of FHIR resources to OMOP CDM has not changed the basic architecture of the ETL process, as proposed by Peng ''et al.''<ref name=":0" />, consisting of Reader, Processor, and Writer (Figure 2). The only addition is a switch at the beginning of the ETL process, which allows the user to select between bulk load and incremental load (requirement A). Moreover, we configured the Reader for incremental loading of CUD-FHIR resources on a daily basis (requirement B). In the Processor, we added the logic of the verification of CUD-FHIR resources and their deletion from OMOP CDM if they already exist (requirement C). | |||

[[File:Fig2 Henke JMIRMedInfo2023 11.png|900px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="900px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 2.''' Architecture of the FHIR-to-OMOP extract-transform-load process including incremental load. CDM: Common Data Model; FHIR: Fast Healthcare Interoperability Resources; OMOP: Observational Medical Outcomes Partnership.</blockquote> | |||

|- | |||

|} | |||

|} | |||

The ETL process covering bulk and incremental load is available in the OHDSI repository ETL-German-FHIR-Core.<ref>{{Cite web |last=OHDSI |date=2023 |title=OHDSI / ETL-German-FHIR-Core |work=GitHub |url=https://github.com/OHDSI/ETL-German-FHIR-Core |accessdate=15 March 2023}}</ref> | |||

===Findings of the first ETL test=== | |||

The first ETL test focused on the performance measurement of the mapping table approach versus the column approach. First, we executed both ETL approaches as a bulk load. The column approach took about 30 minutes to transform FHIR resources to OMOP CDM. In contrast, the mapping table approach had still not finished after four hours. Therefore, we stopped the ETL execution and did not go on to test incremental loading with this approach. Consequently, for the incremental ETL design, we decided to use the column approach due to its better performance and executed the subsequent performance evaluations with it.

===Findings of the second ETL test=== | |||

The second ETL test addressed our two hypotheses in Table 2. First, we compared the execution times between a bulk load (three months plus one day) and an initial bulk load (three months) followed by an incremental load (one day). For this, each loading option was executed three times. Based on the results, we calculated the average execution times. The performance results (Multimedia Appendix 5) show that an initial bulk load (13.31 minutes) followed by a daily incremental load (2.12 minutes) is more efficient than an everyday full load (17.07 minutes). In relative terms, incremental loading took 87.5% less execution time than a daily full load (2.12 minutes compared to 17.07 minutes). This confirms our first hypothesis.
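The arithmetic behind the performance comparison can be reproduced with a few lines of Python. This is only a sketch using the average execution times quoted above; the variable and function names are chosen for illustration. Because the quoted averages are rounded, the computed reduction comes out at roughly 87.6%, which is close to the 87.5% reported.

```python
# Average execution times in minutes, as quoted in the text.
T_BULK_3_MONTHS = 13.31          # initial bulk load (three months)
T_INCREMENTAL_1_DAY = 2.12       # incremental load (one day)
T_BULK_3_MONTHS_1_DAY = 17.07    # full bulk load (three months plus one day)


def relative_reduction(baseline, candidate):
    """Return how much shorter `candidate` is than `baseline`, in percent."""
    return (baseline - candidate) / baseline * 100


# Performance hypothesis from Table 2:
# t(bulk 3 mon) + t(incremental 1 d) < t(bulk 3 mon) + t(bulk 3 mon + 1 d)
incremental_path = T_BULK_3_MONTHS + T_INCREMENTAL_1_DAY
bulk_only_path = T_BULK_3_MONTHS + T_BULK_3_MONTHS_1_DAY
hypothesis_holds = incremental_path < bulk_only_path

# Daily saving of an incremental load compared with a daily full load.
daily_saving_percent = relative_reduction(T_BULK_3_MONTHS_1_DAY, T_INCREMENTAL_1_DAY)

if __name__ == "__main__":
    print(f"incremental path: {incremental_path:.2f} min")
    print(f"bulk-only path:   {bulk_only_path:.2f} min")
    print(f"hypothesis holds: {hypothesis_holds}")
    print(f"daily saving:     {daily_saving_percent:.2f} %")
```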

After executing both loading options, we checked the data quantity for each filled table in OMOP CDM and compared the results. As shown in Table 3, both loading options resulted in the same amount of data (Multimedia Appendix 5). Consequently, we were also able to confirm our second hypothesis regarding data correctness in OMOP CDM.

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="60%" | |||

|- | |||

| colspan="3" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 3.''' Results of the data quantity comparison in the Observational Medical Outcomes Partnership Common Data Model (OMOP CDM) between bulk and incremental load. | |||

|- | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Data field | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Bulk load (three months + one day; ''n'') | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Bulk load (three months) + incremental load (one day) (''n'') | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Care_site | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |152 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |152 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Condition_occurrence | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |800,640 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |800,640 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Death | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |857 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |857 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Drug_exposure | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |1,171,521 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |1,171,521 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Fact_relationship | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |2,323,894 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |2,323,894 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Measurement | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |231,369 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |231,369 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Observation | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |511,844 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |511,844 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Observation_period | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |15,037 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |15,037 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Person | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |15,037 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |15,037 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Procedure_occurrence | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |168,384 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |168,384 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Source_to_concept_map | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |251 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |251 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Visit_detail | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |43,929 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |43,929 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Visit_occurrence | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |29,898 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |29,898 | |||

|- | |||

|} | |||

|} | |||
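A row-count comparison like the one in Table 3 can be automated. The following Python sketch hard-codes the counts from Table 3 and reports any table whose counts differ between the two loading options; in practice the counts would come from queries against the two OMOP CDM databases, and the function name is an assumption made for this example.

```python
# Row counts per OMOP CDM table, taken from Table 3.
BULK_ONLY = {
    "care_site": 152,
    "condition_occurrence": 800_640,
    "death": 857,
    "drug_exposure": 1_171_521,
    "fact_relationship": 2_323_894,
    "measurement": 231_369,
    "observation": 511_844,
    "observation_period": 15_037,
    "person": 15_037,
    "procedure_occurrence": 168_384,
    "source_to_concept_map": 251,
    "visit_detail": 43_929,
    "visit_occurrence": 29_898,
}

# Bulk load (three months) followed by incremental load (one day).
BULK_PLUS_INCREMENTAL = dict(BULK_ONLY)


def count_mismatches(expected, actual):
    """Return {table: (expected_count, actual_count)} for every differing table.

    Tables present in only one of the inputs are reported with None
    for the missing side.
    """
    tables = sorted(set(expected) | set(actual))
    return {
        table: (expected.get(table), actual.get(table))
        for table in tables
        if expected.get(table) != actual.get(table)
    }


if __name__ == "__main__":
    mismatches = count_mismatches(BULK_ONLY, BULK_PLUS_INCREMENTAL)
    print("identical" if not mismatches else mismatches)
```

Note that equal row counts are the criterion used in the second hypothesis; they do not by themselves show that the content of each row is identical.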

==Discussion== | |||

===Principal findings=== | |||

Based on the partial results for research questions one and two, we defined three methods to integrate incremental loading into our ETL process design. In this context, the three identified requirements from the initial analysis could be implemented by taking existing approaches from the literature into account (research question three). Moreover, the incremental load process was tested at 10 university hospitals in Germany and ensures daily data transfer to OMOP CDM for the CTRSS. This proves that the ETL process is also suitable for real-world data, although it was developed with synthetic data. | |||

Currently, our ETL process requires FHIR resources following the MI-I core data set specification. However, our initial requirements analysis showed that the implemented incremental ETL logic does not affect the semantic mapping from FHIR to OMOP CDM described by Peng ''et al.''<ref name=":0" /> In consequence, the incremental ETL logic is independent of the data available in the FHIR format. Therefore, it can be used to incrementally transform international FHIR profiles such as the US Core Profiles<ref>{{Cite web |date=2023 |title=US Core Implementation Guide |work=HL7 FHIR |url=https://www.hl7.org/fhir/us/core/ |publisher=Health Level 7 International |accessdate=15 March 2023}}</ref> to OMOP CDM. | |||

===Limitations=== | |||

Nevertheless, the ETL process has limitations in its execution capabilities. As our FHIR resources comprise a logical id corresponding to the id in our source system, our ETL process is currently not able to deal with changing server end points, resulting in changing logical ids. Additionally, so far, we have not included an option to automatically start incremental loading nor do we support real-time streaming (e.g., via Apache Kafka<ref>{{Cite web |date=2023 |title=Apache Kafka |url=https://kafka.apache.org/ |publisher=Apache Software Foundation |accessdate=15 March 2023}}</ref>). These limitations are part of future work. | |||

To evaluate incremental loading compared to bulk loading, we performed two ETL tests (research question four). The results of the performance tests showed that the column approach is more performant than the mapping table approach. Our suspected explanation for this is that the mapping table approach requires additional tables to be filled besides the standardized tables in OMOP CDM. Consequently, during the verification of FHIR resources in OMOP CDM, a lookup and deletion in several tables (mapping tables and standardized tables) is necessary, whereas the column approach only accesses the standardized tables. | |||

Both of our hypotheses regarding performance and data correctness for incremental loading compared to bulk loading were confirmed. With the option of an incremental ETL process, we were able to reduce execution times to provide data in OMOP CDM on a daily basis, without data loss compared to the bulk load ETL process. For our future work, we want to further evaluate at what point bulk load is more worthwhile than incremental loading. The results of these evaluations will be incorporated into the automation concept.

During the productive use of the ETL process, we identified two issues that have to be considered in the context of incremental loading to OMOP CDM. First, OHDSI provides a wide range of open-source tools (e.g., ATLAS<ref>{{Cite web |last=OHDSI |date=2023 |title=OHDSI / Atlas |work=GitHub |url=https://github.com/OHDSI/Atlas |accessdate=15 March 2023}}</ref>) for cohort definitions or statistical analyses. To make ATLAS work on the data in OMOP CDM, a summary report has to be generated in advance using ACHILLES<ref>{{Cite web |last=OHDSI |date=2023 |title=OHDSI / Achilles |work=GitHub |url=https://github.com/OHDSI/Achilles |accessdate=15 March 2023}}</ref> (Automated Characterization of Health Information at Large-Scale Longitudinal Evidence Systems), an [[R (programming language)|R package]] that provides characterization and visualization. Regarding the incremental ETL process, ACHILLES has to be run after each successful execution of the incremental ETL process. | |||

A second issue that needs to be addressed relates to the ids in the standardized tables in OMOP CDM. The incremental loading process requires the assignment of new ids in OMOP CDM. While this was not a problem during development, it became apparent when a large amount of data was processed. In this context, the maximum id in the tables of OMOP CDM was reached, which led to a failure of the ETL process. We need to pay special attention to this point and find a solution (e.g., by reusing deleted ids or by changing the ETL process into a true update-based ETL process). As this problem does not occur during the bulk load process, a current workaround is to start that process if the incremental load fails, which is possible as our process comprises bulk and incremental load options.

==Conclusions== | |||

The presented ETL process from FHIR to OMOP CDM now enables both bulk and incremental loading. To receive daily updated recruitment proposals with the CTRSS, the ETL process no longer needs to be executed as a bulk load every day. One initial load supplemented by incremental loads per day meets the requirements of the CTRSS while being more performant. Moreover, since the incremental ETL logic is not restricted to the MI-I CDS specification, it can also be used for international studies that require daily updated data from FHIR resources in OMOP CDM. To be able to use not only the logic of incremental loading internationally, but the whole ETL process itself, the support of arbitrary FHIR profiles is needed. This requires a modularization and generalization of current ETL processes. For that, we will evaluate the extension to metadata-driven ETL in the near future. | |||

==Abbreviations, acronyms, and initialisms== | |||

*'''ACHILLES''': Automated Characterization of Health Information at Large-Scale Longitudinal Evidence Systems | |||

*'''CDC''': change data capture | |||

*'''CDM''': Common Data Model | |||

*'''CDS''': Core Data Set | |||

*'''CTRSS''': clinical trial recruitment support system | |||

*'''CUD''': created, updated, or deleted | |||

*'''ETL''': extract-transform-load | |||

*'''FHIR''': Fast Healthcare Interoperability Resources | |||

*'''HL7''': Health Level Seven | |||

*'''MI-I''': Medical Informatics Initiative Germany | |||

*'''MIRACUM''': Medical Informatics in Research and Care in University Medicine | |||

*'''OHDSI''': Observational Health Data Sciences and Informatics | |||

*'''OMOP''': Observational Medical Outcomes Partnership | |||

==Supplementary information== | |||

*[https://jmir.org/api/download?alt_name=medinform_v11i1e47310_app1.xlsx&filename=89f74351-403d-11ee-9361-2d531ddb8182.xlsx Multimedia Appendix 1]: Results and screenings of the literature review on July 14, 2021 (.xlsx) | |||

*[https://jmir.org/api/download?alt_name=medinform_v11i1e47310_app2.xlsx&filename=8a2dbca1-403d-11ee-9361-2d531ddb8182.xlsx Multimedia Appendix 2]: Results and screenings of the literature review on November 28, 2022 (.xlsx) | |||

*[https://jmir.org/api/download?alt_name=medinform_v11i1e47310_app3.xlsx&filename=8a56c871-403d-11ee-9361-2d531ddb8182.xlsx Multimedia Appendix 3]: Results and screenings of the literature review on February 22, 2023 (.xlsx) | |||

*[https://jmir.org/api/download?alt_name=medinform_v11i1e47310_app4.xlsx&filename=8a7cc701-403d-11ee-9361-2d531ddb8182.xlsx Multimedia Appendix 4]: Frequency distribution of created, updated, and deleted data (.xlsx) | |||

*[https://jmir.org/api/download?alt_name=medinform_v11i1e47310_app5.xlsx&filename=8a95cd41-403d-11ee-9361-2d531ddb8182.xlsx Multimedia Appendix 5]: Results of the second extract-transform-load test. | |||

==Acknowledgements== | |||

The research reported in this work was accomplished as part of the German Federal Ministry of Education and Research within the Medical Informatics Initiative, Medical Informatics in Research and Care in University Medicine (MIRACUM) consortium (FKZ: 01lZZ180L; Dresden). The article processing charge was funded by the joint publication funds of the Technische Universität, Dresden, including the Carl Gustav Carus Faculty of Medicine, and the Sächsische Landesbibliothek – Staats- und Universitätsbibliothek, Dresden, as well as the Open Access Publication Funding of the Deutsche Forschungsgemeinschaft. | |||

===Author contributions=== | |||

All authors contributed substantially to this work. EH and MZ conducted the literature review. EH and YP contributed to the extract-transform-load (ETL) process design and implementation. EH, YP, IR, and FB reviewed the ETL process design and implementation. YP contributed to the ETL process execution and evaluation. EH prepared the original draft. EH, YP, IR, FB, MZ, and MS reviewed and edited the manuscript. MS contributed toward the resources. All authors have read and agreed to the current version of the manuscript and take responsibility for the scientific integrity of the work. | |||

===Conflicts of interest=== | |||

None declared. | |||

==References==

Latest revision as of 23:47, 4 December 2023

| Full article title | An extract-transform-load process design for the incremental loading of German real-world data based on FHIR and OMOP CDM: Algorithm development and validation |
|---|---|
| Journal | JMIR Medical Informatics |
| Author(s) | Henke, Elisa; Peng, Yuan; Reinecke, Ines; Zoch, Michéle; Sedlmayr, Martin; Bathelt, Franziska |
| Author affiliation(s) | Technische Universität Dresden |
| Primary contact | Email: elisa dot henke at tu dash dresden dot de |
| Editors | Lovis, Christian |
| Year published | 2023 |
| Volume and issue | 11 |
| Article # | e47310 |
| DOI | 10.2196/47310 |
| ISSN | 2291-9694 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://medinform.jmir.org/2023/1/e47310 |
| Download | https://medinform.jmir.org/2023/1/e47310/PDF (PDF) |

Abstract

Background: In the Medical Informatics in Research and Care in University Medicine (MIRACUM) consortium, an IT-based clinical trial recruitment support system was developed based on the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM). Currently, OMOP CDM is populated with German Fast Healthcare Interoperability Resources (FHIR) data using an extract-transform-load (ETL) process, which was designed as a bulk load. However, the computational effort that comes with an everyday full load is not efficient for daily recruitment.

Objective: The aim of this study is to extend our existing ETL process with the option of incremental loading to efficiently support daily updated data.

Methods: Based on our existing bulk ETL process, we performed an analysis to determine the requirements of incremental loading. Furthermore, a literature review was conducted to identify adaptable approaches. Based on this, we implemented three methods to integrate incremental loading into our ETL process. Lastly, a test suite was defined to evaluate the incremental loading for data correctness and performance compared to bulk loading.

Results: The resulting ETL process supports bulk and incremental loading. Performance tests show that the incremental load took 87.5% less execution time than the bulk load (2.12 minutes compared to 17.07 minutes) related to changes of one day, while no data differences occurred in OMOP CDM.

Conclusions: Since incremental loading is more efficient than a daily bulk load, and both loading options result in the same amount of data, we recommend using bulk load for an initial load and switching to incremental load for daily updates. The resulting incremental ETL logic can be applied internationally since it is not restricted to German FHIR profiles.

Keywords: extract-transform-load, ETL, incremental loading, OMOP CDM, FHIR, interoperability, Observational Medical Outcomes Partnership Common Data Model, Fast Healthcare Interoperability Resources

Introduction

Background and significance

Randomized controlled clinical trials are the gold standard to “measure the effectiveness of a new intervention or treatment.”[1] However, randomized controlled clinical trials are limited regarding the representative number of persons included and, therefore, are restricted in their external generalizability. To gain more unbiased evidence, observational studies focus on real-world data from large heterogeneous populations.

To support observational research, we at the Institute for Medical Informatics and Biometry at Technische Universität Dresden already provide a transferable extract-transform-load (ETL) process[2] to transform German real-world data to the Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM)[3] provided by Observational Health Data Sciences and Informatics (OHDSI).[4] This transformation effort supports the possibilities for multicentric and even international studies. Due to the heterogeneity of the structure and content of the data from the data integration centers within the Medical Informatics Initiative Germany (MI-I)[5], the Health Level 7 (HL7)[6] Fast Healthcare Interoperability Resources (FHIR) communication standard was specified among all German university hospitals. Consequently, we used FHIR as the source for our ETL process. The FHIR specification is given by the core data set of the MI-I.[7] FHIR resources can be read from an FHIR Gateway[8] (PostgreSQL database) or FHIR Server (e.g., HAPI[9] or Blaze[10]). As the target of our ETL process, we used OMOP CDM v5.3.1.[11] The implementation of the ETL process was done using the open-source framework Java SpringBatch.[12] Our ETL process has been implemented in accordance with the default assumption as described in The Book of OHDSI[13], where the OHDSI community defines the ETL process as a full load to transfer data from source to target systems.

This approach is efficient for a dedicated study where data gets loaded once without any update afterward; however, it is inefficient when updated data is needed on a daily basis. The latter is the case for the developments around the improvement and support of the recruitment process for clinical trials, which the Medical Informatics in Research and Care in University Medicine (MIRACUM)[14] consortium, as part of the MI-I funded by the German Federal Ministry of Education and Research, is working on. In this context, an IT-based clinical trial recruitment support system (CTRSS) based on OMOP CDM was implemented.[15] The CTRSS consists of a screening list for recruitment teams that provides potential candidates for clinical trials and is updated on a daily basis. To enable the CTRSS to provide recruitment proposals, it is necessary to transform the data in FHIR format at each of the 10 MIRACUM data integration centers into the standardized format of OMOP CDM. The processing of FHIR resources to OMOP CDM through our ETL process has already been successfully tested and integrated at all 10 German university hospitals of the MIRACUM consortium.

So far, our ETL process is restricted to a bulk load of FHIR resources to OMOP CDM. This implies that all FHIR resources are read from the source. To enable the CTRSS to provide daily recruitment proposals, our ETL process has to be executed every day as a full load. However, an everyday full load is not efficient because often only a small amount of source data has changed between loads, which results in unnecessarily long execution times for daily executions. Consequently, the computational effort that comes with the daily execution of the bulk load is not efficient in the context of the CTRSS.

Thus, a new approach is needed to only process FHIR resources that were created, updated, or deleted (CUD) since the last execution of the ETL process once an initial load has been executed. This loading option is known as "incremental loading."

Objective

To keep the bulk load option for dedicated studies and still be performant toward daily changes in the source data, a combination of bulk load and incremental load is needed. To reduce the additional effort in implementing a second independent ETL process for incremental loading, it is our aim to extend our existing ETL process with the option of incremental loading. During our research, we focused on the following four research questions:

- What requirements need to be considered when integrating incremental loading into our existing ETL process design?

- What approaches already exist for incremental ETL processes?

- How can the identified requirements from research question one be implemented in our existing ETL process design?

- Does incremental loading provide an advantage over daily bulk loading?

Methods

Analysis of the existing ETL FHIR-to-OMOP process

To determine the requirements for integrating incremental loading into our existing ETL process design, we performed an impact analysis focusing on the whole ETL process, as well as, in more detail, its three main components, namely, Reader, Processor, and Writer, as presented by Peng et al.[2] Regarding the whole ETL process, the following three requirements were identified:

- Requirement A: It is necessary to provide the user with the ability to distinguish between bulk loading and incremental loading.

- Requirement B: For incremental loading, it is further essential that the Reader of the ETL process is able to detect changes in the source system and read only CUD-FHIR resources on a daily basis.

- Requirement C: During the processing of updated and deleted FHIR resources, duplicates and obsolete data should be avoided in OMOP CDM to guarantee data correctness.

Incremental loading has no impact on either the semantic mapping from FHIR MI-I Core Data Set (CDS) to OMOP CDM or the Writer of the ETL process, as described by Peng et al.[2] In summary, incremental loading requires an adjustment of the implementation of the Reader and Processor.

Literature review

To identify approaches that might be adaptable to our existing ETL design and fulfill the three requirements in the previous section, we conducted a first literature review on July 14, 2021; a second one on November 28, 2022; and a third one on February 22, 2023 (Multimedia Appendices 1, 2, and 3). Table 1 includes the search strings and the number of results for three literature databases.


We included only articles from 2011 to 2022 in English. After removing duplicates, 51 items were left. These were screened independently by two authors (EH and MZ). Through the title and abstract screening, we identified 12 relevant articles. After the screening of the full texts, we included eight articles within our research. Reasons for excluding the other articles were other meanings of the abbreviation “ETL,” ETL tools without regard to theoretical approaches of incremental loads, focus on application instead of ETL process and theoretical approach, and quality and error handling without focus on a theoretical approach.

Only two of the eight articles addressed ETL processes for loading patient data into OMOP CDM. Lynch et al.[16] introduced an approach for incremental transformation from the data warehouse to OMOP CDM to prevent incremental load errors. They suggest basing the development on a quality assurance (QA) process dependent on the data quality framework by Kahn et al.[17] Furthermore, they generated ETL batch tracking ids for each record of data during the transformation to OMOP CDM. For 1:1 mappings, they created custom columns in the standardized OMOP CDM tables, and for 1:n or n:1 mappings, they used a parallel mapping table to store the ETL batch id and a link to the corresponding record in OMOP CDM. Secondly, Lenert et al.[18] describe an automated transformation of clinical data into two CDMs (OMOP and PCORnet database) by using FHIR. Therefore, they use the so-called subscriptions of FHIR resources. These subscriptions trigger a function to create a copy of the FHIR resource and its transmission into another system whenever a FHIR resource is created or updated.

Despite OMOP CDM being the target database, the literature search revealed different concepts for incremental ETL itself. Kathiravelu et al.[19] described the caching of new or updated data in a temporary table. Of the eight articles, seven described various methods for incremental updates, particularly focusing on change data capture (CDC). All describe different categories of CDC, like timestamp-based, audit column–based, trigger-based, log-based, application programming interface–based, and data-based snapshots[16][18][20][21][22][23][24]: (1) Lynch et al.[16] and (2) Lenert et al.[18] focused on triggers; (3) Wen[20] focused on timestamps and triggers; (4) Thulasiram and Ramaiah[21] and (5) Sun[22] focused on timestamps; (6) Hu and Dessloch[23] focused on timestamps, audit columns, logs, triggers, and snapshots; and (7) Wei Du and Zou[24] focused on snapshots and MapReduce.

In summary, the literature review revealed adaptable approaches, which can be applied for the implementation of requirements B and C. However, no approaches could be found in the literature for requirement A. For this reason, we have to define a new method to enable both bulk and incremental loading in one ETL process. The concrete integration of the approaches into our existing ETL design is described in more detail in the following sections.

Incremental ETL process design

Enabling both bulk and incremental loading

To specify whether the ETL process should be executed as a bulk or an incremental load, we added a new Boolean parameter called APP_BULKLOAD_ENABLED to the configuration file of the ETL process. The parameter has to be set according to the desired loading option before the ETL process is executed: “true” results in a bulk load and “false” results in an incremental load. During execution, the Reader and Processor of the ETL process[2] take this parameter into account to distinguish between the needs of bulk and incremental load (e.g., to ensure that the OMOP CDM database is not emptied at the beginning of the ETL process execution during an incremental load).
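The effect of this switch can be sketched as follows. This is a minimal illustration in Python, not the published implementation: only the parameter name APP_BULKLOAD_ENABLED comes from the text, while the functions `parse_bool` and `plan_run` and the keys of the returned plan are hypothetical.

```python
def parse_bool(value: str) -> bool:
    """Interpret a configuration string as a Boolean ("true" or "false")."""
    normalized = value.strip().lower()
    if normalized not in ("true", "false"):
        raise ValueError(f"expected 'true' or 'false', got {value!r}")
    return normalized == "true"


def plan_run(config: dict) -> dict:
    """Derive the loading mode from APP_BULKLOAD_ENABLED (hypothetical helper).

    A bulk load starts from an emptied target database; an incremental
    load must keep the existing OMOP CDM content.
    """
    bulk = parse_bool(config["APP_BULKLOAD_ENABLED"])
    return {
        "mode": "bulk" if bulk else "incremental",
        "truncate_target": bulk,  # never empty the target for an incremental load
    }
```

For example, `plan_run({"APP_BULKLOAD_ENABLED": "false"})` selects the incremental mode and leaves the target database untouched at start-up.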

Focusing on CUD-FHIR resources since the last ETL execution

Our purpose of incremental loading was to focus only on CUD-FHIR resources since the last time the ETL process was executed (whether as bulk or incremental load). Consequently, the ETL process for incremental load has to filter only CUD-FHIR resources from the source. The literature research showed that there are various CDC approaches to detect changes in the source. In our case, FHIR resources in the FHIR Gateway and FHIR Server contain metadata, such as a timestamp indicating when an FHIR resource was created, updated, or deleted in the source (FHIR Gateway: column last_updated_at; FHIR Server: meta.lastUpdated). That is why we used the timestamp-based CDC approach to select those FHIR resources whose timestamp is later than the last ETL execution time.

To ensure filtering for the incremental load, we added two new parameters in the configuration file of the ETL process: DATA_BEGINDATE and DATA_ENDDATE. Both parameters have to be adjusted before executing the ETL process as incremental load. During the execution, the ETL process takes these two parameters into account and only reads FHIR resources from the source whose metadata timestamp lies in the closed interval [DATA_BEGINDATE, DATA_ENDDATE].
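A timestamp-based filter of this kind can be sketched as below. The sketch assumes that resources are available as Python dictionaries shaped like FHIR JSON and that both configuration values are ISO 8601 timestamps with a time zone; the actual value format of DATA_BEGINDATE and DATA_ENDDATE is not specified here, and the function names are hypothetical.

```python
from datetime import datetime


def parse_ts(value: str) -> datetime:
    """Parse an ISO 8601 timestamp; a trailing 'Z' is treated as UTC."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def select_changed(resources, begin_date: str, end_date: str):
    """Keep resources whose meta.lastUpdated lies in [begin_date, end_date]."""
    lower, upper = parse_ts(begin_date), parse_ts(end_date)
    return [
        resource
        for resource in resources
        # Both interval bounds are inclusive, matching the closed interval.
        if lower <= parse_ts(resource["meta"]["lastUpdated"]) <= upper
    ]
```

Because both bounds are inclusive, a resource stamped exactly at the end of one run and the start of the next would be read twice; the existence check described in the following section makes such repeated processing harmless.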

Guarantee data correctness in OMOP CDM

To avoid duplicates in OMOP CDM when processing updated and deleted FHIR resources, their existence in OMOP CDM has to be checked during processing. The FHIR resources themselves do not have a flag that indicates whether they are new or have been changed. Only deleted FHIR resources can be identified by a specific flag in the metadata. To assess the existence of FHIR resources in OMOP CDM, a comparison of the data of the read FHIR resources with the data already available in OMOP CDM has to be done.

The literature research showed an approach to generate a unique tracking id per source data during the transformation process and its storage in OMOP CDM.[16] We decided against the approach of generating an additional id because FHIR resources already contain two identifying FHIR elements themselves: id and identifier. The id represents the logical id of the resource per resource type, while the identifier specifies an identifier that is part of the source data. Both FHIR elements allow the unique identification of an FHIR resource per resource type. However, the standardized OMOP CDM tables do not provide the possibility to store this information from FHIR. Furthermore, OMOP CDM has its own primary keys for each record in a table independent of the id and identifier used in FHIR. Consequently, after transforming FHIR resources to OMOP CDM, the identifying data from FHIR resources will be lost.

To solve this problem, we need to store the mapping between the id and identifier used in FHIR and the id used in OMOP CDM. Because the id of an FHIR resource is only unique per resource type and one FHIR resource can be stored in OMOP CDM in multiple tables, we additionally have to specify the resource type. As mentioned above, Lynch et al.[16] presented an approach to store the mapping between tracking ids for source records and ids used in OMOP CDM by using a mapping table and custom columns in OMOP CDM. We have slightly customized this approach and adapted it into our ETL design. Instead of combining mapping tables and custom columns, we considered each approach separately.

Our first approach uses mapping tables for each FHIR resource type in a separate schema in OMOP CDM. With this approach, the Writer of the ETL process has to fill additional mapping tables besides the standardized tables in OMOP CDM. Our second approach focuses on two new columns in the standardized tables in OMOP CDM called “fhir_logical_id” and “fhir_identifier.” These columns store the id and identifier of the FHIR resource. Furthermore, we prepended an abbreviation of the resource type as a prefix to the id and identifier of FHIR (e.g., “med-” for Medication, “mea-” for MedicationAdministration, or “mes-” for MedicationStatement FHIR resources). Consequently, storing the combination of the prefix with the id and identifier in OMOP CDM enables the unique identification of FHIR resources in OMOP CDM. Since the mapping tables and two new columns are required exclusively for the ETL process, the analysis of data across multiple OMOP CDM databases is not affected.
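The construction of the two column values can be illustrated with a short sketch. The three prefixes and the column names are taken from the text; the function name, the dictionary layout of the resource, and the choice to use the value of the first identifier entry are assumptions made for illustration only.

```python
# Prefixes named in the text; other resource types would need their own entry.
PREFIXES = {
    "Medication": "med-",
    "MedicationAdministration": "mea-",
    "MedicationStatement": "mes-",
}


def tracking_columns(resource: dict) -> dict:
    """Build the values for fhir_logical_id and fhir_identifier (hypothetical helper)."""
    prefix = PREFIXES[resource["resourceType"]]
    identifiers = resource.get("identifier") or []
    # Assumption: the value of the first identifier entry is the one stored.
    first_value = identifiers[0].get("value") if identifiers else None
    return {
        "fhir_logical_id": prefix + resource["id"],
        "fhir_identifier": (prefix + first_value) if first_value else None,
    }
```

Two resources of different types that share the logical id “123” thus yield “med-123” and “mes-123”, which keeps them distinguishable once they are stored in OMOP CDM.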

Based on the unique identification of FHIR resources in OMOP CDM, it is now possible to guarantee data correctness in OMOP CDM during incremental loading. Figure 1 shows the exemplary data flow for Condition FHIR resources for the second approach with two new columns. First, the Processor extracts the id and identifier used in FHIR. After that, the prefix is added to both values. Regardless of whether the data was created, updated, or deleted in the source, the ETL process next verifies each processed FHIR resource’s existence in OMOP CDM using the mapping tables or two new columns. During the verification, matching records are deleted from OMOP CDM if they are found. This approach is also used for updated FHIR resources to avoid incomplete updates for cross-domain mappings in OMOP CDM. Consequently, we do not perform updates on the existing records in OMOP CDM, except for Patient and Encounter FHIR resources, which are updated in place to ensure referential integrity in OMOP CDM. If FHIR resources are marked as deleted in the source, the processing is then complete. Otherwise, the same semantic mapping logic as for bulk loading[2] applies afterward, and the data of the FHIR resources are written to OMOP CDM as new records with new OMOP ids.
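The delete-then-insert flow for the column approach can be sketched with an in-memory SQLite database. This is a simplified illustration, not the published implementation: the table is reduced to three columns, the prefix “con-” for Condition resources and the “deleted” flag on the input dictionary are hypothetical, and the semantic mapping is replaced by copying a single code value.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a reduced condition_occurrence table with the extra tracking column."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE condition_occurrence ("
        " condition_occurrence_id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " condition_source_value TEXT,"
        " fhir_logical_id TEXT)"
    )
    return conn


def apply_condition(conn: sqlite3.Connection, resource: dict) -> None:
    """Process one created, updated, or deleted Condition resource."""
    logical_id = "con-" + resource["id"]  # hypothetical prefix for Condition
    # Verification step: remove any record written for this resource earlier.
    conn.execute(
        "DELETE FROM condition_occurrence WHERE fhir_logical_id = ?",
        (logical_id,),
    )
    if resource.get("deleted"):  # hypothetical flag for deleted resources
        return  # nothing is written for a deleted resource
    # Created or updated: write a fresh record, which receives a new OMOP id.
    conn.execute(
        "INSERT INTO condition_occurrence (condition_source_value, fhir_logical_id)"
        " VALUES (?, ?)",
        (resource["code"], logical_id),
    )
```

Processing the same logical id twice leaves exactly one record carrying the latest content, which is why an update never produces a duplicate; the in-place updates for Patient and Encounter resources are not covered by this sketch.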

Evaluation of the incremental load process

For the evaluation of the incremental load process, we defined and executed two ETL test designs. First, we tested which of the two approaches to store the mapping between the id and identifier used in FHIR and the id used in OMOP CDM was more performant. For this purpose, we implemented a separate ETL process version for each approach. We then executed the ETL process first as a bulk load and afterward as an incremental load, and compared the execution times between the mapping table approach and the column approach. For further evaluation of the incremental load process, we chose the more performant approach, resulting in a new optimized ETL process version for the second ETL test design.

To test the achievement of the three requirements identified during the initial analysis of our ETL process, we defined and executed a second ETL test design (Table 2) that compares the results of bulk loading with those of incremental loading regarding performance and data correctness. Our hypotheses here are that the execution time of incremental loading alone is shorter than that of bulk loading, including daily updates, and that the amount of data per table in OMOP CDM is identical after incremental loading and bulk loading, including daily updates.

For both ETL test designs, we used a total of 3,802,121 synthetic FHIR resources version R4 based on the MI-I CDS version 1.0, which were generated using random values. Furthermore, we simulated CUD-FHIR resources for testing incremental loading for one day. For the simulation, we checked the frequency distribution of CUD data per domain in our source system with real-world data for eight days and calculated the average value (see Multimedia Appendix 4). In addition, we set up one OMOP CDM v5.3.1 database as the target and executed the ETL process according to our test designs. For both ETL tests, we tracked the execution times based on the timestamps in the logging file of the ETL process until the corresponding job finished successfully. In a second step, we recorded the data quantity for each filled table in OMOP CDM and compared the results between the two ETL loading options for the second ETL test.

Results

Architecture of the ETL process

The implemented ETL process extension for incremental loading of FHIR resources to OMOP CDM has not changed the basic architecture of the ETL process, as proposed by Peng et al.[2], consisting of Reader, Processor, and Writer (Figure 2). The only addition is a switch at the beginning of the ETL process, which allows the user to select between bulk load and incremental load (requirement A). Moreover, we configured the Reader for incremental loading of CUD-FHIR resources on a daily basis (requirement B). In the Processor, we added the logic of the verification of CUD-FHIR resources and their deletion from OMOP CDM if they already exist (requirement C).

The ETL process covering bulk and incremental load is available in the OHDSI repository ETL-German-FHIR-Core.[25]

Findings of the first ETL test

The first ETL test focused on the performance measurement of the mapping table approach versus the column approach. First, we executed both ETL approaches as a bulk load. The column approach took about 30 minutes to transform FHIR resources to OMOP CDM. In contrast, the mapping table approach had still not finished after four hours. Therefore, we stopped the ETL execution and did not go on to test incremental loading with this approach. Consequently, for the incremental ETL design, we decided to use the column approach due to its better performance and executed the subsequent performance evaluations with it.

Findings of the second ETL test

The second ETL test dealt with testing our two hypotheses in Table 2. First, we compared the execution times between a bulk load (three months plus one day) and an initial bulk load (three months) followed by an incremental load (one day). For this, each loading option was executed three times. Based on the results, we calculated the average execution times. The performance results (Multimedia Appendix 5) show that an initial bulk load (13.31 minutes) followed by a daily incremental load (2.12 minutes) is more efficient than an everyday full load (17.07 minutes). In relative terms, incremental loading required 87.5% less execution time than a daily full load (2.12 minutes compared to 17.07 minutes). These results confirm our first hypothesis.

After the execution of both loading options, we further checked the data quantity for each filled table in OMOP CDM and compared the results. As shown in Table 3, both loading options resulted in the same amount of data (Multimedia Appendix 5). Consequently, we were also able to confirm our second hypothesis regarding data correctness in OMOP CDM.

Discussion

Principal findings

Based on the partial results for research questions one and two, we defined three methods to integrate incremental loading into our ETL process design. In this context, the three identified requirements from the initial analysis could be implemented by taking existing approaches from the literature into account (research question three). Moreover, the incremental load process was tested at 10 university hospitals in Germany and ensures daily data transfer to OMOP CDM for the CTRSS. This proves that the ETL process is also suitable for real-world data, although it was developed with synthetic data.

Currently, our ETL process requires FHIR resources following the MI-I core data set specification. However, our initial requirements analysis showed that the implemented incremental ETL logic does not affect the semantic mapping from FHIR to OMOP CDM described by Peng et al.[2] In consequence, the incremental ETL logic is independent of the data available in the FHIR format. Therefore, it can be used to incrementally transform international FHIR profiles such as the US Core Profiles[26] to OMOP CDM.

Limitations

Nevertheless, the ETL process has limitations in its execution capabilities. As our FHIR resources comprise a logical id corresponding to the id in our source system, our ETL process is currently not able to deal with changing server end points, resulting in changing logical ids. Additionally, so far, we have not included an option to automatically start incremental loading nor do we support real-time streaming (e.g., via Apache Kafka[27]). These limitations are part of future work.

To evaluate incremental loading compared to bulk loading, we performed two ETL tests (research question four). The results of the performance tests showed that the column approach is more performant than the mapping table approach. Our suspected explanation for this is that the mapping table approach requires additional tables to be filled besides the standardized tables in OMOP CDM. Consequently, during the verification of FHIR resources in OMOP CDM, a lookup and deletion in several tables (mapping tables and standardized tables) is necessary, whereas the column approach only accesses the standardized tables.

Regarding our two hypotheses on performance and data correctness between incremental loading and bulk loading, the results confirmed both of our initial assumptions. With the option of an incremental ETL process, we were able to reduce execution times to provide data in OMOP CDM on a daily basis, without data loss compared to the bulk load ETL process. For our future work, we want to further evaluate at what point bulk load is more worthwhile than incremental loading. The results of these evaluations will be incorporated into the automation concept.