

===Integrety validation=== | |||

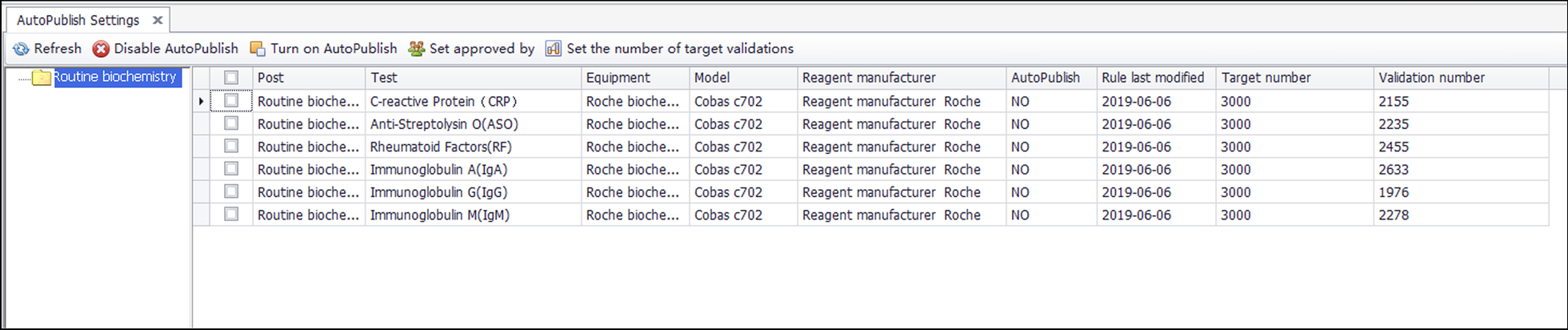

Integrity validation can be started only after the correctness verification of all rules of a project is completed. It is implemented as follows. (1) After the report shows the result of the automatic warning, if the system detects that the report has been changed, a dialog box will pop up and ask the reviewer to select the reason for the modification. These reasons include (a) a rule execution error, (b) a rule setting value that is inappropriate, (c) the required addition of new rules, (d) the lack of involvement of other issues related to automatic review, and (e) automatic warning and prompt modification. The LIS records the modified content and the reasons for personnel analysis. (2) If the laboratory wants to implement automated reporting, a validation number, such as 5,000, can be set according to the complexity of the project review. (3) If the automatic warning result of the report is green (approved), the personnel will issue the report directly, and the validation number of the report will automatically increase by one. (4) If the validation number of all items on the report exceeds the set number, the report will be automatically released. (5) If the automatic warning result of the report is green (approved), but the result is modified, with the reason for the modification specified as any of a, b, or c, then the LIS will clear the validation number for the related items and stop automated reporting. Figure 4 shows the integrity validation process. The validation goals and validation amount for six projects are shown in Fig. 5. | |||




Revision as of 15:31, 10 June 2021

| Full article title | Development and implementation of an LIS-based validation system for autoverification toward zero defects in the automated reporting of laboratory test results |
|---|---|
| Journal | BMC Medical Informatics and Decision Making |
| Author(s) | Jin, Di; Wang, Dezhi; Wang, Jiajia; Li, Bijuan; Cheng, Yating; Mo, Nanxun; Deng, Xiaoyan; Tao, Ran |
| Author affiliation(s) | Jinan Kingmed Center for Clinical Laboratory, Guangzhou Medical University |
| Primary contact | Email: Online form |
| Year published | 2021 |
| Volume and issue | 21 |
| Article # | 174 |
| DOI | 10.1186/s12911-021-01545-3 |
| ISSN | 1472-6947 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-021-01545-3 |
| Download | https://bmcmedinformdecismak.biomedcentral.com/track/pdf/10.1186/s12911-021-01545-3.pdf (PDF) |


This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed.

Abstract

Background: For laboratory informatics applications, validation of the autoverification function is one of the critical steps to confirm its effectiveness before use. It is crucial to verify whether the programmed algorithm follows the expected logic and produces the expected results. This process has always relied on the assessment of human–machine consistency and is mostly a manually recorded and time-consuming activity with inherent subjectivity and arbitrariness that cannot guarantee a comprehensive, timely, and continuous effectiveness evaluation of the autoverification function. To overcome these inherent limitations, we independently developed and implemented a laboratory information system (LIS)-based validation system for autoverification.

Methods: We developed a correctness verification and integrity validation method (hereinafter referred to as the "new method") in the form of a human–machine dialog. The system records personnel review steps and determines whether the human–machine review results are consistent. Laboratory personnel then analyze the reasons for any inconsistency according to system prompts, add to or modify rules, reverify, and finally improve the accuracy of autoverification.

Results: The validation system was successfully established and implemented. For a dataset consisting of 833 rules for 30 assays, 782 rules (93.88%) were successfully verified in the correctness verification phase, and 51 rules were deleted due to execution errors. In the integrity validation phase, 24 projects were easily verified, while the other six projects still required additional rules or changes to rule settings. Taking the hepatitis B virus test as an example, from the setting of 65 rules to the automated release of 3,000 reports, the validation time was reduced from 452 hours (manual verification) to 275 hours (new method), a reduction of 177 hours. Furthermore, 94.6% (168/182) of laboratory users believed that the new method greatly reduced the workload and effectively controlled reporting risk, and they were satisfied with it. Since 2019, over 3.5 million reports have been automatically reviewed and issued without a single clinical complaint.

Conclusion: To the best of our knowledge, this is the first report to realize autoverification validation as a human–machine interaction. The new method effectively controls the risks of autoverification, shortens time consumption, and improves the efficiency of laboratory verification.

Keywords: autoverification, correctness verification, integrity validation, human–computer interaction, risk management, laboratory information system

Background

Autoverification—the use of automated computer-based rules to initially validate laboratory test results[1]—is a powerful tool for the batch processing of test results and has been widely used in recent years. It has obvious advantages in reducing reporting errors, shortening turnaround time (TAT), and improving audit efficiency.[1][2][3][4][5]

Current status and challenges

Our self-developed autoverification system has been used for six years in many disciplines, such as biochemistry, immunology, hematology, microbiology, molecular diagnostics, and pathology. To date, 25,487 rules have been set. The system judges test results 1.1 million times a day and provides audit recommendations for 250,000 report forms, accounting for 87% of the total number of report forms. Approximately 80,000 reports are automatically generated every day. Validation is therefore essential to ensure the effectiveness and safety of the autoverification system. The College of American Pathologists' laboratory accreditation checklist item GEN.43875[6] and International Organization for Standardization's ISO 15189:2012 requirement 5.9.2b[7] both require that autoverification systems undergo functional verification before use.

According to published studies, most laboratories that use autoverification have validated it by having personnel and the automated system review the same results, manually recording whether the two agree, and drawing a conclusion from a statistical analysis of that agreement.[2][4][8][9] This manual verification method is easy to perform but has the following limitations:

- Massive validation workload: Based on the requirements of WS/T 616-2018 (a China Health Organization recommended standard)[10] for validation of the autoverification of quantitative clinical laboratory test results, every test and every sample type involved in the autoverification procedure should be tested; the validation time should be no less than three months and/or the number of reports released should be no less than 50,000; and periodic verification should be performed every year for no less than 10 working days and/or for no less than 5,000 reports. The validation workload is large, and it is difficult to rely on manual comparison and recording, which greatly increases the postanalytical workload.

- Reporting risk: During manual verification, personnel are prone to inertia or judgment errors. Without a systematic control mechanism for this kind of validation, reporting risks can arise that directly affect clinical diagnosis and treatment.[2]

Therefore, there is an urgent need for a verification method that minimizes the workload and systematically controls risks. We report a rule verification system that involves a small workload and is easy to operate, which may serve as a reference for laboratories and manufacturers building their own autoverification systems.

Methods

System design

Based on the Clinical and Laboratory Standards Institute's (CLSI) AUTO10 standard[11] and current review processes, we established an autoverification system with 11 rule categories. Technicians set the rules according to audit requirements and rule categories. Multiple rules can be set for each item, including limit range checks, combined-mode judgments, delta checks, sampling-time validity checks, sample abnormality checks (e.g., hemolysis, lipemia), and quality control checks. The autoverification system determines whether a report is abnormal according to these rules. Tests that do not trigger any rule (i.e., do not match a contradiction pattern defined by the rules) are displayed in green, while tests that trigger a rule are displayed in red, together with the cause of the contradiction. If all the tests in a report are green, the report's barcode is also green. If any test in the report is red, the report shows a red barcode, which signals a warning in the system.
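
The green/red logic described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `Rule`, `ItemResult`, `limit_range`, `evaluate_test`, and `report_barcode` are hypothetical. Only a limit range check is modeled, and the CRP and ALT limits are illustrative numbers rather than clinical reference ranges.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical rule model: a check returns a warning message when the value
# matches a contradiction pattern, or None when the rule is not triggered.
Check = Callable[[float], Optional[str]]


@dataclass
class Rule:
    name: str
    check: Check


@dataclass
class ItemResult:
    item: str
    value: float
    rules: List[Rule] = field(default_factory=list)


def limit_range(low: float, high: float) -> Check:
    """Hypothetical limit range check: triggers when value is outside [low, high]."""
    def check(value: float) -> Optional[str]:
        if value < low or value > high:
            return f"value {value} outside [{low}, {high}]"
        return None
    return check


def evaluate_test(test: ItemResult) -> Tuple[str, List[str]]:
    """Green if no rule is triggered; otherwise red, plus the causes."""
    causes = []
    for rule in test.rules:
        message = rule.check(test.value)
        if message is not None:
            causes.append(f"{rule.name}: {message}")
    return ("red" if causes else "green"), causes


def report_barcode(tests: List[ItemResult]) -> str:
    """The report barcode is green only if every test on it is green."""
    return "green" if all(evaluate_test(t)[0] == "green" for t in tests) else "red"


# Illustrative limits only, not clinical reference ranges.
crp = ItemResult("CRP", 12.0, [Rule("range", limit_range(0.0, 10.0))])
alt = ItemResult("ALT", 25.0, [Rule("range", limit_range(0.0, 40.0))])
print(evaluate_test(crp))           # ('red', ['range: value 12.0 outside [0.0, 10.0]'])
print(report_barcode([crp, alt]))   # red
```

Other rule categories, such as delta checks or combined-mode judgments, would plug in as further `Check` callables. The sketch does not attempt to reproduce them.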

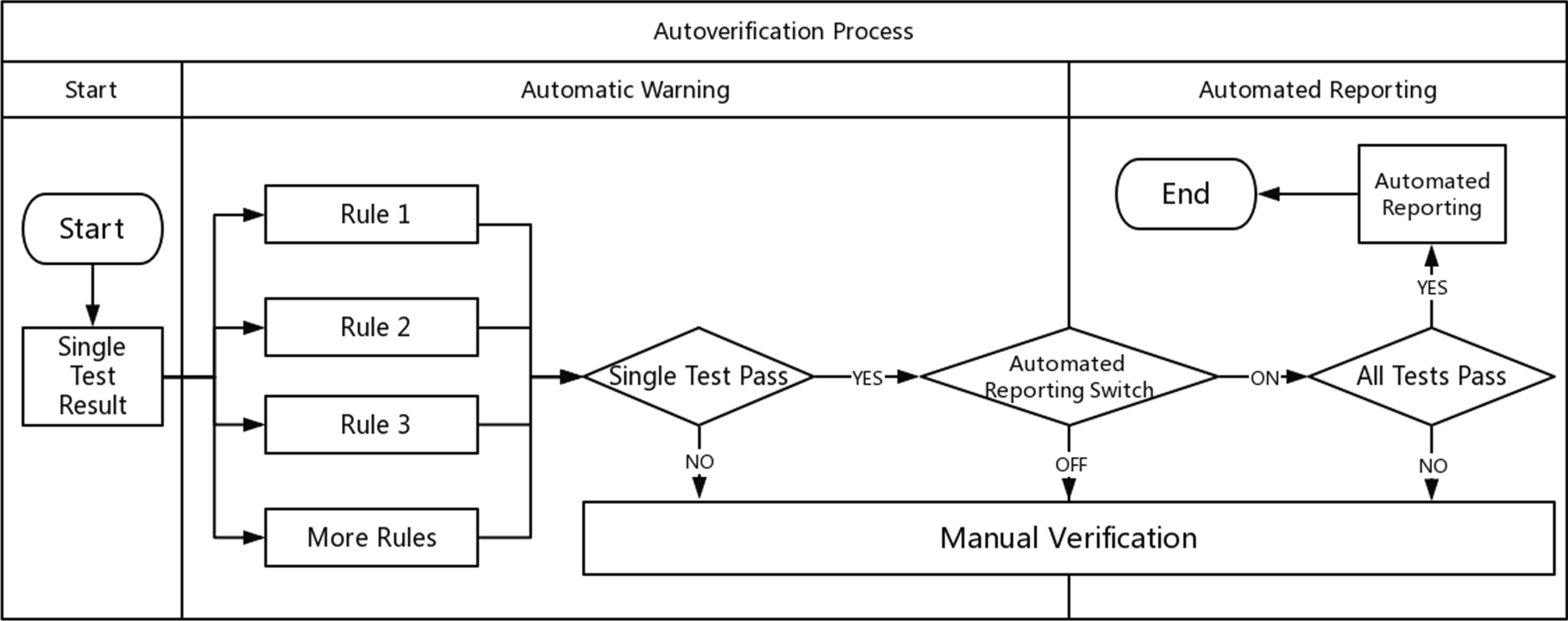

Following the steps above, the autoverification system evaluates the rules and then displays colors and abnormality prompts. This process is called automatic early warning. Automatic warning only flags results and plays no part in deciding whether a report is issued. Building on it, the system automatically releases reports with a green barcode. This process is called automated reporting. Together, automatic early warning and automated reporting constitute autoverification. The system is especially useful in reviewing complex diagnostic projects (e.g., molecular diagnostics, pathology testing), where it prompts personnel about implausible values. For moderately complex projects (e.g., biochemistry and blood tests), the combination of report review, automatic warning, and automated reporting is equivalent to the autoverification systems described in much of the literature and to laboratory information system (LIS) automatic reporting. The autoverification process used by our laboratory is shown in Fig. 1.

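
The split between automatic early warning (a judgment only) and automated reporting (the release decision) can be sketched as a small routing function. The `route_report` name, the `Action` values, and the `auto_release_enabled` flag are hypothetical. The flag stands in for whether automated reporting has been switched on for the project; in this paper, that depends on the integrity validation stage.

```python
from enum import Enum
from typing import Dict, Tuple


class Action(Enum):
    MANUAL_REVIEW = "hold for manual review"
    AUTO_RELEASE = "release automatically"


def route_report(test_colors: Dict[str, str], auto_release_enabled: bool) -> Tuple[str, Action]:
    """Return the report barcode color and what happens to the report.

    Automatic early warning only computes the color and never releases anything.
    Automated reporting releases a report only if the barcode is green and
    automated reporting is enabled (hypothetical flag).
    """
    # Automatic early warning: the barcode is green only if every test is green.
    barcode = "green" if all(c == "green" for c in test_colors.values()) else "red"
    # Automated reporting: act on the warning result.
    if barcode == "green" and auto_release_enabled:
        return barcode, Action.AUTO_RELEASE
    return barcode, Action.MANUAL_REVIEW


print(route_report({"HBsAg": "green", "HBeAg": "green"}, auto_release_enabled=True))
print(route_report({"HBsAg": "green", "HBeAg": "red"}, auto_release_enabled=True))
```

Keeping the color computation separate from the release decision mirrors the text's point that the warning itself never decides whether a report is issued.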

Validation scheme

Because autoverification is divided into automatic warning and automated reporting, we divide the validation system into two stages. The first stage, correctness verification, verifies that each rule operates as the personnel who set it expect. If a problem is found at this stage, the program development department may be responsible for fixing it. The second stage, integrity validation, builds on the results of the first stage and verifies whether the configured rules cover every element that personnel check when reviewing a report. The functional design of the two-stage system is shown in Table 1.


Correctness verification

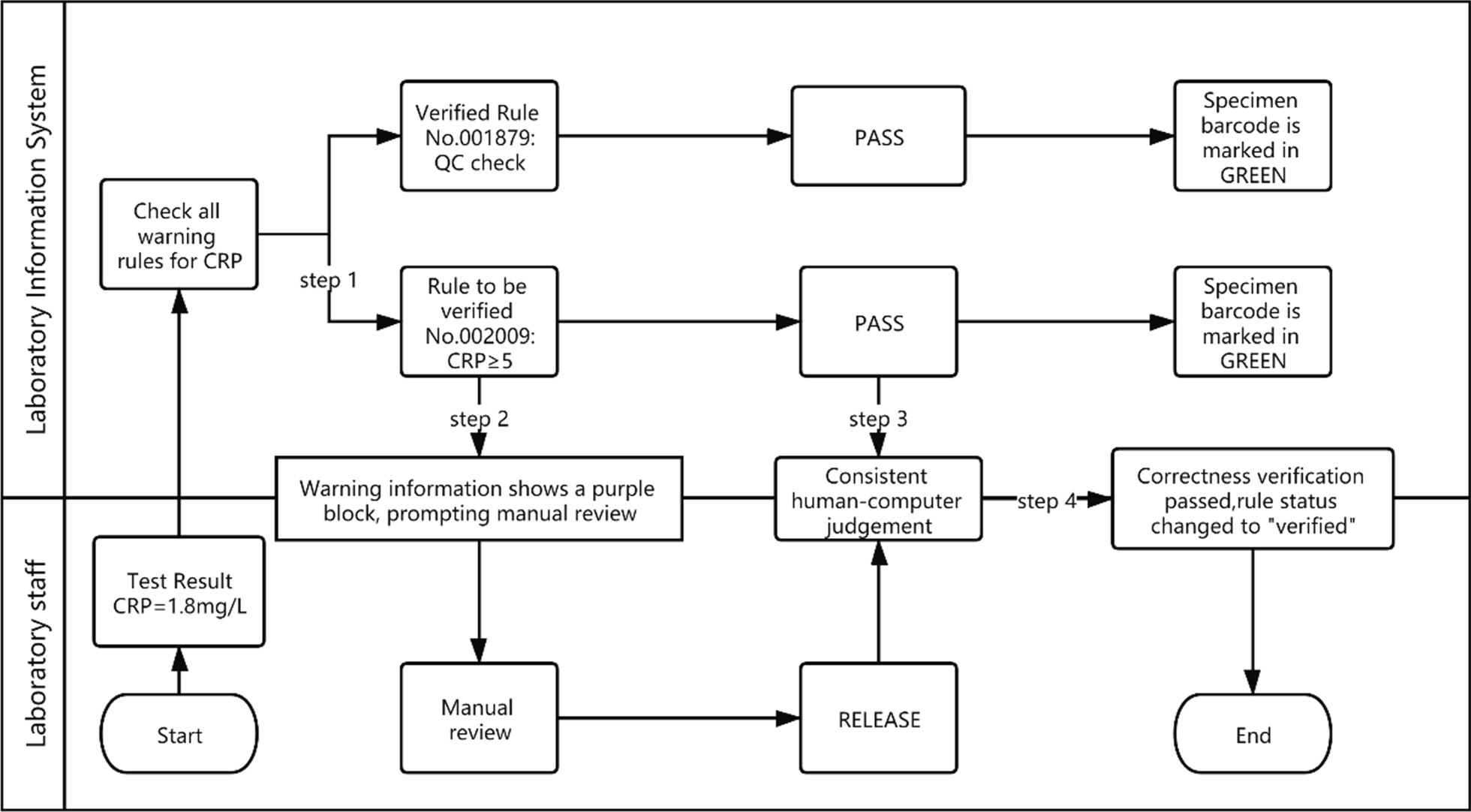

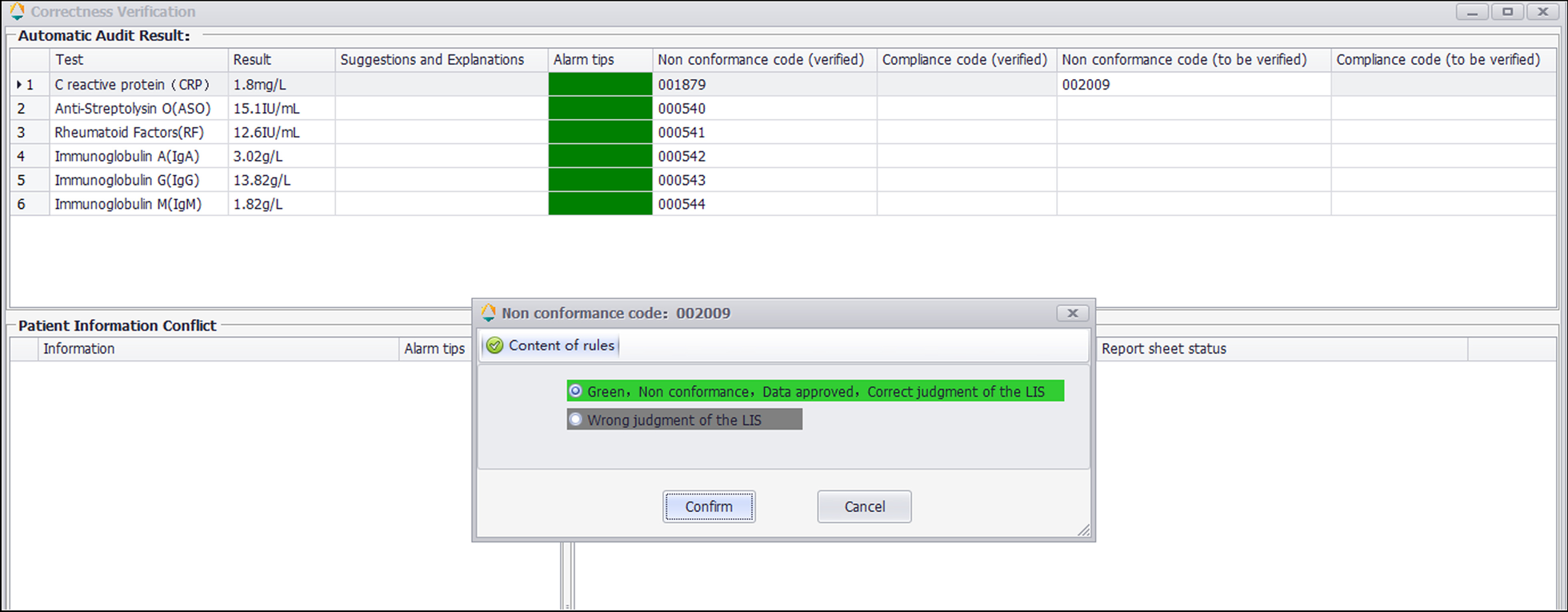

The correctness verification phase confirms whether each individual rule executes correctly. It is implemented as follows: (1) The system labels each newly added rule "Pending Verification." (2) When a report is reviewed, the system displays the rule's judgment result together with a purple color block, reminding staff to judge whether the "Pending Verification" rule executed correctly. (3) The staff enter their judgment. (4) The system updates the rule status according to that input. If the staff judgment agrees with the rule's result, the rule is labeled "verified," and the personnel are prompted to continue to the next stage of verification. If the two disagree, the staff are prompted to delete the rule. Figure 2 illustrates this correctness verification process using C-reactive protein (CRP) as an example, and Figure 3 shows an example of the correctness verification interface.

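Figure 3. Correctness verification interface. The CRP result passes the automatic warning under rule No.002009 and is displayed in green. The technician judges whether the automatic warning operated correctly.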
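
The four correctness verification steps behave like a small state machine. The sketch below makes that explicit under stated assumptions. `RuleStatus`, `RuleRecord`, `record_judgment`, `ready_for_integrity_validation`, and the rule IDs `CRP-001`/`CRP-002` are hypothetical. The `FAILED` state stands in for "staff are prompted to delete the rule."

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class RuleStatus(Enum):
    PENDING = "Pending Verification"
    VERIFIED = "verified"
    FAILED = "failed"  # hypothetical state: staff are prompted to delete the rule


@dataclass
class RuleRecord:
    rule_id: str
    status: RuleStatus = RuleStatus.PENDING  # step (1): new rules start as pending


def record_judgment(rule: RuleRecord, staff_agrees: bool) -> RuleStatus:
    """Steps (3)-(4): update a pending rule from the staff member's judgment."""
    if rule.status is not RuleStatus.PENDING:
        # Only pending rules can change status, so a failed rule never becomes verified.
        raise ValueError(f"rule {rule.rule_id} is not pending verification")
    rule.status = RuleStatus.VERIFIED if staff_agrees else RuleStatus.FAILED
    if rule.status is RuleStatus.FAILED:
        print(f"Rule {rule.rule_id} executed incorrectly; please delete it.")
    return rule.status


def ready_for_integrity_validation(rules: Iterable[RuleRecord]) -> bool:
    """Integrity validation may start only once every rule of the project is verified."""
    rules = list(rules)
    # Assumption: a project with no rules is not ready.
    return bool(rules) and all(r.status is RuleStatus.VERIFIED for r in rules)


crp_rules = [RuleRecord("CRP-001"), RuleRecord("CRP-002")]
record_judgment(crp_rules[0], staff_agrees=True)
record_judgment(crp_rules[1], staff_agrees=False)  # prints the deletion prompt
print(ready_for_integrity_validation(crp_rules))   # False until CRP-002 is deleted
```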

Integrity validation

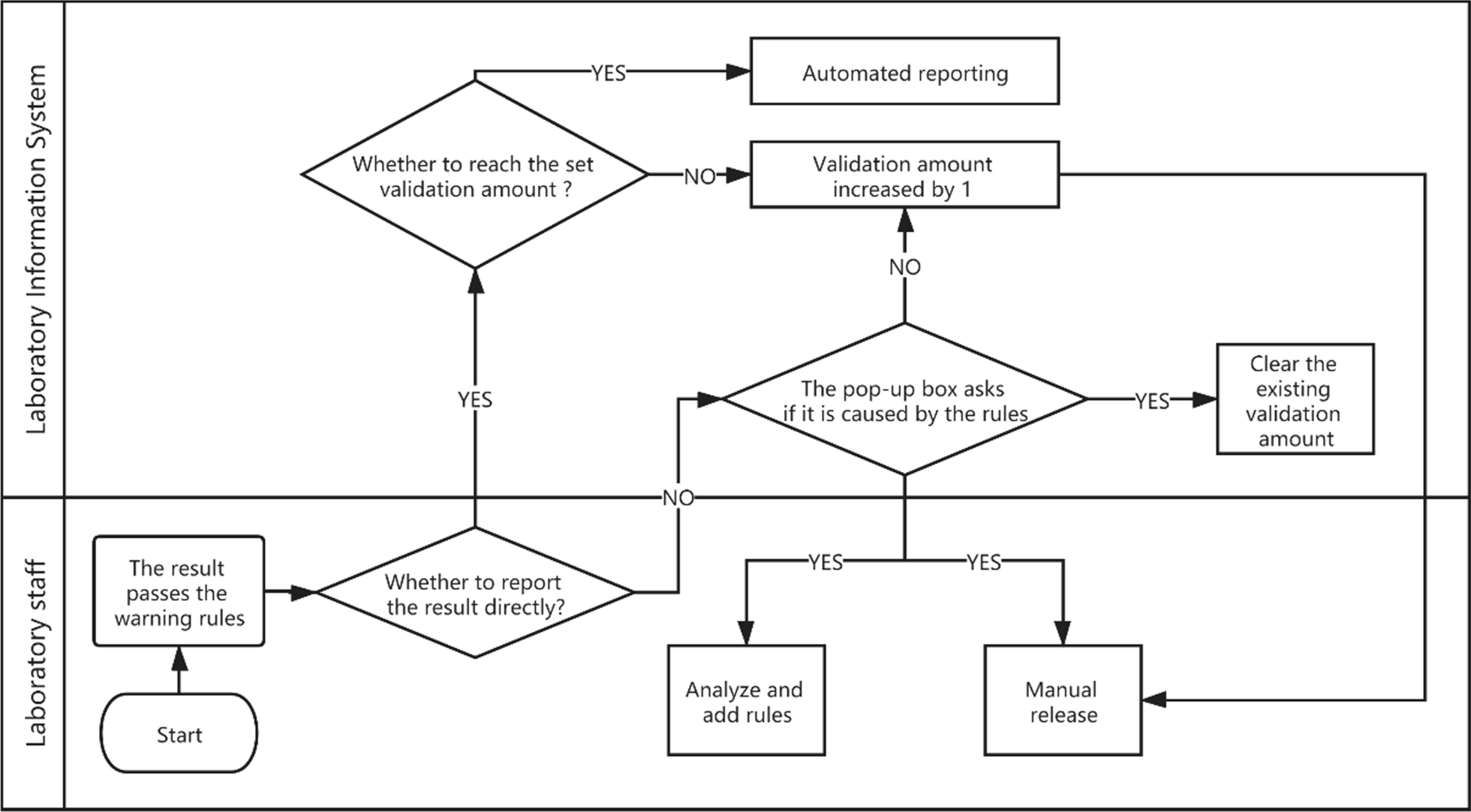

Integrity validation can begin only after correctness verification has been completed for all rules of a project. It is implemented as follows. (1) After the report displays the automatic warning result, if the system detects that the report has been changed, a dialog box pops up asking the reviewer to select the reason for the modification. The reasons are (a) a rule execution error, (b) an inappropriate rule setting value, (c) a need to add new rules, (d) other issues not related to autoverification, and (e) a modification prompted by the automatic warning. The LIS records the modified content and the reason so that personnel can analyze them. (2) If the laboratory wants to implement automated reporting for a project, a target validation number (e.g., 5,000) can be set according to the complexity of the project's review. (3) If the automatic warning result of a report is green (approved) and the personnel issue the report directly, the validation number of each item on the report automatically increases by one. (4) Once the validation numbers of all items on a report exceed the set number, the report is released automatically. (5) If the automatic warning result of a report is green (approved) but the result is modified, and the reason given is a, b, or c, the LIS clears the validation number of the related items and stops automated reporting for them. Figure 4 shows the integrity validation process. The validation targets and validation counts for six projects are shown in Fig. 5.

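Figure 4. The integrity validation process.

Figure 5. Integrity validation target number settings and recording interface. The validation targets of the six projects shown are all 3,000, and their validation numbers are between 1,900 and 2,500, so the corresponding reports cannot yet be released automatically.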
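
Steps (3) to (5) of integrity validation amount to a per-item counter with a reset rule. The following is a minimal sketch, not the authors' LIS code. `ItemValidation`, `record_manual_review`, `can_auto_release`, and the target of 3 are hypothetical; the target is kept tiny for illustration, whereas the text suggests values such as 5,000. The sketch reads "exceed" as strictly greater than. It also assumes that red reports, and modifications with reasons d or e, leave the count unchanged, because the text does not say otherwise.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional

# Modification reasons (a)-(e) from the text; only a, b, and c clear the count.
RESETTING_REASONS = {"a", "b", "c"}


@dataclass
class ItemValidation:
    target: int     # validation number set by the laboratory, e.g. 5000
    count: int = 0  # reports issued unchanged after a green automatic warning


def record_manual_review(
    items: Dict[str, ItemValidation],
    report_items: Iterable[str],
    warning_green: bool,
    modified_items: Iterable[str] = (),
    reason: Optional[str] = None,
) -> None:
    """Apply steps (3) and (5) for one manually reviewed report."""
    if not warning_green:
        return  # assumption: red reports neither add to nor clear the count
    modified = list(modified_items)
    if not modified:
        for name in report_items:  # step (3): green and issued unchanged
            items[name].count += 1
    elif reason in RESETTING_REASONS:
        for name in modified:      # step (5): clear the related items
            items[name].count = 0
    # assumption: modifications for reasons d or e leave the count unchanged


def can_auto_release(items: Dict[str, ItemValidation], report_items: Iterable[str]) -> bool:
    """Step (4): release automatically only when every item exceeds its target."""
    return all(items[name].count > items[name].target for name in report_items)


hbv = {"HBsAg": ItemValidation(target=3), "HBeAg": ItemValidation(target=3)}
panel = ["HBsAg", "HBeAg"]
for _ in range(4):
    record_manual_review(hbv, panel, warning_green=True)
print(can_auto_release(hbv, panel))  # True: both counts are 4 > 3
record_manual_review(hbv, panel, warning_green=True, modified_items=["HBeAg"], reason="b")
print(hbv["HBeAg"].count, can_auto_release(hbv, panel))  # 0 False
```

Clearing only the related items means one faulty rule does not discard the validation history of the other items on the same panel. This is one reading of "clear the validation number for the related items."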

Accuracy guarantee

The accuracy of autoverification has two aspects: whether the rules execute as intended, and whether the rules omit anything from the report review (completeness). Our method therefore confirms accuracy from both aspects, correcting functions and improving the system through correctness verification and integrity validation. The validation system enforces the following logic to ensure accuracy:

- A new rule is automatically deleted if it fails correctness verification within 10 days.

- Rules cannot be modified.

- A rule that fails correctness verification cannot be converted to "verified" status.

- If autoverification of a single project fails integrity validation, the project's accumulated validation count is cleared.
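
Two of these safeguards, the 10-day window and the ban on modifying rules, can be sketched as follows. The sketch is built on explicit assumptions. `RuleDefinition`, `purge_unverified`, and the rule expressions are hypothetical. "Fails within 10 days" is read here as "has not passed correctness verification within 10 days of creation," with day 10 still inside the window. A frozen dataclass is used as one way to make rule content unmodifiable.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Set

VERIFICATION_WINDOW = timedelta(days=10)


@dataclass(frozen=True)  # frozen: a rule's content cannot be reassigned after creation
class RuleDefinition:
    rule_id: str
    expression: str  # hypothetical rule text
    created: date


def purge_unverified(rules: List[RuleDefinition], verified_ids: Set[str], today: date) -> List[RuleDefinition]:
    """Drop rules that have not passed correctness verification within 10 days.

    Assumption: "fails within 10 days" is read as "not verified within 10 days
    of creation"; day 10 itself is still inside the window.
    """
    return [
        r for r in rules
        if r.rule_id in verified_ids or today - r.created <= VERIFICATION_WINDOW
    ]


rules = [
    RuleDefinition("new", "CRP > 10", date(2020, 1, 1)),
    RuleDefinition("stale", "ALT > 40", date(2019, 12, 1)),
    RuleDefinition("checked", "HBsAg delta check", date(2019, 12, 1)),
]
kept = purge_unverified(rules, verified_ids={"checked"}, today=date(2020, 1, 5))
print([r.rule_id for r in kept])  # ['new', 'checked']
```

Verification status is kept outside the frozen rule object, so marking a rule as verified does not count as modifying the rule itself.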

Data collection

The validation data of 30 assays from October 2019 to January 2020 were collected for analysis, yielding a total of 833 early warning rules. A total of 926,195 reports were used to evaluate the accuracy of the new method.

Time consumption statistics

We used the hepatitis B virus (HBV) test as an example to compare validation time before and after adoption of the new method. To measure validation time, we divided the complete autoverification validation process into 10 stages. We then collected time statistics for each stage under both manual verification and the new method, based on system records and estimates.

Satisfaction survey

We used a questionnaire to evaluate laboratory technicians' views on the effectiveness of the new method. The survey was conducted with the online tool WJX (https://www.wjx.cn/), which reports the number and percentage of responses.

Results

Correctness verification results

Of the 833 rules, 782 (93.88%) were successfully verified for correctness, with a total of 3,814 validations, comprising 2,230 (58.47%) released tests and 1,584 (41.53%) intercepted tests. The inconsistencies were investigated, and the 51 (6.12%) erroneous rules were deleted. The reasons for verification failure are shown in Table 2.

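Table 2. List of reasons for correctness verification failure

| Error type | Proportion (%) | Example | Solution |
|---|---|---|---|
| Human error | 63.3 | Incorrect English letter case in the text of the rules, resulting in no warning | Reset the rules |
| Specific warning target | 24.9 | Early warning of diagnostic results and microscopy results in a special report interface for pathology | Add supplementary algorithm code |
| Algorithm code error | 8.4 | HPV typing results could not be verified with the delta check; results of microbial identification could not be correlated with various drug sensitivity combinations | Fix the algorithm code |
| Software compatibility problem | 3.4 | Problem with the precision of the number comparison script | Fix the algorithm code |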
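
The percentages reported in these results can be recomputed from the raw counts as a consistency check. For example, 51 deleted rules out of 833 is about 6.12% of the rule set. This is simple arithmetic on the numbers given in the text and Table 2, not part of the authors' system; the `pct` helper is hypothetical.

```python
def pct(part: int, whole: int) -> float:
    """Percentage rounded to two decimals."""
    return round(100 * part / whole, 2)


total_rules, verified_rules = 833, 782
deleted_rules = total_rules - verified_rules          # 51
validations, released, intercepted = 3814, 2230, 1584
assert released + intercepted == validations

print(pct(verified_rules, total_rules))   # 93.88
print(pct(deleted_rules, total_rules))    # 6.12
print(pct(released, validations))         # 58.47
print(pct(intercepted, validations))      # 41.53

# Table 2 proportions should account for all failures.
table2 = {"Human error": 63.3, "Specific warning target": 24.9,
          "Algorithm code error": 8.4, "Software compatibility problem": 3.4}
print(round(sum(table2.values()), 1))     # 100.0
```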

References

1. Li, J.; Cheng, B.; Ouyang, H. et al. (2018). "Designing and evaluating autoverification rules for thyroid function profiles and sex hormone tests". Annals of Clinical Biochemistry 55 (2): 254–63. doi:10.1177/0004563217712291. PMID 28490181.

2. Wang, Z.; Peng, C.; Kang, H. et al. (2019). "Design and evaluation of a LIS-based autoverification system for coagulation assays in a core clinical laboratory". BMC Medical Informatics and Decision Making 19 (1): 123. doi:10.1186/s12911-019-0848-2. PMC6609390. PMID 31269951. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6609390.

3. Wu, J.; Pan, M.; Ouyang, H. et al. (2018). "Establishing and Evaluating Autoverification Rules with Intelligent Guidelines for Arterial Blood Gas Analysis in a Clinical Laboratory". SLAS Technology 23 (6): 631–40. doi:10.1177/2472630318775311. PMID 29787327.

4. Randell, E.W.; Short, G.; Lee, N. et al. (2018). "Strategy for 90% autoverification of clinical chemistry and immunoassay test results using six sigma process improvement". Data in Brief 18: 1740–49. doi:10.1016/j.dib.2018.04.080. PMC5998219. PMID 29904674. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5998219.

5. Randell, E.W.; Short, G.; Lee, N. et al. (2018). "Autoverification process improvement by Six Sigma approach: Clinical chemistry & immunoassay". Clinical Biochemistry 55: 42–8. doi:10.1016/j.clinbiochem.2018.03.002. PMID 29518383.

6. College of American Pathologists (21 August 2017). "Laboratory General Checklist - CAP Accreditation Program" (PDF). https://elss.cap.org/elss/ShowProperty?nodePath=/UCMCON/Contribution%20Folders/DctmContent/education/OnlineCourseContent/2017/LAP-TLTM/checklists/cl-gen.pdf.

7. "ISO 15189:2012 Medical laboratories — Requirements for quality and competence". International Organization for Standardization. November 2012. https://www.iso.org/standard/56115.html.

8. Palmieri, R.; Falbo, R.; Cappellini, F. et al. (2018). "The development of autoverification rules applied to urinalysis performed on the AutionMAX-SediMAX platform". Clinica Chimica Acta 485: 275–81. doi:10.1016/j.cca.2018.07.001. PMID 29981288.

9. Sediq, A.M.-E.; Abdel-Azeez, A.G.H. (2014). "Designing an autoverification system in Zagazig University Hospitals Laboratories: Preliminary evaluation on thyroid function profile". Annals of Saudi Medicine 34 (5): 427–32. doi:10.5144/0256-4947.2014.427. PMC6074554. PMID 25827700. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6074554.

10. "WS/T 616-2018 (WST 616-2018)". Chinese Standard. 20 August 2018. https://www.chinesestandard.net/PDF/English.aspx/WST616-2018.

11. "AUTO10 Autoverification of Clinical Laboratory Test Results, 1st Edition". Clinical and Laboratory Standards Institute. 31 October 2006. https://clsi.org/standards/products/automation-and-informatics/documents/auto10/.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, though grammar and word usage were substantially updated for improved readability. In some cases important information was missing from the references, and that information was added. For this version, a definition of "autoverification" was added to the introductory sentence of the background.