Journal:Electronic laboratory notebooks in a public–private partnership

| Full article title | Electronic laboratory notebooks in a public–private partnership |
|---|---|
| Journal | PeerJ Computer Science |
| Author(s) | Vaas, Lea A.I.; Witt, Gesa; Windshügel, Björn; Bosin, Andrea; Serra, Giovanni; Bruengger, Adrian; Winterhalter, Mathias; Gribbon, Philip; Levy-Petelinkar, Cindy J.; Kohler, Manfred |
| Author affiliation(s) | Fraunhofer Institute for Molecular Biology and Applied Ecology, University of Cagliari, Basilea Pharmaceutica International AG, Jacobs University Bremen, GlaxoSmithKline |
| Primary contact | Email: manfred dot kohler at ime dot fraunhofer dot de |
| Editors | Baker, Mary |
| Year published | 2016 |
| Volume and issue | 2 |
| Page(s) | e83 |
| DOI | 10.7717/peerj-cs.83 |
| ISSN | 2167-8359 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://peerj.com/articles/cs-83/ |
| Download | https://peerj.com/articles/cs-83.pdf (PDF) |



Abstract

This report shares the experience gained during the selection, implementation and maintenance phases of an electronic laboratory notebook (ELN) in a public–private partnership project and comments on users' feedback. In particular, we address which time constraints for roll-out of an ELN exist in granted projects and which benefits and/or restrictions come with out-of-the-box solutions. We discuss several options for the implementation of support functions and potential advantages of open-access solutions. Connected to that, we identified willingness and a vivid culture of data sharing as the major factor leading to success or failure of collaborative research activities. User feedback turned out to be the only lever for driving technical improvements, but it also proved highly efficient. Based on these experiences, we describe best practices for future projects on implementation and support of an ELN supporting a diverse, multidisciplinary user group based in academia, NGOs, and/or for-profit corporations located in multiple time zones.

Keywords: public–private partnership, open access, Innovative Medicines Initiative, electronic laboratory notebook, New Drugs for Bad Bugs, IMI, PPP, ND4BB, collaboration, sharing information

Introduction

Laboratory notebooks (LNs) are vital documents of laboratory work in all fields of experimental research. The LN is used to document experimental plans, procedures, results and considerations based on these outcomes. Proper documentation establishes the precedence of results, particularly for inventions of intellectual property (IP). The LN provides the main evidence in the event of disputes relating to scientific publications or patent applications. A well-established routine for documentation discourages data falsification by ensuring the integrity of the entries in terms of time, authorship, and content.[1] LNs must be complete, clear, unambiguous and secure. A remarkable example is Alexander Fleming’s documentation, leading to the discovery of penicillin.[2]

The recent development of many novel technologies brought up new platforms in life sciences requiring specialized knowledge. As an example, next-generation sequencing and protein structure determination are generating datasets, which are becoming increasingly prevalent especially in molecular life sciences.[3] The combination and interpretation of these data requires experts from different research areas[4], leading to large research consortia.

In consortia involving multidisciplinary research, the classical paper-based version of an LN is an impediment to efficient data sharing and information exchange. Most of the data from these large-scale collaborative research efforts will never exist in a hard copy format but will be generated in a digitized version. This data can be analyzed by specialized software and dedicated hardware. The classical application of an LN fails in these environments. It is commonly replaced by digital reporting procedures, which can be standardized.[5][6][7] Besides the advantages for daily operational activities, an electronic laboratory notebook (ELN) yields long-term benefits regarding data maintenance. These include, but are not limited to, items listed in Table 1.[8] The order of the listed points does not imply any ranking. Besides general tasks, some specific tasks have to be facilitated, especially in the field of drug discovery. One such specific task is searching for chemical structures and substructures in a virtual library of chemical structures and compounds (see Table 1, last item in column “Potentially”). Enabling such a function in an ELN hosting reports about wet-lab work dealing with known drugs and/or compounds to be evaluated would allow dedicated information retrieval for the chemical compounds or (sub-) structures of interest.


Interestingly, although essential for the success of research activities in collaborative settings, the above-mentioned advantages are rarely realized by users during daily documentation activities, and institutional awareness in academic environments is often lacking.

Since funding agencies and stakeholders are becoming aware of the importance of transparency and reproducibility in both experimental and computational research[9][10][11], the use of digitalized documentation, reproducible analyses and archiving will be a common requirement for funding applications on national and international levels.[12][13][14]

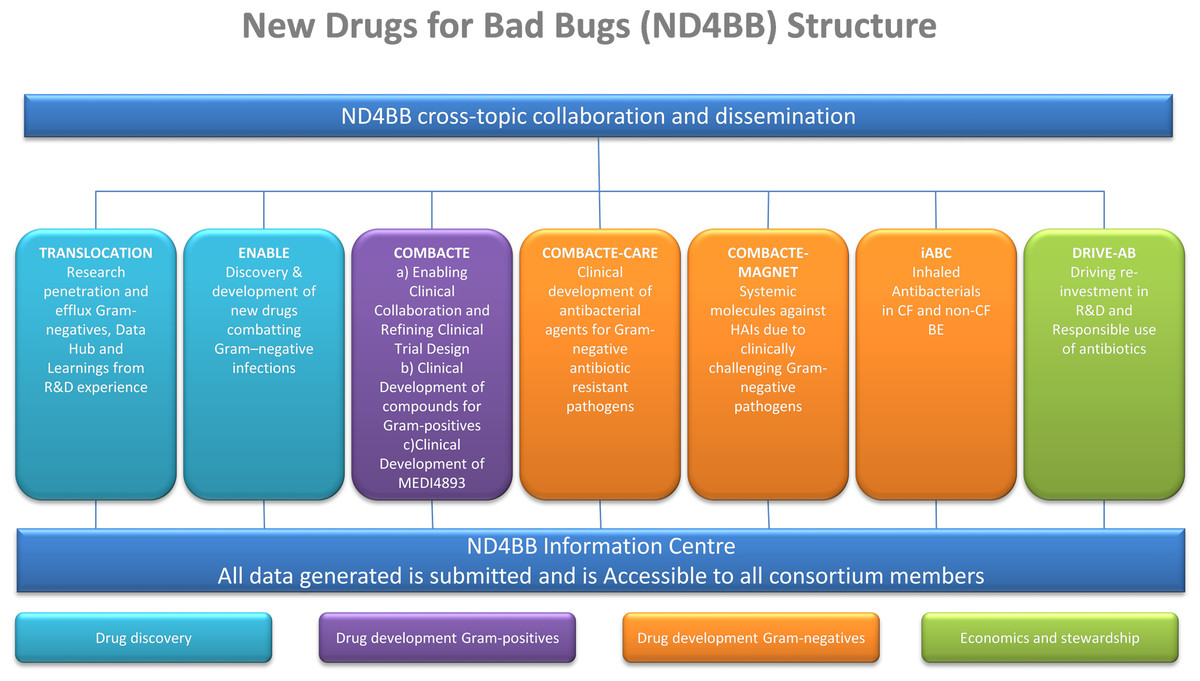

A typical example of a large public–private partnership is the Innovative Medicines Initiative (IMI) New Drugs for Bad Bugs (ND4BB) program[15][16] (see Fig. 1 for details). The program’s objective is to combat antibiotic resistance in Europe by tackling the scientific, regulatory, and business challenges that are hampering the development of new antibiotics.


The TRANSLOCATION consortium focuses on (i) improving the understanding of the overall permeability of Gram-negative bacteria, and (ii) enhancing the efficiency of antibiotic research and development through knowledge sharing, data sharing and integrated analysis. To meet such complex needs, the TRANSLOCATION consortium was established as a multinational and multisite public–private partnership (PPP) with 15 academic partners, five pharmaceutical companies and seven small- and medium-sized enterprises (SMEs).[17][18][19]

In this article, we describe the process of selecting and implementing an ELN in the context of the multisite PPP project TRANSLOCATION, comprising about 90 bench scientists in total. Furthermore, we present the results from a survey evaluating the users’ experiences and the benefit for the project two years post-implementation. Based on our experiences, we summarize the specific needs in a PPP setting and review the lessons learned. As a result, we propose recommendations to help future users avoid pitfalls when selecting and implementing ELN software.

Methods

Selection and implementation of an ELN solution

The IMI project call requested a high level of transparency enabling the sharing of data to serve as an example for future projects. The selected consortium TRANSLOCATION had a special demand for an ELN for two reasons: its structure, in which various labs and partners spread widely across Europe needed to report into one common repository, and its final goal, which required data to be stored and integrated into one central information hub, the ND4BB Information Centre. Fortunately, no legacy data had to be migrated into the ELN.

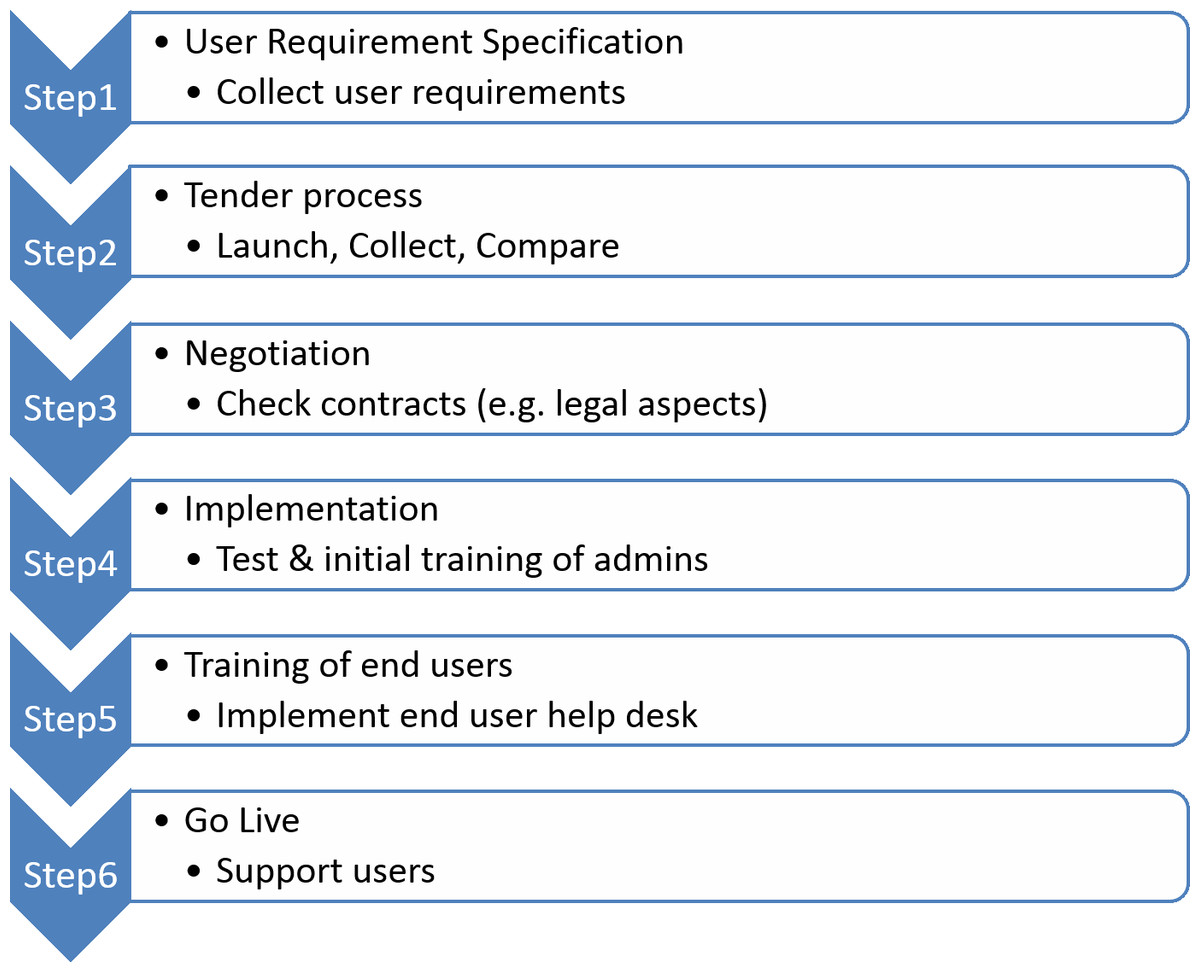

The standard process for the introduction of new software follows a highly structured multi-phase procedure[20][21], as outlined in Fig. 2.


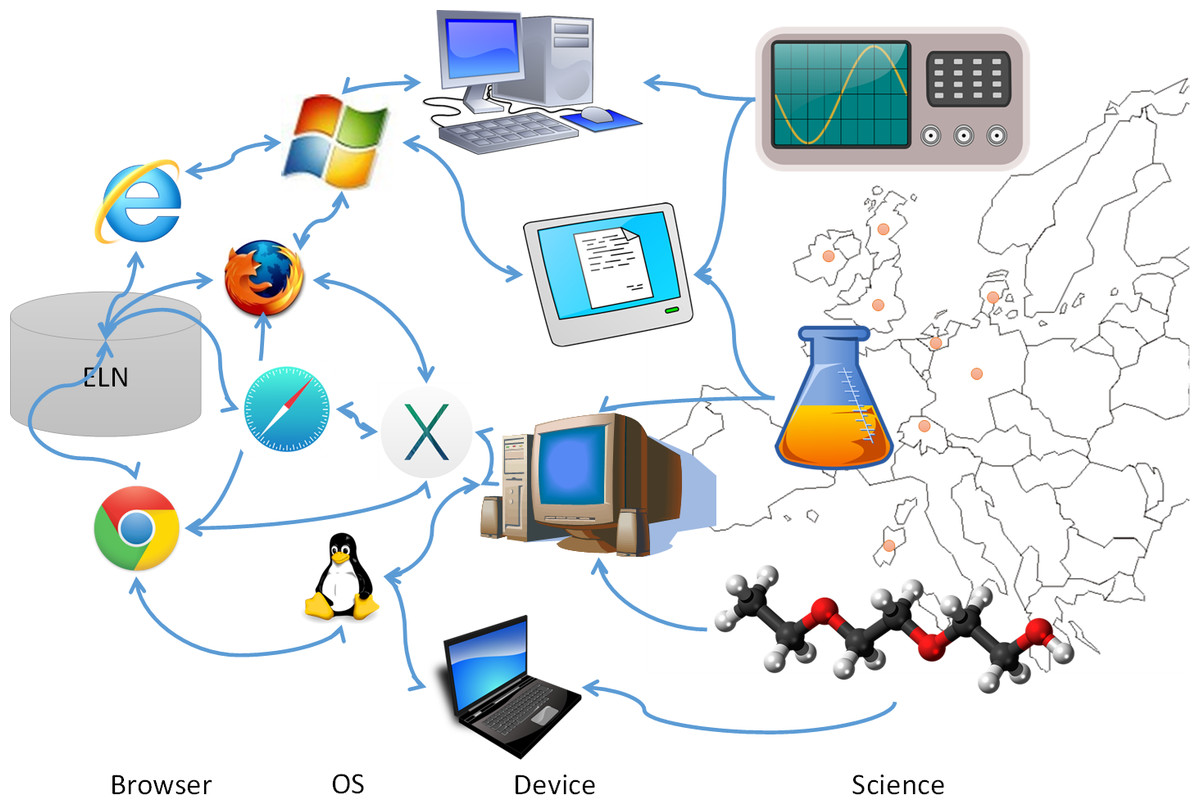

For the first step, we had to manage a large and highly heterogeneous user group (Fig. 3) that would be using the ELN, scheduled for roll-out within six months after project launch. All personnel of the academic partners were requested to enter data into the same ELN, potentially leading to unmet individual user requirements, especially for novices and inexperienced users.


As a compromise for step 1 (Fig. 2), we assembled a collection of user requirement specifications (URS) based on the experiences of one laboratory that had already implemented an ELN. We further selected a small group of super users based on their expertise in documentation processes, representing different wet laboratories and in silico environments. The resulting URS was reviewed by IT and business experts from academic as well as private organisations of the consortium. The final version of the URS is available as Supplemental File 1.

In parallel, based on literature (Rubacha, Rattan & Hossel, 2011) and internet searches, presentations of widely used ELNs were evaluated to gain insight into state-of-the-art ELNs. This revealed a wide variety of functional and graphical user interface (GUI) implementations differing in complexity and costs. The presentations covered the continuum between simple out-of-the-box solutions and highly sophisticated and configurable ELNs with interfaces to state-of-the-art analytical tools. Notably, the requirements specified by super users also ranged from “easy to use” to “highly individually configurable.” Based on this information, it was clear that the ELN selected for this consortium would never ideally fit all user expectations. Furthermore, the exact number of users and configuration of user groups were unknown at the onset of the project. The most frequently mentioned or highest-prioritized items of the collected user requirements are listed in Table 2. We divided the gathered requirements into ‘core’, meaning essential, and ‘non-core’, meaning ‘nice to have, but not indispensable.’ Further, we list here only the items that were mentioned by more than two super users from different groups. The full list of URS is available in Supplemental File 1.


Based on the URS, a tender process (Step 2, Fig. 2) was initiated in which vendors were invited to respond via a request for proposal (RFP) process. The requirement that the proposed ELN support both chemical and biological research, combined with the need to access the ELN from different operating systems (Windows, Linux, Mac OS) (see Fig. 3), reduced the number of appropriate vendors. The vendors' responses described how their offerings aligned with the proposed specifications.

Key highlights and drawbacks of the proposed solutions were collected, as well as approximations for the number of required licenses and maintenance costs. The cost estimates for licenses were not comparable because some systems required individual licenses whereas others used bulk licenses. At the time of selection, the exact number of users was not available.

Interestingly, the number of user specifications available out-of-the-box differed by less than 10 percent between systems with the lowest (67) and highest (73) number of proper features. Thus, highlights and drawbacks became a more prominent issue in the selection process.

For the third step (Fig. 2), the two vendors meeting all the core user requirements and the highest number of non-core user requirements were selected to provide a more detailed online demonstration of their ELN solution to a representative group of users from different project partners. In addition, a quote for 50 academic and 10 commercial licenses was requested. The direct comparison did not result in a clear winner; both systems included features that were instantly required, but each lacked some of the essential functionalities.

For the final decision, only features that differed between the two systems and supported the PPP were ranked for their importance to the project, from 1 = low (e.g., academic user licenses are cheap) to 5 = high (e.g., cloud hosting). The decision between the two tested systems was then based on the higher number of positively ranked features, which proved most important after the presentations and internal discussions among super users. The main drivers for the final decision are listed in Table 3. In total, the chosen system received 36 positive votes on listed features, meeting all high-ranked demands listed in Table 3, while the runner-up received 24 positive votes. However, if the system had to be set up in the envisaged consortium, it turned out to be too expensive and complex to maintain.
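The tallying step can be illustrated with a minimal Python sketch. The `Feature` class, the `tally` function, the system labels "A" and "B", and all example data are hypothetical and are not taken from Table 3; the sketch only assumes that a "positive vote" means a system offers a feature that the other one lacks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    importance: int   # 1 = low ... 5 = high, as ranked by the super users
    system_a: bool    # True if (hypothetical) system A offers the feature
    system_b: bool    # True if (hypothetical) system B offers the feature


def tally(features):
    """Count, per system, the differentiating features it offers."""
    votes = {"A": 0, "B": 0}
    for f in features:
        if not 1 <= f.importance <= 5:
            raise ValueError(f"importance out of range for {f.name!r}")
        if f.system_a == f.system_b:
            continue  # features identical in both systems are not ranked
        votes["A" if f.system_a else "B"] += 1
    return votes


# Hypothetical example data for illustration only.
FEATURES = [
    Feature("cloud hosting", 5, True, False),
    Feature("cheap academic licenses", 1, True, False),
    Feature("chemical structure search", 4, False, True),
    Feature("audit trail", 5, True, True),  # same in both, so ignored
]

votes = tally(FEATURES)
winner = max(votes, key=votes.get)
print(votes, "->", winner)
```

In this sketch the importance rank is only validated, not used as a weight, because the text describes a decision based on the number of positively ranked features rather than on a weighted sum.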


The complete process, from the initial collection of URS data until the final selection of the preferred solution, took less than five months.

Following selection, the product was tested before specific training was offered to the user community.[22] Parallel support frameworks were rolled out at this time, including a help desk as a single point of contact (SPoC) for end users.

The fourth step (Fig. 2) was the implementation of the selected platform. This deployment was simple and straightforward because it was available as software as a service (SaaS) hosted as a cloud solution. Less than one week after signing the contract, the administrative account for the software was created and the online training of key administrators commenced. The duration of training was typically less than two hours, including tasks such as user and project administration.

To accomplish step 5 (Fig. 2), internal training material was produced based on the experience gained during the initial introduction of the ELN to the administrative group. This ensured that all users would receive applicable training. During this initial learning period, the system was also tested for the requested user functionalities. Workarounds were defined for missing features detected during the testing period and integrated into the training material.

One central feature of an ELN in its final stage is the standardization of minimally required information. These standardizations include, but are not limited to:

- Define required metadata fields per experiment (e.g., name of the author, date of creation, experiment type, keywords, aim of experiment, executive summary, introduction).

- Define agreed naming conventions for projects.

- Define agreed naming conventions for titles of experiments.

- Prepare (super user agreed) list of values for selection lists.

- Define type of data which should be placed in the ELN (e.g., raw data, curated data).
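As an illustration of how such agreed conventions could be checked automatically, the following Python sketch validates a single experiment record. The title pattern, the selection-list values, and the `ExperimentRecord` class are hypothetical assumptions made for this example; they are not the conventions used in the project, and the selected ELN is not assumed to expose such an interface.

```python
import re
from dataclasses import dataclass, field
from datetime import date

# Hypothetical conventions chosen for illustration only.
TITLE_PATTERN = re.compile(r"^[A-Z0-9]{2,5}-\d{4}-\d{2}-\d{2}-[a-z0-9-]+$")
EXPERIMENT_TYPES = {"assay", "synthesis", "simulation"}  # agreed selection list
DATA_KINDS = {"raw", "curated"}


@dataclass
class ExperimentRecord:
    title: str
    author: str
    created: date
    experiment_type: str
    aim: str
    data_kind: str
    keywords: list = field(default_factory=list)

    def problems(self):
        """Return a list of human-readable violations (empty if valid)."""
        issues = []
        if not TITLE_PATTERN.match(self.title):
            issues.append("title does not follow the naming convention")
        if not self.author.strip():
            issues.append("author is missing")
        if self.experiment_type not in EXPERIMENT_TYPES:
            issues.append("experiment type is not in the agreed list")
        if self.data_kind not in DATA_KINDS:
            issues.append("data kind must be 'raw' or 'curated'")
        if not self.aim.strip():
            issues.append("aim of experiment is missing")
        if not self.keywords:
            issues.append("at least one keyword is required")
        return issues


good = ExperimentRecord(
    title="WP1-2014-03-17-porin-uptake",
    author="A. Researcher",
    created=date(2014, 3, 17),
    experiment_type="assay",
    aim="Measure uptake through a porin",
    data_kind="raw",
    keywords=["permeability"],
)
bad = ExperimentRecord("my experiment", "", date(2014, 3, 17), "misc", "", "final")
print(good.problems())
print(bad.problems())
```

Returning a list of problems instead of raising on the first one lets a user fix all violations of a record in a single pass.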

We did not define specific data formats since we could not predict all the different types of data sets that would be utilized during the lifetime of the ELN. Instead, we gave some best practice advice on arranging data (Supplemental Files 3 and 4), facilitating its reuse by other researchers. The initially predefined templates, however, were only rarely adopted, with some groups using nearly the same structure to document their experiments. More support, especially for creating templates, may help users to document their results more easily.

For the final phase, the go live, high user acceptance was the major objective. A detailed plan was created to support users during their daily work with the ELN. This comprised the setup of a support team (the project specific ELN-Helpdesk) as a SPoC and detailed project-specific online training, including documentation for self-training. As part of the governance process, we created working instructions describing all necessary administrative processes provided by the ELN-Helpdesk.

Parallel to the implementation of the ELN-Helpdesk, a quarterly electronic newsletter was rolled out. This was used to remind potential users that the ELN is an obligatory central repository for the project. The newsletter also provided a forum for users to access information and news, with messaging to remind them of the value of this collaborative project.

Documents containing training slide sets, frequently asked questions (FAQs) and best practice spreadsheet templates (Supplemental File 4) have been made available directly within the ELN to give users rapid access to these documents while working with the ELN. In addition, the newsletter informed all users about project-specific updates or news about the ELN.

Results

Operation of the ELN

Software operation can be generally split into technical component/cost issues and end-user experiences. The technical component considers stability, performance and maintenance, whereas the end-user experience is based on the capability and usability of the software.

Technical solution

The selected ELN, hosted as a SaaS solution with a cloud-based service centre, provided a stable environment with acceptable performance, e.g., login <15 sec, opening an experiment with five pages <20 sec (for further technical details, please see Supplemental File 1). During the evaluation period of two years, two major issues emerged. The first involved denial of access to the ELN for more than three hours, due to an external server problem, which was quickly and professionally solved after contacting the technical support, and the other was related to the introduction of a new user interface (see below).

The administration was simple and straightforward, comprising mainly minor configurations at the project start and user management during the runtime. One issue was the gap in communication regarding the number of active users, causing a steady increase in number of licenses.

A particular disadvantage using the selected SaaS solution concerned system upgrades. There was little notice of upcoming changes, and user warnings were hidden in a weekly mailing. To keep users updated, weekly or biweekly mails about the ELN were sent to the user community by the vendor. Although these messages were read by users initially, interest diminished over time. Consequently, users were confused when they accessed the system after an upgrade and the functionality or appearance of the ELN had changed. On the other side, system upgrades were performed over weekends to minimize system downtime.

The costs per user were reasonable, especially for the academic partners for whom the long-term availability of the system, even after project completion, could be assured. This seemed to be an effect of the competitive market that caused a substantial drop in price during the last years.

User experience

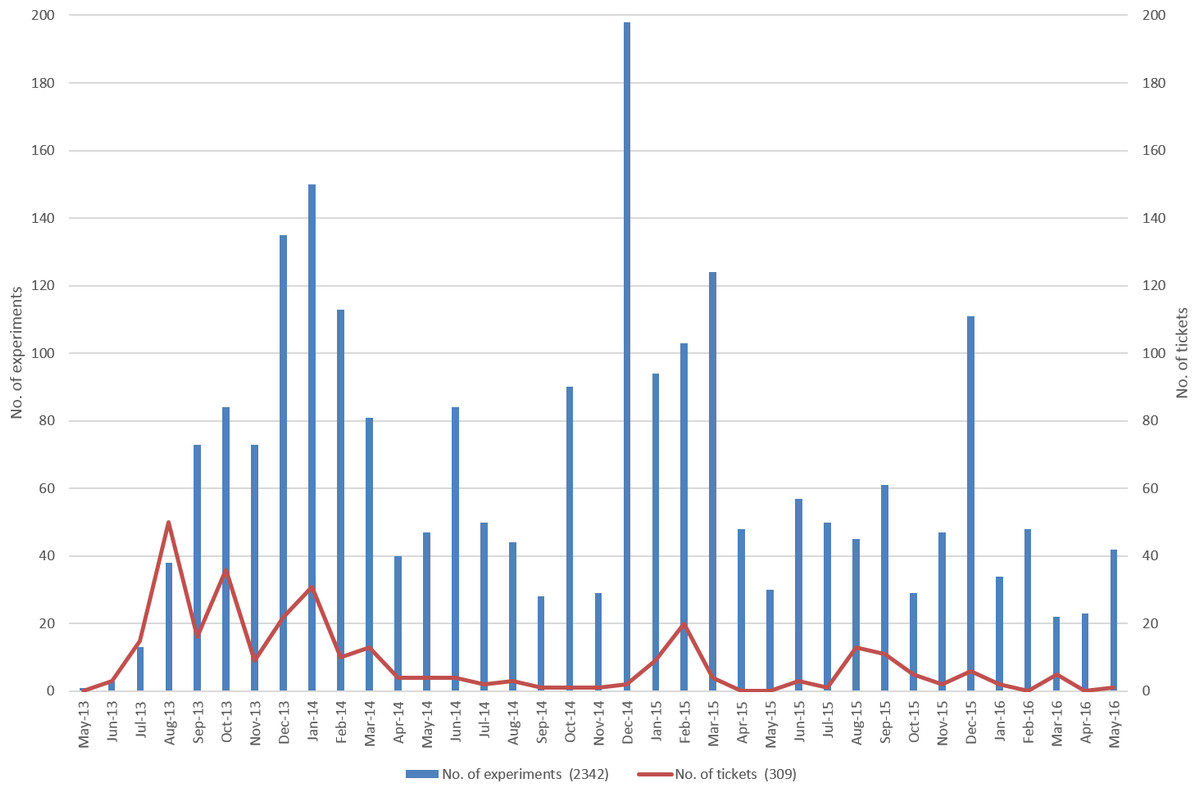

In total, more than 100 users were registered during the first two years of runtime, whereas the maximum number of parallel user accounts was 87; i.e., 13 users left the project for different reasons. The number of 87 users is composed of admins (n = 3), external reviewers (n = 4), project owners (n = 26) who review and countersign as principal investigators (PIs), and normal users (n = 41). Depending on the period, the number of newly entered experiments per month ranged between 20 and 200 (Fig. 4, blue bars). The size of the uploaded or entered data was heterogeneous and comprised experiments with less than 1 MB, i.e., data from small experimental assays, but also contained data objects much larger than 100 MB, e.g., raw data from mass spectrometry. Interestingly, users structured similar experiments in different ways. Some users created single experiments for each test set, while others combined data from different test sets into one experiment.


In an initial analysis, we evaluated user experience by the number of help desk tickets created during the lifetime of the system (Fig. 4, orange line).

During the initial phase (2013.06–2013.10) most of the tickets were associated with user access to the ELN. However, after six months (2013.12–2014.01), many requests were related to functionality, especially those from infrequent ELN users. In February 2015, the vendor released an updated graphical user interface (GUI), resulting in a higher number of tickets referring to modified or missing functions and the slow response of the system. The higher number of tickets in August/September 2015 was related to a call for refreshing assigned user accounts. However, overall the number of tickets was within the expected range (<10 per month).

There was a clear decline in frequent usage during the project runtime. The ELN was officially introduced in June 2013, and the number of experiments increased during the following month as expected (Fig. 4, blue bars). The small decrease in November 2013 was anticipated as a reaction to a new release, which caused some issues on specific operating system/browser combinations and language settings. Support for Windows XP also ended at this time. Some of the issues were resolved with the new release (December 2013). The increase in new experiments in October 2014 and the subsequent decline in November 2014 correlate with a reminder sent out to the members of the project to record all activities in the ELN. The same is true for September/October 2015. The last quarter of each year illustrates year-end activities, including the conclusion of projects and the completion of corresponding paperwork, as reflected in the chart (Fig. 4, orange line).

Overall, the regular documentation of experiments in the ELN appeared to be unappealing to researchers. This infrequent usage prompted us to carry out a survey of user acceptance in May/June 2015 (a detailed description of analysis methods, including the KNIME workflow[23] and raw data, is provided in Supplemental File 2). The primary aim was to evaluate user experiences compared to expectations in more detail. In addition, the administrative team wanted feedback to determine what could be changed in the existing support structure to increase usage or simplify the routine documentation of laboratory work. Overall, 77 users (see Table 4) were invited to participate in the survey. Two users left the project during the runtime of the survey. We received feedback from 60 (= 80%) of the remaining 75 users. Two questionnaires were rejected because fewer than 20% of the questions had been answered. The number of evaluated questionnaires is therefore 58 (see Supplemental File 5).
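The participation figures can be retraced with a short Python sketch. The numbers are those given in the text; the `is_evaluable` helper and the per-questionnaire question counts are hypothetical, since the length of the questionnaire is not stated here.

```python
# Figures reported in the text.
invited = 77
left_during_survey = 2
responses = 60
rejected = 2

eligible = invited - left_during_survey   # users who could still respond
response_rate = responses / eligible      # share of eligible users responding
evaluated = responses - rejected          # questionnaires kept for analysis


def is_evaluable(answered, total, threshold=0.20):
    """Hypothetical helper: keep a questionnaire only if at least
    `threshold` of its questions were answered."""
    return total > 0 and answered / total >= threshold


print(f"eligible={eligible}, rate={response_rate:.0%}, evaluated={evaluated}")
```

With an assumed questionnaire of 30 questions, `is_evaluable(5, 30)` would reject a return with five answers, while `is_evaluable(15, 30)` would keep one with fifteen.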


References

- Myers, J.D. (10 July 2014). "Collaborative Electronic Notebooks as Electronic Records: Design Issues for the Secure Electronic Laboratory Notebook (ELN)". ResearchGate. ResearchGate GmbH. https://www.researchgate.net/publication/228705896_Collaborative_electronic_notebooks_as_electronic_records_Design_issues_for_the_secure_electronic_laboratory_notebook_eln. Retrieved 08 January 2015.

- Bennett, J.W.; Chung, K.T. (2001). "Alexander Fleming and the discovery of penicillin". Advances in Applied Microbiology 49: 163–84. doi:10.1016/S0065-2164(01)49013-7. PMID 11757350.

- Du, P.; Kofman, J.A. (2007). "Electronic Laboratory Notebooks in Pharmaceutical R&D: On the Road to Maturity". JALA 12 (3): 157–65. doi:10.1016/j.jala.2007.01.001.

- Ioannidis, J.P.; Greenland, S.; Hlatky, M.A. et al. (2014). "Increasing value and reducing waste in research design, conduct, and analysis". Lancet 383 (9912): 166–75. doi:10.1016/S0140-6736(13)62227-8. PMC4697939. PMID 24411645. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4697939.

- Special Programme for Research and Training in Tropical Diseases (2006). "Handbook: Quality practices in basic biomedical research". World Health Organization. pp. 122. http://www.who.int/tdr/publications/training-guideline-publications/handbook-quality-practices-biomedical-research/en/.

- Schnell, S. (2015). "Ten Simple Rules for a Computational Biologist's Laboratory Notebook". PLoS Computational Biology 11 (9): e1004385. doi:10.1371/journal.pcbi.1004385. PMC4565690. PMID 26356732. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4565690.

- Nussbeck, S.Y.; Weil, P.; Menzel, J. et al. (2014). "The laboratory notebook in the 21st century: The electronic laboratory notebook would enhance good scientific practice and increase research productivity". EMBO Reports 15 (6): 631–4. doi:10.15252/embr.201338358. PMC4197872. PMID 24833749. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4197872.

- Sandve, G.K.; Nekrutenko, A.; Taylor, J.; Hovig, E. (2013). "Ten simple rules for reproducible computational research". PLoS Computational Biology 9 (10): e1003285. doi:10.1371/journal.pcbi.1003285. PMC3812051. PMID 24204232. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3812051.

- Bechhofer, S.; Buchan, I.; De Roure, D. et al. (2013). "Why linked data is not enough for scientists". Future Generation Computer Systems 29 (2): 599–611. doi:10.1016/j.future.2011.08.004.

- White, T.E.; Dalrymple, R.L.; Noble, D.W.A. et al. (2015). "Reproducible research in the study of biological coloration". Animal Behaviour 106: 51–57. doi:10.1016/j.anbehav.2015.05.007.

- Woelfle, M.; Olliaro, P.; Todd, M.H. (2011). "Open science is a research accelerator". Nature Chemistry 3 (10): 745–8. doi:10.1038/nchem.1149. PMID 21941234.

- Deutsche Forschungsgemeinschaft (September 2013). "Proposals for Safeguarding Good Scientific Practice" (PDF). https://www.mpimet.mpg.de/fileadmin/publikationen/Volltexte_diverse/DFG-Safeguarding_Good_Scientific_Practice_DFG.pdf.

- Directorate-General for Research & Innovation (2013). "Guidelines to the Rules on Open Access to Scientific Publications and Open Access to Research Data in Horizon 2020" (PDF). https://ec.europa.eu/research/participants/data/ref/h2020/grants_manual/hi/oa_pilot/h2020-hi-oa-pilot-guide_en.pdf. Retrieved 12 September 2016.

- Payne, D.J.; Miller, L.F.; Findlay, D. et al. (2015). "Time for a change: addressing R&D and commercialization challenges for antibacterials". Philosophical Transactions of the Royal Society B: Biological Sciences 370 (1670): 20140086. doi:10.1098/rstb.2014.0086.

- Kostyanev, T.; Bonten, M.J.; O'Brien, S. et al. (2016). "The Innovative Medicines Initiative's New Drugs for Bad Bugs programme: European public-private partnerships for the development of new strategies to tackle antibiotic resistance". Journal of Antimicrobial Chemotherapy 71 (2): 290–5. doi:10.1093/jac/dkv339. PMID 26568581.

- Rex, J.H. (2014). "ND4BB: addressing the antimicrobial resistance crisis". Nature Reviews Microbiology 12: 231–32. doi:10.1038/nrmicro3245.

- Stavenger, R.A.; Winterhalter, M. (2014). "TRANSLOCATION project: How to get good drugs into bad bugs". Science Translational Medicine 6 (228): 228ed7. doi:10.1126/scitranslmed.3008605. PMID 24648337.

- "ND4BB Translocation". ND4BB. http://translocation.eu/. Retrieved 22 September 2015.

- Nehme, A.; Scoffin, R.A. (2006). "9. Electronic Laboratory Notebooks". In Ekins, S.; Wang, B. Computer Applications in Pharmaceutical Research and Development. Wiley. pp. 209–27. ISBN 9780471737797.

- Milsted, A.J.; Hale, J.R.; Grey, J.G.; Neylon, C. (2013). "LabTrove: a lightweight, web based, laboratory "blog" as a route towards a marked up record of work in a bioscience research laboratory". PLoS One 8 (7): e67460. doi:10.1371/journal.pone.0067460. PMC3720848. PMID 23935832. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3720848.

- Iyer, R.; Kudrle, W. "Implementation of an Electronic Lab Notebook to Integrate Research and Education in an Undergraduate Biotechnology Program". Technology Interface International Journal 12 (2): 5–12. http://tiij.org/issues/issues/spring2012/spring_summer_2012.htm.

- Berthold, M.R.; Cebron, N.; Dill, F. et al. (2008). "KNIME: The Konstanz Information Miner". In Preisach, C.; Burkhardt, H.; Schmidt-Thieme, L.; Decker, R. Data Analysis, Machine Learning and Applications (PDF). Springer-Verlag Berlin Heidelberg. pp. 319–326. doi:10.1007/978-3-540-78246-9. ISBN 9783540782469. http://www.inf.uni-konstanz.de/bioml2/publications/Papers2007/BCDG+07_knime_gfkl.pdf.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, including regionalizing spelling. In some cases important information was missing from the references, and that information was added. The original lists references in alphabetical order; this version lists them in order of appearance due to the nature of the wiki. Supplemental file names have been clarified in the text.