Journal:Epidemiological data challenges: Planning for a more robust future through data standards


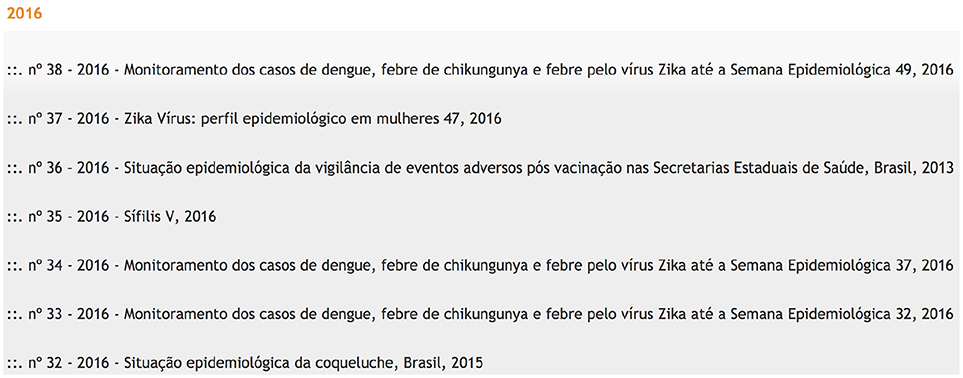

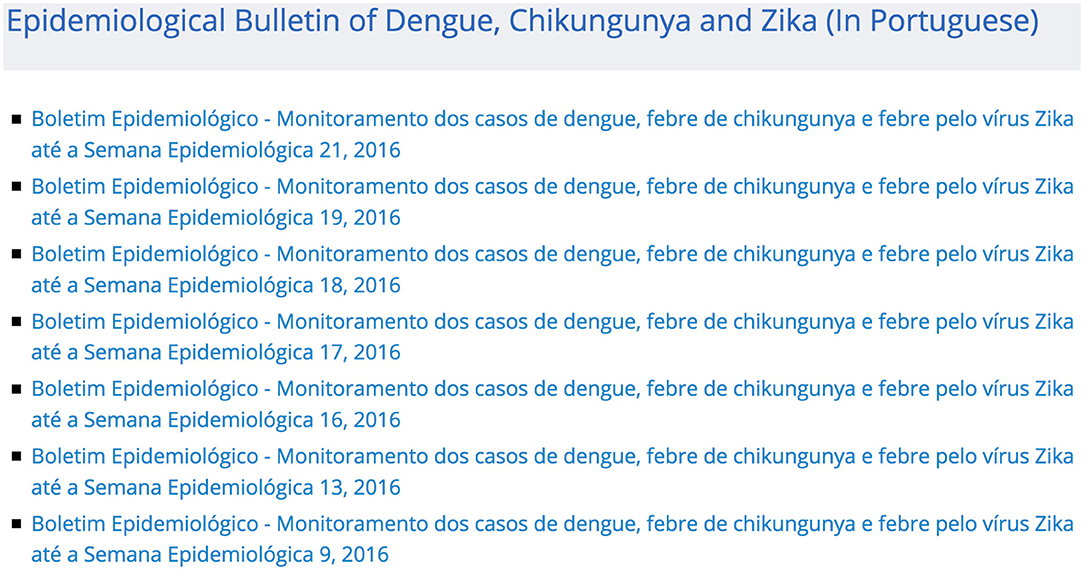

Finally, finding epidemiological data interfaces or data within an interface is often a time-consuming and error-prone task. For example, the Zika virus epidemic has resulted in increased global attention for Brazil, but it has not resulted in a single easy-to-understand machine-readable interface.<ref name="CoelhoEpidemi16">{{cite journal |title=Epidemiological data accessibility in Brazil |journal=The Lancet Infectious Diseases |author=Coelho, F.C.; Codeço, C.T.; Cruz, O.G. et al. |volume=16 |issue=5 |pages=524–5 |year=2016 |doi=10.1016/S1473-3099(16)30007-X |pmid=27068487}}</ref> Until just recently, Brazil's Ministry of Health maintained two separate lists of mosquito-borne illness epidemiological bulletins.<ref name="MSEpidemArch">{{cite web |url=http://www.combateaedes.saude.gov.br/en/epidemiological-situation |archiveurl=https://web.archive.org/web/20160703011414/http://www.combateaedes.saude.gov.br/en/epidemiological-situation |title=Epidemiological Situation |author=Ministério da Saúde |work=Preventing and combating Dengue, Chikungunya e Zika |archivedate=03 July 2016 |accessdate=19 January 2017}}</ref><ref name="MSBoletins">{{cite web |url=https://www.saude.gov.br/boletins-epidemiologicos |title=Boletins epidemiológicos |author=Ministério da Saúde |publisher=Governo do Brasil |accessdate=19 January 2017}}</ref> Although these lists pointed to the exact same bulletins, the page archiving ''Boletim Epidemiológico''<ref name="MSBoletins" /> is consistently more up-to-date than the "Epidemiological Situation" page<ref name="MSEpidemArch" /> (see Figures 1, 2). Having multiple interfaces increases the likelihood of human error when collecting epidemiological data. For instance, if one assumes that there is only one official source for Zika, the most current information may be overlooked. | |||

[[File:Fig1 Fairchild FrontPubHealth2018 6.jpg|963px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="963px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 1''' Screenshot showing part of the mosquito-borne illness epidemiological bulletin list available at 'Boletim Epidemiológico'. This is the most current and complete list, with data available through the 38th week of 2016.</blockquote> | |||

|- | |||

|} | |||

|} | |||

[[File:Fig2 Fairchild FrontPubHealth2018 6.jpg|963px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="963px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 2''' Screenshot showing part of the mosquito-borne illness epidemiological bulletin list available at the "Epidemiological Situation" page. This list only goes through week 21 of 2016 and is missing a number of weeks when compared to the list in Figure 1. This screenshot was taken at the same time as the one in Figure 1.</blockquote> | |||

|- | |||

|} | |||

|} | |||


Revision as of 19:59, 27 April 2020

| Full article title | Epidemiological data challenges: Planning for a more robust future through data standards |
|---|---|
| Journal | Frontiers in Public Health |
| Author(s) | Fairchild, Geoffrey; Tasseff, Byron; Khalsa, Hari; Generous, Nicholas; Daughton, Ashlynn R.; Velappan, Nileena; Priedhorsky, Reid; Deshpande, Alina |
| Author affiliation(s) | Los Alamos National Laboratory |
| Primary contact | Email: gfairchild at lanl dot gov |
| Editors | Efird, Jimmy T. |
| Year published | 2018 |
| Volume and issue | 6 |
| Article # | 336 |
| DOI | 10.3389/fpubh.2018.00336 |
| ISSN | 2296-2565 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.frontiersin.org/articles/10.3389/fpubh.2018.00336/full |
| Download | https://www.frontiersin.org/articles/10.3389/fpubh.2018.00336/pdf (PDF) |

This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed.

Abstract

Accessible epidemiological data are of great value for emergency preparedness and response, understanding disease progression through a population, and building statistical and mechanistic disease models that enable forecasting. The status quo, however, renders acquiring and using such data difficult in practice. In many cases, a primary way of obtaining epidemiological data is through the internet, but the methods by which the data are presented to the public often differ drastically among institutions. As a result, there is a strong need for better data sharing practices. This paper identifies, in detail and with examples, the three key challenges one encounters when attempting to acquire and use epidemiological data: (1) interfaces, (2) data formatting, and (3) reporting. These challenges are used to provide suggestions and guidance for improvement as these systems evolve in the future. If these suggested data and interface recommendations were adhered to, epidemiological and public health analysis, modeling, and informatics work would be significantly streamlined, which can in turn yield better public health decision-making capabilities.

Keywords: data, computational epidemiology, public health, disease modeling, informatics, disease surveillance

Introduction

At the heart of disease surveillance and modeling are epidemiological data. These data are generally presented as a time series of cases, T, for a geographic region, G, and for a demographic, D. The type of cases presented may vary depending on the context. For example, T may be a time series of confirmed or suspected cases, or it might be hospitalizations or deaths; in some circumstances, it may be a summation of some combination of these (e.g., confirmed + suspected cases). G is most commonly a political boundary; it might be a country, state/province, county/district, city, or sub-city region, such as a postal code or United States (U.S.) Census Bureau census tract. Depending on the context, D may simply be the entire population of G, or it might be stratified by age, sex, race, education, or other relevant factors.
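To make the T, G, D description concrete, the record type below is one possible in-memory representation. It is an illustrative sketch only: the class and field names (`CaseSeries`, `case_type`, `region`, `demographic`, `Observation`) are hypothetical and are not taken from any published schema.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class Observation:
    """One point of the time series T."""
    period_start: date   # first day of the reporting period
    period_end: date     # last day of the reporting period (inclusive)
    count: int           # number of cases of the series' case type


@dataclass
class CaseSeries:
    """Time series of cases T for a geographic region G and demographic D."""
    case_type: str             # e.g. "confirmed", "suspected", "deaths"
    region: str                # G: a political unit such as a country or county
    demographic: str = "all"   # D: "all" stands for the entire population of G
    observations: list = field(default_factory=list)

    def add(self, start, end, count):
        """Append one reporting period, rejecting reversed date ranges."""
        if end < start:
            raise ValueError("period_end precedes period_start")
        self.observations.append(Observation(start, end, count))

    def total(self):
        """Sum of counts over all stored reporting periods."""
        return sum(o.count for o in self.observations)
```

Storing explicit start and end dates for each period, rather than only a period label, keeps the structure usable whether a source reports monthly, weekly, or at irregular intervals.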

Epidemiological data have a variety of uses. From a public health perspective, they can be used to gain an understanding of population-level disease progression. This understanding can in turn be used to aid in decision-making and allocation of resources. Recent outbreaks like Ebola and Zika have demonstrated the value of accessible epidemiological data for emergency preparedness and the need for better data sharing.[1] These data may influence vaccine distribution[2], and hospitals can anticipate surge capacity during an outbreak, allowing them to obtain extra temporary help if necessary.[3][4]

From a modeler's perspective, high-quality reference data (also commonly referred to as "ground truth data") are needed to enable prediction and forecasting.[5] These data can be used to parameterize compartmental models[6] as well as stochastic agent-based models[7][8][9][10][11], and they can also be used to train and validate machine learning and statistical models.[12][13][14][15][16][17][18][19]
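As a minimal illustration of how reference data can parameterize a compartmental model, the sketch below fits the transmission rate of a textbook SIR model by grid search against an observed daily incidence series. The daily Euler step, the fixed recovery rate, the population size, and the candidate grid are all assumptions chosen for brevity; practical work would use a proper optimizer and an explicit observation model.

```python
def simulate_sir(beta, gamma, population, initial_infected, days):
    """Daily-step (forward Euler) SIR model; returns new infections per day.

    R is implicit: recovered = population - s - i.
    """
    s = float(population - initial_infected)
    i = float(initial_infected)
    incidence = []
    for _ in range(days):
        new_infections = beta * s * i / population
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        incidence.append(new_infections)
    return incidence


def fit_beta(observed, gamma, population, initial_infected, candidates):
    """Grid search: return the transmission rate whose simulated incidence
    has the smallest sum of squared errors against the observed series."""
    def sse(beta):
        simulated = simulate_sir(beta, gamma, population,
                                 initial_infected, len(observed))
        return sum((obs - sim) ** 2 for obs, sim in zip(observed, simulated))
    return min(candidates, key=sse)
```

The quality of such a fit can be no better than the reference series it is compared against, which is why gaps, inconsistent reporting intervals, and format changes in the source data matter to modelers.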

The internet has become the predominant way to publish, share, and collect epidemiological data. While data standards exist for observational studies[20] and clinical research[21], for example, no such standards exist for the publication of the kind of public health-related epidemiological data described above. Despite the strong need to share and consume data, there are many legal, technical, political, and cultural challenges in implementing a standardized epidemiological data framework.[22][23] As a result, the methods by which data are presented to the public often differ significantly among data-sharing institutions (e.g., public health departments, ministries of health, data collection or aggregation services). Moreover, these problems are not unique to epidemiological data; the issues described in this paper are common across many different disciplines.

First, epidemiological data on the internet are presented to the user through a variety of interfaces. These interfaces vary widely not only in their appearance but also in their functionality. Some data are openly available through clear modern web interfaces, complete with well-documented programmer-friendly application programming interfaces (APIs), while others are displayed as static web pages that require error-prone and brittle web scraping. Still others are offered as machine-readable documents (e.g., comma-separated values [CSV], Microsoft Excel, Extensible Markup Language [XML], Adobe PDF). Finally, some necessitate contacting a human, who then prepares and sends the requested data manually.

Second, there are many data formats. Data containers (e.g., CSV, JavaScript Object Notation [JSON]) and element formats (e.g., timestamp format, location name format) may differ. Character encodings[24] (e.g., ASCII, UTF-8) and line endings[25] (e.g., \r\n, \n) may also differ. Compounding these issues, formats can change over time (e.g., renaming or reordering spreadsheet columns). More broadly, these challenges are closely tied to schema, data model, and vocabulary standardization.
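Differences in character encoding and line endings are usually absorbed at read time. The sketch below uses only the Python standard library and assumes CSV input whose encoding is either UTF-8 (with or without a byte-order mark) or Windows-1252; that candidate list is an assumption and should ideally come from the data provider's documentation rather than guesswork.

```python
import csv
import io


def decode_bytes(raw, encodings=("utf-8-sig", "cp1252")):
    """Decode raw bytes with the first candidate encoding that succeeds.

    "utf-8-sig" also strips a leading byte-order mark. cp1252 is only a
    guess for legacy Windows exports; a successful decode does not prove
    the guess was right.
    """
    for enc in encodings:
        try:
            return raw.decode(enc)
        except UnicodeDecodeError:
            continue
    raise ValueError("could not decode with any of: " + ", ".join(encodings))


def normalize_newlines(text):
    """Convert Windows (\\r\\n) and classic Mac OS (\\r) line endings to \\n.

    Note: this also rewrites carriage returns inside quoted fields.
    """
    return text.replace("\r\n", "\n").replace("\r", "\n")


def read_csv_rows(raw):
    """Parse CSV bytes of unknown encoding and line-ending convention."""
    text = normalize_newlines(decode_bytes(raw))
    return list(csv.DictReader(io.StringIO(text)))
```

Reading rows as dictionaries keyed by header name, rather than by position, also softens the effect of reordered spreadsheet columns, although renamed columns still need explicit handling.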

Finally, institutions differ in their reporting habits; even within a single institution, reporting practices often vary by disease. For example, one context may be reported monthly (e.g., Q fever in Australia), while another is reported weekly (e.g., influenza in the U.S.) or even more finely (e.g., the 2014 West African Ebola outbreak). Furthermore, what is meant by “weekly” in one context may differ from what it means in another (e.g., CDC epi weeks vs. irregular reporting intervals in Poland, as described later).
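The CDC "epi week" (MMWR week) convention mentioned above can be computed directly from a calendar date. This sketch assumes the rule as CDC publishes it: MMWR weeks run Sunday through Saturday, and week 1 is the first week of the year that has at least four days in that calendar year, which is equivalent to the Sunday-to-Saturday week containing January 4. It should be checked against CDC's own week tables before being relied on.

```python
from datetime import date, timedelta


def mmwr_week1_start(year):
    """Sunday that begins MMWR week 1 of `year`: the Sunday-to-Saturday
    week containing January 4 (the first week with at least four days
    in the calendar year)."""
    jan4 = date(year, 1, 4)
    days_since_sunday = (jan4.weekday() + 1) % 7  # weekday(): Monday=0 ... Sunday=6
    return jan4 - timedelta(days=days_since_sunday)


def mmwr_week(d):
    """Return (mmwr_year, mmwr_week) for a calendar date."""
    year = d.year
    if d >= mmwr_week1_start(year + 1):
        year += 1   # late-December days can belong to next year's week 1
    elif d < mmwr_week1_start(year):
        year -= 1   # early-January days can belong to last year's final week
    start = mmwr_week1_start(year)
    return year, (d - start).days // 7 + 1
```

For sources with irregular reporting intervals, such as the Polish data mentioned above, no week number may apply at all; recording each period's explicit start and end dates avoids imposing a convention the source does not use.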

Together, these challenges make large-scale public health data analysis and modeling significantly more difficult and time-consuming. Gathering, cleaning, and eliciting relevant data often require more time than the actual analysis itself. This paper discusses these three key technical challenges involving public health-related epidemiological data in detail, using examples identified through an analysis of data deposition practices around the globe. Building from this analysis, we offer a framework of best practices composed of modern standards that should be adhered to when releasing epidemiological data to the public. Such a framework will enable a more robust future for accurate and high-confidence epidemiological data and analysis.

Discussion

Interface challenges

The interface is the mechanism by which data are presented to a user for consumption.

Epidemiological data repositories implementing current best practices provide an interactive web-based searching and filtering interface that enables users to easily export desired data in a variety of formats. These are generally accompanied by an API that allows users to programmatically acquire desired data. For example, if one wants to download the latest influenza surveillance data weekly, instead of manually navigating an interactive web interface each week to export the data, the process could be automated by writing code that interacts with the API. Such an interface provides the simplest and most powerful method of data acquisition. Examples of this type of interface are the U.S. Centers for Disease Control and Prevention's (CDC) Data Catalog and the World Health Organization's (WHO) Global Health Observatory (GHO).
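The weekly-download scenario above can be automated with a short script. This is a minimal sketch using the Python standard library; it assumes an API that returns a JSON array of records, and the endpoint and query parameter names are placeholders, since each API (including those behind the CDC Data Catalog and the WHO GHO) documents its own paths and parameters.

```python
import json
import urllib.parse
import urllib.request


def build_query_url(endpoint, params):
    """Append URL-encoded query parameters to an API endpoint."""
    return endpoint + "?" + urllib.parse.urlencode(params)


def parse_records(payload):
    """Decode a JSON array of records (one object per row)."""
    records = json.loads(payload)
    if not isinstance(records, list):
        raise ValueError("expected a JSON array of records")
    return records


def fetch_records(endpoint, params, timeout=30):
    """Download and decode one batch of records from the API."""
    url = build_query_url(endpoint, params)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_records(response.read().decode("utf-8"))
```

Usage, with a hypothetical endpoint: `fetch_records("https://data.example.org/api/flu.json", {"limit": 1000})`. Scheduling such a call weekly (e.g., with cron) replaces the manual export step entirely.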

While an interactive web-based interface coupled with an API is a best practice, it can be complex and expensive to implement. Many public health departments are under resource constraints and depend on older websites that tend to release data in one of two ways: 1) data are uploaded in some common format (e.g., CSV, Microsoft Excel, PDF) or 2) data are displayed in Hypertext Markup Language (HTML) tables. An example of the first is seen on Israel's Ministry of Health website, where data are provided weekly in Microsoft Excel format.[26] An example of the second is seen on Australia's Department of Health website, where data are provided in simply formatted HTML tables.[27]

Data uploaded in a common format can often be automatically downloaded and processed, and HTML tables can generally be automatically scraped and processed. While HTML scraping is often straightforward, there are some instances where it can be quite difficult. One example of a difficult-to-scrape data source is the Robert Koch Institute SurvStat 2.0 website.[28] Although the service is capable of providing epidemiological data at superior spatial and temporal resolutions (county- and week-level, respectively), the interface is not easily amenable to scraping. First, the HTTP requests formed by the ASP.NET application cannot be easily reverse-engineered; this necessitates the use of browser-automation software like Selenium, which automates website user interaction, such as mouse clicks and keyboard presses, for data scraping. Second, the selection of new filters, attributes, and display options results in a newly refreshed page for each change; because many options are required to obtain each desired dataset, scraping can take a long time.
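For simply formatted HTML tables like those described above, scraping can require nothing beyond Python's standard-library `html.parser`. The sketch below collects the text of every cell, row by row, and tolerates omitted `</td>` and `</tr>` tags; it assumes no nested tables, ignores `colspan`/`rowspan`, and concatenates rows from every table on the page. Interfaces like SurvStat, whose data appear only after interactive requests, are outside what this approach can handle.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect cell text from HTML tables as a list of rows."""

    def __init__(self):
        super().__init__()
        self.rows = []      # completed rows, each a list of cell strings
        self._row = None    # cells of the row currently being read
        self._cell = None   # text fragments of the cell currently being read

    def _finish_cell(self):
        if self._cell is not None:
            # Collapse runs of whitespace (including newlines) to single spaces.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None

    def _finish_row(self):
        if self._row is not None:
            self._finish_cell()
            self.rows.append(self._row)
            self._row = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._finish_row()      # tolerate a missing </tr>
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._finish_cell()     # tolerate a missing </td>
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._finish_cell()
        elif tag in ("tr", "table"):
            self._finish_row()

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def extract_rows(html):
    """Return every table row in `html` as a list of cell strings."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return parser.rows
```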

Additionally, while there may be no technical barriers to downloading or scraping data, there may be barriers relating to a website's terms of service (TOS). In some instances, the TOS may prohibit scraping or downloading data en masse, for example to prevent unreasonable load on the website. Ignoring the TOS raises ethical issues that are often overlooked in research, perhaps because the goals of most epidemiological researchers are benevolent and the data are public and usually funded by taxpayers. Ignoring a website's TOS could also raise logistical issues related to publishing and institutional review board (IRB) approval.
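Checking a site's terms of service is ultimately a matter of reading them, but a complementary, machine-readable courtesy is to honor the site's robots.txt rules and to space out requests so that scraping does not add unreasonable load. The sketch below uses Python's standard `urllib.robotparser`; the user-agent string, the 5-second minimum interval, and the example rules are assumptions, and robots.txt compliance does not by itself establish compliance with a TOS.

```python
import time
import urllib.robotparser


def load_rules(robots_txt):
    """Parse robots.txt content (fetched separately) into a rule checker."""
    rules = urllib.robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


class PoliteFetcher:
    """Checks robots.txt rules and enforces a minimum gap between requests."""

    def __init__(self, rules, user_agent, min_interval=5.0):
        self.rules = rules
        self.user_agent = user_agent
        declared = rules.crawl_delay(user_agent)  # None if no Crawl-delay is set
        self.delay = max(min_interval, float(declared or 0))
        self._last_request = None

    def allowed(self, url):
        """True if the parsed rules permit this user agent to fetch `url`."""
        return self.rules.can_fetch(self.user_agent, url)

    def wait_turn(self):
        """Sleep until at least `delay` seconds have passed since the last call."""
        if self._last_request is not None:
            remaining = self.delay - (time.monotonic() - self._last_request)
            if remaining > 0:
                time.sleep(remaining)
        self._last_request = time.monotonic()
```

A scraper would call `allowed(url)` and then `wait_turn()` before each request; the delay honors the larger of the site's declared crawl delay and the caller's own minimum.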

A concern underlying all scraping efforts is that scraping scripts are brittle. Web scraping relies on patterns in the HTML/CSS source code of a website. If an institution modifies its page layout, even slightly, a scraper may fail outright or, worse, silently extract the wrong values.
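One way to limit the damage from layout changes is to have the scraper verify the structure it expects before extracting any values. The minimal sketch below locates expected columns by name in a scraped header row; the column names in the test are hypothetical, and the expected set would come from whoever maintains the scraper.

```python
class LayoutChanged(Exception):
    """Raised when a scraped table no longer has the expected columns."""


def column_positions(header, expected):
    """Map each expected column name to its index in a scraped header row.

    Matching ignores case and extra whitespace, so a reordered table still
    parses, but a renamed or missing column stops the run instead of
    silently shifting values into the wrong fields.
    """
    normalized = [" ".join(cell.split()).lower() for cell in header]
    positions = {}
    for name in expected:
        key = " ".join(name.split()).lower()
        if key not in normalized:
            raise LayoutChanged(f"column {name!r} not found in header {header!r}")
        positions[name] = normalized.index(key)
    return positions
```

Failing loudly turns a silent data-quality problem into a visible maintenance task.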

In some cases, the only option is to contact a person who then prepares and sends the requested data. However, data requested and prepared manually in this way often come with many restrictions. For example, when one of the authors contacted a ministry of health for more detailed epidemiological data, the data were offered with a five-page data request form that significantly restricted use and sharing of the data. Furthermore, the form stated that release would take “up to 3 months” because of review and approval by the various data owners (local, state, and territory health departments). These types of restrictions and hurdles to data access prevent the development and adoption of advanced analytics.

Finally, finding epidemiological data interfaces or data within an interface is often a time-consuming and error-prone task. For example, the Zika virus epidemic has resulted in increased global attention for Brazil, but it has not resulted in a single easy-to-understand machine-readable interface.[29] Until just recently, Brazil's Ministry of Health maintained two separate lists of mosquito-borne illness epidemiological bulletins.[30][31] Although these lists pointed to the exact same bulletins, the page archiving Boletim Epidemiológico[31] is consistently more up-to-date than the "Epidemiological Situation" page[30] (see Figures 1, 2). Having multiple interfaces increases the likelihood of human error when collecting epidemiological data. For instance, if one assumes that there is only one official source for Zika, the most current information may be overlooked.
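When the same bulletins are listed in more than one place, a simple cross-check between the listings can flag which one is more current before any data are collected. The sketch assumes each listing has already been scraped into (year, epidemiological week) pairs; the listings used in the test are hypothetical and do not reproduce the actual contents of either Brazilian page.

```python
def compare_listings(listing_a, listing_b):
    """Compare two listings of (year, week) bulletins for the same data.

    Returns the latest bulletin in each listing and the bulletins that
    appear in one listing but not the other, in chronological order.
    """
    a, b = set(listing_a), set(listing_b)
    return {
        "latest_a": max(a) if a else None,
        "latest_b": max(b) if b else None,
        "only_in_a": sorted(a - b),
        "only_in_b": sorted(b - a),
    }
```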


References

- ↑ Chretien, J.P.; Rivers, C.M.; Johansson, M.A. (2016). "Make Data Sharing Routine to Prepare for Public Health Emergencies". PLoS Medicine 13 (8): e1002109. doi:10.1371/journal.pmed.1002109. PMC PMC4987038. PMID 27529422. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4987038.

- ↑ Centers for Disease Control and Prevention (2018). "Allocating and Targeting Pandemic Influenza Vaccine During an Influenza Pandemic". U.S. Department of Health and Human Services. https://asprtracie.hhs.gov/technical-resources/resource/2846/guidance-on-allocating-and-targeting-pandemic-influenza-vaccine.

- ↑ Nap, R.E.; Andriessen, M.P.; Meessen, N.E. et al. (2007). "Pandemic influenza and hospital resources". Emerging Infectious Diseases 13 (11): 1714-9. doi:10.3201/eid1311.070103. PMC PMC3375786. PMID 18217556. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3375786.

- ↑ Hota, S.; Fried, E.; Burry, L. et al. (2010). "Preparing your intensive care unit for the second wave of H1N1 and future surges". Critical Care Medicine 38 (4 Suppl.): e110–9. doi:10.1097/CCM.0b013e3181c66940. PMID 19935417.

- ↑ Moran, K.R.; Fairchild, G.; Generous, N. et al. (2016). "Epidemic Forecasting is Messier Than Weather Forecasting: The Role of Human Behavior and Internet Data Streams in Epidemic Forecast". Journal of Infectious Diseases 214 (Suppl. 4): S404-S408. doi:10.1093/infdis/jiw375. PMC PMC5181546. PMID 28830111. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5181546.

- ↑ Hethcote, H.W. (2000). "The Mathematics of Infectious Diseases". SIAM Review 42 (4): 599–653. doi:10.1137/S0036144500371907.

- ↑ Eubank, S.; Guclu, H.; Kumar, V.S. et al. (2004). "Modelling disease outbreaks in realistic urban social networks". Nature 429 (6988): 180–4. doi:10.1038/nature02541. PMID 15141212.

- ↑ Bisset, K.R.; Chen, J.; Feng, X. et al. (2009). "EpiFast: A fast algorithm for large scale realistic epidemic simulations on distributed memory systems". Proceedings of the 23rd International Conference on Supercomputing: 430–39. doi:10.1145/1542275.1542336.

- ↑ Chao, D.L.; Halstead, S.B.; Halloran, M.E. et al. (2012). "Controlling dengue with vaccines in Thailand". PLoS Neglected Tropical Diseases 6 (10): e1876. doi:10.1371/journal.pntd.0001876. PMC PMC3493390. PMID 23145197. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3493390.

- ↑ Grefenstette, J.J.; Brown, S.T.; Rosenfeld, R. et al. (2013). "FRED (a Framework for Reconstructing Epidemic Dynamics): An open-source software system for modeling infectious diseases and control strategies using census-based populations". BMC Public Health 13: 940. doi:10.1186/1471-2458-13-940. PMC PMC3852955. PMID 24103508. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3852955.

- ↑ McMahon, B.H.; Manore, C.A.; Hyman, J.M. et al. (2014). "Coupling Vector-host Dynamics with Weather Geography and Mitigation Measures to Model Rift Valley Fever in Africa". Mathematical Modelling of Natural Phenomena 9 (2): 161–77. doi:10.1051/mmnp/20149211. PMC PMC4398965. PMID 25892858. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4398965.

- ↑ Viboud, C.; Boëlle, P.Y.; Carrat, F. et al. (2003). "Prediction of the spread of influenza epidemics by the method of analogues". American Journal of Epidemiology 158 (10): 996-1006. doi:10.1093/aje/kwg239. PMID 14607808.

- ↑ Polgreen, P.M.; Chen, Y.; Pennock, D.M. et al. (2008). "Using internet searches for influenza surveillance". Clinical Infectious Diseases 47 (11): 1443-8. doi:10.1086/593098. PMID 18954267.

- ↑ Ginsberg, J.; Mohebbi, M.H.; Patel, R.S. et al. (2009). "Detecting influenza epidemics using search engine query data". Nature 457 (7232): 1012-4. doi:10.1038/nature07634. PMID 19020500.

- ↑ Signorini, A.; Segre, A.M.; Polgreen, P.M. et al. (2011). "The use of Twitter to track levels of disease activity and public concern in the U.S. during the influenza A H1N1 pandemic". PLoS One 6 (5): e19467. doi:10.1371/journal.pone.0019467. PMC PMC3087759. PMID 21573238. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3087759.

- ↑ Shaman, J.; Karspeck, A.; Yang, W. et al. (2013). "Real-time influenza forecasts during the 2012-2013 season". Nature Communications 4: 2837. doi:10.1038/ncomms3837. PMC PMC3873365. PMID 24302074. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3873365.

- ↑ Generous, N.; Fairchild, G.; Deshpande, A. et al. (2014). "Global disease monitoring and forecasting with Wikipedia". PLoS Computational Biology 10 (11): e1003892. doi:10.1371/journal.pcbi.1003892. PMC PMC4231164. PMID 25392913. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4231164.

- ↑ Hickmann, K.S.; Fairchild, G.; Priedhorsky, R. et al. (2015). "Forecasting the 2013-2014 influenza season using Wikipedia". PLoS Computational Biology 11 (5): e1004239. doi:10.1371/journal.pcbi.1004239. PMC PMC4431683. PMID 25974758. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4431683.

- ↑ Fairchild, G.; Del Valle, S.Y.; De Silva, L. et al. (2015). "Eliciting Disease Data from Wikipedia Articles". Proceedings of the 2015 International AAAI Conference on Weblogs and Social Media: 26–33. PMC PMC5511739. PMID 28721308. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5511739.

- ↑ STROBE Initiative. "STROBE Statement". University of Bern. https://www.strobe-statement.org/. Retrieved 01 October 2018.

- ↑ Clinical Data Interchange Standards Consortium. "CDISC". https://www.cdisc.org/. Retrieved 01 October 2018.

- ↑ Pisani, E.; AbouZahr, C. (2010). "Sharing health data: Good intentions are not enough". Bulletin of the World Health Organization 88 (6): 462–6. doi:10.2471/BLT.09.074393. PMC PMC2878150. PMID 20539861. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2878150.

- ↑ Edelstein, M.; Sane, J. (17 April 2015). "Overcoming Barriers to Data Sharing in Public Health: A Global Perspective". Chatham House. https://www.chathamhouse.org/publication/overcoming-barriers-data-sharing-public-health-global-perspective.

- ↑ Zentgraf, D.C. (27 April 2015). "What Every Programmer Absolutely, Positively Needs To Know About Encodings And Character Sets To Work With Text". Kunststube. http://kunststube.net/encoding/. Retrieved 23 August 2016.

- ↑ Atwood, J. (18 January 2010). "The Great Newline Schism". Coding Horror. https://blog.codinghorror.com/the-great-newline-schism/. Retrieved 01 October 2016.

- ↑ Ministry of Health Israel. "Weekly and Periodic Epidemiological Reports". https://www.health.gov.il/UnitsOffice/HD/PH/epidemiology/Pages/epidemiology_report.aspx?WPID=WPQ7&PN=1. Retrieved 04 September 2016.

- ↑ Australian Government Department of Health. "Notifications of all diseases by Month". National Notifiable Diseases Surveillance System. http://www9.health.gov.au/cda/source/rpt_1_sel.cfm. Retrieved 04 September 2016.

- ↑ Robert Koch Institute. "SurvStat@RKI 2.0". https://survstat.rki.de/. Retrieved 04 September 2016.

- ↑ Coelho, F.C.; Codeço, C.T.; Cruz, O.G. et al. (2016). "Epidemiological data accessibility in Brazil". The Lancet Infectious Diseases 16 (5): 524–5. doi:10.1016/S1473-3099(16)30007-X. PMID 27068487.

- ↑ Ministério da Saúde. "Epidemiological Situation". Preventing and combating Dengue, Chikungunya e Zika. Archived from the original on 03 July 2016. https://web.archive.org/web/20160703011414/http://www.combateaedes.saude.gov.br/en/epidemiological-situation. Retrieved 19 January 2017.

- ↑ Ministério da Saúde. "Boletins epidemiológicos". Governo do Brasil. https://www.saude.gov.br/boletins-epidemiologicos. Retrieved 19 January 2017.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added. Footnotes in the original were turned into external links for this version. Where a URL from the original was found to no longer work, an archived version of the page was cited.