Journal:Identifying risk management challenges in laboratories

| Full article title | Identifying risk management challenges in laboratories |
|---|---|
| Journal | Accreditation and Quality Assurance |
| Author(s) | Tziakou, Evdoxia; Fragkaki, Argyro G.; Platis, Agapios N. |
| Author affiliation(s) | Hellenic Open University, National Centre for Scientific Research “Demokritos”, University of the Aegean |
| Primary contact | fragkaki at bio dot demokritos dot gr |
| Year published | 2023 |
| Volume and issue | 28(3) |
| DOI | 10.1007/s00769-023-01540-3 |
| ISSN | 1432-0517 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://link.springer.com/article/10.1007/s00769-023-01540-3 |
| Download | https://link.springer.com/content/pdf/10.1007/s00769-023-01540-3.pdf (PDF) |


Abstract

Over the years, risk management has gained significant importance in laboratories of every kind. The safety of workers, the accuracy and reliability of laboratory results, issues of financial sustainability, and protection of the environment play an important role in decision-making in both industry and service-based labs. In order for a laboratory to be considered reliable and safe, and therefore competitive, it is recommended to comply with the requirements of international standards and other regulatory documents, as well as use tools and risk management procedures.

In this paper, information is summarized concerning the terms “risk” and “risk management,” which are then approached through the latest versions of the International Organization for Standardization (ISO) standards ISO 9001, ISO/IEC 17025, and ISO 14001. The process of risk management based on the ISO 31000 standard is described, and the treatment options and techniques that can be applied in the risk management process, based on the latest ISO 31010 standard, are grouped and presented. Additionally, a literature review examines the reasons that have led laboratories to integrate risk management techniques into their quality management systems; the most common errors that occur in the various phases of laboratory testing, together with their causes and consequences; and the proposed treatments. The aim of this work is to highlight significant challenges concerning the need to implement risk management procedures in the daily routine, while warning, raising awareness of, and informing about existing risk management methods that can be implemented in laboratories, methodologically and technically, under internationally recognized and updated standards.

Keywords: risk-based thinking, risk management, risk assessment techniques, laboratories, control measures

Introduction

The issue of risk management has existed for thousands of years. [1] The first recorded practice of risk management dates to about 3200 BC in the Tigris-Euphrates valley, where the Ašipu are considered, among others, an early example of risk management consultants. [2, 3] The Ašipu carried out a risk analysis for each alternative course of action related to the risky event under study and, after completing the analysis, proposed the most favorable alternative. The last step was to issue a final report, engraved on a clay tablet, that was given to the customer. [4]

The difference between modern risk analysts and the Ašipu of ancient Babylon is that the former express their results as mathematical probabilities and confidence intervals, while the latter expressed theirs with certainty, confidence, and power. However, to determine the relationship between cause and effect, both the ancient practitioners and current researchers rely on observational methods. [2] After World War II, large companies with diversified portfolios of physical assets began to develop self-insurance, which covered the financial consequences of adverse events or accidental losses. [5, 6] Modern risk management emerged after 1955 and was first applied in the insurance industry. [7]

The English term “risk” comes from the Greek word “rhiza,” which refers to the dangers of sailing around a cliff. [2] According to Kumamoto and Henley [8], “risk” is defined as a combination of five factors: probability, outcome, significance, causal scenario, and affected population. In a laboratory, “risk” is the probability of a laboratory error that may have adverse consequences [9]. It involves factors that threaten the health and safety of staff, the environment, the organization's facilities, its financial sustainability, operational productivity, and service quality. [10] For testing laboratories, therefore, risk can be seen as the inability to meet customer needs: providing incorrect analytical results or failing to meet accreditation requirements damages the laboratory's reputation. [11]

Plebani [12] defines risk management as the process by which risk is assessed and strategies are developed to manage it. The goal of any risk management process is to identify, evaluate, address, and reduce risk to an acceptable level. [13] According to Dikmen et al. [14], risk management involves three activities: identifying sources of uncertainty (risk identification), assessing the consequences of uncertain events or conditions (risk analysis), and developing response strategies based on the expected results. Then, based on feedback from the actual results and from emerging risks, the identification, analysis, and response steps are repeated throughout the life cycle of a project to ensure that its objectives are achieved. Kang et al. [15] define risk management as the classification, analysis, and response to unforeseen risks that arise during the implementation of a project. Risk management involves maximizing the likelihood and impact of positive events and reducing the likelihood and impact of negative events to achieve the project objectives.

The concept of risk is already familiar to laboratories. It was indirectly included in previous versions of ISO 9001 and ISO/IEC 17025, mainly via preventive actions to eliminate potential nonconformities and prevent their occurrence (i.e., ISO 9001:2008 [16], ISO/IEC 17025:2005 [17]). In the new versions, ISO 9001:2015 [18] and ISO/IEC 17025:2017 [19], however, risk-based thinking is more prominent and explicitly required.

The revised version of ISO/IEC 17025 is aligned with ISO 9001 in terms of management requirements. A laboratory should therefore examine the impact of threats and seize opportunities to increase the efficiency of its management system, achieve improved results, and avoid negative effects. [20] There is no longer a separate clause on preventive action; instead, the concept is expressed through the risks and opportunities approach. The concept of risk is implied in each clause of the standard that relates to factors affecting the validity of results, such as personnel, facilities, environmental conditions, equipment, metrological traceability, and technical records. Moreover, the standard does not require a formal risk management system; each laboratory can choose an approach that suits its needs and can be implemented. [19, 20]

The revised ISO 14001 [20] is also in line with ISO 9001. Risk-based thinking provides a structured approach to managing environmental issues that are likely to affect the organization. Identifying environmental risks and potential opportunities is vital to an organization's success.

Finally, ISO 31000:2018 [21] considers risk management to be the coordinated activities to direct and control an organization with regard to risk. Therefore, for a laboratory to comply with the new versions of the standards, it is important to understand risk-based thinking and to examine the functions, procedures, and activities related to risks and opportunities. To this end, this paper explores the implementation of a risk-based thinking framework in testing and calibration laboratories and highlights the challenges that arise during that implementation.

The risk management process

The risk management process can be applied at all levels of an organization, from strategy to project implementation. In addition, it must be an integral part of management and decision making and be integrated into the structure, operations, and processes of the organization. [9] An integrated risk management process relies on well-structured risk-based thinking that encompasses the whole quality management system (QMS).

In this context, the risk assessment stage consists of three sub-stages: risk identification, risk analysis, and risk evaluation. The purpose of risk identification is to find, recognize, and describe the risks that may help or prevent the organization from achieving its objectives, including risks whose sources are not under its control. [9] According to Elkington and Smallman [22], risk identification is the most important phase of risk analysis, and potential risks should be identified at each stage. Hallikas et al. [23] also state that the identification phase is fundamental to risk management. Recognizing sources of risk allows future uncertainties to be identified and preventive measures to be taken. Risk analysis then assesses the likelihood and consequences of each identified risk. Risk evaluation compares the results of the analysis with the established risk criteria to determine whether additional action is required.
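The three sub-stages can be illustrated with a small risk register. The sketch below makes several assumptions, none of which come from ISO 31000; a laboratory would define its own scales and criteria:

- Likelihood and consequence are each scored on a 1 to 5 scale.
- The risk level is the product of the two scores.
- The acceptance criterion (`ACCEPTABLE_MAX`) is a value chosen purely for illustration.

All risk entries are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical risk criterion: levels above this value require treatment.
ACCEPTABLE_MAX = 6


@dataclass
class Risk:
    description: str
    likelihood: int   # assumed scale: 1 (rare) to 5 (almost certain)
    consequence: int  # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self):
        # Reject scores outside the assumed scale.
        for name in ("likelihood", "consequence"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    def level(self) -> int:
        """Risk analysis: combine likelihood and consequence into one score."""
        return self.likelihood * self.consequence


def evaluate(risks):
    """Risk evaluation: compare each analysed risk with the criterion.

    Returns (description, level, needs_action) tuples, highest level first.
    """
    results = [(r.description, r.level(), r.level() > ACCEPTABLE_MAX) for r in risks]
    return sorted(results, key=lambda item: item[1], reverse=True)


# Risk identification: an illustrative register (entries are hypothetical).
register = [
    Risk("Sample mislabelled at reception", likelihood=3, consequence=4),
    Risk("Balance out of calibration", likelihood=2, consequence=3),
    Risk("Refrigerator failure overnight", likelihood=1, consequence=5),
]

for description, level, needs_action in evaluate(register):
    print(f"{level:>2}  {'treat' if needs_action else 'accept'}  {description}")
```

With these made-up scores, only the labelling risk exceeds the criterion and is flagged for treatment. The other two are accepted but stay in the register for monitoring.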

After completing the risk assessment stage, the risk is treated using one or more of the following options:

- Avoiding the risk.

- Taking or increasing the risk to pursue an opportunity.

- Removing the risk source.

- Changing the likelihood.

- Changing the consequences.

- Sharing the risk (e.g., through contracts or insurance).

- Retaining the risk by a documented decision.

All of the above steps should be monitored and reviewed to ensure and improve the quality and effectiveness of risk management. The results of the process should be recorded and reported throughout the organization. This informs decision making, improves risk management activities, and supports interaction with stakeholders. [9]
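Monitoring and review are easier when each treatment decision is recorded with its rationale and the resulting residual risk. The sketch below is hypothetical:

- It reuses an assumed 1 to 5 scoring scheme.
- It treats avoidance or removal of the source as reducing the residual level to zero, a deliberate simplification.
- Sharing and retention keep the original score.

```python
from dataclasses import dataclass
from enum import Enum


class Treatment(Enum):
    """The treatment options listed in the text."""
    AVOID = "avoid the risk"
    PURSUE_OPPORTUNITY = "take or increase the risk to pursue an opportunity"
    REMOVE_SOURCE = "remove the risk source"
    CHANGE_LIKELIHOOD = "change the likelihood"
    CHANGE_CONSEQUENCES = "change the consequences"
    SHARE = "share the risk"
    RETAIN = "retain the risk by documented decision"


@dataclass
class TreatmentDecision:
    risk: str
    treatment: Treatment
    rationale: str
    residual_level: int


def record_treatment(risk, likelihood, consequence, treatment, rationale, new_value=None):
    """Document a treatment decision and re-score the residual risk.

    Assumes 1-5 likelihood and consequence scales with level = likelihood * consequence.
    """
    if not rationale.strip():
        raise ValueError("every treatment decision must be documented with a rationale")
    if treatment in (Treatment.CHANGE_LIKELIHOOD, Treatment.CHANGE_CONSEQUENCES):
        if new_value is None:
            raise ValueError("new_value is required when changing likelihood or consequences")
        if treatment is Treatment.CHANGE_LIKELIHOOD:
            likelihood = new_value
        else:
            consequence = new_value
    if treatment in (Treatment.AVOID, Treatment.REMOVE_SOURCE):
        residual = 0  # simplification: the activity or the source no longer exists
    else:
        residual = likelihood * consequence  # other options keep the original score in this sketch
    return TreatmentDecision(risk, treatment, rationale, residual)


decision = record_treatment(
    "Sample mislabelled at reception", likelihood=3, consequence=4,
    treatment=Treatment.CHANGE_LIKELIHOOD,
    rationale="Barcode labels printed at reception",
    new_value=1,
)
print(decision.treatment.value, "->", decision.residual_level)
```

Requiring a non-empty rationale mirrors the idea that even retaining a risk should be a documented decision rather than a default.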

Risk assessment techniques

Risk assessment is often expressed in terms of sources, possible events, consequences, and likelihood, and it can be a very difficult process, especially when these relationships are complex. A variety of risk assessment techniques is listed in Table 1. The choice of technique is not arbitrary; several factors must first be taken into account:

- the purpose of the assessment and the needs of stakeholders;
- any legal, regulatory, and contractual requirements;
- the operating environment and scenario;
- the importance of the decision to be made, and any defined decision criteria and their form;
- the time available before a decision must be made;
- the available information and expertise; and
- the complexity of the situation. [24]


The most widely used techniques for identifying risk are failure modes and effects analysis (FMEA) and failure modes, effects, and criticality analysis (FMECA). FMEA/FMECA can be applied at all levels of an organization and at any level of analysis of a system, from block diagrams to detailed elements of a system or steps of a process. [25] This has led to several sub-types of FMEA, such as system FMEA, design FMEA, process FMEA, and service delivery FMEA. As its name suggests, FMEA is a systematic method for identifying potential failure modes of a product or process before they occur and for assessing the associated risk. In FMEA, the system or process under consideration is broken down into individual elements. For each element, the team examines the ways in which it may fail and the causes and effects of each failure. FMECA is an FMEA followed by a criticality analysis, meaning that the importance of each failure mode is also assessed. In FMEA, risk is calculated by multiplying three risk parameters, severity (S), occurrence (O), and detection (D), to produce a risk priority number (RPN = S × O × D). In FMECA, by contrast, failure modes are classified by their criticality. [26] A quantitative measure of criticality can be derived from actual failure rates and a quantitative measure of consequences, if these are known. FMEA can also provide input for other techniques, such as fault tree analysis (FTA).

FTA is a commonly used technique for understanding the consequences and likelihood of risk. It is a logic diagram that represents the relationships between an undesired event and its causes. The undesired event (the top event) is typically a system failure; its causes are typically component failures. Logic gates and events model these relationships. FTA can be used both qualitatively, to identify the potential causes of and pathways to the top event, and quantitatively, to calculate the probability that the top event will occur. [27, 28]
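The RPN calculation described above (RPN = S × O × D) can be sketched in a few lines. The worksheet rows are hypothetical, and the 1 to 10 rating scales are a common convention assumed here, not values taken from the article.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    step: str
    failure: str
    severity: int    # S, assumed 1-10 scale
    occurrence: int  # O, assumed 1-10 scale
    detection: int   # D, assumed 1-10 scale (10 = least likely to be detected)

    @property
    def rpn(self) -> int:
        """Risk priority number, RPN = S x O x D."""
        return self.severity * self.occurrence * self.detection


# Hypothetical worksheet rows for a testing process.
modes = [
    FailureMode("Sample reception", "Sample mislabelled", 9, 3, 4),
    FailureMode("Analysis", "Pipetting volume error", 6, 4, 3),
    FailureMode("Reporting", "Result transcription error", 7, 2, 6),
]

# Highest RPN first, so corrective effort is directed to the top of the list.
ranked = sorted(modes, key=lambda m: m.rpn, reverse=True)
for m in ranked:
    print(f"RPN {m.rpn:>3}  {m.step}: {m.failure}")
```

Different combinations of S, O, and D can give the same RPN. A team may therefore still want to review high-severity failure modes individually rather than rely on the ranking alone.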

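For the quantitative use of FTA, the probability of the top event can be computed from basic-event probabilities through AND and OR gates. The sketch below assumes the following, and real trees with shared causes need more careful treatment:

- Basic events are statistically independent, so an AND gate multiplies probabilities and an OR gate uses 1 − ∏(1 − p).
- The small tree and all probabilities are hypothetical.

```python
from math import prod


def and_gate(*probabilities):
    """Output event occurs only if all (independent) input events occur."""
    return prod(probabilities)


def or_gate(*probabilities):
    """Output event occurs if at least one (independent) input event occurs."""
    return 1 - prod(1 - p for p in probabilities)


# Hypothetical basic-event probabilities per analytical run.
p_calibration_drift = 0.01
p_qc_misses_drift = 0.05
p_wrong_reagent = 0.002
p_sample_swap = 0.003

# Intermediate event: drift occurs AND quality control fails to detect it.
undetected_drift = and_gate(p_calibration_drift, p_qc_misses_drift)

# Top event: an incorrect result is reported (any one branch is sufficient).
top_event = or_gate(undetected_drift, p_wrong_reagent, p_sample_swap)
print(f"P(incorrect result reported) = {top_event:.4f}")
```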
Another technique commonly used in organizations is the failure reporting, analysis, and corrective action system (FRACAS). It is a technique for identifying and correcting deficiencies in a system or product, thus preventing their recurrence. [29] It is based on the systematic reporting and analysis of failures, which makes the maintenance of historical data crucial. The organization therefore also needs a database management system to store all the required data, including records of all reported failures, failure analyses, and corrective actions. [30]
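A minimal FRACAS database might hold three linked tables: failures, analyses, and corrective actions. The sketch below uses Python's built-in `sqlite3` module. The schema, table names, and helper functions are hypothetical and illustrate the idea only; they do not reproduce any particular FRACAS product.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS failure (
    id INTEGER PRIMARY KEY,
    reported_on TEXT NOT NULL,
    item TEXT NOT NULL,
    description TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS analysis (
    id INTEGER PRIMARY KEY,
    failure_id INTEGER NOT NULL REFERENCES failure(id),
    root_cause TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS corrective_action (
    id INTEGER PRIMARY KEY,
    failure_id INTEGER NOT NULL REFERENCES failure(id),
    action TEXT NOT NULL,
    closed INTEGER NOT NULL DEFAULT 0
);
"""


def open_db(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # reject records for unknown failures
    conn.executescript(SCHEMA)
    return conn


def report_failure(conn, reported_on, item, description):
    with conn:  # commits the insert on success
        cur = conn.execute(
            "INSERT INTO failure (reported_on, item, description) VALUES (?, ?, ?)",
            (reported_on, item, description),
        )
    return cur.lastrowid


def add_analysis(conn, failure_id, root_cause):
    with conn:
        conn.execute(
            "INSERT INTO analysis (failure_id, root_cause) VALUES (?, ?)",
            (failure_id, root_cause),
        )


def add_corrective_action(conn, failure_id, action, closed=False):
    with conn:
        conn.execute(
            "INSERT INTO corrective_action (failure_id, action, closed) VALUES (?, ?, ?)",
            (failure_id, action, int(closed)),
        )


def open_failures(conn):
    """Failures that do not yet have a closed corrective action."""
    return conn.execute(
        """SELECT f.id, f.item, f.description FROM failure AS f
           WHERE NOT EXISTS (SELECT 1 FROM corrective_action AS c
                             WHERE c.failure_id = f.id AND c.closed = 1)
           ORDER BY f.id"""
    ).fetchall()


def recurring_items(conn, min_count=2):
    """Items with repeated failure reports; repeats may indicate ineffective past actions."""
    return conn.execute(
        "SELECT item, COUNT(*) FROM failure GROUP BY item HAVING COUNT(*) >= ? ORDER BY item",
        (min_count,),
    ).fetchall()


conn = open_db()
first = report_failure(conn, "2023-03-01", "HPLC pump", "Pressure fluctuation")
add_analysis(conn, first, "Worn piston seal")
add_corrective_action(conn, first, "Piston seal replaced", closed=True)
second = report_failure(conn, "2023-04-12", "HPLC pump", "Pressure fluctuation")
print(open_failures(conn))
print(recurring_items(conn))
```

Keeping the history in one place is what allows the recurrence query to work. A second report for the same item shows up even though the first failure was formally closed.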

Risk identification and treatment in laboratories

Risk identification is the first and most important phase of risk management. [22] In the identification phase, the possible sources of risk which concern the entire activity of the laboratory are recorded. [31]

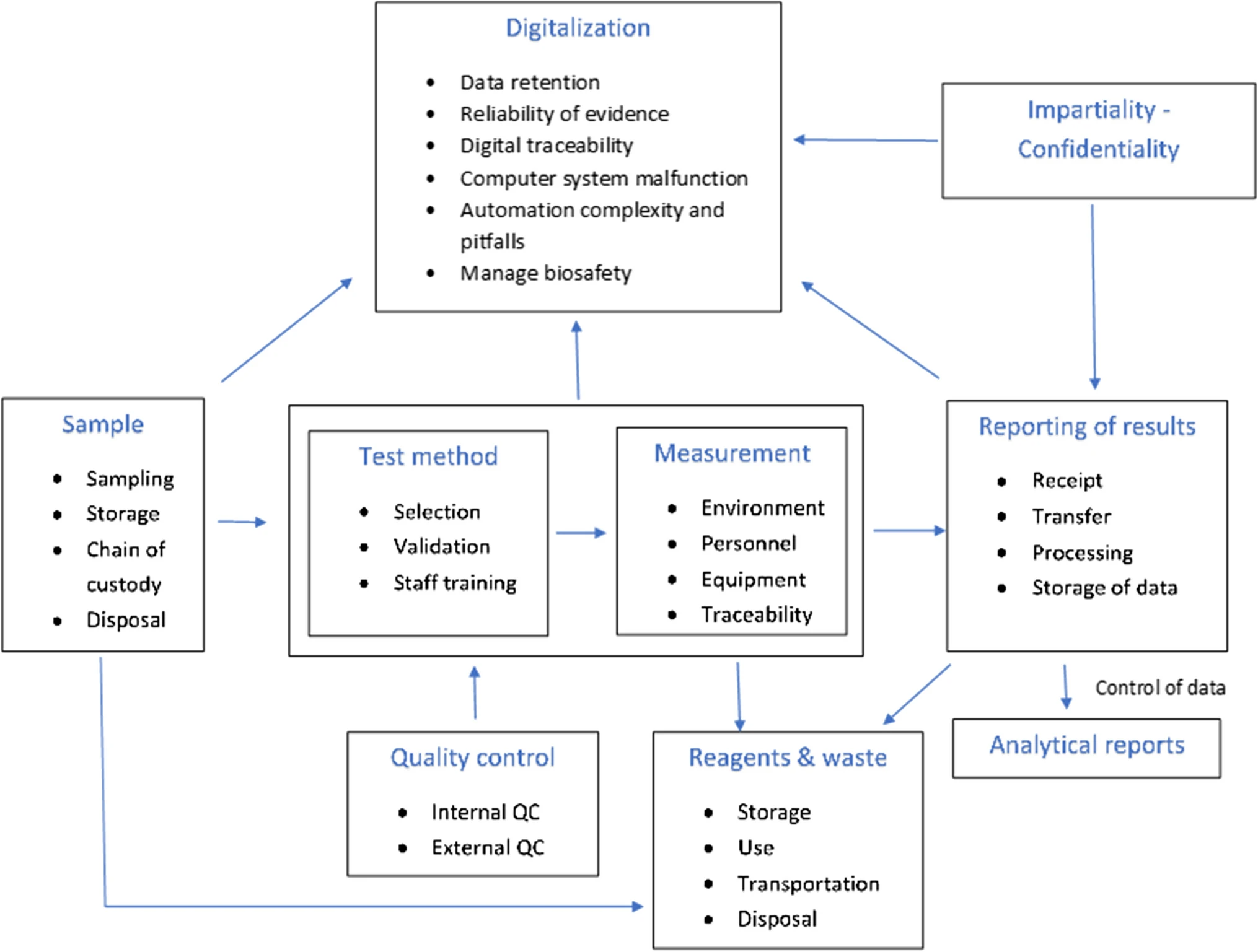

To identify potential sources of risk associated with the testing process, laboratories should create a process map outlining the steps of the testing process, from the test request to the reporting of the test result. This map should include all stages of the pre-analytical, analytical, and post-analytical phases. [10] An example of such a map is given in Fig. 1.


According to Plebani and Carraro [32] and Plebani [13], most errors occur during the pre-analytical phase (46% to 68% of errors). The post-analytical phase follows (19% to 47%), and the fewest errors occur during the analytical phase (7% to 13%). Table 2 outlines the main sources of risk in each of these three phases.


After completing the risk assessment stage, the laboratory must select an appropriate treatment to keep the risk at an acceptable level. [37] The measures taken should then be monitored to evaluate the success of any error reduction effort [39]; this is achieved by monitoring the values of the quality indicators set by the laboratory. [35] Examples of quality indicators are given in Table 3. According to Lippi et al. [38], the most effective strategy for reducing uncertainties in diagnostic laboratories is to develop and implement an integrated QMS.
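Monitoring a quality indicator amounts to computing its value for each period and comparing it with the target the laboratory has set. The sketch below uses one hypothetical indicator (the percentage of samples rejected at reception), a hypothetical 1% target, and made-up monthly counts.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class QualityIndicator:
    name: str
    target_max_pct: float  # hypothetical acceptance limit set by the laboratory

    def evaluate(self, events: int, total: int) -> tuple[float, bool]:
        """Return the indicator value (%) and whether it meets the target."""
        if total <= 0:
            raise ValueError("total must be positive")
        value = 100.0 * events / total
        return value, value <= self.target_max_pct


rejected = QualityIndicator("Samples rejected at reception", target_max_pct=1.0)

# Hypothetical monthly counts: (rejected samples, samples received).
monthly = {"Jan": (12, 1500), "Feb": (25, 1400), "Mar": (9, 1600)}

for month, (events, total) in monthly.items():
    value, on_target = rejected.evaluate(events, total)
    status = "within target" if on_target else "above target, review treatment"
    print(f"{rejected.name}, {month}: {value:.2f}% ({status})")
```

A month above target is a prompt to revisit the treatment chosen for the underlying risk, which closes the monitoring loop described above.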


Possible risks in laboratories are identified below in more detail along with their appropriate treatment.

Sample

Samples are the items that customers send to the laboratory for testing or calibration, so that detailed and reliable results can be provided within a predefined time frame. Therefore, loss of samples, or any other inconsistency involving the samples, their analysis, or related procedures, can have serious consequences. Normally, samples received by the laboratory undergo a sampling process before the analytical procedures. Heterogeneous samples must be homogenized before sampling; otherwise, the results are not reliable, especially when the analytes are present at trace levels. [12]

Sample-related inconsistencies of the pre-analytical phase have already been summarized in Table 2. The strategy to prevent pre-analytical errors consists of five interrelated steps [43,44,45]:

- Develop clear written procedures.

- Enhance professionals' training.

- Automate both support functions and executive functions.

- Monitor quality indicators.

- Improve communication between professionals and encourage interservice collaboration.

The written procedures should clearly describe how the sample is collected, labeled, transported, prepared, and analyzed. To ensure that written procedures are followed consistently, those performing pre-analytical activities must be well trained. They should understand not only the procedures but also their important steps and the consequences of not following the instructions faithfully. [46]

Modern technologies, such as robotics and information management systems, should also help reduce errors. A laboratory information management system (LIMS) is a computerized system that collects, processes, and stores the information produced by the laboratory. Although originally created solely to automate the handling of experimental data, it is nowadays used in many laboratory activities. [47,48,49,50] A typical LIMS provides sample management functions such as sample registration, barcode labeling, and sample tracking. With an integrated LIMS, the chain of custody (CoC) and audit trail records of the samples can be kept systematically. Operations can be improved by adding features such as model management and sample testing. Statistics on the number and type of samples, analytical findings, reporting time, number and origin of customers, and many other attributes can also be easily estimated or tracked. [12, 51] Thus, automating certain steps at pre-analytical workstations reduces the number of people involved in this phase and the number of manual steps required. In addition, barcodes simplify the routing and tracking of samples. Similarly, a computerized order entry system (COES) simplifies test ordering by eliminating the need for a second person to transcribe the order. [35]
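The sample management functions mentioned above (registration, barcode identifiers, tracking, and a chain-of-custody record) can be illustrated with a small in-memory model. `MiniLims` and its methods are hypothetical and are not the API of any real LIMS product. The identifier format (`S` plus six digits) is likewise an assumption.

```python
import itertools
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Sample:
    sample_id: str
    customer: str
    matrix: str
    custody: list = field(default_factory=list)  # append-only chain-of-custody log


class MiniLims:
    """Hypothetical, in-memory stand-in for LIMS sample management."""

    def __init__(self, prefix="S"):
        self._prefix = prefix
        self._counter = itertools.count(1)
        self.samples = {}

    def register(self, customer, matrix, received_by):
        # Sequential identifier, e.g. the value printed on the barcode label.
        sample_id = f"{self._prefix}{next(self._counter):06d}"
        sample = Sample(sample_id, customer, matrix)
        self.samples[sample_id] = sample
        self._log(sample, "registered", received_by, location="reception")
        return sample_id

    def transfer(self, sample_id, to_location, by):
        self._log(self.samples[sample_id], "transferred", by, location=to_location)

    def location(self, sample_id):
        """Current location is the location of the latest custody entry."""
        return self.samples[sample_id].custody[-1]["location"]

    @staticmethod
    def _log(sample, action, person, location):
        # Every change is appended, never overwritten, so the audit trail is preserved.
        sample.custody.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "person": person,
            "location": location,
        })


lims = MiniLims()
sid = lims.register("Client A", "water", received_by="analyst1")
lims.transfer(sid, "fridge 2", by="analyst1")
lims.transfer(sid, "GC-MS bench", by="analyst2")
print(sid, lims.location(sid))
```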

To avoid problems caused by a lack of adequate storage space, the laboratory should regularly assess its capacity to handle samples, including available storage space. The laboratory should therefore know, or proactively estimate, the number of samples it can handle and store at a given time. Then, whenever the number of incoming samples exceeds its handling capacity, measures such as the temporary reassignment of personnel or the arrangement of makeshift storage areas can be taken in a timely manner. [12]

Personnel

The human factor is present at every stage of the pre-analytical, analytical, and post-analytical process. According to Ho and Chen [52], human error is the leading cause of laboratory accidents. Wurtz et al. [53] reported that reduced mental and physical condition, due to exhaustion, is often the cause of laboratory accidents. However, apart from causing accidents, the human factor is also responsible for any mistake that can occur at any stage of the analytical process, from the collection and recording of samples to the processing of results. The occurrence of human error and its subsequent outcome usually cannot be predicted. [12]

The techniques used to assess the contribution of human factors to the reliability and safety of a system are called human reliability analysis (HRA). [24] The first industry to develop and implement HRA was the nuclear industry [54], which concluded that most accidents were due to human error rather than equipment malfunction. Since then, HRA has been applied in many “high-risk” industries, such as aerospace, railways, shipping, automotive, oil and gas, chemicals, military components, and air traffic control. HRA has also been applied in the healthcare sector, in the installation of telecommunications equipment, in the design of computer software and hardware, and in many manual operations such as lathe operation.

To avoid adverse consequences caused by human factors, personnel throughout the organization should be trained appropriately and effectively, so that they can perform procedures in accordance with the requirements of ISO standards or other regulations. [35] Furthermore, to avoid staff burnout, laboratory automation systems should be installed. Examples include electric wheeled vehicle systems for transporting samples and systems with automatic result verification. [55] McDonald et al. [56] argue that, by using a LIMS with highly automated laboratory equipment, researchers can perform repeatable experiments without human intervention. Finally, human error can also be reduced through continuous supervision of staff, combined with raising staff awareness of the causes of errors through regular training. [12]

Reagents and waste

The ever-increasing number of laboratories in recent years has led to an increase in industrial waste, such as solid waste, liquid waste, and sewage. Most liquid waste is hazardous industrial waste, which affects human health and causes environmental pollution; the management of this waste therefore involves many risks. For example, there have been many accidents and injuries due to the mismanagement of chemical waste. [34, 52] Yu and Chou [57] reported that chemical reagents are the most common source of risk in laboratories. Reagents can cause fire, explosion, poisoning, and corrosion, resulting in immediate damage or cumulative pathological changes to the inside and outside of the human body. Additionally, Lin et al. [34] researched fires and explosions involving chemical reagents in university laboratories. They state that many accidents were caused by improper chemical management, including improper storage, use, transportation, and disposal of chemicals.

To mitigate or even avoid the adverse risks of chemicals, proper chemical management is required. For example, chemical reagents should be stored by category, in a controlled environment and in well-ventilated areas. Flammable materials should be separated from non-flammable materials and stored in areas that provide protection from projectiles, and stock checks should be frequent. Special care should also be taken when transporting chemicals. For example, wooden trays could be used for transport, and personal protective equipment should always be worn. [58]

Waste should be recycled and disposed of properly. For example, chemicals should be neutralized before disposal. It is necessary to install smoke alarms, fire extinguishers, surveillance cameras, showers, and eyewash stations, as well as signs clearly marking escape corridors, collective protection, and personal protection. Detailed procedures, clear instructions, and appropriate training are also of crucial importance. [52, 58]

Environmental conditions

Laboratories are exposed to various types of hazards (e.g., biological, chemical, and radioactive), making them highly hazardous environments. [59] Moreover, a laboratory work environment may be exposed to more than one hazard at the same time, which further increases risk. Marque et al. [58] emphasize that chemistry laboratories, for example, are unhealthy and dangerous environments. Those involved in research work are exposed to many potential sources of risk, as they not only handle chemical reagents but also use equipment that is a source of heat and electricity. Chemical laboratories present many potential risks because they contain flammable, explosive, and poisonous chemicals. There are also risks in biological laboratories, especially for staff who handle pathogens, as they are much more likely than others to be infected with an infectious disease. [60]

Exposure to chemicals in the workplace can have adverse acute or long-term health effects. The dangers of potential exposure to chemicals are many and varied: some substances are toxic, carcinogenic, or irritating, while others are flammable or pose a risk of biological contamination. [61, 62] In 2006, Chiozza and Plebani [63] reported that two decades earlier, laboratory workers performed their work without following any safety procedures and thus put themselves in danger. Ho and Chen [52] noted that poor management of chemical waste in universities and non-profit laboratories has caused many accidents over the years. Poor management of chemical waste can harm laboratory workers and lead to environmental pollution. [36]

The U.S. Chemical Safety and Hazard Investigation Board studied 120 laboratory accidents that occurred at universities across the country from 2001 to 2011. [64] From 2000 to 2015, there were 34 laboratory accidents, which caused 49 deaths. These accidents were caused by explosion, exposure to biological agents, exposure to toxic substances, suffocation, electric shock, fire, exposure to ionizing radiation, and various other causes.

In addition to risks to human health, the environment, and laboratory property, there are other risk factors, such as the production of unreliable results and customer dissatisfaction. These also damage the laboratory's reputation, making a risk management process imperative for the sustainability of the laboratory. [12]

To tackle risk effectively, estimates based on effective risk management must be integrated into the analytical framework. [14] A prerequisite for operating under appropriate environmental conditions, in a safe and competent laboratory, is management by executives who can apply risk theory to reduce risk to an acceptable level. Each laboratory should be able to assess the possible occurrence of errors and describe the steps needed to detect and prevent them, in order to avoid future adverse events. [65]

Test methods

It is common practice for appropriate standard or formal methods, whenever available and after verification, to be preferred over methods developed in-house. Otherwise, in-house methods shall be fully validated. In this case, the risk lies in using methods that are improperly or only partially validated. In addition, an analytical method may have critical test steps or parameters, such as the quantity of reagent to be added in a particular step, the reaction time, or a step that must be performed without delay. Omitting or mishandling these critical steps leads to erroneous results.

To avoid the risk arising from the use of in-house analytical methods that are not properly validated, the laboratory should ensure that any method it develops is fully validated for its intended use. To achieve this, a general method validation procedure shall exist, based on relevant international protocols. It should be compiled by certified analysts with extensive experience in method validation. In addition, according to the latest version of ISO/IEC 17025, the method requirements must be specified and recorded in detail during the method validation phase. Checks should then be carried out to ensure that all requirements are met, after which the declaration of method validity should be signed. Critical steps and parameters of the test methods should be clearly marked in the respective written procedures to avoid any misunderstanding or omission by the analysts. Their importance should also be clearly explained during staff training on the method. [12]
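The sequence "record the requirements, check them, then sign the declaration of validity" can be sketched as a comparison of measured performance against pre-recorded limits. The two characteristics (mean recovery and repeatability RSD), their acceptance ranges, and the replicate data are hypothetical. A real validation plan would follow the relevant international protocol and cover more parameters.

```python
import statistics

# Hypothetical method requirements, recorded before validation starts.
REQUIREMENTS = {
    "recovery_pct": (90.0, 110.0),        # acceptable mean recovery range
    "repeatability_rsd_pct": (0.0, 5.0),  # maximum relative standard deviation
}


def performance(spiked_level, replicate_results):
    """Compute mean recovery and repeatability RSD from spiked replicates."""
    mean = statistics.mean(replicate_results)
    sd = statistics.stdev(replicate_results)  # sample standard deviation
    return {
        "recovery_pct": 100.0 * mean / spiked_level,
        "repeatability_rsd_pct": 100.0 * sd / mean,
    }


def check_validation(results, requirements=REQUIREMENTS):
    """Return unmet requirements; an empty list means validity may be declared."""
    failures = []
    for name, (low, high) in requirements.items():
        value = results[name]
        if not low <= value <= high:
            failures.append(f"{name}={value:.2f} outside [{low}, {high}]")
    return failures


# Five hypothetical replicates of a sample spiked at 1.00 mg/kg.
results = performance(1.00, [0.98, 1.02, 1.00, 0.97, 1.03])
print(results, check_validation(results) or "all requirements met")
```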

Measurement

In the measurement process, issues already mentioned above, such as sample treatment, environmental conditions, personnel, and test methods, affect the measurement results.

Another critical aspect is the need for appropriate and valid (certified, if applicable) reference materials. These must be used to establish the metrological traceability of analytical results in accordance with the requirements of ISO/IEC 17025. Two types of reference materials are involved in chemical testing: matrix reference materials, used for method validation, and pure reference standards, used for equipment calibration. The laboratory should maintain procedures for the safe handling, transport, storage, and use of reference materials to avoid possible contamination or deterioration. In addition, the laboratory should have stability data for the working standard solutions prepared from reference standards. Matrix reference materials must be stored strictly in accordance with the storage conditions recommended by the manufacturer, and relevant records should be kept. Before use, at least a visual inspection should be performed to confirm that the materials are intact. [12]

Although the occurrence and subsequent outcome of human error during test execution is usually unpredictable, it can nevertheless be minimized by strengthening personnel supervision and increasing awareness. Actions toward this effort include on-site monitoring, verbal review, and control of experimental records, including sampling, sample analysis, data handling, and reporting. The results of the monitoring actions should be maintained, and whenever a deviation is found, corrective actions should be immediately taken. To avoid the risk that may arise from any inappropriate environmental conditions during measurement, the critical requirements for environmental conditions should be clearly indicated in the written procedures of the method to raise the awareness of the involved analysts. In addition, the continuous monitoring and periodic review of environmental conditions and the maintenance of relevant records are significant prerequisites. These actions not only allow subsequent inspection or evaluation, but they also encourage analysts to stay aware of the appropriate environmental conditions when performing tests. [20, 66]
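Continuous monitoring of environmental conditions can be supported by automatically checking logged readings against the critical limits stated in the method's written procedure. The limits (20 to 25 °C, 30% to 70% relative humidity), the parameter names, and the readings below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float


# Hypothetical critical conditions taken from a method's written procedure.
LIMITS = {"temperature_C": Limit(20.0, 25.0), "humidity_pct": Limit(30.0, 70.0)}


def deviations(readings, limits=LIMITS):
    """Return (timestamp, parameter, value) for every reading outside its limits.

    Parameters without a defined limit are ignored.
    """
    out = []
    for ts, values in readings:
        for param, value in values.items():
            lim = limits.get(param)
            if lim is not None and not lim.low <= value <= lim.high:
                out.append((ts, param, value))
    return out


# Hypothetical log from a laboratory data logger.
log = [
    ("2023-05-02T08:00", {"temperature_C": 22.1, "humidity_pct": 45.0}),
    ("2023-05-02T12:00", {"temperature_C": 25.8, "humidity_pct": 48.0}),
    ("2023-05-02T16:00", {"temperature_C": 23.0, "humidity_pct": 72.5}),
]

for ts, param, value in deviations(log):
    print(f"{ts}: {param} = {value} outside limits, corrective action needed")
```

The log itself is kept unchanged, so it remains available for the later inspection or evaluation mentioned above.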

Quality control

The laboratory must have quality control procedures to monitor the validity of the tests performed and the quality of the results. These procedures are divided into internal and external quality control. The main objective of internal quality control is to ensure the reliability of the results of the analytical process. [67] Internal quality control should be applied daily and for each laboratory test, to identify random and systematic errors as well as trends. For example, in each test, working standards of known concentration should be used to check the validity and accuracy of the results. This can be done through control charts, testing of internal blind samples, replicate tests using the same or different methods, or intermediate checks on measurement equipment. It is also useful for the laboratory to maintain appropriate control of its QMS documents (e.g., procedures, instructions, calibration tables, specifications, alerts) to keep them up to date and valid. The same applies to its records, the actions taken to address risks and opportunities, and its corrective actions.
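Control charts turn daily results for a working standard into a check for random and systematic errors. The sketch below applies two Westgard-style rules, which are a commonly used technique swapped in here for illustration and not specified by the source:

- **1_3s:** one result beyond the mean ± 3s.
- **2_2s:** two consecutive results beyond 2s on the same side.

The centre line and standard deviation come from a hypothetical baseline period.

```python
import statistics


def control_limits(baseline):
    """Centre line (mean) and standard deviation from historical control results."""
    return statistics.mean(baseline), statistics.stdev(baseline)


def check_run(values, mean, sd):
    """Apply two Westgard-style rules to a sequence of control results.

    1_3s: one result beyond mean +/- 3s (suggests random error).
    2_2s: two consecutive results beyond 2s on the same side (suggests systematic error).
    Returns a list of (index, rule) violations.
    """
    z = [(v - mean) / sd for v in values]
    violations = []
    for i, zi in enumerate(z):
        if abs(zi) > 3:
            violations.append((i, "1_3s"))
        if i > 0 and ((zi > 2 and z[i - 1] > 2) or (zi < -2 and z[i - 1] < -2)):
            violations.append((i, "2_2s"))
    return violations


# Hypothetical baseline results for a working standard with nominal value 10.0.
baseline = [9.8, 10.2, 10.0, 9.9, 10.1, 10.0, 9.8, 10.2, 10.1, 9.9]
mean, sd = control_limits(baseline)

# Hypothetical new control results.
run = [10.1, 10.35, 10.32, 9.5]
print(f"mean={mean:.2f}, s={sd:.3f}, violations={check_run(run, mean, sd)}")
```

In this made-up run, the second and third results trigger 2_2s and the fourth triggers 1_3s. Either would normally lead the analyst to reject the run and investigate before reporting.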

External quality control, on the other hand, is performed by analyzing blind control samples sent to the laboratory by external providers of interlaboratory comparisons, to check the accuracy of results independently and objectively. A laboratory participating in an external quality control program must state the tests it performs and, if required, the methods and instruments it uses. [68, 69] Successful participation in external quality control programs provides objective proof of the laboratory's competence to its customers, as well as to the accreditation and regulatory bodies to which it answers. [70] Non-valid results, however, indicate inconsistencies somewhere in the sample analysis chain, e.g., in the test method, equipment, or personnel [71]. Such results must be carefully reviewed and appropriately corrected. These samples must be tested in the same way as routine samples. However, the pre-analytical procedure often differs: the samples may be supplied in a different form (e.g., lyophilized) or in different containers (e.g., different types of tubes) and require different pre-analytical treatment. Therefore, the program organizer must provide clear instructions on the storage and preparation of external quality control samples. [72]
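Proficiency testing results are often reported as z-scores, z = (x − x_pt) / σ_pt:

- x is the laboratory's result.
- x_pt is the assigned value.
- σ_pt is the standard deviation for proficiency assessment set by the provider.

The thresholds below (|z| ≤ 2 satisfactory, 2 < |z| < 3 questionable, |z| ≥ 3 unsatisfactory) are the conventional interpretation used by many schemes, for example in ISO 13528. The round data are hypothetical, and a given provider's own evaluation criteria take precedence.

```python
def z_score(result, assigned_value, sigma_pt):
    """z = (x - x_pt) / sigma_pt."""
    if sigma_pt <= 0:
        raise ValueError("sigma_pt must be positive")
    return (result - assigned_value) / sigma_pt


def classify(z):
    """Conventional interpretation of a proficiency-testing z-score."""
    if abs(z) <= 2.0:
        return "satisfactory"
    if abs(z) < 3.0:
        return "questionable"
    return "unsatisfactory"


# Hypothetical rounds: (laboratory result, assigned value, sigma_pt).
rounds = [(5.2, 5.0, 0.25), (5.6, 5.0, 0.25), (4.1, 5.0, 0.25)]
for x, x_pt, s in rounds:
    z = z_score(x, x_pt, s)
    print(f"z = {z:+.1f} -> {classify(z)}")
```

A questionable or unsatisfactory score would feed into the review and corrective action described above.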

Results reporting

The final step in the testing process is results reporting. The risk at this stage is that incorrect results are sent to the customer because data are falsified or lost during their receipt, transfer, processing, or storage. Even when data-handling software is used, data can be corrupted or modified, whether by error or by deliberate manipulation. [73]

To avoid the risk of reporting incorrect results to clients, the laboratory shall have two- or even three-level data control procedures in place. When software is used to manipulate the data, it should be verified before use and appropriately protected against tampering or accidental modification. For further improvement, these recording processes could be integrated into a LIMS. A LIMS minimizes manual transcription of data and, through appropriate electronic controls, helps ensure data integrity. The system could also record any failure, as well as the corrective actions taken, for future reference. Where a statement of conformity to a specification or standard is requested, the laboratory is recommended to document the decision rule applied, taking the associated risk into account. This enhances the consistency of the reported results and reduces the risk of false acceptance or false rejection. [12]
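
A decision rule describes how measurement uncertainty is taken into account when conformity is stated. The sketch below is minimal and assumes a single upper specification limit and a guard band equal to the expanded uncertainty U, one of the options described in ILAC-G8. The resulting four-zone statement separates results that clearly pass or fail from those lying within U of the limit. The function names and the exact handling of boundary values are illustrative assumptions.

```python
def conformity_statement(measured, upper_limit, expanded_u):
    """Non-binary conformity statement against an upper limit.

    The guard band is taken equal to the expanded uncertainty U (assumption).
    """
    if expanded_u < 0:
        raise ValueError("expanded uncertainty cannot be negative")
    if measured <= upper_limit - expanded_u:
        return "pass"  # conforms even allowing for U
    if measured <= upper_limit:
        return "conditional pass"  # below the limit, but within U of it
    if measured < upper_limit + expanded_u:
        return "conditional fail"  # above the limit, but within U of it
    return "fail"  # exceeds the limit even allowing for U


def simple_acceptance(measured, upper_limit):
    """Binary rule that ignores uncertainty, shown for comparison."""
    return "pass" if measured <= upper_limit else "fail"


for value in (90, 98, 103, 110):  # limit 100, U = 5 (illustrative numbers)
    print(value, simple_acceptance(value, 100), conformity_statement(value, 100, 5))
```

The value 98 illustrates the difference. Simple acceptance reports a pass, whereas the guarded rule reports a conditional pass, which makes the risk of false acceptance visible to the customer.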

To prevent late reporting of results to the client, a LIMS can be combined with a web-based reporting system. This combination is an important tool with which clinical and analytical laboratories can significantly speed up the delivery of test results to their customers, increasing customer satisfaction and trust in the laboratory. [49]

Impartiality and confidentiality

Impartiality and confidentiality are two aspects included in the general requirements of the latest edition of ISO/IEC 17025. The standard places particular emphasis on effectively managing the risks that arise when either is lacking. In particular, the laboratory is obliged to identify risks to its impartiality on an ongoing basis and to demonstrate how it eliminates or minimizes them. For example, it should identify risks arising from its activities, from its relationships with stakeholders (e.g., top management representatives, clients, service providers, etc.), or from relationships among its staff. ISO/IEC 17025:2017 defines impartiality as the presence of objectivity. Threats to it can arise from relationships based on ownership, governance, management, personnel, shared resources, finances, contracts, and more.

However, a lack of impartiality is not always intentional. Most often it arises unintentionally among hard-working, dedicated, honest, and competent employees who try to do their job impartially but still fail. In such cases it is regarded as cognitive bias, which is widespread and implicit. [74, 75] The impact of such implicit bias is significant: it not only affects the examiner's judgment but also gives rise to the phenomena known as “bias cascade” and “bias snowball.” The bias cascade effect occurs when irrelevant information spills over from one stage of the process to another, thereby creating bias. In the bias snowball effect, bias is not merely carried over from one stage to the next. It grows progressively as irrelevant information from a variety of sources is integrated and these sources influence one another. [76,77,78,79] It is therefore essential to take the necessary steps to prevent and address bias.

The laboratory is also obliged to protect confidential information concerning its customers and is responsible for managing all information received or generated during its activities. A lack of impartiality or a breach of confidentiality adversely affects the laboratory's goals and its compliance with the requirements of ISO/IEC 17025. Both should therefore be treated like any other risk, and an effective risk management system is required to deal with them. [11, 80]

This is achieved through top management's commitment to impartiality and through appropriate policies, procedures, and best practices. These are used to monitor the risk of bias and, where necessary, to take action to prevent or mitigate it. Appropriate training is undoubtedly a critical parameter for assuring impartiality and confidentiality. Staff must be trained on cognitive bias so that they understand why appropriate policies, procedures, and practices exist and why they matter. [11] Ensuring confidentiality also requires an effective and clearly defined laboratory management system that provides all relevant information in an accessible format, including documentation of the laboratory's confidentiality policies, procedures, and guidelines. By extension, a written confidentiality policy is essential; it should give clear instructions to all laboratory workers and be signed by them. Confidentiality agreements are standard practice in many businesses and can remain in force indefinitely, protecting the laboratory even after staff leave. They can also be used with outside partners. There should also be a plan that sets out exactly how laboratory personnel should respond if workplace confidentiality policies or procedures are violated.

Paper documents and records should be kept in a secure location inaccessible to non-laboratory personnel and, when no longer needed, destroyed before disposal. Special care should also be taken with mobile phones, to prevent classified documents from being photographed for unintended use. In addition, electronic documentation should be stored on a secure network and viewed only on secure devices.

Digitalization

Modern laboratories increasingly depend on computers and other electronic devices for both their administrative and their analytical functions. For example, the results obtained from laboratory equipment are automatically stored in raw data files. While these technological developments create new opportunities, they also create risks. [73] Potential risks include data loss, breach and modification of raw data files generated by laboratory equipment, and incorrect entry of information into the LIMS. According to Tully et al. [81], the main risks faced by digital forensic laboratories that apply quality standards include:

- The existence of inaccurate or insufficient information in the technical files and the absence of a mechanism to detect subsequent changes in the files.

- Problems with computer system security, power supply, password use for opening files or accessing computers, and data backup processes.

- Absence or insufficiency of detailed procedures for processing digital data, or failure of staff to follow the documented procedures consistently.

- Lack of strong quality control mechanisms and problems with validation of methods.

Computer systems used to store the (raw and processed) data generated by the laboratory can encounter problems that lead to information loss. In some circumstances, the original data files can be recovered from hard drives using appropriate digital methods, but this can be costly and time-consuming. Moreover, even when digital data are preserved, they are malleable and subject to undetectable changes in their content or metadata. The lack of proper data retention procedures makes it more difficult, or even impossible, to retrieve the original files and verify their integrity.
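
One common way to make changes to stored files detectable is to record a cryptographic hash of each raw data file when it is created and to re-check it later. The sketch below assumes SHA-256 from Python's standard `hashlib` module and a folder of raw data files. The function names are hypothetical. The manifest itself must be kept where the data cannot be modified from; otherwise whoever alters a file could also update its recorded hash.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=65536):
    """Hex SHA-256 digest of a file, read in chunks to handle large raw files."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(folder):
    """Map each file's path (relative to folder) to its SHA-256 digest."""
    root = Path(folder)
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(folder, manifest):
    """Compare the folder's current state with a previously saved manifest."""
    current = build_manifest(folder)
    return {
        "changed": sorted(k for k in manifest if k in current and current[k] != manifest[k]),
        "missing": sorted(k for k in manifest if k not in current),
        "added": sorted(k for k in current if k not in manifest),
    }
```

A non-empty `changed` list shows that a file's content no longer matches what was recorded. It does not show who changed the file or how, so it complements, rather than replaces, retention and backup procedures.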

One way to preserve digital data is to create backups. However, routine backup procedures do not have the fidelity of digital preservation mechanisms for some critical data, such as those of forensic laboratories. Data files created by laboratory equipment and stored on computers can be modified by mistake or intentionally. Depending on the data type and the modification method, it may be possible to detect changes or amendments. Normal backup procedures, even those updated to preserve digital forensics data, are not foolproof. Data can be falsified, and the computer system can be refreshed to make it appear that the change was made at some point in the past. [73]

To manage the risks of both inadvertent alteration and intentional breach, one proposed solution is to modernize the traditional practices of tracking data provenance and to use digitized ledgers of global custody. [82, 83] These ledgers can be implemented so that they are tamper-proof and independently verifiable.
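
The core idea behind such a ledger can be illustrated with a hash chain. Each custody entry stores the hash of the previous entry, so altering any earlier record breaks the chain at that point. The sketch below is a minimal, single-process illustration with a hypothetical `CustodyLedger` class and record fields; it is not the ledger design of the cited works. On its own it is tamper-evident rather than tamper-proof. Independent verification would additionally require publishing or externally anchoring the latest hash, which is outside its scope.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash, record):
    """SHA-256 over the previous hash and a canonical JSON form of the record."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class CustodyLedger:
    """Append-only, hash-chained log of custody events (illustrative only)."""

    def __init__(self):
        self.entries = []

    def head(self):
        """Hash of the latest entry; anchoring it externally enables outside checks."""
        return self.entries[-1]["hash"] if self.entries else GENESIS

    def append(self, record):
        prev = self.head()
        record = dict(record)  # shallow copy, detached from the caller's top-level dict
        self.entries.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})
        return self.entries[-1]["hash"]

    def first_invalid_entry(self):
        """Index of the first entry whose link or hash does not check out, else None."""
        prev = GENESIS
        for i, e in enumerate(self.entries):
            if e["prev"] != prev or entry_hash(prev, e["record"]) != e["hash"]:
                return i
            prev = e["hash"]
        return None


ledger = CustodyLedger()
ledger.append({"file": "run1.raw", "action": "acquired", "by": "analyst A"})
ledger.append({"file": "run1.raw", "action": "reviewed", "by": "reviewer B"})
ledger.entries[0]["record"]["by"] = "analyst C"  # simulated tampering
print(ledger.first_invalid_entry())  # -> 0
```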

Automated systems used by specialized laboratory staff to interpret the results of analyses can help maintain consistency and increase the effectiveness of the analysis. However, these systems, including those based on artificial intelligence (AI) and machine learning (ML), can make errors that lead to invalid results. [84] They are also susceptible to bias arising from poorly selected data sets and can lead to misinterpretation when their outputs are not fully understood. [85] For example, when automated AI/ML systems are used to support forensic research and analysis, such as comparing faces in digital video or photographs, algorithms prone to false positives can produce erroneous results. [73]
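
Before such a system is used routinely, its error rates can be estimated on a validation set of comparisons whose ground truth is known. The sketch below is minimal and assumes the system outputs a similarity score and declares a match at or above a chosen threshold. The scores, labels, and function name are hypothetical.

```python
def error_rates(scores, same_source, threshold):
    """False positive and false negative rates of a score-threshold matcher.

    scores: similarity scores produced by the automated system
    same_source: ground truth, True if the pair really comes from the same source
    A "match" is declared when score >= threshold.
    """
    if len(scores) != len(same_source):
        raise ValueError("scores and labels must have the same length")
    fp = sum(1 for s, y in zip(scores, same_source) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, same_source) if s < threshold and y)
    negatives = sum(1 for y in same_source if not y)
    positives = len(same_source) - negatives
    fpr = fp / negatives if negatives else float("nan")
    fnr = fn / positives if positives else float("nan")
    return fpr, fnr


scores = [0.95, 0.80, 0.40, 0.85, 0.30, 0.10]
labels = [True, True, True, False, False, False]
for t in (0.75, 0.90):
    print(t, error_rates(scores, labels, t))
```

Raising the threshold from 0.75 to 0.90 removes the false positive in this toy set but doubles the false negatives. This trade-off is why the operating threshold and its measured error rates belong in the method validation record.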

Financial risk

In recent years, owing to the economic and health crises worldwide, tight financial policies in many public- and private-sector laboratories, along with various bureaucratic obstacles, may have had negative impacts on their overall technological and economic growth. Laboratories should make efforts to cut costs, which presupposes accurate knowledge of their test costs. It is widely acknowledged that many laboratories do not know exactly what the services they provide cost. Many also fail to take early measures to upgrade their equipment and analytical instruments in time, or to replace staff lost through resignations or retirements, possibly because of reduced budgets or state subsidies.

Adequate test cost accounting requires a joint effort between financial experts and those with broad knowledge of laboratory testing. The absence of solid cost accounting may lead to risky incremental costing. Up-to-date cost information for pricing laboratory services, together with adequate planning of the laboratory's financial and human resource needs (e.g., by compiling a business plan), can therefore serve as effective control measures for its financial sustainability and future development. Furthermore, top management representatives play a crucial role in finding or securing financial resources to cover the laboratory's human and infrastructure needs.
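
A per-test cost estimate can be sketched in two parts. The first is direct costs (reagents, consumables, hands-on labour). The second is fixed annual costs (equipment, allocated overhead) spread over the annual test volume. This is a simplified absorption-costing illustration with hypothetical cost categories and figures, not a costing method prescribed by the source. In practice, the actual allocation keys would be agreed with financial experts.

```python
from dataclasses import dataclass


@dataclass
class AssayCostInputs:
    reagents_per_test: float  # reagents and consumables per test
    labour_minutes_per_test: float  # hands-on staff time per test
    labour_cost_per_hour: float  # fully loaded hourly staff cost
    equipment_annual_cost: float  # depreciation plus service contract per year
    overhead_annual_share: float  # rent, utilities, QA, admin allocated to this assay
    annual_volume: int  # tests performed per year


def cost_per_test(c):
    """Direct cost per test plus fixed annual costs divided by annual volume."""
    if c.annual_volume <= 0:
        raise ValueError("annual_volume must be positive")
    direct = c.reagents_per_test + c.labour_minutes_per_test / 60 * c.labour_cost_per_hour
    fixed = (c.equipment_annual_cost + c.overhead_annual_share) / c.annual_volume
    return direct + fixed


base = AssayCostInputs(4.0, 15, 40.0, 20000.0, 10000.0, 5000)
print(cost_per_test(base))  # 4 + 10 + 6 = 20.0
```

With these illustrative figures, halving the annual volume to 2,500 raises the estimate to 26.0, because the fixed costs are spread over fewer tests. This is one reason why prices based only on direct costs can understate the true cost of a test.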

Conclusion

The complex activities and the operational framework of a modern laboratory involve many risks with adverse consequences for testing outcomes, the health of workers, and the environment. For this reason, risk management procedures should be integrated into the laboratory's QMS procedures and become an integral part of its daily routine. Many methods have been developed to identify and assess risk; most of them are described in ISO 31010:2019, while the general principles and process of risk management are set out in ISO 31000:2018.

The major sources of risk in a laboratory are the personnel themselves, the samples to be analyzed, chemical reagents and waste, equipment, test methods, measurements, outdated quality control procedures, results reporting, impartiality and confidentiality, digitalization of data and information, and financial aspects. By implementing an efficient QMS, the laboratory can reduce these risks to a tolerable level. This requires it to maintain clear procedures, continuous supervision and inspections, timely training and continuing education of its staff, and equipment upgrades with system automation. Continuous risk management is necessary to identify emerging priorities and to keep implementing the actions needed for safety and prevention.

Risk management is fundamental to ensuring a safe internal and external laboratory environment and to assuring the delivery of reliable and competent services. Moreover, applying risk-based thinking can improve the outcome of regular assessments. It helps the laboratory identify opportunities to increase the effectiveness of its QMS and to prevent further negative effects.

Abbreviations, acronyms, and initialisms

- AI: artificial intelligence

- ALARP: as low as reasonably practicable

- CoC: chain of custody

- COES: computerized order entry system

- FMEA: failure modes and effects analysis

- FMECA: failure modes, effects, and criticality analysis

- FRACAS: failure reporting, analysis and corrective action system

- FTA: fault tree analysis

- HACCP: hazard analysis and critical control points

- HAZOP: hazard and operability analysis

- HRA: human factor reliability analysis

- ISO: International Organization for Standardization

- LIMS: laboratory information management system

- LOPA: layers of protection analysis

- ML: machine learning

- QMS: quality management system

- SFAIRP: so far as is reasonably practicable

- SWIFT: structured what-if technique

Acknowledgements

Author contributions

ET wrote the main manuscript text. AGF contributed to the writing process while both AGF and ANP made critical corrections. All authors reviewed the manuscript.

Funding

Open access funding provided by HEAL-Link Greece.

Conflict of interest

The authors declare no competing interests.

References

Notes

This presentation is faithful to the original, with changes to presentation, spelling, and grammar as needed. The PMCID and DOI were added when they were missing from the original reference.