Journal:Management and disclosure of quality issues in forensic science: A survey of current practice in Australia and New Zealand

| Full article title | Management and disclosure of quality issues in forensic science: A survey of current practice in Australia and New Zealand |
|---|---|
| Journal | Forensic Science International: Synergy |
| Author(s) | Heavey, Anna L.; Turbett, Gavin R.; Houck, Max M.; Lewis, Simon W. |
| Author affiliation(s) | Curtin University, PathWest Laboratory Medicine, Florida International University |
| Primary contact | s dot lewis at curtin dot edu dot au |
| Year published | 2023 |
| Volume and issue | 7 |
| Article # | 100339 |
| DOI | 10.1016/j.fsisyn.2023.100339 |
| ISSN | 2589-871X |
| Distribution license | Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International |
| Website | https://www.sciencedirect.com/science/article/pii/S2589871X23000268 |
| Download | https://www.sciencedirect.com/science/article/pii/S2589871X23000268/pdfft (PDF) |

Abstract

The investigation of quality issues detected within the forensic process is a critical feature in robust quality management systems (QMSs) to provide assurance of the validity of reported laboratory results and inform strategies for continuous improvement and innovation. A survey was conducted to gain insight into the current state of practice in the management and handling of quality issues amongst the government service provider agencies of Australia and New Zealand. The results demonstrate the value of standardized quality system structures for the recording and management of quality issues, but also areas where inconsistent reporting increases the risk of overlooking important data to inform continuous improvement. With new international changes requiring mandatory reporting of quality issues, this highlights compliance challenges that agencies will face. This study reinforces the need for further research into the standardization of systems underpinning the management of quality issues in forensic science to support transparent and reliable justice outcomes.

Keywords: forensic science, quality issues, error, accreditation, disclosure

Introduction

The origins of modern quality management in forensic science can be traced back to the mid-twentieth century with the introduction of drunk driving legislation compelling testing laboratories to develop protocols for result validation, chain of custody, and sample storage conditions. [1] Since then, the field of forensic quality management has flourished through the development of international networks and professional organizations, reviews and inquiries into best practices, and the standardization of testing systems and methodologies. At the same time, accreditation of forensic science service provider agencies has become the expected norm and increased worldwide. [1] Whether operating as an accredited service or not, the importance of robust and fit-for-purpose quality management systems (QMSs) in forensic science cannot be overstated, given their ability to provide assurance that results being produced by forensic service providers are accurate, consistent, and timely.

In Australia and New Zealand, the major providers of forensic science services are government agencies, and the structure of these providers varies between the countries and states/territories. Services in each jurisdiction are provided by one or more government sectors, with some jurisdictions’ service provided solely by the police, and others split between multiple government sectors, including health, justice, and science. [2] The directors of the government forensic agencies in Australia and New Zealand form the Australia New Zealand Forensic Executive Committee (ANZFEC), which sits as a governing body of the Australia New Zealand Policing Advisory Agency – National Institute of Forensic Science (ANZPAA-NIFS). ANZPAA-NIFS was established in 1992 with a strategic intent to promote and facilitate excellence in forensic science in the region. [2] The cross-jurisdictional oversight of ANZFEC and ANZPAA-NIFS is an important component in the overarching quality management of forensic science service provision in Australia and New Zealand, facilitating collaborative relationships between agencies and the wider forensic community to champion innovation, address priority needs, and promote the ongoing development and quality of the field. [3]

Crucial to the continuous improvement of any QMS, including forensic QMSs, is the identification and prevention of risks that may adversely affect the quality of the product or result. These risks may be identified proactively (preventative) or after an issue has been identified and addressed (corrective). [4] Forensic QMSs, such as those designed to comply with the requirements of ISO/IEC 17025 [4], are required to have processes in place for the management of these laboratory quality issues. In the ISO/IEC 17025 standard, this requirement is detailed under section 7.10 “Nonconforming work,” and the wording used is: “The laboratory shall have a procedure that shall be implemented when any aspect of its laboratory activities or results of this work do not conform to its own procedures or the agreed requirements of the customer.” The instructions for how the nonconformity is to be actioned are provided later in the standard under section 8.7 “Corrective actions” and can be summarized as follows:

- Take action to control the nonconformity and correct it;

- Take action to address the consequences of the nonconformity;

- Analyze the nonconformity to determine the root cause;

- Where necessary, take action to address the root cause to prevent recurrence of the nonconformity; and

- Assess the effectiveness of the new control measures.
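
The five summarized actions can be read as an ordered workflow attached to each recorded nonconformity. The following is a minimal sketch of that idea in Python, not a representation of any agency's system or of the wording of the standard. The names `Step`, `Nonconformity`, `next_step`, and `complete` are hypothetical, the example issue is made up, and the sketch simplifies the "where necessary" qualifier by treating every step as required.

```python
from dataclasses import dataclass, field
from enum import Enum


class Step(Enum):
    """The five summarized actions, in the order they are listed (hypothetical names)."""
    CONTROL_AND_CORRECT = 1
    ADDRESS_CONSEQUENCES = 2
    DETERMINE_ROOT_CAUSE = 3
    ADDRESS_ROOT_CAUSE = 4
    ASSESS_EFFECTIVENESS = 5


@dataclass
class Nonconformity:
    """Hypothetical record of one nonconformity and the notes kept for each step."""
    description: str
    notes: dict = field(default_factory=dict)

    def next_step(self):
        """Return the first step without a note, or None when all steps are done."""
        for step in Step:  # Enum members iterate in definition order
            if step not in self.notes:
                return step
        return None

    def complete(self, step, note):
        """Record a note for a step, rejecting steps taken out of order."""
        expected = self.next_step()
        if step is not expected:
            raise ValueError(f"expected {expected}, got {step}")
        self.notes[step] = note


# Made-up example, for illustration only
issue = Nonconformity("Reagent used past its expiry date")
issue.complete(Step.CONTROL_AND_CORRECT, "Batch quarantined and retested")
print(issue.next_step())  # Step.ADDRESS_CONSEQUENCES
```

Enforcing the order in `complete` is a design choice of this sketch; a real system might allow steps to overlap or to be marked as not required.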

With many forensic service agencies operating QMSs compliant with standards such as ISO/IEC 17025, a wealth of information on the types of issues detected in forensic processes is available, with the potential to be shared and collated for purposes including detecting trends, identifying opportunities for research and development, and facilitating interagency comparison and benchmarking. [5]

However, there is limited published data on quality issues detected within forensic service provider agencies internationally. A fundamental concern for agencies with sharing this data publicly may be the risk of potential misuse or misunderstanding of the information. This concern may be further compounded by the lack of standardization in how that information is collected between agencies and the terminology used, making the sharing of this information difficult. [5] The implications of a lack of a standardized approach to quality issue investigation and management were highlighted in late 2022 by the Commission of Inquiry into Forensic DNA Testing in Queensland, particularly with regard to the perceived risk to transparency where a consistent approach is not apparent. [6]

Recent changes to statutory guidance documents in the UK and to international accreditation requirements highlight that this lack of standardization presents a current and urgent challenge to the field. The UK Forensic Science Regulator Code of Practice (released March 2023 and coming into effect in October 2023) requires the forensic unit (defined within the Code as “a legal entity or a defined part of a legal entity that performs any part of a forensic science activity”) to inform the Regulator of non-conforming work or “quality failures” that have the potential to attract adverse public comment, be against the public interest, or lead to a miscarriage of justice. [7] The American National Standards Institute - National Accreditation Board (ANAB) published AR 3125:2023 Accreditation Requirements for Forensic Testing and Calibration Laboratories in February 2023, with a new clause for proficiency testing requiring the laboratory to notify ANAB within 30 days when an expected result is not attained. [8] Both of these documents go further than the ISO/IEC 17025 standard in providing examples of the types of “non-conforming work” or “unexpected results” that would compel reporting. However, the examples are not exhaustive in what might be seen in an operational forensic agency and thus are open to interpretation. The subjective application of requirements such as these invites risks. For example, the over-reporting of unwarranted issues may choke the resources of both the reporting agency and the regulator or accreditation body being reported to, preventing timely and effective carriage of justice and action on critical findings. Under-reporting of critical issues, even if done with no malintent, risks not only the perceived integrity of the forensic agency, but also the non-identification of systemic issues potentially threatening justice outcomes and public confidence in forensic science.

The matter of mandatory reporting is complicated further by the fact that some quality issues only become apparent a considerable time later, whether because they went undetected at the time or because they were not categorized as quality issues until technology or reporting thresholds evolved. Examples include the reanalysis of cold case DNA evidence collected many years prior, when testing methods were not sensitive enough to detect underlying contaminants [9], and retrospective reviews of method validation studies that identify deficiencies in the analysis used to set analytical thresholds that have been in operation for a considerable time. [6] Therefore, the sense of security that a system of mandatory reporting provides to current investigations or court matters may be false, as undetected and unreported issues will inevitably still exist even in the most willing and compliant of environments.

As the foundation to a program of research investigating quality issues in forensic science, a survey was designed and conducted on the current state of practice in forensic quality management and the management of quality issues amongst the government forensic service provider agencies of Australia and New Zealand. The objective of the survey was to gain perspective on the diversity of forensic services offered by these agencies and the quality structures surrounding them, along with insights into the current categorization, management, and disclosure of quality issues within those agencies. With the focus on transparent reporting of quality issues in forensic science now gaining momentum in international jurisdictions, the issue of effective communication on these issues is a clear and present concern to the field. This paper presents the results of this survey within the context of current practice in forensic quality management. Further, we discuss these results and their significance in the environment of operational forensic science service provider agencies.

Survey methodology and participant demographics

The survey was designed and conducted using the online tool Qualtrics for ease of use and submission by participants.

The target participants for this survey were forensic quality practitioners or forensic practitioners involved in the management of quality issues working in the government forensic science service provider agencies of Australia and New Zealand. A request to approach suitable participants was made to ANZFEC via ANZPAA-NIFS. The agencies with representatives on the Quality Specialist Advisory Group (QSAG) of ANZPAA-NIFS were targeted. Permission was granted by all of the 15 agencies represented on QSAG to allow the research team to approach their staff. An invitation to potential participants was circulated to the QSAG members by ANZPAA-NIFS on behalf of the research team, and the invitation stated that it could also be forwarded to other suitable practitioners within the agency. Invitees who responded positively were forwarded a participant information sheet and participation consent form for return. Upon confirmation of consent, a unique alphanumeric identifier was assigned and forwarded to the participant along with a link to the online survey tool. The unique identifier was generated using online random number and letter generators and was to be used to ensure that each survey response was deidentified to anyone outside of the research team.
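
The identifier step can be illustrated with a short Python sketch. This is not the method used in the study, which relied on online random number and letter generators; it swaps in Python's standard `secrets` module to show the same idea. The function names, the alphabet, and the eight-character length are assumptions, since the format of the identifiers is not described.

```python
import secrets
import string

# Assumed alphabet: uppercase letters and digits (the real format is not stated)
ALPHABET = string.ascii_uppercase + string.digits


def make_identifier(length=8):
    """Return one random alphanumeric identifier of the given (assumed) length."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def assign_identifiers(n, length=8):
    """Return n distinct identifiers, redrawing on the rare chance of a repeat."""
    ids = set()
    while len(ids) < n:
        ids.add(make_identifier(length))
    return sorted(ids)


# One identifier per consenting participant; the values differ on every run
participant_ids = assign_identifiers(16)
```

For responses to remain deidentified to outsiders, the mapping from identifier to participant would need to be held only by the research team.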

The participants were required to enter their unique identifier on the front page of the survey and to confirm consent before they were able to progress into the survey questions. Other than the unique identifier, no identifying information on the participants or the agency they represented was collected in the survey tool. The survey consisted of multiple sections, with certain questions only being displayed based on previous answers. The survey was designed to allow participants to return to previous questions and amend responses or pause and restart the survey at any time prior to final submission. The section designations in the survey are reflected in the headings presented in the results below.

Survey questions were designed as either requiring selection from a set of provided options (nominal scale), or as free text fields for unstructured data collection. All questions with options provided also included a space for additional free text comment to be made. All questions were set as forced response.

The full survey logic and functionality were pretested prior to deployment by volunteers outside of the research team who were familiar with the subject matter.

Uptake for the survey was successful with 16 participants from 13 of the 15 QSAG agencies responding. The participants represent all of the states and territories of Australia (including the federal jurisdiction), and New Zealand. All participants in the survey self-declared as being employed in a quality manager or quality practitioner role, with more than 85% of respondents indicating that their quality management role was responsible for multiple forensic science disciplines.

Survey results and discussion

Accreditation of disciplines

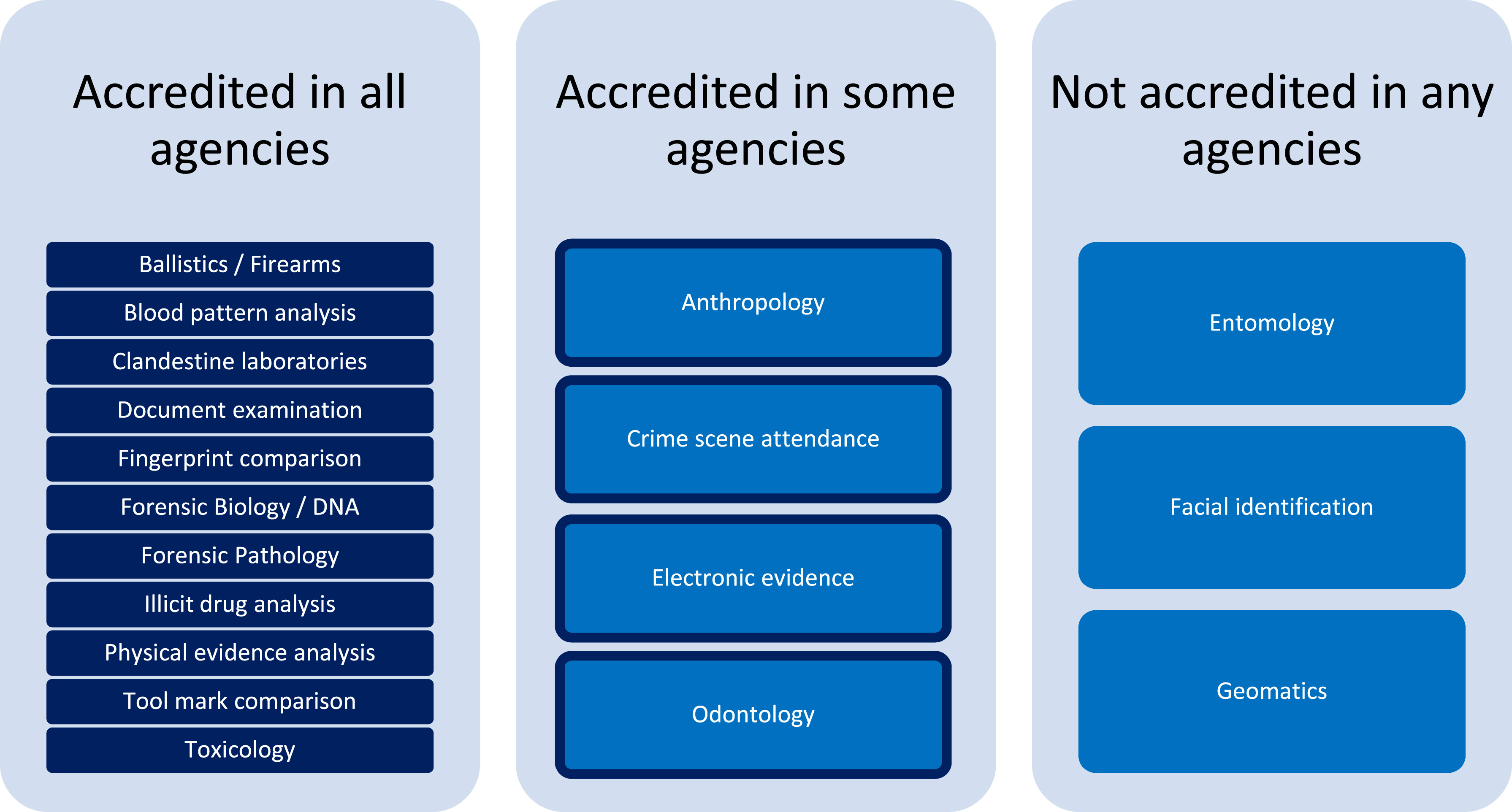

With accreditation of ANZFEC agencies being a mandated aim for the region [1], it is not surprising that all agencies represented by the survey participants are accredited to ISO/IEC 17025. However, the responses presented in Fig. 1 show that, of the various forensic disciplines that are represented across the agencies surveyed, some are not accredited across all the agencies where that discipline is offered and, in a few cases, none of the agencies where the discipline is available are accredited for that service.

The survey responses with regard to the non-accreditation or partial accreditation of some disciplines may be indicative of the particular challenges associated with these areas. For example, in the case of anthropology and odontology, very few of the agencies surveyed were responsible for the provision of these services (three agencies and two agencies, respectively), and all are part of multidisciplinary forensic service agencies. The highly specialized nature of these fields, coupled with accreditation for these disciplines being under a different international standard (ISO 15189) to the other disciplines within the same agencies (ISO/IEC 17025), may factor into business decisions not to pursue accreditation. Entomology is a similarly rare field amongst the surveyed agencies, offered by only one. In the case of disciplines where there is a sole subject matter expert, the logistics of requirements such as technical peer review of results can be a barrier to accreditation where staffing resources are limited, and may lend a preference to testing the validity of the science and results as part of the court evidence process. [10]

The field of electronic evidence deals in an environment of rapidly changing technology and evidence types. This presents other unique challenges from an accreditation perspective; for example, the swift adoption of new and novel analysis techniques may preclude lengthy validation studies or the ability to source appropriate and relevant proficiency testing programs. [11] The field of facial identification (or facial image comparison) faces similar challenges to electronic evidence management, with the rapidly changing nature of image samples being presented and technology available to perform analysis. Further, the contrasting techniques of manual examiner comparison and automated facial recognition, along with the diversity of applications the techniques may be used for in different jurisdictions, can make the development of standardized practice difficult, although expert scientific working groups are progressing this space. [12,13]

Quality issue management

What is considered a “quality issue”?

Evidence of how the forensic facility identifies and mitigates risks to the quality of results and how it identifies and manages nonconforming work is an accreditation requirement, meaning that records must be kept and available. Whilst the standards are explicit in the necessity for these quality issues to be noted, managed, and recorded, the nature of what constitutes a “quality issue” worthy of recording is less clear. [4,14]

All participants indicated that their agency has a documented process for the management of quality issues (including one which is a new document currently being drafted). Other than the one in draft, all participants also noted that the documented process included instructions for the logging/recording of quality issues.

Participants were asked to indicate which types of issues would generally be considered as a “quality issue” that needed to be managed/logged against a list of provided examples (Table 1).

The responses across the participants demonstrate high agreement in some categories, with all participants managing/logging customer complaints and 15 out of the 16 participants agreeing that non-adherence to documented procedures, correction of issued results, and non-conformances identified at audit were all considered “quality issues” requiring management/logging. The single negative response for each of these categories was received from participants representing the same agency but responsible for different disciplines. One of these disciplines within the agency is not accredited to ISO/IEC 17025, which may influence the response given.

Participant responses were split on whether delayed results (exceeding agreed reporting time to client) would be managed/logged as “quality issues.” The issue of timely provision of results has been noted as an area of concern for the industry for some time, both in the risk of delays to the justice system [15,16] and as a risk to the provision of quality scientific services. [17] This divide in answers may be due to the nuances of individual agency client service level agreements (or equivalent arrangements) where there are either no agreed reporting times specified, or an accepted percentage of delayed reports allowed before it is considered a nonconformity. To analyze the root cause of this discrepancy between participants, this category will be explored further in future research stages.

Participants were provided with an opportunity to list any other types of issues, in their own words, that would generally be considered as quality issues for management/logging. Comments were received from three participants, and included:

- “Risk identified”;

- “Planned deviation from process”; and

- “Issues relating to chain of custody, training, procedural errors or deficiencies, fieldwork or environmental conditions, facilities and software (upgrades, limitations, outages).”

Those responses where limited detail was provided will be explored further in later phases of the research.

How are “quality issues” recorded?

Much like the category of issues which require management/logging, the specifics of how these issues are to be recorded are also undefined in the standard requirements. The predominant tool (or form) associated with QMSs prescribed by ISO/IEC 17025 and ISO 9001 [14] (along with other management systems, such as ISO 45001: Occupational health and safety management systems [18]) is the corrective action request (CAR). A CAR is a mechanism of documenting a problem or potential problem identified in the management system which requires that the root cause of the problem be mitigated or removed to prevent recurrence. [19] Generally, the CAR process will also include documentation of the details of the investigation conducted to determine the root cause of the problem, actions taken to address the root cause, and evidence to demonstrate that the effectiveness of those actions has been reviewed and additional actions taken as required.

A root cause is the underlying issue that resulted in the non-conformance or issue. Root cause analysis (RCA) is the process used to identify the root cause of any issue. [20] There are several approaches to conducting an RCA involving a variety of methods and tools. [21] RCA can be considered a component of problem-solving, with the outcome providing information on the fundamental cause of an issue to be removed or mitigated to facilitate continuous improvement of the QMS.

CARs may be recognized by other names depending on the organization, for example, an “opportunity for improvement request” or a “continuous improvement request”. However named, the process of having a mechanism for the documentation of issues detected (actual or potential) in the QMS and the actions taken to address these is a critical component of these systems and one of the most valuable tools in the continuous improvement cycle, if utilized effectively. [21]

In the context of forensic science service provision, maintaining records of issues identified is not just a necessary feature of the QMS, but also crucial in demonstrating the robustness of the analysis process and subsequent results being provided. [9] Transparency with regards to the analytical and interpretive process involves not only demonstrating the scientific validity of the methodology being used but also the clear and accurate communication of any limitations to be associated with the results provided, which includes the clear disclosure of issues that were identified during the process and how these may influence the validity of the results obtained.

As noted above, the specifics of what types of issues are recorded and how these issues are recorded are not defined in the guiding management system standards. All forensic service providers operating to one of the ISO standards will have some version of a CAR in their systems; however, this may not be used to record all issues detected, and alternative methods of documentation will be used either instead of, or in addition to, the CAR.

To better understand the various means used to record the types of quality issues identified in Table 1, survey participants who indicated that a particular issue would be logged were asked to indicate how each type of issue would be recorded within their agency. To accommodate types of issues which may be recorded in different ways dependent on the particular situation, participants were able to select multiple methods of recording for each issue type, as applicable. The responses were grouped into the following categories for the purposes of review and discussion: Reporting and delivery of results; Quality assurance programs; Equipment issues; Audits and documented procedures; and Customer feedback and monitoring of court testimony.

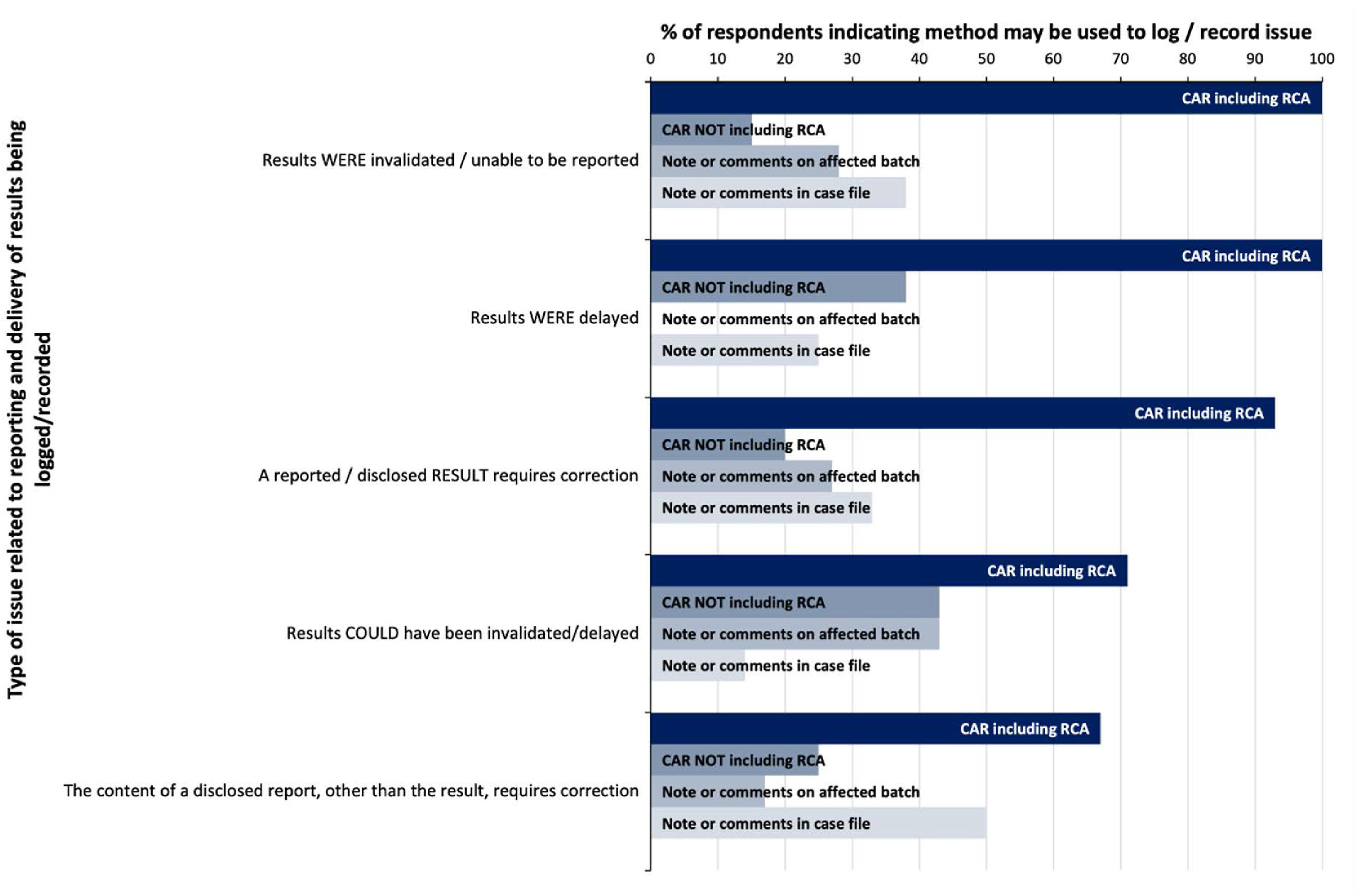

Reporting and delivery of results

The provision of accurate, timely, and unambiguous results is a fundamental measure of the successful provision of forensic science services, and therefore issues that are identified which affect the delivery of those results require close attention. [22] Issues related to the reporting and delivery of results were among the highest logged by participants in the survey (Table 1), with the highest proportion of participants indicating that issues where a reported or disclosed result required correction (15 out of 16) would be recorded. The next two highest recorded issues included scenarios where results were invalidated or unable to be reported (13 out of 16), and where results could have been invalidated or delayed (14 out of 16).

However, whilst all respondents logging these issues indicated that a root cause analysis would be performed in the case of invalid or unreportable results, only 72% indicated the same would be performed for results that could have been delayed (Fig. 2).

Although only half of the participants indicated that actual delayed reporting of results was deemed a quality issue requiring recording (Table 1), all of those participants who do record actual delays would document these as a CAR (or equivalent) and perform root cause analysis (Fig. 2).

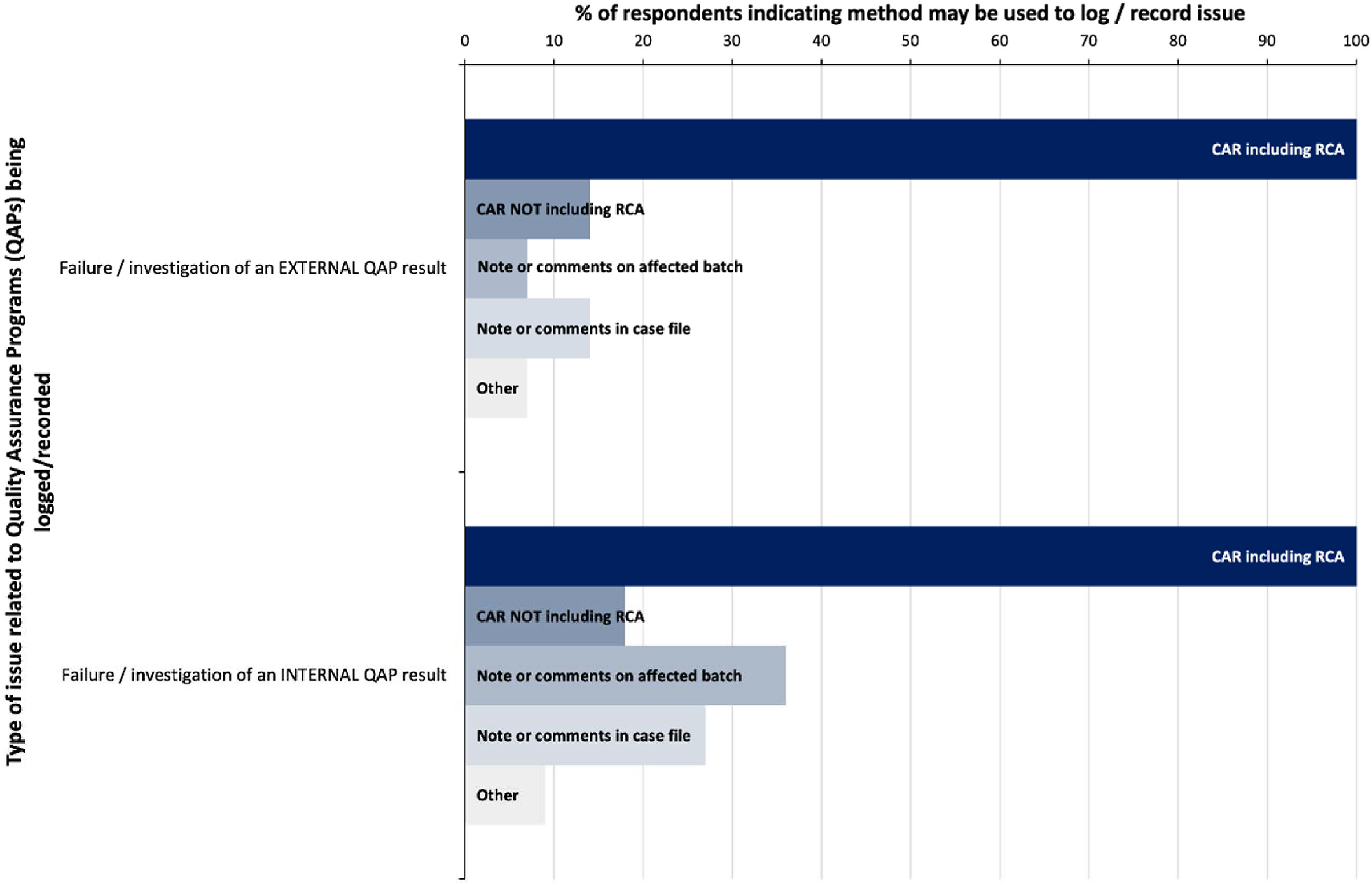

Quality assurance programs

Quality assurance programs (QAPs) are used by forensic science agencies to test the systems and processes in place. [22] The nature of QAPs can vary greatly and will be dependent on the nature of the services being provided. QAPs can be both internal (programs developed in-house to monitor system capability and competency such as testing of samples where ground truth is known by the program administrator or monitoring of contamination minimization processes through environmental samples) or external (programs sourced externally such as commercial proficiency testing programs or inter-laboratory comparison exercises). When used effectively, QAPs assist the agency to identify vulnerabilities in the quality system and are a valuable tool in the proactive identification of risks to the quality of results. Just as importantly, QAPs can highlight successes in the quality system and provide tangible evidence of the robustness of the procedures in place to protect the agency's quality of output.

Where the results of QAPs indicate a possible failure or risk in the system this may be deemed a quality issue for investigation, and in the case of external QAPs, 14 of the 16 respondents indicated this would be recorded. By comparison, only 11 respondents would log the same for an internal QAP (Table 1). All of those who would log these issues indicated that they may involve root cause analysis in the management of the issue (Fig. 3).

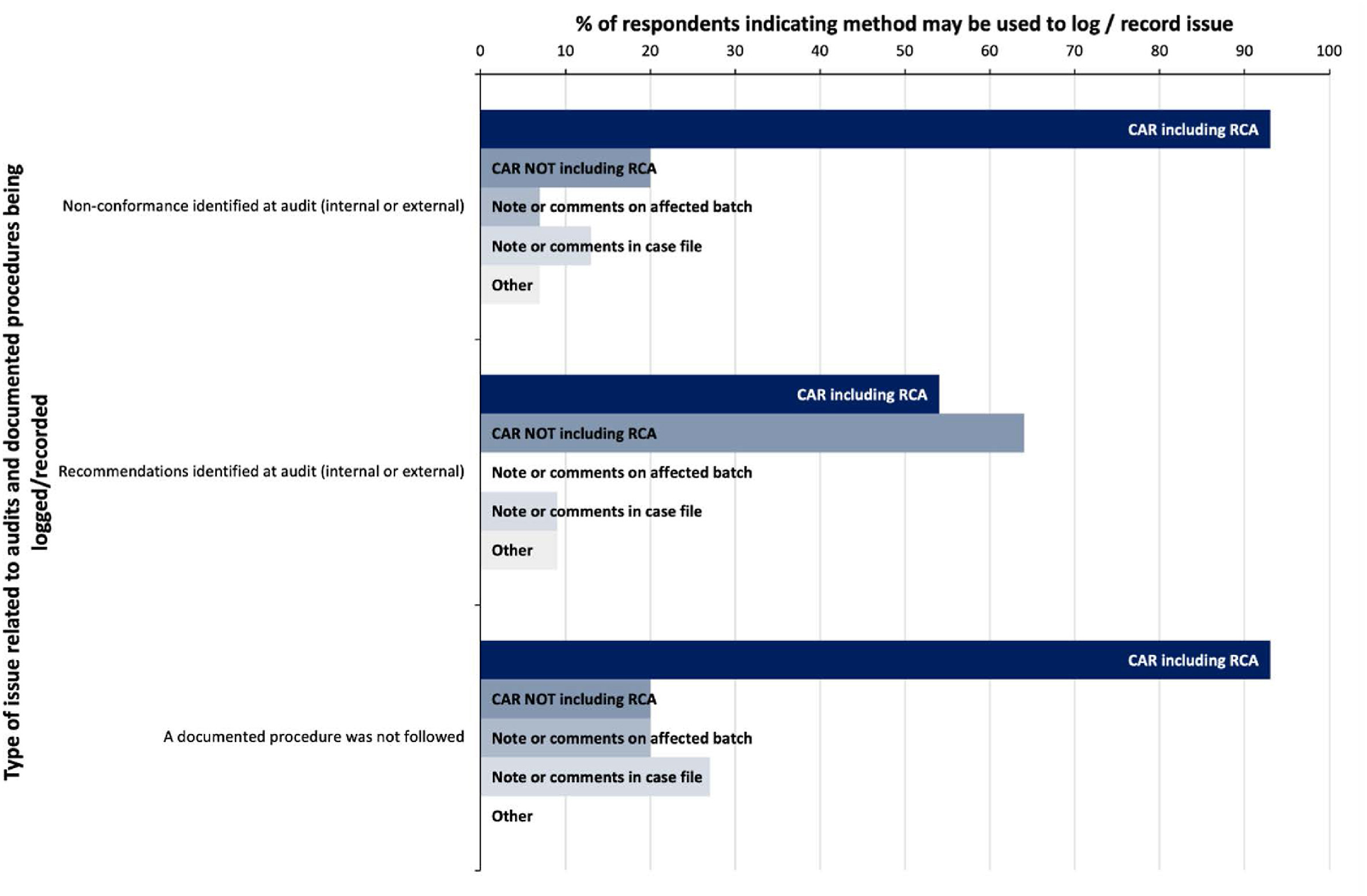

Audits and documented procedures

Audits are a flagship component of any QMS as a means of monitoring the compliance of the agency's system against the standard it has been designed to meet, as well as how that system aligns with the actual processes and procedures being performed within the agency. [22] A robust forensic science audit program will include review of both the competence of the QMS and processes, as well as technical (scientific) competence. Where the actual practice does not conform with the required (or documented) practice, a non-conformance will be noted by the auditor to be investigated and addressed by the auditee.

Supporting the QMS is the requirement for the agency to document the system, including the processes and procedures used, as part of the evidence of its compliance with the guiding standard. [4] It is therefore the expectation that actual practice will be performed in accordance with the documented practice. Misalignment of these two factors can be indicative of risks to quality outputs, introducing the potential for inconsistent application of processes, or procedures which are outside the scope of required standards. Fifteen out of 16 survey respondents indicated that they would log both non-conformances identified at audit and where a documented procedure was not followed as quality issues (Table 1), and over 90% of those indicated that the investigation may include a root cause analysis (Fig. 4). This high level of recording and investigative analysis of issues related to audit findings and documented procedures suggests that surveyed agencies place great significance on these types of issues as a symptom of risk to quality.

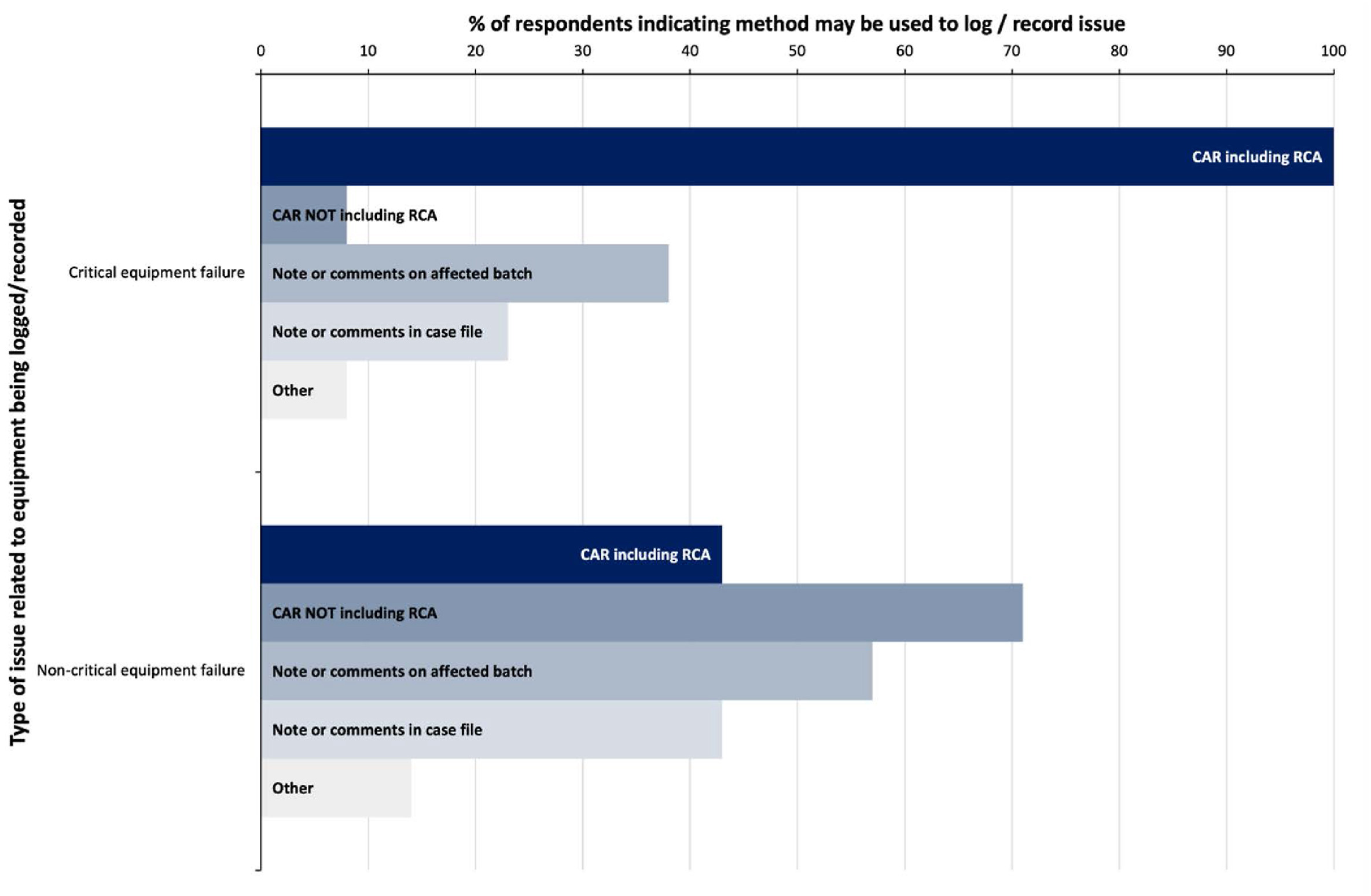

Equipment issues

Equipment involved in the provision of forensic science services can be loosely grouped into the categories of "critical" and "non-critical." The distinction between the two is the degree to which the failure of the equipment impacts on the validity of results. For example, a genetic analyzer used to perform DNA analysis is critical to the DNA testing process, whereas the flask used to measure a general cleaning reagent for dilution is non-critical.

Equipment which has an effect on the validity of results must be considered with regard to its selection, implementation, performance monitoring, and ongoing maintenance. [4] However, dependent on the criticality of the piece of equipment, the procedures required to assure this may vary. In the survey, participants were asked to consider whether equipment failure would be considered a quality issue and whether the distinction of critical versus non-critical became a factor. Thirteen out of 16 participants would record a critical equipment failure, whilst only seven would record failure of non-critical equipment (Table 1). Of those, 100% would consider logging the critical failures as a CAR with a root cause analysis, whilst non-critical equipment failures are more likely to be managed without root cause analysis (Fig. 5).

This demonstrates the perceived distinction between critical and non-critical with regard to equipment used in the forensic process and the impact it has on the provision of a quality service. In this survey, the terms "critical" and "non-critical" were not defined for the participants; the responses received are therefore subjective and depend on each participant's understanding of these terms. How these terms are defined and designated for the purposes of managing quality issues will be explored in future studies.

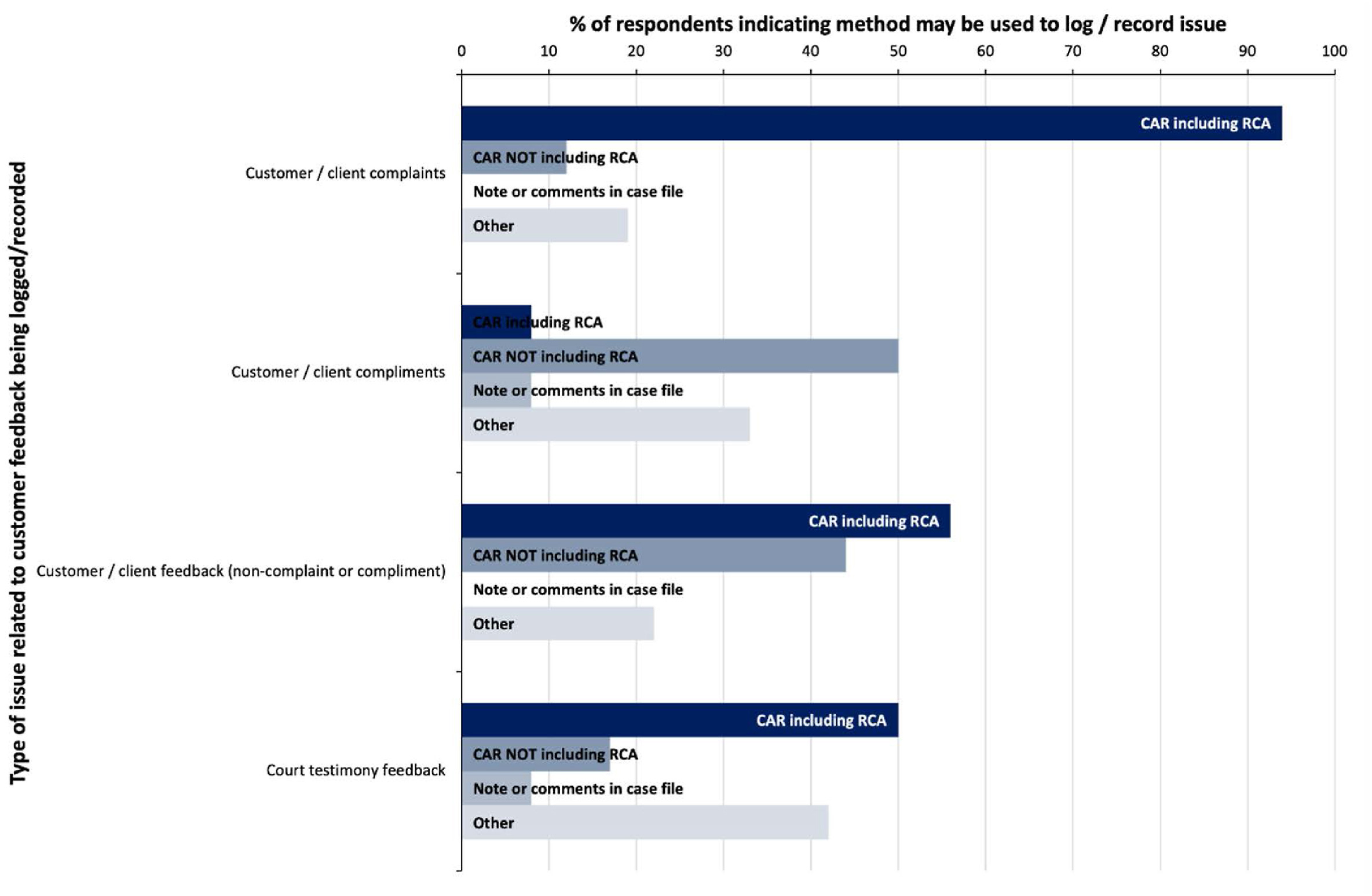

Customer feedback and monitoring of court testimony

For any service-based business, a strong knowledge of customer needs and expectations is crucial to designing fit-for-purpose service delivery strategies. Seeking regular and varied customer feedback to measure how the service aligns with those expectations is a standard feature of such models. [14] In that respect, forensic science service provision is a complex beast. In Australia and New Zealand, the majority of forensic science services are operated by government agencies to provide for the jurisdictions they represent, so when it comes to “seeking customer feedback,” who do we define as the customer? For the purposes of this standard requirement, the baseline used by most agencies will be those individuals or institutions who have a direct liaison with the outputs of the agency, for example the investigators who submit the exhibits for testing or the legal counsel who receive the reports for trial and call the analysts as expert witnesses. Other sources of external feedback may also be incorporated, such as independent reviews of the service provider or feedback related to the forensic service in the form of legal judgement transcripts.

“Customer feedback” for the purposes of this survey was categorized as either complaints or compliments/feedback (non-complaint or compliment). “Customer complaints” was the only type of quality issue surveyed which was noted as being logged by 100% of participants (Table 1), with almost all respondents indicating that root cause analysis would be considered in the event of a complaint (Fig. 6). The use of CARs as a logging tool across all types of customer feedback was much lower than amongst other types of issues, and a higher proportion of respondents indicated that other methods of logging customer feedback (inclusive of complaints, compliments and feedback) would be used, generally being some form of customer feedback database.


The role of the forensic scientist as expert witness is often the ultimate conclusion in the forensic service process, and one which is responsible for translating analytical methodologies and the significance of the results into a public forum to be evaluated in the context of an adversarial legal system (in Australia and New Zealand). Thus, the monitoring of forensic expert court testimony plays an important part in quality assurance of the forensic process. Although court testimony monitoring is a requirement for forensic facilities accredited to ISO/IEC 17025, how this is to be managed is not specified. [8,23] Individual agency procedures may account for the few participants who did not indicate that “Court testimony feedback” would be logged as a quality issue. How this feedback is captured and managed will be investigated further in subsequent stages of this project.

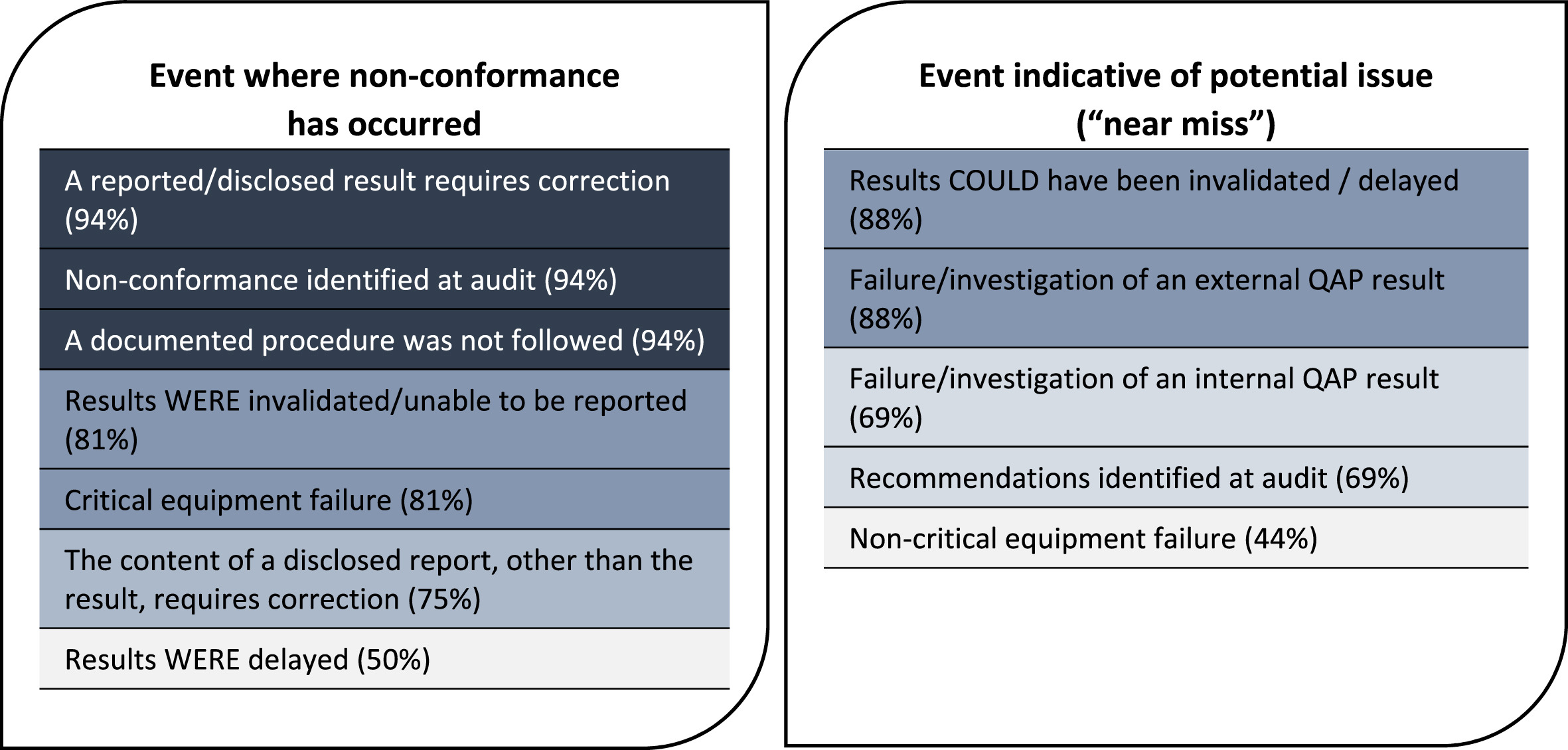

Recording of "near miss" events

A number of the issues participants were asked to respond to may be considered as indicative of a potential issue in the quality system that requires action, but not a scenario where an actual non-conformance has occurred. These types of events may be referred to as “near miss” events. The results of the survey indicated that the proportion of participants who would consider logging “near miss” events as quality issues is notably lower than for events where a non-conformance has occurred (Fig. 7).


The lists in Fig. 7 were also compared with the corresponding data for each issue on whether root cause analysis may be used in the investigation from Fig. 2, Fig. 3, Fig. 4, Fig. 5, and Fig. 6. For the events where a non-conformance has occurred, the combined average of responses indicating that root cause analysis may be used across all events listed was 92%, whereas the average for the listed “near miss” events was only 74%.
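As a minimal sketch of how such a comparison could be computed, the snippet below assumes that "combined average" means the unweighted mean of the per-event percentages; the article does not state the exact method. The function name, event labels, and all numeric values are hypothetical placeholders for illustration and are not survey data.

```python
from statistics import mean


def combined_average(percent_by_event):
    """Unweighted mean of per-event percentages (an assumed method)."""
    return mean(percent_by_event.values())


# Placeholder values for illustration only; NOT taken from the survey.
non_conformance = {"event A": 90.0, "event B": 100.0}
near_miss = {"event C": 70.0, "event D": 80.0}

# Difference, in percentage points, between the two groups of events.
gap = combined_average(non_conformance) - combined_average(near_miss)
```

An unweighted mean treats every event type equally. If the number of respondents differed between questions, pooling the raw response counts instead could give a slightly different figure.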

The differences in the rates of recording and level of investigation used for “near miss” events compared with other quality issues highlight an important area for consideration with regard to quality issue management in forensics. Whilst there can be little argument against the importance of the identification, analysis, and correction of actual non-conformances within the QMS, a core feature of robust quality management is the design of systems to identify risks to quality so that they can be proactively strengthened to prevent non-conformances from occurring in the first place. [24] This is where the identification of “near miss” events becomes critical to inform continuous, preventive improvement of the quality system, and consideration should be given to applying root cause analysis at this stage in order to determine the most effective means of preventing issues from occurring. Failing to log and action “near miss” events will likely lead to an increase in actual non-conformances, some of which could have been prevented.

Terminology

Inconsistent definitions of terminology related to “error” and associated issues in forensic science have been noted as a key challenge to transparent communication on this topic, not just with end users of forensic information and the public, but also between forensic agencies themselves. [25] Only two participants in this survey indicated that their agency has a documented glossary of terms related to quality issues, with a further seven respondents indicating that “some” terms are defined within relevant standard operating procedures as appropriate.

All respondents were asked to define (based on their own knowledge and expertise) a number of terms commonly associated with quality issues, with selected results for some of the terms surveyed presented in Table 2.


References

Notes

This presentation is faithful to the original, with changes to presentation, spelling, and grammar as needed. The PMCID and DOI were added when they were missing from the original reference. Everything else remains true to the original article, per the "NoDerivatives" portion of the distribution license.