LII:Choosing and Implementing a Cloud-based Service for Your Laboratory/Cloud computing in the laboratory

'''Title''': ''Choosing and Implementing a Cloud-based Service for Your Laboratory''

'''Edition''': Second edition

'''Author for citation''': Shawn E. Douglas

'''License for content''': [https://creativecommons.org/licenses/by-sa/4.0/ Creative Commons Attribution-ShareAlike 4.0 International]

'''Publication date''': August 2023

Latest revision as of 21:03, 16 August 2023

4. Cloud computing in the laboratory

It took three chapters, but now, with all that background in hand, we can finally address how cloud computing relates to the various types of laboratories spread across multiple sectors. Let's first begin with what an average software-enabled laboratory's data workflow might look like. We can then address the benefits of cloud computing within the scope of that workflow and the regulations that affect it. Afterwards, we can examine different deployment approaches and the associated costs and benefits.

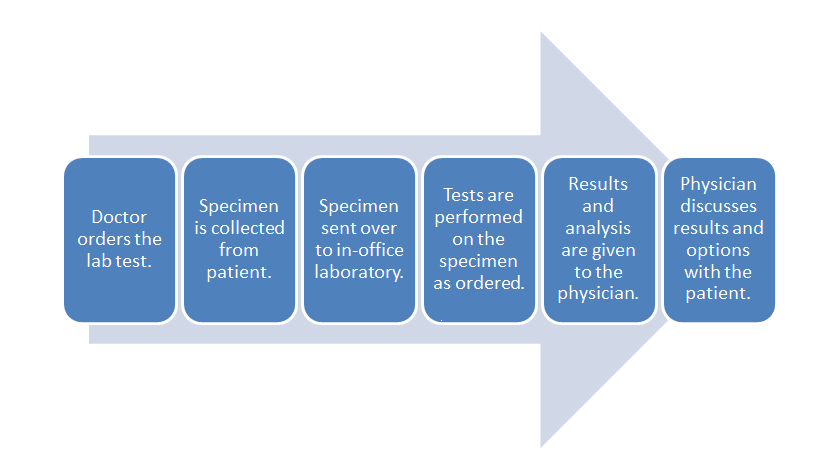

Let's turn to a broad example of laboratory workflow: diagnostic testing of a patient. Figure 5 depicts a generic workflow for this process, with a doctor first ordering a laboratory test for a patient. This may be done through a computerized physician order entry (CPOE) module found in an electronic health record (EHR) system, or through some other electronic system. In turn, a test order appears in the electronic system of the laboratory. This might occur in the lab's own CPOE module, usually integrated in some way with a laboratory information system (LIS) or a laboratory information management system (LIMS). The patient then provides the necessary specimens, either at a remote location, with the specimens then getting shipped to the main lab, or directly at the lab where the testing is to be performed. Whenever the specimens are received at the laboratory, they must be checked in or registered in the LIS or LIMS, as part of an overall responsibility of maintaining chain of custody. At this delivery point, the laboratory's own processes and workflows take over, with the LIS or LIMS aiding in the assurance that the specimens are accurately tracked from delivery to final results getting reported (and then some, if specimen retention times are necessary).

That internal laboratory workflow will look slightly different for each clinical lab, but a broad set of steps will be realized with each lab. One or more specimens get registered in the system, barcoded labels get generated, tests and any other preparative activities are scheduled for the specimens, laboratory personnel are assigned, the scheduled workloads are completed, the results get analyzed and verified, and if nothing is out-of-specification (OOS) or abnormal, the results get logged and placed in a deliverable report. Barring any mandated specimen retention times, the remaining specimen material is properly disposed of. At this point, most of the clinical laboratory's obligations are met; the resulting report is sent to the ordering physician, who in turn shares the results with the original patient.
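The sequence of steps above can be pictured as a small state machine. The following is only an illustrative sketch, not how any particular LIS or LIMS is built; the step names and the `FLAGGED` holding state for OOS results are assumptions made for the example.

```python
from enum import Enum, auto


class Step(Enum):
    REGISTERED = auto()
    LABELED = auto()
    SCHEDULED = auto()
    ASSIGNED = auto()
    TESTED = auto()
    VERIFIED = auto()
    REPORTED = auto()
    FLAGGED = auto()  # hypothetical holding state for OOS or abnormal results


# Allowed forward transitions, in the order the text describes them.
NEXT_STEP = {
    Step.REGISTERED: Step.LABELED,
    Step.LABELED: Step.SCHEDULED,
    Step.SCHEDULED: Step.ASSIGNED,
    Step.ASSIGNED: Step.TESTED,
    Step.TESTED: Step.VERIFIED,
}


def advance(step: Step, out_of_spec: bool = False) -> Step:
    """Return the step that follows `step`.

    After verification, a result is reported only if it is not
    out-of-specification; otherwise it is routed to FLAGGED for review.
    """
    if step is Step.VERIFIED:
        return Step.FLAGGED if out_of_spec else Step.REPORTED
    if step not in NEXT_STEP:
        raise ValueError(f"no further step after {step.name}")
    return NEXT_STEP[step]


def run_specimen(out_of_spec: bool) -> list[Step]:
    """Walk one specimen through the workflow and return its history."""
    history = [Step.REGISTERED]
    while history[-1] not in (Step.REPORTED, Step.FLAGGED):
        history.append(advance(history[-1], out_of_spec))
    return history
```

Calling `run_specimen(False)` walks a specimen from registration to a reported result, while `run_specimen(True)` stops at the flagged state instead of producing a report.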

When we look at these workflows today, a computerized component is usually associated with them. From the CPOE and EHR to the LIS and LIMS, software systems and other laboratory automation have been an increasing area of focus for improving laboratory efficiencies, reducing human error, shortening turnaround times, and improving regulatory compliance.[1][2][3] In the laboratory, the LIS and LIMS are able to electronically maintain an audit trail of sample movement, analyst activities, record alteration, and much more. The modern LIS and LIMS are also able to limit access to data and functions based on assigned role, track versioning of documents, manage scheduling, issue alarms and alerts for OOS results, and maintain training records, among other functions.
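To make the ideas of an audit trail and role-based access more concrete, here is a minimal sketch. The role names, permission names, and the `AuditedRecords` class are all hypothetical and chosen for illustration; a real LIS or LIMS defines its own roles and stores its audit trail in a tamper-evident way.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; a real system defines its own.
ROLE_PERMISSIONS = {
    "analyst": {"read_result", "enter_result"},
    "reviewer": {"read_result", "verify_result"},
    "admin": {"read_result", "enter_result", "verify_result", "edit_record"},
}


@dataclass
class AuditedRecords:
    records: dict = field(default_factory=dict)
    audit_trail: list = field(default_factory=list)

    def _log(self, user: str, action: str, sample_id: str, allowed: bool) -> None:
        # Every attempt is logged, including denied ones.
        self.audit_trail.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "sample_id": sample_id,
            "allowed": allowed,
        })

    def enter_result(self, user: str, role: str, sample_id: str, value: float) -> None:
        """Store a result if the role permits it; log the attempt either way."""
        allowed = "enter_result" in ROLE_PERMISSIONS.get(role, set())
        self._log(user, "enter_result", sample_id, allowed)
        if not allowed:
            raise PermissionError(f"{role} may not enter results")
        self.records[sample_id] = value
```

The design choice worth noting is that the log entry is written before the permission check raises, so denied attempts remain visible to anyone reviewing the trail.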

Yet with all the benefits of software use in the laboratory come additional risks involving the security and protection of the data that software manages, transmits, and receives. Under some circumstances, these risks may become more acute by moving software systems to cloud computing environments. We broadly discussed many of those risks in the previous chapter. Data security and regulatory risk demand that labs moving into the cloud carefully consider the appropriate and necessary use of user access controls, networking across multitenancy or shared infrastructures, and encryption and security controls offered by the cloud service provider (CSP). Technology risk demands that labs embracing cloud have staff with a constant eye on what's changing in the industry and what tools can extend the effective life span of their cloud implementations. Operational risk demands that labs integrating cloud solutions into their workflows have redundancy plans in place for how to mitigate risks from physical disasters and other threats to their data hosted in public and private clouds. Vendor risk demands that labs thoroughly vet their cloud service providers, those providers' long-term stability, and their fall-back plans should they suffer a major business loss. And finally, financial risk demands that labs turning to the cloud should have staff who are well-versed in financial management and can effectively understand the current and future costs of implementing cloud solutions in the lab.

This talk of risk shouldn't scare away laboratory staff considering a move to the cloud; the cloud can benefit automated laboratories in many ways. It does, however, require a thoughtful, organization-wide approach to managing the risks (as discussed in Chapter 3). Let's take a look at the benefits a laboratory could realize from moving to cloud-based solutions.

4.1 Benefits

Whether or not you fully buy into the ability of cloud computing to help your laboratory depends on a number of factors, including industry served, current data output, anticipated future data output, the regulations affecting your lab, your organization's budget, and your organization's willingness to adopt and enforce sound risk management policies and controls. A tiny material testing laboratory with relatively simple workflows and little in the way of anticipated growth in the short term may be content with using their in-house systems. A biological research group with several laboratories geographically spread across the continent creating and managing large data sets for developing new medical innovations, all while operating in a competitive environment, may see the cloud as an opportunity to grow the organization and perform more efficient work.

In fact, the biomedical sciences in general have been a good fit for cloud computing. "Omics" laboratories in particular have shown promise for cloud-based data management, with many researchers over the years demonstrating various methods of managing the "big data" networking and sharing of omics data in the cloud.[4][5][6][7] These efforts have focused on managing large data sets more efficiently while being able to securely share those data sets with researchers around the world. This data sharing—particularly while considering FAIR data principles that aim to make data findable, accessible, interoperable, and reusable[8]—is of significant benefit to research laboratories around the world; controlled access to that data via standards-based cloud computing methods certainly lends to those FAIR principles.[9]

Another area where laboratory-driven cloud computing makes sense is that of the internet of things (IoT) and networked sensors that collect data. From wearable sensors, monitors, and point-of-care diagnostic systems to wireless sensor networks, Bluetooth-enabled mobile devices, and even IoT-enabled devices and equipment directly in the laboratory, connecting those devices to cloud services and storage provides near-seamless integration of data-producing instruments with laboratory workflows.[10][11] Monitoring temperature, humidity, and other ambient conditions of freezers, fridges, and incubators while maintaining calibration and maintenance data becomes more automated.[11] Usage data on high-use instruments can be uploaded to the cloud and analyzed to enable predictive maintenance down the road.[11] And outdoor pollution monitoring systems that use low-cost, networked sensors can, upon taking a reading (a trigger event), send the result to a cloud service (at times with the help of edge computing), where a bit of uploaded code processes the data upload on-demand.[12] In all these cases, the automated collection and analysis of data using cloud components (which in turn makes that data accessible from anywhere in the world with internet access) allows laboratorians to rapidly gain advantages in how they work.
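The "bit of uploaded code" that runs when a sensor reading arrives might look something like the following sketch. It is written as a plain Python function rather than against any specific cloud provider's event format; the JSON field names (`device_id`, `temperature_c`) and the acceptable freezer range are assumptions made purely for illustration.

```python
import json

# Hypothetical acceptable range for a freezer, in degrees Celsius.
FREEZER_RANGE_C = (-25.0, -15.0)


def handle_reading(event_body: str) -> dict:
    """Process one sensor reading delivered as a JSON string.

    Returns a small summary that a downstream service could store
    or use to raise an alert.
    """
    reading = json.loads(event_body)
    temp = float(reading["temperature_c"])
    low, high = FREEZER_RANGE_C
    in_range = low <= temp <= high
    return {
        "device_id": reading["device_id"],
        "temperature_c": temp,
        "status": "ok" if in_range else "alert",
    }
```

A reading such as `{"device_id": "freezer-1", "temperature_c": -5}` would come back with a status of `"alert"`, which is the point at which a lab might notify staff or log a deviation.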

Panning outward, we see other benefits of cloud to a broad set of laboratories. Agilent Technologies, an analytical instrument developer and manufacturer, argues that the cloud can transform siloed, disparate data and information in a non-cloud, on-premises informatics solution into more actionable knowledge and wisdom, which by extension adds value to the laboratory. They also argue overall value to the lab is increased by[13]:

- "providing a higher level of connectivity and consistency for lab informatics systems and processes";

- enabling "lab managers to integrate functionality and bring context to every phase of the continuum of value, without increasing cost or risk";

- allowing IT personnel "to do more with less";

- enabling "faster, easier, more mobile access to data and tools" for lab technicians (globally);

- allowing lab leaders to reduce costs (including capital expenditure costs) and "increase team morale by enabling streamlined, self-service access to resources"; and

- enabling labs to expand their "digital transformation initiatives," with cloud as the catalyst.

Other benefits provided to labs by cloud computing include:

- providing "the ability to easily increase or decrease their use [of infrastructure and services] as business objectives change and keep the organization nimble and competitive" (i.e., added scalability while operating more research tasks with massive data sets)[14];

- limiting responsibility of physical (in-person) access and protection of stored data to the CSP (though this comes with its own caveats concerning backing up with another provider)[15];

- limiting responsibility for technical hardware and other assets to the CSP as the organization grows and changes[15];

- inheriting the existing security protocols and compliance procedures of the provider (though again with caveats concerning vetting the CSP's security, and the inability to do so at times)[15]; and

- ensuring "that different teams are not simultaneously replicating workloads – creating greater efficiencies throughout organizations" (under the scope of the real-time update capacity of cloud globally).[16]

To sum this all up, let's look at what cloud computing is, care of Chapter 1:

an internet-based computing paradigm in which standardized and virtualized resources are used to rapidly, elastically, and cost-effectively provide a variety of globally available, "always-on" computing services to users on a continuous or as-needed basis

First, cloud technology is standardized, just like many of the techniques used in laboratories. A standardized approach to cloud computing assists with cloud services remaining compliant, which laboratories also must do. Yes, there's plenty of responsibility in ensuring all data management and use in the cloud is done in a compliant fashion, but standardized approaches based on sound security principles help limit a laboratory's extended risk. Second, cloud technology is virtualized, meaning compute services and resources are more readily able to be recovered should system failure or disaster strike. Compare this to traditional infrastructures that inherently end up with longer periods of downtime, something which most laboratories cannot afford to have. Third, cloud services are built to be provisioned rapidly, elastically, and cost-effectively. In the case of laboratories and their workflows, especially in high-throughput labs, having scalable compute services that can be ramped up rapidly on a pay-what-you-use basis is certainly appealing to the overall business model. Finally, having those services available from anywhere with internet service, at any time, greatly expands numerous aspects of laboratory operations. Laboratorians can access data from anywhere at any time, facilitating research and discovery. Additionally, this enables remote and wireless data collection and upload from all but the most remote of locations, making environmental or even public health laboratory efforts more flexible and nimble.

4.2 Regulatory considerations

In Chapter 2, we examined standards and regulations influencing cloud computing. We also noted that there's an "elephant in the room" in the guise of data privacy and protection considerations in the cloud. Many of the regulations and data security considerations mentioned there apply not only to financial firms, manufacturers, and software developers, but also laboratories of all shapes and sizes. At the heart of it all is keeping client, customer, and organizational data out of the hands of people who shouldn't have access to it, a significant regulatory hurdle.

Most regulation of data and information in an enterprise, including laboratories, is based on several aspects of the data and information: its sensitivity, its location or geography, and its ownership.[17][18][19] Not coincidentally, these same aspects are often applied to data classification efforts of an organization, which attempt to determine and assign relative values to the data and information managed and communicated by the organization. This classification in turn allows the organization to better discover the risks associated with those classifications, and allows data owners to realize that all data shouldn't be treated the same way.[17] And well-researched regulatory efforts recognize this as well.
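As a rough illustration of how sensitivity, location, and ownership can drive different handling of different data, consider the sketch below. The sensitivity labels, region labels, and rule names are hypothetical placeholders, not terms drawn from any regulation; an actual classification scheme would come from the organization's own policies and the regulations that apply to it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataAsset:
    name: str
    sensitivity: str  # hypothetical labels: "public", "internal", "protected"
    location: str     # hypothetical region label, e.g. "EU" or "US"
    owner: str        # who is accountable for the data


def handling_rules(asset: DataAsset) -> set[str]:
    """Map an asset's classification to illustrative handling rules."""
    rules: set[str] = set()
    if asset.sensitivity == "protected":
        rules |= {"encrypt_at_rest", "restrict_access"}
    if asset.location == "EU" and asset.sensitivity == "protected":
        rules.add("review_cross_border_transfer")
    if asset.sensitivity == "public":
        rules.add("no_special_handling")
    return rules
```

The point of the sketch is simply that classification makes the difference in treatment explicit: a protected asset held in one region picks up rules that a public method sheet does not.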

Take for example the European Union's General Data Protection Regulation (GDPR). The GDPR stipulates how personal data is collected, used, and stored by organizations in the E.U., as well as by organizations providing services to individuals and organizations in the E.U.[20] The GDPR appears to classify "personal data" as sensitive "in relation to fundamental rights and freedoms," formally defined as "any information relating to an identified or identifiable natural person."[21] This is the sensitivity aspect of the regulation. GDPR also addresses location at many points, from data transfers outside the E.U. to the location of the "main establishment" of a data owner or "controller."[21] As for ownership, GDPR refers to this aspect of data as the "controller," defined as "the natural or legal person, public authority, agency or other body which, alone or jointly with others, determines the purposes and means of the processing of personal data."[21] The word "controller" appears more than 500 times in the regulation, emphasizing the importance of ownership or control of data.[21] However, cloud providers using hybrid or multicloud approaches pose a challenge to labs, as verifying GDPR compliance in these deployments gets even more complicated. The lab would likely have to turn to key documents such as the CSP's SOC 2 audit report (discussed later) to get a fuller picture of GDPR compliance.[22]

While data privacy and protection regulations like GDPR, the Personal Data Protection Law (KVKK), and California Consumer Privacy Act (CCPA) take a broad approach, affecting most any organization doing cloud- or non-cloud business while handling sensitive and protected data, some regulations are more focused. The U.S.' Health Insurance Portability and Accountability Act (HIPAA) is huge for any laboratory handling electronic protected health information (ePHI), including in the cloud. The regulation is so significant that the U.S. Department of Health & Human Services (HHS) has released its own guidance on HIPAA and cloud computing.[23] That guidance highlights the sensitivity (ePHI), location (whether inside or outside the U.S.), and ownership (HIPAA covered entities and business associates) of data. That ownership part is important, as it addresses the role a CSP takes in this regard[23]:

When a covered entity engages the services of a CSP to create, receive, maintain, or transmit ePHI (such as to process and/or store ePHI), on its behalf, the CSP is a business associate under HIPAA. Further, when a business associate subcontracts with a CSP to create, receive, maintain, or transmit ePHI on its behalf, the CSP subcontractor itself is a business associate. This is true even if the CSP processes or stores only encrypted ePHI and lacks an encryption key for the data. Lacking an encryption key does not exempt a CSP from business associate status and obligations under the HIPAA Rules. As a result, the covered entity (or business associate) and the CSP must enter into a HIPAA-compliant business associate agreement (BAA), and the CSP is both contractually liable for meeting the terms of the BAA and directly liable for compliance with the applicable requirements of the HIPAA Rules.

Clinical and public health laboratories are already affected by HIPAA, but understanding how moving to the cloud affects those HIPAA requirements is vital and not always clear.[23] In particular, the idea of a CSP as a business associate must be taken seriously, in conjunction with its shared responsibility policy and compliance products. Laboratories that need to be HIPAA-compliant should be prepared to do their research on HIPAA and the cloud by reading the HHS guide and other reference material, as well as consulting with experts on the topic when in-house expertise isn't available.

Another area of concern for laboratories is GxP or "good practice" quality guidelines and regulations. Take for example the pharmaceutical manufacturer and their laboratories, which must take sufficient precautions to ensure that any manufacturing documentation (data, information, etc.) related to good manufacturing practice (GMP) requirements (e.g., via E.U. GMP Annex 11, U.S. 21 CFR Part 211, or Germany's AMWHV) is securely stored yet available for a specific retention period "in the event of closure of the manufacturing or testing site where the documentation is stored."[24] As the ECA Academy notes, in the cloud computing realm, it would be up to the laboratory to get provisions added into the CSP's service-level agreement (SLA) to address the GMP's necessity for data availability, and to decide whether maintaining a local backup of the data would be appropriate (as in, perhaps, a hybrid cloud scenario).

That part about the SLA is important. Although a formal contract will address agreed-upon services, it's usually in vague terms; the SLA, on the other hand, defines all the responsibility the cloud provider holds, as well as your laboratory, for the supply and use of the CSP's services.[25] These are your primary protections, along with vetting the CSP you use. This may be difficult, however, particularly in a public cloud, where auditing the security controls and protections of the CSP will be limited at best. To ensure GxP compliance in the cloud, your lab will have to examine the various certifications and compliance offerings of the CSP and hope that the CSP staff handling your GxP data are trained on the requirements of GxP in the cloud.[26] However, public cloud providers like Microsoft[27] and Google[28] provide their own documentation and guidance on using their services in GxP environments. As Microsoft notes, however, "there is no GxP certification for cloud service providers."[27] Instead, the CSPs focus on meeting quality management and information security standards and employing their own best practices that match up with GxP requirements. Finally, they may also have an independent third party conduct GxP qualification reviews, with the resulting qualification guidelines detailing GxP responsibility between the CSP and the laboratory.[27][28]

Ultimately, navigating the challenge of ensuring your laboratory's move to the cloud complies with necessary regulations is a tricky matter. Ensuring the CSP you choose actually meets HIPAA, GxP, and other requirements isn't always a guaranteed proposition by simply auditing the CSP's white papers and other associated compliance documents. However, experts such as Linford & Co.'s Nicole Hemmer and IDBS' Damien Tiller emphasize that the most comprehensive CSP documentation to examine towards gaining a more complete picture of the CSP's security is the SOC 2 (SOC for Service Organizations: Trust Services Criteria) report.[29][30] A CSP's SOC 2 audit results outline nearly 200 information security, data integrity, data availability, and data retention controls and any non-conformities with those controls (the CSP must show those controls have been effectively in place over a six- to 12-month period). The SOC 2 report has other useful aspects, including a full service description and audit observations, making it the best tool for a laboratory to judge a CSP's ability to assist with regulatory compliance.[30]

4.3 Deployment approaches

Chapter 2 also looked at deployment approaches in detail, so this section won't get too in-depth. However, the topic of choosing the right deployment approach for your laboratory is still an important one. As Agilent Technologies noted in its 2019 white paper Cloud Adoption for Lab Informatics, a laboratory's "deployment approach is a key factor for laboratory leadership since the choice of private, public, or hybrid deployment will impact the viability of solutions in the other layers of informatics" applied by the lab.[13] In some cases, like most software, the deployment choice will be relatively straightforward, but other aspects of laboratory requirements may complicate that decision further.

Broadly speaking, any attempt to move your LIS, LIMS, or electronic laboratory notebook (ELN) to the cloud will almost certainly involve a software as a service (SaaS) approach.[13] If the deployment is relatively simple, with well-structured data, this is relatively painless. However, in cases where your LIS, LIMS, or ELN needs to interface with additional business systems like an enterprise resource planning (ERP) system or other informatics software such as a picture archiving and communication system (PACS), an argument could be made that a platform as a service (PaaS) approach may be warranted, due to its greater flexibility.[13]

But laboratories don't run on software alone; analytical instruments are also involved. Those instruments are usually interfaced to the lab's software systems to better capture raw and modified instrument data directly. However, addressing how your cloud-based informatics systems handle instrument data can be challenging, requiring careful attention to cloud service and deployment models. Infrastructure as a service (IaaS) may be a useful service model to look into due to its versatility in being deployed in private, hybrid, and public cloud models.[13] As Agilent notes: "This model enables laboratories to minimize the IT footprint in the lab to only the resources needed for instrument control and acquisition. The rest of the data system components can be virtualized in the cloud and thus take advantage of the dynamic scaling and accessibility aspects of the cloud."[13] It also has easy scalability, enables remote access, and simplifies disaster recovery.[13]

Notice that Agilent still allows for the in-house IT resources necessary to handle the control of instruments and the acquisition of their data. This brings up an important point about instruments. When it comes to instruments, realistic decisions must be made concerning whether or not to take instrument data collection and management into the cloud, keep it local, or enact a hybrid workflow where instrument data is created locally but uploaded to the cloud later.[31] In 2017, the Association of Public Health Laboratories (APHL) emphasized latency issues as a real concern when it comes to instrument control computing systems, finding that most shouldn't be in the cloud. The APHL went on to add that those systems can, however, "be designed to share data in network storage systems and/or be integrated with cloud-based LIMS through various communication paths."[15]
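The hybrid workflow mentioned above, where instrument data is created locally and uploaded later, can be sketched as a local "spool" directory that is drained whenever the network is available. This is an assumption-laden illustration: the `.dat` file extension, the `.uploaded` suffix, and the `upload` callable (which stands in for whatever cloud storage client a lab actually uses) are all hypothetical.

```python
import hashlib
from pathlib import Path
from typing import Callable


def upload_pending(
    spool_dir: Path,
    upload: Callable[[str, bytes, str], bool],
) -> list[str]:
    """Try to upload every pending file in spool_dir.

    `upload` receives the file name, its bytes, and a SHA-256 digest,
    and returns True on success. Successfully uploaded files are renamed
    with an '.uploaded' suffix so they are skipped next time; failed
    files stay in place and are retried on the next call.
    """
    done = []
    for path in sorted(spool_dir.glob("*.dat")):
        data = path.read_bytes()
        digest = hashlib.sha256(data).hexdigest()
        if upload(path.name, data, digest):
            path.rename(path.with_name(path.name + ".uploaded"))
            done.append(path.name)
    return done
```

Because instrument control and acquisition stay local, a slow or interrupted connection only delays the upload; it does not interfere with the instrument run itself. Sending the digest alongside the data gives the receiving side a way to confirm the file arrived intact.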

So what should a laboratory do with instrument systems? PI Digital's general manager, Kaushal Vyas, recognized the difficulties in deciding what to do with instrument systems in September 2020, breaking the decision down into five key considerations. First, consider the potential futures for your laboratory instruments and how any developing industry trends may affect those instruments. Do you envision your instruments becoming less wired? Will mobile devices be able to interface with them? Are they integrated via a hub? And does a cloud-based solution that manages those integrations across multiple locations make sense at some future point? Second, talk to the people who actually use the instruments and understand the workflows those instruments participate in. Are analysts loading samples directly into the machine and largely getting results from the same location? Or will results need to be accessed from different locations? In the case of the latter, cloud management of instrument data may make more sense. Third, are there additional ways to digitize your workflows in the lab? Perhaps later down the road? Does enabling from-anywhere access to instruments with the help of the cloud make sense for the lab? This leads to the fourth consideration, which actually touches upon the prior three: location, location, location. Where are the instruments located and from where will results, maintenance logs, and error logs be read? If these tasks don't need to be completed in real time, it's possible some hybrid cloud solution would work for you. And finally—as has been emphasized throughout the guide—ask what regulations and security requirements drive instrument deployment and use. In highly regulated environments like pharmaceutical research and production, moving instrument data off-premises may not be an option.[31]

One additional consideration that hasn't been fully discussed yet is whether or not to go beyond a hybrid cloud (depending on one single cloud vendor to integrate with on-premises systems) to a cloud approach that involves more than one cloud vendor. We examine this consideration, as well as how it relates to vendor lock-in, in the following subsection.

4.3.1 Hybrid cloud, multicloud, and the vendor lock-in conundrum

In Chapter 1, we compared and contrasted hybrid cloud, multicloud, and distributed cloud deployments. Remember that hybrid cloud integrates a private cloud or local IT infrastructure with a public cloud, multicloud multiplies public cloud into services from two or more vendors, and distributed cloud takes a public cloud and expands it to multiple edge locations. Hybrid and distributed deployments typically involve one public cloud vendor, and multicloud involves more than one public cloud vendor. But why would any laboratory want to spread its operations across two or more CSPs?

"Multicloud has the benefit of reducing vendor lock-in by implementing resource utilization and storage across more than one public cloud provider," we said in Chapter 1. The benefits of choosing a multicloud option over other options are a bit contentious, however. Writing for the Carnegie Endowment in 2020, Maurer and Hinck address the issues of multicloud in detail[32]:

Proponents argue that this approach has benefits such as avoiding vendor lock-in, increasing resilience to outages, and taking advantage of competitive pricing. However, each of these points is contested; for instance, some might argue that ensuring a CSP’s infrastructure architecture is secure is a much better way to enhance resilience than replicating workloads across multiple CSPs ... However, using multiple cloud providers can create greater complexity for organizations in terms of managing their cloud usage and creating more potential points of vulnerability, as the Cloud Security Alliance discussed in a May 2019 report.

However, that very same complexity, found with most any cloud technology, brings with it risks related to vendor lock-in, seemingly forming a vicious circle. Carnegie Endowment's Levite and Kalwany noted in November 2020[33]:

... the complex technology behind cloud services exacerbates risks of vendor lock-in. There are two main factors to consider here: the level of interoperability (how easy it is to make different cloud services work together as well as work with on-site consumer IT systems), and portability (how easy it is to switch data and applications from one cloud service to another, as well as from on-site systems to the cloud and back). Standards enabling both interoperability and portability of cloud services are likely to emerge as [among other things] a means of avoiding vendor lock-in.

To be sure, interoperability and portability are important concepts, not only in cloud computing[33][34] but also in laboratory data management, particularly with clinical data.[35][36] The U.S.' current SHIELD initiative speaks to that, aiming "to improve the quality, interoperability and portability of laboratory data within and between institutions so that diagnostic information can be pulled from different sources or shared between institutions to help illuminate clinical management and understand health outcomes."[35] You see this same emphasis taking place in regards to cloud systems and biomedical research data sharing and the associated benefits of having interoperable, portable data using cloud systems.[4][5][6][7]

As Levite and Kalwany point out, interoperability and portability are also useful in the discussion on whether or not to use two or more CSPs. Let's again highlight their questions[33] (which will also be important to the next chapter on choosing and implementing your cloud solutions):

- How interoperable or compatible are one public CSP's services with another public CSP's services, as well as with your on-premises IT systems?

- How portable or transferable are your data and applications when needing to move them from one cloud service to another, as well as to and from your on-premises solutions?
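One common way to keep data portable across providers is to have laboratory code talk to a small, provider-neutral interface rather than to any one vendor's storage API directly. The sketch below illustrates that idea under stated assumptions: `ObjectStore`, `InMemoryStore`, and `replicate` are hypothetical names, and the in-memory class merely stands in for real provider-specific adapters, which would each wrap a vendor's own client library.

```python
import hashlib
from typing import Protocol


class ObjectStore(Protocol):
    """Minimal provider-neutral storage interface (hypothetical)."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in for a provider-specific adapter."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def replicate(key: str, data: bytes, stores: list[ObjectStore]) -> str:
    """Write data to every store and verify each copy by checksum.

    Returns the SHA-256 digest of the data so it can be recorded
    alongside the key for later integrity checks.
    """
    digest = hashlib.sha256(data).hexdigest()
    for store in stores:
        store.put(key, data)
        if hashlib.sha256(store.get(key)).hexdigest() != digest:
            raise IOError(f"checksum mismatch for {key}")
    return digest
```

With this arrangement, switching or adding a provider means writing one new adapter that satisfies `ObjectStore`; the calling code and the stored checksums stay the same. It does not remove lock-in from provider-specific services beyond plain storage, which is part of why the two questions above still need to be asked.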

These two questions, along with the vendor lock-in conundrum, are illustrated well in the saga of the Pentagon's JEDI project. The Joint Enterprise Defense Infrastructure (JEDI) project was officially kicked into gear in 2018 with a request for proposal (RFP), seeking to find a singular cloud computing provider to help usher in a new cloud-based era for Defense Department operations. The insistence on choosing only one CSP, despite the sheer enormity of the contract, quickly raised the eyebrows of watchdogs and major industry players even before the RFP was released. Claims of one provider having too much influence over government IT, as well as an already skewed bidding process in favor of Amazon, complicated matters further.[37] Defending the stance of choosing only one CSP, the Defense Digital Service's deputy director Tim Van Name cited an "overall complexity" increase that would come from awarding the RFP to multiple CSPs, given the challenges already inherent to Defense Department data management in and out of the battlefield. Van Name also cited a "lack of standardization and interoperability" of complex cloud deployments as another barrier to accessing data when and where they need it.[38] This inevitably led to a number of lawsuits before and after Microsoft was awarded the contract.
With legal battles raging and an overarching "urgent, unmet requirement" by the Defense Department still not being realized because of the litigation, the government was rumored to be considering taking a loss on the single-vendor JEDI plan and moving forward with a multicloud plan, particularly with the departure of senior officials who originally backed the single cloud proposal.[39][40][41] The success of the CIA's C2E cloud-based program, which was awarded to five cloud providers, may have proven to be a further catalyst[37], as by July 2021, the JEDI project was finally pulled by the DoD[42] and related lawsuits by Amazon were dismissed.[43] Almost immediately afterwards, the DoD announced a new proposal, the Joint Warfighter Cloud Capability (JWCC) project, with a rough projection of being awarded to multiple vendors by April 2022.[43] The DoD limited bids to four vendors—Amazon, Google, Microsoft, and Oracle—with all four gaining a piece of the indefinite-delivery, indefinite-quantity contract by December 2022.[44][45] An April 2023 report in SIGNAL on the JWCC provided further insight on the unique approach to the contract, highlighting that despite all the stated complexity, some definitive benefits emerge from a multicloud arrangement[46]:

Officials structured the JWCC vehicle to be a one enterprise contract and set up direct relationships with the cloud service providers (CSPs)—which is unique. [Hosting and Compute Center] works with DoD customers to shape their cloud service requirements and interacts directly with the four private sector cloud companies on the contract, instead via a third-party ... 'No contract in the department has that direct relationship with the CSPs,' McArthur said. 'Having that single point to be able to go to the vendors and be able to [interact with them] when it comes to meeting capabilities, needing to drive cost parity or cybersecurity incidents, those are groundbreaking things for the department at large.'

What does all this mean for the average laboratory? At a minimum, there may be some benefit to having, for example, redundant data backup in the cloud, particularly if your lab feels that sensitive data can be safely moved to the cloud and that there are overall long-term cost savings (vs. investing in local data storage). But that "either-or" view doesn't entirely address potential risks associated with vendor lock-in and the need for data availability and durability. As such, having data stored in multiple locations makes sense, even if that means moving data to two different cloud providers. With a bit of analysis of data access patterns and a solid understanding of your data types and sensitivities, it may be possible to make this sort of multicloud data storage more cost-effective.[47] However, remember that the traceability of your data is also important; in theory, quality data that goes into the cloud service will be easier to find later. As is, it's already a challenge for many laboratories to locate original data, particularly older data, and some labs still don't have clear data storage policies.[48] As such, any transition to the cloud should also consider the value of reinforcing existing data management strategies or, if they don't exist, getting started on developing such strategies. In conjunction with reviewing or creating data management strategies, data cleansing may be required to make the data more meaningful, findable, and actionable.[49][50] This concept holds true even if a multicloud deployment doesn't make sense for you but a hybrid cloud deployment with local backups does. This hybrid approach may especially make sense if the lab uses a scientific data management system (SDMS). As Agilent notes, if you already have an on-premises SDMS, "extending the data storage capacity by connecting to a cloud storage location is a logical first step. This hybrid approach can use cloud storage in both a passive/active capacity while also taking advantage of turnkey archival solutions that the cloud offers."[13]
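One way to picture keeping redundant copies traceable across two storage locations is a checksum manifest: a record of every file and its hash that can be rebuilt for each copy and compared. The following is a minimal sketch in Python using only the standard library. The function names (`sha256_of`, `build_manifest`, `compare_manifests`) are hypothetical, and the sketch assumes both copies are reachable as local directories (for example, a local archive and a downloaded or mounted cloud copy). It does not call any cloud provider's API.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its checksum."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare_manifests(primary: dict[str, str], replica: dict[str, str]) -> dict[str, list[str]]:
    """Report files missing from the replica, extra in it, or changed."""
    shared = primary.keys() & replica.keys()
    return {
        "missing": sorted(set(primary) - set(replica)),
        "extra": sorted(set(replica) - set(primary)),
        "changed": sorted(k for k in shared if primary[k] != replica[k]),
    }
```

A lab might run `compare_manifests(build_manifest(local_dir), build_manifest(replica_dir))` after each backup cycle and investigate any non-empty list in the result. Storing the manifest alongside the data also gives a simple record of what was moved and when, which supports the traceability concern raised above.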

But what about the concept of vendor lock-in overall? How much should a laboratory worry about this scenario, particularly with regard to, for example, moving from an on-premises LIMS to one hosted in the cloud? Some, like tech reporter Kimberley Mok writing for Protocol, argue that as major CSPs begin to make multicloud-friendly upgrades to their infrastructure, and as users perform more rigorous vetting of CSPs and migrate to more portable, containerized software solutions, the threat of vendor lock-in is gradually diminishing.[51] However, business owners remain fearful. A summer 2020 survey published by business communications company Mitel found that among more than 1,100 European IT decision makers, a top contractual priority with a CSP was avoiding vendor lock-in; "46 percent of respondents [said they] want the ability to change provider quickly if the service contract is not fulfilled."[52] HashiCorp's third annual State of Cloud Strategy Survey in 2023 found that 48 percent of respondents thought single-vendor lock-in was driving multicloud adoption, further highlighting an increasing concern among businesses turning to the cloud.[53]

In the end, the laboratory will have to make a crucial decision: do we outsource services to several specialists (i.e., disaggregation) or stick to one, risking vendor lock-in? U.K. business software developer Advanced's chief product officer Amanda Grant provides this insight[54]:

For larger enterprises, disaggregation works best. The vast operations they undertake require a level of scalability and expertise that is best delivered by specialist companies. These specialists can target specific and more complex needs as well as support mission-critical operations directly. [For mid-sized organizations,] disaggregation demands a lot of internal investment. They would need in-house expertise to manage multiple suppliers and successfully integrate the services. The alternative would be to use an open ecosystem that brings all their cloud software solutions together in one place. This enables users to consume all of their cloud applications with ease while minimizing risk of vendor lock-in.

It's possible that as cloud computing continues to evolve, interoperability and portability of data, as well as tools for better data traceability, will continue to be a priority for the industry overall, potentially through continued standardization efforts.[34] As a result, perhaps discussions of vendor lock-in will become less commonplace, with multicloud deployments becoming the more common topic. The adoption of open-source technologies as part of an organization's cloud migration may also help with interoperability with other cloud platforms in the future.[55] In the meantime, laboratory decision makers will have to weigh the sensitivity of their data, that data's cloud-readiness, the laboratory budget, the potential risks, and the potential rewards of moving the lab's operations to more complex but productive hybrid cloud and multicloud environments. The final decisions will differ, sometimes drastically, from lab to lab, but the risk management processes and considerations from Chapter 3 should be the same: same starting point but different destinations.

That leads us to the next chapter of our guide on the managed security service provider (MSSP), an entity that provides monitoring and management of security devices and systems in the cloud, among other things. These providers may make it considerably easier, and more secure, for a laboratory to implement cloud computing in its organization.

References

- ↑ Weinstein, M.; Smith, G. (2007). "Automation in the Clinical Pathology Laboratory". North Carolina Medical Journal (2): 130–31. doi:10.18043/ncm.68.2.130.

- ↑ Prasad, P.J.; Bodhe, G.L. (2012). "Trends in laboratory information management system". Chemometrics and Intelligent Laboratory Systems 118: 187–92. doi:10.1016/j.chemolab.2012.07.001.

- ↑ Casey, E.; Souvignet, T.R. (2020). "Digital transformation risk management in forensic science laboratories". Forensic Science International 316: 110486. doi:10.1016/j.forsciint.2020.110486.

- ↑ 4.0 4.1 Onsong, G.; Erdmann, J.; Spears, M.D. et al. (2014). "Implementation of Cloud based Next Generation Sequencing data analysis in a clinical laboratory". BMC Research Notes 7: 314. doi:10.1186/1756-0500-7-314. PMC PMC4036707. PMID 24885806. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4036707.

- ↑ 5.0 5.1 Afgan, E.; Sloggett, C.; Goonasekera, N. et al. (2015). "Genomics Virtual Laboratory: A Practical Bioinformatics Workbench for the Cloud". PLoS One 10 (10): e0140829. doi:10.1371/journal.pone.0140829. PMC PMC4621043. PMID 26501966. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4621043.

- ↑ 7.0 7.1 Ogle, C.; Reddick, D.; McKnight, C.; Biggs, T.; Pauly, R.; Ficklin, S.P.; Feltus, F.A.; Shannigrahi, S. (2021). "Named data networking for genomics data management and integrated workflows". Frontiers in Big Data 4: 582468. doi:10.3389/fdata.2021.582468. PMC PMC7968724. PMID 33748749. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7968724.

- ↑ Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J. et al. (2016). "The FAIR Guiding Principles for scientific data management and stewardship". Scientific Data 3: 160018. doi:10.1038/sdata.2016.18. PMC PMC4792175. PMID 26978244. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4792175.

- ↑ Mons, B.; Neylon, C.; Velterop, J. et al. (2017). "Cloudy, increasingly FAIR; revisiting the FAIR Data guiding principles for the European Open Science Cloud". Information Services & Use 37 (1): 49–56. doi:10.3233/ISU-170824.

- ↑ Mayer, M.; Baeumner, A.J. (2019). "A Megatrend Challenging Analytical Chemistry: Biosensor and Chemosensor Concepts Ready for the Internet of Things". Chemical Reviews 119 (13): 7996–8027. doi:10.1021/acs.chemrev.8b00719. PMID 31070892.

- ↑ 11.0 11.1 11.2 Borfitz, D. (17 April 2020). "IoT In The Lab Includes Digital Cages And Instrument Sensors". BioIT World. https://www.bio-itworld.com/news/2020/04/17/iot-in-the-lab-includes-digital-cages-and-instrument-sensors. Retrieved 28 July 2023.

- ↑ Idrees, Z.; Zou, Z.; Zheng, L. (2018). "Edge Computing Based IoT Architecture for Low Cost Air Pollution Monitoring Systems: A Comprehensive System Analysis, Design Considerations & Development". Sensors 18 (9): 3021. doi:10.3390/s18093021. PMC PMC6163730. PMID 30201864. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6163730.

- ↑ 13.0 13.1 13.2 13.3 13.4 13.5 13.6 13.7 Agilent Technologies (21 February 2019). "Cloud Adoption for Lab Informatics: Trends, Opportunities, Considerations, Next Steps" (PDF). Agilent Technologies. https://www.agilent.com/cs/library/whitepaper/public/whitepaper-cloud-adoption-openlab-5994-0718en-us-agilent.pdf. Retrieved 28 July 2023.

- ↑ Ward, S. (9 October 2019). "Cloud Computing for the Laboratory: Using data in the cloud - What it means for data security". Lab Manager. https://www.labmanager.com/cloud-computing-for-the-laboratory-736. Retrieved 28 July 2023.

- ↑ 15.0 15.1 15.2 15.3 Association of Public Health Laboratories (2017). "Breaking Through the Cloud: A Laboratory Guide to Cloud Computing" (PDF). Association of Public Health Laboratories. https://www.aphl.org/aboutAPHL/publications/Documents/INFO-2017Jun-Cloud-Computing.pdf. Retrieved 28 July 2023.

- ↑ White, R. (20 June 2023). "A Helpful Guide to Cloud Computing in a Laboratory". InterFocus Blog. InterFocus Ltd. https://www.mynewlab.com/blog/a-helpful-guide-to-cloud-computing-in-a-laboratory/. Retrieved 28 July 2023.

- ↑ 17.0 17.1 Simorjay, F.; Chainier, K.A.; Dillard, K. et al. (2014). "Data classification for cloud readiness" (PDF). Microsoft Corporation. https://download.microsoft.com/download/0/A/3/0A3BE969-85C5-4DD2-83B6-366AA71D1FE3/Data-Classification-for-Cloud-Readiness.pdf. Retrieved 28 July 2023.

- ↑ Tolsma, A. (2018). "GDPR and the impact on cloud computing: The effect on agreements between enterprises and cloud service providers". Deloitte. https://www2.deloitte.com/nl/nl/pages/risk/articles/cyber-security-privacy-gdpr-update-the-impact-on-cloud-computing.html. Retrieved 28 July 2023.

- ↑ Eustice, J.C. (2018). "Understand the intersection between data privacy laws and cloud computing". Legal Technology, Products, and Services. Thomson Reuters. https://legal.thomsonreuters.com/en/insights/articles/understanding-data-privacy-and-cloud-computing. Retrieved 28 July 2023.

- ↑ "Google Cloud & the General Data Protection Regulation (GDPR)". Google Cloud. https://cloud.google.com/privacy/gdpr. Retrieved 28 July 2023.

- ↑ 21.0 21.1 21.2 21.3 "Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation) (Text with EEA relevance)". EUR-Lex. European Union. 27 April 2016. https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32016R0679. Retrieved 28 July 2023.

- ↑ Telling, C. (3 June 2019). "How cloud computing is changing the laboratory ecosystem". CloudTech. https://cloudcomputing-news.net/news/2019/jun/03/how-cloud-computing-changing-laboratory-ecosystem/. Retrieved 28 July 2023.

- ↑ 23.0 23.1 23.2 Office for Civil Rights (23 December 2022). "Guidance on HIPAA & Cloud Computing". Health Information Privacy. U.S. Department of Health & Human Services. https://www.hhs.gov/hipaa/for-professionals/special-topics/health-information-technology/cloud-computing/index.html. Retrieved 28 July 2023.

- ↑ "Cloud Computing: Regulations for the Return Transmission of Data in the Event of Business Discontinuation". 16 December 2020. https://www.gmp-compliance.org/gmp-news/cloud-computing-regulations-for-the-return-transmission-of-data-in-the-event-of-business-discontinuation. Retrieved 28 July 2023.

- ↑ Caldwell, D. (16 February 2019). "Contracts vs. Service Level Agreements". ASG Blog. Archived from the original on 30 September 2020. https://web.archive.org/web/20200930063443/https://www.asgnational.com/miscellaneous/contracts-vs-service-level-agreements/. Retrieved 28 July 2023.

- ↑ McDowall, B. (27 August 2020). "Clouds or Clods? Software as a Service in GxP Regulated Laboratories". CSols Blog. CSols, Inc. https://www.csolsinc.com/blog/clouds-or-clods-software-as-a-service-in-gxp-regulated-laboratories/. Retrieved 28 July 2023.

- ↑ 27.0 27.1 27.2 Mazzoli, R. (26 January 2023). "Good Clinical, Laboratory, and Manufacturing Practices (GxP)". Microsoft Documentation. Microsoft, Inc. https://learn.microsoft.com/en-us/compliance/regulatory/offering-gxp. Retrieved 28 July 2023.

- ↑ 28.0 28.1 "Using Google Cloud in GxP Systems". Google Cloud. May 2020. https://cloud.google.com/security/compliance/cloud-gxp-whitepaper. Retrieved 28 July 2023.

- ↑ Colby, L. (1 February 2023). "2023 Trust Services Criteria (TSCs) for SOC 2 Reports". Linford & Company IT Audit & Compliance Blog. Linford and Co. LLP. https://linfordco.com/blog/trust-services-critieria-principles-soc-2/. Retrieved 28 July 2023.

- ↑ 30.0 30.1 Tiller, D. (2019). "Is the Cloud a Safe Place for Your Data?: How Life Science Organizations Can Ensure Integrity and Security in a SaaS Environment" (PDF). IDBS. Archived from the original on 08 March 2021. https://web.archive.org/web/20210308231558/https://storage.pardot.com/468401/1614781936jHqdU6H6/Whitepaper_Is_the_cloud_a_safe_place_for_your_data.pdf. Retrieved 28 July 2023.

- ↑ 31.0 31.1 Vyas, K. (September 2020). "Cloud vs. on-premises solutions – 5 factors to consider when planning the connectivity strategy for your healthcare instrument". Planet Innovation: Perspectives. https://planetinnovation.com/perspectives/cloud-vs-on-premises-solutions/. Retrieved 28 July 2023.

- ↑ Maurer, T.; Hinck, G. (31 August 2020). "Cloud Security: A Primer for Policymakers". Carnegie Endowment for International Peace. https://carnegieendowment.org/2020/08/31/cloud-security-primer-for-policymakers-pub-82597. Retrieved 28 July 2023.

- ↑ 33.0 33.1 33.2 Levite, A.; Kalwani, G. (9 November 2020). "Cloud Governance Challenges: A Survey of Policy and Regulatory Issues". Carnegie Endowment for International Peace. https://carnegieendowment.org/2020/11/09/cloud-governance-challenges-survey-of-policy-and-regulatory-issues-pub-83124. Retrieved 28 July 2023.

- ↑ 34.0 34.1 Ramalingam, C.; Mohan, P. (2021). "Addressing Semantics Standards for Cloud Portability and Interoperability in Multi Cloud Environment". Symmetry 13: 317. doi:10.3390/sym13020317.

- ↑ 35.0 35.1 Office of the Assistant Secretary for Planning and Evaluation. "SHIELD - Standardization of Lab Data to Enhance Patient-Centered Outcomes Research and Value-Based Care". U.S. Department of Health & Human Services. https://aspe.hhs.gov/shield-standardization-lab-data-enhance-patient-centered-outcomes-research-and-value-based-care. Retrieved 28 July 2023.

- ↑ Futrell, K. (22 February 2021). "COVID-19 highlights need for laboratory data sharing and interoperability". Medical Laboratory Observer. https://www.mlo-online.com/information-technology/lis/article/21210723/covid19-highlights-need-for-laboratory-data-sharing-and-interoperability. Retrieved 28 July 2023.

- ↑ 37.0 37.1 Gregg, A. (5 August 2018). "Pentagon doubles down on ‘single-cloud’ strategy for $10 billion contract". The Washington Post. Archived from the original on 07 August 2018. https://web.archive.org/web/20180807051800if_/https://www.washingtonpost.com/business/capitalbusiness/pentagon-doubles-down-on-single-cloud-strategy-for-10-billion-contract/2018/08/05/352cfee8-972b-11e8-810c-5fa705927d54_story.html?utm_term=.130ed427f134. Retrieved 28 July 2023.

- ↑ Mitchell, B. (8 March 2018). "DOD defends its decision to move to commercial cloud with a single award". FedScoop. https://www.fedscoop.com/dod-pentagon-jedi-cloud-contract-single-award/. Retrieved 28 July 2023.

- ↑ Gregg, A. (10 February 2021). "With a $10 billion cloud-computing deal snarled in court, the Pentagon may move forward without it". The Washington Post. Archived from the original on 11 February 2021. https://web.archive.org/web/20210211065108if_/https://www.washingtonpost.com/business/2021/02/10/jedi-contract-pentagon-biden/. Retrieved 28 July 2023.

- ↑ Alspach, K. (5 March 2021). "Microsoft Could Lose JEDI Contract If AWS Case Isn’t Dismissed: Report". CRN. https://www.crn.com/news/cloud/microsoft-could-lose-jedi-contract-if-aws-case-isn-t-dismissed-report. Retrieved 28 July 2023.

- ↑ Nix, N. (7 March 2021). "Microsoft’s $10 billion Pentagon deal at risk amid Amazon fight". The Seattle Times. https://www.seattletimes.com/business/microsofts-10-billion-pentagon-deal-at-risk-amid-amazon-fight/. Retrieved 28 July 2023.

- ↑ Miller, R. (6 July 2021). "Nobody wins as DoD finally pulls the plug on controversial $10B JEDI contract". TechCrunch. https://techcrunch.com/2021/07/06/nobody-wins-as-dod-finally-pulls-the-plug-on-controversial-10b-jedi-contract/. Retrieved 15 August 2023.

- ↑ 43.0 43.1 Shepardson, D. (9 July 2021). "U.S. judge ends Amazon challenge to $10 bln cloud contract after Pentagon cancellation". Reuters. https://www.reuters.com/legal/government/us-judge-ends-amazon-challenge-10-bln-cloud-contract-after-pentagon-cancellation-2021-07-09/. Retrieved 15 August 2023.

- ↑ Miller, R. (19 November 2021). "Pentagon announces new cloud initiative to replace ill-fated JEDI contract". TechCrunch. https://techcrunch.com/2021/11/19/pentagon-announces-new-cloud-initiative-to-replace-ill-fated-jedi-contract/. Retrieved 15 August 2023.

- ↑ Lopez, C.T. (12 December 2022). "Department Names Vendors to Provide Joint Warfighting Cloud Capability". DoD News. U.S. Department of Defense. https://www.defense.gov/News/News-Stories/Article/Article/3243483/department-names-vendors-to-provide-joint-warfighting-cloud-capability/. Retrieved 15 August 2023.

- ↑ Underwood, K. (17 April 2023). "The Joint Warfighting Cloud Capability Is Breaking Barriers". SIGNAL. https://www.afcea.org/signal-media/defense-operations/joint-warfighting-cloud-capability-breaking-barriers. Retrieved 15 August 2023.

- ↑ Waibel, P.; Matt, J.; Hochreiner, C. et al. (2017). "Cost-optimized redundant data storage in the cloud". Service Oriented Computing and Applications 11: 411–26. doi:10.1007/s11761-017-0218-9.

- ↑ Anderson, M.E.; Ray, S.C. (2017). "It's 10 pm; Do You Know Where Your Data Are? Data Provenance, Curation, and Storage". Circulation Research 120 (10): 1551–54. doi:10.1161/CIRCRESAHA.116.310424. PMC PMC5465863. PMID 28495991. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5465863.

- ↑ "Clean up your server instance before migration". Atlassian Support. Atlassian. 17 March 2021. https://support.atlassian.com/migration/docs/clean-up-your-server-instance-before-migration/. Retrieved 28 July 2023.

- ↑ SADA Says (22 August 2019). "9 STEPS FOR MIGRATING DATABASES TO THE CLOUD". SADA, Inc. https://sada.com/insights/blog/9-steps-for-migrating-databases-to-the-cloud/. Retrieved 28 July 2023.

- ↑ Mok, K. (1 December 2020). "Should we really be worried about vendor lock-in in 2020?". Protocol. https://www.protocol.com/manuals/new-enterprise/vendor-lockin-cloud-saas. Retrieved 28 July 2023.

- ↑ "Cloud Communications: European Companies Show Newfound Maturity". Mitel. 20 July 2020. Archived from the original on 04 December 2021. https://web.archive.org/web/20211204082428/https://www.mitel.com/en-gb/about/newsroom/press-releases/european-companies-show-newfound-maturity. Retrieved 28 July 2023.

- ↑ Paul, F. (24 July 2023). "HashiCorp State of Cloud Strategy Survey 2023: The tech sector perspective". HashiCorp. https://www.hashicorp.com/blog/hashicorp-state-of-cloud-strategy-survey-2023-the-tech-sector-perspective. Retrieved 15 August 2023.

- ↑ "How to avoid cloud vendor lock-in and take advantage of multi-cloud". InformationAge. 2023. https://www.information-age.com/how-to-avoid-cloud-vendor-lock-in-advantage-multi-cloud-16437/. Retrieved 28 July 2023.

- ↑ Nangare, S. (13 December 2019). "An Overview of Cloud Migration and Open Source". DevOps.com. https://devops.com/an-overview-of-cloud-migration-and-open-source/. Retrieved 28 July 2023.

Citation information for this chapter

Chapter: 4. Cloud computing in the laboratory

Title: Choosing and Implementing a Cloud-based Service for Your Laboratory

Edition: Second edition

Author for citation: Shawn E. Douglas

License for content: Creative Commons Attribution-ShareAlike 4.0 International

Publication date: August 2023