Title: Laboratory Technology Planning and Management: The Practice of Laboratory Systems Engineering

Author for citation: Joe Liscouski

License for content: Creative Commons Attribution 4.0 International

Publication date: December 2020

This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed.

Introduction

What separates successful advanced laboratories from all the others? It's largely their ability to meet their goals, with the effective use of resources: people, time, money, equipment, data, and information. The fundamental goals of laboratory work haven’t changed, but labs are under increased pressure to do more and do it faster, with a better return on investment (ROI). Laboratory managers have turned to electronic technologies (e.g., computers, networks, robotics, microprocessors, database systems, etc.) to meet those demands. However, without effective planning, technology management, and education, those technologies will only get labs part of the way to meeting their needs. We need to learn how to close the gap between getting part-way there and getting where we need to be. The practice of science has changed; we need to meet that change to be successful.

This document was written to get people thinking more seriously about the technologies used in laboratory work and how those technologies contribute to meeting the challenges labs are facing. There are three primary concerns:

- The need for planning and management: When digital components began to be added to lab systems, it was a slow incremental process: integrators and microprocessors grew in capability as the marketplace accepted them. That development gave us the equipment we have now, equipment that can be used in isolation or in a networked, integrated system. In either case, they need attention in their application and management to protect electronic laboratory data, ensure that it can be effectively used, and ensure that the systems and products put in place are both the right ones, and that they fully contribute to improvements in lab operations.

- The need for more laboratory systems engineers (LSEs): There is increasing demand for people who have the education and skills needed to accomplish the points above and provide research and testing groups with the support they need.[a]

- The need to collaborate with vendors: In order to develop the best products needed for laboratory work, vendors should be provided more user input. Too often vendors have an idea for a product or modifications to existing products, yet they lack a fully qualified audience to bounce ideas off of. With the planning in the first concern in place, we should be able to approach vendors and say, with confidence, "this is what is needed" and explain why.

If the audience for this work were product manufacturing or production facilities, everything said here would already be old news. The efficiency and productivity of production operations directly impact profitability and customer satisfaction, so optimizing those operations has long been an essential goal. When it comes to laboratory operations, that same level of attention found in production operations must be in place to accelerate laboratory research and testing operations, reducing cost and improving productivity. Aside from a few lab installations in large organizations, this same level of attention isn’t given, as people aren’t educated as to its importance. The purpose of this work is to present ideas about which laboratory technology challenges can be addressed through planning activities, using a series of goals.

This material is an expansion upon two presentations:

- "Laboratory Technology Management & Planning," 2nd Annual Lab Asset & Facility Management in Pharma 2019, San Diego, CA, October 22, 2019

- "How Digital Technologies are Changing the Landscape of Lab Operations," Lab Manager webinar, April 2020

Directions in lab operations

The lab of the future

People often ask what the lab of the future (LOF) is going to look like, as if there were a design or model that we should be aspiring toward. There isn’t. Your lab's future is in your hands to mold, a blank sheet of paper upon which you define your lab's future by setting objectives, developing a functional physical and digital architecture, planning processes and implementations, and managing technology that supports both scientific and laboratory information management. If that sounds scary, it’s understandable. But you must take the time to educate yourself and bring in people (e.g., LSEs, consultants, etc.) who can assist you.

Too often, if vendors and consultants are asked what the LOF is going to look like, the response lines up with their corporate interests. No one knows what the LOF is because there isn’t a singular future, but rather different futures for different types of labs. (Just think of all the different scientific disciplines that exist; one future doesn’t fit all.) Your lab's future is in your hands. What do you want it to be?

The material in this document isn’t intended to define your LOF, but to help you realize it once the framework has been created, and you are in the best position to create it. As you create that framework, you'll be asking:

- Are you satisfied with your lab's operations? What works and what doesn’t? What needs fixing and how shall it be prioritized?

- Has management raised any concerns?

- What do those working in the lab have to say?

- How is your lab going to change in the next one to five years?

- Does your industry have a working group for lab operations, computing, and automation?

Adding to question five, many companies tend to keep the competition at arm's length, minimizing contact for fear of divulging confidential information. However, if practically everyone is using the same set of test procedures from a trusted neutral source (e.g., ASTM International, United States Pharmacopeia, etc.), there’s nothing confidential there. Instead of developing automated versions of the same procedure independently, companies can join forces, spread the cost, and perhaps come up with a better solution. With that effort as a given, you collectively have something to approach the vendor community with and say “we need this modification or new product.” This is particularly beneficial to the vendor when they receive a vetted product requirements document to work from.

Again, you don’t wait for the lab of the future to happen; you create it. If you want to see the direction lab operations can take in the future, look to the manufacturing industry: it offers examples ranging from flexible manufacturing to cooperative robotics.[1][2][b] This is appropriate in both basic and applied research, as well as quality control.

Both manufacturing and lab work are process-driven with a common goal: a high-quality product whose quality can be defended through appeal to process and data integrity.

Lab work can be broadly divided into two activities, with parallels to manufacturing: experimental procedure development (akin to manufacturing process development) and procedure execution (product production). (Note: Administrative work is part of lab operations but not an immediate concern here.) As such, we have to address the fact that lab work is part original science and part production work based on that science, e.g., as seen with quality control, clinical chemistry, and high-throughput screening labs. The routine production work of these and other labs can benefit most from automation efforts. We need to think more broadly about the use of automation technologies—driving their development—instead of waiting to see what vendors develop.

Where manufacturing and lab work differ is in the scale of the work environment, the nature of the work station equipment, the skills needed to carry out the work, and the adaptability of those doing the work to unexpected situations.

My hope is that this guide will get laboratory managers and other stakeholders to begin thinking more about planning and technology management, as well as the need for more education in that work.

Trends in science applications

If new science isn’t being developed, vendors will add digital hardware and software technology to existing equipment to improve capabilities and ease-of-use, separating themselves from the competition. However, there is still an obvious need for an independent organization to evaluate that technology (i.e., the lab version of Consumer Reports); as is, that evaluation process, done properly, would be time consuming for individual labs and would require a consistent methodology. With the increased use of automation, we need to do this better, such that the results can be used more widely (rather than every lab doing their own thing) and with more flexibility, using specialized equipment designed for automation applications.

Artificial intelligence (AI) and machine learning (ML) are two other trending topics, but they are not quite ready for widespread real-world applications. However, modern examples still exist:

- Having a system that can bring up all relevant information on a research question—a sort of super Google—or a variation of IBM’s Watson could have significant benefits.

- Analyzing complex data or large volumes of data could be beneficial, e.g., the analysis of radio astronomy data to find fast radio bursts (FRB).[3]

- "[A] team at Glasgow University has paired a machine-learning system with a robot that can run and analyze its own chemical reaction. The result is a system that can figure out every reaction that's possible from a given set of starting materials."[4]

- HelixAI is using Amazon's Alexa as a digital assistant for laboratory work.[5]

However, there are problems with using these technologies. ML systems have been shown to be susceptible to biases in their output depending on the nature and quality of the training materials. As for AI, at least in the public domain, we really don’t know what that is, and what we think it is keeps changing as purported examples emerge. One large problem for lab use is whether or not you can trust the results of an AI's output. We are used to the idea that lab systems and methods have to be validated before they are trusted, so how do you validate a system based on ML or AI?

Education

The major issue in all of this is having people educated to the point where they can successfully handle the planning and management of laboratory technology. One key point: most lab management programs focus on personnel issues, but managers also have to understand the capabilities and limitations of information technology and automation systems.

One result of the COVID-19 pandemic is that we are seeing the limitations of the four-year undergraduate degree program in science and engineering, as well as the state of remote learning. With the addition of information technologies, general systems thinking and modeling[c], statistical experimental design, and statistical process control have become multidisciplinary fields. We need options for continuing education throughout people’s careers so they can maintain their competence and learn new material as needed.

Making laboratory informatics and automation work

Making laboratory informatics and automation work? "Isn’t that a job for IT or lab personnel?" someone might ask. One of the problems in modern science is the development of specialists in disciplines. The laboratory and IT fields have many specialities, and specialists can be very good within those areas while at the same time not having an appreciation of wider operational issues. Topics like lab operations, technology management, and planning aren’t covered in formal education courses, and they're often not well-covered in short courses or online programs.

“Making it work” depends on planning performed at a high enough level in the organization to encompass all affected facilities and departments, including IT and facilities management. This wider perspective gives us the potential for synergistic operations across labs, consistent policies for facilities management and IT, and more effective use of outside resources (e.g., lab information technology support staff [LAB-IT], laboratory automation engineers [LAEs][d], equipment vendors, etc.).

We need to apply the same diligence to planning lab operations as we do any other critical corporate resource. Planning provides a structure for enabling effective and successful lab operations.

Introduction to this section

The common view of science laboratories is that of rooms filled with glassware, lab benches, and instruments being used by scientists to carry out experiments. While this is a reasonable perspective, what isn’t as visually obvious is the end result of that work: the development of knowledge, information, and data.

The progress of laboratory work, along with the planning, documentation, and analytical results related to that work, has been recorded in paper-based laboratory notebooks for generations, and people are still using them today. However, these aren't the only paper records that have existed and are still in use; scientists also depend on charts, log books, administrative records, reports, indexes, and reference material. The latter half of the twentieth century introduced electronics into the lab and with it electronic recording in the form of computers and data storage systems. Early adopters of these technologies had to extend their expertise into the information technology realm because there were few people who understood both these new devices and their application to lab work; you had to be an expert in both laboratory science and computer science.

In the 1980s and 90s, computers became commonplace and where once you had to understand hardware, software, operating systems, programming and application packages, you then simply had to know how to turn them on; no more impressive arrays of blinking lights, just a blinking cursor waiting for you to do something.

As systems gained ease-of-use, however, we lost the basic understanding of what these systems were and what they did, that they had faults, and that if we didn’t plan for their effective use and counter those faults, we were opening ourselves to unpleasant surprises. The consequences at times were system crashes, lost data, and a lack of a real understanding of how the output of an instrument was transformed into a set of numbers, which meant we couldn’t completely account for the results we were reporting.

We need to step back, take control, and institute effective technology planning and management, with appropriate corresponding education, so that the various data we are putting into laboratory informatics technologies have the desired outcome. We need to ensure that these technologies are providing a foundation for improving laboratory operations efficiency and a solid return on investment (ROI), while substantively advancing your business' ability to work and be productive. That's the purpose of the work we'll be discussing.

The point of planning

The point of planning and technology management is pretty simple: to ensure ...

- that the right technologies are in people's hands when they need them, and

- that those technologies complement each other as much as possible.

These are straightforward statements with a lot packed into them.

Regarding the first point, the key words are “the right technologies.” In order to define what that means, lab personnel have to understand the technologies in question and how they apply to their work. If those personnel have used or were taught about the technologies under consideration, it should be easy enough to do. However, laboratory informatics doesn’t fall into that basket of things. The level of understanding has to be more than superficial. While personnel don’t have to be software developers, they do have to understand what is happening within informatics systems, and how data processing handles their data and produces results. Determining the “right technologies” depends on the quality and depth of education possessed by lab personnel, and eventually by lab information technology support staff (LAB-IT) as they become involved in the selection process.

The second point also has a lot buried inside it. Lab managers and personnel are used to specifying and purchasing items (e.g., instruments) as discrete tools. When it comes to laboratory informatics, we’re working with things that connect to each other, in addition to performing a task. When we explore those connections, we need to assess how they are made, what we expect to gain, what compatibility issues exist, how to support them, how to upgrade them, what their life cycle is, etc. Most of the inter-connected devices people encounter in their daily lives are things that were expected to be connected with using a limited set of choices; the vendors know what those choices are and make it easy to do so, or otherwise their products won’t sell. The laboratory technology market, on the other hand, is too open-ended. The options for physical connections might be there, but are they the right ones, and will they work? Do you have a good relationship with your IT people, and are they able to help (not a given)? Again, education is a major factor.

Who is responsible for laboratory technology planning and management (TPM)?

When asking who is responsible for TPM, the question really is "who are the TPM stakeholders," or "who has a vested interest in seeing TPM prove successful?"

- Corporate or organizational management: These stakeholders set priorities and authorize funding, while also rationalizing and coordinating goals between groups. Unless the organization has a strong scientific base, they may not appreciate the options and benefits of TPM in lab work, or the possibilities of connecting the lab into the rest of the corporate data structure.

- Laboratory management: These stakeholders are responsible for developing and implementing plans, as well as translating corporate goals into lab priorities.

- Laboratory personnel: These stakeholders are the ones that actually do the work, which puts them in the best position to understand where technologies can be applied. They would also be relied on to provide user requirements documents for new projects and meet both internal and external (e.g., Food and Drug Administration [FDA], Environmental Protection Agency [EPA], International Organization for Standardization [ISO], etc.) performance guidelines.

- IT management and their support staff: While these stakeholders' traditional role is the support of computers, connected devices (e.g., printers, etc.) and network infrastructure, they may also be the first line of support for computers connected to lab equipment. IT staff either need to be educated to meet that need and support lab personnel, or have additional resources available to them. They may also be asked to participate in planning activities as subject matter experts on computing hardware and software.

- LAB-IT specialists: These stakeholders act as the "additional resources" alluded to in the previous point. These are crossover specialists that span the lab and IT spaces and can provide informed support to both. In most organizations, aside from large science-based companies, this isn’t a real "position," although once stated, its role is immediately recognized. In the past, I’ve also referenced these stakeholders as being “laboratory automation engineers.”[6]

- Facility management: These stakeholders need to ensure that the facilities support the evolving state of laboratory workspace requirements as traditional formats change to support robotics, instrumentation, computers, material flow, power, and HVAC requirements.

Carrying out this work is going to rely heavily on expanding the education of those participating in the planning work; the subject matter goes well beyond material covered in degree programs.

Why put so much effort into planning and technology management?

Earlier we mentioned paper laboratory notebooks, the most common recording device since scientific research began (although for sheer volume, it may have been eclipsed by computer hard drives). Have you ever wondered about the economics of laboratory notebooks? Cost is easy to understand, but the value of the data and information that is recorded there requires further explanation.

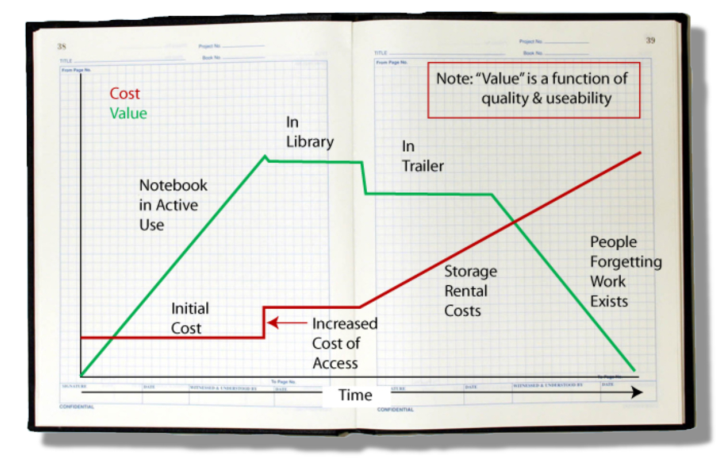

The value of the material recorded in a notebook depends on two key factors: the quality of the work and an inherent ability to put that documented work to use. The quality of the work is a function of those doing the work, how diligent they are, and the veracity of what has been written down. The inherent ability to use it depends upon the clarity of the writing, people’s ability to understand it without recourse to the author, and access to the material. That last point is extremely important. Just by glancing at Figure 1, you can figure out where this is going.

As a scientist’s notebook fills with entries, it gains value because of the content. Once filled, it reaches an upper limit and is placed in a library. There it takes a slight drop in value because its ease-of-access has changed; it isn’t readily at hand. As library space fills, the notebooks are moved to secondary storage (in one company I worked at, secondary storage consisted of trailers in a parking lot). Costs go up due to the cost of owning or renting the secondary storage and the space the notebooks take. The object's value drops, not because of the content but due to the difficulty in retrieving that content (e.g., which trailer? which box?). Unless the project is still active, the normal turn-over of personnel (e.g., via promotions, movement around the company, leaving the company) means that institutional memory diminishes and people begin to forget the work exists. If few researchers can remember it, find it, and access it, the value drops regardless of the resources that went into the work. That is compounded by the potential for physical deterioration of the object (e.g., water damage, mice, etc.).

Preventing the loss of access to the results of your investment in R&D projects will rely on information technology. That reliance will be built upon planning an effective informatics environment, which is precisely where this discussion is going. How is putting your lab results into a computer system any different from putting them into a paper-based laboratory notebook? There are obvious things like faster searching and so on, but based on our previous discussion of notebooks, not much is different; you still have essentially a single point of failure, unless you plan for that eventuality. That is the fundamental difference and what will drive the rest of this writing:

Planning builds in reliability, security, and protection against loss. (Oh, and it allows us to work better, too!)

You could plan for failure in a paper-based system by making copies, but those copies still represent paper that has to be physically managed. With electronic systems, we can plan for failure by using automated backup procedures that make faithful copies, as many as we’d like, at low cost. This issue isn’t unique to laboratory notebooks, but it is a problem for organizations that depend on paper records.
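To make the idea of automated, faithful copies concrete, here is a minimal sketch in Python. It is an illustration only, not a procedure taken from this article: the function names (`sha256_of`, `back_up`), the file name, and the backup directory names are all hypothetical, and a production system would add scheduling, off-site storage, and retention policies. The sketch copies a file to several locations and treats a copy as "faithful" only if its SHA-256 checksum matches that of the original.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up(source: Path, destinations: list[Path]) -> list[Path]:
    """Copy source into each destination directory and verify every copy."""
    expected = sha256_of(source)
    copies = []
    for directory in destinations:
        directory.mkdir(parents=True, exist_ok=True)
        target = directory / source.name
        shutil.copy2(source, target)  # copy2 also preserves file timestamps
        if sha256_of(target) != expected:
            raise IOError(f"Backup at {target} does not match {source}")
        copies.append(target)
    return copies


if __name__ == "__main__":
    # Hypothetical example data, written to a temporary directory.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        entry = root / "notebook_entry.txt"
        entry.write_text("Sample 14 titration: 12.3 mL\n")
        made = back_up(entry, [root / "backup_a", root / "backup_b"])
        print(f"{len(made)} verified copies written")
```

The checksum comparison is the part that matters for planning purposes: a backup that has never been verified is only an assumption that the data can be recovered.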

Footnotes

- [a] See Elements of Laboratory Technology Management and the LSE material in this document.

- [b] See the "Scientific Manufacturing" section of Elements of Laboratory Technology Management.

- [c] By “general systems” I’m not referring to simply computer systems, but the models and systems found under “general systems theory” in mathematics.

- [d] Regarding LAB-IT and LAEs, my thinking about these titles has changed over time; the last section of this document, “Laboratory systems engineers,” goes into more detail.

About the author

Initially educated as a chemist, author Joe Liscouski (joe dot liscouski at gmail dot com) is an experienced laboratory automation/computing professional with over forty years of experience in the field, including the design and development of automation systems (both custom and commercial systems), LIMS, robotics, and data interchange standards. He also consults on the use of computing in laboratory work. He has held symposia on validation and presented technical material and short courses on laboratory automation and computing in the U.S., Europe, and Japan. He has worked/consulted in pharmaceutical, biotech, polymer, medical, and government laboratories. His current work centers on working with companies to establish planning programs for lab systems, developing effective support groups, and helping people with the application of automation and information technologies in research and quality control environments.

References

- [1] Bourne, D. (2013). "My boss the robot". Scientific American 308 (5): 38–41. doi:10.1038/scientificamerican0513-38. PMID 23627215.

- [2] Cook, B. (2020). "Collaborative Robots: Mobile and Adaptable Labmates". Lab Manager 15 (11): 10–13. https://www.labmanager.com/laboratory-technology/collaborative-robots-mobile-and-adaptable-labmates-24474.

- [3] Hsu, J. (24 September 2018). "Is it aliens? Scientists detect more mysterious radio signals from distant galaxy". NBC News MACH. https://www.nbcnews.com/mach/science/it-aliens-scientists-detect-more-mysterious-radio-signals-distant-galaxy-ncna912586. Retrieved 04 February 2021.

- [4] Timmer, J. (18 July 2018). "AI plus a chemistry robot finds all the reactions that will work". Ars Technica. https://arstechnica.com/science/2018/07/ai-plus-a-chemistry-robot-finds-all-the-reactions-that-will-work/5/. Retrieved 04 February 2021.

- [5] "HelixAI - Voice Powered Digital Laboratory Assistants for Scientific Laboratories". HelixAI. http://www.askhelix.io/. Retrieved 04 February 2021.

- [6] Liscouski, J.G. (2006). "Are You a Laboratory Automation Engineer?". SLAS Technology 11 (3): 157–162. doi:10.1016/j.jala.2006.04.002.