Title: Laboratory Technology Planning and Management: The Practice of Laboratory Systems Engineering

Author for citation: Joe Liscouski, with editorial modifications by Shawn Douglas

License for content: Creative Commons Attribution 4.0 International

Publication date: December 2020

Introduction

What separates successful advanced laboratories from all the others? It's largely their ability to meet their goals, with the effective use of resources: people, time, money, equipment, data, and information. The fundamental goals of laboratory work haven’t changed, but labs are under increased pressure to do more and do it faster, with a better return on investment (ROI). Laboratory managers have turned to electronic technologies (e.g., computers, networks, robotics, microprocessors, database systems, etc.) to meet those demands. However, without effective planning, technology management, and education, those technologies will only get labs part of the way to meeting their needs. We need to learn how to close the gap between getting part-way there and getting where we need to be. The practice of science has changed; we need to meet that change to be successful.

This document was written to get people thinking more seriously about the technologies used in laboratory work and how those technologies contribute to meeting the challenges labs are facing. There are three primary concerns:

- The need for planning and management: When digital components began to be added to lab systems, it was a slow incremental process: integrators and microprocessors grew in capability as the marketplace accepted them. That development gave us the equipment we have now, equipment that can be used in isolation or in a networked, integrated system. In either case, that equipment needs attention in its application and management to protect electronic laboratory data, ensure that the data can be effectively used, and ensure that the systems and products put in place are the right ones and that they fully contribute to improvements in lab operations.

- The need for more laboratory systems engineers (LSEs): There is increasing demand for people who have the education and skills needed to accomplish the points above and provide research and testing groups with the support they need.[a]

- The need to collaborate with vendors: In order to develop the best products needed for laboratory work, vendors should be provided more user input. Too often vendors have an idea for a product or modifications to existing products, yet they lack a fully qualified audience to bounce ideas off of. With the planning in the first concern in place, we should be able to approach vendors and say, with confidence, "this is what is needed" and explain why.

If the audience for this work were product manufacturing or production facilities, everything being said here would already be history. The efficiency and productivity of production operations directly impact profitability and customer satisfaction, so the effort to optimize those operations has long been an essential goal. When it comes to laboratory operations, that same level of attention found in production operations must be in place to accelerate laboratory research and testing operations, reducing cost and improving productivity. Aside from a few lab installations in large organizations, this same level of attention isn’t given, largely because people haven’t been educated about its importance. The purpose of this work is to present ideas about which laboratory technology challenges can be addressed through planning activities using a series of goals.

This material is an expansion upon two presentations:

- "Laboratory Technology Management & Planning," 2nd Annual Lab Asset & Facility Management in Pharma 2019, San Diego, CA, October 22, 2019

- "How Digital Technologies are Changing the Landscape of Lab Operations," Lab Manager webinar, April 2020

Directions in lab operations

The lab of the future

People often ask what the lab of the future (LOF) is going to look like, as if there were a design or model that we should be aspiring toward. There isn’t. Your lab's future is in your hands to mold, a blank sheet of paper upon which you define your lab's future by setting objectives, developing a functional physical and digital architecture, planning processes and implementations, and managing technology that supports both scientific and laboratory information management. If that sounds scary, it’s understandable. But you must take the time to educate yourself and bring in people (e.g., LSEs, consultants, etc.) who can assist you.

Too often, if vendors and consultants are asked what the LOF is going to look like, the response lines up with their corporate interests. No one knows what the LOF is because there isn’t a singular future, but rather different futures for different types of labs. (Just think of all the different scientific disciplines that exist; one future doesn’t fit all.) Your lab's future is in your hands. What do you want it to be?

The material in this document isn’t intended to define your LOF, but to help you realize it once the framework has been created, and you are in the best position to create it. As you create that framework, you'll be asking:

- Are you satisfied with your lab's operations? What works and what doesn’t? What needs fixing and how shall it be prioritized?

- Has management raised any concerns?

- What do those working in the lab have to say?

- How is your lab going to change in the next one to five years?

- Does your industry have a working group for lab operations, computing, and automation?

Adding to the last question, many companies tend to keep the competition at arm's length, minimizing contact for fear of divulging confidential information. However, if practically everyone is using the same set of test procedures from a trusted neutral source (e.g., ASTM International, United States Pharmacopeia, etc.), there’s nothing confidential there. Instead of developing automated versions of the same procedure independently, companies can join forces, spread the cost, and perhaps come up with a better solution. With that effort as a given, you collectively have something to approach the vendor community with and say “we need this modification or new product.” This is particularly beneficial to the vendor when they receive a vetted product requirements document to work from.

Again, you don’t wait for the lab of the future to happen, you create it. If you want to see the direction lab operations can take in the future, look to the manufacturing industry, which offers examples such as flexible manufacturing and cooperative robotics.[1][2][b] This is appropriate in both basic and applied research, as well as quality control.

Both manufacturing and lab work are process-driven with a common goal: a high-quality product whose quality can be defended through appeal to process and data integrity.

Lab work can be broadly divided into two activities, with parallels to manufacturing: experimental procedure development (akin to manufacturing process development) and procedure execution (product production). (Note: Administrative work is part of lab operations but not an immediate concern here.) As such, we have to address the fact that lab work is part original science and part production work based on that science, e.g., as seen with quality control, clinical chemistry, and high-throughput screening labs. The routine production work of these and other labs can benefit most from automation efforts. We need to think more broadly about the use of automation technologies—driving their development—instead of waiting to see what vendors develop.

Where manufacturing and lab work differ is in the scale of the work environment, the nature of the work station equipment, the skills needed to carry out the work, and the adaptability of those doing the work to unexpected situations.

My hope is that this guide will get laboratory managers and other stakeholders to begin thinking more about planning and technology management, as well as the need for more education in that work.

Trends in science applications

If new science isn’t being developed, vendors will add digital hardware and software technology to existing equipment to improve capabilities and ease-of-use, separating themselves from the competition. However, there is still an obvious need for an independent organization to evaluate that technology (i.e., the lab version of Consumer Reports); as is, that evaluation process, done properly, would be time consuming for individual labs and would require a consistent methodology. With the increased use of automation, we need to do this better, such that the results can be used more widely (rather than every lab doing their own thing) and with more flexibility, using specialized equipment designed for automation applications.

Artificial intelligence (AI) and machine learning (ML) are two other trending topics, but they are not quite ready for widespread real-world applications. However, modern examples still exist:

- Having a system that can bring up all relevant information on a research question—a sort of super Google—or a variation of IBM’s Watson could have significant benefits.

- Analyzing complex data or large volumes of data could be beneficial, e.g., the analysis of radio astronomy data to find fast radio bursts (FRB).[3]

- "[A] team at Glasgow University has paired a machine-learning system with a robot that can run and analyze its own chemical reaction. The result is a system that can figure out every reaction that's possible from a given set of starting materials."[4]

- HelixAI is using Amazon's Alexa as a digital assistant for laboratory work.[5]

However, there are problems using these technologies. ML systems have been shown to be susceptible to biases in their output depending on the nature and quality of the training materials. As for AI, at least in the public domain, we really don’t know what that is, and what we think it is keeps changing as purported examples emerge. One large problem for lab use is whether or not you can trust the results of an AI's output. We are used to the idea that lab systems and methods have to be validated before they are trusted, so how do you validate a system based on ML or AI?

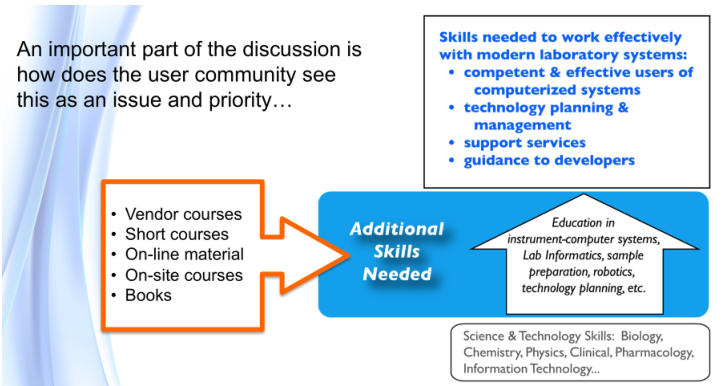

Education

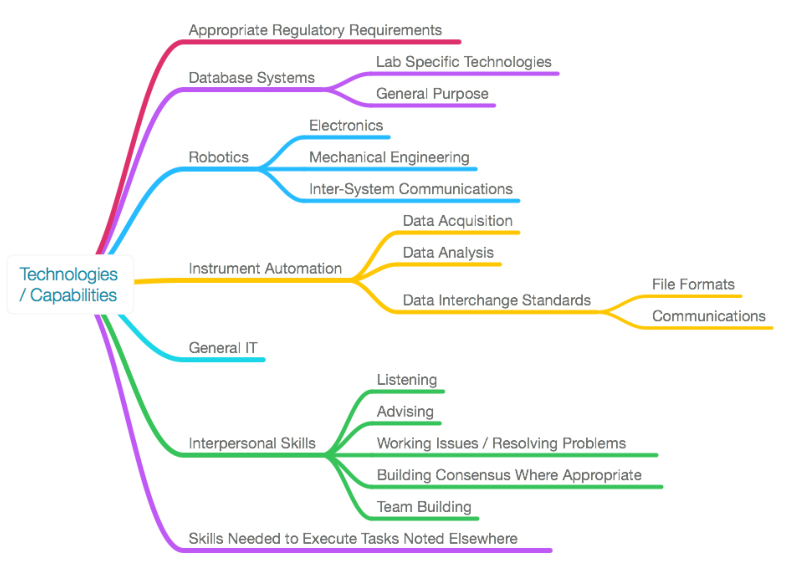

The major issue in all of this is having people educated to the point where they can successfully handle the planning and management of laboratory technology. One key point: most lab management programs focus on personnel issues, but managers also have to understand the capabilities and limitations of information technology and automation systems.

One result of the COVID-19 pandemic is that we are seeing the limitations of the four-year undergraduate degree program in science and engineering, as well as the state of remote learning. With the addition of information technologies, general systems thinking and modeling[c], statistical experimental design, and statistical process control have become multidisciplinary fields. We need options for continuing education throughout people’s careers so they can maintain their competence and learn new material as needed.

Making laboratory informatics and automation work

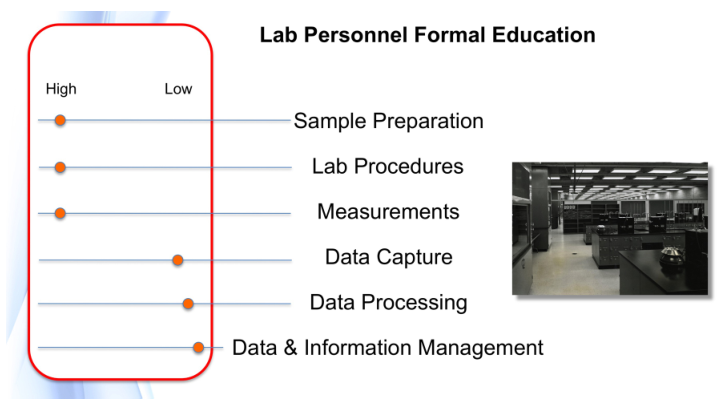

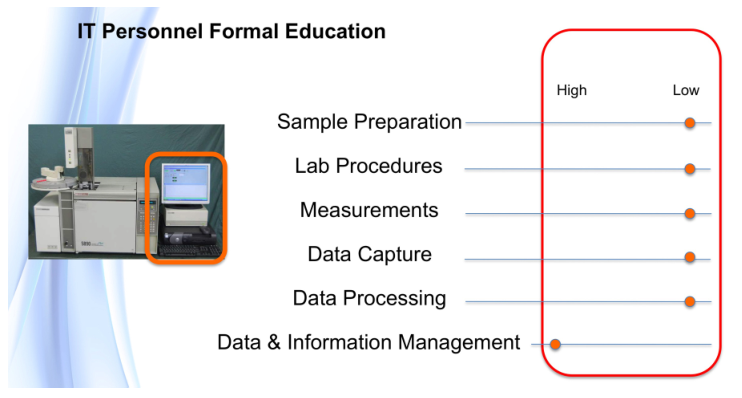

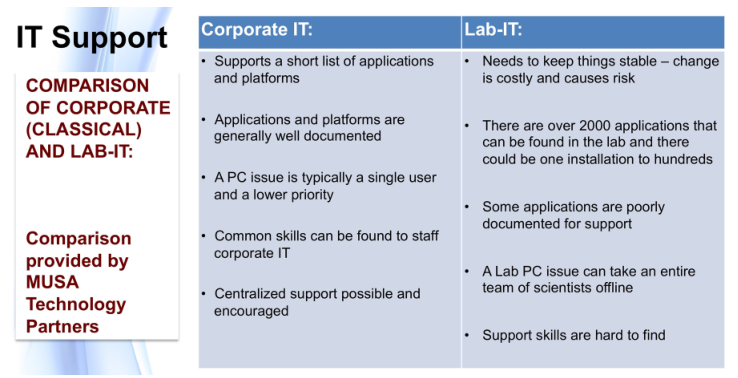

Making laboratory informatics and automation work? "Isn’t that a job for IT or lab personnel?" someone might ask. One of the problems in modern science is the development of specialists in disciplines. The laboratory and IT fields have many specialties, and specialists can be very good within those areas while at the same time not having an appreciation of wider operational issues. Topics like lab operations, technology management, and planning aren’t covered in formal education courses, and they're often not well-covered in short courses or online programs.

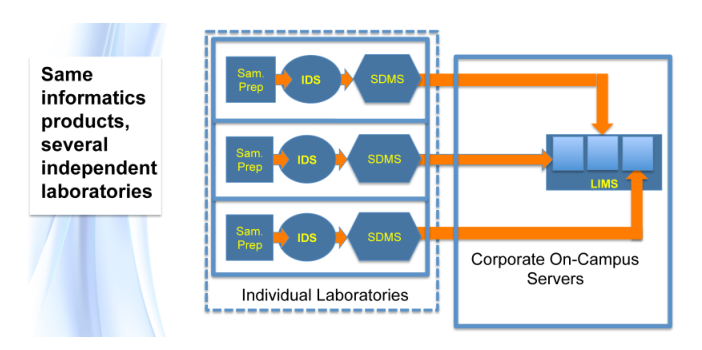

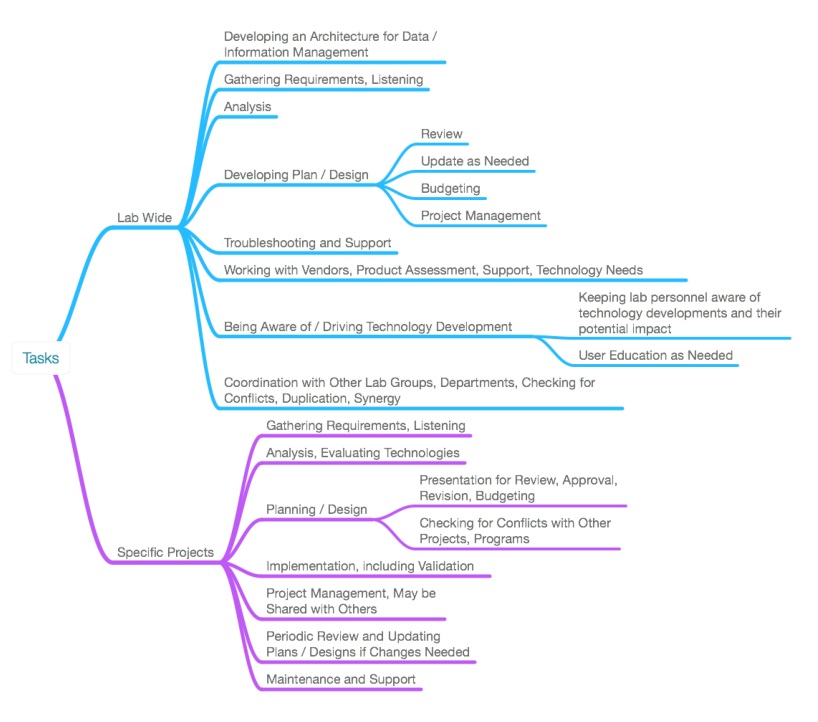

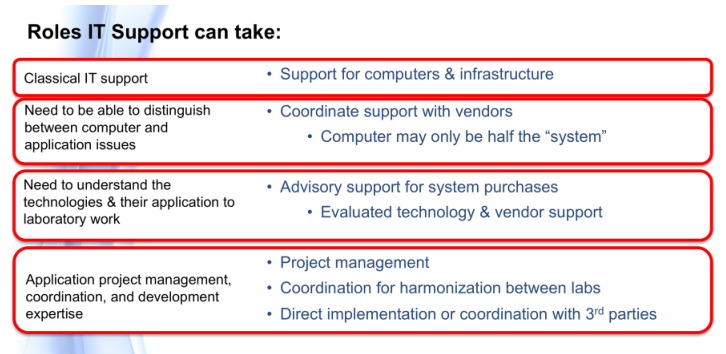

“Making it work” depends on planning performed at a high enough level in the organization to encompass all affected facilities and departments, including information technology (IT) and facilities management. This wider perspective gives us the potential for synergistic operations across labs, consistent policies for facilities management and IT, and more effective use of outside resources (e.g., lab information technology support staff [LAB-IT], laboratory automation engineers [LAEs][d], equipment vendors, etc.).

We need to apply the same diligence to planning lab operations as we do any other critical corporate resource. Planning provides a structure for enabling effective and successful lab operations.

Introduction to this section

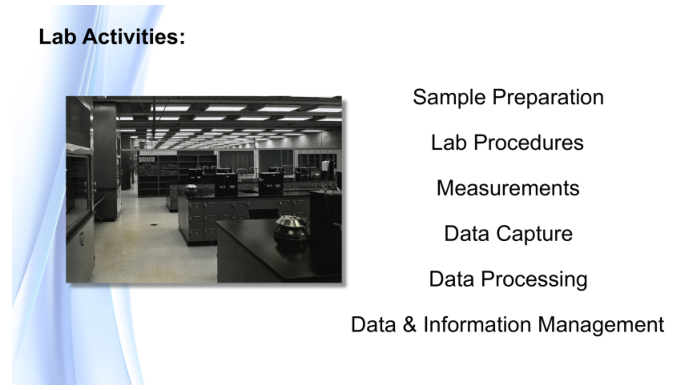

The common view of science laboratories is that of rooms filled with glassware, lab benches, and instruments being used by scientists to carry out experiments. While this is a reasonable perspective, what isn’t as visually obvious is the end result of that work: the development of knowledge, information, and data.

The progress of laboratory work, as well as the planning, documentation, and analytical results related to that work, has been recorded in paper-based laboratory notebooks for generations, and people are still using them today. However, these aren't the only paper records that have existed and are still in use; scientists also depend on charts, log books, administrative records, reports, indexes, and reference material. The latter half of the twentieth century introduced electronics into the lab and with it electronic recording in the form of computers and data storage systems. Early adopters of these technologies had to extend their expertise into the information technology realm because there were few people who understood both these new devices and their application to lab work; you had to be an expert in both laboratory science and computer science.

In the 1980s and 90s, computers became commonplace and where once you had to understand hardware, software, operating systems, programming and application packages, you then simply had to know how to turn them on; no more impressive arrays of blinking lights, just a blinking cursor waiting for you to do something.

As systems gained ease-of-use, however, we lost the basic understanding of what these systems were and what they did, that they had faults, and that if we didn’t plan for their effective use and counter those faults, we were opening ourselves to unpleasant surprises. The consequences at times were system crashes, lost data, and a lack of a real understanding of how the output of an instrument was transformed into a set of numbers, which meant we couldn’t completely account for the results we were reporting.

We need to step back, take control, and institute effective technology planning and management, with appropriate corresponding education, so that the various data we are putting into laboratory informatics technologies have the desired outcome. We need to ensure that these technologies are providing a foundation for improving laboratory operations efficiency and a solid return on investment (ROI), while substantively advancing your business' ability to work and be productive. That's the purpose of the work we'll be discussing.

The point of planning

The point of planning and technology management is pretty simple: to ensure ...

- that the right technologies are in people's hands when they need them, and

- that those technologies complement each other as much as possible.

These are straightforward statements with a lot packed into them.

Regarding the first point, the key words are “the right technologies.” In order to define what that means, lab personnel have to understand the technologies in question and how they apply to their work. If those personnel have used or been taught about the technologies under consideration, it should be easy enough to do. However, laboratory informatics doesn’t fall into that basket of things. The level of understanding has to be more than superficial. While personnel don’t have to be software developers, they do have to understand what is happening within informatics systems, and how data processing handles their data and produces results. Determining the “right technologies” depends on the quality and depth of education possessed by lab personnel, and eventually by lab information technology support staff (LAB-IT) as they become involved in the selection process.

The second point also has a lot buried inside it. Lab managers and personnel are used to specifying and purchasing items (e.g., instruments) as discrete tools. When it comes to laboratory informatics, we’re working with things that connect to each other, in addition to performing a task. When we explore those connections, we need to assess how they are made, what we expect to gain, what compatibility issues exist, how to support them, how to upgrade them, what their life cycle is, etc. Most of the inter-connected devices people encounter in their daily lives are things that were expected to be connected with using a limited set of choices; the vendors know what those choices are and make it easy to do so, or otherwise their products won’t sell. The laboratory technology market, on the other hand, is too open-ended. The options for physical connections might be there, but are they the right ones, and will they work? Do you have a good relationship with your IT people, and are they able to help (not a given)? Again, education is a major factor.

Who is responsible for laboratory technology planning and management (TPM)?

When asking who is responsible for TPM, the question really is "who are the TPM stakeholders," or "who has a vested interest in seeing TPM prove successful?"

- Corporate or organizational management: These stakeholders set priorities and authorize funding, while also rationalizing and coordinating goals between groups. Unless the organization has a strong scientific base, they may not appreciate the options and benefits of TPM in lab work, or the possibilities of connecting the lab into the rest of the corporate data structure.

- Laboratory management: These stakeholders are responsible for developing and implementing plans, as well as translating corporate goals into lab priorities.

- Laboratory personnel: These stakeholders are the ones that actually do the work. As such, they are in the best position to understand where technologies can be applied. They would also be relied on to provide user requirements documents for new projects and meet both internal and external (e.g., Food and Drug Administration [FDA], Environmental Protection Agency [EPA], International Organization for Standardization [ISO], etc.) performance guidelines.

- IT management and their support staff: While these stakeholders' traditional role is the support of computers, connected devices (e.g., printers, etc.) and network infrastructure, they may also be the first line of support for computers connected to lab equipment. IT staff either need to be educated to meet that need and support lab personnel, or have additional resources available to them. They may also be asked to participate in planning activities as subject matter experts on computing hardware and software.

- LAB-IT specialists: These stakeholders act as the "additional resources" alluded to in the previous point. These are crossover specialists that span the lab and IT spaces and can provide informed support to both. In most organizations, aside from large science-based companies, this isn’t a real "position," although once stated, its role is immediately recognized. In the past, I’ve also referenced these stakeholders as being “laboratory automation engineers.”[6]

- Facility management: These stakeholders need to ensure that the facilities support the evolving state of laboratory workspace requirements as traditional formats change to support robotics, instrumentation, computers, material flow, power, and HVAC requirements.

Carrying out this work is going to rely heavily on expanding the education of those participating in the planning work; the subject matter goes well beyond material covered in degree programs.

Why put so much effort into planning and technology management?

Earlier we mentioned paper laboratory notebooks, the most common recording device since scientific research began (although for sheer volume, it may have been eclipsed by computer hard drives). Have you ever wondered about the economics of laboratory notebooks? Cost is easy to understand, but the value of the data and information that is recorded there requires further explanation.

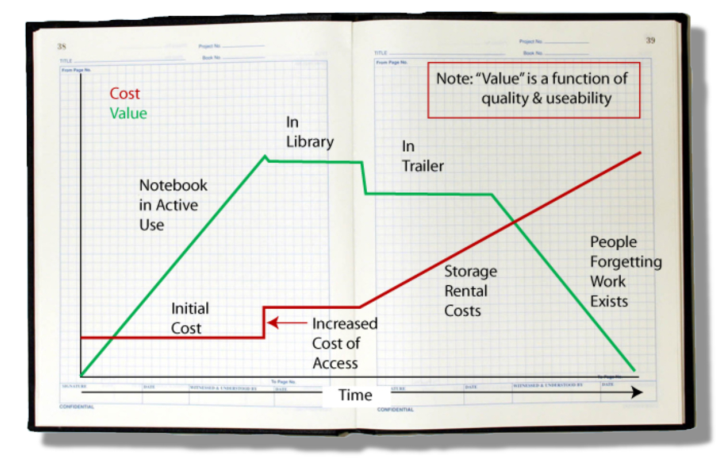

The value of the material recorded in a notebook depends on two key factors: the quality of the work and an inherent ability to put that documented work to use. The quality of the work is a function of those doing the work, how diligent they are, and the veracity of what has been written down. The inherent ability to use it depends upon the clarity of the writing, people’s ability to understand it without recourse to the author, and access to the material. That last point is extremely important. Just by glancing at Figure 1, you can figure out where this is going.

As a scientist’s notebook fills with entries, it gains value because of the content. Once filled, it reaches an upper limit and is placed in a library. There it takes a slight drop in value because its ease-of-access has changed; it isn’t readily at hand. As library space fills, the notebooks are moved to secondary storage (in one company I worked at, secondary storage consisted of trailers in a parking lot). Costs go up due to the cost of owning or renting the secondary storage and the space the notebooks take. The object's value drops, not because of the content but due to the difficulty in retrieving that content (e.g., which trailer? which box?). Unless the project is still active, the normal turn-over of personnel (e.g., via promotions, movement around the company, leaving the company) means that institutional memory diminishes and people begin to forget the work exists. If few researchers can remember it, find it, and access it, the value drops regardless of the resources that went into the work. That is compounded by the potential for physical deterioration of the object (e.g., water damage, mice, etc.).

Preventing the loss of access to the results of your investment in R&D projects will rely on information technology. That reliance will be built upon planning an effective informatics environment, which is precisely where this discussion is going. How is putting your lab results into a computer system any different from keeping them in a paper-based laboratory notebook? There are obvious advantages like faster searching, but in terms of the risks just discussed, not much is different; you still have essentially a single point of failure, unless you plan for that eventuality. That is the fundamental difference and what will drive the rest of this writing:

- Planning builds in reliability, security, and protection against loss. (Oh, and it allows us to work better, too!)

You could plan for failure in a paper-based system by making copies, but those copies still represent paper that has to be physically managed. With electronic systems, we can plan for failure by using automated backup procedures that make faithful copies, as many as we’d like, at low cost. This issue isn’t unique to laboratory notebooks; it is a problem for any organization that depends on paper records.

The difference between writing on paper and using electronic systems isn’t limited to how the document is realized. If you were to use a typewriter, the characters would show up on the paper and you'd be able to read them; all you needed was the ability to read (which could include braille formats) and understand what was written. However, if you were using a word processor, the keystrokes would be captured by software, displayed on the screen, placed in the computer’s memory, and then written to storage. If you want to read the file, you need something—software—to retrieve it from storage, interpret the file contents, determine how to display it, and then display it. Without that software the file is useless. A complete backup process has to include the software needed to read the file, plus all the underlying components that it depends upon. You could correctly argue that the hardware is required as well, but there are economic tradeoffs as well as practical ones; you could transfer the file to other hardware and read it there for example.
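The backup idea described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not a recommended backup product or procedure: the function names (`sha256_of`, `back_up`), the manifest layout, and the `reader_software` label are all hypothetical. The sketch copies a file to several locations, checks that each copy is a faithful one by comparing checksums, and writes a small manifest recording which software is needed to read the file.

```python
import hashlib
import json
import shutil
import tempfile
from pathlib import Path
from typing import List


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up(source: Path, destinations: List[Path], reader_software: str) -> dict:
    """Copy `source` into every destination folder and verify each copy.

    `reader_software` is a free-text note (hypothetical field) naming the
    application and version needed to open the file later.
    """
    original_hash = sha256_of(source)
    copies = []
    for folder in destinations:
        folder.mkdir(parents=True, exist_ok=True)
        copy_path = folder / source.name
        shutil.copy2(source, copy_path)  # copies contents and timestamps
        if sha256_of(copy_path) != original_hash:
            raise IOError(f"Backup copy {copy_path} does not match the original")
        copies.append(str(copy_path))

    manifest = {
        "file": source.name,
        "sha256": original_hash,
        "reader_software": reader_software,
        "copies": copies,
    }
    # Store the manifest next to every copy so each backup is self-describing.
    for folder in destinations:
        manifest_path = folder / (source.name + ".manifest.json")
        manifest_path.write_text(json.dumps(manifest, indent=2))
    return manifest


if __name__ == "__main__":
    # Demonstration in a throwaway directory; real backups would target
    # separate disks or sites.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        data_file = root / "results.csv"
        data_file.write_text("sample,pH\nA1,6.5\n")
        info = back_up(
            data_file,
            [root / "backup_a", root / "backup_b"],
            "hypothetical-spreadsheet 1.0",
        )
        print(json.dumps(info, indent=2))
```

The manifest only names the software a reader would need; it does not preserve that software or the components it depends on, which still has to be planned for separately.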

That point brings us to the second subject of this writing: technology management. What do I have to do to make sure that I have the right tools to enable me to work? The problem is simple enough when all you're concerned with is writing and preparing accompanying graphics. Upon shifting the conversation to laboratory computing, it gets more complicated. Rather than being concerned with one computer and a few software packages, you have computers that acquire and process data in real-time[e], transmit it to other computers for storage in databases, and systems that control sample processing and administrative work. Not only do the individual computer systems and the equipment and people they support have to work well, but also they have to work cooperatively, and that is why we have to address planning and technology management in laboratory work.

That brings us to a consideration of what lab work is all about.

Different ways of looking at laboratories

When you think about a “laboratory,” a lot depends on your perspective: are you on the outside looking in, do you work in a lab, or are you taking that high school chemistry class? When someone walks into a science laboratory, the initial impression is that of a confusing collection of stuff, unless they're familiar with the setting. “Stuff” can consist of instruments, glassware, tubing, robots, incubators, refrigerators and freezers, and even petri dishes, cages, fish tanks, and more depending on the kind of work that is being pursued.

From a corporate point of view, a "laboratory" can appear differently and have different functions. Possible corporate views of the laboratory include:

- A laboratory is where questions are studied, which may support other projects or provide a source of new products, acting as basic and applied R&D. What is expected out of these labs is the development of new knowledge, usually in the form of reports or other documentation that can move a project forward.

- A laboratory acts as a research testing facility (e.g., analytical, physical properties, mechanical, electronics, etc.) that supports research and manufacturing through the development of new test methods, special analysis projects, troubleshooting techniques, and both routine and non-routine testing. The laboratory's results come in the form of reports, test procedures, and other types of documented information.

- A laboratory acts as a quality assurance/quality control (QA/QC) facility that provides routine testing, producing information in support of production facilities. This can include incoming materials testing, product testing, and product certification.

Typically, stakeholders outside the lab are looking for some form of result that can be used to move projects and other work forward. They want it done quickly and at low cost, but also want the work to be of high quality and reliability. Those considerations help set the goals for lab operations.

Within the laboratory there are two basic operating modes or workflows: project-driven or task-driven work. With project-driven workflows, a project goal is set, experiments are planned and carried out, the results are evaluated, and a follow-up course of action is determined. This all requires careful documentation for the planning and execution of lab work. This can also include developing and revising standard operating procedures (SOPs). Task-driven workflows, on the other hand, essentially depend on the specific steps of a process. A collection of samples needs to be processed according to an SOP, and the results recorded. Depending upon the nature of the SOP and the number of samples that have to be processed, the work can be done manually, using instruments, or with partial or full automation, including robotics. With the exception of QA/QC labs, a given laboratory can use a combination of these modes or workflows over time as work progresses and the internal/external resources become available. QA/QC labs are almost exclusively task-driven; contract testing labs are as well, although they may take on project-driven work.

Within the realm of laboratory informatics, project-focused work centers on the electronic laboratory notebook (ELN), which can be described as a lab-wide diary of work and results. Task-driven work is organized around the laboratory information management system (LIMS)—or laboratory information system (LIS) in clinical lab settings—which can be viewed as a workflow manager of tests to be done, results to be recorded, and analyses to be finalized. Both of these technologies replaced the paper-based laboratory notebook discussed earlier, coming with considerable improvements in productivity. And although ELNs are considerably more expensive than paper systems, the short- and long-term benefits of an ELN overshadow that cost issue.
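To make the "workflow manager" description of a LIMS a little more concrete, the following Python sketch models a task-driven worklist: tests to be done, results to be recorded, and analyses to be finalized. It is a toy illustration only and does not reflect how any commercial LIMS or LIS is implemented; the class names (`AssayRequest`, `Worklist`), the SOP identifiers, and the sample IDs are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AssayRequest:
    """One test to be run on one sample according to an SOP."""
    sample_id: str
    sop: str                        # hypothetical SOP identifier
    result: Optional[float] = None  # filled in once the test is run
    approved: bool = False          # set when the analysis is finalized


@dataclass
class Worklist:
    """A minimal task-driven queue of test requests."""
    requests: List[AssayRequest] = field(default_factory=list)

    def add(self, sample_id: str, sop: str) -> AssayRequest:
        request = AssayRequest(sample_id, sop)
        self.requests.append(request)
        return request

    def _find(self, sample_id: str, sop: str) -> AssayRequest:
        for request in self.requests:
            if request.sample_id == sample_id and request.sop == sop:
                return request
        raise KeyError(f"No request for sample {sample_id} under {sop}")

    def pending(self) -> List[AssayRequest]:
        """Tests still waiting for a result."""
        return [r for r in self.requests if r.result is None]

    def record_result(self, sample_id: str, sop: str, value: float) -> None:
        self._find(sample_id, sop).result = value

    def finalize(self, sample_id: str, sop: str) -> None:
        """Mark an analysis as approved; a result must exist first."""
        request = self._find(sample_id, sop)
        if request.result is None:
            raise ValueError("Cannot finalize a test with no recorded result")
        request.approved = True


if __name__ == "__main__":
    worklist = Worklist()
    worklist.add("S-001", "SOP-pH")
    worklist.add("S-002", "SOP-pH")
    worklist.record_result("S-001", "SOP-pH", 6.5)
    worklist.finalize("S-001", "SOP-pH")
    print([r.sample_id for r in worklist.pending()])
```

An ELN, by contrast, would be organized around dated entries describing plans, observations, and conclusions rather than around a queue of tests.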

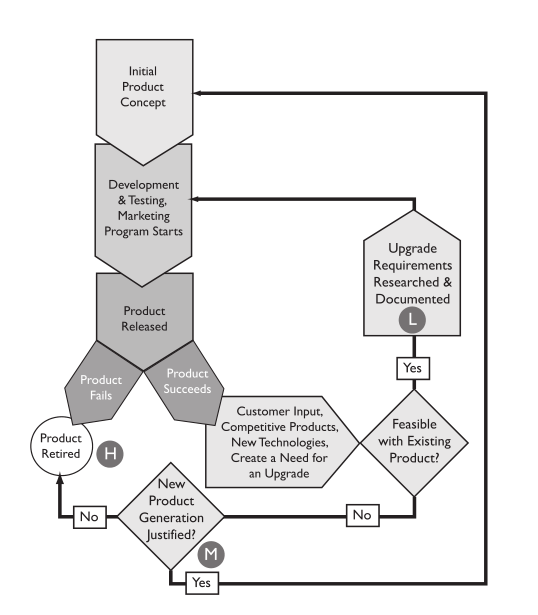

Labs in transition, from manual operation to modern facilities

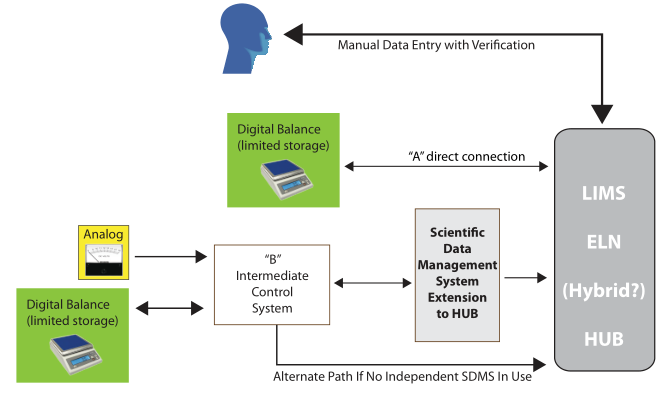

Laboratories didn’t start with lots of electronic components; they began with people, lab benches, glassware, Bunsen burners, and other equipment. Lab operations were primarily concerned with people's ability to work. The technology was fairly simple by today’s standards (Figure 2), and an individual’s skills were the driving factor in producing quality results.

For the most part, the skills you learned in school were the skills you needed to be successful here as far as technical matters went; management education was another issue. That changed when electronic instrumentation became available. Analog instruments such as scanning spectrophotometers, chromatographs, mass spectrometers, differential scanning calorimeters, tensile testers, and so on introduced a new career path to laboratory work: the instrument specialist, who combined an understanding of the basic science with an understanding of the instrument’s design, as well as how to use it (and modify it where needed), maintain it, troubleshoot issues, and analyze the results. Specialization created a problem for schools: they couldn’t afford all the equipment, find knowledgeable instructors, or make room in the curriculum for the expanding subject matter. Schools were no longer able to educate people to meet the requirements of industry and graduate-level academia. And then digital electronics happened. Computers first became attached to instruments, and then incorporated into the instrumentation.[f]

The addition of computer hardware and software to an instrument increased the depth of specialization in those techniques. Not only did you have to understand the science noted above, but also the use of computer programs used to work with the instrument, how to collect the data, and how to perform the analysis. An entire new layer of skills was added to an already complex subject.

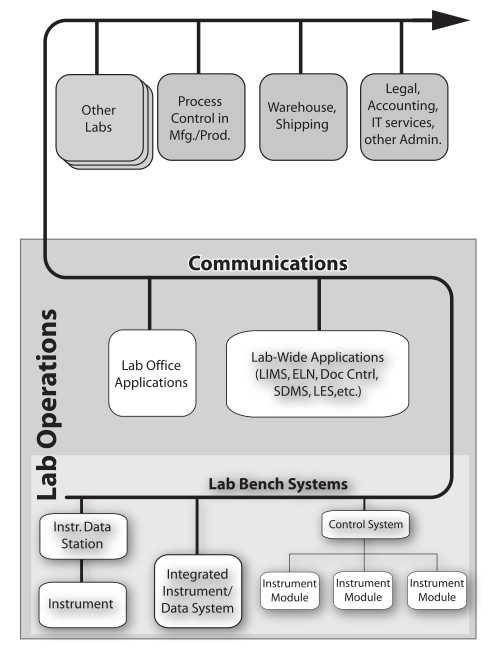

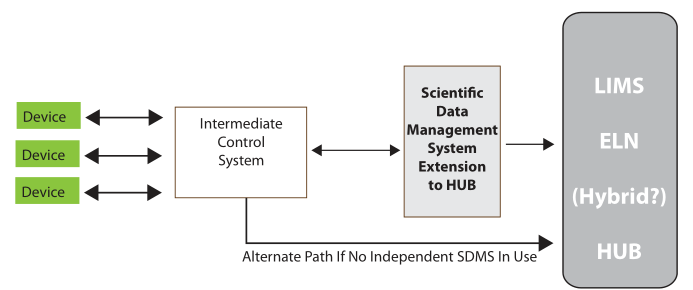

The latest level of complexity added to laboratory operations has been the incorporation of LIMS, ELNs, scientific data management systems (SDMS), and laboratory execution systems (LES) either as stand-alone modules or combined into more integrated packages or "platforms."

There's a plan for that?

It is rare to find a lab that has an informatics plan or strategy in place before the first computer comes through the door; those machines enter as part of an instrument-computer control system. Several computers may use that route to become part of the lab's technology base before people realize that they need to start taking lab computing seriously, including how to handle backups, maintenance, support, etc.

First, computers come into the lab, and then the planning begins, often months later, as an incremental effort. That is the complete reverse of how things need to be developed. Planning is essential as soon as you decide that a lab space will be created. That almost never happens, in part because no one has told you that it is required, let alone why or how to go about it.

Thinking about a model for lab operations

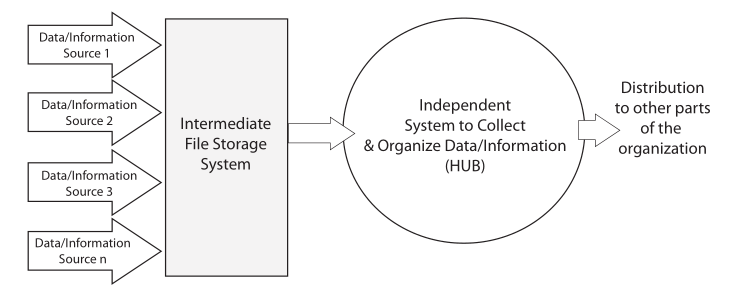

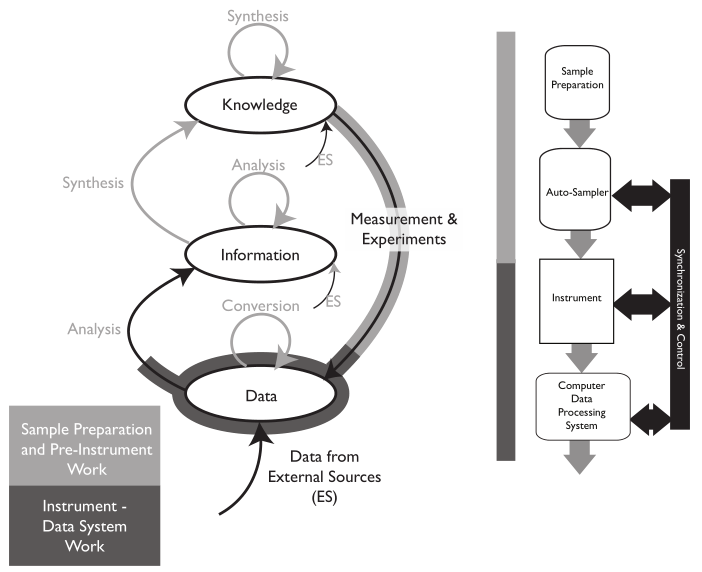

The basic purpose of laboratory work is to answer questions. “How do we make this work?” “What is it?” “What’s the purity of this material?” These questions and others like them occur in chemistry, physics, and the biological sciences. Answering those questions is a matter of gathering data and information through observation and experimental work, organizing it, analyzing it, and determining the next steps needed as the work moves forward (Figure 3). Effective organization is essential, as lab personnel will need to search data and information, extract it, move it from one data system to another for analysis, make decisions, update planning, and produce interim and ultimately final reports.


Once the planning is done, scientific work generally begins with collecting observations and measurements (Data/Information Sources 1–4, Figure 3) from a variety of sources. Lab bench work usually involves instrumentation, and many instruments have computer controls and data systems as part of them. This is the more visible part of lab work and the one that matches people’s expectations for a “scientific lab.” This is where most of the money is spent on equipment, materials, and people’s expertise and time. All that expenditure of resources results in “the pH of the glowing liquid is 6.5,” “the concentration of iron in the icky stuff is 1500 ppm,” and so on. That’s the end result of all those resources, time, and effort put into the scientific workflow. That’s why you built a million-dollar facility (in some spheres of science such as astronomy, high energy physics, and the space sciences, the cost of collection is significantly higher). So what do you do with those results? Prior to the 1970s, the collection points were paper: forms, notebooks, and other documents, all with their earlier discussed issues.

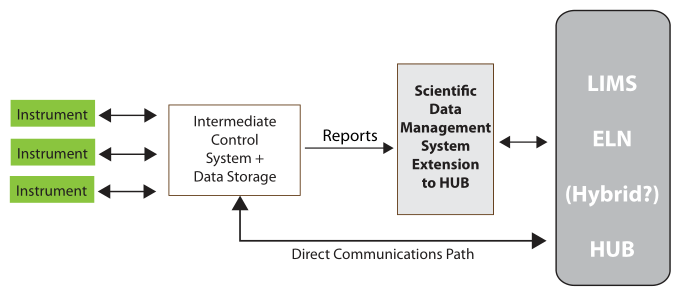

The material on those instrument data systems needs to be moved to an intermediate system for long-term storage and reference (the second step of Figure 3). This is needed because those initial data systems may fail, be replaced, or be added to as the work continues. After all, the data and information they’ve collected need to be preserved, organized, and managed to support continued lab work.

The analyzed results need to be collected into a reference system that is the basis of long-term analysis, management/administration work, and reporting. This last system in the flow is the central hub of lab activities; it is also the distribution point for material sent to other parts of the organization (the third and fourth stages of Figure 3). While it is natural for scientists to focus on the production of data and information, the organization and centralized management of the results of laboratory work needs to be a primary consideration. That organization will be focused on short- and long-term data analysis and evaluation. The results of this get used to demonstrate the lab's performance towards meeting its goals, and it will show those investing in your work that you’ve got your management act together, which is useful when looking for continued support.

Today, those systems come in two basic forms: LIMS and ELN. The details of those systems are the subject of a number of articles and books.[7] Without getting into too much detail:

- LIMS are used to support testing labs managing sample workflows and planning, as well as cataloging results (e.g., short text and numerical information).

- ELNs are usually found in research, functioning as an electronic diary of lab work for one or more scientists and technicians. The entries may contain extensive textual material, numerical entries, charts, graphics, etc. The ELN is generally more flexible than a LIMS.

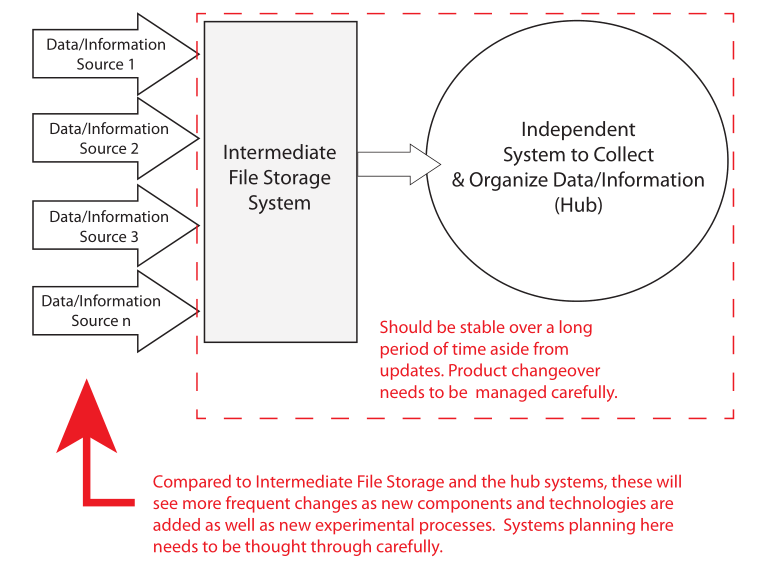

That distinction is simplistic; some labs support both activities and need both types of systems, or even a hybrid package. However, the description is sufficient to get us to the next point: the lifespan of systems varies, depending on where you are looking in Figure 3's model. Figure 4 gives a comparison.


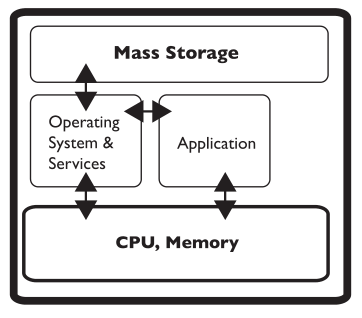

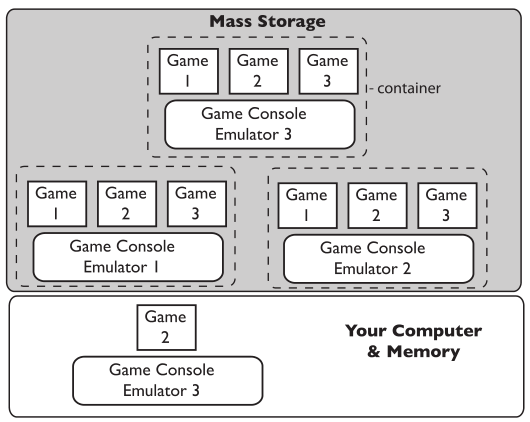

The experimental methods/procedures used in lab work will change over time as the needs of the lab change. Older instruments may be updated and new ones introduced. Retirement is a problem, particularly if data systems are part of the equipment. You have to have access to the data. That need will live on long past the equipment's life. That is one reason that moving data and information to an intermediate system like an SDMS is important. However, in some circumstances, even that isn’t going to be sufficient (regulated industries where the original data structures and software that generated them need to be preserved as an operating entity). In those cases, you may have old computers stacked up just in case you need access to their contents. A better way is to virtualize the systems as containers on servers that support a virtualized environment.

Virtualization (making an electronic copy of a computer system and running it on a server) is potentially a useful technology in lab work; while it won’t participate in day-to-day activities, it does have a role. Suppose you have an instrument-data system that is being replaced or retired. Maybe the computer is showing signs of aging or failing. What do you do with the files and software that are on the computer portion of the combination? You can’t dispose of them because you may need access to those data files and software later. On the other hand, do you really want to collect computer systems that have to be maintained just to have access to the data if and when you need it? Instead, virtualization is a software/hardware technology that allows you to make a complete copy of everything that is on that computer (including operating system files, applications, and data files) and store it in one big file referred to as a “container.” That container can be moved to a computer that is a virtual server and has software that emulates various operating environments, allowing the software in the container to run as if it were on its own computer hardware. A virtual server can support a lot of containers, and the operating systems in those containers can be updated as needed. The basic idea is that you don’t need access to a separate physical computer; you just need the ability to run the software that was on it. If your reaction to that is one of dismay and confusion, it’s time to buy your favorite IT person a cup of coffee and have a long talk. We’ll get into more details when we cover data backup issues.

Why is this important to you?

While the science behind producing results is the primary reason your lab exists, gaining the most value from the results is essential to the organization overall. That value is going to be governed by the quality of the results, ease of access, the ability to find and extract needed information easily, and a well-managed K/I/D architecture. All of that addresses a key point from management’s perspective: return on investment or ROI. If you can demonstrate that your data systems are well organized and maintained, and that you can easily find and use the results from experimental work and contribute to advancing the organization’s goals, you’ll make it easier to demonstrate solid ROI and gain funding for projects, equipment, and people needed to meet your lab's goals.

The seven goals of planning and managing lab technologies

The preceding material described the need for planning and managing lab technologies, and making sure lab personnel are qualified and educated to participate in that work. The next step is the actual planning. There are at least two key aspects to that work: planning activities that are specific and unique to your lab(s) and addressing broader scope issues that are common to all labs. The discussion found in the rest of this guide is going to focus on the latter points.

Effective planning is accomplished by setting goals and determining how you are going to achieve them. The following sections of this guide look at those goals, specifically:

- Supporting an environment that fosters productivity and innovation

- Developing high-quality data and information

- Managing knowledge, information, and data effectively, putting them in a structure that encourages use and protects value

- Ensuring a high level of data integrity at every step

- Addressing security throughout the lab

- Acquiring and developing "products" that support regulatory requirements

- Addressing systems integration and harmonization

The material below begins the sections on goal setting. Some of these goals are obvious and understandable; others, like “harmonization,” are less so. The goals are provided as an introduction rather than an in-depth discussion. The intent is to offer something suitable for the purpose of this material and a basis for a more detailed exploration at a later point. The intent of these goals is not to tell you how to do things, but rather what things need to be addressed. The content is provided as a set of questions that you need to think about. The answers aren't mine to give, but rather yours to develop and implement; it's your lab. In many cases, developing and implementing those answers will be a joint effort by all stakeholders.

First goal: Support an environment that fosters productivity and innovation

In order to successfully plan for and manage lab technologies, the business environment should ideally be committed to fostering a work environment that encourages productivity and innovation. This requires:

- proven, supportable workflow methodologies;

- educated personnel;

- fully functional, inter-departmental cooperation;

- management buy-in; and

- systems that meet users' needs.

This is one of those statements that people tend to read, say “sure,” and move on. But before you do that, let’s take a look at a few points. Innovation may be uniquely human (not even going to consider AI), and the ability to be “innovative” may not be universal.

People need to be educated, be able to separate facts from “beliefs,” and question everything (which may require management support). Innovation doesn’t happen in a highly structured environment; you need the freedom to question, challenge, etc. You also need the tools to work with. The inspiration that leads to innovation can happen anywhere, anytime. All of a sudden all the pieces fit. And then what? That is where a discussion of tools and this work come together.

If a sudden burst of inspiration hits, you want to act on it now and not after traveling to an office, particularly if it is a weekend or vacation. You need access to knowledge (e.g., documents, reports), information, and data (K/I/D). In order to do that, a few things have to be in place:

- Business and operations K/I/D must be accessible.

- Systems security has to be such that a qualified user can gain access to K/I/D remotely, while preventing its unauthorized use.

- Qualified users must have the hardware and software tools required to access the K/I/D, work with it, and transmit the results of that work to whoever needs to see it.

- Qualified users must also be able to remotely initiate actions such as testing.

Those elements depend on a well-designed laboratory and corporate informatics infrastructure. Laboratory infrastructure is important because that is where the systems are that people need access to, and corporate infrastructure is important since corporate facilities have to provide access, controls, and security. Implementation of those corporate components has to be carefully thought through; they must be strong enough to frustrate unwarranted access (e.g., multi-factor logins) while allowing people to get real work done.

All of this requires flexibility and trust in people, an important part of corporate culture. This will become more important as society adjusts to new modes of working (e.g., working online due to a pandemic) and the realization that the fixed format work week isn’t the only way people can be productive. For example, working from home or off-site is increasingly commonplace. Laboratory professionals work in two modes: intellectual, which can be done anywhere, and the lab bench, where physical research tasks are performed. We need to strike a balance between those modes and the need for in-person vs virtual contact.

Let's take another look at the previous Figure 3, which offered one possible structure for organizing lab systems:


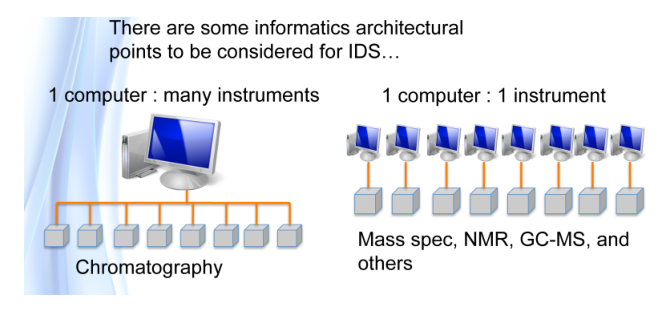

This use of an intermediate file storage system like an SDMS and the aggregation of some instruments to a common computer (e.g., one chromatographic data system for all chromatographs vs. one per instrument) becomes more important for two reasons: 1. it limits the number of systems that have to be accessed to search, organize, extract, and work with K/I/D, and 2. it makes it easier to address security concerns. There are additional reasons why this organization of lab systems is advantageous, but we’ll cover those in later installments. The critical point here is that a sound informatics architecture is key to supporting innovation. People need access to tools and K/I/D when they are working, regardless of where they are working from. As such, those same people need to be well-versed in the capabilities of the systems available to them, how to access and use them, and how to recognize “missing technologies”: capabilities they need but don’t have access to, or that simply don't exist.

Imagine this. A technology expert consults for two large organizations, one tightly controlled (Company A), the other with a liberal view of trusting people to do good work (Company B). In the first case, getting work done can be difficult, with the expert fighting through numerous reviews, sign-offs, and politics. Company A has a stated philosophy that they don’t want to be the first in the market with a new product, but would rather be a strong number two. They justify their position through the cost of developing markets for new products: let someone else do the heavy lifting and follow behind them. This is not a culture that spawns innovation. Company B, however, thrives on innovation. While processes and procedures are certainly in place, the company has a more relaxed philosophy about work assignments. If the expert has a realizable idea, Company B lets them run with it, as long as they complete their assigned workload in a timely fashion. This is what spurs the human side of innovation.

Second goal: Develop high-quality data and information

Asking staff to "develop high-quality data and information" seems like a pretty obvious point, but this is where professional experience and the rest of the world part company. Most of the world treats “data” and “information” as interchangeable words. Not here.

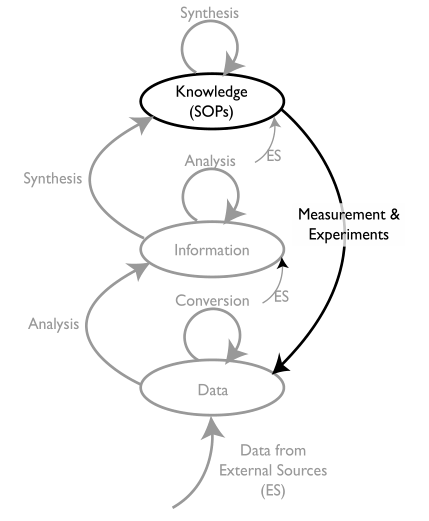

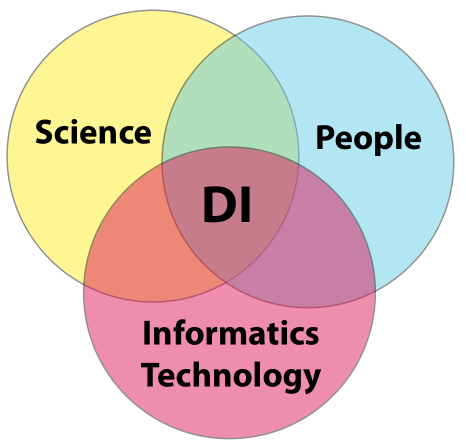

There are three key words that are going to be important in this discussion of goals: knowledge, information, and data (K/I/D). We’ll start with “knowledge”. The type of knowledge we will be looking at is at the laboratory/corporate level, the stuff that governs how a laboratory operates, including reports, administrative material, and most importantly standard operating procedures (SOPs). SOPs tell us how lab work is carried out via its methods, procedures, etc. (This subject parallels the topic of “data integrity,” which will be covered later.) Figure 5 positions K/I/D with respect to each other within laboratory processes.


The diagram in Figure 5 is a little complicated, and we’ll get into the details as the material develops. For the moment, we’ll concentrate on the elements in black.

As noted above, SOPs guide activities within the lab. As work is defined—both research and testing—SOPs have to be developed so that people know how to carry out their tasks consistently. Our first concern then is proper management of SOPs. Sounds simple, but in practice it isn’t. It’s a matter of first developing and updating the procedures, documenting them, and then managing both the documents and the lab personnel using them.

When developing, updating, and documenting procedures, a lab will primarily be looking at the science it's working with and how regulatory requirements affect it, particularly in research environments. Once developed, those procedures will eventually need to be updated. But why is an update to a procedure needed? What will the effects of the update be based on the changes that were made, and how do the results of the new version compare to the previous version? That last point is important, and to answer it you need a reference sample that has been run repeatedly under the older version so that you have a solid history of the results (i.e., a control chart) over time. You also need the ability to run that same reference sample under the new procedure to show that there are no differences, or that differences can be accounted for. If differences persist, what do you do about the previous test results under the old procedure?

The idea of running one or more stable reference samples periodically is a matter of instituting statistical process control over the analysis process. It can show that a process is under control, detect drift in results, and demonstrate that the lab is doing its job properly. If multiple analysts are doing the same work, it can also reveal how their work compares and if there are any problems. It is in effect looking over their shoulders, but that just comes with the job. If you find that the amount of reference material is running low, then phase in a replacement, running both samples in parallel to get a documented comparison with a clean transition from one reference sample to another. It’s a lot of work and it’s annoying, but you’ll have a solid response when asked “are you confident in these results?” You can then say, “Yes, and here is the evidence to back it up.”
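As a rough illustration of the control-chart idea described above, the following sketch derives control limits from a history of reference-sample results and flags new runs that fall outside them. The data values, the function names, and the choice of mean ± 3 standard deviations are assumptions made for illustration; a lab's own quality procedures would define the actual rules.

```python
from statistics import mean, stdev


def control_limits(history, k=3.0):
    """Return (center, lower, upper) from historical reference-sample results.

    Assumes limits of mean +/- k sample standard deviations (k=3 is a common
    convention, not a requirement of any particular method).
    """
    center = mean(history)
    spread = stdev(history)
    return center, center - k * spread, center + k * spread


def out_of_control(results, lower, upper):
    """Return (index, value) pairs for results that fall outside the limits."""
    return [(i, x) for i, x in enumerate(results) if x < lower or x > upper]


# Hypothetical reference-sample results (e.g., ppm) under the current procedure.
history = [100.2, 99.8, 100.1, 99.9, 100.0, 100.3, 99.7, 100.0]
center, lower, upper = control_limits(history)

# Hypothetical new runs of the same reference sample, e.g., after an SOP update.
new_runs = [100.1, 99.9, 101.5]
flagged = out_of_control(new_runs, lower, upper)

print(f"center={center:.2f} limits=({lower:.2f}, {upper:.2f}) flagged={flagged}")
```

The same two functions could be applied per analyst or per instrument to compare how results from different sources track the same reference sample.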

After the SOPs have been documented, they must then be effectively managed and implemented. First, take note of the education and experience required for lab personnel to properly implement any SOP. Periodic evaluation (or even certification) would be useful to ensure things are working as they should. This is particularly true of procedures that aren’t run often, as people may forget things.

Another issue of concern with managing SOPs is how to manage versioning. Consider two labs. Lab 1 is a well-run lab. When a new procedure is issued, the lab secretary visits each analyst, takes their copy of the old method, destroys it, provides a copy of the new one, requires the analyst sign for receipt, and later requires a second signature after the method has been reviewed and understood. Additional education is also provided on an as-needed basis. Lab 2 has good intentions, but it's not as proactive as Lab 1. Lab 2 retains all documents on a central server. Analysts are able to copy a method to their machines and use it. However, there is no formalized method of letting people know when a new method is released. At any given time there may be several analysts running the same method using different versions of the related SOP. The end result is having a mix of samples run by different people according to different SOPs.

This comparison of two labs isn’t electronic versions vs. paper, but rather a formal management structure vs. a loose one. There’s no problem maintaining SOPs in an electronic format, as there are many benefits, but there shouldn’t be any question about the current version, and there should be a clear process for notifying people about updates while also ensuring that analysts are currently educated in the new method's use.
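The difference between the two labs can be sketched as a simple rule: an analyst may run a method only after signing for its current version. The class below is a hypothetical in-memory illustration (the `SopRegistry` name, the SOP identifier, and the analyst names are invented for this example); in practice this role would be filled by a document management system, LIMS, ELN, or LES.

```python
from dataclasses import dataclass, field


@dataclass
class SopRegistry:
    """Hypothetical registry tracking current SOP versions and sign-offs."""
    current: dict = field(default_factory=dict)        # sop_id -> current version
    acknowledged: dict = field(default_factory=dict)   # (analyst, sop_id) -> version signed for

    def release(self, sop_id, version):
        """Make `version` the only valid version of the SOP."""
        self.current[sop_id] = version

    def acknowledge(self, analyst, sop_id, version):
        """Record that an analyst has reviewed and signed for a version."""
        self.acknowledged[(analyst, sop_id)] = version

    def may_run(self, analyst, sop_id):
        """True only if the analyst has signed for the current version."""
        if sop_id not in self.current:
            return False
        return self.acknowledged.get((analyst, sop_id)) == self.current[sop_id]

    def out_of_date(self, sop_id):
        """Analysts whose signed version differs from the current one."""
        return sorted(
            analyst
            for (analyst, sid), version in self.acknowledged.items()
            if sid == sop_id and version != self.current[sop_id]
        )


registry = SopRegistry()
registry.release("SOP-014", 1)
registry.acknowledge("ana", "SOP-014", 1)
registry.acknowledge("ben", "SOP-014", 1)

# A new version is issued; only one analyst has signed for it so far.
registry.release("SOP-014", 2)
registry.acknowledge("ana", "SOP-014", 2)

print(registry.may_run("ana", "SOP-014"), registry.may_run("ben", "SOP-014"))
print(registry.out_of_date("SOP-014"))
```

The `out_of_date` list is the piece Lab 2 is missing: a way to know who still needs to be notified and educated when a method changes.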

Managing this set of problems (analyst education, versions of SOPs, qualification of equipment, current reagents, etc.) was the foundation for one of the early ELNs, SmartLab by Velquest, now developed as an LES by Dassault Systèmes as part of the BIOVIA product line. And while Dassault's LES, and much of the BIOVIA product line, narrowly focuses on its intended market, the product remains suitable for any lab where careful control over procedure execution is warranted. This is important to note, as an LES is designed to guide a person through a procedure from start to finish, making it one step away from engaging in a full robotics system (robotics may play a role in stages of the process). The use of an LES doesn’t mean that personnel aren’t trusted or are deemed incompetent; rather, it is a mechanism for developing documented evidence that methods have been executed correctly. That evidence builds confidence in results.

LESs are available from several vendors, often as part of their LIMS or ELN offerings. Using any of these systems requires planning and scripting (a gentler way of saying “programming”), and the cost of implementation has to be balanced against the need (does the execution of a method require that level of sophistication) and ROI.

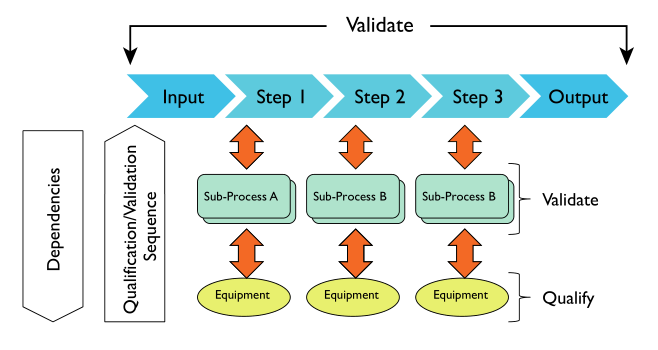

Up to this point, we’ve looked at developing and managing SOPs, as well as at least one means of controlling experiment/procedure execution. However, there are other ways of going about this, including manual and full robotics systems. Figure 6 takes us farther down the K/I/D model to elaborate further on experiment/procedure execution.[g]


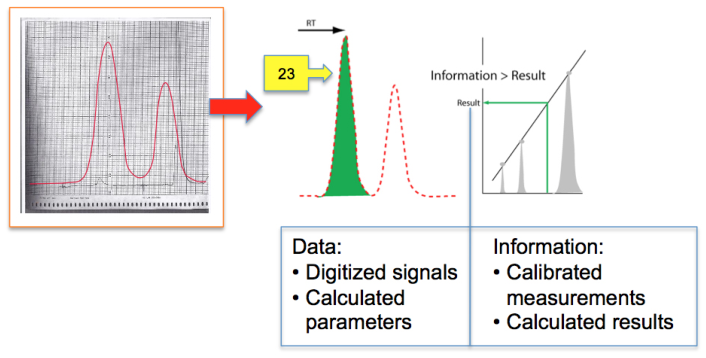

As we move from knowledge development and management (i.e., SOPs), and then on to sample preparation (i.e., pre-experiment), the next step is usually some sort of measurement by an instrument, whether it is a pH meter or a spectrometer, yielding your result. That brings us to two words we noted earlier: "data" and "information." We'll note the differences between the two using a gas chromatography system as an example (Figure 7), as it and other base chromatography systems are among the most widely used of upper-tier instrumentation and widely found in labs where chemical analysis is performed.


As we look at Figure 7, we notice to the right of the vertical blue line is an output signal from a gas chromatograph. This is what chromatographers analyzed and measured when they carried out their work. The addition of a computer made life easier by removing the burden of calculations, but it also added complexity to the work in the form of having to manage the captured electronic data and information. An analog-to-digital (A/D) converter transformed those smooth curves to a sequence of numbers that are processed to yield parameters that described the peaks, which in turn were used to calculate the amount of substance in the sample. Everything up to that last calculation (left of the vertical blue line) is “data,” a set of numerical values that, taken individually, have no meaning by themselves. It is only when we combine them with other data sets that we can calculate a meaningful result, which gives us “information.”

The paragraph above describes two different types of data:

1. the digitized detector output or "raw data," constituting a series of readings that could be plotted to show the instrument output; and

2. the processed digitized data that provides descriptors about the output, with those descriptors depending largely upon the nature of the instrument (in the case of chromatography, the descriptors would be peak height, retention time, uncorrected peak area, peak widths, etc.).

Both are useful and neither of them should be discarded; the fact that you have the descriptors doesn’t mean you don’t need the raw data. The descriptors are processed data that depend on user-provided parameters. Changing a parameter can change the processing and the values assigned to those descriptors. If there are accuracy concerns, you need the raw data as a backup. Since storage is cheap, there really isn’t any reason to discard anything, ever. (And in some regulatory environments, keeping raw data is mandated for a period of time.)

If you want to study the data and how it was processed to yield a result, you need more data, specifically the reference samples (standards) used to evaluate each sample. An instrument file by itself is almost useless without the reference material run with that sample. Ideally, you’d want a file that contains all the sample and reference data that was analyzed in one session. That might be a series of manual samples analyzed or an entire auto-sampler tray.
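To make the path from raw data to descriptors to information concrete, here is a minimal sketch using a synthetic trace. Everything in it is an assumption for illustration: the single Gaussian peak on a flat baseline, the function names, the trapezoidal area calculation, and the single-point external standard with a linear response. Real chromatography data systems use far more elaborate peak detection and calibration.

```python
import math


def make_trace(n=201, dt=0.01, center=1.0, width=0.05, height=1.0):
    """Synthetic 'raw data': one Gaussian peak on a zero baseline."""
    times = [i * dt for i in range(n)]
    signal = [height * math.exp(-((t - center) ** 2) / (2 * width ** 2)) for t in times]
    return times, signal


def describe_peak(times, signal):
    """Reduce raw data to descriptors: height, retention time, trapezoidal area."""
    i_max = max(range(len(signal)), key=signal.__getitem__)
    area = sum(
        (signal[i] + signal[i + 1]) / 2 * (times[i + 1] - times[i])
        for i in range(len(signal) - 1)
    )
    return {"height": signal[i_max], "retention_time": times[i_max], "area": area}


def concentration(sample_area, standard_area, standard_conc):
    """Single-point external standard; assumes response is proportional to amount."""
    return sample_area / standard_area * standard_conc


# Hypothetical reference standard of known concentration (10.0 ppm) and a sample.
standard = describe_peak(*make_trace(height=1.0))
sample = describe_peak(*make_trace(height=1.5))

# "Information": a result that only exists once sample and standard data are combined.
result_ppm = concentration(sample["area"], standard["area"], standard_conc=10.0)
print(sample, round(result_ppm, 3))
```

Note that the sample's descriptors alone say nothing about concentration; the result depends on the standard's data as well, which is why the two should be stored together.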

Everything we've discussed here positively contributes to developing high-quality data and information. When methods are proven and you have documented evidence that they were executed by properly educated personnel using qualified reagents and instruments, you then have the instrument data to support each sample result and any other information gleaned from that data.

You might wonder what laboratorians did before computers. They dealt with stacks of spectra, rolls of chromatograms, and laboratory notebooks, all on paper. If they wanted to find the data (e.g., a pen trace on paper) for a sample, they turned to the lab's physical filing system to locate it.[h] Why does this matter? That has to do with our third goal.

Third goal: Manage K/I/D effectively, putting them in a structure that encourages use and protects value

In the previous section we introduced three key elements of laboratory work: knowledge, information, and data (K/I/D). Each of these is a “database” structure (“data” in the general sense). We also looked at SOP management as an example of knowledge management, and distinguished “data” and “information” management as separate but related concerns. We also introduced flow diagrams (Figures 5 and 6) that show the relationship and development of each of those elements.

In order for those elements to justify the cost of their development, they have to be placed in systems that encourage utilization and thus retain their value. Modern informatics tools assist in many ways:

- Document management systems support knowledge databases (and some LIMS and ELNs inherently support document management).

- LIMS and ELNs provide a solid base for laboratory information, and they may also support other administrative and operational functions.

- Instrument data systems and SDMS collect instrument output in the form of reports, data, and information.

You may notice there is significant functional redundancy as vendors try to create the “ultimate laboratory system.” Part of lab management’s responsibility is to define what the functional architecture should look like based on their current and perceived needs, rather than having it defined for them. It’s a matter of knowing what is required and seeing what fits rather than fitting requirements into someone else’s idea of what's needed.

Managing large database systems is only one aspect of handling K/I/D. Another aspect involves the consideration of cloud vs. local storage systems. What option works best for your situation, is the easiest to manage, and is supported by IT? We also have to address the data held in various desktop and mobile computing devices, as well as bench top systems like instrument data systems. There are a number of considerations here, not the least of which is product turnover (e.g., new systems, retired systems, upgrades/updates, etc.). (Some of these points will be covered later on in other sections.)

What you should think about now is the number of computer systems and software packages that you use on a daily basis, some of which are connected to instruments. How many different vendors are involved? How big are those vendors (e.g., small companies with limited staff, large organizations)? How often do they upgrade their systems? What’s the likelihood they’ll be around in two or five years?

Also ask what data file formats the vendor uses; these formats vary widely among vendors. Some put everything in CSV files, others in proprietary formats. In the latter case, you may not be able to use the data files without the vendor's software. In order to maintain the ability to work with instrument data, you will have to manage the software needed to open the files and work with them, in addition to just making sure you have copies of the data files. In short, if you have an instrument-computer combination that does some really nice stuff and you want to preserve the ability to gain value from that instrument's data files, you have to make a backup copy of the software environment and the data files. This is particularly important if you're considering retiring a system that you'll still want to access data from, and you may also have to maintain any underlying software license. This is where the previous conversation about virtualization and containers comes in.
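One way to start answering the file-format question is a simple inventory that separates files readable with general-purpose tools from files that likely depend on vendor software. The sketch below is only an illustration: the set of "open" extensions is an assumption a lab would have to decide for itself, and the file names and the `.raw` and `.dat` extensions are hypothetical stand-ins for proprietary formats.

```python
from collections import defaultdict
from pathlib import PurePath

# Assumption: which extensions count as "open" is a lab decision; this set is illustrative.
OPEN_FORMATS = {".csv", ".txt", ".json", ".xml"}


def inventory(file_names):
    """Group file names by extension and split them into open vs. vendor-dependent."""
    by_ext = defaultdict(list)
    for name in file_names:
        by_ext[PurePath(name).suffix.lower()].append(name)
    open_files = {ext: names for ext, names in by_ext.items() if ext in OPEN_FORMATS}
    needs_vendor_software = {
        ext: names for ext, names in by_ext.items() if ext not in OPEN_FORMATS
    }
    return open_files, needs_vendor_software


# Hypothetical file names from an instrument data system.
files = ["run_001.csv", "run_002.CSV", "gc_2020_11_03.raw", "ms_batch7.dat"]
open_files, needs_vendor_software = inventory(files)

print("open:", open_files)
print("requires vendor software to read:", needs_vendor_software)
```

Anything in the second group is a candidate for preserving the software environment along with the data files.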

If you think about a computer system, it has two parts: hardware (e.g., circuit boards, hard drive, memory, etc.) and software (e.g., the OS, applications, data files, etc.). From the standpoint of the computer’s processor, everything is either data or instructions read from one big file on the hard drive, which the operating system has segmented for housing different types of files (that segmentation is done for your convenience; the processor just sees it all as a source of instructions and data). Virtualization takes everything on the hard drive, turns it into a complete file, and places that file onto a virtualization server where it is stored as a file called a “container.” That server allows you to log in, open a container, and run it as though it were still on the original computer. You may not be able to connect the original instruments to the containerized environment, but all the data processing functions will still be there. As such, a collection of physical computers can become a collection of containers. An added benefit of virtualization applies when you're worried about an upgrade creating havoc with your application: make a container as a backup before upgrading.[i]

The advantage of all this is that you continue to have the ability to gain value and access to all of your data and information even if the original computer has gone to the recycle bin. This of course assumes your IT group supports virtualization servers, which provide an advantage in that they are easier to maintain and don’t take up much space. In larger organizations this may already be happening, and in smaller organizations a conversation may be needed to determine IT's stance. The potential snag in all this is whether or not the software application's vendor license will cover the operation of their software on a virtual server. That is something you may want to negotiate as part of the purchase agreement when you buy the system.

This section has shown that effective management of K/I/D is more than just the typical consideration of database issues, system upgrades, and backups. You also have to maintain and support the entire operating system, the application, and the data file ecosystem so that you have both the files needed and the ability to work with them.

Fourth goal: Ensure a high level of data integrity at every step

“Data integrity” is an interesting couple of words. It shows up in marketing literature to get your attention, often because it's a significant regulatory concern. There are different aspects to the topic, and the attention given often depends on a vendor's product or the perspective of a particular author. In reality, it touches on all areas of laboratory work. The following is an introduction to the goal, with more detail given in later sections.

Definitions of data integrity

There are multiple definitions of "data integrity." A broad encyclopedic definition can be found at Wikipedia, described as "the maintenance of, and the assurance of, data accuracy and consistency over its entire life-cycle" and "a critical aspect to the design, implementation, and usage of any system that stores, processes, or retrieves data."[8]

Another definition to consider is from a more regulatory perspective, that of the FDA. In their view, data integrity focuses on the completeness, consistency, accuracy, and validity of data, particularly through a mechanism called the ALCOA+ principles. This means the data should be[9]:

- Attributable: You can link the creation or alteration of data to the person responsible.

- Legible: The data can be read both visually and electronically.

- Contemporaneous: The data was created at the same time that the activity it relates to was conducted.

- Original: The source or primary documents relating to the activity the data records are available, or certified versions of those documents are available, e.g., a notebook or raw database. (This is one reason why you should collect and maintain as much data and information from an instrument as possible for each sample.)

- Accurate: The data is free of errors, and any amendments or edits are documented.

Plus, the data should be:

- Complete: The data must include all related analyses, repeated results, and associated metadata.

- Consistent: The complete data record should maintain the full sequence of events, with date and time stamps, such that the steps can be repeated.

- Enduring: The data should be able to be retrieved throughout its intended or mandated lifetime.

- Available: The data is able to be accessed readily by authorized individuals when and where they need it.
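As a minimal sketch of how a few of these attributes might look in software, the Python example below keeps the original value untouched and records every amendment with who made it, when, and why. The class and field names (`ResultRecord`, `Amendment`, `recorded_by`, and so on) are hypothetical and chosen only for illustration; they are not taken from the FDA guidance or from any particular LIMS or ELN product, and a real system would also need access control and durable storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


def _now():
    # Timestamps are taken when the record or amendment is created (contemporaneous).
    return datetime.now(timezone.utc)


@dataclass(frozen=True)
class Amendment:
    """One documented change to a result (hypothetical structure)."""
    new_value: float
    changed_by: str      # attributable: who made the change
    reason: str          # accurate: edits are documented
    changed_at: datetime


@dataclass
class ResultRecord:
    """A measured result whose original value is never overwritten."""
    sample_id: str
    original_value: float          # original: the first recorded value is kept
    recorded_by: str               # attributable: who created the record
    recorded_at: datetime = field(default_factory=_now)
    amendments: list = field(default_factory=list)

    def amend(self, new_value, changed_by, reason):
        # Refuse undocumented edits rather than silently accepting them.
        if not reason.strip():
            raise ValueError("an amendment must state its reason")
        self.amendments.append(Amendment(new_value, changed_by, reason, _now()))

    @property
    def current_value(self):
        # The latest amendment wins, but the full history remains available.
        return self.amendments[-1].new_value if self.amendments else self.original_value
```

For example, calling `record.amend(7.12, "analyst_b", "transcription error corrected")` changes `record.current_value` while `record.original_value` and the earlier history stay available for review.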

Both definitions revolve around the same point: the data a lab produces has to be reliable. The term "data integrity" and its associated definitions are a bit misleading. If you read the paragraphs above, you get the impression that the focus is on the results of laboratory work, when in fact it is about every aspect of laboratory work, including the methods used and those who conduct those methods.

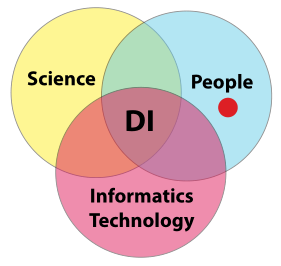

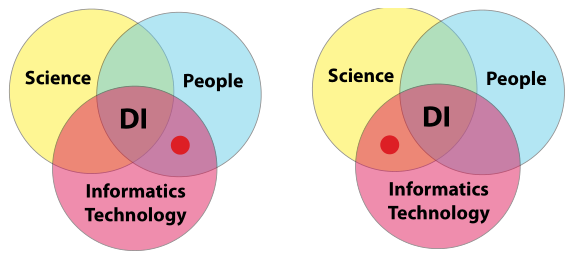

In order to gain meaningful value from laboratory K/I/D, you have to be assured of its integrity; “the only thing worse than no data, is data you can’t trust.” That is the crux of the matter. How do you build that trust? Building a sense of confidence in a lab's data integrity efforts requires addressing three areas of concern and their paired intersections: science, people, and informatics technology. Once we have successfully managed those areas and intersection points, we are left with the intersection common to all of them: constructed confidence in a laboratory's data integrity efforts (Figure 8).

The science

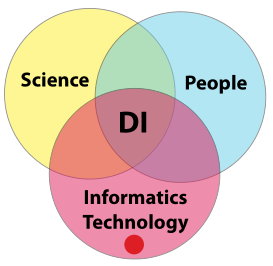

We’ll begin with a look at the scientific component of the conversation (Figure 9). Regardless of the kinds of questions being addressed, the process of answering them is rooted in methods and procedures. Within the context of this guide, those methods have to be validated or else your first step in building confidence has failed. If those methods end with electronic measurements, then that equipment (including settings, algorithms, analysis, and reporting) has to be fully understood and qualified for use in the validated process. The manufacturer's default settings should either be demonstrated as suitable or avoided.

The people

People (Figure 10) need to be thoroughly educated and competent to meet the needs of the laboratory's operational procedures and scientific work. That education needs to extend beyond the traditional undergraduate program and include the specifics of instrumental techniques used. A typical four-year program doesn’t have the time to cover the basic science and the practical aspects of how science is conducted in modern labs, and few schools can afford the equipment needed to meet that challenge. This broader educational emphasis is part of the intersection of people and science.

Another aspect of “people” is the development of a culture that contributes to data integrity. Lab personnel need to be educated on the organization’s expectations of how lab work needs to be managed and maintained. This includes items such as records retention, dealing with erroneous results, and what constitutes original data. They should also be fully aware of corporate and regulatory guidelines and the effort needed to enforce them.[j] This is another instance where education beyond that provided in the undergraduate curriculum is needed.

Informatics technology

Laboratory informatics technology (Figure 11) is another area where data integrity can either be enhanced or lost. The lab's digital architecture needs to be designed to support relatively easy access (within the scope of necessary security considerations) to the lab's data, from the raw digitized detector output, through intermediate processed stages, to the final processed information. Unnecessary duplication of K/I/D must be avoided. You also need to ensure that the products chosen for lab work are suitable for the work and have the ability to be integrated electronically. After all, the goal is to avoid situations where the output of one system is printed and then manually entered into another.

The implementation and use of informatics technology should be the result of careful product selection and intentional system design, from the lab bench to central database systems such as LIMS, ELN, and SDMS, rather than the haphazard accumulation of lab computers.

Other areas of concern with informatics technology include backups, security, and product life cycles, which will be addressed in later sections. If, as we continue onward through these goals, it appears that everything touches on data integrity, it's because it does. Data integrity can be considered an optimal result of the sum of well-executed laboratory operations.

The intersection points

Two of the three intersection points deserve minor elaboration (Figure 12). First, the intersection of people and informatics technologies has several aspects to address. The first is laboratory personnel’s responsibility (which may be shared with corporate or LAB-IT) for the selection and management of informatics products. The second is the fact that this requires those personnel to be knowledgeable concerning the application of informatics technologies in laboratory environments. Ensure the selected personnel have the appropriate backgrounds and knowledge to consider, select, and effectively use those products and technologies.

The other intersection point to be addressed is that of science with informatics technology. Here, stakeholders are concerned with product selection, system design (for automated processes), and system integration and communication with other systems and instruments. Again, as noted above, we go into more detail in later sections. The primary point here, however, can be summed up as determining whether or not the products selected for your scientific endeavors are compatible with your data integrity goals.

Addressing the needs of these two intersection points requires deliberate effort and many planning questions regarding vendor support, quality of design, system interoperability, result output, and scientific support mechanisms. Questions to ask include:

- Vendor support: How responsive are vendors to product issues? Do you get a fast, usable response or are you left hanging? A product that is having problems can affect data quality and reliability.

- Quality of design: How easy is the system to use? Are controls, settings, and working parameters clearly defined and easily understood? Do you know what effect changes in those points will have on your results? Has the system been tuned to your needs (not adjusted to give you the answers you want, but set to give results that truly represent the analysis)? Problems with adjusting settings properly can distort results. (This is one area where data integrity may be maintained throughout a process, and then lost because of improper or untested controls on an instrument's operation.)

- System interoperability: Will there be any difficulty in integrating a software product or instrument into a workflow? Problems with sample container compatibility, operation, control software, etc. can cause errors to develop in the execution of a process flow. For example, problems with pipette tips can cause errors in fluid delivery.

- Result output: Is an electronic transfer of data possible, or does the system produce printed output (which means someone typing results into another system)? How effective is the communications protocol; is it based on a standard or does it require custom coding, which could be error prone or subject to interference? Is the format of the data file one that prevents changes to the original data? For example, CSV files allow easy editing and have the potential for corruption, nullifying data integrity efforts.

- Scientific support mechanisms: Does the product fully meet the intended need for functionality, reliability, and accuracy?
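The concern above about easily edited formats such as CSV can be partly addressed by recording a cryptographic checksum when a file is first produced and comparing against it later. The sketch below uses Python's standard `hashlib` module; the function names `sha256_of_file` and `is_unchanged` are hypothetical, chosen for illustration. Note the limits of the approach: a checksum detects a change but does not prevent one, and it is only useful if the recorded digest is stored somewhere the file's editors cannot also alter.

```python
import hashlib


def sha256_of_file(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read fixed-size chunks so large instrument files need not fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_unchanged(path, recorded_digest):
    """Compare the file's current digest with the one recorded at creation."""
    return sha256_of_file(path) == recorded_digest
```

In use, the digest would be computed once, at the moment the instrument or data system writes the file, and stored with the sample's record; any later call to `is_unchanged` that returns `False` signals that the file no longer matches what was originally produced.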

The underlying goal in this section goes well beyond the material that is covered in schools. Technology development in instrumentation and the application of computing and informatics is progressing rapidly, and you can’t assume that everything is working as advertised, particularly for your application. Software has bugs and hardware has limitations. Applying healthy skepticism towards products and requiring proof that things work as needed protect the quality of your work.

If you’re a scientist reading this material, you might wonder why you should care. The answer is simply this: it is the modern evolution of how laboratory work gets done and how results are put to use. If you don’t pay attention to the points noted, data integrity may be compromised. You may also find yourself the unhappy recipient of a regulatory warning letter.

While there are outcomes you would prefer to avoid, there are also positive outcomes that come from your data integrity efforts: your work will be easier and protected from loss, results will be easier to organize and analyze, and you’ll have a better functioning lab. You’ll also have fewer unpleasant surprises when technology changes occur and you need to transition from one way of doing things to another. Yet there's more to protecting the integrity of your K/I/D than addressing the science, people, and informatics technology of your lab. The security of your lab and its information systems must also be addressed.

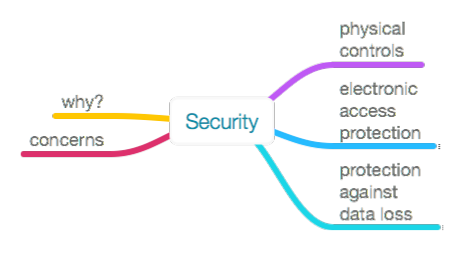

Fifth goal: Addressing security throughout the lab

Security is about protection, and there are two considerations in this matter: what are we protecting and how do we enact that protection? The first is easily answered by stating that we're protecting our ability to effectively work, as well as the results of that work. This is largely tied to the laboratory's data integrity efforts. The second consideration, however, requires a few more words.

Broadly speaking, security is not a popular subject in science, as it is viewed as not advancing scientific work or the development of K/I/D. Security is often viewed as inhibiting work by imposing a behavioral structure on people's freedom to do their work how they wish. Given these perceptions, it should be a lab's goal to create a functional security system that provides the protection needed while at the same time minimizing the intrusion on people’s ability to work.

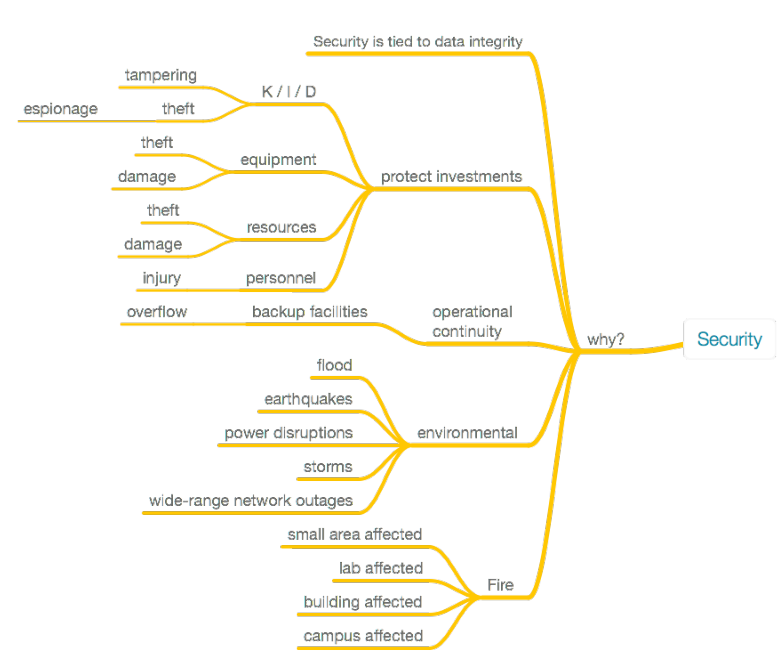

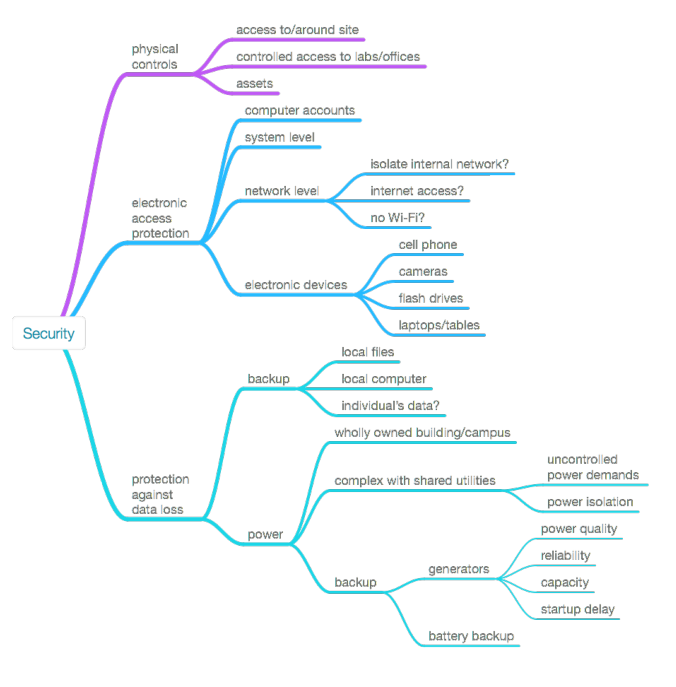

This section will look at a series of topics that address the physical and electronic security of laboratory work. Those major topics are shown in Figure 13 below. The depth of the commentary will vary, with some topics getting discussed at length and others by brief reference to others' work.

Why must security be addressed in the laboratory? There are many reasons, which are best diagramed, as seen in Figure 14:

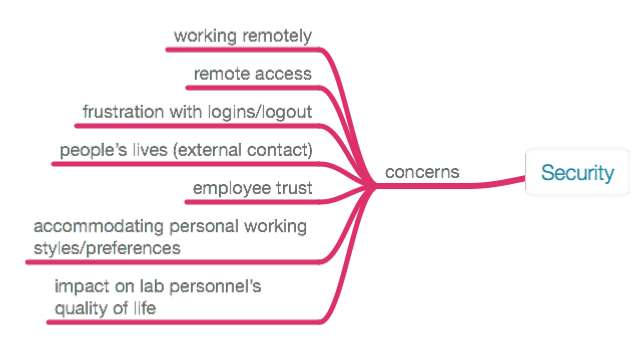

All of these reasons have one thing in common: they affect our ability to work and access the results of that work. This requires a security plan. In the end, implemented security efforts either preserve those abilities, or they reduce the value and utility of the work and results, particularly if security isn't implemented well or adds a burden to personnel's ability to work. While addressing these reasons and their corresponding protections, we should keep in mind a number of issues when developing and implementing a security plan within the lab (Figure 15). Issues like remote access have taken on particular significance over the course of the COVID-19 pandemic.

When the subject of security comes up, people's minds usually go in one of two directions: physical security (i.e., controlled access) and electronic security (e.g., protection against malware, viruses, and ransomware). We’re going to come at it from a different angle: how do the people in your lab want to work? Instead of looking at a collection of solutions to security issues, we’re going to first consider how lab personnel want to be working and within what constraints, and then we'll see what tools can be used to make that possible. Coming at security from that perspective will impact the tools you use and their selection, including everything from instrument data systems to database products, analytical tools, and cloud computing. The lab bench is where work is executed, but the planning and thinking take place between our ears, something that can happen anywhere. How do we provide people with the freedom to be creative and work effectively (something that may be different for each of us) while maintaining a needed level of physical and intellectual property security? Too often security procedures seem to be designed to frustrate work, as noted in the previous Figure 15.

The purpose of security procedures is to protect intellectual property, data integrity, resources, our ability to work, and lab personnel, all of which can be impacted by the reasons given in the prior Figure 14. However, planning how to approach these security procedures requires coordination with, and the cooperation of, several stakeholders within and tangentially related to the laboratory. Ensure these and any other necessary stakeholders are involved with the security planning efforts of your laboratory:

- Facilities management: These stakeholders manage the physical infrastructure you are working in and have overall responsibility for access control and managing the human security assets in larger companies. In smaller companies and startups, the first line of security may be the receptionist; how well trained are they to deal with the subject?

- IT groups: These stakeholders will be responsible for designing and maintaining (along with facilities management) the electronic security systems, which range from passkeys to networks.