Journal:Secure record linkage of large health data sets: Evaluation of a hybrid cloud model

| Full article title | Secure record linkage of large health data sets: Evaluation of a hybrid cloud model |
|---|---|
| Journal | JMIR Medical Informatics |
| Author(s) | Brown, Adrian P.; Randall, Sean M. |
| Author affiliation(s) | Curtin University |
| Primary contact | Email: adrian dot brown at curtin dot edu dot au |
| Editors | Eysenbach, G. |
| Year published | 2020 |
| Volume and issue | 8(9) |
| Article # | e18920 |
| DOI | 10.2196/18920 |
| ISSN | 2291-9694 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://medinform.jmir.org/2020/9/e18920/ |
| Download | https://medinform.jmir.org/2020/9/e18920/pdf (PDF) |

Abstract

Background: The linking of administrative data across agencies provides the capability to investigate many health and social issues, with the potential to deliver significant public benefit. Despite its advantages, the use of cloud computing resources for linkage purposes is scarce, with the storage of identifiable information on cloud infrastructure assessed as high-risk by data custodians.

Objective: This study aims to present a model for record linkage that utilizes cloud computing capabilities while assuring custodians that identifiable data sets remain secure and local.

Methods: A new hybrid cloud model was developed, including privacy-preserving record linkage techniques and container-based batch processing. An evaluation of this model was conducted with a prototype implementation using large synthetic data sets representative of administrative health data.

Results: The cloud model kept identifiers on-premises and used privacy-preserved identifiers to run all linkage computations on cloud infrastructure. Our prototype used a managed container cluster in Amazon Web Services to distribute the computation using existing linkage software. Although the cost of computation was relatively low, the use of existing software resulted in an overhead of processing of 35.7% (149/417 minutes execution time).

Conclusions: The result of our experimental evaluation shows the operational feasibility of such a model and the exciting opportunities for advancing the analysis of linkage outputs.

Keywords: cloud computing, medical record linkage, confidentiality, data science

Introduction

Background

In the last 10 years, innovative development of software applications, wearables, and the internet of things has changed the way we live. These technological advances have also changed the way we deliver health services and provide a rapidly expanding information resource, with the potential for data-driven breakthroughs in the understanding, treatment, and prevention of disease. Additional information from patient-related devices like mobile phone and Google search histories[1], wearable devices[2], and mobile phone apps[3] provides new opportunities for monitoring, managing, and improving health outcomes in new and innovative ways. The key to unlocking these data is in relating details at the individual patient level to provide an understanding of risk factors and appropriate interventions.[4] The linking, integration, and analysis of these data has recently been described as "population data science."[5]

Record linkage is a technique for finding records within and across one or more data sets thought to refer to the same person, family, place, or event.[6] Coined in 1946, the term describes the process of assembling the principal life events of an individual from birth to death.[7] In today’s digital age, the capacity of systems to match records has increased, yet the aim remains the same: linking records to construct individual chronological histories and undertake studies that deliver significant public benefit.

An important step in the evolution of data linkage is to develop infrastructure with the capacity to link data across agencies to create enduring integrated data sets. Such resources provide the capability to investigate many health and social issues. A number of collaborative groups have invested in a large-scale record linkage infrastructure to achieve national linkage objectives.[8][9] The establishment of research centers specializing in the analysis of big data also recognizes the issue of increasing data size and complexity.[10]

As the demand for data linkage increases, a core challenge will be to ensure that the systems are scalable. Record linkage is computationally expensive, with a potential comparison space equivalent to the Cartesian product of the record sets being linked, making linkage of large data sets (in the tens of millions or greater) a considerable challenge. Optimizing systems, removing manual operations, and increasing the level of autonomy for such processes is essential.
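
To give a sense of scale, the following minimal sketch computes the size of the unindexed comparison space. The function names are illustrative only, and the example sizes are simply round numbers in the "tens of millions" range mentioned above.

```python
def linkage_comparison_space(n_a: int, n_b: int) -> int:
    """Record pairs when linking two files with no indexing (Cartesian product)."""
    return n_a * n_b


def dedup_comparison_space(n: int) -> int:
    """Unordered record pairs when deduplicating a single file with no indexing."""
    return n * (n - 1) // 2


if __name__ == "__main__":
    for size in (7_000_000, 25_000_000, 50_000_000):
        print(f"{size:>11,} records -> {dedup_comparison_space(size):,} pairs")
```

Even at these sizes the pair counts run into the trillions and beyond, which is why the indexing step discussed later matters so much.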

A wide range of software is currently used for record linkage. System deployments range from single desktop machines to multiple servers, with most being hosted internally to organizations. The functional scope of packages varies greatly, with manual processes and scripts required to help manage, clean, link, and extract data. Many packages struggle with large data set sizes.

Many industries have moved toward cloud computing as a solution for high computational workloads, data storage, and analytics.[11] An overview of cloud computing types and service models is shown in Table 1. The business benefits of cloud computing include usage-based costing, minimal upfront infrastructure investment, superior collaboration (both internally and externally), better management of data, and increased business agility.[12] Despite these advantages, uptake by the record linkage industry has been slow. One reason for this is that the storage of identifiable information on cloud infrastructure has been assessed as high-risk by data custodians. Although security in cloud computing systems has been shown to be more robust than some in-house systems[13], the media reporting of data breaches has created an impression of insecurity and vulnerability.[14] A culture of risk aversion leaves the record linkage units with expensive, dedicated equipment and computing resources that require managing, maintaining, and upgrading or replacing regularly.

To leverage the advantages of cloud computing, we need to explore operational cloud computing models for record linkage that consider the specific requirements of all stakeholders. In addition, linkage infrastructure requires the development and implementation of robust security and information governance frameworks as part of adopting a cloud solution.

Related work

Some research on algorithms that address the computational burden of the comparison and classification tasks in record linkage has been undertaken. Most work on distributed and parallel algorithms for record linkage is specific to the MapReduce paradigm[15], a programming model for processing large data sets in parallel on a cluster. Few sources detail the comparison and classification tasks themselves, focusing instead on load balancing algorithms that address issues associated with data skew. These works attempt to optimize the workload distribution across nodes while removing as many true negatives from the comparison space as possible.[16][17][18][19] Load balancing algorithms typically use multiple MapReduce jobs and different indexing methods to tackle the data skew problem. Indexing methods include standard blocking[17][18], density-based blocking[16], and locality sensitive hashing (LSH)[20], with varying success in optimizing the workload distribution.[20]

Pita et al.[21] have built on the MapReduce-based work and demonstrated good performance and quality using a Spark-based workflow for probabilistic linkage. Spark was chosen for in-memory processing, ease of programming, scalability, and the new resilient distributed data set model. Like MapReduce, Spark continues to be used to address the issues with linkage and data skew on larger data sets. Spark solutions for full entity resolution are being developed, with different indexing techniques used to address workload distribution. The SparkER tool by Gagliardelli et al.[22] uses LSH, meta-blocking, and a block purging process to remove high-frequency blocking keys. Mestre et al.[23] presented a sorted neighborhood implementation with an adaptive window size, which uses three Spark transformation steps to distribute the data and minimize data skew.

Outside of the Hadoop ecosystem, which MapReduce and Spark are a part of, there have been some efforts to address the linkage of larger data sets through other parallel processing techniques. Sehili et al.[24] presented a modified version of PPJoin, called P4Join, that can parallelize record matching on graphics processing units (GPUs), claiming an execution time improvement of up to 20 times. Despite its potential for significant improvements in runtime performance, there has not been any further work published on P4Join using larger data sets or on clusters of GPU nodes. More recently, Boratto et al.[25] evaluated a hybrid algorithm using both GPUs and central processing units (CPUs) with much larger data sets. Although restricted to single (highly specified) machines, these evaluations show promise, provided that the approach can be applied within a compute cluster. Again, there is not yet any further work available.

The blocking techniques used in these studies are based on the same techniques used for traditional probabilistic and deterministic linkages.[15] There are many blocking techniques used in these conventional approaches to record linkages that reduce the comparison space significantly, even when running a linkage on a single machine.[26] However, these approaches become inefficient as data set sizes become larger. They also come with a trade-off; the creation of blocks that reduce the comparisons required for linkage will inevitably reduce the coverage of true matches, resulting in more missed matches.

Much of the work in distributed linkage algorithms is focused on performance, often at the expense of linkage accuracy. Adapting these blocking techniques to distribute workload across many compute nodes has reduced the comparisons efficiently. Unfortunately, this increased efficiency has impacted the accuracy further, reducing comparisons at the expense of missing more true matches. There is still a trade-off between performance and accuracy, and further work is required to address it.

Data flow and release for record linkage

As data custodians are responsible for the use of their data, researchers must demonstrate to custodians that all aspects of privacy, confidentiality, and security have been addressed. The release of personal identifiers for linkage can be restricted, with privacy regulations such as the Health Insurance Portability and Accountability Act privacy rules[27] or E.U. regulations mandating the use of encrypted identifiers.[28] Standard record linkage methods and software are often unsuitable for linkage based on encrypted identifiers. Privacy-preserving record linkage (PPRL) techniques have been developed to enable linkage on encrypted identifiers.[29] These techniques typically store encrypted identifiers in Bloom filters, probabilistic data structures that can be used to approximate the equality of two sets. The emergence of these PPRL methods means that data custodians are not required to release personal identifiers. The use of PPRL methods in operational environments is still in its infancy, with limited tooling available. Available software includes the proprietary LinXmart[30], an R package called PPRL developed by the German Record Linkage Center[31], LSHDB[32], LinkIT[33], and the Secure Open Enterprise Master Patient Index (SOEMPI).[34] There is little published data on how much these systems are used outside of the organizations that created them. However, PPRL is a key technology that greatly increases the acceptability of cloud solutions for record linkage.

Record linkage process

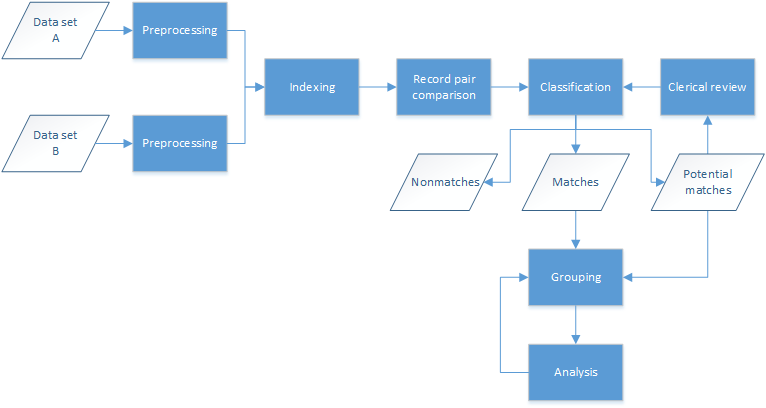

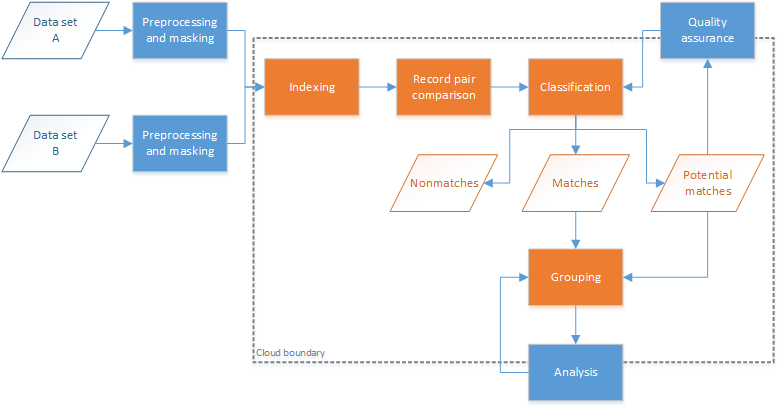

Record linkage typically follows a standard process for the matching of two or more data sets, as shown in Figure 1. The data sets first undergo some preprocessing, a cleaning and standardization step to ensure consistency with the formatting of fields across data sets. The next step (indexing) attempts to reduce the number of record-level comparisons required (the latter often referred to as the comparison space), removing comparisons that are most likely to be false matches. The indexing step typically groups data sets into overlapping blocks or clusters based on sets of field values and can provide up to 99% reduction in the comparison space. Record pair comparisons occur next, within the blocks or clusters determined during the indexing step; this comparison step is the most computationally expensive and often requires large data sets to be broken down into smaller subsets. Classification of the record pairs into matches, nonmatches, and potential matches results in groups of entities (or individuals) based on the match results. Potential matches can be assessed manually or through special tooling to determine whether they should be classified as matches or nonmatches. A common approach to grouping matches is to merge all records that link together into a single group; however, different approaches can be used to reduce linkage error.[35] Analysis of the entity groups is the last step, where candidate groups are clerically reviewed to determine if and how the records in these groups should be regrouped.
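
The common grouping approach described above, merging all records that link together into a single group, amounts to finding connected components over the matched pairs. The following is a minimal union-find sketch of that idea. It is an illustration only, not the grouping method of any particular linkage package, and the record IDs are made up.

```python
def group_matches(pairs):
    """Merge all records that link together into a single entity group.

    `pairs` is an iterable of (record_id_a, record_id_b) matches.
    Returns a sorted list of sorted groups.
    """
    parent = {}

    def find(record_id):
        # Register unseen records as their own group, then walk to the root,
        # shortening the path as we go.
        parent.setdefault(record_id, record_id)
        while parent[record_id] != record_id:
            parent[record_id] = parent[parent[record_id]]
            record_id = parent[record_id]
        return record_id

    for a, b in pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a  # union the two groups

    groups = {}
    for record_id in list(parent):
        groups.setdefault(find(record_id), set()).add(record_id)
    return sorted(sorted(group) for group in groups.values())


if __name__ == "__main__":
    print(group_matches([("r1", "r2"), ("r2", "r3"), ("r4", "r5")]))
```

Because a single false match can chain two unrelated groups together under this approach, alternative grouping strategies can reduce linkage error.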

This paper presents two contributions to record linkage. First, it offers a model for record linkage that utilizes cloud computing capabilities while providing assurance that data sets remain secure and local. Lessons learned from many real-world record linkage projects, including several PPRL projects, have been instrumental in the design of this cloud model.[30][36][37] Second, the use of containers to distribute linkage workloads across multiple nodes is presented and evaluated within the cloud model.

Methods

Design of a cloud model for record linkage

The standard record linkage process relies on one party (known as the trusted third party [TTP]) having access to all data sets. Handling records containing identifiable data requires a sound information governance framework, with controls in place that manage potential risks. Even with a well-managed information security system in place, access to some data sets may still be restricted. The TTP also requires infrastructure that can help manage data sets, matching processes and linkage key extractions over time. As the number and size of data sets grow, the computational needs and storage capacity must grow with it. However, the computation requirements for data linkage are often sporadic bursts of intense workloads, leaving expensive hardware sitting idle for extended periods.

Dedicated data linkage units in government and academic institutions exist across Australia, Canada, and the United Kingdom, acting as trusted third parties for data custodians. These data linkage units were established from the need to link data for health research at the population level. Some data linkage units are involved in the linkage of other sectors such as justice; however, the primary output of these organizations is linked data for health research. It is essential that a cloud model for record linkage takes into account the linkage practices and processes that have been developed by these organizations.

Our cloud model for record linkage addresses the limitations of data release and the computational needs of the linkage process. Data custodians and linkage units retain control of their identifiable information, while the matching of data sets between custodians occurs within a secure cloud environment.

Tenets of the record linkage cloud model

The adopted model was founded on three overarching design principles.

1. The privacy of individuals in the data is protected. One of the most important responsibilities for data custodians and linkage units is information security. Data sets contain private, and often sensitive, information on people, and it is vital that appropriate controls are in place to mitigate any potential risks. Some data sets have restrictions on where they can be held, requiring them to be kept local and protected. All computation and storage within the cloud infrastructure must be done on privacy-preserved versions of these data sets.

2. Computation and storage are outsourced to the cloud infrastructure. Computation requirements for data linkage are often sporadic bursts of intense workloads, followed by periods of low use or even inactivity. The ability to provision resources for computation as and when required means you only pay for what you use. This computation is generally associated with large sets of input and output data, so it makes sense to keep these data as close to the computation as possible. Storage may not necessarily be cheap, but many cloud computing providers guarantee high levels of durability and availability, with encryption and redundancy capabilities.

3. Cloud platform services are used over infrastructure services. Once data are stored within a cloud environment, additional platform as a service (PaaS) offerings for analysis of the data should be leveraged. These are managed services over the top of infrastructure services (such as virtual machines) and can be started and stopped as needed.

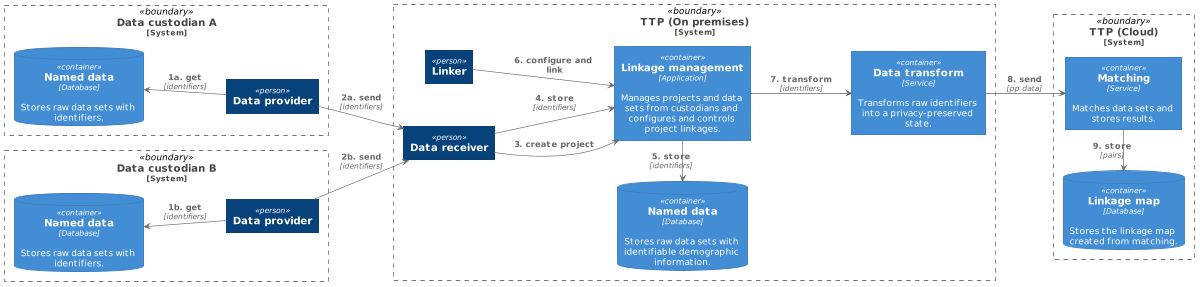

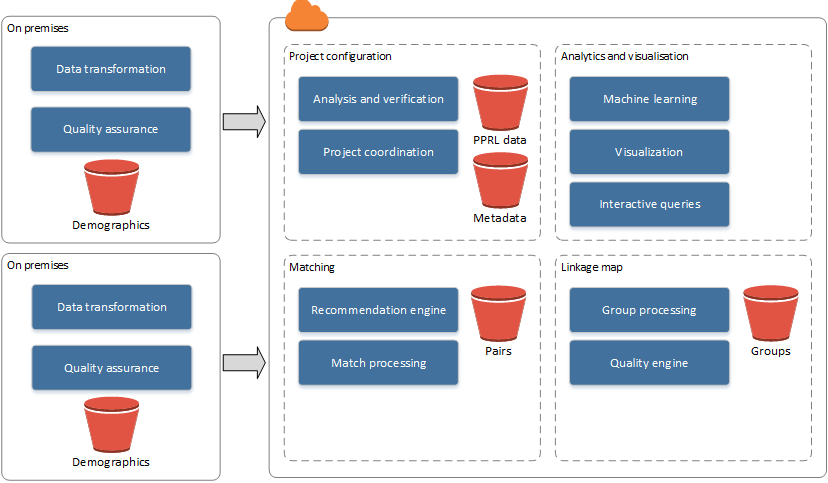

High-level architectural model

Not all storage and computation can be performed within a cloud environment without impacting privacy; the storage of raw identifiers (such as name, date of birth, and address) must often remain on-premises. The heavy computational workloads for record linkage (the record pair comparisons and classification) are therefore undertaken on privacy-preserved versions of these data sets. These privacy-preserved data sets must be created on premises and uploaded to cloud storage. The remainder of the linkage process continues within the cloud environment. However, some parts of the classification and analysis steps may be done interactively by the user from an on-premises client app, annotating results from cloud-based analytics with locally stored details (i.e., identifiers). An overview of the components and data flows involved in the hybrid TTP model is shown in Figure 2. This model satisfies our cloud model tenets and provides the linkage unit with the ability to scale their infrastructure on-demand. The matching (classification) component can utilize scalable platform services offered by the cloud provider to match large privacy-preserved data sets as required. All major cloud providers have platform services that can provide computation on-demand for the processing of big data. The linkage map persists, as it contains no identifiable information, and it can also be analyzed using available cloud platform services.

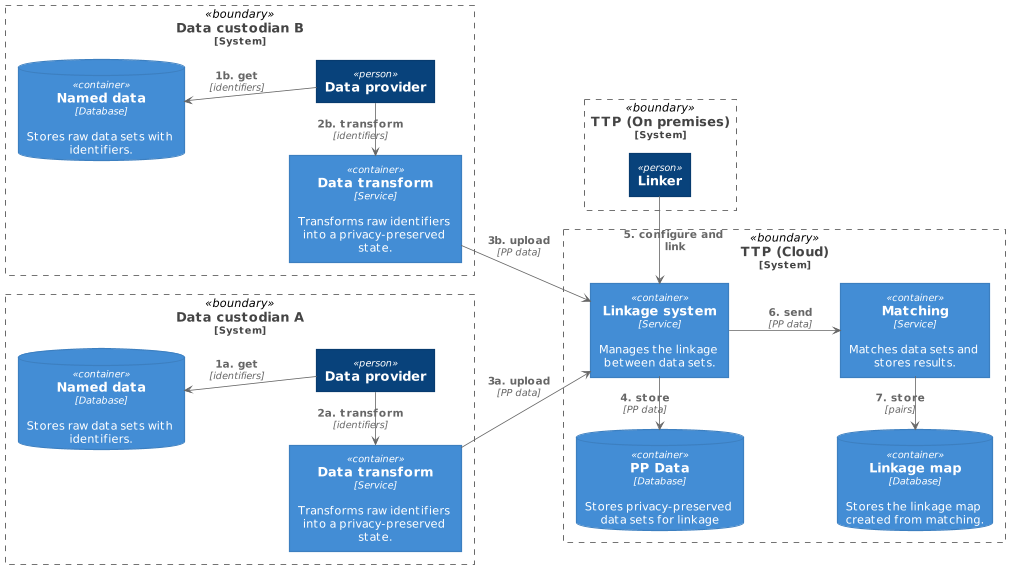

Keeping identifiers at the data custodian level (on-premises) while matching on privacy-preserved data within cloud infrastructure enables linkages of data sets between data custodians. This model does not require any raw identifiers to be released, and thus, a hybrid model is no longer necessary. The TTP can then be hosted fully in the cloud, as shown in Figure 3. There are two immediate ways to achieve this: either one of the custodians manages the cloud infrastructure themselves or an independent third party controls it and provides it as a service to all custodians. A custodian could act as a TTP for all custodians involved in the linkage if this is acceptable to the parties involved. Otherwise, it may be more amenable to go with an independent TTP.

Although the full cloud TTP model may be useful in some situations, it is unlikely that this would be a desirable model for the dedicated data linkage units. Their processes for cleaning, standardization, and quality analysis with personal identifiers have developed and matured over many years. Switching to a model where these units no longer have access to personal identifiers would affect the accuracy of the linkage and ultimately the quality of the health research that used the linked data. The hybrid model replaces only the matching component, allowing many existing linkage processes to remain.

Scaling computation-heavy workloads

Record pair comparison and classification tasks are the most computationally intensive tasks in the linkage process, although they are heavily affected by the indexing method used. The single process limitation of most linkage apps makes it difficult to cater to increasingly large data sets, regardless of indexing. Increasing memory and CPU resources for these single-process apps provides some ability to increase capacity, but this may not be sustainable in the longer term.

Although MapReduce appears to be a promising paradigm for addressing large-scale record linkage, two issues emerge. First, MapReduce-based approaches consider only the creation of record pairs, whether matches or potential matches, without any thought as to how these record pairs are to come together to form entity groups. The grouping task is also an important part of the data matching process, and the grouping method used can significantly reduce matching errors.[35] Second, MapReduce algorithms do not appear to be readily used, if at all, within an operational linkage environment. Organizational change can be slow, and there is much investment in the existing matching algorithms and apps currently used. It may be operationally more acceptable to continue using these apps where possible.

The comparison and classification tasks of the record linkage process are an embarrassingly parallel problem if the indexing task can produce disjoint sets of record pairs (blocks) for comparison. With the rapid uptake of containerization and the availability of container management and orchestration capability, a viable option for many organizations is to reuse existing apps deployed in containers and run in parallel. Matching tasks on disjoint sets can be run independently and in parallel. The matches and potential matches produced by each matching task can, in turn, be processed independently by grouping tasks. The number of sets that are run in parallel would then only be limited by the number of container instances available.

Indexing solutions are imperfect on real-world data, however; producing disjoint sets for matching is difficult without an unacceptable drop in pairs completeness (a measure of the coverage of true positives). There is inevitably some overlap between blocks, as multiple passes with different blocking keys are typically used to ensure accurate results. This overlap prevents independent processing and can be handled in one of two ways: (1) the blocks of pairs for classification can be calculated in full and duplicates removed before the classification task is run; or (2) duplicate matches and potential matches are removed following the classification task. The main disadvantage of the first option is that this requires a potentially massive set of pairs to be created upfront, as the comparison space is typically orders of magnitude larger than the set of matches and potential matches. Many linkage systems combine their indexing and classification tasks for efficiency, and it is often easier to ignore duplicate matches until completion. The disadvantage of the second option is that overlapping block sets result in overlapping match sets, preventing the independent grouping of matches from each classification task.
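
The second option, removing duplicate matches after classification, can be sketched as follows. This is a minimal illustration that assumes each pair is a tuple of two record IDs, a score, and a block name. The tuple layout and the rule of keeping the highest-scoring copy are assumptions made for the example.

```python
def dedupe_pairs(pairs):
    """Collapse pairs that were produced more than once by overlapping blocks.

    `pairs` is an iterable of (id_a, id_b, score, block_name) tuples.
    The pair (a, b) and the pair (b, a) are treated as the same pair, and the
    first-seen copy with the highest score is kept.
    """
    best = {}
    for id_a, id_b, score, block in pairs:
        key = (id_a, id_b) if id_a <= id_b else (id_b, id_a)
        if key not in best or score > best[key][0]:
            best[key] = (score, block)
    return [(a, b, score, block) for (a, b), (score, block) in sorted(best.items())]


if __name__ == "__main__":
    raw = [
        ("r2", "r1", 18.5, "name"),
        ("r1", "r2", 18.5, "dob_sex"),
        ("r3", "r4", 9.0, "name"),
    ]
    print(dedupe_pairs(raw))
```

Note that this step needs to see the pairs from every classification task at once, which is the loss of independence described above.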

Regardless of the indexing method used to reduce the comparison space for matching, the resulting blocks require grouping into manageable size bins that can be distributed to parallel tasks. A bin, therefore, refers to a subset of record pairs grouped together for efficient matching. Block value frequencies are calculated across data sets and used to calculate the size of the total comparison space. Records from these data sets are then copied into separate bins such that each bin has a comparison space of approximately equal size to every other bin.

Using this method, the comparison and classification of each bin are free to be executed on whatever compute capability is available. A managed container cluster is an ideal candidate; however, the container’s resources (CPU, memory, and disk) and the bin characteristics (e.g., maximum comparison space) need to be carefully chosen to ensure efficient resource use.

Development and experimental evaluation of the prototype

An evaluation of the hybrid cloud linkage model was conducted through the deduplication of different sized data sets on a prototype system. The experiments were designed to evaluate parallel matching using an existing matching app on a cluster of containers; to measure encryption, transfer, and execution times; and to assess the remote analysis of the matching pairs created.

A prototype system was developed with the on-premises component running on Microsoft Windows 10 and the cloud components running on Amazon Web Services (AWS). The prototype focused on the matching part of the linkage model and utilized platform services where available. These services are described in Table 2.

Test data

Three synthetic data sets were generated to simulate population-level data sets: one of seven million records, another of 25 million records, and another of 50 million records. Although seven million records may not necessarily represent a large data set, a 50 million record data set is challenging for most linkage units. The data sets were created with a deliberately large number of matches per entity to increase the comparison space and to challenge the matching algorithm.

Data generation was conducted using a modified version of the Febrl data generator[38], an open-source data linkage system written in Python. Frequency distributions of the names and dates of birth of the population of Western Australia were used to generate the synthetic data sets. Randomly selected addresses were sourced from Australia’s National Address File, a publicly available data set.[39] Each data set contained first name, middle name, last name, date of birth, sex, address, and postcode fields. Each field had its own rate of errors and distribution of types of errors. These were based on previously published synthetic data error rates, deliberately set high to challenge matching accuracy.[40] Types of errors included replacement of values, field truncation, misspellings, deletions, insertions, use of alternate names, and values set to missing. Records had anywhere from zero to many thousands of duplicates within the data sets.

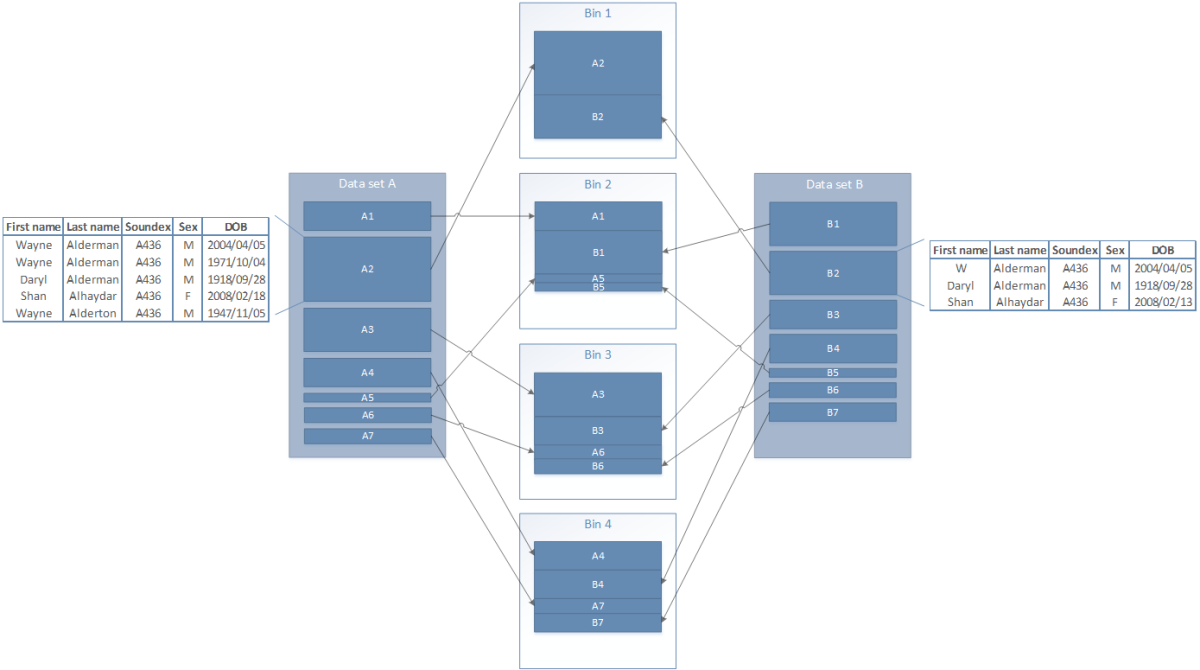

All available fields were used for matching in a probabilistic linkage. Two separate blocks were used: first name initial and last name Soundex, and date of birth and sex. Each pair output from the matching process included two record IDs, a score, a block (strategy) name, and the individual field-level comparison weights used to calculate the score.
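
As an illustration of the two blocking strategies, the sketch below derives both blocking keys for a single record. It works on raw values for readability, whereas the prototype operated on privacy-preserved data. The Soundex variant (standard American Soundex), the field names, and the key format are all assumptions made for the example.

```python
# Letter-to-digit table for American Soundex.
_SOUNDEX_CODES = {
    letter: digit
    for digit, letters in {
        "1": "BFPV", "2": "CGJKQSXZ", "3": "DT", "4": "L", "5": "MN", "6": "R",
    }.items()
    for letter in letters
}


def soundex(name: str) -> str:
    """Return the four-character American Soundex code for `name`."""
    letters = [c for c in name.upper() if "A" <= c <= "Z"]
    if not letters:
        return ""
    result = letters[0]
    previous = _SOUNDEX_CODES.get(letters[0], "")
    for letter in letters[1:]:
        if letter in "HW":
            continue  # H and W do not separate letters with the same code
        code = _SOUNDEX_CODES.get(letter, "")
        if code and code != previous:
            result += code
        previous = code  # vowels reset the previous code
    return (result + "000")[:4]


def blocking_keys(record: dict) -> dict:
    """Build one key per blocking strategy (hypothetical field names)."""
    return {
        "name": record["first_name"][:1].upper() + "|" + soundex(record["last_name"]),
        "dob_sex": record["date_of_birth"] + "|" + record["sex"],
    }


if __name__ == "__main__":
    print(blocking_keys({"first_name": "Adrian", "last_name": "Brown",
                         "date_of_birth": "1980-01-31", "sex": "M"}))
```

Two records are compared only if they share at least one key, so a typo in the last name can still be caught by the date of birth and sex block.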

Experiments

The on-premises component first transformed data sets containing named identifiers into a privacy-preserved state using Bloom filters. String fields were split into bigrams that were hashed 30 times into Bloom filters 512 bits in length. Numeric fields (including the specific date of birth elements) were cryptographically hashed using hash-based message authentication code Secure Hash Algorithm 2 (SHA2). These privacy-preserved data sets were compressed (using gzip) before being uploaded to Amazon’s object storage, S3. A configuration file was also uploaded, containing the necessary linkage parameters required for the probabilistic linkage. An AWS step function (a managed state machine) was then triggered to run through a set of tasks to complete the deduplication of the file as defined in the parameter file.
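
The transformation step can be sketched as below, using the stated parameters (bigrams, 30 hash functions, 512-bit filters). Several details are assumptions, as the text does not specify them: the double-hashing scheme used to derive the 30 bit positions, the padding of strings before splitting into bigrams, the use of HMAC-SHA-256 as the SHA2 variant, and the Dice coefficient as the similarity measure. The secret key shown is a placeholder.

```python
import hashlib
import hmac

FILTER_BITS = 512   # Bloom filter length stated in the text
NUM_HASHES = 30     # hash functions per bigram stated in the text


def bigrams(value: str) -> set:
    """Split a string into overlapping character pairs (padding is an assumption)."""
    padded = f"_{value.lower()}_"
    return {padded[i:i + 2] for i in range(len(padded) - 1)}


def bloom_encode(value: str, key: bytes) -> int:
    """Encode a string field as a 512-bit Bloom filter held in a Python int."""
    bits = 0
    for gram in bigrams(value):
        data = gram.encode("utf-8")
        h1 = int.from_bytes(hmac.new(key, data, hashlib.sha256).digest(), "big")
        # Forcing h2 odd keeps the 30 positions distinct modulo a power of two.
        h2 = int.from_bytes(hmac.new(key, data, hashlib.sha512).digest(), "big") | 1
        for i in range(NUM_HASHES):
            bits |= 1 << ((h1 + i * h2) % FILTER_BITS)  # assumed double hashing
    return bits


def dice_similarity(a: int, b: int) -> float:
    """Dice coefficient of two Bloom filters: 2|A and B| / (|A| + |B|)."""
    total = bin(a).count("1") + bin(b).count("1")
    return 2 * bin(a & b).count("1") / total if total else 0.0


def hash_numeric(value: str, key: bytes) -> str:
    """Keyed hash for exact-match fields such as date of birth elements."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    secret = b"placeholder-shared-secret"
    smith, smyth, jones = (bloom_encode(n, secret) for n in ("Smith", "Smyth", "Jones"))
    print(dice_similarity(smith, smyth), dice_similarity(smith, jones))
```

Similar strings share most of their bigrams and therefore most of their set bits, which is what allows approximate matching on the encoded values, whereas the keyed hashes of numeric fields support exact comparison only.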

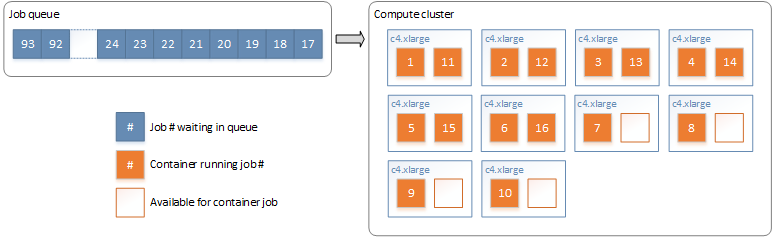

All step function tasks used on-demand resource provisioning for computation. A compute cluster managed by AWS Batch was configured with a maximum CPU count of 40 (10×c4.xlarge instance type). Each container was configured with 3.5 GB RAM and two CPUs, allowing up to 20 container instances to run at any one time.

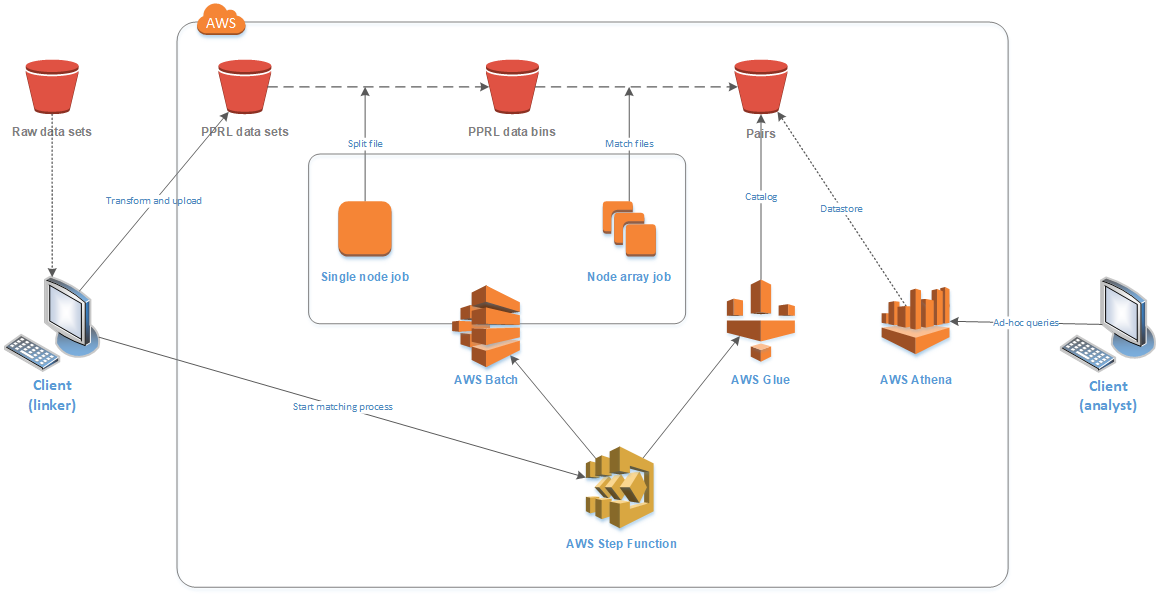

The first task ran as a single job, splitting the file into many bins of approximately equal comparison space, using blocking variables specified in the configuration file. By splitting on the blocking variables, the comparison space for the entire linkage remains unchanged. Each bin was stored in an S3 location with a consistent name suffixed with a sequential identifier. The second task ran a node array batch job, with a job queued for each bin to run on the compute cluster. Docker containers running a command-line version of the LinXmart linkage engine were executed on the compute nodes to deduplicate each bin independently. AWS Batch managed the job queue, assigning jobs to available nodes in the cluster, as shown in Figure 4.

LinXmart is a proprietary data linkage management system, and the LinXmart linkage engine was used because of our familiarity with the program and its ability to run as a Linux command-line tool. It accepts a local source data set and parameter file as inputs and produces a single pairs file as output. There were no licensing issues running LinXmart on AWS in this instance, as our institution has a license allowing unrestricted use. This linkage engine could be substituted, if desired, for others that similarly produce record pair files. The container was bootstrapped with a shell script that downloaded and decompressed the source files from S3 storage, ran the linkage engine program, and then compressed and uploaded the resulting pairs file to S3 storage. Each job execution was passed a sequential identifier by AWS Batch, which was used to identify a source bin datafile to download from S3 and mark the resulting pair file to upload to S3.
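
The mapping from the sequential identifier to the input and output files might look like the sketch below. It assumes the identifier is the array index that AWS Batch exposes to each child of an array job through the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable. The S3 key layout and file names are hypothetical, and the download, linkage, and upload steps themselves are omitted.

```python
import os


def bin_and_pairs_keys(prefix: str, env=None) -> tuple:
    """Derive the S3 keys for this job's source bin and resulting pairs file.

    `prefix` is a hypothetical per-linkage S3 prefix. `env` defaults to the
    process environment and can be replaced with a plain dict for testing.
    """
    env = os.environ if env is None else env
    index = int(env["AWS_BATCH_JOB_ARRAY_INDEX"])
    source_key = f"{prefix}/bins/bin-{index}.csv.gz"      # hypothetical layout
    pairs_key = f"{prefix}/pairs/pairs-{index}.csv.gz"    # hypothetical layout
    return source_key, pairs_key


if __name__ == "__main__":
    print(bin_and_pairs_keys("dedup-7m", {"AWS_BATCH_JOB_ARRAY_INDEX": "3"}))
```

Because each job derives its file names from its own index alone, no coordination between containers is needed.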

The third step function task classified and cataloged all new pairs files, using AWS Glue, making them available for use by other AWS analytical services. The results for each original data set were then able to be presented as a single table, although the data itself were stored as a series of individual text files. The prototype’s infrastructure and data flow are shown in Figure 5.

Once the deduplication linkages were complete, the on-premises component of the prototype was employed to query each data set’s pair table. The queries were typical of those used following a linkage run: pair count, pair score histogram, and pairs within a pair score range. This query component used the AWS Athena application programming interface (API) to execute the queries, which used Presto (an open-source distributed query engine) to apply the ad hoc structured query language queries to the cataloged pairs tables.
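
The three query types can be illustrated with the sketch below. It runs against an in-memory SQLite database as a local stand-in, not against Athena, and the table name, column names, and bucket width are assumptions. The SQL is deliberately simple, but dialect details (for example, how scores are bucketed) may differ in Presto.

```python
import sqlite3


def run_pair_queries(pairs, low, high, bucket_width=5):
    """Run a pair count, a score histogram, and a score-range query.

    `pairs` is a list of (id_a, id_b, score, block) tuples with
    non-negative scores.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE pairs (id_a TEXT, id_b TEXT, score REAL, block TEXT)")
    conn.executemany("INSERT INTO pairs VALUES (?, ?, ?, ?)", pairs)

    pair_count = conn.execute("SELECT COUNT(*) FROM pairs").fetchone()[0]

    # Bucket each score down to the nearest multiple of bucket_width.
    histogram = conn.execute(
        "SELECT CAST(score / ? AS INTEGER) * ? AS bucket, COUNT(*) "
        "FROM pairs GROUP BY bucket ORDER BY bucket",
        (bucket_width, bucket_width),
    ).fetchall()

    in_range = conn.execute(
        "SELECT id_a, id_b, score FROM pairs "
        "WHERE score BETWEEN ? AND ? ORDER BY score",
        (low, high),
    ).fetchall()

    conn.close()
    return pair_count, histogram, in_range


if __name__ == "__main__":
    sample = [("a", "b", 21.4, "name"), ("a", "c", 14.9, "dob_sex"),
              ("d", "e", 15.2, "name"), ("f", "g", 8.0, "name")]
    print(run_pair_queries(sample, low=14, high=16))
```

The score-range query is the one most relevant to clerical review, since it isolates the potential matches that sit between the match and nonmatch thresholds.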

Results

Design of a cloud model for record linkage

The cloud model data matching process is shown in Figure 6. Essentially, every step in the record linkage process from indexing to group analysis is pushed to cloud infrastructure. Preprocessed data sets are transformed into a privacy-preserved state (masking) and uploaded to the cloud service for linking. The services within the cloud boundary now act as a TTP. The quality assurance and analysis steps sit on the boundary of the cloud as computation and query occur on the hosted cloud infrastructure, but the interactive analysis is performed by the analysis client on premises. If the analysis client has access to one or more of the raw data sets used in the linkage, these data can be annotated onto query results, allowing the clerk to make more informed decisions and preserving an experience to which they are accustomed.


As shown in the high-level architectural model in Figure 7, the demographic data (containing personal identifiers) remain on premises with the data custodian. Responsibilities of the data custodians are limited to data transformation and quality assurance management. The responsibilities of the cloud services are covered under four main categories: project configuration, matching, linkage map, and analytics and visualization. The project configuration category includes the services required for coordinating projects within and across separate data custodians. Privacy-preserved data sets are stored here, as well as metadata produced by the analysis and verification performed on the uploaded data sets. The matching category includes all match processing (classification) and pairs output, as well as services for providing recommendations on linkage parameters (such as m and u likelihood estimates for probabilistic linkages) for linkages between privacy-preserved data sets.[41] The linkage map category holds the entity group information, that is, the map between individual records and the group to which they belong. This category also contains services for processing and creating groups from pairs, as well as quality estimation and analysis. The analytics and visualization category contains all analytical services provided to the on-premises clients.
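For readers unfamiliar with the m and u parameters mentioned above, the following sketch shows how they are conventionally turned into field weights in the standard Fellegi-Sunter formulation of probabilistic linkage. It illustrates the general technique only and is not taken from the model's parameter recommendation service; the function name is hypothetical.

```python
import math


def field_weights(m: float, u: float) -> tuple[float, float]:
    """Return (agreement weight, disagreement weight) for one field.

    m: probability that the field agrees for a true match.
    u: probability that the field agrees for a non-match.
    """
    agreement = math.log2(m / u)
    disagreement = math.log2((1 - m) / (1 - u))
    return agreement, disagreement
```

A pair's score is then typically the sum of the agreement or disagreement weights across the compared fields.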


This model also allows computation to be pushed onto inexpensive, on-demand hardware while the data are in a privacy-preserved state, while retaining the advantage of seeing raw identifiers during other phases of the linkage process (e.g., quality assurance and analysis).

Experimental evaluation of the prototype

Each deduplication consisted of a single node job to split the data set into multiple bins, followed by a node array job for the matching of records within each bin. The split of data into bins is shown in Figure 8. In this example, all records with the same Soundex value will end up in the same bin.
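To make the Soundex example concrete, the sketch below implements the standard American Soundex code and groups records by it. It is an illustration only: the record layout and the `surname` field name are assumptions, and in the prototype the blocking values would come from the privacy-preserved data rather than from raw names.

```python
from collections import defaultdict

# Standard Soundex consonant codes; vowels, H, W, and Y have no code.
CODES = {
    **dict.fromkeys("BFPV", "1"),
    **dict.fromkeys("CGJKQSXZ", "2"),
    **dict.fromkeys("DT", "3"),
    "L": "4",
    **dict.fromkeys("MN", "5"),
    "R": "6",
}


def soundex(name: str) -> str:
    """Return the four-character American Soundex code for a name."""
    letters = [c for c in name.upper() if c.isalpha()]
    if not letters:
        return ""
    out = [letters[0]]
    prev = CODES.get(letters[0], "")
    for c in letters[1:]:
        if c in "HW":
            continue  # H and W do not separate repeated codes
        code = CODES.get(c, "")
        if code and code != prev:
            out.append(code)
        prev = code  # a vowel resets prev, so a repeated code is kept
    return "".join(out)[:4].ljust(4, "0")


def group_by_soundex(records: list[dict], field: str = "surname") -> dict:
    """Group records so that equal Soundex values share a group."""
    groups = defaultdict(list)
    for record in records:
        groups[soundex(record[field])].append(record)
    return dict(groups)
```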


The total comparison space was calculated using the blocking field frequencies in the data set. These frequencies represent the number of times each blocking field value occurs in the data, providing the ability to calculate the number of comparisons that will be performed for each blocking field value. First, the comparison space for each blocking field value was calculated from the frequency of that value within the file. The total comparison space was the sum of these, and the bin count was determined by dividing this total by the maximum desired comparison space for a single bin. The blocking field value with the largest comparison space was assigned to the first bin. The blocking field value with the next largest comparison space was assigned to the second bin. This process continued for each blocking field value, returning to the first bin when the last bin was reached. A file was created for each bin, which was then independently deduplicated. Blocking field values with a very high frequency are undesirable, as they are usually less useful for linkage and are costly in terms of computation. Any blocking field value with a frequency higher than the maximum desired comparison space was discarded.
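The bin assignment described above can be sketched as follows. Several details are assumptions made for illustration: the comparison space of a value with frequency f is taken to be f(f − 1)/2 (all within-block pairs for a deduplication), oversized values are discarded before the total is computed, and the bin count is rounded up. The function name is hypothetical.

```python
import math
from collections import Counter


def assign_bins(blocking_values, max_bin_space):
    """Assign each blocking value to a bin, round-robin by size.

    Returns (mapping of blocking value -> bin index, bin count).
    """
    freqs = Counter(blocking_values)
    # Discard values whose frequency exceeds the maximum desired space.
    kept = {v: f for v, f in freqs.items() if f <= max_bin_space}
    # Assumed comparison space for deduplication within one block.
    space = {v: f * (f - 1) // 2 for v, f in kept.items()}
    total = sum(space.values())
    bin_count = max(1, math.ceil(total / max_bin_space))
    # Largest comparison space first; ties broken by value for stability.
    ordered = sorted(space, key=lambda v: (-space[v], v))
    assignment = {v: i % bin_count for i, v in enumerate(ordered)}
    return assignment, bin_count
```

For example, with values A (4 records), B (3), C (2), and D (10) and a maximum of 5, D is discarded, the remaining comparison spaces are 6, 3, and 1, two bins are created, and A and C share the first bin while B goes to the second.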

The total comparison space used for each data set, along with the bin count and pair count, is presented in Table 3. The two blocks used for the creation of separate bins for distribution across the processing cluster resulted in some duplication of comparisons and, thus, duplication of pairs.
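Duplicate pairs of this kind can be collapsed after matching. The sketch below is one possible approach and not part of the prototype as described: it treats a pair as unordered and keeps the highest score seen. The tuple layout `(id_a, id_b, score)` and the function name are hypothetical.

```python
def merge_pairs(*pair_lists):
    """Merge pairs from several bins, dropping duplicates.

    Each pair is (id_a, id_b, score); (a, b) and (b, a) are treated
    as the same pair and the highest score is retained.
    """
    best = {}
    for pairs in pair_lists:
        for id_a, id_b, score in pairs:
            key = (id_a, id_b) if id_a <= id_b else (id_b, id_a)
            if key not in best or score > best[key]:
                best[key] = score
    return best
```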


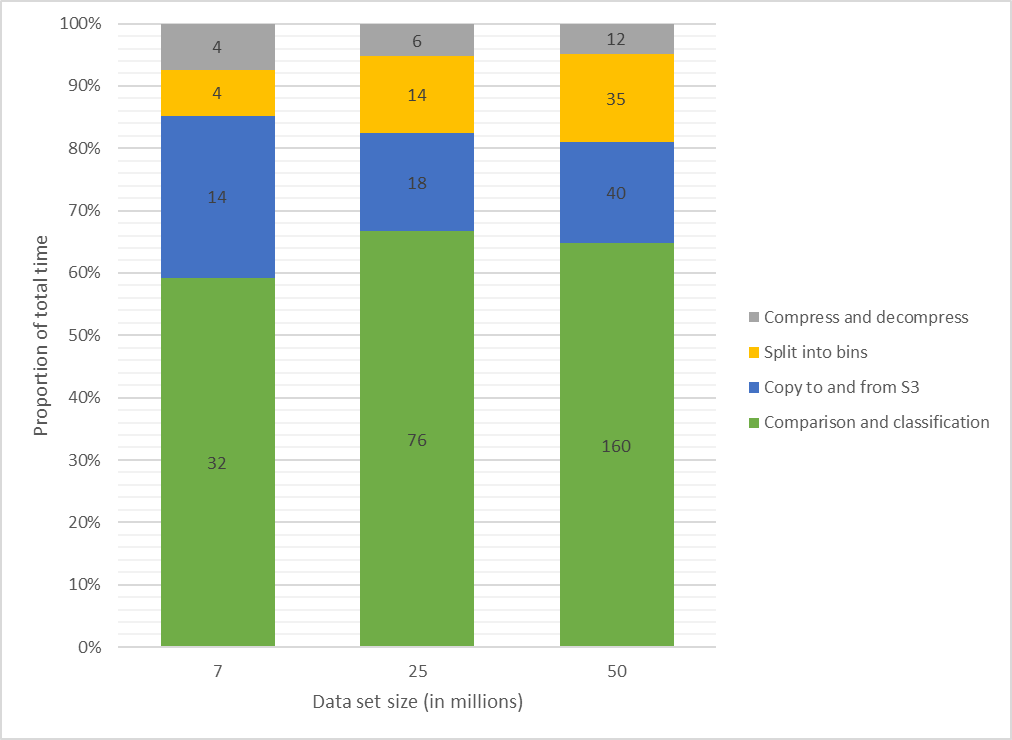

Approximately 60% of each container’s time was spent on comparison and classification (Figure 9). Much of the remaining time was spent moving data into and out of the container itself. Splitting a data set into bins for parallel computation took between 7% (4/54 minutes) and 14% (35/247 minutes) of the total task time, a reasonable sacrifice considering the scalability this gives the classification jobs. Provisioning of the compute resources took two to four minutes for each data set.


Running times of ad hoc queries on data sets are shown in Table 4; these were each executed five times on the client and run through the AWS Athena API. The mean execution time did not vary greatly across the differently sized data sets. With a simple count query taking around 25 seconds, there appears to be some initial setup time for provisioning the backend Presto cluster. This is expected and should not be considered an issue, particularly with all queries of the largest data set of 4.4 billion pairs taking less than one minute to execute.


In terms of costs associated with the use of AWS cloud services for our evaluation, there were two main types. First, we had the cost of on-demand processing, which is typically charged by the second. This totaled just over U.S. $20 for the linkage processing used for all three data sets. The second was the cost of storage, charged per month. To retain the pairs files generated for all three data sets, it cost only U.S. $2 per month. Querying data via the Athena service is currently charged at U.S. $5 per terabyte scanned.
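As a rough guide to the query charge, the per-terabyte rate quoted above can be applied to the volume of data a query scans. The sketch below assumes a decimal terabyte (10^12 bytes) and ignores any minimum charge or rounding the provider may apply; the function name is hypothetical.

```python
def athena_scan_cost(bytes_scanned: int, usd_per_tb: float = 5.0,
                     bytes_per_tb: int = 10**12) -> float:
    """Estimate the query charge in U.S. dollars for a scanned volume.

    The terabyte size is an assumption; check current pricing terms.
    """
    return bytes_scanned / bytes_per_tb * usd_per_tb
```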

Discussion

Principal findings

Our results show that an effective cloud model that extends linkage capacity into cloud infrastructure can be developed. A prototype was built based on this model. The execution times of the prototype were reasonable and far shorter than one would expect when running the same software on a single hosted machine. Indeed, it is likely that on a single hosted machine, the large data set (50 million records) would need to be broken up into smaller chunks and linkages on these chunks run sequentially.

The splitting of data for comparison into separate bins worked well for distributing the work and mapped easily to the AWS Batch mechanism for execution of a cluster of containers. The creation of an AWS step function to manage the process from start to end was relatively straightforward. Step functions provide out-of-the-box support for AWS Batch. However, custom Lambda functions were required to trigger the AWS Glue crawler and retrieve the results from the first data-split task so that the appropriate size batch job could be provisioned.
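A workflow of this shape might be expressed in the Amazon States Language roughly as below, written here as a Python dictionary. This is a sketch under stated assumptions and not the prototype's definition: the state names, the `$.binCount` path, and the use of a separate Lambda state to read the bin count are illustrative, and the job queue, job definitions, and Lambda ARNs are placeholders supplied by the caller. The `batch:submitJob.sync` resource is the Step Functions integration that waits for a Batch job to finish.

```python
import json

BATCH_SYNC = "arn:aws:states:::batch:submitJob.sync"


def linkage_state_machine(job_queue, split_job_def, match_job_def,
                          bin_count_fn_arn, crawler_fn_arn):
    """Build an illustrative state machine: split, size, match, catalog."""
    return {
        "StartAt": "SplitData",
        "States": {
            "SplitData": {  # single-node job that writes the bin files
                "Type": "Task",
                "Resource": BATCH_SYNC,
                "Parameters": {"JobName": "split", "JobQueue": job_queue,
                               "JobDefinition": split_job_def},
                "ResultPath": "$.split",
                "Next": "GetBinCount",
            },
            "GetBinCount": {  # custom Lambda returning the number of bins
                "Type": "Task",
                "Resource": bin_count_fn_arn,
                "ResultPath": "$.binCount",
                "Next": "MatchBins",
            },
            "MatchBins": {  # array job with one child per bin
                "Type": "Task",
                "Resource": BATCH_SYNC,
                "Parameters": {"JobName": "match", "JobQueue": job_queue,
                               "JobDefinition": match_job_def,
                               "ArrayProperties": {"Size.$": "$.binCount"}},
                "ResultPath": "$.match",
                "Next": "CatalogPairs",
            },
            "CatalogPairs": {  # custom Lambda that starts the Glue crawler
                "Type": "Task",
                "Resource": crawler_fn_arn,
                "End": True,
            },
        },
    }


def to_json(definition: dict) -> str:
    """Serialize the definition for upload to Step Functions."""
    return json.dumps(definition, indent=2)
```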

As the fields used for splitting the data were the same as those used for blocking on each node, the comparison space was not different from running a linkage of the entire data set on a single machine. With the same comparison space and probabilistic parameters, the accuracy of the linkage is also identical. Having a mechanism for distributing linkage processing on multiple nodes with no reduction in accuracy is certainly a massive advantage for data linkage units looking to extend their linkage capacity.

The AWS Batch job definition’s retry strategy was configured with five attempts, applying to each job in the batch. This provides some resilience to instance failures, outages, and failures triggered within the container. However, in our evaluation, this feature was never triggered. The timeout setting was set to a value well beyond what was expected as jobs that time out are not retried, and our prototype did not handle this particular scenario. Although our implementation of the step function provided no failure strategies for any task in the workflow, handling error conditions is supported, and retry mechanisms within the state machine can be created as desired. An operational linkage system would require these failure scenarios to be handled.
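The settings discussed above might look like the following sketch. The five attempts come from the text; the timeout value, retry interval, backoff rate, and the failure state name are hypothetical. `retryStrategy` and `timeout` are fields of an AWS Batch job definition, and `Retry` and `Catch` are the Amazon States Language fields for handling task errors.

```python
import copy

# Fragment of a Batch job definition: five attempts per job, with a
# generous (hypothetical) six-hour timeout per attempt.
BATCH_JOB_SETTINGS = {
    "retryStrategy": {"attempts": 5},
    "timeout": {"attemptDurationSeconds": 6 * 60 * 60},
}


def with_failure_handling(task_state: dict, failure_state: str,
                          max_attempts: int = 2) -> dict:
    """Return a copy of a Task state with retry and catch rules added."""
    state = copy.deepcopy(task_state)
    state["Retry"] = [{
        "ErrorEquals": ["States.ALL"],
        "IntervalSeconds": 30,
        "MaxAttempts": max_attempts,
        "BackoffRate": 2.0,
    }]
    # After retries are exhausted, route to a dedicated failure state.
    state["Catch"] = [{"ErrorEquals": ["States.ALL"], "Next": failure_state}]
    return state
```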

Improvements to the prototype will address some of the other limitations found in the existing implementation. For example, S3 data transfer times could be reduced by using a series of smaller result files for pairs and uploading all of these in parallel. The over-matching and duplication of pairs could be addressed by improving the indexing algorithm used to split data. Although there is inevitably going to be some overlap of blocks, our naïve implementation could be improved. Our algorithm for distributing blocks attempts to distribute workload as evenly as possible based on the estimated comparison space. Discarding overly large blocks helps prevent excessive load on single matching nodes. However, it relies on secondary blocks to match the records within and only partly prevents imbalanced load distribution. The block-based load balancing techniques developed for the MapReduce linkage algorithms can be applied here to mitigate data skew further, where record pairs are distributed for matching instead of blocks.
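The first of these improvements, writing pairs as several smaller files and uploading them in parallel, could be sketched as follows. This is an illustration only: the chunk size, key naming, and function names are hypothetical, and the actual transfer is delegated to an `upload(key, lines)` callable supplied by the caller (for example, a thin wrapper around an S3 client).

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_lines(lines: list, chunk_size: int) -> list:
    """Split the pairs output into consecutive chunks of lines."""
    return [lines[i:i + chunk_size] for i in range(0, len(lines), chunk_size)]


def upload_in_parallel(chunks: list, upload, prefix: str,
                       max_workers: int = 8) -> list:
    """Upload each chunk under its own key using a thread pool.

    Returns the list of keys written, in chunk order.
    """
    keys = [f"{prefix}/part-{i:05d}.csv" for i in range(len(chunks))]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Consuming the iterator surfaces any exception raised by upload.
        list(pool.map(upload, keys, chunks))
    return keys
```

Threads suit this task because the work is dominated by network transfer rather than computation.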

As improvements to PPRL techniques are developed over time, these changes can be factored in with little change to the model. Future work on the prototype will look to extend the capability of PPRL to use additional security advances such as homomorphic encryption[42] and function-hiding encryption.[43]

Conclusions

The model developed and evaluated here successfully extends linkage capability into the cloud. By using PPRL techniques and moving computation into cloud infrastructure, privacy is maintained while taking advantage of the considerable scalability offered by cloud solutions. The adoption of such a model will provide linkage units with the ability to process increasingly larger data sets without impacting data release protocols and individual patient privacy. In addition, the ability to store detailed linkage information provides exciting opportunities for increasing the quality of linkage and advancing the analysis of linkage outputs. Rich analytics, machine learning, automation, and visualization of these additional data will enable the next generation of quality assurance tooling for linkage.

Abbreviations

API: application programming interface

AWS: Amazon Web Services

CPU: central processing unit

GPU: graphics processing unit

LSH: locality sensitive hashing

PPRL: privacy-preserving record linkage

TTP: trusted third party

Acknowledgements

AB has been supported by an Australian Government Research Training Program Scholarship.

Author contributions

The core of this project was completed by AB as part of his PhD project. SR provided assistance and support for requirements analysis, testing, and data analysis. Both authors read, edited, and approved the final manuscript.

Conflict of interest

None declared.

References

- ↑ Abebe, R.; Hill, S.; Vaughan, J.W. et al. (2019). "Using Search Queries to Understand Health Information Needs in Africa". Proceedings of the Thirteenth International AAAI Conference on Web and Social Media 13 (1): 3–14. https://ojs.aaai.org/index.php/ICWSM/article/view/3360.

- ↑ Radin, J.M.; Wineinger, N.E.; Topol, E.J. et al. (2020). "Harnessing wearable device data to improve state-level real-time surveillance of influenza-like illness in the USA: A population-based study". The Lancet Digital Health 2 (2): e85–e93. doi:10.1016/S2589-7500(19)30222-5.

- ↑ Lai, S.; Farnham, A.; Ruktanonchai, N.W. et al. (2019). "Measuring mobility, disease connectivity and individual risk: A review of using mobile phone data and mHealth for travel medicine". Journal of Travel Medicine 26 (3): taz019. doi:10.1093/jtm/taz019. PMC PMC6904325. PMID 30869148. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6904325.

- ↑ Khoury, M.J.; Iademarco, M.F.; Riley, W.T. (2016). "Precision Public Health for the Era of Precision Medicine". American Journal of Preventive Medicine 50 (3): 398–401. doi:10.1016/j.amepre.2015.08.031. PMC PMC4915347. PMID 26547538. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4915347.

- ↑ McGrail, K.; Jones, K. (2018). "Population Data Science: The science of data about people". Conference Proceedings for International Population Data Linkage Conference 2018 3 (4). doi:10.23889/ijpds.v3i4.918.

- ↑ Christen, P. (2012). Data Matching: Concepts and Techniques for Record Linkage, Entity Resolution, and Duplicate Detection. Springer-Verlag. doi:10.1007/978-3-642-31164-2. ISBN 9783642311642.

- ↑ Dunn, H.L. (1946). "Record Linkage". American Journal of Public Health and the Nation's Health 36 (12): 1412–6. PMC PMC1624512. PMID 18016455. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1624512.

- ↑ Casey, J.A.; Schwartz, B.S.; Stewart, W.F. et al. (2016). "Using Electronic Health Records for Population Health Research: A Review of Methods and Applications". Annual Review of Public Health 37: 61–81. doi:10.1146/annurev-publhealth-032315-021353. PMC PMC6724703. PMID 26667605. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6724703.

- ↑ Population Health Research Network (17 April 2014). "Population Health Research Network 2013 Independent Panel Review: Findings and Recommendations" (PDF). https://www.phrn.org.au/media/80607/phrn-2013-independent-review-findings-and-recommendations-v2-_final-report-april-17-2014-2.pdf. Retrieved 15 September 2020.

- ↑ UNSW Australia (2015). "Centre for Big Data Research in Health: Annual Report 2015" (PDF). https://cbdrh.med.unsw.edu.au/sites/default/files/CBDRH_Annual%20Report_2015_160609_Final.pdf. Retrieved 15 September 2020.

- ↑ Liversidge, J.; Spencer, J.; Weinstein, E. et al. (21 December 2018). "Predicts 2019: Cloud Adoption and Increasing Regulation Will Drive Investment in IT Vendor Management". Gartner Research. https://www.gartner.com/en/documents/3896211/predicts-2019-cloud-adoption-and-increasing-regulation-w. Retrieved 15 September 2020.

- ↑ Vasiljeva, T.; Shaikhulina, S.; Kreslins, K. (2017). "Cloud Computing: Business Perspectives, Benefits and Challenges for Small and Medium Enterprises (Case of Latvia)". Procedia Engineering 178: 443–51. doi:10.1016/j.proeng.2017.01.087.

- ↑ Khalil, I.M.; Khreishah, A.; Bouktif, S. et al. (2013). "Security Concerns in Cloud Computing". Proceedings of the 10th International Conference on Information Technology: New Generations: 411–416. doi:10.1109/ITNG.2013.127.

- ↑ John, J.; Norman, J. (2018). "Major Vulnerabilities and Their Prevention Methods in Cloud Computing". In Peter, J.; Alavi, A.; Javadi, B.. Advances in Big Data and Cloud Computing. Springer. pp. 11–26. doi:10.1007/978-981-13-1882-5_2. ISBN 9789811318825.

- ↑ 15.0 15.1 Abd El-Ghafar, R.M.; Gheith, M.H.; El-Bastawissy, A. et al. (2017). "Record linkage approaches in big data: A state of art study". Proceedings of the 13th International Computer Engineering Conference: 224–230. doi:10.1109/ICENCO.2017.8289792.

- ↑ 16.0 16.1 Dou, C.; Sun, D.; Chen, Y.-C. et al. (2016). "Probabilistic parallelisation of blocking non-matched records for big data". Proceedings of the 2016 IEEE International Conference on Big Data: 3465–3473. doi:10.1109/BigData.2016.7841009.

- ↑ 17.0 17.1 Chu, X.; Ilyas, I.F.; Koutris, P. (2016). "Distributed data deduplication". Proceedings of the VLDB Endowment 9 (11): 864–875. doi:10.14778/2983200.2983203.

- ↑ 18.0 18.1 Gazzari, L.; Herschel, M. (2020). "Towards task-based parallelization for entity resolution". SICS Software-Intensive Cyber-Physical Systems 35: 31–38. doi:10.1007/s00450-019-00409-6.

- ↑ Papadakis, G.; Skoutas, D.; Thanos, E. et al. (2020). "Blocking and Filtering Techniques for Entity Resolution: A Survey". ACM Computing Surveys 53 (2): 31. doi:10.1145/3377455.

- ↑ Karapiperis, D.; Verykios, V.S. (2016). "A fast and efficient Hamming LSH-based scheme for accurate linkage". Knowledge and Information Systems 49: 861–884. doi:10.1007/s10115-016-0919-y.

- ↑ Pita, R.; Pinto, C.; Melo, P. et al. (2015). "A Spark-based workflow for probabilistic record linkage of healthcare data" (PDF). Workshop Proceedings of the EDBT/ICDT 2015 Joint Conference: 1–10. http://ceur-ws.org/Vol-1330/paper-04.pdf.

- ↑ Gagliardelli, L.; Simonini, G.; Beneventano, D. et al. (2019). "SparkER: Scaling Entity Resolution in Spark". Proceedings of the 22nd International Conference on Extending Database Technology: 602–05. doi:10.5441/002/edbt.2019.66.

- ↑ Mestre, D.G.; Pires, C.E.S.; Nascimento, D.C. et al. (2017). "An efficient spark-based adaptive windowing for entity matching". Journal of Systems and Software 128: 1–10. doi:10.1016/j.jss.2017.03.003.

- ↑ Sehili, Z.; Kolb, L.; Borgs, C. et al. (2015). "Privacy Preserving Record Linkage with PPJoin" (PDF). Proceedings of the 16th Fachtagung für Datenbanksysteme in Business, Technologie und Web: 1–20. https://dbs.uni-leipzig.de/file/P4Join-BTW2015.pdf.

- ↑ Boratto, M.; Alonso, P.; Pinto, C. et al. (2019). "Exploring hybrid parallel systems for probabilistic record linkage". Journal of Supercomputing 75: 1137–1149. doi:10.1007/s11227-018-2328-3.

- ↑ Christen, P. (2012). "A Survey of Indexing Techniques for Scalable Record Linkage and Deduplication". IEEE Transactions on Knowledge and Data Engineering 24 (9): 1537–1555. doi:10.1109/TKDE.2011.127.

- ↑ Trinckes Jr., J.J. (2013). The Definitive Guide to Complying with the HIPAA/HITECH Privacy and Security Rules. CRC Press. ISBN 9781466507685.

- ↑ "Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation) (Text with EEA relevance)". Publications Office of the EU. European Union. 27 April 2016. https://op.europa.eu/en/publication-detail/-/publication/3e485e15-11bd-11e6-ba9a-01aa75ed71a1/language-en. Retrieved 15 September 2020.

- ↑ Vatsalan, D.; Sehili, Z.; Christen, P. et al. (2017). "Privacy-Preserving Record Linkage for Big Data: Current Approaches and Research Challenges". In Zomaya, A.; Sakr, S.. Handbook of Big Data Technologies. Springer. pp. 851–95. doi:10.1007/978-3-319-49340-4_25. ISBN 9783319493404.

- ↑ 30.0 30.1 Boyd, J.H.; Randall, S.; Brown, A.P. et al. (2019). "Population Data Centre Profiles: Centre for Data Linkage". International Journal of Population Data Science 4 (2): 12. doi:10.23889/ijpds.v4i2.1139.

- ↑ Vatsalan, D.; Karapiperis, D.; Verykios, V.S. (2018). "Privacy-Preserving Record Linkage". In Sakr, S.; Zomaya, A.. Encyclopedia of Big Data Technologies. Springer. doi:10.1007/978-3-319-63962-8. ISBN 9783319639628.

- ↑ Karapiperis, D.; Gkoulalas-Divanis, A.; Verykios, V.S. (2016). "LSHDB: A parallel and distributed engine for record linkage and similarity search". Proceedings of the IEEE 16th International Conference on Data Mining Workshops: 1–4. doi:10.1109/ICDMW.2016.7867099.

- ↑ Bonomi, L.; Xiong, L.; Lu, J.J. (2013). "LinkIT: privacy preserving record linkage and integration via transformations". Proceedings of the 2013 ACM SIGMOD International Conference on Management of Data: 1029–32. doi:10.1145/2463676.2465259.

- ↑ Toth, C.; Durham, E.; Kantarcioglu, M. et al. (2014). "SOEMPI: A Secure Open Enterprise Master Patient Index Software Toolkit for Private Record Linkage". AMIA Annual Symposium Proceedings 2014: 1105–14. PMC PMC4419976. PMID 25954421. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4419976.

- ↑ 35.0 35.1 Randall, S.M.; Boyd, J.H.; Ferrante, A.M. et al. (2015). "Grouping methods for ongoing record linkage". Proceedings of the First International Workshop on Population Informatics for Big Data: 1–6. doi:10.13140/RG.2.1.2003.9120.

- ↑ Irvine, K.; Smith, M.; de Vos, R. et al. (2018). "Real world performance of privacy preserving record linkage". Conference Proceedings for International Population Data Linkage Conference 2018 3 (4): 1–6. doi:10.23889/ijpds.v3i4.990.

- ↑ Lee, Y.A. (2019). "Medicineinsight: Scalable and Linkable General Practice Data Set". Proceeding of Health Data Analytics 2019.

- ↑ Christen, P.; Pudjijono, A. (2009). "Accurate Synthetic Generation of Realistic Personal Information". Proceedings of the 2009 Pacific-Asia Conference on Knowledge Discovery and Data Mining: 507–14. doi:10.1007/978-3-642-01307-2_47.

- ↑ Geoscape Australia (22 February 2016). "Geoscape Geocoded National Address File (G-NAF)". data.gov.au. Australian Government. https://data.gov.au/data/dataset/19432f89-dc3a-4ef3-b943-5326ef1dbecc. Retrieved 15 September 2020.

- ↑ Ferrante, A.; Boyd, J. (2012). "A transparent and transportable methodology for evaluating Data Linkage software". Journal of Biomedical Informatics 45 (1): 165–72. doi:10.1016/j.jbi.2011.10.006. PMID 22061295.

- ↑ Brown, A.P.; Randall, S.M.; Ferrante, A.M. et al. (2017). "Estimating parameters for probabilistic linkage of privacy-preserved datasets". BMC Medical Research Methodology 17 (1): 95. doi:10.1186/s12874-017-0370-0. PMC PMC5504757. PMID 28693507. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5504757.

- ↑ Randall, S.M.; Brown, A.P.; Ferrante, A.M. et al. (2015). "Privacy preserving record linkage using homomorphic encryption" (PDF). Proceedings of the First International Workshop on Population Informatics for Big Data: 1–7. http://linkr.anu.edu.au/popinfo2015/papers/4-randall2015popinfo2.pdf.

- ↑ Lee, J.; Kim, D.; Kim, D. et al. (2018). "Instant Privacy-Preserving Biometric Authentication for Hamming Distance". IACR Cryptology ePrint Archive 2018: 1–28. https://api.semanticscholar.org/CorpusID:57760611.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. Grammar was cleaned up for smoother reading. In some cases important information was missing from the references, and that information was added. At the time of loading of this article, the links to the Additional File 1 and 2 were broken on the original site; a request to fix the errors has been sent to the journal.