Difference between revisions of "User:Shawndouglas/sandbox/sublevel5"


Revision as of 17:55, 18 August 2018


| Full article title | Big data management for healthcare systems: Architecture, requirements, and implementation |
|---|---|
| Journal | Advances in Bioinformatics |
| Author(s) | El aboudi, Naoual; Benhilma, Laila |
| Author affiliation(s) | Mohammed V University |
| Primary contact | Email: nawal dot elaboudi at gmail dot com |
| Editors | Fdez-Riverola, Florentino |
| Year published | 2018 |
| Volume and issue | 2018 |
| Page(s) | 4059018 |
| DOI | 10.1155/2018/4059018 |
| ISSN | 1687-8035 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.hindawi.com/journals/abi/2018/4059018/ |
| Download | http://downloads.hindawi.com/journals/abi/2018/4059018.pdf (PDF) |

This article should not be considered complete until this message box has been removed. This is a work in progress.

Abstract

The growing amount of data in the healthcare industry has made the adoption of big data techniques inevitable in order to improve the quality of healthcare delivery. Despite the integration of big data processing approaches and platforms in existing data management architectures for healthcare systems, these architectures face difficulties in preventing emergency cases. The main contribution of this paper is an extensible big data architecture based on both stream computing and batch computing, which aims to further enhance the reliability of healthcare systems by generating real-time alerts and making accurate predictions on patient health condition. Based on the proposed architecture, a prototype implementation has been built for healthcare systems in order to generate real-time alerts. The suggested prototype is based on Spark and MongoDB tools.

Introduction

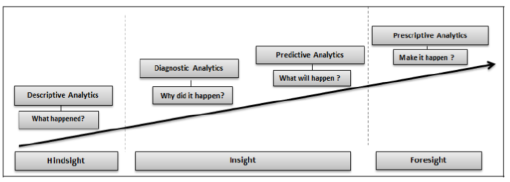

The proportion of elderly people in society is growing worldwide[1]; this phenomenon, referred to by the World Health Organization as "humanity’s aging"[1], has many implications for healthcare services, especially in terms of cost. In the face of such a situation, relying on classical systems may result in a decline in quality of life for millions of people. Seeking to overcome this problem, a variety of healthcare systems have been designed. Their common principle is transferring, on a periodic basis, medical parameters like blood pressure, heart rate, glucose level, body temperature, and ECG signals to an automated system that monitors patients' health condition in real time. Such systems provide quick assistance when needed since data is analyzed continuously. Automating health monitoring favors a proactive approach that relieves medical facilities by saving costs related to hospitalization, and it also enhances healthcare services by reducing waiting time for consultations. Recently, the number of data sources in the healthcare industry has grown rapidly as a result of the widespread use of mobile and wearable sensor technologies, which have flooded the healthcare arena with a huge amount of data. Therefore, it has become challenging to perform healthcare data analysis with traditional methods, which are unfit to handle the high volume of diversified medical data. In general, the healthcare domain has four categories of analytics: descriptive, diagnostic, predictive, and prescriptive analytics. A brief description of each one of them is given below.

Descriptive analytics refers to describing current situations and reporting on them. Several techniques are employed to perform this level of analytics. For instance, descriptive statistics tools like histograms and charts are among the techniques used in descriptive analytics.

Diagnostic analysis aims to explain why certain events occurred and which factors triggered them. For example, diagnostic analysis attempts to understand the reasons behind the regular readmission of some patients by using methods such as clustering and decision trees.

Predictive analytics reflects the ability to predict future events; it also helps in identifying trends and determining probabilities of uncertain outcomes. An illustration of its role is to predict whether or not a patient will have complications. Predictive models are often built using machine learning techniques.

Prescriptive analytics proposes suitable actions leading to optimal decision-making. For instance, prescriptive analysis may suggest rejecting a given treatment when the probability of a harmful side effect is high. Decision trees and Monte Carlo simulation are examples of methods applied to perform prescriptive analytics. Figure 1 illustrates analytics phases for the healthcare domain.[2] The integration of big data technologies into healthcare analytics may lead to better performance of medical systems.


In fact, big data refers to large datasets that combine the following characteristics[3]: volume, which refers to high amounts of data; velocity, which means that data is generated at a rapid pace; variety, which emphasizes that data comes in different formats; and veracity, which means that data originates from trustworthy sources.

Another characteristic of big data is variability. It indicates variations that occur in the data flow rates. Indeed, velocity does not provide a consistent description of the data due to its periodic peaks and troughs. Another important aspect of big data is complexity; it arises from the fact that big data is often produced by many sources, which requires performing many operations over the data. These operations include identifying relationships, as well as cleansing and transforming data flowing from different origins.

Moreover, Oracle decided to introduce value as a key attribute of big data. According to Oracle, big data has a “low value density,” which means that raw data has a low value compared to its high volume. Nevertheless, analysis of large volumes of data may yield a high value.

In the context of healthcare, high volumes of data are generated by multiple medical sources; they include, for example, biomedical images, lab test reports, physician written notes, and health condition parameters allowing real-time patient health monitoring. In addition to its huge volume and its diversity, healthcare data flows at high speed. As a result, big data approaches offer tremendous opportunities regarding healthcare systems efficiency.

The contribution of this research paper is to propose an extensible big data architecture for healthcare applications, formed by several components capable of storing, processing, and analyzing significant amounts of data in real-time and batch modes. This paper demonstrates the potential of using big data analytics in the healthcare domain to find useful information in highly valuable data.

The paper has been organized as follows: In the next section, a background of big data computing approaches and big data platforms is provided. Recent contributions on big data for healthcare systems are reviewed in the section after. Then, in the section "An extensible big data architecture for healthcare," the components of the proposed big data architecture for healthcare are described. The implementation process is reported in the penultimate section, followed by conclusions, along with recommendations for future research.

Background

An overview of big data approaches

Big data technologies have received great attention due to their successful handling of high volume data compared to traditional approaches. A big data framework supports all kinds of data—including structured, semistructured, and unstructured data—while providing several features. Those features include predictive model design and big data mining tools that allow better decision-making processes through the selection of relevant information.

Big data processing can be performed in two ways: batch processing and stream processing.[4] Batch processing is based on analyzing data over a specified period of time; it is adopted when there are no constraints regarding the response time, and it aims to process a high volume of data by collecting and storing batches to be analyzed in order to generate results. Stream processing, on the other hand, is suitable for applications requiring real-time feedback.

Batch processing mode requires ingesting all data before processing it in a specified time. MapReduce represents a widely adopted solution in the field of batch computing[5]; it operates by splitting data into small pieces that are distributed to multiple nodes in order to obtain intermediate results. Once the nodes have finished processing, the outcomes are aggregated in order to generate the final results. To optimize the use of computational resources, MapReduce allocates processing tasks to nodes close to the data location. This model has been successful in many applications, especially in the fields of bioinformatics and healthcare. A batch processing framework can access all data and perform many complex computation operations, and its latency is measured in minutes or more.
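The split, map, and aggregate flow described above can be sketched in a few lines of plain Python. This is only an illustrative, single-process sketch and not the Hadoop API: the function names (`map_reading`, `shuffle`, `reduce_mean`, `run_job`) and the patient heart-rate records are assumptions made up for the example.

```python
from collections import defaultdict


def map_reading(record):
    """Map step: emit one (key, value) pair for one input record."""
    patient_id, heart_rate = record
    return (patient_id, heart_rate)


def shuffle(pairs):
    """Group intermediate values by key, between the map and reduce steps."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_mean(key, values):
    """Reduce step: aggregate all values of one key into a final result."""
    return (key, sum(values) / len(values))


def run_job(splits):
    """Run map over every split, then shuffle and reduce the results."""
    intermediate = []
    for split in splits:  # each split stands in for a block held by one node
        intermediate.extend(map_reading(record) for record in split)
    grouped = shuffle(intermediate)
    return dict(reduce_mean(key, values) for key, values in grouped.items())


# Hypothetical input: (patient id, heart rate) records split into two blocks.
splits = [
    [("p1", 70), ("p2", 90)],
    [("p1", 80), ("p2", 110), ("p3", 65)],
]
result = run_job(splits)
```

In a real cluster the splits would be processed in parallel on different nodes; here they are processed in a loop only to show the data flow.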

Stream processing offers another methodology to analysts. In real applications such as healthcare, intelligent transportation, and finance, a large amount of data is produced continuously. When such data streams must be processed in real time, data analysis has to take into account the continuous evolution of the data and the permanent change in the statistical characteristics of data streams, referred to as concept drift.[6] Indeed, storing a large amount of data for further processing may be challenging in terms of memory resources. Moreover, real applications tend to produce noisy data that contains missing values and redundant features, which complicates data analysis and requires considerable computational time. Stream processing reduces this computational burden by performing simple and fast computations on one data element or on a window of recent data; such computations take seconds at most.

Big data stream mining methods, including classification, frequent pattern mining, and clustering, relieve computational effort through rapid extraction of the most relevant information; this objective is often achieved by mining data in a distributed manner. Those methods belong to one of the two following classes: data-based techniques and task-based techniques.[7] Data-based techniques summarize the entire dataset or select a subset of the continuous flow of streaming data to be processed. Sampling is one of these techniques; it consists of choosing a small subset of data to be processed according to a statistical criterion. Another data-based method is load shedding, which drops a part of the data, while the sketching technique establishes a random projection on a feature set. The synopsis data structures method and the aggregation method also belong to the family of data-based techniques, the first one summarizing data streams and the latter representing a number of elements by one element using a statistical measure.
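As an illustration of sampling over a stream, the sketch below uses reservoir sampling, a standard technique that is not named in the text above and is chosen here only as one possible example. It keeps a uniform random sample of `k` elements from a stream of unknown length while holding only `k` elements in memory. The function name and the `seed` parameter are assumptions for the example.

```python
import random


def reservoir_sample(stream, k, seed=None):
    """Return a uniform random sample of up to k items from an iterable."""
    rng = random.Random(seed)
    reservoir = []
    for i, item in enumerate(stream):
        if i < k:
            # Fill the reservoir with the first k items.
            reservoir.append(item)
        else:
            # Pick a slot in [0, i]; the new item replaces an old one
            # only if the slot falls inside the reservoir.
            j = rng.randint(0, i)
            if j < k:
                reservoir[j] = item
    return reservoir


# Hypothetical stream of 10,000 sensor readings, sampled down to 5.
sample = reservoir_sample(range(10_000), k=5, seed=42)
```

The stream is consumed once and never stored, which matches the memory constraint that motivates data-based techniques.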

Task-based techniques update existing methods or design new ones to reduce the computational time in the case of data stream processing. They are categorized into approximation algorithms that generate outputs with an acceptable error margin, a sliding window that analyzes recent data under the assumption that it is more useful than older data, and algorithm output granularity that processes data according to the available memory and time constraints.
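The sliding window idea can be sketched as follows. This is a minimal example under stated assumptions: the class name `SlidingWindowMean` is hypothetical, and the mean is only one of many statistics that could be maintained over the window.

```python
from collections import deque


class SlidingWindowMean:
    """Maintain the mean of the most recent `size` values of a stream."""

    def __init__(self, size):
        if size <= 0:
            raise ValueError("size must be positive")
        self.window = deque(maxlen=size)
        self.total = 0.0

    def update(self, value):
        """Add one value and return the mean over the current window."""
        if len(self.window) == self.window.maxlen:
            # The oldest value is about to be evicted by append().
            self.total -= self.window[0]
        self.window.append(value)
        self.total += value
        return self.total / len(self.window)


# Hypothetical stream of readings processed one element at a time.
window = SlidingWindowMean(size=3)
means = [window.update(v) for v in [1, 2, 3, 4]]
```

Each update costs constant time and memory is bounded by the window size, so older data is discarded rather than stored.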

Big data approaches are essential for modern healthcare analytics; they allow real-time extraction of relevant information from a large amount of patient data. As a result, alerts are generated when the prediction model detects possible complications. This process helps to prevent health emergencies from occurring; it also assists medical professionals in decision-making regarding disease diagnosis and provides special care recommendations.

Big data processing frameworks

Concerning batch processing mode, the MapReduce framework is widely adopted; it allows distributed analysis of big data on a cluster of machines. Computations are expressed through two simple functions, map and reduce. MapReduce relies on a master/slave architecture: the master node divides data into blocks, structures them as a set of key/value pairs that serve as input to map tasks, and allocates processing tasks to slave nodes. Each worker that is assigned a map task reads the appropriate input data, and the results of the map task are then written into intermediate files. Next, the reducer workers receive the results generated by the map tasks as input to the reduce tasks. Finally, the results are written into final output files. MapReduce runs on Hadoop, an open-source framework that stores and analyzes data in a parallel manner through clusters.

The entire framework is composed of two main components: Hadoop MapReduce and a distributed file system. The Hadoop Distributed File System (HDFS) stores data by replicating it across many nodes. Hadoop MapReduce, on the other hand, implements the MapReduce programming model; its master node stores metadata information such as the locations of duplicated blocks, and it identifies the locations of data nodes to recover missing blocks in failure cases. The data are split into several blocks, and the processing operations are performed on the same machine that holds them. With Hadoop, other tools for data storage can be used instead of HDFS, such as HBase, Cassandra, and relational databases. Data warehousing may be performed by other tools, for instance, Pig and Hive, while Apache Mahout is employed for machine learning purposes. When stream processing is required, Hadoop may not be a suitable choice since all input data must be available before starting MapReduce tasks.

Recently, Storm from Twitter, S4 from Yahoo, Spark, and other programs were presented as solutions for processing incoming stream data. Each solution has its own peculiarities.

Storm is an open-source framework to analyze data in real time, and it is composed of "spouts" and "bolts."[8] Spouts can produce data or load data from an input queue, and bolts process input streams and generate output streams. In Storm programming, a combination of spouts and bolts is named a topology. Storm has three types of nodes: the master node or nimbus, the worker node, and the zookeeper. The master node distributes and coordinates the execution of the topology, while the worker node is responsible for executing spouts and bolts. Finally, the zookeeper synchronizes distributed coordination.
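The spout and bolt roles can be mimicked with Python generators. This sketch does not use the Storm API and runs in a single process; the names `reading_spout`, `threshold_bolt`, `format_bolt`, and `build_topology`, as well as the heart-rate limit of 100, are hypothetical and serve only to show how a spout feeds a chain of bolts.

```python
def reading_spout(readings):
    """Spout: emit tuples into the topology (here, from an in-memory list)."""
    for patient_id, heart_rate in readings:
        yield (patient_id, heart_rate)


def threshold_bolt(stream, limit):
    """Bolt: consume an input stream and emit only readings above the limit."""
    for patient_id, heart_rate in stream:
        if heart_rate > limit:
            yield (patient_id, heart_rate, "ALERT")


def format_bolt(stream):
    """Bolt: turn alert tuples into human-readable messages."""
    for patient_id, heart_rate, tag in stream:
        yield f"{tag}: patient {patient_id} heart rate {heart_rate}"


def build_topology(readings, limit=100):
    """Wire one spout to two bolts; the limit is an arbitrary example value."""
    return format_bolt(threshold_bolt(reading_spout(readings), limit))


alerts = list(build_topology([("p1", 72), ("p2", 130), ("p3", 101)]))
```

In Storm itself, the nimbus would distribute these components across worker nodes; the generator chain only shows the direction of the data flow.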

S4 is a distributed stream processing engine, inspired by the MapReduce model, that processes data streams.[9] It was implemented by Yahoo in Java. Data streams are fed to S4 as events.

Spark can be applied to both batch and stream processing; therefore, Spark may be considered a powerful framework compared with other tools such as Hadoop and Storm.[10] It can access several data sources like HDFS, Cassandra, and HBase. Spark provides several interesting features, for example: iterative machine learning through the MLlib library, which provides efficient, high-speed algorithms; structured data analysis using Hive; graph processing based on GraphX; and Spark SQL, which retrieves data from many sources and manipulates them using the SQL language. Before processing data streams, Spark divides them into small portions and transforms them into a set of RDDs (resilient distributed datasets) named a DStream (discretized stream).
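The discretization step can be illustrated without Spark. The sketch below is plain Python, not the Spark Streaming API: it groups timestamped events into consecutive fixed-length intervals, so that each interval can be handled as a small batch. The function name `discretize`, the one-second interval, and the sample events are assumptions for the example.

```python
from collections import defaultdict


def discretize(events, batch_interval):
    """Group (timestamp, value) events into consecutive fixed-length batches.

    Intervals that receive no events are skipped in the output.
    """
    batches = defaultdict(list)
    for timestamp, value in events:
        batches[int(timestamp // batch_interval)].append(value)
    return [batches[index] for index in sorted(batches)]


# Hypothetical heart-rate events as (seconds since start, value).
events = [(0.2, 71), (0.9, 74), (1.1, 80), (2.5, 77), (2.7, 79)]
micro_batches = discretize(events, batch_interval=1.0)

# Each small batch is then processed with an ordinary batch computation.
batch_means = [sum(batch) / len(batch) for batch in micro_batches]
```

This is why the latency of such an approach is bounded below by the batch interval: a result for an event is available only after its interval has closed.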

Apache Flink is an open-source solution that analyzes data in both batch and real-time mode.[11] The programming models of Flink and MapReduce share many similarities. Flink allows iterative processing and real-time computation on stream data collected by tools such as Flume and Kafka. Apache Flink provides several features like FlinkML, which represents a machine learning library capable of providing many learning algorithms for fast and scalable big data applications.

MongoDB is a NoSQL database capable of storing a significant amount of data. MongoDB relies on the JSON standard (JavaScript Object Notation) in order to store records. JSON is an open, human- and machine-readable format that makes data interchange easier compared to classical formats such as rows and tables. In addition, JSON scales better since join-based queries are not needed, because the relevant data of a given record is contained in a single JSON document. Spark is easily integrated with MongoDB.[12]
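To show what a single self-contained document looks like, the sketch below builds a hypothetical patient record and round-trips it through Python's standard `json` module. No MongoDB driver is used, and the field names (`patient_id`, `measurements`, and so on) are invented for the example rather than taken from the prototype.

```python
import json

# One document holds the patient and all related measurements, so reading
# the record does not require joining several tables.
record = {
    "patient_id": "p1",
    "name": "Jane Doe",
    "measurements": [
        {"type": "heart_rate", "value": 72, "unit": "bpm"},
        {"type": "body_temperature", "value": 36.8, "unit": "C"},
    ],
}

document = json.dumps(record, indent=2)  # serialize to JSON text
restored = json.loads(document)          # parse it back into Python objects

heart_rates = [
    m["value"] for m in restored["measurements"] if m["type"] == "heart_rate"
]
```

In a relational layout, the measurements would typically live in a separate table keyed by patient, and retrieving them would need a join.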

Table 1 summarizes big data processing solutions.

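Table 1. Big data processing solutions

| Framework | Type | Latency | Developed by | Stream primitive | Stream source |
|---|---|---|---|---|---|
| Hadoop | Batch | Minutes or more | Yahoo | Key-value | HDFS |
| Storm | Streaming | Subseconds | Twitter | Tuples | Spouts |
| Spark (streaming) | Batch/streaming | Few seconds | Berkeley AMPLab | DStream | HDFS |
| S4 | Streaming | Few seconds | Yahoo | Events | Networks |
| Flink | Batch/streaming | Few seconds | Apache Software Foundation | Key-value | Kafka |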

References

1. World Health Organization; National Institute of Aging, ed. (October 2011). "Global Health and Aging". WHO. http://www.who.int/ageing/publications/global_health/en/.
2. Gandomi, A.; Haider, M. (2015). "Beyond the hype: Big data concepts, methods, and analytics". International Journal of Information Management 35 (2): 137–44. doi:10.1016/j.ijinfomgt.2014.10.007.
3. Chen, M.; Mao, S.; Liu, Y. (2014). "Big Data: A Survey". Mobile Networks and Applications 19 (2): 171–209. doi:10.1007/s11036-013-0489-0.
4. Shahrivari, S. (2014). "Beyond Batch Processing: Towards Real-Time and Streaming Big Data". Computers 3 (4): 117–129. doi:10.3390/computers3040117.
5. Dean, J.; Ghemawat, S. (2008). "MapReduce: Simplified data processing on large clusters". Communications of the ACM 51 (1): 107–113. doi:10.1145/1327452.1327492.
6. Tatbul, N. (2010). "Streaming data integration: Challenges and opportunities". Proceedings from the 26th IEEE International Conference on Data Engineering Workshops: 155–158. doi:10.1109/ICDEW.2010.5452751.
7. Singh, D.; Reddy, C.K. (2015). "A survey on platforms for big data analytics". Journal of Big Data 2: 8. doi:10.1186/s40537-014-0008-6.
8. Evans, R. (2015). "Apache Storm, a Hands on Tutorial". Proceedings of the 2015 IEEE International Conference on Cloud Engineering: 2. doi:10.1109/IC2E.2015.67.
9. Neumeyer, L.; Robbins, B.; Nair, A.; Kesari, A. (2010). "S4: Distributed Stream Computing Platform". Proceedings from the 2010 IEEE International Conference on Data Mining Workshops: 170–177. doi:10.1109/ICDMW.2010.172.
10. Zaharia, M.; Xin, R.S.; Wendell, P. et al. (2016). "Apache Spark: A Unified Engine For Big Data Processing". Communications of the ACM 59 (11): 56–65. doi:10.1145/2934664.
11. Friedman, E.; Tzoumas, K. (2016). Introduction to Apache Flink: Stream Processing for Real Time and Beyond. O'Reilly Media. ISBN 9781491976586.
12. Plugge, E.; Hows, D.; Membrey, P.; Hawkins, T. (2015). "Introduction to MongoDB". The Definitive Guide to MongoDB: A complete guide to dealing with Big Data using MongoDB. APress. ISBN 9781491976586.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. Grammar was cleaned up for smoother reading. In some cases important information was missing from the references, and that information was added.