Difference between revisions of "Journal:Design of generalized search interfaces for health informatics"


|download = [https://www.mdpi.com/2078-2489/12/8/317/pdf https://www.mdpi.com/2078-2489/12/8/317/pdf] (PDF)

}}

==Abstract==

In this paper, we investigate [[Ontology (information science)|ontology]]-supported interfaces for [[health informatics]] search tasks involving large document sets. We begin by providing background on health informatics, [[machine learning]], and ontologies. We review leading research on health informatics search tasks to help formulate high-level design criteria. We then use these criteria to examine traditional design strategies for search interfaces. To demonstrate the utility of the criteria, we apply them to the design of the ONTology-supported Search Interface (ONTSI), a demonstrative, prototype system. ONTSI allows users to plug-and-play document sets and expert-defined domain ontologies through a generalized search interface. ONTSI’s goal is to help align users’ common vocabulary with the domain-specific vocabulary of the plug-and-play document set. We describe the functioning and utility of ONTSI in health informatics search tasks through a [[workflow]] and a scenario. We conclude with a summary of ongoing evaluations, limitations, and future research.


==Introduction==

[[Health informatics]] is concerned with emergent technological systems that improve the quality and availability of care, promote the sharing of knowledge, and support the performance of proactive health and wellness tasks by motivated individuals.<ref name=":0">{{Cite journal |last=Wickramasinghe |first=Nilmini |date=2019/08 |title=Essential Considerations for Successful Consumer Health Informatics Solutions |url=http://www.thieme-connect.de/DOI/DOI?10.1055/s-0039-1677909 |journal=Yearbook of Medical Informatics |language=en |volume=28 |issue=01 |pages=158–164 |doi=10.1055/s-0039-1677909 |issn=0943-4747 |pmc=PMC6697544 |pmid=31419828}}</ref> Subareas of health informatics may include medical informatics, nursing informatics, [[Consumer health informatics|consumer informatics]], [[cancer informatics]], and [[Pharmacoinformatics|pharmacy informatics]], to name a few. Simply put, health informatics is concerned with harnessing technology to help stakeholders work with health [[information]] so that they can perform health-related tasks more effectively.

Users in the health domain are increasingly taking advantage of computer-based resources in their tasks. For instance, a 2017 Canadian survey found that 32% of respondents had used at least one mobile application for health-related tasks within the previous month. Moreover, those under the age of 35 were twice as likely to do so.<ref name=":1">{{Cite web |last=Canadian Medical Association |date=2018 |title=The Future of Technology in Health and Health Care: A Primer |url=https://www.cma.ca/sites/default/files/pdf/health-advocacy/activity/2018-08-15-future-technology-health-care-e.pdf |publisher=Canadian Medical Association |archiveurl=https://web.archive.org/web/20190430220959/https://www.cma.ca/sites/default/files/pdf/health-advocacy/activity/2018-08-15-future-technology-health-care-e.pdf |archivedate=30 April 2019}}</ref> Furthermore, studies have estimated that over 58% of Americans have used general tools like Google, as well as domain-specific tools, to support their health informatics search tasks, with search being one of the most important and central tasks in most health informatics activities.<ref name=":2">{{Cite journal |last=Demiris |first=G. |date=2016 |title=Consumer Health Informatics: Past, Present, and Future of a Rapidly Evolving Domain |url=http://www.thieme-connect.de/DOI/DOI?10.15265/IYS-2016-s005 |journal=Yearbook of Medical Informatics |language=en |volume=25 |issue=S 01 |pages=S42–S47 |doi=10.15265/IYS-2016-s005 |issn=0943-4747 |pmc=PMC5171509 |pmid=27199196}}</ref><ref name=":3">{{Cite journal |last=Zuccon, G.; Koopman, B. |year=2014 |editor-last=Goeuriot, L.; Jones, G.J.F.; Kelly, L. et al. |title=Integrating understandability in the evaluation of consumer health search engines |url=http://ceur-ws.org/Vol-1276/ |journal=CEUR Workshop Proceedings |volume=1276 |pages=32–35 |urn=urn:nbn:de:0074-1276-1}}</ref>

Yet, search can be challenging, particularly for health informatics tasks that utilize large and complex document sets. For such tasks, health informatics tools may require the use of domain-specific vocabulary. Aligning with this vocabulary can be a significant challenge within health tasks, as they can involve a lexicon of intricate nomenclature, deeply layered relations, and lengthy descriptions that are misaligned with common vocabulary. For instance, one highly cited medical research paper defines the term “chromosomal instability” as “an elevated rate of chromosome mis-segregation and breakage, results in diverse chromosomal aberrations in tumor cell populations.” In this example, those unfamiliar with the defined term could find parsing its definition just as significant a challenge as the term itself.<ref name="CJCThe150">{{Cite journal |last=Chinese Journal of Cancer |date=2017-12 |title=The 150 most important questions in cancer research and clinical oncology series: questions 67–75: Edited by Chinese Journal of Cancer |url=https://cancercommun.biomedcentral.com/articles/10.1186/s40880-017-0254-z |journal=Chinese Journal of Cancer |language=en |volume=36 |issue=1 |pages=86, s40880–017–0254-z |doi=10.1186/s40880-017-0254-z |issn=1944-446X |pmc=PMC5664810 |pmid=29092716}}</ref> Thus, when communicating across vocabularies, users may struggle to describe the requirements of their search task in a way that is understandable by health informatics tools.<ref>{{Cite journal |last=Mehta, N.; Pandit, A. 
|date=2018-06-01 |title=Concurrence of big data analytics and healthcare: A systematic review |url=https://www.sciencedirect.com/science/article/abs/pii/S1386505618302466 |journal=International Journal of Medical Informatics |language=en |volume=114 |pages=57–65 |doi=10.1016/j.ijmedinf.2018.03.013 |issn=1386-5056}}</ref><ref>{{Cite journal |last=Thiébaut |first=Rodolphe |last2=Cossin |first2=Sébastien |last3=Informatics |first3=Section Editors for the IMIA Yearbook Section on Public Health and Epidemiology |date=2019/08 |title=Artificial Intelligence for Surveillance in Public Health |url=http://www.thieme-connect.de/DOI/DOI?10.1055/s-0039-1677939 |journal=Yearbook of Medical Informatics |language=en |volume=28 |issue=01 |pages=232–234 |doi=10.1055/s-0039-1677939 |issn=0943-4747 |pmc=PMC6697516 |pmid=31419837}}</ref> To deal with this challenge, [[Ontology (information science)|ontologies]] can be a valuable mediating resource in the design of user-facing interfaces of health informatics tools.<ref name=":7">{{Cite book |last=Saleemi, M.M.; Rodríguez, N.D.; Lilius, J.; Porres, I. |date=2011 |editor-last=Balandin |editor-first=Sergey |editor2-last=Koucheryavy |editor2-first=Yevgeni |editor3-last=Hu |editor3-first=Honglin |editor4-last=NEW2AN |editor5-last=RuSMART |title=Smart Spaces and Next Generation Wired/Wireless Networking |url=https://www.worldcat.org/title/mediawiki/oclc/844916767 |chapter=A Framework for Context-aware Applications for Smart Spaces |series=Lecture notes in computer science |publisher=Springer |place=Heidelberg |pages=14–25 |isbn=978-3-642-22874-2 |oclc=844916767}}</ref> That is, ontologies can bridge the vocabularies of users with the vocabulary of their task and its tools. Yet, the use of ontologies in user-facing interface design is not well established. 
Furthermore, health informatics tools that present a generalized interface, one that can support search tasks across any number of domain vocabularies and document sets, can allow users to transfer their experience between tasks and can present them with information-centric rather than technology-centered perspectives as they work.<ref name=":16">{{Cite journal |last=Gibson |first=C. J. |last2=Dixon |first2=B. E. |last3=Abrams |first3=K. |date=2015 |title=Convergent evolution of health information management and health informatics |url=http://www.thieme-connect.de/DOI/DOI?10.4338/ACI-2014-09-RA-0077 |journal=Applied Clinical Informatics |language=en |volume=06 |issue=01 |pages=163–184 |doi=10.4338/ACI-2014-09-RA-0077 |issn=1869-0327 |pmc=PMC4377568 |pmid=25848421}}</ref><ref name=":17">{{Cite journal |last=Fang |first=Ruogu |last2=Pouyanfar |first2=Samira |last3=Yang |first3=Yimin |last4=Chen |first4=Shu-Ching |last5=Iyengar |first5=S. S. |date=2016-06-14 |title=Computational Health Informatics in the Big Data Age: A Survey |url=https://doi.org/10.1145/2932707 |journal=ACM Computing Surveys |volume=49 |issue=1 |pages=12:1–12:36 |doi=10.1145/2932707 |issn=0360-0300}}</ref> To this end, there is a need to distill criteria that can guide designers during the creation of ontology-supported interfaces for health informatics search tasks involving large document sets.

The goal of this paper is to investigate the following research questions:

*What are the criteria for the structure and design of generalized ontology-supported interfaces for health informatics search tasks involving large document sets?
*If such criteria can be distilled, can they then be used to help create such interfaces?

In this paper, we examine health informatics, machine learning, and ontologies. We then review leading research on health informatics search tasks. From this analysis, we formulate criteria for the design of ontology-supported interfaces for health informatics search tasks involving large document sets. We then use these criteria to contrast the traditional design strategies for search interfaces. To demonstrate the utility of the criteria in design, we use them to structure the design of a tool, ONTSI (ONTology-supported Search Interface). ONTSI allows users to plug-and-play their document sets and expert-defined ontology files to perform health informatics search tasks. We describe ONTSI through a functional workflow and an illustrative usage scenario. We conclude with a summary of ongoing evaluation efforts, future research, and our limitations.<ref name=":19">{{Cite journal |last=Köhler |first=Sebastian |last2=Carmody |first2=Leigh |last3=Vasilevsky |first3=Nicole |last4=Jacobsen |first4=Julius O B |last5=Danis |first5=Daniel |last6=Gourdine |first6=Jean-Philippe |last7=Gargano |first7=Michael |last8=Harris |first8=Nomi L |last9=Matentzoglu |first9=Nicolas |last10=McMurry |first10=Julie A |last11=Osumi-Sutherland |first11=David |date=2019-01-08 |title=Expansion of the Human Phenotype Ontology (HPO) knowledge base and resources |url=https://doi.org/10.1093/nar/gky1105 |journal=Nucleic Acids Research |volume=47 |issue=D1 |pages=D1018–D1027 |doi=10.1093/nar/gky1105 |issn=0305-1048 |pmc=PMC6324074 |pmid=30476213}}</ref>

==Background==

In this section, we describe the concepts and terminology used when discussing ontology-supported interfaces for health informatics search tasks involving large document sets. We begin with background on health informatics. Next, we examine [[machine learning]]. We conclude with coverage of ontologies and their utility as a mediating resource for both human- and computer-facing use.

===Health informatics===

Health informatics is broadly concerned with emergent technological systems for improving the quality and availability of care, promoting the sharing of knowledge, and supporting the performance of proactive health and wellness practices by motivated individuals.<ref name=":0" /> Initially, the need for expanded health and wellness services stemmed from rising population levels combined with the growing complexity of medical sciences. These issues made it challenging to maintain quality care within increasingly stressed medical systems.<ref name=":4">{{Cite journal |last=Carayon |first=Pascale |last2=Hoonakker |first2=Peter |date=2019/08 |title=Human Factors and Usability for Health Information Technology: Old and New Challenges |url=http://www.thieme-connect.de/DOI/DOI?10.1055/s-0039-1677907 |journal=Yearbook of Medical Informatics |language=en |volume=28 |issue=01 |pages=071–077 |doi=10.1055/s-0039-1677907 |issn=0943-4747 |pmc=PMC6697515 |pmid=31419818}}</ref> Thus, a central objective for health informatics is the development of strategies to tackle large-scale problems that harm trained medical professionals’ ability to perform their tasks in a timely and effective manner. For instance, [[telehealth]] services allowed doctors to practice remote medicine, providing care to those without local medical services. Another early innovation was standardized [[Electronic health record|electronic health care records]] (EHRs), where patient records were given standardized encodings to provide an increased ability to track, compare, manage, and share personal health information.<ref name=":2" /> Some examples of current research directions are the push for stronger patient privacy, personalized medicine, and the expansion of healthcare into underserved regions and communities.<ref name=":0" /><ref name=":1" /><ref name=":2" /><ref>{{Cite journal |last=Gamache |first=Roland |last2=Kharrazi |first2=Hadi |last3=Weiner |first3=Jonathan P. 
|date=2018/08 |title=Public and Population Health Informatics: The Bridging of Big Data to Benefit Communities |url=http://www.thieme-connect.de/DOI/DOI?10.1055/s-0038-1667081 |journal=Yearbook of Medical Informatics |language=en |volume=27 |issue=01 |pages=199–206 |doi=10.1055/s-0038-1667081 |issn=0943-4747 |pmc=PMC6115205 |pmid=30157524}}</ref><ref>{{Cite journal |last=Brewer |first=LaPrincess C. |last2=Fortuna |first2=Karen L. |last3=Jones |first3=Clarence |last4=Walker |first4=Robert |last5=Hayes |first5=Sharonne N. |last6=Patten |first6=Christi A. |last7=Cooper |first7=Lisa A. |date=2020-01-14 |title=Back to the Future: Achieving Health Equity Through Health Informatics and Digital Health |url=https://mhealth.jmir.org/2020/1/e14512 |journal=JMIR mHealth and uHealth |language=EN |volume=8 |issue=1 |pages=e14512 |doi=10.2196/14512 |pmc=PMC6996775 |pmid=31934874}}</ref>

The rising production and availability of health-related data has resulted in a growing number of data-intensive tasks within health. Both private and public entities like health industry companies, government bodies, and everyday citizens are turning to health informatics tools as they manage and activate their health data.<ref name=":1" /> A growing number of health-related tasks involve searching document sets. During these tasks, the aim of the user is to use the information described within their document set to increase their understanding of a topic or concept. For example, a search task could be a practitioner searching the EHRs of their patients, a member of the general public using public materials for their general health concerns, or a researcher performing a literature review.<ref name=":4" /><ref name=":5">{{Cite journal |last=Wu |first=Charley M. |last2=Meder |first2=Björn |last3=Filimon |first3=Flavia |last4=Nelson |first4=Jonathan D. |date=2017-08 |title=Asking better questions: How presentation formats influence information search. |url=http://doi.apa.org/getdoi.cfm?doi=10.1037/xlm0000374 |journal=Journal of Experimental Psychology: Learning, Memory, and Cognition |language=en |volume=43 |issue=8 |pages=1274–1297 |doi=10.1037/xlm0000374 |issn=1939-1285}}</ref><ref name=":6">{{Cite journal |last=Talbot |first=Justin |last2=Lee |first2=Bongshin |last3=Kapoor |first3=Ashish |last4=Tan |first4=Desney S. |date=2009-04-04 |title=EnsembleMatrix: Interactive visualization to support machine learning with multiple classifiers |url=https://dl.acm.org/doi/10.1145/1518701.1518895 |journal=Proceedings of the SIGCHI Conference on Human Factors in Computing Systems |language=en |publisher=ACM |place=Boston MA USA |pages=1283–1292 |doi=10.1145/1518701.1518895 |isbn=978-1-60558-246-7}}</ref> In general, a search task involves the generation of a query based on an information-seeking objective. 
The computational systems of these tools then use this query to identify and extract relevant documents from the document set.<ref name=":5" /> Powerful technologies like machine learning are increasingly being integrated within tools to help perform rapid and automated computation on document sets.<ref name=":3" /> Yet, when taking advantage of these technologies, designers must be mindful of human factors when generating the user-facing interfaces of their tools, as a task cannot be performed effectively without direction from an empowered user.<ref name=":4" />

===Machine learning===

Machine learning techniques are increasingly being utilized to tackle analytic problems once considered too complex to solve in an effective and timely manner.<ref name=":6" /> Yet, recent analyses<ref name=":8">{{Cite journal |last=Hohman |first=Fred |last2=Kahng |first2=Minsuk |last3=Pienta |first3=Robert |last4=Chau |first4=Duen Horng |date=2019-08 |title=Visual Analytics in Deep Learning: An Interrogative Survey for the Next Frontiers |url=https://ieeexplore.ieee.org/document/8371286/ |journal=IEEE Transactions on Visualization and Computer Graphics |volume=25 |issue=8 |pages=2674–2693 |doi=10.1109/TVCG.2018.2843369 |issn=1941-0506 |pmc=PMC6703958 |pmid=29993551}}</ref><ref name=":9">{{Cite journal |last=Yuan |first=Jun |last2=Chen |first2=Changjian |last3=Yang |first3=Weikai |last4=Liu |first4=Mengchen |last5=Xia |first5=Jiazhi |last6=Liu |first6=Shixia |date=2020-11-25 |title=A survey of visual analytics techniques for machine learning |url=https://doi.org/10.1007/s41095-020-0191-7 |journal=Computational Visual Media |language=en |volume=7 |issue=1 |pages=3–36 |doi=10.1007/s41095-020-0191-7 |issn=2096-0433}}</ref><ref name=":10">{{Cite journal |last=Endert |first=A. |last2=Ribarsky |first2=W. |last3=Turkay |first3=C. |last4=Wong |first4=B. L. William |last5=Nabney |first5=I. |last6=Blanco |first6=I. Díaz |last7=Rossi |first7=F. |date=2017 |title=The State of the Art in Integrating Machine Learning into Visual Analytics |url=https://onlinelibrary.wiley.com/doi/abs/10.1111/cgf.13092 |journal=Computer Graphics Forum |language=en |volume=36 |issue=8 |pages=458–486 |doi=10.1111/cgf.13092 |issn=1467-8659}}</ref> of the human factors in machine learning environments have found that current design strategies continually limit users’ ability to take part in the analytic process. Moreover, these strategies have produced a generation of machine learning-integrated tools that fail to give users a complete understanding of how the computational systems of their tools arrive at their results. This has significantly reduced users’ control and lowered their ability to achieve task objectives. In response, there is a growing desire to promote the “human-in-the-loop,” bringing the benefits of human reasoning back to the forefront of the design process.<ref name=":11">{{Cite journal |last=Jusoh |first=S |last2=Awajan |first2=A |last3=Obeid |first3=N |date=2020-05 |title=The Use of Ontology in Clinical Information Extraction |url=https://iopscience.iop.org/article/10.1088/1742-6596/1529/5/052083 |journal=Journal of Physics: Conference Series |volume=1529 |pages=052083 |doi=10.1088/1742-6596/1529/5/052083 |issn=1742-6588}}</ref><ref name=":12">{{Cite journal |last=Lytvyn |first=Vasyl |last2=Dosyn |first2=Dmytro |last3=Vysotska |first3=Victoria |last4=Hryhorovych |first4=Andrii |date=2020-08 |title=Method of Ontology Use in OODA |url=https://ieeexplore.ieee.org/document/9204107/ |journal=2020 IEEE Third International Conference on Data Stream Mining & Processing (DSMP) |publisher=IEEE |place=Lviv, Ukraine |pages=409–413 |doi=10.1109/DSMP47368.2020.9204107 |isbn=978-1-7281-3214-3}}</ref><ref name=":13">{{Cite journal |last=Román-Villarán |first=E. |last2=Pérez-Leon |first2=F. P. |last3=Escobar-Rodriguez |first3=G. A. |last4=Martínez-García |first4=A. |last5=Álvarez-Romero |first5=C. |last6=Parra-Calderón |first6=C. L. |date=2019-08-21 |title=An Ontology-Based Personalized Decision Support System for Use in the Complex Chronically Ill Patient |url=https://pubmed.ncbi.nlm.nih.gov/31438026 |journal=Studies in Health Technology and Informatics |volume=264 |pages=758–762 |doi=10.3233/SHTI190325 |issn=1879-8365 |pmid=31438026}}</ref>

When considering the interaction loop of a machine learning-integrated tool, Sacha ''et al.''<ref>{{Cite book |last=Sacha, D.; Sedlmair, M.; Zhang L.et al. |date= |year=2016 |editor-last=Verleysen |editor-first=Michel |others=ESSAN, Université catholique de Louvain, Katholieke Universiteit Leuven |title=24th European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning: ESANN 2016 |url=https://www.worldcat.org/title/mediawiki/oclc/964654436 |chapter=Human-centered Machine Learning Through Interactive Visualization: Review and Open Challenges |publisher=Ciaco - i6doc.com |place=Louvain-la-Neuve, Belgique |pages=641–646 |isbn=978-2-87587-027-8 |oclc=964654436}}</ref> present a five-stage conceptual framework: producing and accessing data, preparing data for tool use, selecting a machine learning model, visualizing computation in the tool interface, and users applying analytic reasoning to validate and direct further use. Based on this framework, a machine learning-integrated search tool must provide users with a functional workflow where:

#users communicate their task requirements as a query;
#users ask their tool to apply that query as input within its computational system;
#the tool performs its computation, mapping the features against the document set;
#the tool represents the results of the computation in its interface;
#users assess whether they are or are not satisfied with the results; and
#users restart the interaction loop with adjustments or conclude their use of the tool.
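
As a minimal illustration of the six-step loop above (not a description of ONTSI or of any tool cited here), the workflow could be sketched in Python as follows. The toy document set, the term-counting <code>score</code> function standing in for a machine learning model, and the <code>is_satisfied</code> callback standing in for the user's judgement are all assumptions made for this sketch.

```python
from collections import Counter
from typing import Callable

# Hypothetical toy document set; the contents are illustrative only.
DOCUMENTS = {
    "doc1": "chromosomal instability in tumor cell populations",
    "doc2": "telehealth services for remote care",
    "doc3": "electronic health records and patient privacy",
}

Results = list[tuple[str, int]]


def score(query: str, text: str) -> int:
    """Count occurrences of the query terms in the text (a stand-in for a real model)."""
    words = Counter(text.lower().split())
    return sum(words[term] for term in query.lower().split())


def search(query: str, documents: dict[str, str]) -> Results:
    """Step 3: map the query against the document set and rank the matches."""
    scored = [(doc_id, score(query, text)) for doc_id, text in documents.items()]
    hits = [(doc_id, s) for doc_id, s in scored if s > 0]
    # Highest score first; ties broken by document id for a stable order.
    return sorted(hits, key=lambda pair: (-pair[1], pair[0]))


def interaction_loop(queries: list[str], is_satisfied: Callable[[Results], bool]) -> Results:
    """Steps 1-6: submit a query, inspect the results, then refine or stop."""
    results: Results = []
    for query in queries:                     # steps 1-2: the user states and submits a query
        results = search(query, DOCUMENTS)    # step 3: the tool performs its computation
        # Step 4 would render `results` in the interface.
        if is_satisfied(results):             # step 5: the user assesses the results
            break                             # step 6: conclude use of the tool
    return results
```

Here each entry in <code>queries</code> represents one pass through the loop, so a user who finds nothing for a first query simply moves on to an adjusted one.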

Thus, a primary responsibility for users within machine learning environments is the need to assess how well the results of machine learning have aligned with their task objectives. A systematic review by Amershi ''et al.''<ref>{{Cite journal |last=Amershi |first=Saleema |last2=Cakmak |first2=Maya |last3=Knox |first3=William Bradley |last4=Kulesza |first4=Todd |date=2014-12-22 |title=Power to the People: The Role of Humans in Interactive Machine Learning |url=https://ojs.aaai.org/index.php/aimagazine/article/view/2513 |journal=AI Magazine |language=en |volume=35 |issue=4 |pages=105–120 |doi=10.1609/aimag.v35i4.2513 |issn=2371-9621}}</ref> suggests six considerations for the user’s role in arbitrating machine learning performance:

*Users are people, not oracles (i.e., they should not be expected to repeatedly answer whether a model is right or wrong).
*People tend to give more positive than negative feedback.
*People need a demonstration of how machine learning should behave.
*People naturally want to provide more than just data labels.
*People value transparency.
*Transparency can help people provide better labels.

===Ontologies===

In search tasks involving large document sets, many challenges can arise that reduce performance [[Quality (business)|quality]], harm user satisfaction, and increase the time for task completion.<ref name=":8" /><ref name=":9" /><ref name=":10" /> Often, these challenges result from misalignment between the vocabularies used by the document sets, storage maintainers, interface designers, and users. For instance, Zeng and Tse<ref name=":14">{{Cite journal |last=Zeng |first=Qing T. |last2=Tse |first2=Tony |date=2006-01-01 |title=Exploring and Developing Consumer Health Vocabularies |url=https://doi.org/10.1197/jamia.M1761 |journal=Journal of the American Medical Informatics Association |volume=13 |issue=1 |pages=24–29 |doi=10.1197/jamia.M1761 |issn=1067-5027 |pmc=PMC1380193 |pmid=16221948}}</ref> outline the difficulties faced when translating between common and domain vocabularies in health tasks. They describe a study that found that up to 50% of health expressions by consumers were not represented by [[public health]] vocabularies.<ref name=":14" />

Within the pipeline of a search task, both the human and the computational system can only perform optimally if communication between them is strong.<ref name=":15">{{Cite book |last=Arp |first=Robert |last2=Smith |first2=Barry |last3=Spear |first3=Andrew D. |date=2015 |title=Building ontologies with Basic Formal Ontology |publisher=Massachusetts Institute of Technology |place=Cambridge, Massachusetts |isbn=978-0-262-52781-1}}</ref> An ontology is a representational artifact that reflects the entities, relations, and structures of its domain. Ontologies are of three types: a philosophical ontology for describing and structuring reality, a domain ontology for structuring the entities and relations of a knowledge base, and a top-level ontology for interfacing between different domain ontologies.<ref name=":15" /> Ontologies provide the flexibility, extensibility, generality, and expressiveness necessary to bridge the gap when mapping domain knowledge for effective computer-facing and human-facing use.<ref name=":7" /> For this purpose, ontologies are increasingly being used within tools to help users perform their challenging search tasks.<ref>{{Cite journal |last=Bikakis, N.; Sellis, T. 
|year=2016 |title=Exploration and Visualization in the Web of Big Linked Data: A Survey of the State of the Art |url=https://arxiv.org/abs/1601.08059 |journal=arXiv |arxiv=1601.08059}}</ref><ref>{{Cite journal |last=Carpendale |first=Sheelagh |last2=Chen |first2=Min |last3=Evanko |first3=Daniel |last4=Gehlenborg |first4=Nils |last5=Görg |first5=Carsten |last6=Hunter |first6=Larry |last7=Rowland |first7=Francis |last8=Storey |first8=Margaret-Anne |last9=Strobelt |first9=Hendrik |date=2014-03 |title=Ontologies in Biological Data Visualization |url=https://ieeexplore.ieee.org/document/6777435/ |journal=IEEE Computer Graphics and Applications |volume=34 |issue=2 |pages=8–15 |doi=10.1109/MCG.2014.33 |issn=1558-1756}}</ref><ref>{{Cite journal |last=Dou |first=Dejing |last2=Wang |first2=Hao |last3=Liu |first3=Haishan |date=2015-02 |title=Semantic data mining: A survey of ontology-based approaches |url=http://ieeexplore.ieee.org/document/7050814/ |journal=Proceedings of the 2015 IEEE 9th International Conference on Semantic Computing (IEEE ICSC 2015) |publisher=IEEE |place=Anaheim, CA, USA |pages=244–251 |doi=10.1109/ICOSC.2015.7050814 |isbn=978-1-4799-7935-6}}</ref><ref>{{Cite journal |last=Livingston |first=Kevin M. |last2=Bada |first2=Michael |last3=Baumgartner |first3=William A. |last4=Hunter |first4=Lawrence E. |date=2015-04-23 |title=KaBOB: ontology-based semantic integration of biomedical databases |url=https://doi.org/10.1186/s12859-015-0559-3 |journal=BMC Bioinformatics |volume=16 |issue=1 |pages=126 |doi=10.1186/s12859-015-0559-3 |issn=1471-2105 |pmc=PMC4448321 |pmid=25903923}}</ref><ref>{{Cite book |last=Salkic, S.; Softic, S.; Taraghi, B. Ebner, M. |date= |year=2015 |editor-last=Pongratz |editor-first=Hans |editor2-last=Gesellschaft für Informatik |title=DeLFI 2015 - die 13. E-Learning Fachtagung Informatik der Gesellschaft für Informatik e.V: 1.-4. 
September 2015 München, Deutschland |url=https://www.worldcat.org/title/mediawiki/oclc/927803453 |chapter=Linked data driven visual analytics for tracking learners in a PLE |series=GI-Edition Lecture Notes in Informatics Proceedings |publisher=Ges. für Informatik |place=Bonn |pages=329–331 |isbn=978-3-88579-641-1 |oclc=927803453}}</ref>

When creating an ontology, experts construct a network of entities and relations, which together yield various structures.<ref>{{Cite book |last=Jakus |first=Grega |last2=Milutinović |first2=Veljko |last3=Omerović |first3=Sanida |last4=Tomažič |first4=Sašo |date=2013 |title=Concepts, ontologies, and knowledge representation |url=https://www.worldcat.org/title/mediawiki/oclc/841495258 |series=SpringerBriefs in computer science |publisher=Springer |place=New York |isbn=978-1-4614-7821-8 |oclc=841495258}}</ref><ref>{{Cite journal |last=Rector, A.; Schulz, S.; Rodrigues, J.M. et al. |date=2019-01-01 |title=On beyond Gruber: “Ontologies” in today’s biomedical information systems and the limits of OWL |url=https://www.sciencedirect.com/science/article/pii/S2590177X19300010 |journal=Journal of Biomedical Informatics |language=en |volume=100 Suppl. |pages= |at=100002 |doi=10.1016/j.yjbinx.2019.100002 |issn=1532-0464}}</ref> Ontology entities reflect the objects of the domain, like a phenotype in a medical abnormality ontology, a processor in a computer architecture ontology, or a precedent in a legal ontology.<ref>{{Cite journal |last=Rodríguez |first=Natalia Díaz |last2=Cuéllar |first2=M. P. |last3=Lilius |first3=Johan |last4=Calvo-Flores |first4=Miguel Delgado |date=2014-04 |title=A survey on ontologies for human behavior recognition |url=https://dl.acm.org/doi/10.1145/2523819 |journal=ACM Computing Surveys |language=en |volume=46 |issue=4 |pages=1–33 |doi=10.1145/2523819 |issn=0360-0300}}</ref> In some ontologies, such as the top-level Basic Formal Ontology, designers go as far as denoting qualities such as materiality, object composition, and spatial qualities in reality.<ref name=":15" /> Ontology entities are encoded with information about their role in the vocabulary, definitions, descriptions, and contexts, as well as metadata that can inform the performance of future ontology engineering tasks.

Ontology relations are the links between ontology entities that express the quality of interaction between them and the domain as a whole.<ref>{{Cite journal |last=Katifori |first=Akrivi |last2=Torou |first2=Elena |last3=Vassilakis |first3=Costas |last4=Lepouras |first4=Georgios |last5=Halatsis |first5=Constantin |date=2008-06 |title=Selected results of a comparative study of four ontology visualization methods for information retrieval tasks |url=http://ieeexplore.ieee.org/document/4632101/ |journal=2008 Second International Conference on Research Challenges in Information Science |publisher=IEEE |place=Marrakech |pages=133–140 |doi=10.1109/RCIS.2008.4632101 |isbn=978-1-4244-1677-6}}</ref> When assessing ontology relations, Arp ''et al.''<ref name=":15" /> distinguish relations under the categories of universal–universal (dog “is_a” animal), particular–universal (this dog “instance_of” dog), and particular–particular (this dog “continuant_parts” of this dog grouping). Domain ontology relations are realized through unique interoperability between ontology entities. For instance, an animal ontology may have an ontology entity reflecting the concept of a “human,” which may have the ontology relations “domesticates/is domesticated by” between it and the “dog” ontology entity.
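
To make the relation categories above concrete, the following minimal Python sketch stores relations as (subject, relation, object) triples and follows “is_a” links transitively. The intermediate “mammal” entity and the particular named “rex” are hypothetical additions for illustration, and the triple list is an assumed toy representation rather than the data model of any ontology discussed here.

```python
# Each relation is a (subject, relation, object) triple.
RELATIONS = [
    ("dog", "is_a", "mammal"),          # universal-universal ("mammal" is a hypothetical extra level)
    ("mammal", "is_a", "animal"),       # universal-universal
    ("human", "is_a", "mammal"),        # universal-universal
    ("rex", "instance_of", "dog"),      # particular-universal ("rex" is a hypothetical particular)
    ("human", "domesticates", "dog"),   # domain-specific relation from the example above
]


def ancestors(entity, relations):
    """Return every entity reachable from `entity` by following is_a links."""
    parents = {}
    for subject, relation, obj in relations:
        if relation == "is_a":
            parents.setdefault(subject, set()).add(obj)

    found = set()
    stack = [entity]
    while stack:
        current = stack.pop()
        for parent in parents.get(current, ()):
            if parent not in found:
                found.add(parent)
                stack.append(parent)
    return found


def related(entity, relation, relations):
    """Return the objects linked to `entity` by the given relation, sorted by name."""
    return sorted(obj for subject, rel, obj in relations
                  if subject == entity and rel == relation)
```

A search interface could use a traversal like <code>ancestors</code> to broaden a query from a specific entity to its more general parents.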

After defining the entities, relations, and other features of an ontology, experts record their work in ontology files of standardized data formats like RDF, OWL, and OBO. These ontology files are then distributed amongst users. They can then be integrated into the computational and human-facing systems of tools for use during tasks. Some examples of current ontology use are information extraction on unstructured text, behavior modeling of intellectual agents, and an increasing number of human-facing visualization tasks such as [[Clinical decision support system|decision support systems]] within critical care environments.<ref name=":11" /><ref name=":12" /><ref name=":13" /> | |||
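To give a sense of how a tool might read such a file, the following is a minimal sketch of a reader for OBO-style <code>[Term]</code> stanzas. It assumes only the common <code>id</code>, <code>name</code>, and <code>is_a</code> tags; the identifiers in <code>SAMPLE</code> are invented for illustration, and a production system would more likely rely on an established ontology library than on hand-written parsing.

```python
# Invented sample text in OBO flat-file style; the EX: identifiers are hypothetical.
SAMPLE = """format-version: 1.2

[Term]
id: EX:0000001
name: abnormality

[Term]
id: EX:0000002
name: heart abnormality
is_a: EX:0000001 ! abnormality

[Typedef]
id: part_of
name: part of
"""


def parse_obo_terms(text):
    """Parse [Term] stanzas into a dict keyed by term id; other stanzas are skipped."""
    terms = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("["):
            # A new stanza begins; only [Term] stanzas are collected.
            current = {"is_a": []} if line == "[Term]" else None
            continue
        if current is None or ":" not in line:
            continue
        tag, _, value = line.partition(":")
        value = value.split("!")[0].strip()  # drop a trailing "! comment"
        if tag == "id":
            current["id"] = value
            terms[value] = current
        elif tag == "name":
            current["name"] = value
        elif tag == "is_a":
            current["is_a"].append(value)
    return terms


terms = parse_obo_terms(SAMPLE)
```

The resulting dictionary can then feed either a computational component (for example, expanding a query along "is_a" links) or a human-facing one (for example, showing term names next to their identifiers).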

==Methods== | |||

In this section, we describe the methods used for criteria formulation. We begin with a review of literature for health informatics search tasks. Based on the insights gained from this review, we distill a set of criteria. We then use these criteria to contrast traditional design strategies for interfaces of search tasks. | |||

===Task review=== | |||

Here, we review some research on interfaces for health informatics search tasks. We used Google Scholar, IEEE Xplore, and PubMed to conduct an exhaustive search of articles and reviews published between 2015 and 2021. We have divided our findings into three sections. First, we explore research on health data, [[information management]], and information-centric interfaces. This is followed by research discussing the types of search tasks and their use in structuring the design of interfaces for health informatics. Finally, we investigate the requirements for aligning vocabularies for health informatics search tasks. | |||

====Health data, information management, and information-centric interfaces==== | |||

Health data is constantly generated, highlighted by reports that within just a year the U.S. healthcare system created 150 new exabytes of data.<ref>{{Cite journal |last=Raghupathi |first=Wullianallur |last2=Raghupathi |first2=Viju |date=2014-02-07 |title=Big data analytics in healthcare: promise and potential |url=https://doi.org/10.1186/2047-2501-2-3 |journal=Health Information Science and Systems |language=en |volume=2 |issue=1 |doi=10.1186/2047-2501-2-3 |issn=2047-2501 |pmc=PMC4341817 |pmid=25825667}}</ref> Yet, the information that is expressed by this data, such as personal medical records, research publications, and consumer health media, is not useful unless it can be effectively understood and utilized by users. As such, it is critical to examine the challenges facing users when performing their tasks, and through this establish novel strategies for supporting the activation of health data. | |||

Fang ''et al.''<ref name=":17" /> explore the pressing challenges of accessing health data under four categories: volume, variety, velocity, and veracity. They find that the volume of health care data creates challenges in the management of data sources and stores. They report that existing strategies are struggling, and that novel designs should be established for scaling data services. They explain that challenges of variety come with the management of data characteristics, ranging from unstructured datapoints generated from sources like sensors, to structured data entities like research papers and medical documents. For this, they state that designers should concentrate on aligning with the characteristics of the information being encountered. Next, they explore the challenges of velocity, which concerns the rate at which users require their data to move from source to activation within their task. They highlight novel research in the networking and data management space. Finally, they explore veracity challenges, such as the assessment and validation of [[data quality]] and the quality of information that the data may produce.

Gibson ''et al.''<ref name=":16" /> provide a review of the evolving fields of [[health information management]] and [[Informatics (academic field)|informatics]]. They review the topics of data capture, digital e-record systems, aggregate health management, healthcare funding models, data-oriented evidence-based medicine, consumer health applications, health governance, personal health access, and genomic personalized medicine. Similar to Fang ''et al.''<ref name=":17" />, they note that the predominant work for health informatics should be concerned with presenting users with information-centric perspectives during task performance rather than technology-centered perspectives. Moreover, they describe that users in healthcare “must often navigate and understand complex clinical workflows to effectively … capture, store, or exchange information.” In other words, task [[workflow]]s are already complex; therefore, effective interfaces should promote information encounters that help users perform better, rather than draw them into unrelated technical details.

From the above research, we distill the criterion: Designs should maintain an information-centric interface that is flexible with respect to the dynamic requirements of search tasks like veracity of data sources, variety of data types, and evolving needs of users for health informatics. | |||

====Search tasks and structuring the design of interfaces for health informatics==== | |||

Russell-Rose ''et al.''<ref name="Russell-RoseInform18">{{Cite journal |last=Russell-Rose, T.; Chamberlain, J.; Azzopardi, L. |date= |year=2018 |title=Information retrieval in the workplace: A comparison of professional search practices |url=https://www.sciencedirect.com/science/article/abs/pii/S0306457318300220 |journal=Information Processing & Management |language=en |volume=54 |issue=6 |pages=1042–1057 |doi=10.1016/j.ipm.2018.07.003 |issn=0306-4573}}</ref> describe professional health workplace tasks. They find that the most prevalent types of search tasks are literature reviews for overviewing a topic, scoping reviews for rapidly inspecting the possible relevance of an information source, rapid evidence reviews for appraising the overall quality of a scoping review, and, finally, systematic reviews for exploring a topic in a robust manner. | |||

During search tasks, users often lack the ability to perceive how their query decisions impact, relate, and interact with the document set. This is an important consideration for users who might want to adjust a query to better align with their information-seeking objectives. A further study by Russell-Rose ''et al.''<ref>{{Cite journal |last=Russell-Rose |first=Tony |last2=Chamberlain |first2=Jon |date=2017-10-02 |title=Expert Search Strategies: The Information Retrieval Practices of Healthcare Information Professionals |url=https://medinform.jmir.org/2017/4/e33 |journal=JMIR Medical Informatics |language=EN |volume=5 |issue=4 |pages=e7680 |doi=10.2196/medinform.7680 |pmc=PMC5643841 |pmid=28970190}}</ref> analyzes search strategies performed by healthcare professionals. They find that a large majority of participants have a general desire to utilize advanced search functionalities when available. This suggests that users are not hesitant to take advantage of resources that they believe help optimize their task performance. Huurdeman<ref name="HuurdemanDyn17">{{Cite journal |last=Huurdeman, H.C. |year=2017 |title=Dynamic Compositions: Recombining Search User Interface Features for Supporting Complex Work Tasks |url=http://ceur-ws.org/Vol-1798/ |journal=CEUR Workshop Proceedings |volume=1798 |pages=22–25 |URN=nbn:de:0074-1798-7}}</ref> outlines that for this, a good course of action is to leverage query corrections, autocomplete, and suggestions. Yet, they find that such additions can be harmful if those features do not provide appropriate domain context. That is, resources must allow users to be contextually aware of how their query aligns with the contents of document sets, as well as the conditions of computational technologies used by interfaces. | |||

In the same research, Huurdeman<ref name="HuurdemanDyn17" /> investigates complex tasks involving information search and information-seeking models when using multistage search systems. In this research, they explore requirements that designers must account for when supporting users. Challenging search tasks require users to learn about the searched domain, understand how their objectives align, and formulate their objectives into a way that can be used by their tool. In other words, query building requires users to be domain cognizant, as they must communicate information-seeking objectives in a way that is understood by the tool, yet also aligns with the information found within the document sets. Thus, a health informatics tool that supports search tasks should provide the opportunity for understanding the domain of the document set being explored. | |||

Zahabi ''et al.''<ref>{{Cite journal |last=Zahabi |first=Maryam |last2=Kaber |first2=David B. |last3=Swangnetr |first3=Manida |date=2015-08-01 |title=Usability and Safety in Electronic Medical Records Interface Design: A Review of Recent Literature and Guideline Formulation |url=https://doi.org/10.1177/0018720815576827 |journal=Human Factors |language=en |volume=57 |issue=5 |pages=805–834 |doi=10.1177/0018720815576827 |issn=0018-7208}}</ref> describe a set of nine requirements for designers when considering how to design usable interfaces for health informatics search tasks, summarized as: | |||

*Naturalness: The workflow of the system must present a natural task progression. | |||

*Consistency: The parts of the system should present similar functional language. | |||

*Prevent errors: Be proactive in the prevention of potential errors. | |||

*Minimizing cognitive load: Align cognitive load to the requirements of the task. | |||

*Efficient interaction: Be efficient in the number of steps to complete a task. | |||

*Forgiveness and feedback: Supply proper and prompt feedback opportunities. | |||

*Effective use of language: Promote clear and understandable communication. | |||

*Effective information presentation: Align with information characteristics. | |||

*Customizability/flexibility: The system should remain flexible to the task requirements. | |||

Additional research by Dudley ''et al.''<ref>{{Cite journal |last=Dudley |first=John J. |last2=Kristensson |first2=Per Ola |date=2018-06-13 |title=A Review of User Interface Design for Interactive Machine Learning |url=https://doi.org/10.1145/3185517 |journal=ACM Transactions on Interactive Intelligent Systems |volume=8 |issue=2 |pages=8:1–8:37 |doi=10.1145/3185517 |issn=2160-6455}}</ref> reviews user interface design for machine learning environments. They provide a set of principles that can be used by designers: | |||

*Make task goals and constraints explicit. | |||

*Support user understanding of model uncertainty and confidence. | |||

*Capture intent rather than input. | |||

*Provide effective data representations. | |||

*Exploit interactivity and promote rich interactions. | |||

*Engage the user. | |||

From this research, we can distill two criteria. First, designs should provide interaction loops that promote prompt and effective feedback opportunities for the user. Second, designs should provide representations that are natural and consistent to the requirements of the information source, the user, and the task. | |||

====Aligning vocabularies for health informatics search tasks==== | |||

When considering interfaces for health informatics search tasks, a major challenge for users is the need to overcome problem formulation deficiencies when encountering unfamiliar domains. This is because, according to Harvey ''et al.''<ref name=":18">{{Cite journal |last=Harvey |first=Morgan |last2=Hauff |first2=Claudia |last3=Elsweiler |first3=David |date=2015-08-09 |title=Learning by Example: Training Users with High-quality Query Suggestions |url=https://dl.acm.org/doi/10.1145/2766462.2767731 |journal=Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval |language=en |publisher=ACM |place=Santiago Chile |pages=133–142 |doi=10.1145/2766462.2767731 |isbn=978-1-4503-3621-5}}</ref>, users have been found to consistently suffer from four major issues during the performance of search tasks: | |||

*difficulty understanding the domain being searched; | |||

*an inability to apply their domain expertise; | |||

*lacking the capacity to formulate an effective search query within the interface that accurately reflects their information-seeking objective; and | |||

*deficient understanding of how to assess results produced by search, to decide whether the search has or has not satisfied their objective. | |||

Harvey ''et al.''<ref name=":18" /> show that in domains with complex vocabularies, such as health and medicine, the disparity in potential users’ prior knowledge is extreme. They find that non-expert users routinely do not possess enough domain knowledge to address their information-seeking needs. This can cause significant issues during query formulation. As a result, non-expert users must first step away from their tool to learn specialized vocabulary before they can begin query building. Both Soldaini ''et al.''<ref>{{Cite journal |last=Soldaini |first=Luca |last2=Yates |first2=Andrew |last3=Yom-Tov |first3=Elad |last4=Frieder |first4=Ophir |last5=Goharian |first5=Nazli |date=2015-07-16 |title=Enhancing web search in the medical domain via query clarification |url=https://doi.org/10.1007/s10791-015-9258-y |journal=Information Retrieval Journal |language=en |volume=19 |issue=1-2 |pages=149–173 |doi=10.1007/s10791-015-9258-y |issn=1386-4564}}</ref> and Anderson and Wischgoll<ref>{{Cite journal |last=Anderson |first=James D |last2=Wischgoll |first2=Thomas |date=2020-01-26 |title=Visualization of Search Results of Large Document Sets |url=https://www.ingentaconnect.com/content/ist/ei/2020/00002020/00000001/art00006;jsessionid=1ui77rulkmwwg.x-ic-live-03 |journal=Electronic Imaging |volume=2020 |issue=1 |pages=388–1–388-7 |doi=10.2352/ISSN.2470-1173.2020.1.VDA-388}}</ref> note that this issue can affect even experts. This is because experts often must make assumptions when attuning to their tool.

There is growing research targeting the generation and application of mediation resources to help reduce the communication gap while using health informatics tools. Zeng ''et al.''<ref name=":14" /> investigate the development of consumer health vocabularies for reducing the discourse gap between lay people and medical information document sets. Furthermore, Soldaini ''et al.''<ref>{{Citation |last=Soldaini |first=Luca |last2=Cohan |first2=Arman |last3=Yates |first3=Andrew |last4=Goharian |first4=Nazli |last5=Frieder |first5=Ophir |date=2015 |editor-last=Hanbury |editor-first=Allan |editor2-last=Kazai |editor2-first=Gabriella |editor3-last=Rauber |editor3-first=Andreas |editor4-last=Fuhr |editor4-first=Norbert |title=Retrieving Medical Literature for Clinical Decision Support |url=http://link.springer.com/10.1007/978-3-319-16354-3_59 |work=Advances in Information Retrieval |language=en |publisher=Springer International Publishing |place=Cham |volume=9022 |pages=538–549 |doi=10.1007/978-3-319-16354-3_59 |isbn=978-3-319-16353-6 |accessdate=2021-09-23}}</ref> explore the use of novel query computation strategies to improve the quality of medical literature retrieval during search tasks. In their quantitative study, they contrast models generated using combinations of algorithms, vocabularies, and feature weights, assessing the computational performance of different query reformulation techniques. The results of their study suggest “greatly improved retrieval performance” when utilizing combined machine learning and bridged vocabularies. Moreover, they provide insight regarding the quality of options that can support computational systems for health informatics search tasks.

From the above research, we can distill two criteria. First, designs should provide interactions that allow users to efficiently prepare, perform, assess, and adjust their machine learning to align with information-seeking objectives of search tasks. Second, designs should provide mediation opportunities that assist users in communicating information-seeking objectives into the domain-specific vocabulary of the document set. | |||

===High-level criteria=== | |||

In Table 1, we provide five criteria based on the above review. | |||

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="80%" | |||

|- | |||

| colspan="2" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 1.''' The criteria for guiding the design of interfaces for health informatics search tasks involving large document sets. For abbreviation purposes, design criteria will be referenced in the text as DC#, where # is its assigned number. | |||

|- | |||

! style="background-color:#dddddd; padding-left:10px; padding-right:10px;" |DC# | |||

! style="background-color:#dddddd; padding-left:10px; padding-right:10px;" |Design criteria | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC1 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Provide an information-centric interface that shows flexibility towards the evolving needs of users and the dynamic requirements of search tasks like the veracity of data sources and variety of information types. | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC2 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Provide interaction loops that supply prompt and effective feedback for users during the performance of search tasks. | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC3 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Provide natural and consistent representations that allow users to understand the constraints, processes, and results provided by the interface. | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC4 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Provide interactions that allow users to efficiently prepare, perform, assess, and adjust their machine learning to align with the information-seeking objectives of search tasks. | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC5 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Provide mediation opportunities that assist users in communicating and bridging their information-seeking objectives into the vocabulary of the document set.

|- | |||

|} | |||

|} | |||

===Analysis of traditional interface strategies for health informatics search tasks=== | |||

We now assess the traditional design strategies for interfaces for health informatics search tasks. Wilson’s comprehensive Search User Interface Design<ref>{{Cite book |last=Wilson |first=Max L. |date=2012 |title=Search user interface design |url=https://www.worldcat.org/title/mediawiki/oclc/780340844 |series=Synthesis lectures on information concepts, retrieval, and services |publisher=Morgan & Claypool |place=San Rafael, Calif. |isbn=978-1-60845-689-5 |oclc=780340844}}</ref> provides a complete survey of the history and current state of search interfaces. Based on their survey, and in particular their discussion of input and control features within the modern search user interfaces, two base strategies and one extension strategy for search interfaces are realized: “structured” interfaces, “unstructured” interfaces, and, in extension, “query expansion” interfaces. Table 2 provides a summary of how the above criteria align with each interface strategy. | |||

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="80%" | |||

|- | |||

| colspan="4" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 2.''' A summary matrix of alignment between the criteria and interface strategies. Full descriptions are found within their respective sections. “Strong” is assigned if a characteristic of the interface strategy promotes alignment with the requirements of the design criterion. “Weak” is assigned if a characteristic of the strategy does not promote alignment with the requirements of the design criterion. “Variable” is assigned if the interface strategy has the potential to align with the criterion; however, such an alignment is not innate and must be actively pursued. | |||

|- | |||

! style="background-color:#dddddd; padding-left:10px; padding-right:10px;" |DC# | |||

! style="background-color:#dddddd; padding-left:10px; padding-right:10px;" |Structured | |||

! style="background-color:#dddddd; padding-left:10px; padding-right:10px;" |Unstructured | |||

! style="background-color:#dddddd; padding-left:10px; padding-right:10px;" |Query expansion | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC1 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Weak | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Variable | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Strong | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC2 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Strong | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Strong | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Variable | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC3 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Variable | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Variable | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Variable | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC4 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Variable | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Strong | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Strong | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC5 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Weak | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Weak | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Strong | |||

|- | |||

|} | |||

|} | |||

====Structured interface strategy==== | |||

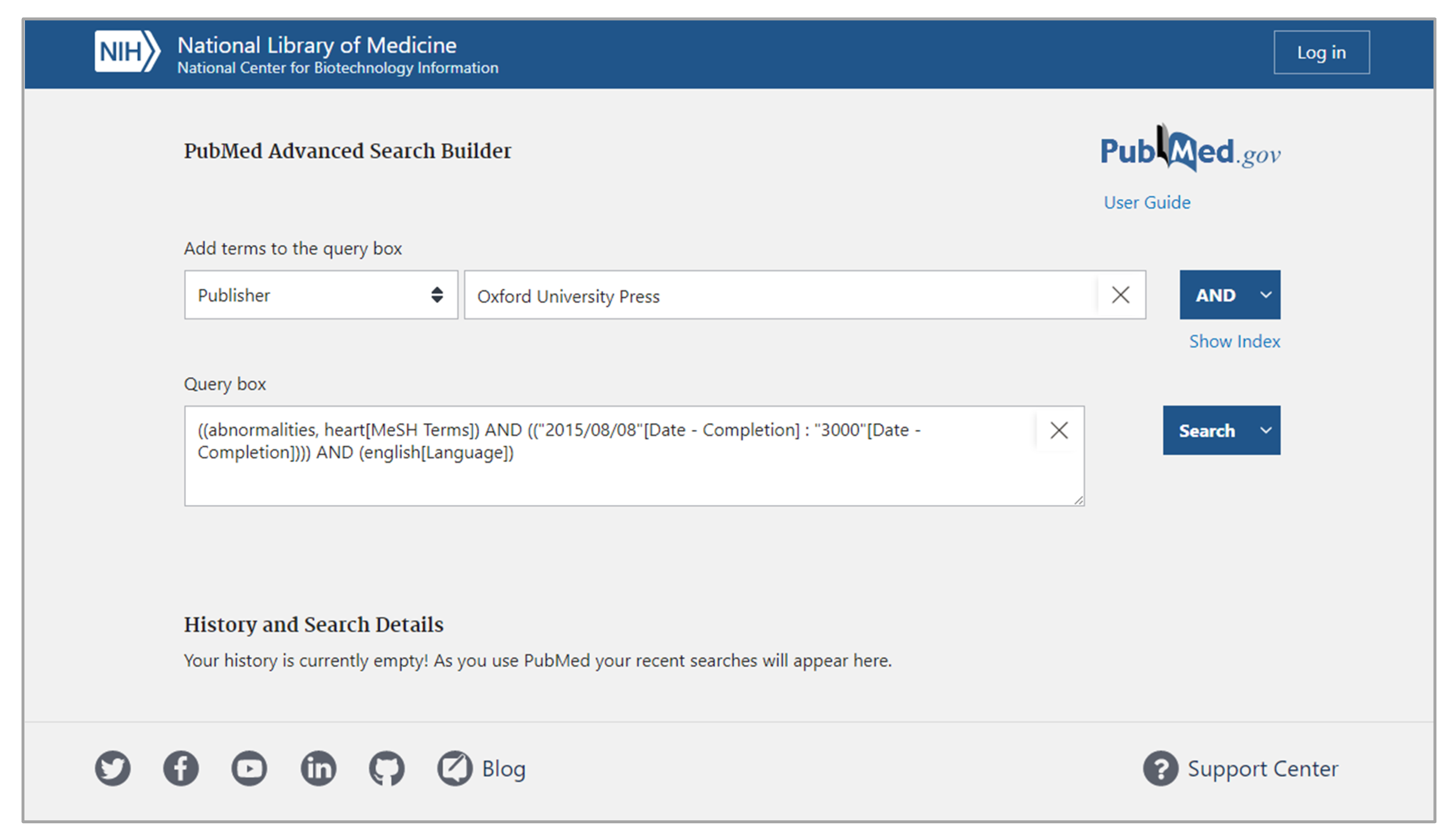

The structured interface strategy creates designs that regulate input during query building. This is achieved by maintaining heavily restricted input control profiles. Designers who implement the structured interface strategy into their interfaces presuppose a search task with specific expectations for input, bounding queries to a limited input profile. One common bounding technique is to constrain query lengths and limit query content to a controlled set of terms.<ref>{{Cite journal |last=Zielstorff, R.D. |date= |year=2003 |title=Controlled vocabularies for consumer health |url=https://www.sciencedirect.com/science/article/pii/S1532046403000960 |journal=Journal of Biomedical Informatics |language=en |volume=36 |issue=4-5 |pages=326–333 |doi=10.1016/j.jbi.2003.09.015 |issn=1532-0464}}</ref> This restricted scope is considered the sole acceptable input profile, and thus it allows designers to generate interfaces that limit the possible range of inputs and restrict all inputs that fall outside of that range. Designers typically achieve this by using interface elements like dropdowns, checkboxes, and radio buttons instead of elements like text boxes with free typing. For example, Figure 1 depicts the PubMed Advanced Search Builder, which implements the structured interface strategy in its design. This interface requires users to select specific query term types from a restricted list, which then guides user input.<ref name=":22">{{Cite web |last=National Center for Biotechnology Information |title=PubMed.gov |url=https://pubmed.ncbi.nlm.nih.gov/ |publisher=National Institutes of Health |accessdate=18 January 2021}}</ref>

[[File:Fig1 Demelo Information21 12-8.png|900px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="900px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' An example of a structured interface strategy for a search task in PubMed Advanced Search Builder. In this use case, a query item was generated for a MeSH term for heart abnormalities, a completion date after August 8, 2015, and in the English language, with the publisher of Oxford University Press soon to be added. Source: Image generated on 18 January 2021, using the [https://pubmed.ncbi.nlm.nih.gov/advanced/ public web portal] provided by the National Center for Biotechnology Information.</blockquote>

|- | |||

|} | |||

|} | |||

Since input control is restricted, a strength of the structured interface strategy is that designers can use information characteristics to prescribe the full range of query formulations. This allows for the use of representational and computational designs that optimize for the expected characteristics of the restricted input profiles, per DC2. This strategy provides a designer-friendly environment that is hardened against unwanted queries, which, if effectively communicated in the design of result representations, could allow for alignment with DC3 and DC4. Yet, it can be challenging for designers to use structured interface strategies in a generalized setting. This is because when a document set is swapped, hardened approaches may not align with the information characteristics of the new document set. This negatively affects the flexibility of the interface, and in turn alignment with DC1. A potential weakness of the structured interface strategy is that it requires users to possess expertise on both the controlled vocabulary of the interface as well as the vocabulary of the document set being searched. If this is not known, user experience can suffer, drastically affecting alignment with DC5. Within the context of health informatics, such weaknesses reduce the users’ ability to effectively perform search tasks. This is because the controlled vocabularies within the health and medical domains demand significant expertise and result in numerous points of failure during the query formation process.<ref>{{Cite journal |last=McCray |first=Alexa T. |last2=Tse |first2=Tony |date=2003 |title=Understanding Search Failures in Consumer Health Information Systems |url=https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1479930/ |journal=AMIA Annual Symposium Proceedings |volume=2003 |pages=430–434 |issn=1942-597X |pmc=1479930 |pmid=14728209}}</ref><ref>{{Cite journal |last=Keselman |first=Alla |last2=Browne |first2=Allen C. |last3=Kaufman |first3=David R. |date=2008-07-01 |title=Consumer Health Information Seeking as Hypothesis Testing |url=https://doi.org/10.1197/jamia.M2449 |journal=Journal of the American Medical Informatics Association |volume=15 |issue=4 |pages=484–495 |doi=10.1197/jamia.M2449 |issn=1067-5027 |pmc=PMC2442260 |pmid=18436912}}</ref>
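The bounding behavior described in this section can be sketched as a validation step that rejects any input outside a pre-declared profile. The field names, the allowed language list, and the length limit below are hypothetical values chosen for illustration; they do not describe how PubMed or any other real interface is implemented.

```python
from dataclasses import dataclass

# Hypothetical controlled inputs, the kind a dropdown or radio button would expose.
ALLOWED_FIELDS = {"mesh_term", "language", "publisher"}
ALLOWED_LANGUAGES = {"english", "french", "german"}
MAX_VALUE_LENGTH = 64  # assumed bound on query length


@dataclass(frozen=True)
class QueryItem:
    field_name: str
    value: str


def validate(item):
    """Return the reasons an item falls outside the accepted input profile."""
    problems = []
    if item.field_name not in ALLOWED_FIELDS:
        problems.append(f"unknown field: {item.field_name}")
    if len(item.value) > MAX_VALUE_LENGTH:
        problems.append("value too long")
    if item.field_name == "language" and item.value.lower() not in ALLOWED_LANGUAGES:
        problems.append(f"unsupported language: {item.value}")
    return problems
```

Because every accepted input is enumerated in advance, swapping in a document set with different fields means rewriting these constants, which is the flexibility cost noted above with respect to DC1.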

====Unstructured interface strategy==== | |||

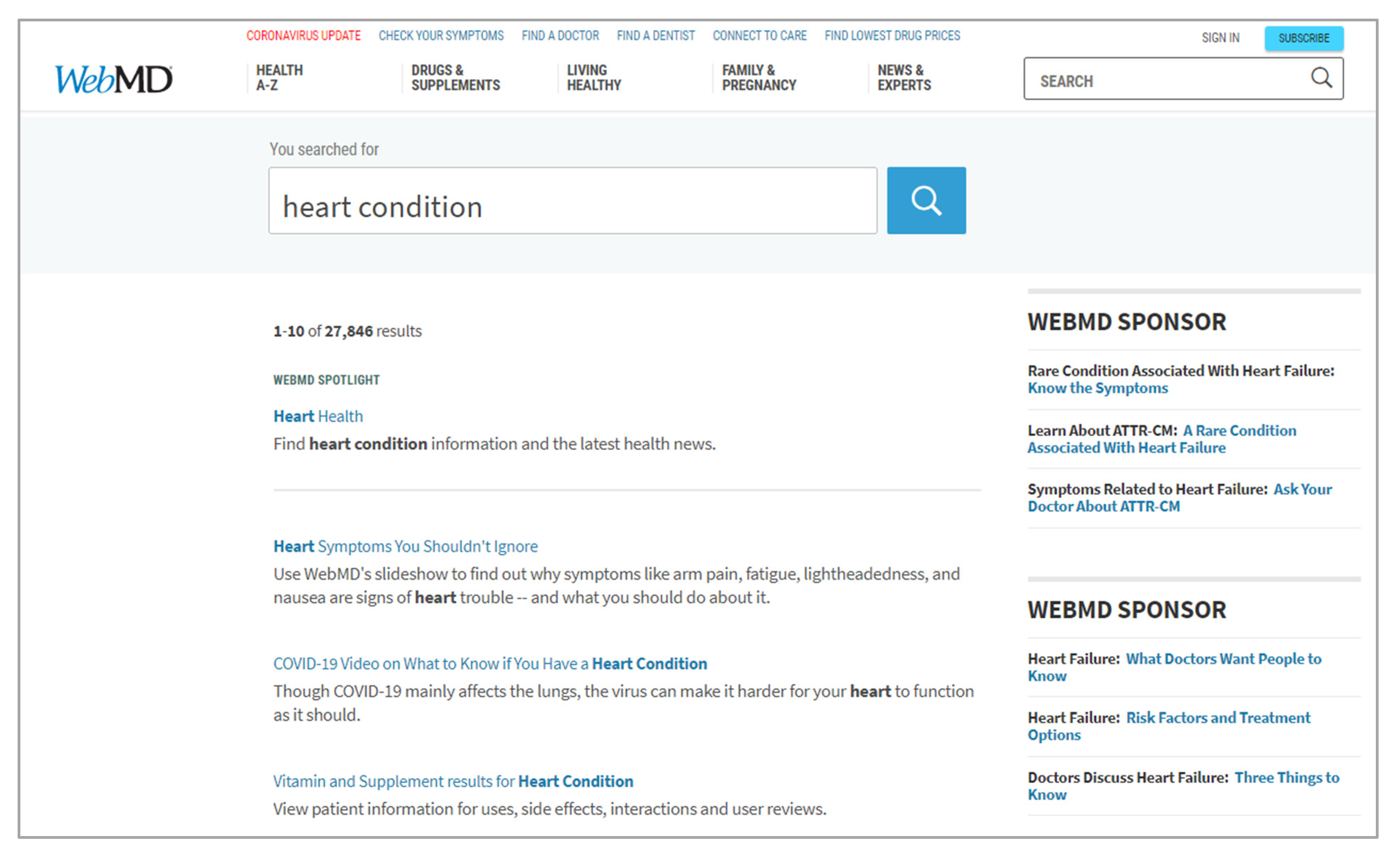

The unstructured interface strategy creates designs that provide limited input regulation. Unlike the structured interface strategy, it provides an open input control that accepts most input profiles during query building. Designers who implement the unstructured interface strategy do so without presupposing particular input, only accounting for general user error. That is, this input can originate from anywhere, such as common vocabulary, rather than from a pre-determined set of terms provided by the designer. Often, this input is directed to a single interface element. Implementations of the unstructured interface strategy typically present a text box that allows users to freely type their own text into the interface. These implementations will perform some input processing prior to use; however, the presentation of this processing to users is usually limited to correcting typographical errors rather than semantic ones. For example, the interface of Google aligns with the unstructured interface strategy, presenting users with an open, text-box input control without domain-specific assumptions or requirements. 
Of course, Google’s computational systems use extensive processing between receiving input from users and presenting the results of computation back to users.<ref>{{Cite journal |last=Luo |first=Jake |last2=Wu |first2=Min |last3=Gopukumar |first3=Deepika |last4=Zhao |first4=Yiqing |date=2016-01-01 |title=Big Data Application in Biomedical Research and Health Care: A Literature Review |url=https://doi.org/10.4137/BII.S31559 |journal=Biomedical Informatics Insights |language=en |volume=8 |pages=BII.S31559 |doi=10.4137/BII.S31559 |issn=1178-2226 |pmc=PMC4720168 |pmid=26843812}}</ref> Yet, users themselves are not informed of how their results came to be, even after changing to Google Instant.<ref>{{Cite journal |last=Qvarfordt |first=Pernilla |last2=Golovchinsky |first2=Gene |last3=Dunnigan |first3=Tony |last4=Agapie |first4=Elena |date=2013-07-28 |title=Looking ahead: query preview in exploratory search |url=https://dl.acm.org/doi/10.1145/2484028.2484084 |journal=Proceedings of the 36th international ACM SIGIR conference on Research and development in information retrieval |language=en |publisher=ACM |place=Dublin Ireland |pages=243–252 |doi=10.1145/2484028.2484084 |isbn=978-1-4503-2034-4}}</ref> Another example of an implementation of the unstructured interface strategy is WebMD’s search interface. This interface processes a free-text input with basic sanitization techniques before generating features for its search engine system, as depicted in Figure 2. | |||

[[File:Fig2 Demelo Information21 12-8.png|900px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="900px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 2.''' An example of an unstructured interface strategy for a search task on WebMD Search Interface. In this use case, the free-text query “heart condition” was generated. Source: Image generated on 18 January 2021, using the [https://www.webmd.com/search/search_results/default.aspx?query=heart%20condition public web portal] provided by WebMD.</blockquote>

|- | |||

|} | |||

|} | |||

A strength of the unstructured interface strategy is that it supports the use of any vocabulary during query building, allowing for the natural activation of common vocabulary during task performance, in alignment with DC4. Additionally, this removes the requirement for users to possess input expertise and control profiles that typically come with a structured interface strategy, per DC1 and DC4. Designers can still implement prompt and effective feedback during task performance, thereby supporting DC2. If the constraints, processes, and results of their task performance are effectively communicated in result representations, DC3 and DC4 can be well supported. However, by allowing for the direct use of common vocabulary in lieu of a presupposed controlled vocabulary, the unstructured interface strategy suffers where the structured interface strategy excels. That is, poor implementations of the unstructured interface strategy can produce interfaces that do not provide mediation for users to translate their common vocabulary into the domain-specific vocabulary. In doing so, users are not being helped in understanding how their query building has impacted their search performance. For example, these poorly implemented interfaces may take input literally and bring users directly to a result page without providing context as to how the results were found, negatively affecting DC1 and DC5. This potential for promoting weak alignment between user and information source can lead to a significant drop in the quality of search performance. 
This can be an especially important requirement to address for health informatics interfaces, as it has been found that users routinely struggle to craft effective query terms during their health-related search tasks.<ref name=":21">{{Citation |last=Jimmy |last2=Zuccon |first2=Guido |last3=Koopman |first3=Bevan |date=2018 |editor-last=Pasi |editor-first=Gabriella |editor2-last=Piwowarski |editor2-first=Benjamin |editor3-last=Azzopardi |editor3-first=Leif |editor4-last=Hanbury |editor4-first=Allan |title=Choices in Knowledge-Base Retrieval for Consumer Health Search |url=http://link.springer.com/10.1007/978-3-319-76941-7_6 |work=Advances in Information Retrieval |publisher=Springer International Publishing |place=Cham |volume=10772 |pages=72–85 |doi=10.1007/978-3-319-76941-7_6 |isbn=978-3-319-76940-0 |accessdate=2021-09-23}}</ref> | |||

====Query expansion interface strategy==== | |||

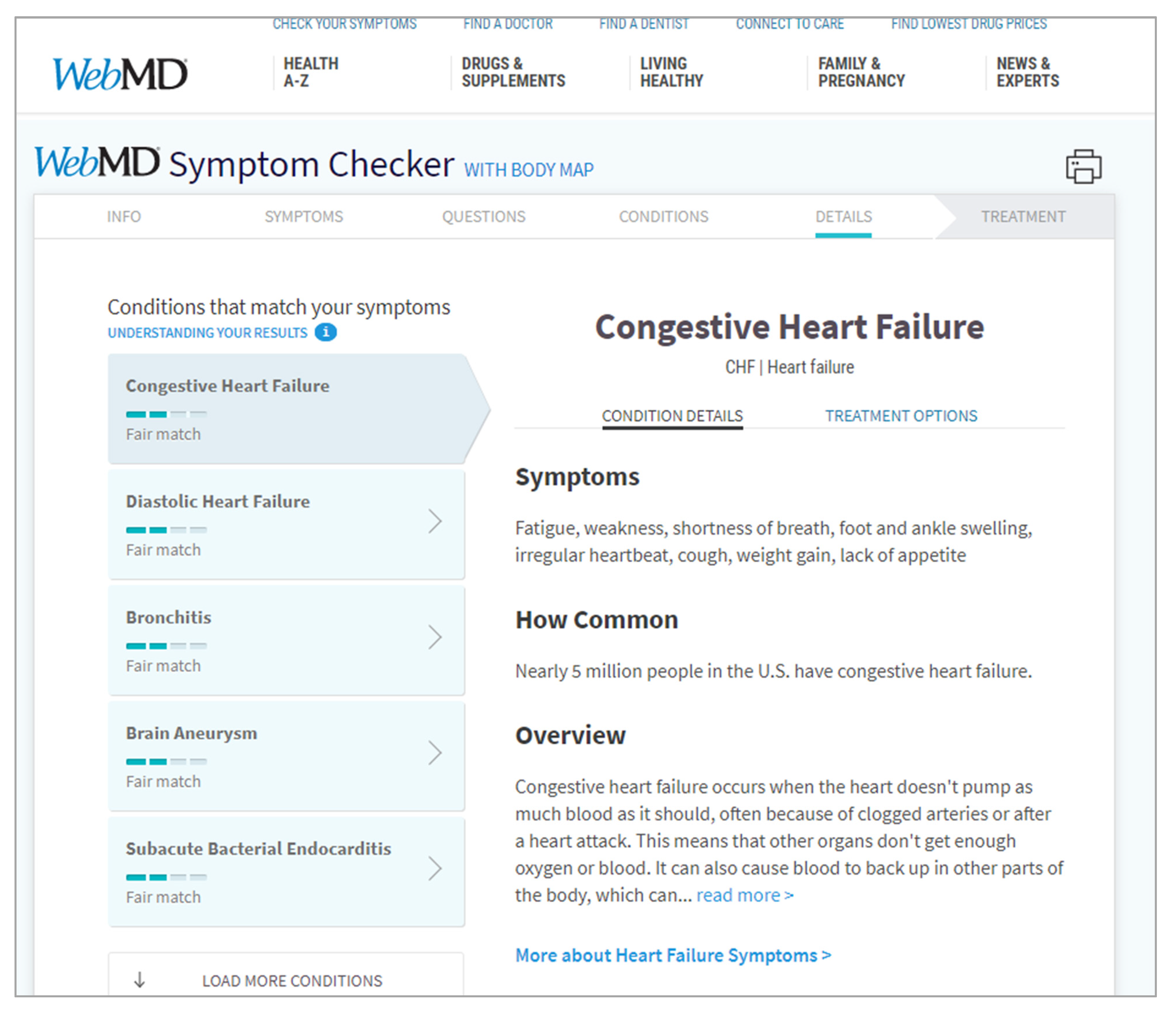

The query expansion interface strategy is an extension of both the structured and unstructured interface strategies. That is, this strategy expands on both by adding mediation opportunities to bridge the vocabulary of the user with the vocabulary of the document set within both the representational and the computational systems.<ref name=":20">{{Cite journal |last=Azad, H.K.; Deepak, A. |date= |year=2019 |title=A new approach for query expansion using Wikipedia and WordNet |url=https://www.sciencedirect.com/science/article/abs/pii/S0020025519303263 |journal=Information Sciences |language=en |volume=492 |pages=147–163 |doi=10.1016/j.ins.2019.04.019 |issn=0020-0255}}</ref> These mediation opportunities are typically implemented within two parts of the interaction loop. The first is during input, where mediation opportunities are presented during query building. Often, these mediation opportunities come as cues that suggest to users how their common vocabulary could align with the vocabulary of the domain, and vice versa. An example of an implementation of the structured-like query expansion interface strategy is WebMD’s Symptom Checker, shown in Figure 3. This example interface goes through a series of controlled stages of query building that are structured by numerous opportunities for mediation. The second is during the processing prior to document set mapping. As in other strategies, a system can apply natural language processing techniques to the input, where the text string provided as input is tokenized into its parts. From this, the system sanitizes token parts to remove trivial tokens like the stop words “the,” “a,” and “an,” and any remaining tokens are then inserted as features in search engine systems. In more complex systems, additional sanitization techniques can be used.<ref>{{Cite journal |last=Jimmy, J.; Zuccon, G.; Palotti, J. et al. 
|year=2018 |title=Overview of the CLEF 2018 Consumer Health Search Task |url=http://ceur-ws.org/Vol-2125/ |journal=CEUR Workshop Proceedings |volume=2125 |pages=1–15 |URN=nbn:de:0074-2125-0}}</ref> Yet instead of immediately inserting the remaining tokens as features into the computational systems, the query expansion interface strategy builds upon the input profile by injecting insight provided by mediating resources, such as related terms, synonyms, and other expansion opportunities.<ref>{{Cite journal |last=Capuano |first=Nicola |last2=Longhi |first2=Andrea |last3=Salerno |first3=Saverio |last4=Toti |first4=Daniele |date=2015-05-04 |title=Ontology-driven Generation of Training Paths in the Legal Domain |url=https://online-journals.org/index.php/i-jet/article/view/4609 |journal=International Journal of Emerging Technologies in Learning (iJET) |language=en |volume=10 |issue=7 |pages=14–22 |doi=10.3991/ijet.v10i7.4609 |issn=1863-0383}}</ref> In other words, these systems utilize mediating resources to computationally expand the query. Some examples of mediating resources are knowledge bases like WordNet and Wikipedia, and ontologies like The Human Phenotype Ontology.<ref name=":19" /><ref name=":20" /><ref name=":23">{{Cite journal |last=Köhler |first=Sebastian |last2=Gargano |first2=Michael |last3=Matentzoglu |first3=Nicolas |last4=Carmody |first4=Leigh C |last5=Lewis-Smith |first5=David |last6=Vasilevsky |first6=Nicole A |last7=Danis |first7=Daniel |last8=Balagura |first8=Ganna |last9=Baynam |first9=Gareth |last10=Brower |first10=Amy M |last11=Callahan |first11=Tiffany J |date=2021-01-08 |title=The Human Phenotype Ontology in 2021 |url=https://academic.oup.com/nar/article/49/D1/D1207/6017351 |journal=Nucleic Acids Research |language=en |volume=49 |issue=D1 |pages=D1207–D1217 |doi=10.1093/nar/gkaa1043 |issn=0305-1048 |pmc=PMC7778952 |pmid=33264411}}</ref> | |||
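
The processing steps described above (tokenization, stop-word removal, and expansion through a mediating resource) can be illustrated with a minimal Python sketch. This is not taken from any of the cited systems: the stop-word list, the small <code>MEDIATING_RESOURCE</code> dictionary, and all function names are hypothetical, chosen only to show the order of the steps. A real system would draw expansions from a resource such as WordNet or a domain ontology.

```python
import re

# Hypothetical stop-word list; real systems typically use much larger lists.
STOP_WORDS = {"the", "a", "an"}

# Hypothetical mediating resource: maps a common-vocabulary token to
# related domain-vocabulary terms. Stands in for WordNet, an ontology, etc.
MEDIATING_RESOURCE = {
    "heart": ["cardiac", "cardiovascular"],
    "attack": ["infarction"],
}


def tokenize(query):
    """Split a free-text query into lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", query.lower())


def remove_stop_words(tokens):
    """Drop trivial tokens such as 'the', 'a', and 'an'."""
    return [t for t in tokens if t not in STOP_WORDS]


def expand(tokens, resource):
    """Add related terms from the mediating resource after each token.

    Order is preserved and duplicates are skipped.
    """
    expanded = []
    for token in tokens:
        for term in [token] + resource.get(token, []):
            if term not in expanded:
                expanded.append(term)
    return expanded


def build_features(query, resource=MEDIATING_RESOURCE):
    """Run the full pipeline: tokenize, sanitize, then expand."""
    return expand(remove_stop_words(tokenize(query)), resource)


print(build_features("The heart attack"))
```

Keeping the original tokens ahead of their expansions is one possible design choice; it lets a downstream search engine weight the user's own vocabulary more heavily than the injected terms, if desired.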

[[File:Fig3 Demelo Information21 12-8.png|900px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="900px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 3.''' An example of a structured–like query expansion interface strategy for a search task in WebMD’s Symptom Checker. In this use case, users are guided along a series of query building opportunities, allowing them to enter various symptoms and personal health criteria while aligning their personal vocabulary with the information resource vocabulary. Source: Image generated on 18 January 2021, using the [https://symptoms.webmd.com/ public web portal] provided by WebMD.</blockquote> | |||

|- | |||

|} | |||

|} | |||

A strength of the combined approach of the query expansion interface strategy is its strong efforts to eliminate the weaknesses associated with the structured and unstructured interface strategies while still maintaining their strengths. That is, by allowing the continued use of common vocabulary during the process of query building, users can have higher confidence about what the interface is asking of them, and what they are telling the interface to do, helping with DC4 and DC5. Furthermore, by integrating the use of mediating resources like ontologies, designers can demonstrate to users the quality of their query building and how their vocabulary decisions affect the performance of their search tasks, supporting DC2 and DC3.<ref>{{Citation |last=Lüke |first=Thomas |last2=Schaer |first2=Philipp |last3=Mayr |first3=Philipp |date=2012 |editor-last=Zaphiris |editor-first=Panayiotis |editor2-last=Buchanan |editor2-first=George |editor3-last=Rasmussen |editor3-first=Edie |editor4-last=Loizides |editor4-first=Fernando |title=Improving Retrieval Results with Discipline-Specific Query Expansion |url=http://link.springer.com/10.1007/978-3-642-33290-6_44 |work=Theory and Practice of Digital Libraries |publisher=Springer Berlin Heidelberg |place=Berlin, Heidelberg |volume=7489 |pages=408–413 |doi=10.1007/978-3-642-33290-6_44 |isbn=978-3-642-33289-0 |accessdate=2021-09-23}}</ref> Yet, with the added complexities of query expansion, computational systems may be required to perform more work before arriving at a final set of search results. Therefore, designers of systems taking advantage of query expansion should consider its impact on performance and responsiveness and counteract that impact to maintain alignment with DC2. For the query expansion interface strategy to be successful, designers must clearly communicate to users how exactly their query building has affected their search. 
If this communication is not provided, it can leave users confused regarding how their decisions have affected their search and can make it challenging for them to assess task performance, negatively affecting DC2. Such limitations may not provide optimal alignment in communication between the system, the user, and the information resource.<ref name=":21" /> That is, if a selected mediating resource does not provide an effective mapping between vocabularies, then query expansion can weaken the quality of search tasks. To address this challenge, designers can utilize user-supplied ontologies, as per DC1. This provides users the freedom to select mediating resources that they believe can best support their task performance, rather than being restricted to a tool-provided mediating resource. A user study by Jay ''et al.''<ref>{{Cite journal |last=Jay |first=Caroline |last2=Harper |first2=Simon |last3=Dunlop |first3=Ian |last4=Smith |first4=Sam |last5=Sufi |first5=Shoaib |last6=Goble |first6=Carole |last7=Buchan |first7=Iain |date=2016-01-14 |title=Natural Language Search Interfaces: Health Data Needs Single-Field Variable Search |url=https://www.jmir.org/2016/1/e13 |journal=Journal of Medical Internet Research |language=EN |volume=18 |issue=1 |pages=e4912 |doi=10.2196/jmir.4912 |pmc=PMC4731680 |pmid=26769334}}</ref> compares users as they perform the same task set using two interfaces, one with a structured multiple variable input profile, the other with an unstructured single variable input profile. In this study, they find that users felt their needs and expectations were better fulfilled using the single-input profile, performing their tasks quicker, with more ease of use and learnability, and with a higher appraisal of results. Designers must carefully select how they activate query expansion such that it addresses the needs of the task, the information, and the user.

==Results== | |||

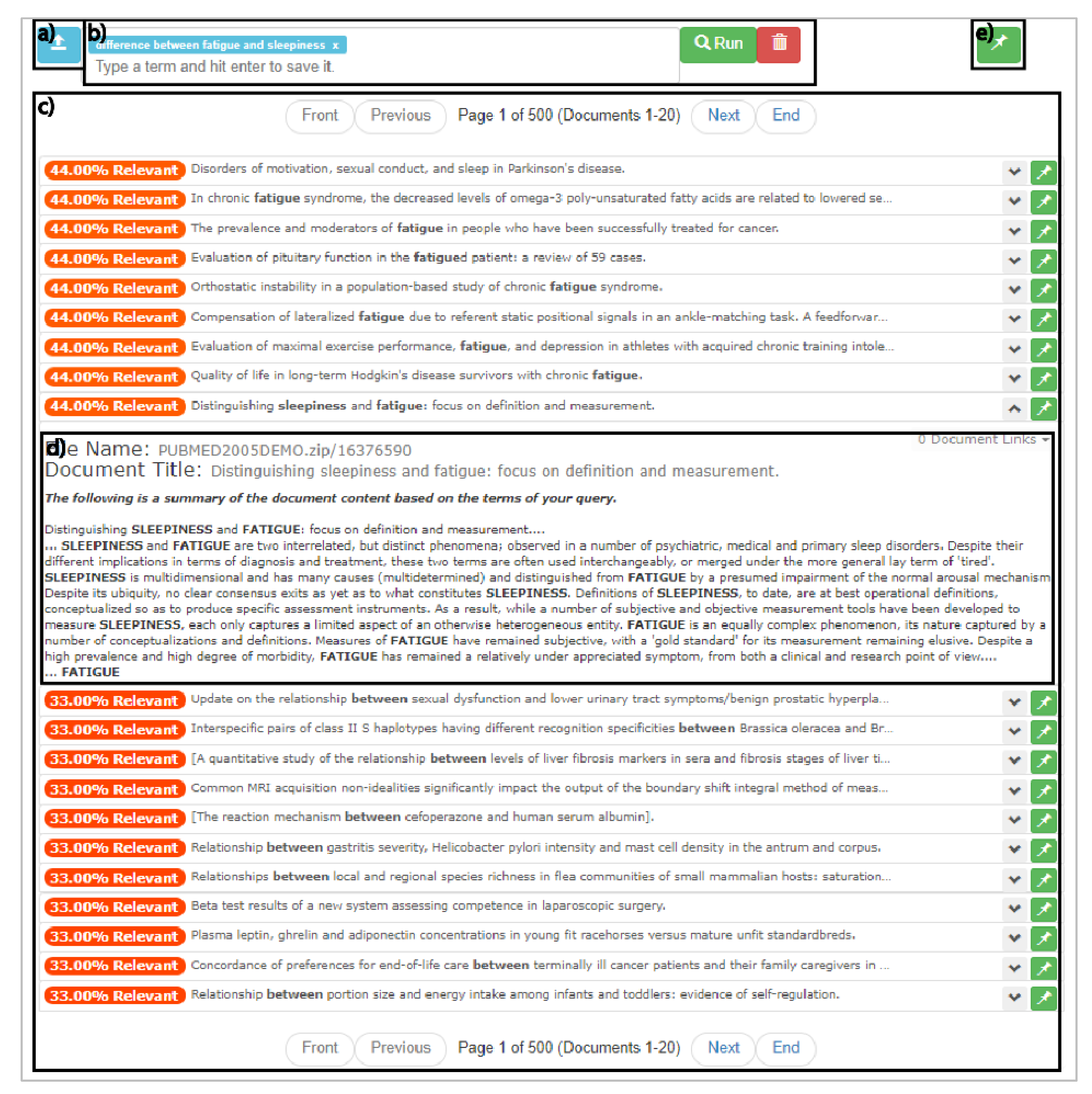

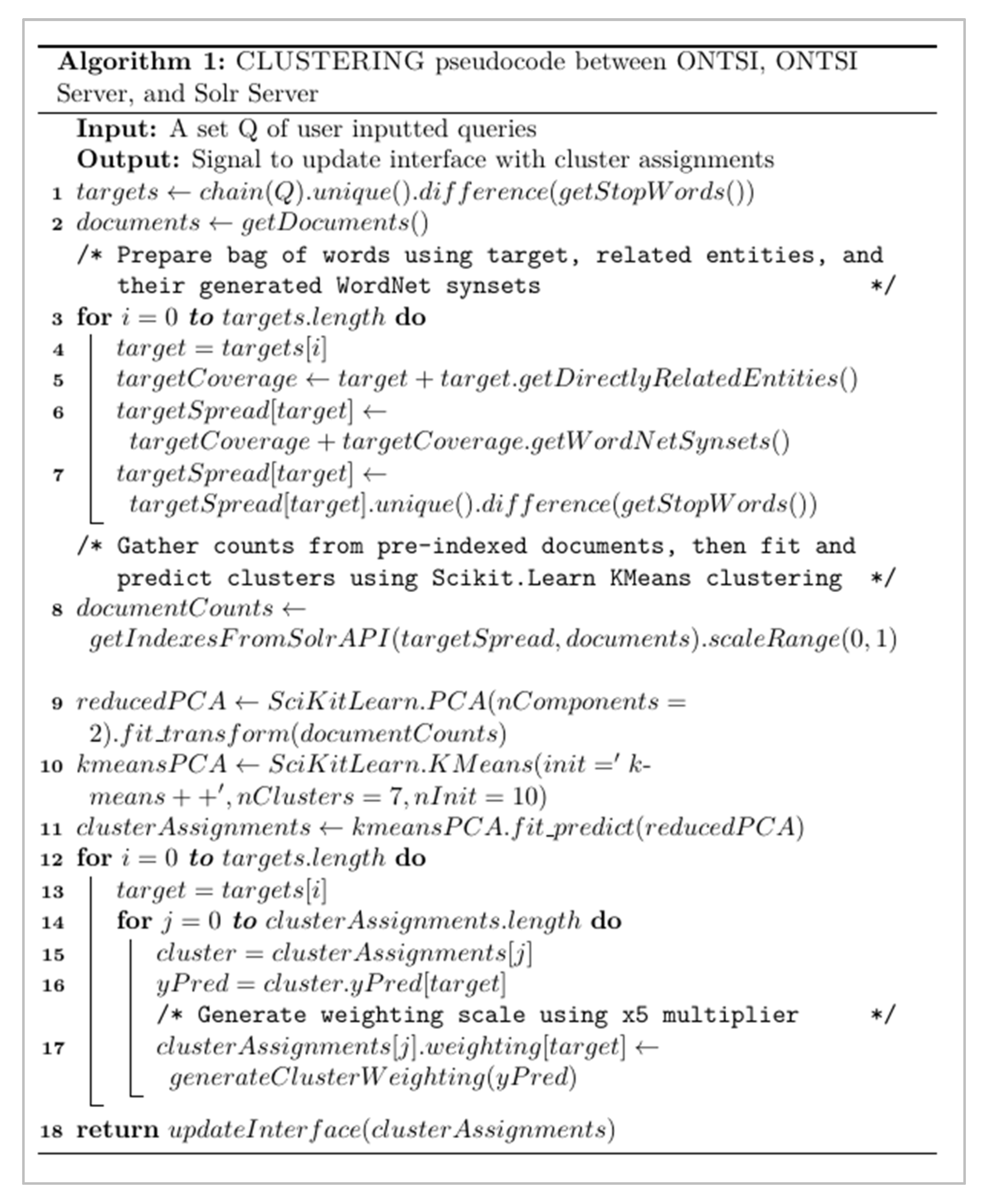

In this section we describe ONTSI, a generalized ontology-supported interface for health informatics search tasks involving large document sets created using the above-discussed criteria. We outline how the criteria were used to structure ONTSI’s design. We then discuss the technical scope of ONTSI, concluding with ONTSI’s functional workflow. | |||

===Design scope=== | |||

Table 3 highlights the role of each criterion in the design of ONTSI. | |||

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="80%" | |||

|- | |||

| colspan="2" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 3.''' The role of each criterion within the design of ONTSI. The incorporation of these criteria in ONTSI’s implementation is discussed within the workflow and usage scenario. | |||

|- | |||

! style="background-color:#dddddd; padding-left:10px; padding-right:10px;" |DC# | |||

! style="background-color:#dddddd; padding-left:10px; padding-right:10px;" |ONTSI implementation | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC1 | |||

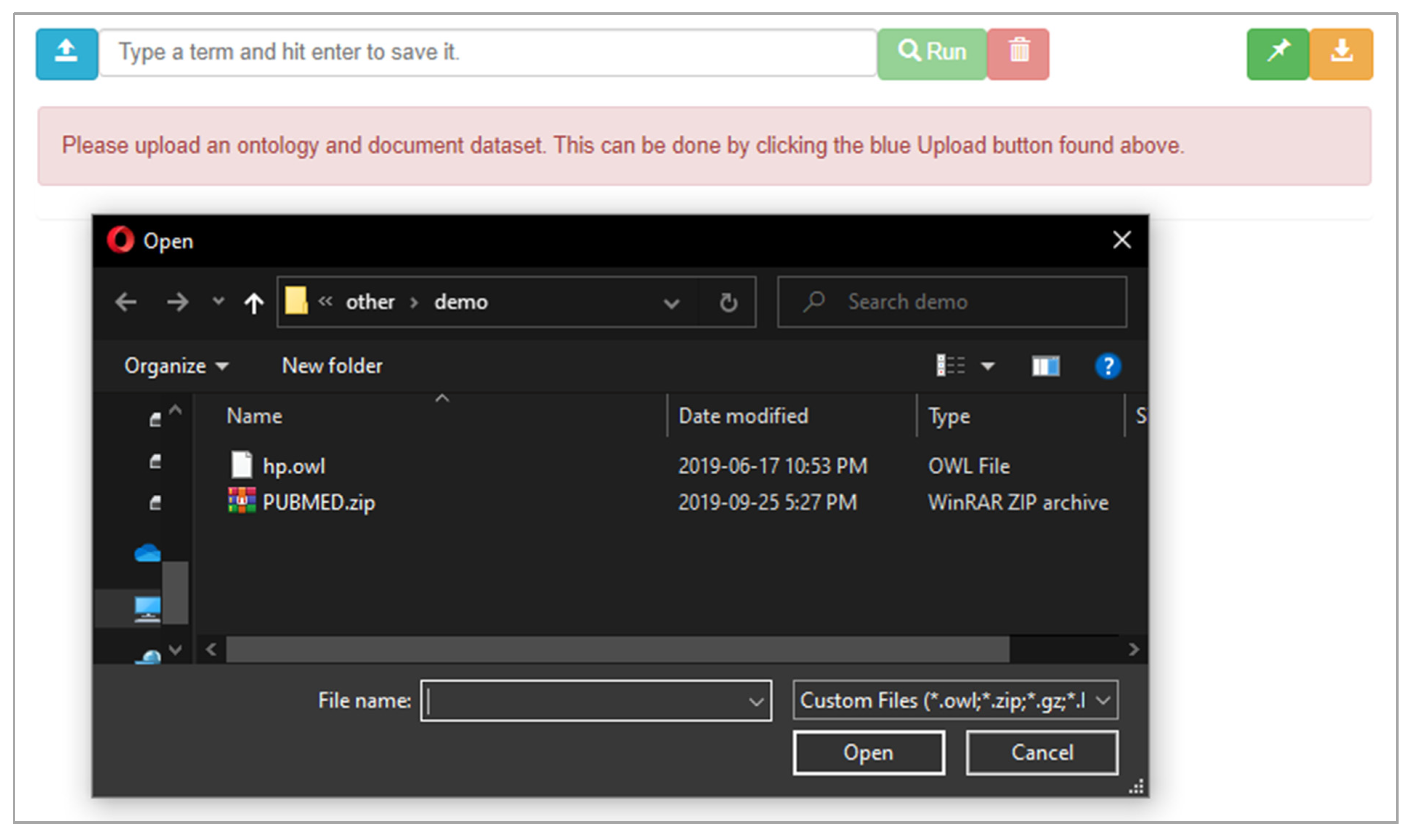

| style="background-color:white; padding-left:10px; padding-right:10px;" |ONTSI leverages powerful third-party computational technology. Specifically, pre-built machine learning packages like SciKit-Learn are integrated within ONTSI, and highly optimized indexing is provided by The Apache Software Foundation’s Solr product.<ref name="Solr">{{cite web |url=https://solr.apache.org/ |title=Apache Solr |publisher=Apache Software Foundation |accessdate=18 January 2021}}</ref> Additionally, ONTSI’s interface provides users with clear text-based alerts, which reflect their current performance status. | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC2 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |ONTSI supports an iterative interaction loop to allow users to perform repeated sets of search tasks. That is, within iterative interactions, users can save the results they regard as relevant in a persistent location within the tool, while still allowing further performances to occur.

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC3 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |ONTSI provides visual representations to help analyze and judge the relevance of search results. | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC4 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |ONTSI utilizes modern visualization and computational technologies like D3.js to provide powerful interaction opportunities. | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |DC5 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |ONTSI supports the use of a common vocabulary during query building using the query expansion strategy. Specifically, when using ONTSI, users upload both a document set and an ontology file, which are then integrated into the workflow of the computational systems of ONTSI. Users can interact with a search textbox that allows for unstructured text input. ONTSI provides domain-specific vocabulary suggestions that can assist users in guiding their performance and promote alignment between their vocabulary and domain-specific vocabulary. | |||

|- | |||

|} | |||

|} | |||
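
The vocabulary suggestion behavior summarized for DC5 can be pictured with a small Python sketch. It is an illustration under stated assumptions and not ONTSI's actual implementation: the in-memory <code>ONTOLOGY</code> dictionary, its concept identifiers, and the <code>suggest</code> function are all hypothetical, and a real user-supplied ontology file would first need to be parsed into some comparable structure.

```python
# Hypothetical in-memory ontology: each concept has a preferred
# domain-specific label and a list of common-vocabulary synonyms.
# The identifiers are placeholders, not real ontology IDs.
ONTOLOGY = {
    "concept-1": {
        "label": "Myocardial infarction",
        "synonyms": ["heart attack"],
    },
    "concept-2": {
        "label": "Hypertension",
        "synonyms": ["high blood pressure"],
    },
}


def suggest(user_text, ontology):
    """Return domain labels whose label or synonyms contain the user's text.

    Matching is a case-insensitive substring test, which is a deliberate
    simplification; empty input yields no suggestions.
    """
    needle = user_text.strip().lower()
    if not needle:
        return []
    hits = []
    for concept in ontology.values():
        names = [concept["label"]] + concept["synonyms"]
        if any(needle in name.lower() for name in names):
            hits.append(concept["label"])
    return sorted(hits)


print(suggest("heart", ONTOLOGY))
print(suggest("blood pressure", ONTOLOGY))
```

In this sketch, typing a common-vocabulary phrase surfaces the corresponding domain-specific label, which is one simple way to show users how their wording aligns with the vocabulary of the uploaded ontology.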

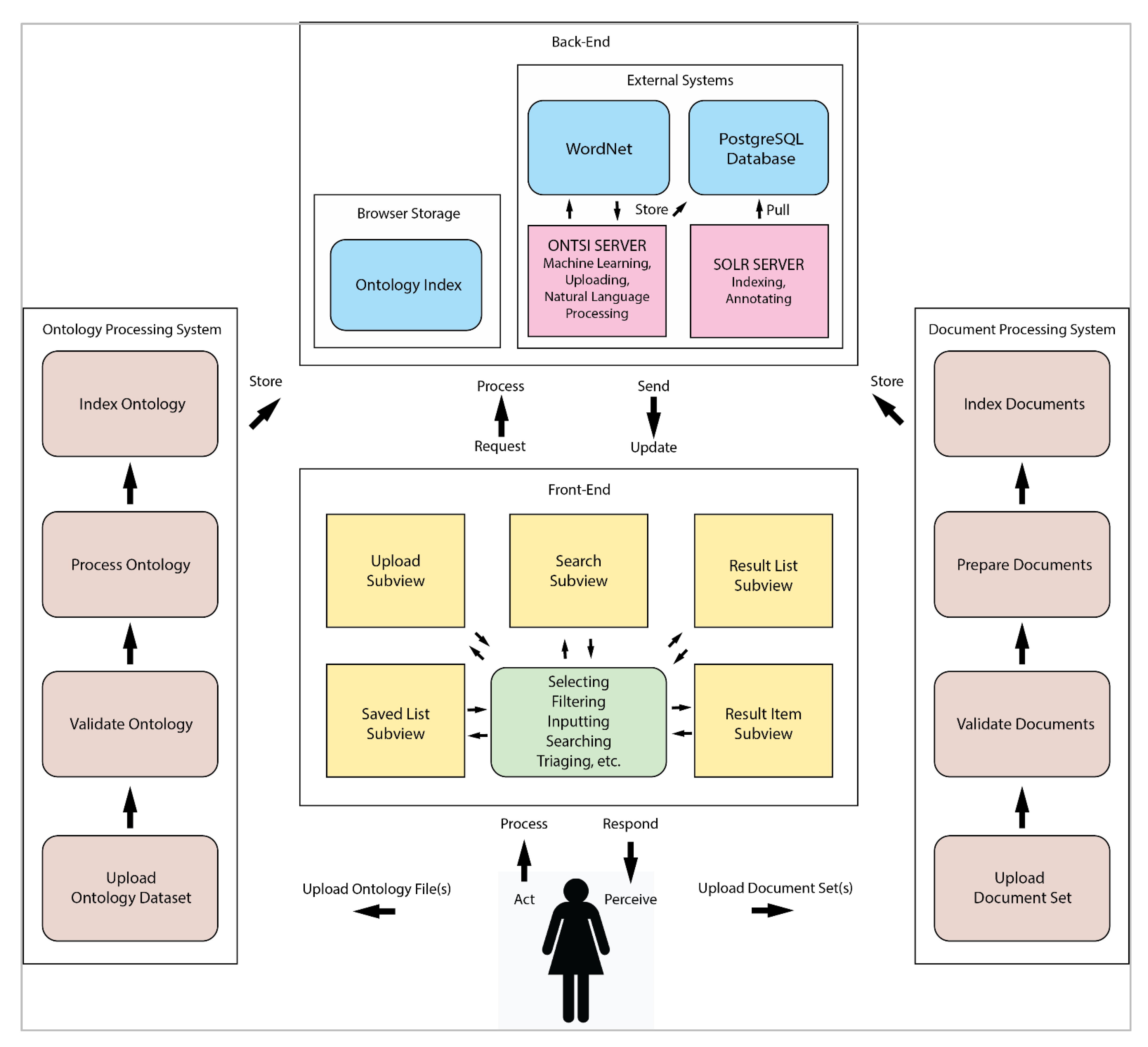

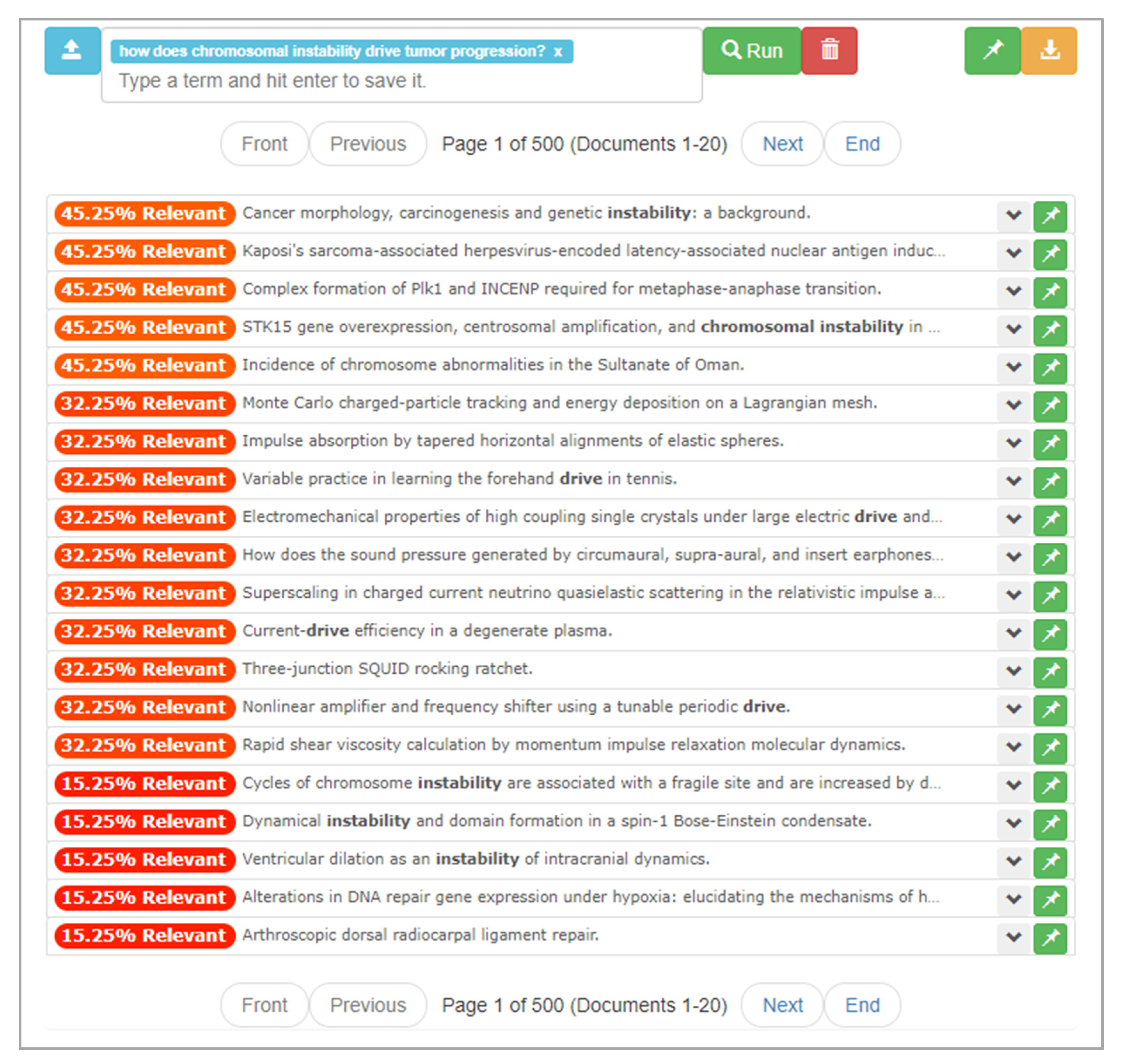

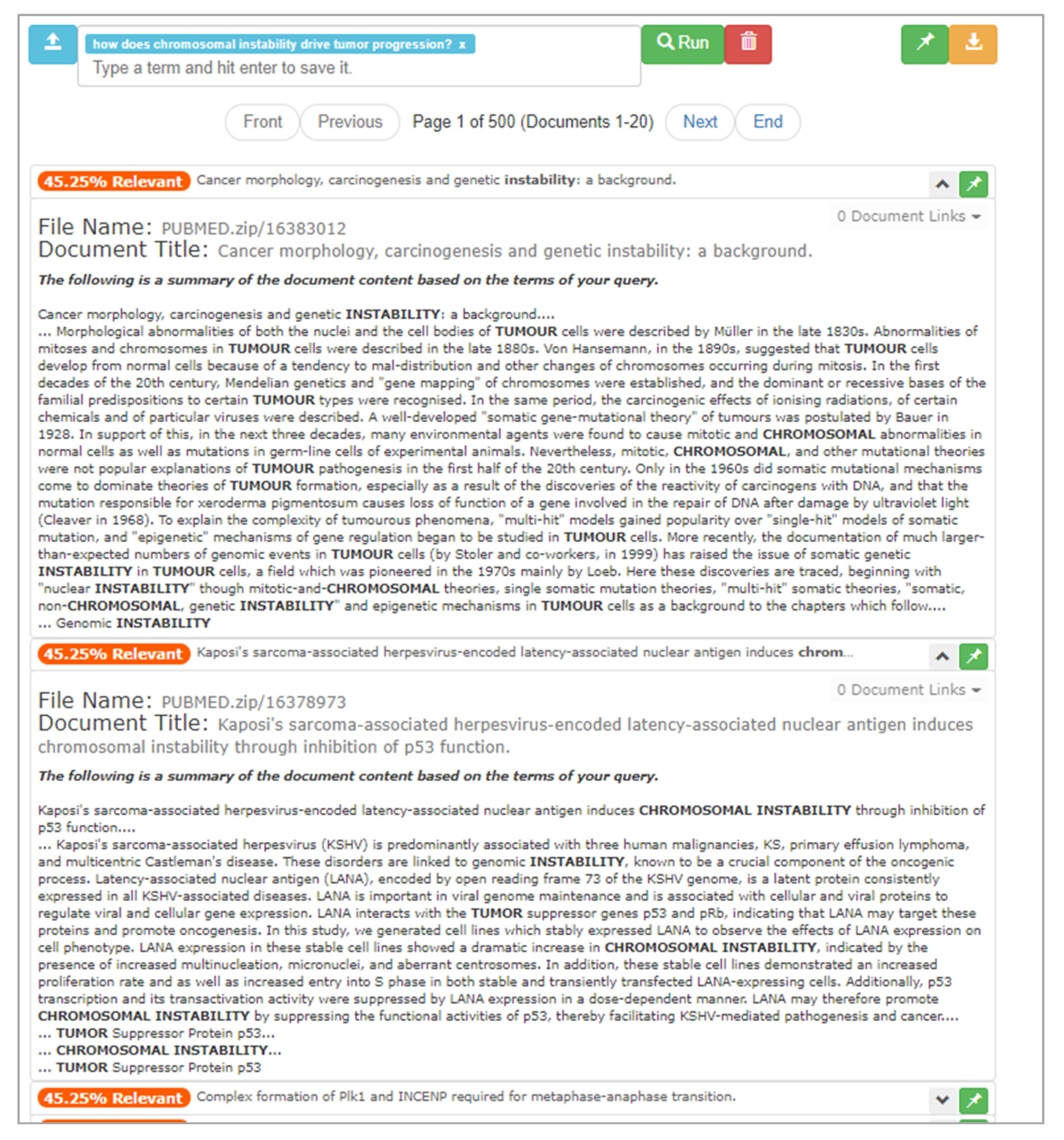

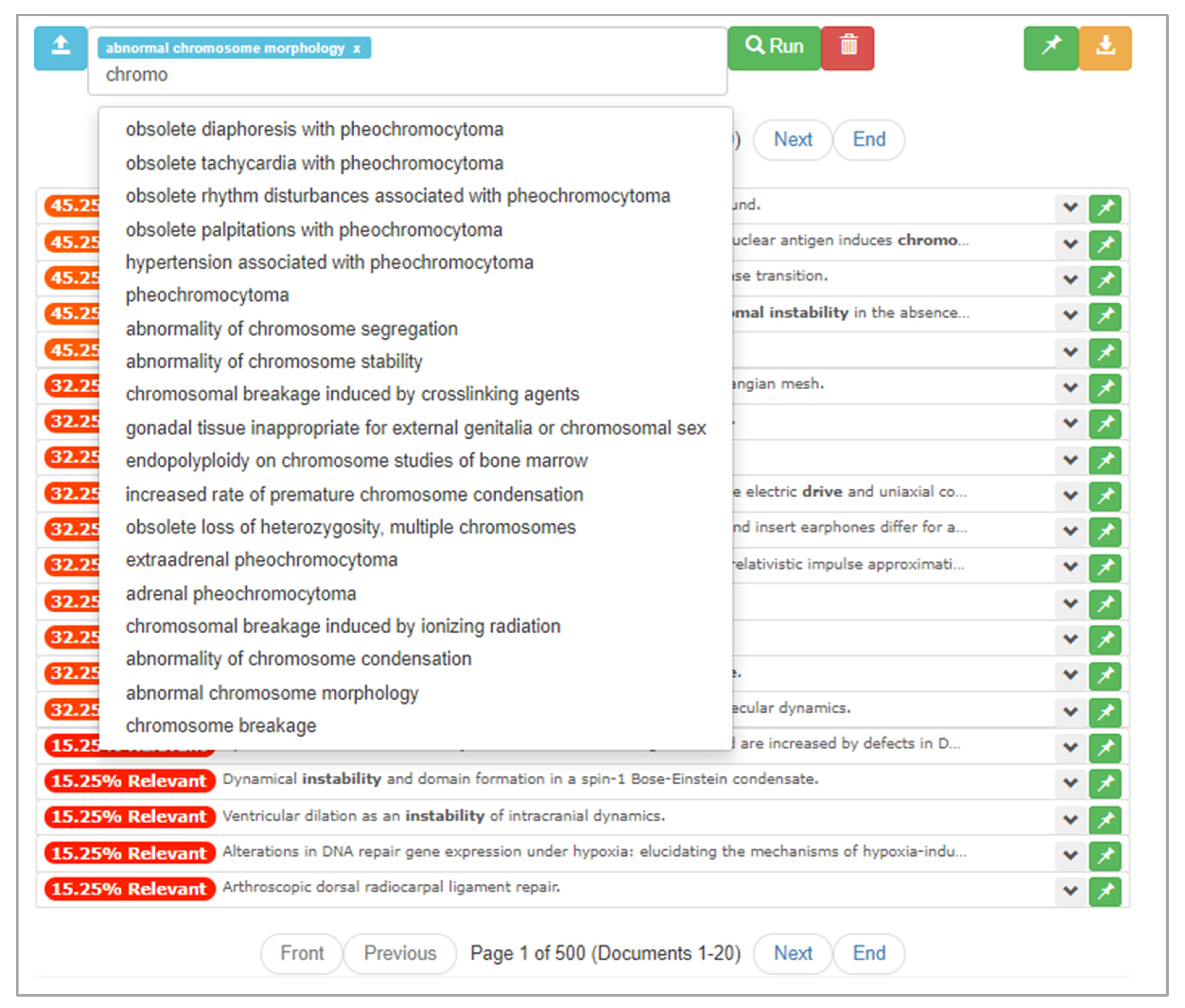

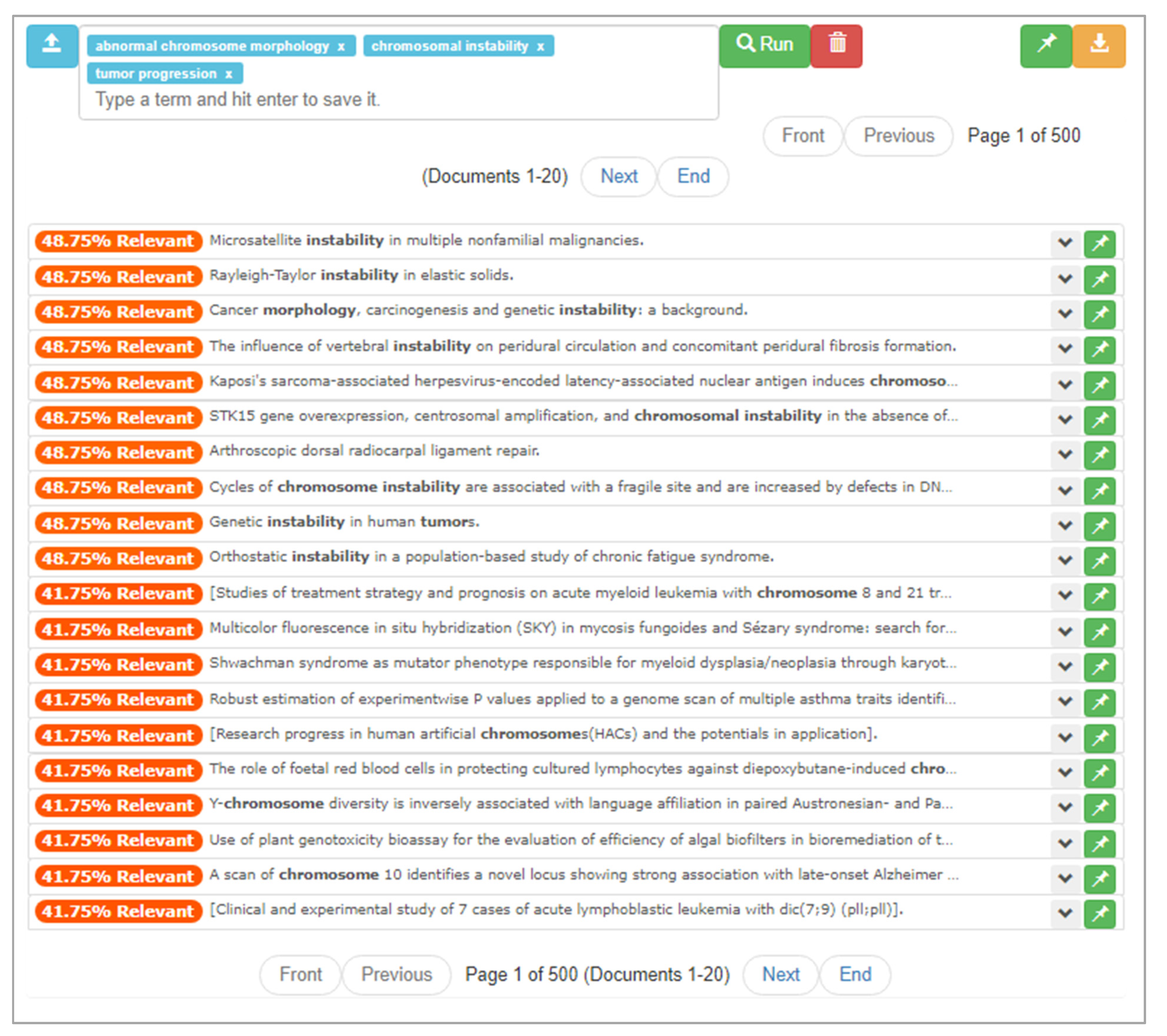

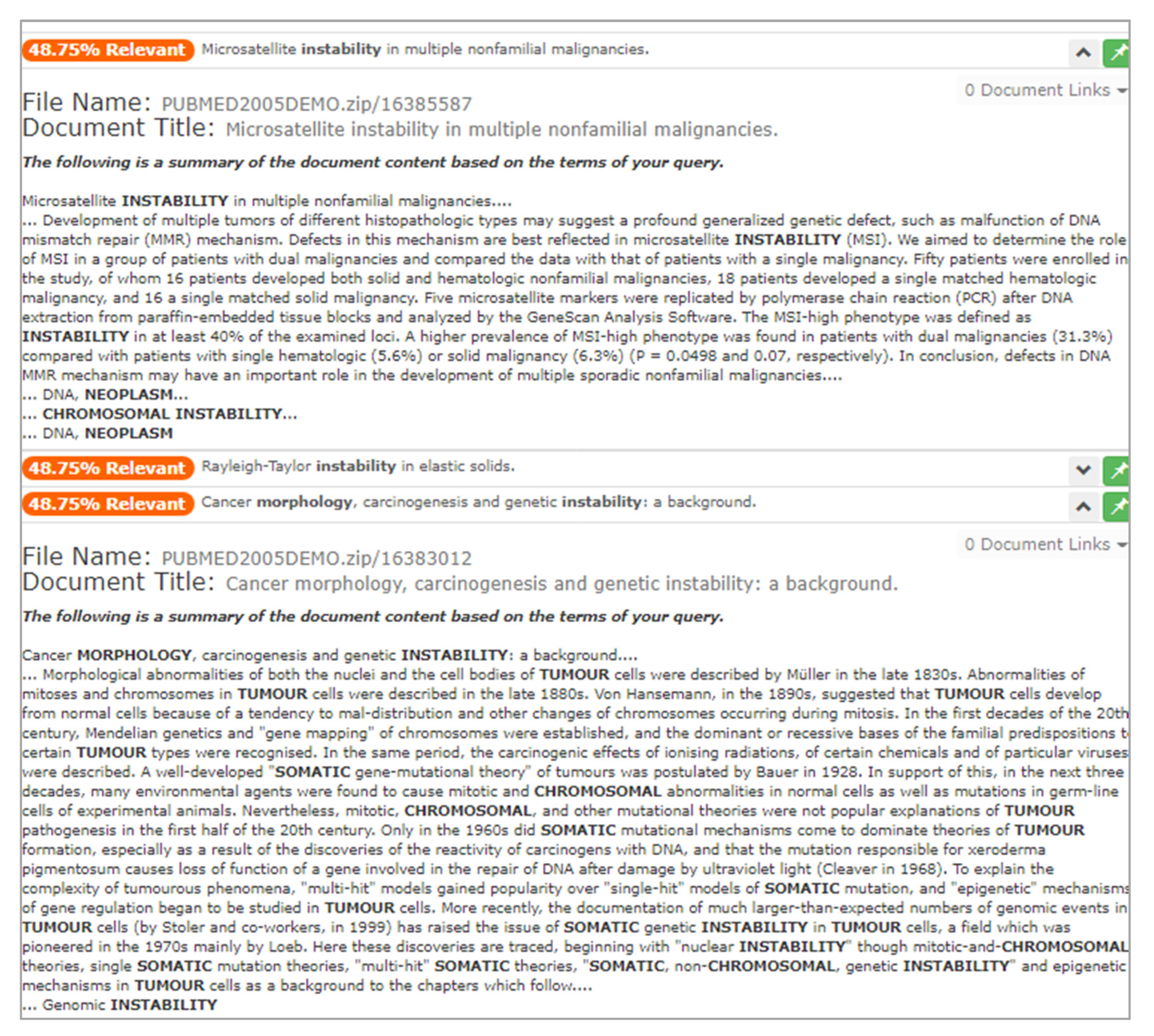

===Technical scope=== | |||