Title: Directions in Laboratory Systems: One Person's Perspective

Author for citation: Joe Liscouski, with editorial modifications by Shawn Douglas

License for content: Creative Commons Attribution-ShareAlike 4.0 International

Publication date: November 2021

Introduction

The purpose of this work is to provide one person's perspective on planning for the use of computer systems in the laboratory, and with it a means of developing a direction for the future. Rather than concentrating on “science first, support systems second,” it reverses that order, recommending the construction of a solid support structure before populating the lab with systems and processes that produce knowledge, information, and data (K/I/D).

Intended audience

This material is intended for those working in laboratories of all types. The biggest benefit will come to those working in startup labs since they have a clean slate to work with, as well as those freshly entering into scientific work as it will help them understand the roles of various systems. Those working in existing labs will also benefit by seeing a different perspective than they may be used to, giving them an alternative path for evaluating their current structure and how they might adjust it to improve operations.

However, all labs in a given industry can benefit from this guide since one of its key points is the development of industry-wide guidelines for solving technology management and planning issues, improving personnel development, and more effectively addressing common projects in automation, instrument communications, and vendor relationships (resulting in lower costs and higher success rates). This would also provide a basis for evaluating new technologies (reducing risks to early adopters) and fostering product development with the necessary product requirements in a particular industry.

About the content

This material follows in the footsteps of more than 15 years of writing and presentation on the topic. That writing and presentation—compiled here—includes:

- Are You a Laboratory Automation Engineer? (2006)

- Elements of Laboratory Technology Management (2014)

- A Guide for Management: Successfully Applying Laboratory Systems to Your Organization's Work (2018)

- Laboratory Technology Management & Planning (2019)

- Notes on Instrument Data Systems (2020)

- Laboratory Technology Planning and Management: The Practice of Laboratory Systems Engineering (2020)

- Considerations in the Automation of Laboratory Procedures (2021)

- The Application of Informatics to Scientific Work: Laboratory Informatics for Newbies (2021)

While that material covers some of the “where do we go from here” discussions, I want to bring a lot of it together in one spot so that we can see what the entire picture looks like, while still leaving some of the details to the titles above. Admittedly, there have been some changes in thinking over time from what was presented in those pieces. For example, the concept of "laboratory automation engineering" has morphed into "laboratory systems engineering," given that in the past 15 years the scope of laboratory automation and computing has broadened significantly. Additionally, references to "scientific manufacturing" are now replaced with "scientific production," since laboratories tend to produce ideas, knowledge, results, information, and data, not tangible widgets. And as the state of laboratories continues to evolve, more changes will likely come.

Of special note are 2019's Laboratory Technology Management & Planning webinars, which provide additional useful background for what is covered in this guide.

Looking forward and back: Where do we begin?

The "laboratory of the future" and laboratory systems engineering

The “laboratory of the future” (LOF) makes for an interesting playground of concepts. People's view of the LOF is often colored by their commercial and research interests. Does the future mean tomorrow, next month, six years, or twenty years from now? In reality, it means all of those time spans coupled with the length of a person's tenure in the lab, and the legacy they want to leave behind.

However, with those varied time spans we’ll need flexibility and adaptability for managing data and information while also preserving access and utility for the products of lab work, and that requires organization and planning. Laboratory equipment will change and storage media and data formats will evolve. The instrumentation used to collect data and information will change, and so will the computers and software applications that manage that data and information. Every resource expended in executing lab work has gone toward developing knowledge, information, and data (K/I/D). How are you going to meet that management challenge and retain the expected return on investment (ROI)? Answering that question will be one of the hallmarks of the LOF. It will require a deliberate plan that touches on every aspect of lab work: people, equipment and systems choices, and relationships with vendors and information technology support groups. Some points reside within the lab while others require coordination with corporate groups, particularly when we address long-term storage, ease of access, and security (both physical and electronic).

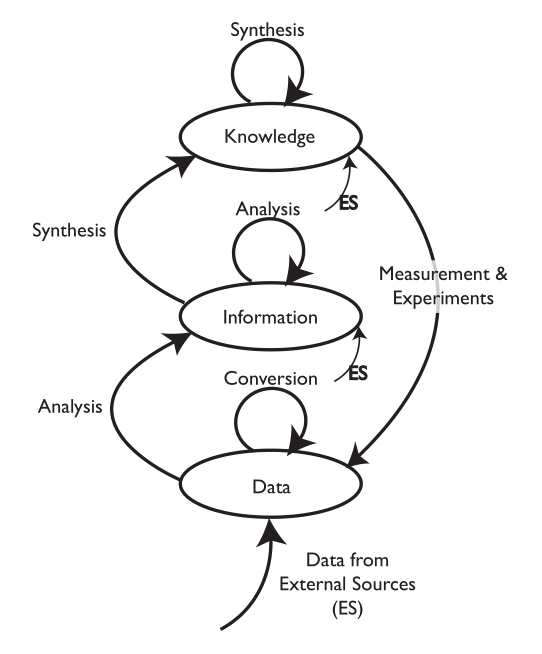

During discussions of the LOF, some people focus on the technology behind the instruments and techniques used in lab work, and that technology will continue to impress us with its sophistication. However, the bottom line of those conversations is the technology's ability to produce results: K/I/D.

Modern laboratory work is a merger of science and information technology. Some of the information technology is built into instruments and equipment; the remainder supports those devices or helps manage operations. That technology needs to be understood, planned, and engineered into smoothly functioning systems if labs are to function at a high level of performance.

Given all that, how do we prepare for the LOF, whatever that future turns out to be? One step is the development of “laboratory systems engineering” as a means of bringing structure and discipline to the application of informatics, robotics, and automation to lab systems.

But who is the laboratory systems engineer (LSE)? The LSE is someone able to understand and be conversant in both the laboratory science and IT worlds, relating them to each other to the benefit of lab operation effectiveness while guiding IT in performing their roles. A fully dedicated LSE will understand a number of important principles:

- Knowledge, information, and data should always be protected, available, and usable.

- Data integrity is paramount.

- Systems and their underlying components should be supportable, meaning they are proven to meet users' needs (validated), capable of being modified without causing conflicts with results produced by previous versions, documented, upgradable (without major disruption to lab operations), and able to survive upgrades in connected systems.

- Systems should be integrated into lab operations and not exist as isolated entities, unless there are overriding concerns.

- Systems should be portable, meaning they are able to be relocated and installed where appropriate, and not restricted to a specific combination of hardware and/or software that can’t be duplicated.

- There should be a smooth, reproducible (bi-directional, if appropriate), error-free (including error detection and correction) flow of results, from data generation to the point of use or need.
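The last principle above mentions error detection in the flow of results. As a minimal sketch of what that can look like in practice, the Python example below attaches a SHA-256 digest to a result before it leaves the generating system and checks that digest on arrival. The function names (`package_result`, `unpack_result`) and the record fields are hypothetical, chosen only for illustration. The sketch covers detection of accidental corruption only; correction (for example, requesting retransmission) and protection against deliberate tampering (which would call for a keyed signature) are outside its scope.

```python
import hashlib
import json


def package_result(result: dict) -> dict:
    """Wrap a result with a SHA-256 digest computed over a canonical JSON form."""
    # Sorting keys and fixing separators gives the same bytes for the same data.
    payload = json.dumps(result, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return {"payload": payload, "sha256": digest}


def unpack_result(package: dict) -> dict:
    """Return the result if the digest matches; raise ValueError otherwise."""
    actual = hashlib.sha256(package["payload"].encode("utf-8")).hexdigest()
    if actual != package["sha256"]:
        raise ValueError("result payload failed integrity check")
    return json.loads(package["payload"])


if __name__ == "__main__":
    # Hypothetical record: field names are illustrative, not a standard.
    pkg = package_result({"sample_id": "S-001", "ph": 7.02})
    print(unpack_result(pkg))
```

The receiving system calls `unpack_result` before storing anything, so a corrupted transfer is rejected rather than silently recorded.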

But how did we get here?

The primary purpose of laboratory work is developing and carrying out scientific methods and experiments, which are used to answer questions. We don’t want to lose sight of that, or the skill needed to do that work. Initially the work was done manually, which inevitably limited the amount of data and information that could be produced, and in turn the rate at which new knowledge could be developed and distributed.

However, the introduction of electronic instruments changed that, and the problem shifted from data and information production to data and information utilization (including the development of new knowledge), distribution, and management. That’s where we are today.

Science plays a role in the production of data and information, as well as the development of knowledge. In between we have the tools used in data and information collection, storage, analysis, etc. That’s what we’ll be talking about in this document. The equipment and concepts we’re concerned with here are the tools used to assist in conducting that work and working with the results. They are enablers and amplifiers of lab processes. As in almost any application, the right tools used well are an asset.

One problem with the distinction between science and informatics tools is that lab personnel understand the science, but they largely don't understand the intricacies of information and computing technologies that comprise the tools they use to facilitate their work. Laboratory personnel are educated in a variety of scientific disciplines, each having its own body of knowledge and practices, each requiring specialization. Shouldn't the same apply to the expertise needed to address the “tools”? On one hand we have chromatographers, spectroscopists, physical chemists, toxicologists, etc., and on the other roboticists, database experts, network specialists, and so on. In today's reality these are not IT specialists but rather LSEs who understand how to apply the IT tools to lab work with all the nuances and details needed to be successful.

Moving forward

If we are going to advance laboratory science and its practice, we need the right complement of experienced people. Addressing laboratory- and organization-wide productivity requires a different perspective: rather than asking how we improve things at the bench, ask how we improve the processes and the organization.

This guide is about long-term planning for lab automation and K/I/D management. The material in this guide is structured in sections, and each section starts with a summary, so you can read the summary and decide if you want more detail. However, before you toss it on the “later” pile, read the next few bits and then decide what to do.

Long-term planning is essential to organizational success. The longer you put it off, the more expensive it will be, the longer it will take to do it, and the more entrenched behaviors you'll have to overcome. Additionally, you won’t be in a position to take advantage of the developing results.

It’s time to get past the politics and the inertia and move forward. Someone has to take the lead on this, full-time or part-time, depending on the size of your organization (i.e., if there's more than one lab, know that it affects all of them). Leadership should be from the lab side, not the IT side, as IT people may not have the backgrounds needed and may view everything through their organization's priorities. (However, their support will be necessary for a successful outcome.)

The work conducted on the lab bench produces data and information. That is the start of realizing the benefits from research and testing work. The rest depends upon your ability to work with that data and information, which in turn depends on how well your data systems are organized and managed. This culminates in maximizing benefit at the least cost, i.e., ROI. It’s important to you, and it’s important to your organization.

Planning has to be done on at least four levels:

- Industry-wide (e.g., biotech, mining, electronics, cosmetics, food and beverage, plastics, etc.)

- Within your organization

- Within your lab

- Within your lab processes

One important aspect of this planning process (particularly at the top, industry-wide level) is the specification of a framework to coordinate product, process (methods), or standards research and development at the lower levels. This industry-wide framework is ideally not a “this is what you must do” mandate but rather a common structure that can be adapted to make the work easier. It also serves as a basis for approaching vendors for products and product modifications that will benefit those in your industry, giving the vendors confidence that the requests have broader market appeal. If an industry-wide approach isn’t feasible, then larger companies may group together to provide the needed leadership. Note, however, that this should not be perceived as an industry/company vs. vendor effort; rather, this is an industry/company working with vendors. The idea of a large group effort is to demonstrate a consensus viewpoint and to assure vendors that their development efforts won’t be in vain.

The development of this framework, among other things, should cover:

- Informatics

- Communications (networking, instrument control and data, informatics control and data, etc.)

- Physical security (including power)

- Data integrity and security

- Cybersecurity

- The FAIR principles (the findability, accessibility, interoperability, and reusability of data[2])

- The application of cloud and virtualization technologies

- Long-term usable access to lab information in databases without vendor controls (i.e., the impact of software as a service and other software subscription models)

- Bi-directional data interchange between archived instrument data in standardized formats and vendor software, requiring tamper-proof formats

- Instrument design for automation

- Sample storage management

- Guidance for automation

- Education for lab management and lab personnel

- The conversion of manual methods to semi- or fully automated systems

These topics affect both a lab's science personnel and its LSEs. While some topics will be of more interest to the engineers than the scientists, both groups have a stake in the results, as do any IT groups.

As digital systems become more entrenched in scientific work, we may need to restructure our thinking from “lab bench” and “informatics” to “data and information sources” and “digital tools for working, organizing, and managing those elements.” Data and information sources can extend to third-party labs and other published material. We have to move from digital systems causing incremental improvements (today’s approach) to a true revolutionary restructuring of how science is conducted.

Laboratory computing

Key point: Laboratory systems are planned, designed, and engineered. They are not simply a collection of components. Laboratory computing is a transformational technology, one which has yet to fully emerge, in large part because those who work in laboratories with computing aren’t sufficiently educated about it to take full advantage of it.

Laboratory computing has always been viewed as an "add-on" to traditional laboratory work. These add-ons have the potential to improve our work, make it faster, and make it more productive (see Appendix 1 for more details).

The common point-of-view in the discussion of lab computing has been focused on the laboratory scientist or manager, with IT providing a supporting role. That isn’t the only viewpoint available to us, however. Another viewpoint is that of the laboratory systems engineer (LSE), who focuses on data and information flow. This latter viewpoint should compel us to reconsider the role of computing in the laboratory and the higher level needs of laboratory operations.

Why is this important? Data and information generation may represent the end of the lab bench process, but it’s just the beginning of its use in broader scientific work. The ability to take advantage of those elements in the scope of manufacturing and corporate research and development (R&D) is where the real value is realized. That requires planning for storage, access, and utilization over the long term.

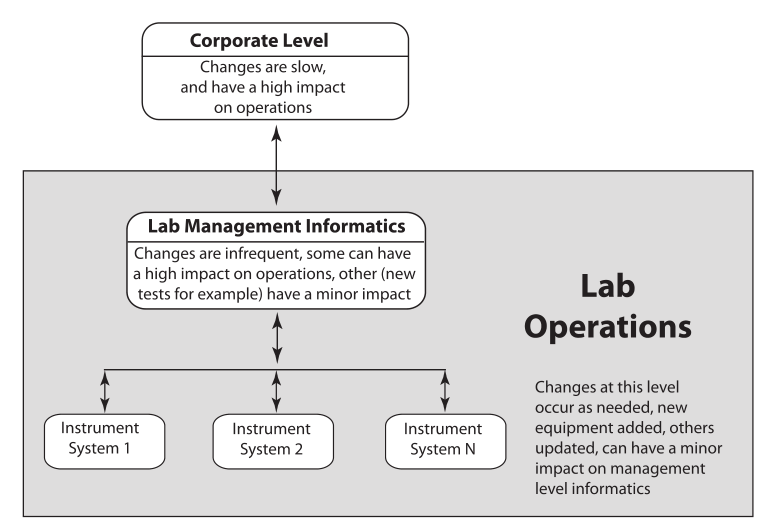

The problem with the traditional point-of-view (i.e., instrumentation first, with computing in a supporting role) is that the data and information landscape is built supporting the portion of lab work that is the most likely to change (Figure 2). You wind up building an information architecture to meet the requirements of diverse data structures instead of making that architecture part of the product purchase criteria. Systems are installed as needs develop, not as part of a pre-planned information architecture.

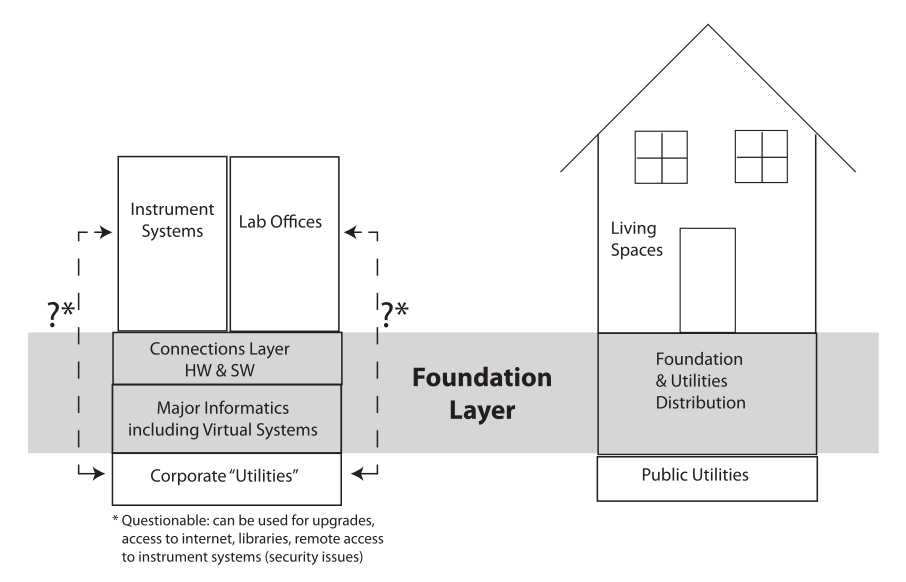

Designing an informatics architecture has some things in common with building a house. You create the foundation first, a supporting structure that everything sits on. Adding the framework sets up the primary living space, which can be modified as needed without disturbing the foundation (Figure 3). If you built the living space first and then wanted to install a foundation, you’d have a mess to deal with.

The same holds true with laboratory informatics. Set the foundation—the laboratory information management system (LIMS), electronic laboratory notebook (ELN), scientific data management system (SDMS), etc.—first, then add the data and information generation systems. That gives you a common set of requirements for making connections and can clarify some issues in product selection and systems integration. It may seem backwards if your focus is on data and information production, but as soon as you realize that you have to organize and manage those products, the benefits will be clear.

You might wonder how you go about setting up a LIMS, ELN, etc. before the instrumentation is set. However, it isn’t that much of a problem. You know why your lab is there and what kind of work you plan to do. That will guide you in setting up the foundation. The details of tests can be added as needed. Most of that depends on having your people educated in what the systems are and how to use them.

Our comparison between a building and information systems does bring up some additional points. A building's access to utilities runs through control points; water and electricity don’t come in from the public supply to each room but run through central control points that include a distribution system with safety and management features. We need the same thing in labs when it comes to network access. In our current society, access to private information for profit is a fact of life. While there are desirable features of lab systems available through network access (remote checking, access to libraries, updates, etc.), they should be controlled so that those with malicious intent are prevented access, and data and information are protected. Should instrument systems and office computers have access to corporate and external networks? That’s your decision and revolves around how you want your lab run, as well as other applicable corporate policies.

The connections layer in Figure 3 is where devices connect to each other and the major informatics layer. This layer includes two functions: basic networking capability and application-to-application transfer. Take for example moving pH measurements to a LIMS or ELN; this is where things can get very messy. You need to define what that is and what the standards are to ensure a well-managed system (more on that when we look at industry-wide guidelines).
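To make the pH example concrete, here is a minimal Python sketch of what an agreed application-to-application message might look like: a fixed set of fields, validated before anything is sent to the LIMS or ELN. Everything in it is an assumption made for illustration, including the class name `PhReading`, the field names, the message `type` and `version` values, and the 0 to 14 range check. It is not drawn from any existing standard or vendor interface.

```python
from dataclasses import dataclass, asdict
from datetime import datetime
import json


@dataclass(frozen=True)
class PhReading:
    """One pH measurement in a hypothetical, lab-defined exchange format."""
    sample_id: str
    instrument_id: str
    ph: float
    temperature_c: float
    measured_at: str  # ISO 8601 timestamp with UTC offset

    def __post_init__(self):
        if not self.sample_id or not self.instrument_id:
            raise ValueError("sample_id and instrument_id are required")
        # Assumed plausibility limit for this sketch; a real lab would set its own.
        if not 0.0 <= self.ph <= 14.0:
            raise ValueError(f"pH {self.ph} is outside the 0-14 range assumed here")
        # Raises ValueError if the timestamp is not valid ISO 8601.
        datetime.fromisoformat(self.measured_at)


def to_message(reading: PhReading) -> str:
    """Serialize a validated reading as JSON with an explicit type and version."""
    body = {"type": "ph_reading", "version": 1, **asdict(reading)}
    return json.dumps(body, sort_keys=True)


if __name__ == "__main__":
    reading = PhReading("S-001", "PH-METER-07", 7.02, 25.0,
                        "2021-11-13T10:15:00+00:00")
    print(to_message(reading))
```

Carrying an explicit version number in the message is one way to let the format change later without breaking systems that still expect the older layout.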

To complete the analogy, people do move the living space of a house from one foundation to another, often making for an interesting experience. Similarly, it’s also possible to change the informatics foundation from one product set to another. It means exporting the contents of the database(s) to a product-independent format and then importing into the new system. If you think this is something that might be in your future, make the ability to engage in that process part of the product selection criteria. Like moving a house, it isn’t going to be fun.[3][4][5] The same holds true for ELN and SDMS.
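The export-then-import step described above can be illustrated with a small Python sketch. It uses SQLite (from the standard library) as a stand-in for both the old and new systems and JSON as the product-independent format. The table layout and the function names `export_table` and `import_table` are hypothetical. A real migration involves far more (audit trails, attachments, binary data, validation of the migrated records), so this shows only the basic shape of the process.

```python
import json
import sqlite3


def export_table(conn, table):
    """Dump one table to a JSON string holding column names and rows."""
    # Table names cannot be bound as SQL parameters, so check the name first.
    if not table.isidentifier():
        raise ValueError(f"unexpected table name: {table!r}")
    cur = conn.execute(f"SELECT * FROM {table}")
    columns = [d[0] for d in cur.description]
    rows = [list(row) for row in cur.fetchall()]
    return json.dumps({"table": table, "columns": columns, "rows": rows})


def import_table(conn, document):
    """Load rows from a JSON export into an existing table with matching columns."""
    data = json.loads(document)
    table, columns = data["table"], data["columns"]
    if not table.isidentifier() or not all(c.isidentifier() for c in columns):
        raise ValueError("unexpected table or column name in export")
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    with conn:  # commit on success, roll back on error
        conn.executemany(sql, data["rows"])
    return len(data["rows"])


if __name__ == "__main__":
    schema = "CREATE TABLE results (sample_id TEXT, test TEXT, value REAL)"
    old = sqlite3.connect(":memory:")  # stands in for the outgoing system
    old.execute(schema)
    old.execute("INSERT INTO results VALUES (?, ?, ?)", ("S-001", "pH", 7.02))
    new = sqlite3.connect(":memory:")  # stands in for the incoming system
    new.execute(schema)
    print(import_table(new, export_table(old, "results")), "row(s) migrated")
```

The intermediate JSON document is the part worth keeping: it can be archived and inspected independently of either product.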

How automation affects people's work in the lab

Key point: There are two basic ways lab personnel can approach computing: it’s a black box that they don’t understand but is used as part of their work, or they are fully aware of the equipment's capabilities and limitations and know how to use it to its fullest benefit.

While lab personnel may be fully educated in the science behind their work, the role of computing—from pH meters to multi-instrument data systems—may be viewed with a lack of understanding. That is a significant problem because they are responsible for the results that those systems produce, and they may not be aware of what happens to the signals from the instruments, where the limitations lie, and what can turn a well-executed procedure into junk because an instrument or computer setting wasn’t properly evaluated and used.

In reality, automation has both a technical impact on their work and an impact on themselves. These are outlined below.

Technical impact on work:

- It can make routine work easier and more productive, reducing costs and improving ROI (more on that below).

- It can allow work to be performed that might otherwise be too expensive to entertain. There are techniques such as high-throughput screening and statistical experimental design that are useful in laboratory work but might be avoided because the effort of generating the needed data is too labor-intensive and time-consuming. Automated systems can relieve that problem and produce the volumes of data those techniques require.

- It can improve accuracy and reproducibility. Automated systems, properly designed and implemented, are inherently more reproducible than a corresponding manual system.

- It can increase safety by limiting people's exposure to hazardous situations and materials.

- It can also be a financial hole if planning and engineering aren’t properly applied to a project. “Scope creep,” changes in direction, and changes in project requirements and personnel are key reasons that projects are delayed or fail.

Impact on the personnel themselves:

- It can increase technical specialization, potentially improving work opportunities and people's job satisfaction. Having people move into a new technology area gives them an opportunity to grow both personally and professionally.

- Full automation of a process can cause some jobs to end, or at least change them significantly (more on that below).

- It can elevate routine work to more significant supervisory roles.

Most of these impacts are straightforward to understand, but several require further elaboration.

It can make routine work easier and more productive, reducing costs and improving ROI

This sounds like a standard marketing pitch; is there any evidence to support it? In the 1980s, clinical chemistry labs were faced with a problem: the cost for their services was set on an annual basis without any adjustments permitted for rising costs during that period. If costs rose, income dropped; the more testing they did the worse the problem became. They addressed this problem as a community, and that was a key factor in their success. Clinical chemistry labs do testing on materials taken from people and animals and run standardized tests. This is the kind of environment that automation was created for, and they, as a community, embarked on a total laboratory automation (TLA) program. That program had a number of factors: education, standardization of equipment (the tests were standardized so the vendors knew exactly what they needed in equipment capabilities), and the development of instrument and computer communications protocols that enabled the transfer of data and information between devices (application to application).

Abbreviations, acronyms, and initialisms

ELN: Electronic laboratory notebook

K/I/D: Knowledge, information, and data

LIMS: Laboratory information management system

LOF: Laboratory of the future

LSE: Laboratory systems engineer

ROI: Return on investment

SDMS: Scientific data management system

TLA: Total laboratory automation

About the author

Initially educated as a chemist, author Joe Liscouski (joe dot liscouski at gmail dot com) is an experienced laboratory automation/computing professional with over forty years of experience in the field, including the design and development of automation systems (both custom and commercial systems), LIMS, robotics, and data interchange standards. He also consults on the use of computing in laboratory work. He has held symposia on validation and presented technical material and short courses on laboratory automation and computing in the U.S., Europe, and Japan. He has worked/consulted in pharmaceutical, biotech, polymer, medical, and government laboratories. His current work centers on working with companies to establish planning programs for lab systems, developing effective support groups, and helping people with the application of automation and information technologies in research and quality control environments.

References

- [1] Liscouski, J. (2015). Computerized Systems in the Modern Laboratory: A Practical Guide. PDA/DHI. pp. 432. ASIN B010EWO06S. ISBN 978-1933722863.

- [2] Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J. et al. (2016). "The FAIR Guiding Principles for scientific data management and stewardship". Scientific Data 3: 160018. doi:10.1038/sdata.2016.18. PMC PMC4792175. PMID 26978244. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4792175.

- [3] Fish, M.; Minicuci, D. (2005). "Overcoming the Challenges of a LIMS Migration" (PDF). Research & Development 47 (2). http://apps.thermoscientific.com/media/SID/Informatics/PDF/Article-Overcoming-the-Challanges.pdf.

- [4] Fish, M.; Minicuci, D. (1 April 2013). "Overcoming daunting business challenges of a LIMS migration". Scientist Live. https://www.scientistlive.com/content/overcoming-daunting-business-challenges-lims-migration.

- [5] "Overcoming the Challenges of Legacy Data Migration". FreeLIMS.org. CloudLIMS.com, LLC. 29 June 2018. https://freelims.org/blog/legacy-data-migration-to-lims.html. Retrieved 13 November 2021.