Difference between revisions of "LII:Organizational Memory and Laboratory Knowledge Management: Its Impact on Laboratory Information Flow and Electronic Notebooks"



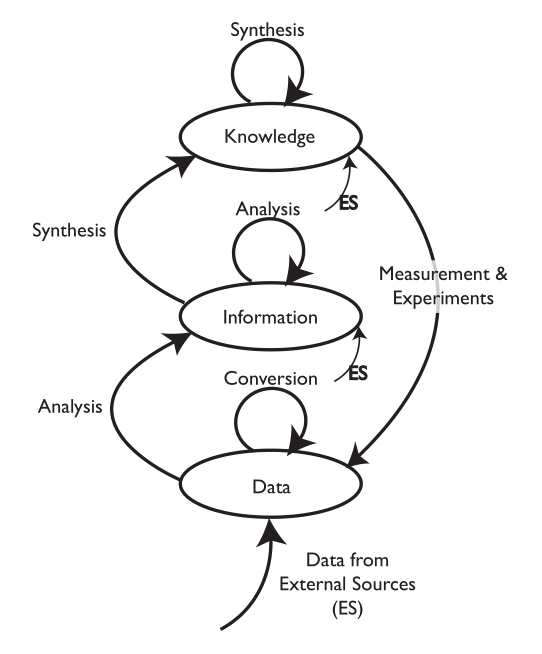

Figure 1. Basic K/I/D model. Databases for knowledge, information, and data (K/I/D) are represented as ovals, and the processes acting on them as arrows. A more detailed description of this model appeared originally in Computerized Systems in the Modern Laboratory: A Practical Guide, though a slightly modified version of that is included in the Supplementary information as Attachment 1.

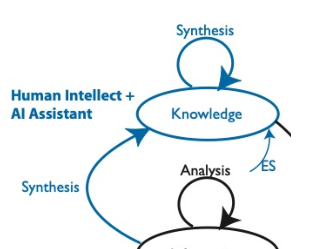

Figure 2. Top of the K/I/D model. The portion of the diagram in blue shows the process role of the AI-driven organizational memory. Bringing in information from external sources (ES) requires careful control over access privileges and security.


Revision as of 19:56, 10 April 2024

Title: Organizational Memory and Laboratory Knowledge Management: Its Impact on Laboratory Information Flow and Electronic Notebooks

Author for citation: Joe Liscouski, with editorial modifications by Shawn Douglas

License for content: Creative Commons Attribution-ShareAlike 4.0 International

Publication date: April 2024

This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed.

Introduction

Beginning in the 1960s, the application of computing to laboratory work was focused on productivity: the reduction of the amount of work and cost needed to generate results, along with an improvement in the return on investment (ROI). This was very much a bottom-up approach, addressing the most labor-intensive issues and moving progressively to higher levels of data and information processing and productivity.

The efforts began with work at Perkin-Elmer, Nelson Analytical, Spectra Physics, Digital Equipment Corporation, and many others on the computer-controlled recording and processing of instrument data. Once we learned how to acquire the data, robotic tools were introduced to help process samples and make them ready for introduction into instruments, which, combined with a computer connection for data acquisition, further increased productivity. That was followed by an emphasis on the storage, management, and analysis of that data through the application of laboratory information management systems (LIMS) and other software. With the recent development of artificial intelligence (AI) systems and large language models (LLMs), we are ready to consider the next stage in automation and systems application: organizational memory and laboratory knowledge management.

This piece discusses the convergence of a set of technologies and their application to scientific work. The development of software systems like ChatGPT, Gemini, and others[1] means that with a bit of effort the ROI in research and testing can be greatly improved.

The initial interest discussed herein is on the topic of using LLMs to create an effective organizational memory (OM) and how that OM can benefit scientific organizations. Following that, we'll then examine how that potential technology impacts information flow, integration, and productivity, as well as what it could mean for developing electronic laboratory notebooks (ELNs). We’ll also have to extend that discussion to having AI and OM systems work with LIMS, scientific data management systems (SDMS), instrument data systems (IDSs), engineering tools, and field work found in various industries.

This work is not a "how to acquire and implement" article but rather a prompt for "something to think about and pursue" if it makes sense within your organization. The idea is the creation of an effective OM (i.e., an extensive document and information database) that fills a gap in scientific and laboratory informatics[a], one that can be used effectively with an AI tool to search, organize, synthesize, and present material in an immediately applicable way. We need to seriously think about what we want from these systems and what our requirements are for them before the rapid pace of development produces products that need extensive modifications to be useful in scientific, laboratory, field, and engineering work.

Why should you read this?

Most of the products used in scientific work (whether in the lab, field, office, etc.) are designed for a specific application (working with instruments, for example) or adapted from general-purpose tools used in various industries and settings. The ideas discussed here need further development, as do the tools specifically for the needs of the scientific community. Still, that work needs to begin as a community effort to gain possible benefits. We need to guide the development of technologies so that they meet the needs of the scientific community rather than try to adapt them once they are delivered to the general marketplace.

LLM systems have shown rapid development and deployment in almost every facet of industries throughout 2023. Unless something drastic happens, development will only accelerate, given the potential impact on business operations and interest of technology-driven companies. The scientific community needs to not only ensure its unique needs (once they’ve been defined) are included in LLM development and are met, but also that the resultant output reflects empirical rigor.

Organizational memory

A researcher came into our analytical lab and asked about some results reported a few years earlier. One chemist recalled the project as well as the person in charge of that work, who had since left the company. The researcher thought he had a better approach to the problem being studied in the original work and was asked to investigate it. The bad news is that all the work, both analytical and previous research notes, was written into paper laboratory notebooks (1960s). Because of their age, they had left the library and were stored in banker’s boxes in a trailer in the parking lot. There, they were subject to water damage and rodents. Most of that material was unusable, and the investigation was dropped.

Many laboratories have similar stories to the above, lamenting the loss of knowledge within the overall organization due to poor knowledge management practices. Knowledge management has been a human activity for thousands of years, since the first pictographs were placed on cave walls. The technology being used, the amount of knowledge generated, and our ability to work with it have changed over many centuries. Today, the subject of organizational knowledge management has seen evolving interest as organizations have moved from disparate archives and libraries of physical documents to more organized "computer-based organizational memories"[2] where a higher level of productivity can be had.

Walsh and Ungson define organizational memory as "stored information from an organization's history that can be brought to bear on present decisions," with that information being "stored as a consequence of implementing decisions to which they refer, by individual recollections, and through shared interpretations."[2] Until recently, many electronic approaches to OM development have relied on document management systems (DMSs) with keyword indexing and local search engines for retrieval. While those are a start, we need more; search engines still rely too heavily on people to sort through their output to find and organize relevant material.

Recently, particularly in 2023, AI systems like the notable ChatGPT[3] have offered a means of searching, organizing, and presenting material in a form that requires little additional human effort. Initial versions have had several issues (e.g., "hallucinations," a tame way of saying the AI fabricates and falsifies data and information[4]), but as new models and tools are developed to better address these issues[5][6], sufficient improvement may be shown so that those AI systems eventually may deliver on their potential. Outside of ChatGPT, there are similar systems available (e.g., Microsoft Copilot and Google Gemini), and more are likely under development. Our intent is not to make a comparison since any effort will quickly become outdated.

Why are organizational memory systems important?

Research and development and supporting laboratory activities can be an expensive operation. ROI is one measure of the wisdom behind the investment in that work, which can be substantively affected by the informatics environment within the laboratory and the larger organization of which it is a part. We'll take a brief look at three approaches to OM systems: paper-based, electronic, and AI-driven systems.

1. Paper-based systems: Paper-based systems pose a high risk of knowledge loss. While paper notebooks are in active use, the user knows the contents and can find material quickly. However, once the notebook is filled and put first in a library and then in an archive, the memory of what is in it fades. Once the original contributor leaves his post (due to promotion, transfers, or outside employment), you’re left depending on someone's recall or brute force searching to retrieve the contents. The cost of using that paper-based work and trying to gain benefit from it increases significantly, and the benefit is questionable depending on the ability of the information to be found, understood, and put to use. All of this assumes that the material hasn’t been damaged or lost. Paper-based lab notebooks create a knowledge bottleneck. Digital solutions are needed for secure, long-term storage and efficient searchability of experimental data.

2. Electronic systems and search engines: Analytical and experimental reports, as well as other organizational documents, can be entered into a DMS with suitable keyword entries (i.e., metadata)[7], indexed, and searched via search engines local to the organization or lab. The problem with this approach is that you get a list of reference documents that must be reviewed manually to ferret out and organize relevant content, which is time-consuming and expensive. This work has to be prioritized along with other demands on people’s time. If a LIMS (whether a true LIMS or a LIMS-like spreadsheet implementation) or an SDMS is used, the search may not include material in these systems but may be limited to descriptions in reports. Until the advent of popularized AI in 2023, readily available capabilities faced limitations. Only organizations with substantial budgets and resources could independently pursue more comprehensive technologies.

3. AI-driven systems: Building upon electronic systems with query capability, we can use the stored documents to train and update an AI assistant (a special purpose variation of ChatGPT, Watsonx 5, or other AI, for example). Variations can be created that are limited to private material to provide data security, and later they may be extended to public documents on the internet with controls to avoid information leakage. Based on the material available to date and at least one user’s experience using ChatGPT v4, the results of a search question provided by the AI system were more comprehensive, better organized, and presented in a readable and useable fashion that made it immediately useful, instead of simply providing a starting point for further research work. One change noted from earlier AI models is a lower tendency to provide false references, and the references provided are seemingly more relevant, summarized, and accurate. (Note: Any information an AI provides should be checked for accuracy before use.) An additional benefit is that its incorporation becomes synergistic as more material is provided. Connecting an AI to a LIMS or SDMS would provide additional benefits. However, extreme care must be taken to prevent premature disclosure of results before they are signed off, and data security has to be a high priority.
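The keyword-indexed retrieval described in the second approach can be illustrated with a minimal sketch. It assumes each document in the DMS carries a list of keyword entries; the document IDs, keywords, and function names below are hypothetical and stand in for whatever a real DMS provides. Note that the result is only a list of matching document IDs, which is exactly the limitation described above: someone still has to read and organize the documents.

```python
from collections import defaultdict


def build_keyword_index(documents):
    """Map each lowercase keyword to the set of document IDs tagged with it."""
    index = defaultdict(set)
    for doc_id, keywords in documents.items():
        for keyword in keywords:
            index[keyword.lower()].add(doc_id)
    return index


def search(index, *terms):
    """Return the sorted IDs of documents tagged with every search term."""
    if not terms:
        return []
    # index.get avoids creating empty entries for unknown terms.
    matches = [index.get(term.lower(), set()) for term in terms]
    return sorted(set.intersection(*matches))


# Hypothetical document IDs and keyword entries, for illustration only.
documents = {
    "RPT-001": ["polymer", "viscosity", "stability"],
    "RPT-002": ["polymer", "tensile strength"],
    "RPT-003": ["solvent", "viscosity"],
}

index = build_keyword_index(documents)
hits = search(index, "Polymer", "viscosity")
print(hits)
```

An AI-driven layer would sit on top of a store like this, reading the matched documents and summarizing them rather than handing back the list.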

Examples of some of these OM system approaches include:

- NASA Lessons Learned: A publicly searchable "database of lessons learned from contributors across NASA and other organizations," containing "the official, reviewed learned lessons from NASA programs and projects"[8]

- Xerox's Eureka: A service technician database that is credited with improving service and reducing equipment downtime[9]

- Salesforce's Einstein and Service Cloud: An AI-driven database for customer service issues used to improve operations internally and externally[10]

There are likely many more examples of OM work going on in companies that is kept confidential. Large biopharma operations, for example, are expected to be working on methods of organizing and mining their extensive internal research databases.

Of the three approaches noted above, the last provides the best opportunity to increase the ROI on laboratory work. It reduces the amount of additional human effort needed to make use of lab results. Yet how it is implemented can make a significant difference in the results. There are several key benefits to implementing such an AI-driven systems approach:

- Such a system can capture and retain past work, putting it in an environment where its value or utility will continue to be enhanced by making it available to more sophisticated analysis methods, as they are developed, and more projects, as they become defined. This includes mitigating the effects of staff turnover (i.e., forgetting what had been done) and improving data organization. However, for this to be most effective, several steps must be taken. Digital repositories must be created on centralized databases where all lab reports, results, and presentations are stored. Additionally, any entered data should be governed by standardized formats and protocols to ensure consistency and retrievability. Finally, metadata tagging needs to be robust, allowing data and information to be tagged with keywords, project names, dates, etc. for easier searching and retrieval. (This metadata approach may be driven by initiatives such as the Dublin Core Metadata Initiative [DCMI].[7])

- Such a system can broaden the scope of material that can be used to analyze past and current work, drawing upon internal and external resources while enforcing proper security controls.

- Such a system has the ability to analyze or re-analyze past work. Working with the amount of data generated by laboratory work can be daunting. An AI organizational memory system should be capable of continuously analyzing incoming data and re-analyzing past data to gain new insights, particularly if it can access external data with appropriate security protocols. This would include the ability to notify researchers of relevant new findings (e.g., via RSS feeds and database integrations) or remind them of past work that could be applied to current projects.
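The metadata tagging step mentioned in the first benefit above might look something like the following sketch. The field names borrow a handful of Dublin Core element names (identifier, title, creator, date, type, subject); the choice of which fields are required, the validation rules, and the sample records are assumptions made for illustration, not a prescribed schema.

```python
import datetime
from dataclasses import dataclass


@dataclass(frozen=True)
class RecordMetadata:
    """A metadata record using a subset of Dublin Core element names."""
    identifier: str
    title: str
    creator: str
    date: datetime.date
    type: str
    subject: tuple = ()  # keywords, project names, etc.


# Assumed local protocol: these text fields must not be blank.
REQUIRED_TEXT_FIELDS = ("identifier", "title", "creator", "type")


def validate(record):
    """Return a list of problems; an empty list means the record passes."""
    problems = [
        f"missing {name}"
        for name in REQUIRED_TEXT_FIELDS
        if not getattr(record, name).strip()
    ]
    if not record.subject:
        problems.append("no subject keywords")
    return problems


def find_by_subject(records, keyword):
    """Return identifiers of records tagged with the keyword (case-insensitive)."""
    wanted = keyword.lower()
    return [
        r.identifier
        for r in records
        if wanted in (s.lower() for s in r.subject)
    ]


# Hypothetical records, for illustration only.
good = RecordMetadata(
    identifier="LAB-2024-0001",
    title="Monthly stability summary",
    creator="Analytical Lab",
    date=datetime.date(2024, 4, 10),
    type="Report",
    subject=("stability", "Project Alpha"),
)
bad = RecordMetadata(
    identifier="LAB-2024-0002",
    title="Untitled results",
    creator="",
    date=datetime.date(2024, 4, 11),
    type="Dataset",
)

print(validate(good))
print(validate(bad))
print(find_by_subject([good, bad], "project alpha"))
```

Rejecting incomplete records at entry time is one way to keep the repository consistent and retrievable as it grows.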

In addition, a well-designed OM system can improve:

- Knowledge retention: Organizations recognize that employee turnover is inevitable. When employees leave, they take their knowledge and experience with them. Organizational memory helps capture this invaluable tacit knowledge, ensuring that critical information, technical nuances, and expertise remain within the company.

- Efficiency and productivity: Having an accessible repository of past projects, decisions, and outcomes allows current employees to learn from previous successes and mistakes. This can significantly reduce redundant efforts, accelerate training, and improve decision-making processes.

- Innovation and competitive advantage: Companies can foster innovation by effectively utilizing past knowledge. Understanding historical context, past experiments, and the evolution of products or strategies can inspire new ideas and prevent reinvention of the wheel. This ongoing learning can be a significant competitive advantage, by reducing the risk of making uninformed or repetitive mistakes and by providing a deeper understanding of previous obstacles and potential solutions.

- Risk management: Organizational memory can play a crucial role in risk management. Companies can better anticipate and mitigate risks by maintaining records of past incidents, responses, and outcomes. This is particularly important in regulated industries with extensive compliance requirements.

- Cultural continuity: Organizational memory contributes to the building and preserving of institutional culture. Stories, successes, failures, and milestones form a narrative that helps inculcate values, mission, and vision among employees.

(Note: Some information in the previous two sets of bullet points was suggested by ChatGPT v4. While ChatGPT was used in the research phase of this piece, primarily for making inquiries about topics and testing ideas, the writing is the author’s effort and responsibility.)

The implementation of a modern OM system, particularly an AI-driven one, involves numerous considerations that should be addressed beforehand. One significant issue is the impact on personnel. What we are discussing is the development of a tool that can be used by researchers, scientists, and organizations to further their work and take advantage of past efforts. People are often possessive about their work even though they understand it belongs to those paying for its execution. They don't want their work released prematurely, nor do they want to feel that someone or something is watching their work as it develops. The development of a system needs to emphasize that this is a tool, perhaps a guide, but not an evaluator or potential replacement. Trust-building through shared ethical principles can facilitate collaboration among lab members.

Other considerations that should be made before implementing AI-driven OM systems include:

- Infrastructure: Establish robust IT infrastructure capable of handling large datasets with high levels of security and accessibility. Cooperation between laboratory or scientific personnel and IT support is needed concerning access to instrument database structures, LIMS, SDMS, etc. First, a choice must be made on what to include and how to go about it without compromising lab operations or integrity.

- Data governance: Develop a clear policy for data management, including quality control, privacy, and sharing protocols.

- AI integration: Choose and customize AI tools for data analysis, natural language processing (NLP), predictive analytics, etc., that suit the laboratory's specific needs.

- Training: Ensure staff are trained in the technical skills to use the system and understand the importance of data entry and curation. Lab personnel need to understand what is being done and why. Care must be taken to ensure that only reviewed and approved material is made available to the OM system so that premature release or the release of work in progress does not occur; this work may be updated over time and the organization will want to avoid the inclusion of out-of-date material. This should be done with the cooperation of lab personnel and not as part of a corporate mandate so that researchers and scientists maintain control over their work and ensure data integrity and governance.

- Continuous improvement: Regularly update the system with new data and continuously improve the AI models as more data is collected.

- Security: Unless care is taken, an AI system can expose confidential information to the outside world. Take measures to ensure that internal and external sources of information are separated and that internal sources are protected against intrusion and leaking.
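Two of the considerations above, admitting only reviewed and approved material and keeping internal and external sources separated, can be sketched as a simple gate in front of the OM system. The status values, source labels, and document IDs here are hypothetical; a real implementation would take them from the organization's own review workflow and security policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    status: str  # assumed values: "draft", "in_review", "approved", "superseded"
    source: str  # assumed values: "internal" or "external"


def select_for_om(documents):
    """Admit only approved documents, kept in separate collections by source."""
    collections = {"internal": [], "external": []}
    for doc in documents:
        if doc.status != "approved":
            # Drafts, work in progress, and out-of-date material stay out.
            continue
        if doc.source not in collections:
            raise ValueError(f"unknown source for {doc.doc_id}: {doc.source!r}")
        collections[doc.source].append(doc.doc_id)
    return collections


# Hypothetical documents, for illustration only.
candidates = [
    Document("RPT-010", "approved", "internal"),
    Document("RPT-011", "draft", "internal"),
    Document("EXT-001", "approved", "external"),
    Document("RPT-009", "superseded", "internal"),
]

admitted = select_for_om(candidates)
print(admitted)
```

Keeping the two collections apart makes it easier to apply different access controls to each, which is the separation the security item calls for.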

What information would we put into an OM system?

Put simply, everything could feasibly be included in such a system. This could include monthly reports, research reports, project plans, monthly summaries, test results, vendor proposals, hazardous materials records (including disposal information and health concerns such as caution statements, treatment for exposure, etc.), inventory records, production records, and anything else that might contain potentially useful information across the company. That will require a lot of organization, but what would it mean to have all that data and information continuously searchable by an intelligent assistant? Again, as noted previously, security is paramount. (Note that personnel information is intentionally omitted due to privacy and confidentiality issues.)

Organizational memory and scientific information flow

The introduction of laboratory informatics into scientific work is often on an "as needed" basis. An instrument is purchased, and, in most cases, it is either accompanied by an external computer or has one within it. Regardless, the end result is the same: a computer is in the lab, and the subject of scientific and laboratory informatics begins to take shape. As the work develops, more computerized equipment is put in place, and the informatics landscape grows. The point is that computer systems are set in place to support software tools to solve particular problems, such as data management, inventory management, etc., but these aren’t planned acquisitions that are designed to fit into a pre-described informatics architecture. If we are going to begin thinking in terms of OM and its effective advancement and use, an architecture is called for to make sure that the OM system is fed the materials it needs and that the AI component has material to work with. (Note: Our emphasis is going to be on the OM; the AI is just a tool for accessing, extracting, and working with the OM contents.)

Scientific and laboratory information flow

The basic flow model of a lab's knowledge, information, and data (K/I/D) is represented in Figure 1.


Figure 2 zooms into the top of that model and highlights the position of AI-driven OM within the greater K/I/D model.


Acknowledgements

I’d like to thank Gretchen Boria for her help in improving this article and her contributions to it.

Footnotes

- [a] By addressing both "scientific" and "laboratory," we recognize that not all scientific work occurs in a laboratory.

Supplementary information

- Attachment 1: Laboratory Informatics: Information and Workflows

- Attachment 2:

About the author

Initially educated as a chemist, author Joe Liscouski (joe dot liscouski at gmail dot com) is an experienced laboratory automation/computing professional with over forty years of experience in the field, including the design and development of automation systems (both custom and commercial systems), LIMS, robotics and data interchange standards. He also consults on the use of computing in laboratory work. He has held symposia on validation and presented technical material and short courses on laboratory automation and computing in the U.S., Europe, and Japan. He has worked/consulted in pharmaceutical, biotech, polymer, medical, and government laboratories. His current work centers on working with companies to establish planning programs for lab systems, developing effective support groups, and helping people with the application of automation and information technologies in research and quality control environments.

References

1. Malhotra, T. (30 January 2024). "This AI Paper Unveils the Future of MultiModal Large Language Models (MM-LLMs) – Understanding Their Evolution, Capabilities, and Impact on AI Research". Marktechpost. Marktechpost Media, LLC. https://www.marktechpost.com/2024/01/30/this-ai-paper-unveils-the-future-of-multimodal-large-language-models-mm-llms-understanding-their-evolution-capabilities-and-impact-on-ai-research/. Retrieved 10 April 2024.

2. Walsh, James P.; Ungson, Gerardo Rivera (1 January 1991). "Organizational Memory". The Academy of Management Review 16 (1): 57. doi:10.2307/258607. http://www.jstor.org/stable/258607?origin=crossref.

3. "ChatGPT 3.5". OpenAI OpCo, LLC. https://chat.openai.com/. Retrieved 10 April 2024.

4. Emsley, Robin (19 August 2023). "ChatGPT: these are not hallucinations – they’re fabrications and falsifications". Schizophrenia 9 (1): 52. doi:10.1038/s41537-023-00379-4. ISSN 2754-6993. PMC10439949. PMID 37598184. https://www.nature.com/articles/s41537-023-00379-4.

5. Fabbro, R. (29 March 2024). "Microsoft is apprehending AI hallucinations — and not just its own". Quartz. https://qz.com/microsoft-azure-ai-hallucinations-chatbots-1851374390. Retrieved 10 April 2024.

6. Maurin, N. (15 March 2024). "The bank quant who wants to stop gen AI hallucinating". Risk.net. https://www.risk.net/risk-management/7959062/the-bank-quant-who-wants-to-stop-gen-ai-hallucinating. Retrieved 10 April 2024.

7. "About DCMI". Association for Information Science and Technology. https://www.dublincore.org/about/. Retrieved 10 April 2024.

8. "NASA Lessons Learned". NASA. 26 July 2023. https://www.nasa.gov/nasa-lessons-learned/. Retrieved 10 April 2024.

9. Doyle, K. (22 January 2016). "Xerox’s Eureka: A 20-Year-Old Knowledge Management Platform That Still Performs". Field Service Digital. ServiceMax. https://fsd.servicemax.com/2016/01/22/xeroxs-eureka-20-year-old-knowledge-management-platform-still-performs/. Retrieved 10 April 2024.

10. Hiter, S. (9 April 2024). "Salesforce and AI: How Salesforce’s Einstein Transforms Sales". e-Week. https://www.eweek.com/artificial-intelligence/how-salesforce-drives-business-through-ai/. Retrieved 11 April 2024.