
|title_full = Kadi4Mat: A research data infrastructure for materials science

|journal = ''Data Science Journal''

|authors = Brandt, Nico; Griem, Lars; Herrmann, Christoph; Schoof, Ephraim; Tosato, Giovanna; Zhao, Yinghan;<br />Zschumme, Philipp; Selzer, Michael

|affiliations = Karlsruhe Institute of Technology, Karlsruhe University of Applied Sciences, Helmholtz Institute Ulm

|contact = Email: nico dot brandt at kit dot edu


|download = [https://datascience.codata.org/articles/10.5334/dsj-2021-008/galley/1048/download/ https://datascience.codata.org/articles/10.5334/dsj-2021-008/galley/1048/download/] (PDF)

}}

==Abstract==

The concepts and current developments of a research data infrastructure for [[Materials informatics|materials science]] are presented, extending and combining the features of an [[electronic laboratory notebook]] (ELN) and a repository. The objective of this infrastructure is to combine structured data storage and data exchange with documented and reproducible [[data analysis]] and [[Data visualization|visualization]], ultimately leading to the publication of the data. In this way, researchers can be supported throughout the entire research process. The software is being developed as a web-based and desktop-based system, offering both a graphical user interface (GUI) and a programmatic interface. The focus of the development is on the integration of technologies and systems based on both established and new concepts. Due to the heterogeneous nature of materials science data, the current features are kept mostly generic, and the structuring of the data is largely left to the users. As a result, the research data infrastructure can be extended to other disciplines in the future. The source code of the project is publicly available under a permissive Apache 2.0 license.


==Introduction==

In the engineering sciences, the handling of digital research data plays an increasingly important role in all fields of application.<ref name="SandfeldStrateg18">{{cite web |url=https://www.tib.eu/en/search/id/TIBKAT%3A1028913559/ |title=Strategiepapier - Digitale Transformation in der Materialwissenschaft und Werkstofftechnik |author=Sandfeld, S.; Dahmen, T.; Fischer, F.O.R. et al. |publisher=Deutsche Gesellschaft für Materialkunde e.V |date=2018}}</ref> This is due in particular to the growing amount of data obtained from experiments and simulations.<ref name="HeyTheData03">{{cite book |chapter=Chapter 36: The Data Deluge: An e-Science Perspective |title=Grid Computing: Making the Global Infrastructure a Reality |author=Hey, T.; Trefethen, A. |editor=Berman, F.; Fox, G.; Hey, T. |publisher=John Wiley & Sons, Ltd |year=2003 |isbn=9780470867167 |doi=10.1002/0470867167.ch36}}</ref> The extraction of knowledge from these data is referred to as a data-driven, fourth paradigm of science, filed under the keyword "data science."<ref name="HeyTheFourth09">{{cite book |title=The Fourth Paradigm: Data-Intensive Scientific Discovery |author=Hey, T.; Tansley, S.; Tolle, K. |publisher=Microsoft Research |year=2009 |isbn=9780982544204 |url=https://www.microsoft.com/en-us/research/publication/fourth-paradigm-data-intensive-scientific-discovery/}}</ref> This is particularly true in [[Materials informatics|materials science]], as the research and understanding of new materials are becoming more and more complex.<ref name="HillMaterials16">{{cite journal |title=Materials science with large-scale data and informatics: Unlocking new opportunities |journal=MRS Bulletin |author=Hill, J.; Mulholland, G.; Persson, K. et al. |volume=41 |issue=5 |pages=399–409 |year=2016 |doi=10.1557/mrs.2016.93}}</ref> Without suitable [[Data analysis|analysis]] methods, the ever-growing amount of data will no longer be manageable. In order to perform appropriate data analyses smoothly, the structured storage of research data and associated [[metadata]] is an important prerequisite. Specifically, uniform research [[Information management|data management]] is needed, which is made possible by appropriate infrastructures such as research data repositories. In addition to uniform data storage, such systems can help to overcome inter-institutional hurdles in data exchange, compare theoretical and experimental data, and provide reproducible [[workflow]]s for data analysis. Furthermore, linking the data with persistent identifiers enables other researchers to reference them directly in their work.

In particular, repositories for the storage and internal or public exchange of research data are becoming increasingly widespread. Moreover, the publication of such data, either on its own or as a supplement to a text publication, is increasingly encouraged or sometimes even required.<ref name="NaughtonMaking16">{{cite journal |title=Making sense of journal research data policies |journal=Insights |author=Naughton, L.; Kernohan, D. |volume=29 |issue=1 |pages=84–9 |year=2016 |doi=10.1629/uksg.284}}</ref> To find a suitable repository, services such as re3data.org<ref name="PampelMaking13">{{cite journal |title=Making Research Data Repositories Visible: The re3data.org Registry |journal=PLoS One |author=Pampel, H.; Vierkant, P.; Scholze, F. et al. |volume=8 |issue=11 |at=e78080 |year=2013 |doi=10.1371/journal.pone.0078080}}</ref> or FAIRsharing<ref name="SansoneFAIR19">{{cite journal |title=FAIRsharing as a community approach to standards, repositories and policies |journal=Nature Biotechnology |author=Sansone, S.-A.; McQuilton, P.; Rocca-Serra, P. et al. |volume=37 |pages=358–67 |year=2019 |doi=10.1038/s41587-019-0080-8}}</ref> are available. These services also make it possible to find subject-specific repositories for materials science data. Two well-known examples are the Materials Project<ref name="JainComment13">{{cite journal |title=Commentary: The Materials Project: A materials genome approach to accelerating materials innovation |journal=APL Materials |author=Jain, A.; Ong, S.P.; Hautier, G. et al. |volume=1 |issue=1 |at=011002 |year=2013 |doi=10.1063/1.4812323}}</ref> and the NOMAD Repository.<ref name="DraxlNOMAD18">{{cite journal |title=NOMAD: The FAIR concept for big data-driven materials science |journal=MRS Bulletin |author=Draxl, C.; Scheffler, M. |volume=43 |issue=9 |pages=676–82 |year=2018 |doi=10.1557/mrs.2018.208}}</ref> Indexed repositories are usually hosted centrally or institutionally and are mostly used for the publication of data. However, some of the underlying systems can also be installed by users themselves, e.g., for internal use within individual research groups. This additionally allows full control over the stored data as well as internal data exchange, if this function is not already part of the repository. In this respect, open-source systems are particularly important, as they provide independence from vendors and make it possible to modify existing functionality or add new features, sometimes via built-in plug-in systems. Examples of such systems are CKAN<ref name="CKANHome">{{cite web |url=https://ckan.org/ |title=CKAN |publisher=CKAN Association |accessdate=19 May 2020}}</ref>, Dataverse<ref name="KinganIntro07">{{cite journal |title=An Introduction to the Dataverse Network as an Infrastructure for Data Sharing |journal=Sociological Methods & Research |author=King, G. |volume=36 |issue=2 |pages=173–99 |year=2007 |doi=10.1177/0049124107306660}}</ref>, [[DSpace]]<ref name="SmithDSpace03">{{cite journal |title=DSpace: An Open Source Dynamic Digital Repository |journal=D-Lib Magazine |author=Smith, M.; Barton, M.; Bass, M. et al. |volume=9 |issue=1 |year=2003 |doi=10.1045/january2003-smith}}</ref>, and Invenio<ref name="InvenioHome">{{cite web |url=https://invenio-software.org/ |title=Invenio |publisher=CERN |accessdate=19 May 2020}}</ref>, the latter being the basis of Zenodo.<ref name="ZenodoHome">{{cite web |url=https://www.zenodo.org/ |title=Zenodo |author=European Organization for Nuclear Research |publisher=CERN |year=2013 |doi=10.25495/7GXK-RD71}}</ref> The listed repositories are all generic and represent only a selection of the existing open-source systems.<ref name="AmorimAComp16">{{cite journal |title=A comparison of research data management platforms: Architecture, flexible metadata and interoperability |journal=Universal Access in the Information Society |author=Amorim, R.C.; Castro, J.A.; da Silva, J.R. et al. |volume=16 |pages=851–62 |year=2017 |doi=10.1007/s10209-016-0475-y}}</ref>

In addition to repositories, a second type of system increasingly being used in experiment-oriented research areas is the [[electronic laboratory notebook]] (ELN).<ref name="RubachaARev11">{{cite journal |title=A Review of Electronic Laboratory Notebooks Available in the Market Today |journal=SLAS Technology |author=Rubacha, M.; Rattan, A.K.; Hosselet, S.C. |volume=16 |issue=1 |year=2011 |doi=10.1016/j.jala.2009.01.002}}</ref> Nowadays, the functionality of ELNs goes far beyond the simple replacement of paper-based [[laboratory notebook]]s and can also include aspects such as data analysis, as seen, for example, in [[Galaxy (biomedical software)|Galaxy]]<ref name="AfganTheGal18">{{cite journal |title=The Galaxy platform for accessible, reproducible and collaborative biomedical analyses: 2018 update |journal=Nucleic Acids Research |author=Afgan, E.; Baker, D.; Batut, B. et al. |volume=46 |issue=W1 |year=2018 |doi=10.1093/nar/gky379}}</ref> or [[Jupyter Notebook]].<ref name="KluyverJupyter16">{{cite book |chapter=Jupyter Notebooks – A publishing format for reproducible computational workflows |title=Positioning and Power in Academic Publishing: Players, Agents and Agendas |author=Kluyver, T.; Ragan-Kelley, B.; Pérez, F. et al. |editor=Loizides, F.; Schmidt, B. |publisher=IOS Press |pages=87–90 |year=2016 |doi=10.3233/978-1-61499-649-1-87}}</ref> Both systems focus primarily on providing accessible and reproducible computational research data. However, the boundary between unstructured and structured data is increasingly becoming blurred, the latter traditionally being found only in [[laboratory information management system]]s (LIMS).<ref name="BirdLab13">{{cite journal |title=Laboratory notebooks in the digital era: The role of ELNs in record keeping for chemistry and other sciences |journal=Chemical Society Reviews |author=Bird, C.L.; Willoughby, C.; Frey, J.G. |volume=42 |issue=20 |year=2013 |pages=8157–8175 |doi=10.1039/C3CS60122F}}</ref><ref name="ElliottThink09">{{cite journal |title=Thinking Beyond ELN |journal=Scientific Computing |author=Elliott, M.H. |volume=26 |issue=6 |pages=6–10 |year=2009 |archivedate=20 May 2011 |url=http://www.scientificcomputing.com/articles-IN-Thinking-Beyond-ELN-120809.aspx |archiveurl=https://web.archive.org/web/20110520065023/http://www.scientificcomputing.com/articles-IN-Thinking-Beyond-ELN-120809.aspx}}</ref><ref name="TaylorTheStatus06">{{cite journal |title=The status of electronic laboratory notebooks for chemistry and biology |journal=Current Opinion in Drug Discovery and Development |author=Taylor, K.T. |volume=9 |issue=3 |pages=348–53 |year=2006 |pmid=16729731}}</ref> Most existing ELNs are domain-specific and limited to research disciplines such as biology or chemistry.<ref name="TaylorTheStatus06" /> To the authors' knowledge, no system specifically tailored to materials science exists. For ELNs, there are also open-source systems such as [[eLabFTW]]<ref name="CarpiElabFTW17">{{cite journal |title=eLabFTW: An open source laboratory notebook for research labs |journal=Journal of Open Source Software |author=Carpi, N.; Minges, A.; Piel, M. |volume=2 |issue=12 |at=146 |year=2017 |doi=10.21105/joss.00146}}</ref>, [[sciNote]]<ref name="ScinoteHome">{{cite web |url=https://www.scinote.net/ |title=SciNote |publisher=SciNote LLC |accessdate=21 May 2020}}</ref>, or [[Chemotion ELN|Chemotion]].<ref name="TremouilhacChemotionELN17">{{cite journal |title=Chemotion ELN: An open source electronic lab notebook for chemists in academia |journal=Journal of Cheminformatics |author=Tremouilhac, P.; Nguyen, A.; Huang, Y.-C. et al. |volume=9 |at=54 |year=2017 |doi=10.1186/s13321-017-0240-0}}</ref> Compared to repositories, however, the selection of ELNs is smaller. Furthermore, only the first two of these systems are generic.

Thus, generic research data systems and software are available for both ELNs and repositories, which, in principle, could also be used in materials science. The listed open-source solutions are of particular relevance, as they can be adapted to different needs and are generally suitable for use in a custom installation within single research groups. However, both this adaptation and the self-hosted installation can be considerable hurdles, especially for smaller groups. Due to a lack of resources, structured research data management and making data available for subsequent use are therefore particularly difficult for such groups.<ref name="HeidornShed08">{{cite journal |title=Shedding Light on the Dark Data in the Long Tail of Science |journal=Library Trends |author=Heidorn, P.B. |volume=57 |issue=2 |pages=280–99 |year=2008 |doi=10.1353/lib.0.0036}}</ref> What is still missing is a system that can be deployed and used both centrally and decentrally, as well as internally and publicly, without major obstacles. The system should support researchers throughout the entire research process, starting with the generation and extraction of raw data, continuing through the structured storage, exchange, and analysis of the data, and ending with the final publication of the corresponding results. In this way, the features of the ELN and the repository are combined, creating a virtual research environment<ref name="CarusiVirt10">{{cite web |url=http://hdl.handle.net/10044/1/18568 |title=Virtual Research Environment Collaborative Landscape Study |author=Carusi, A.; Reimer, T.F. |publisher=JISC |date=17 January 2010 |doi=10.25561/18568}}</ref> that accelerates the generation of innovations by facilitating collaboration among researchers. In an interdisciplinary field like materials science, there is a special need to model the very heterogeneous workflows of researchers.<ref name="HillMaterials16" />

For this purpose, the research data infrastructure Kadi4Mat (Karlsruhe Data Infrastructure for Materials Sciences) is being developed at the Institute for Applied Materials (IAM-CMS) of the Karlsruhe Institute of Technology (KIT). The aim of the software is to combine structured data storage with documented and reproducible workflows for data analysis and visualization tasks, incorporating new concepts into established technologies and existing solutions. In the development of the software, the FAIR Guiding Principles<ref name="WilkinsonTheFAIR16">{{cite journal |title=The FAIR Guiding Principles for scientific data management and stewardship |journal=Scientific Data |author=Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J. et al. |volume=3 |pages=160018 |year=2016 |doi=10.1038/sdata.2016.18 |pmid=26978244 |pmc=PMC4792175}}</ref> for scientific data management are taken into account. Instances of the data infrastructure have already been deployed and demonstrate how structured data storage and data exchange can be realized.<ref name="Kadi4MatHome">{{cite web |url=https://kadi.iam-cms.kit.edu/ |title=Kadi4Mat |publisher=Karlsruhe Institute of Technology |accessdate=30 September 2020}}</ref> Furthermore, the source code of the project is publicly available under a permissive Apache 2.0 license.<ref name="BrandtIAM20">{{cite journal |title=IAM-CMS/kadi: Kadi4Mat (Version 0.6.0) |journal=Zenodo |author=Brandt, N.; Griem, L.; Herrmann, C. et al. |year=2020 |doi=10.5281/zenodo.4507826}}</ref>

==Concepts==

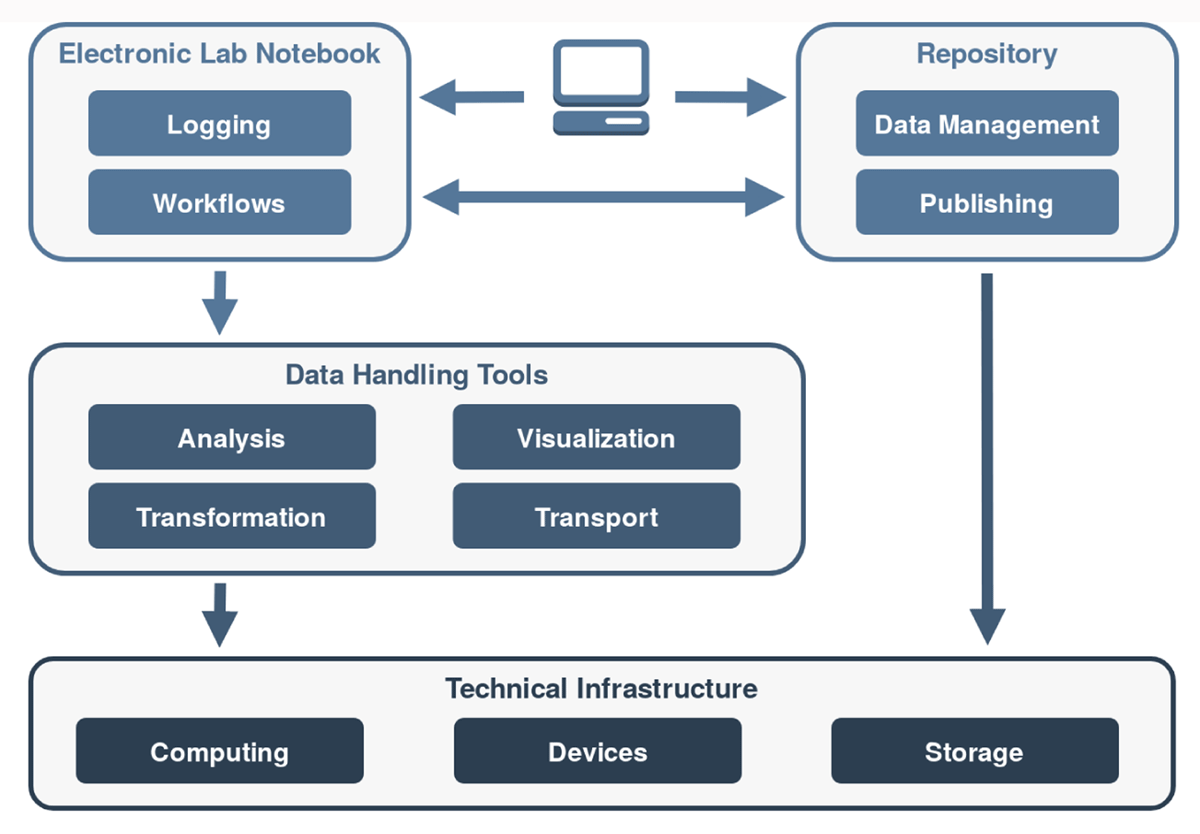

Kadi4Mat is logically divided into two components, an ELN and a repository, which have access to various tools and technical infrastructures. The components can be accessed from web- and desktop-based applications via uniform interfaces. Both a graphical and a programmatic interface are provided, using machine-readable formats and various exchange protocols. Figure 1 presents a conceptual overview of the infrastructure of Kadi4Mat.

[[File:Fig1 Brandt DataSciJourn21 20-1.png|800px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="800px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 1.''' Conceptual overview of the infrastructure of Kadi4Mat. The system is logically divided into two components (an ELN and a repository), which have access to various data handling tools and technical infrastructures. The two components can be used both graphically and programmatically via uniform interfaces.</blockquote>

|-

|}

|}

===Electronic laboratory notebook===

So-called workflows are of particular importance in the ELN component. A "workflow" is a generic concept that describes a well-defined set of sequential or parallel steps, which are processed as automatically as possible. This can include the execution of an analysis tool or the control of, and data retrieval from, an experimental device. To accommodate such heterogeneity, concrete steps must be implemented as flexibly as possible, since they are highly user- and application-specific. In Figure 1, the types of tools shown in the second layer are used within the workflows to implement the actual functionality of the various steps. These can be roughly divided into analysis, visualization, transformation, and transportation tasks. In order to keep the application of these tools as generic as possible, both provided and user-defined tools can be used. From a user's perspective, it must be easy to provide such tools, while each tool must be executed in a secure and functional environment. This is especially true for existing tools (e.g., a simple MATLAB<ref name="MATLAB">{{cite web |url=https://www.mathworks.com/products/matlab.html |title=MATLAB |publisher=MathWorks |accessdate=19 January 2021}}</ref> script), which require certain dependencies to be executed and must be equipped with a suitable interface to be used within a workflow. Depending on their functionality, the tools must in turn access various technical infrastructures. In addition to the use of the repository and computing infrastructure, direct access to devices is also important for more complex data analyses. The automation of a typical experimental workflow is only fully possible if data and metadata created by devices can be captured. However, such an integration is not trivial due to a heterogeneous device landscape, as well as proprietary data formats and interfaces.<ref name="HawkerLab07">{{cite journal |title=Laboratory Automation: Total and Subtotal |journal=Clinics in Laboratory Medicine |author=Hawker, C.D. |volume=27 |issue=4 |pages=749–70 |year=2007 |doi=10.1016/j.cll.2007.07.010}}</ref><ref name="PotthoffProc19">{{cite journal |title=Procedures for systematic capture and management of analytical data in academia |journal=Analytica Chimica Acta: X |author=Potthoff, J.; Tremouilhac, P.; Hodapp, P. et al. |volume=1 |at=100007 |year=2019 |doi=10.1016/j.acax.2019.100007}}</ref> In Kadi4Mat, it should also be possible to use individual tools separately, i.e., outside a workflow, where appropriate. For example, when using the web-based interface, a visualization tool for a custom data format may be used to generate a preview of a datum that can be displayed directly in a web browser.
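One way to make "sequential or parallel steps" concrete is to model a workflow as a directed acyclic graph in which each step lists the steps it depends on. The Python sketch below assumes exactly that; the step names and the dictionary layout are hypothetical and do not represent Kadi4Mat's workflow format. It uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to derive one valid sequential order and to group mutually independent steps that could run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each step maps to the set of steps it depends on.
# The step names are illustrative only and not part of Kadi4Mat.
workflow = {
    "fetch_raw_data": set(),
    "convert_format": {"fetch_raw_data"},
    "analyze": {"convert_format"},
    "plot_preview": {"convert_format"},
    "upload_results": {"analyze", "plot_preview"},
}


def sequential_order(graph):
    """Return one valid sequential execution order of the steps."""
    return list(TopologicalSorter(graph).static_order())


def parallel_stages(graph):
    """Group steps into stages whose members do not depend on each other."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all steps whose inputs are complete
        stages.append(ready)
        sorter.done(*ready)
    return stages


print(parallel_stages(workflow))
# [['fetch_raw_data'], ['convert_format'], ['analyze', 'plot_preview'], ['upload_results']]
```

Steps in the same inner list have no dependencies on each other, which is what would allow an engine to run them concurrently.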

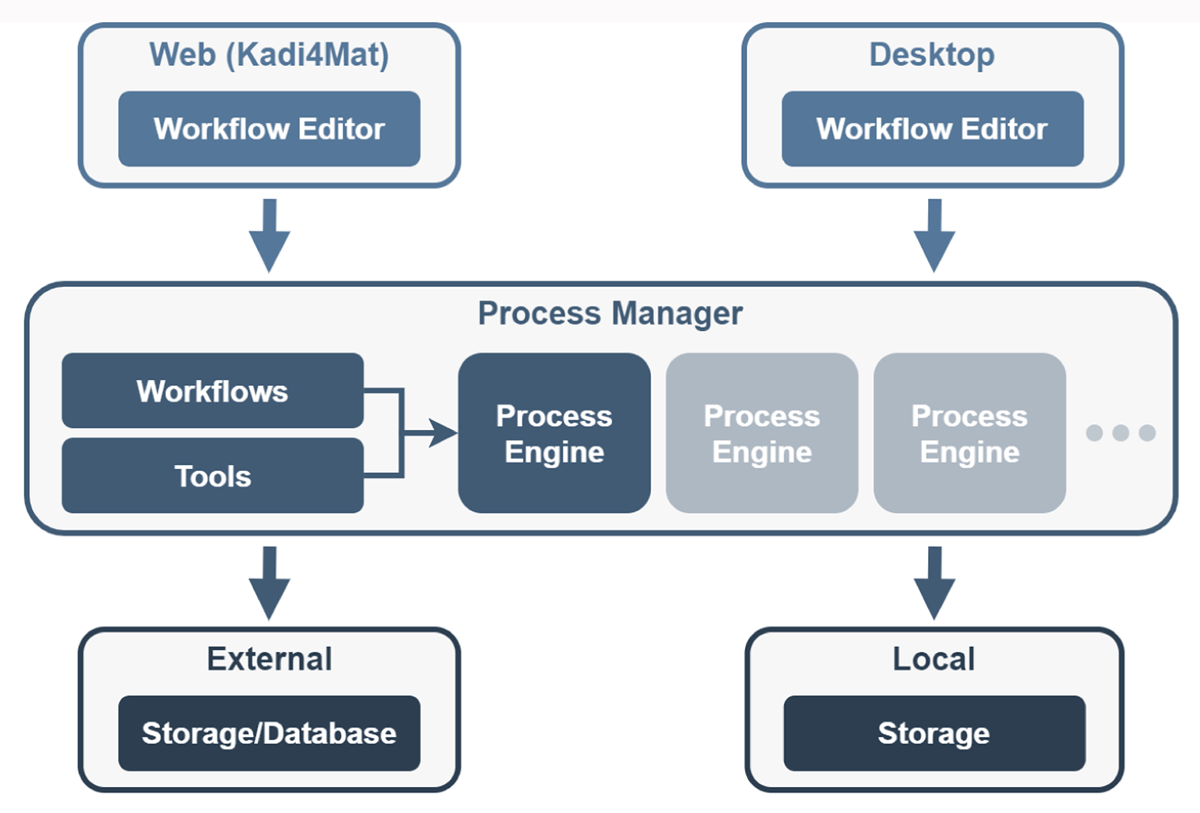

Figure 2 shows the current concept for the integration of workflows in Kadi4Mat. The different steps of a workflow can be defined with a graphical node editor. Either a web-based or a desktop-based version of such an editor can be used, the latter running as an ordinary application on a local workstation. With the help of such an editor, the different steps or tools to be executed are defined, linked, and, most importantly, parameterized. The execution of a workflow can be started via an external component called "Process Manager." This component in turn manages several process engines, which take care of executing the workflows. The process engines potentially differ in their implementation and functionality. A simple process engine, for example, could be limited to executing the different tasks sequentially, while another could execute independent tasks in parallel. All engines process the required steps based on the [[information]] stored in the workflow. With appropriate transport tools, the data and metadata required for each step, as well as the resulting output, can be imported from or exported to Kadi4Mat using the existing interfaces of the research data infrastructure. Similar tools make it possible to use other external data sources and, with them, to handle large amounts of data via suitable exchange protocols. Locally stored data can also be used when running a workflow on a local workstation.
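A minimal sketch of the delegation described above, assuming that each step is a Python callable that receives the results of previously completed steps. The classes `ProcessManager`, `SequentialEngine`, and `ParallelEngine` are hypothetical stand-ins for the components in Figure 2, not Kadi4Mat's implementation. The parallel engine here simply runs all currently ready steps in a thread pool from `concurrent.futures`.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


class SequentialEngine:
    """Hypothetical engine that executes one step at a time in dependency order."""

    def run(self, steps, deps):
        results = {}
        for name in TopologicalSorter(deps).static_order():
            results[name] = steps[name](results)
        return results


class ParallelEngine:
    """Hypothetical engine that executes all currently independent steps concurrently."""

    def __init__(self, max_workers=4):
        self.max_workers = max_workers

    def run(self, steps, deps):
        results = {}
        sorter = TopologicalSorter(deps)
        sorter.prepare()
        with ThreadPoolExecutor(max_workers=self.max_workers) as pool:
            while sorter.is_active():
                ready = list(sorter.get_ready())
                # Each ready step receives a snapshot of the results finished so far.
                futures = {name: pool.submit(steps[name], dict(results)) for name in ready}
                for name, future in futures.items():
                    results[name] = future.result()
                sorter.done(*ready)
        return results


class ProcessManager:
    """Accepts workflows on behalf of the user and delegates them to an engine."""

    def __init__(self, engines):
        self.engines = engines

    def execute(self, steps, deps, engine="sequential"):
        return self.engines[engine].run(steps, deps)


steps = {
    "load": lambda r: [1.0, 2.0, 3.0],
    "mean": lambda r: sum(r["load"]) / len(r["load"]),
    "maximum": lambda r: max(r["load"]),
    "report": lambda r: f"mean={r['mean']}, max={r['maximum']}",
}
deps = {"load": set(), "mean": {"load"}, "maximum": {"load"}, "report": {"mean", "maximum"}}

manager = ProcessManager({"sequential": SequentialEngine(), "parallel": ParallelEngine()})
print(manager.execute(steps, deps, engine="parallel")["report"])  # mean=2.0, max=3.0
```

Because both engines consume the same workflow description, the manager can switch between them without changing the workflow, which mirrors the separation between defining and executing a workflow.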

[[File:Fig2 Brandt DataSciJourn21 20-1.png|800px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="800px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 2.''' Conceptual overview of the workflow architecture. Each workflow is defined using a graphical editor that is either directly integrated into the web-based interface of Kadi4Mat or used locally as a desktop application. The process manager provides an interface for executing workflows and communicates on behalf of the user with multiple process engines, to which the actual execution of workflows is delegated. The engines are responsible for the actual processing of the different steps, based on the information defined in a workflow. Data and metadata can be stored either externally or locally.</blockquote>

|-

|}

|}

Since the reproducibility of the performed steps is a key objective of the workflows, all meaningful information and metadata can be logged along the way. The logging needs to be flexible in order to accommodate different individual or organizational needs; as such, it is also part of the workflow itself. Workflows can also be shared with other users, for example, via Kadi4Mat. Manual steps may require interaction during the execution of a workflow, in which case the system must prompt the user. In summary, the focus of the ELN component differs from that of classic ELNs, with the emphasis on the automation of the steps performed. This aspect in particular is similar to systems such as Galaxy<ref name="AfganTheGal18" />, which focuses on computational biology, or Taverna<ref name="WolstencroftTheTav13">{{cite journal |title=The Taverna workflow suite: designing and executing workflows of Web Services on the desktop, web or in the cloud |journal=Nucleic Acids Research |author=Wolstencroft, K.; Haines, R.; Fellows, D. et al. |volume=41 |issue=W1 |pages=W557–W561 |year=2013 |doi=10.1093/nar/gkt328}}</ref>, a dedicated workflow management system. Nevertheless, some typical features of classic ELNs, such as the inclusion of handwritten notes, are also considered in the ELN component.
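Because logging is described as part of the workflow itself, a step runner can simply append a provenance entry for every step it executes. The sketch below is a hypothetical illustration rather than Kadi4Mat's logging mechanism: the function `run_logged` and the fields of each log entry are assumptions, and it requires parameters and outputs to be JSON-serializable so that an output digest can be computed.

```python
import hashlib
import json
import time


def run_logged(name, func, params, log):
    """Run one workflow step and append a provenance entry to ``log``.

    Assumes ``params`` and the step's output are JSON-serializable.
    """
    started = time.time()         # wall-clock timestamp for the record
    t0 = time.perf_counter()      # monotonic clock for the duration
    output = func(**params)
    log.append({
        "step": name,
        "parameters": params,
        "started": started,
        "duration_s": time.perf_counter() - t0,
        # A digest of the output lets a rerun be compared with the original run.
        "output_sha256": hashlib.sha256(
            json.dumps(output, sort_keys=True).encode("utf-8")
        ).hexdigest(),
    })
    return output


def scale(values, factor):
    return [v * factor for v in values]


log = []
print(run_logged("scale", scale, {"values": [1, 2, 3], "factor": 2}, log))  # [2, 4, 6]
print(json.dumps(log[0]["parameters"]))  # {"values": [1, 2, 3], "factor": 2}
```

Since the log is just a list that the workflow passes along, each workflow can decide what to record and where to store it, for example as an additional file attached to the results.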

===Repository===

In the repository component, data management is regarded as the central element, especially structured data storage and exchange. An important aspect is the enrichment of data with descriptive metadata, which is required for describing, analyzing, or searching the data. Many repositories, especially those focused on publishing research data, use the metadata schema provided by DataCite<ref name="DataCite43">{{cite web |url=https://schema.datacite.org/meta/kernel-4.3/ |title= DataCite Metadata Schema for the Publication and Citation of Research Data. Version 4.3 |author=DataCite Metadata Working Group |publisher=DataCite e.V |date=16 August 2019}}</ref>, either directly or in a heavily adapted form. This schema is widely supported and enables the direct publication of data via the corresponding DataCite service. At the same time, its descriptive power is limited for use cases that go beyond data publication. Comparatively few subject-specific schemas are available for engineering and materials science. Two examples are EngMeta<ref name="SchemberaEngMeta20">{{cite journal |title=EngMeta: Metadata for computational engineering |journal=International Journal of Metadata, Semantics and Ontologies |author=Schembera, B.; Iglezakis, D. |volume=14 |issue=1 |pages=26–38 |year=2020 |doi=10.1504/IJMSO.2020.107792}}</ref> and NOMAD Meta Info.<ref name="GhiringhelliTowards17">{{cite journal |title=Towards efficient data exchange and sharing for big-data driven materials science: metadata and data formats |journal=npj Computational Materials |author=Ghiringhelli, L.M.; Carbogno, C.; Levchenko, S. et al. |volume=3 |at=46 |year=2017 |doi=10.1038/s41524-017-0048-5}}</ref> The first schema was created ''a priori'' and aims to provide a generic description of computer-aided engineering data, while the second was created ''a posteriori'' from existing computational inputs and outputs in the database of the NOMAD repository.

The second approach is pursued in a similar way in Kadi4Mat. Instead of a fixed metadata schema, the concrete structure is largely determined by the users themselves and is thus oriented towards their specific needs. To help establish common metadata vocabularies, a mechanism for creating templates is provided. Templates can impose restrictions and validations on specific metadata. They are user-defined and can be shared within workgroups or projects, facilitating the establishment of metadata standards. Nevertheless, individual generic metadata fields, such as the title or description of a data set, can be static. For different use cases such as data analysis, publishing, or interoperability with other systems, additional conversions must be provided. This is necessary not only because of differing data formats, but also to map the vocabularies of different schemas onto each other. Such converted metadata can either represent a subset of existing schemas or require additional fields, such as a license for the re-use of published data. In the long run, the objective in Kadi4Mat is to offer well-defined structures and semantics by making use of ontologies. In the field of materials science, there are ongoing developments in this respect, such as the European Materials Modelling Ontology.<ref name="GH_EMMO">{{cite web |url=https://github.com/emmo-repo |title=European Materials and Modelling Ontology |work=GitHub |author=European Materials Modelling Council |accessdate=24 May 2020}}</ref> However, a bottom-up procedure is considered a more flexible solution, with the objective of generating an [[Ontology (information science)|ontology]] from existing metadata and the relationships between different data sets. Such a two-pronged approach aims to be functional in the short term while remaining extensible in the long term<ref name="GreenbergAMeta09">{{cite journal |title=A Metadata Best Practice for a Scientific Data Repository |journal=Journal of Library Metadata |author=Greenberg, J.; White, H.C.; Carrier, S. et al. |volume=9 |issue=3–4 |pages=194–212 |year=2009 |doi=10.1080/19386380903405090}}</ref>, although it heavily depends on how users manage their data and metadata with the options available.
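The following Python sketch illustrates how a user-defined template might impose restrictions and validations on otherwise free-form metadata. The `Template` and `FieldRule` classes and the example keys (`temperature_K`, `atmosphere`, `operator`) are hypothetical; Kadi4Mat's actual template format may differ. Keys that a template does not mention are accepted unchanged, reflecting that the structure is still largely left to the users.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FieldRule:
    """Constraint a template places on one metadata key (hypothetical format)."""
    kind: type
    required: bool = False
    allowed: tuple = ()              # optional controlled vocabulary
    minimum: Optional[float] = None  # optional lower bound for numeric values


@dataclass
class Template:
    name: str
    rules: dict = field(default_factory=dict)

    def validate(self, metadata):
        """Return a list of problems; an empty list means the metadata is valid."""
        problems = []
        for key, rule in self.rules.items():
            if key not in metadata:
                if rule.required:
                    problems.append(f"missing required field '{key}'")
                continue
            value = metadata[key]
            if not isinstance(value, rule.kind):
                problems.append(f"'{key}' should be of type {rule.kind.__name__}")
                continue
            if rule.allowed and value not in rule.allowed:
                problems.append(f"'{key}' must be one of {list(rule.allowed)}")
            if rule.minimum is not None and value < rule.minimum:
                problems.append(f"'{key}' must be >= {rule.minimum}")
        return problems


heat_treatment = Template("heat treatment", {
    "temperature_K": FieldRule(float, required=True, minimum=0.0),
    "atmosphere": FieldRule(str, allowed=("argon", "nitrogen", "air")),
})
print(heat_treatment.validate({"temperature_K": 1200.0, "atmosphere": "argon", "operator": "A. B."}))  # []
print(heat_treatment.validate({"atmosphere": "helium"}))
# ["missing required field 'temperature_K'", "'atmosphere' must be one of ['argon', 'nitrogen', 'air']"]
```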

In addition to the metadata, the actual data must be managed as well. Here, one can distinguish between data managed directly by Kadi4Mat and linked data. In the simplest form, the former resides on a file system accessible by the repository, which provides full control over the data. This requires a copy of each datum to be made available in Kadi4Mat, which makes this approach less suitable for very large amounts of data. The same applies to data analyses that are to be carried out on external computing infrastructures and must access the data for this purpose. Linked data, on the other hand, can be located on external data storage, e.g., that of high-performance computing infrastructures. This also makes it possible to integrate existing infrastructures and repositories. In these cases, Kadi4Mat can either simply offer a view on top of such infrastructures or integrate them more directly, depending on the concrete system in question.
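The distinction between managed and linked data can be sketched as a single reference type with two constructors. This is a hypothetical Python illustration: `DataReference`, `add_managed`, `add_linked`, and the example URI are assumptions, not part of Kadi4Mat. A managed datum is copied into repository-controlled storage and checksummed; a linked datum only records where the external data lives.

```python
import hashlib
import tempfile
from dataclasses import dataclass
from pathlib import Path
from typing import Optional


@dataclass(frozen=True)
class DataReference:
    """A datum that is either managed by the repository or merely linked."""
    name: str
    location: str                 # storage path (managed) or external URI (linked)
    managed: bool
    sha256: Optional[str] = None  # a checksum is only available for managed copies


def add_managed(source: Path, storage_dir: Path) -> DataReference:
    """Copy a file into repository-controlled storage and record its checksum."""
    content = source.read_bytes()
    target = storage_dir / source.name
    target.write_bytes(content)
    return DataReference(source.name, str(target), managed=True,
                         sha256=hashlib.sha256(content).hexdigest())


def add_linked(name: str, uri: str) -> DataReference:
    """Register external data, e.g. on an HPC file system, without copying it."""
    return DataReference(name, uri, managed=False)


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "incoming").mkdir()
    (root / "storage").mkdir()
    raw = root / "incoming" / "tensile_test.csv"
    raw.write_text("strain,stress\n0.0,0.0\n")
    managed = add_managed(raw, root / "storage")
    print(managed.managed, Path(managed.location).exists())  # True True

# Hypothetical external location; nothing is transferred.
linked = add_linked("simulation_output", "sftp://hpc.example.org/scratch/run42/")
print(linked.managed, linked.sha256)  # False None
```

The trade-off from the text is visible directly: the managed variant duplicates every byte but allows integrity checks, while the linked variant avoids copying at the cost of relying on the external system for availability.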

A further point to be addressed within the repository is the publication of data and metadata, including templates and workflows, which require persistent identifiers in order to be referenceable. Many existing repositories and systems already specialize in exactly this use case and offer infrastructures for the long-term archiving of large amounts of data. Thus, integrating suitable external systems is to be considered for this task in particular. From Kadi4Mat's point of view, only certain basic requirements have to be met in order to enable the publication of data. These include the assignment of a unique identifier within the system, the provision of the metadata and licenses necessary for publication, and a basic form of user-guided [[quality control]]. The repository component thus also goes in a different direction than classic repositories. Within a typical scientific workflow, it focuses primarily on all steps that take place between the initial data acquisition and the publication of data. The component is therefore best described as a community repository that manages warm data (i.e., unpublished data that needs further analysis) and enables data exchange within specific communities, e.g., within a research group or project.
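The basic publication prerequisites named above (a unique identifier within the system, the necessary metadata and license, and a user-guided quality check) can be expressed as a simple readiness check, followed by a conversion to an external schema. The sketch assumes a plain dictionary record; the function names, the `reviewed_by` field, and the chosen field subset are hypothetical, and the output is merely DataCite-inspired rather than a validated DataCite document.

```python
import uuid

# Hypothetical minimal prerequisites, following the requirements named in the text.
REQUIRED_FOR_PUBLICATION = ("title", "creators", "license")


def assign_identifier(record):
    """Give a record a unique identifier within the system if it has none yet."""
    record.setdefault("id", str(uuid.uuid4()))
    return record


def publication_blockers(record):
    """List everything that still prevents publication (empty list = ready)."""
    blockers = [key for key in ("id",) + REQUIRED_FOR_PUBLICATION if not record.get(key)]
    if not record.get("reviewed_by"):
        blockers.append("quality check")
    return blockers


def to_datacite_like(record, year):
    """Map internal metadata onto a small subset of DataCite-style fields."""
    return {
        "titles": [{"title": record["title"]}],
        "creators": [{"name": name} for name in record["creators"]],
        "publicationYear": str(year),
        "rightsList": [{"rights": record["license"]}],
        # A persistent identifier (e.g. a DOI) would be minted by the external service.
        "alternateIdentifiers": [
            {"alternateIdentifier": record["id"], "alternateIdentifierType": "internal"}
        ],
    }


record = assign_identifier({"title": "Tensile tests, batch 7", "creators": ["Doe, Jane"]})
print(publication_blockers(record))  # ['license', 'quality check']
record.update(license="CC-BY-4.0", reviewed_by="Roe, Richard")
print(publication_blockers(record))  # []
```

Keeping the readiness check separate from the conversion reflects the division of labor in the text: Kadi4Mat only ensures the basic prerequisites, while the long-term archiving and persistent identifiers are left to a specialized external system.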

==Implementation== | |||

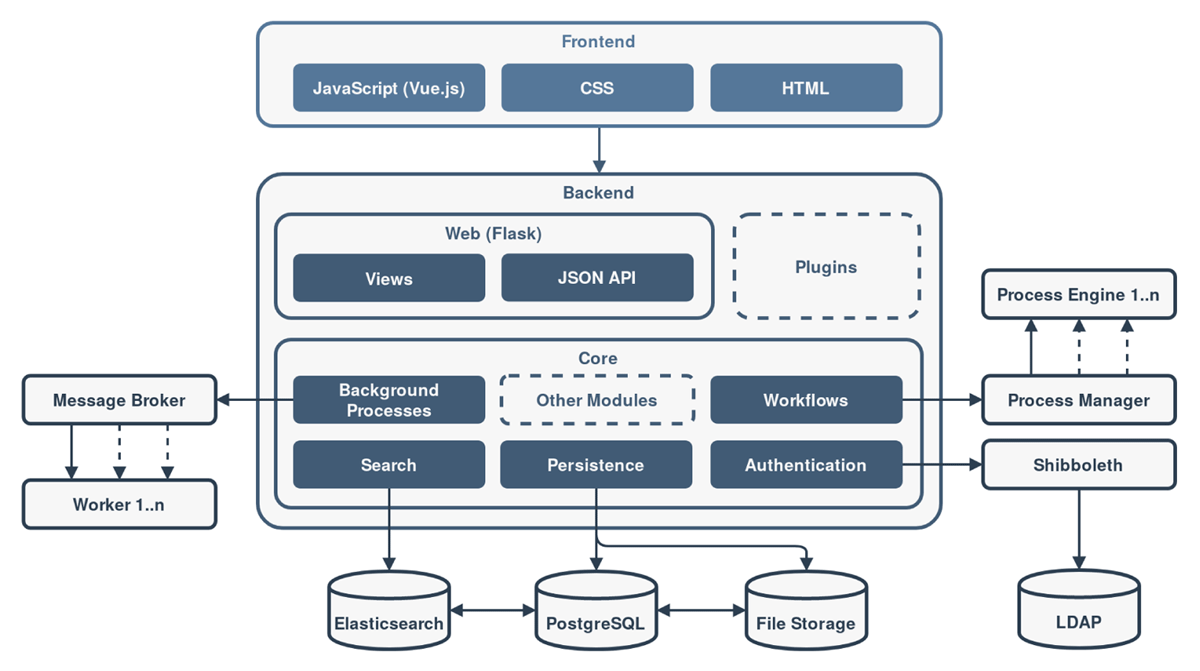

Kadi4Mat is built as a web-based application that employs a classic client-server architecture. A graphical front end is provided for use with a normal web browser as the client, while the server is responsible for the back end and the integration of external systems. A high-level overview of the implementation is shown in Figure 3. The front end is based on the classic web technologies JavaScript, HTML, and CSS. In particular, the client-side JavaScript web framework Vue.js<ref name="VUE">{{cite web |url=https://vuejs.org/ |title=Vue.js - The Progressive JavaScript Framework |author=Vue Core Development Team |publisher=Evan You |accessdate=25 May 2020}}</ref> is used. The framework is especially suitable for creating single-page web applications (SPA), but it can also be used for individual sections of more classic applications, to incrementally add complex and dynamic user interface components to certain pages. Vue.js is mainly used in the latter way, the benefits being a clear separation between the data and the presentation layer, as well as easier re-use of user interface components. This approach is combined with server-side rendering. Due to the technologies and standards employed, the front end can currently only be used with recent versions of modern web browsers such as Firefox, Chrome, or Edge.

[[File:Fig3 Brandt DataSciJourn21 20-1.png|800px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="800px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 3.''' Overview of the implementation of Kadi4Mat, separated into front end and back end. The front end uses classic web technologies and is usually operated via a web browser. In the back end, the functionality is split into the web and the core component. The former takes care of the external interfaces, while the latter contains most of the core functionality and handles the interfaces of other systems. A plugin component is also shown, which can be used to customize or extend the functionality of the system.</blockquote>

|-

|}

|}

In the back end, the framework Flask<ref name="Flask">{{cite web |url=https://palletsprojects.com/p/flask/ |title=Flask |publisher=The Pallets Project |accessdate=25 May 2020}}</ref> is used for the web component. The framework is implemented in Python and is compatible with the common Web Server Gateway Interface (WSGI), which specifies an interface between web servers and Python applications. As a so-called microframework, Flask itself is limited to basic features. This means that most of the functionality unrelated to the web component has to be added through custom code or suitable libraries. At the same time, more freedom is offered in the concrete choice of technologies. This is in direct contrast to web frameworks such as Django<ref name="Django">{{cite web |url=https://www.djangoproject.com/ |title=Django |publisher=The Django Software Foundation |accessdate=25 May 2020}}</ref>, which provide a lot of functionality out of the box. The web component itself is responsible for handling client requests to specific endpoints and dispatching them to the appropriate Python functions. Currently, either HTML or JSON is returned, depending on the endpoint. The latter is used as part of an HTTP [[application programming interface]] (API) to enable internal and external programmatic data exchange. This API is based on the representational state transfer (REST) paradigm.<ref name="FieldingArch00">{{cite web |url=https://www.ics.uci.edu/~fielding/pubs/dissertation/top.htm |title=Architectural Styles and the Design of Network-based Software Architectures |author=Fielding, R.T. |publisher=University of California, Irvine |date=2000}}</ref> Support for other exchange formats could also become relevant in the future, particularly for interoperability protocols such as OAI-PMH.<ref name="OAIProt">{{cite web |url=http://www.openarchives.org/pmh/ |title=Open Archives Initiative Protocol for Metadata Harvesting |publisher=Open Archives Initiative |accessdate=25 May 2020}}</ref> Especially for handling larger amounts of data, exchange protocols other than HTTP are also being considered.
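How a web component dispatches requests to Python functions, returning HTML or JSON depending on the endpoint, can be sketched with the standard library's WSGI conventions alone. This is not Flask's API (Flask expresses the same mapping with `@app.route` decorators), and the URL prefixes and handler names below are hypothetical.

```python
import json
from wsgiref.util import setup_testing_defaults


def record_page(record_id):
    # HTML endpoint, rendered on the server for the browser front end.
    return "text/html; charset=utf-8", f"<h1>Record {record_id}</h1>"


def record_api(record_id):
    # JSON endpoint, part of the HTTP API.
    return "application/json", json.dumps({"id": record_id, "title": "Example"})


# Hypothetical URL prefixes, each assigned to a Python function.
ROUTES = {"/records/": record_page, "/api/records/": record_api}


def app(environ, start_response):
    """Minimal WSGI application that dispatches a request path to a handler."""
    path = environ.get("PATH_INFO", "")
    for prefix, handler in ROUTES.items():
        tail = path[len(prefix):]
        if path.startswith(prefix) and tail.isdigit():
            content_type, body = handler(int(tail))
            start_response("200 OK", [("Content-Type", content_type)])
            return [body.encode("utf-8")]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not Found"]


def call(path):
    """Invoke the application in-process, as a WSGI server would."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)
    captured = {}

    def start_response(status, headers):
        captured["status"], captured["headers"] = status, dict(headers)

    body = b"".join(app(environ, start_response))
    return captured["status"], captured["headers"], body
```

Here `call("/api/records/7")` returns a `200 OK` status with an `application/json` body, while `call("/records/7")` returns HTML for the browser. Because `app` follows WSGI, any compliant server could host it unchanged.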

A large part of the application consists of the core functionality, which is divided into different modules, as shown in Figure 3. This structure is mainly of an organizational nature; a microservice architecture is currently not implemented. Modules that access external components are particularly noteworthy, as this aspect will become increasingly important in the future. External components can run either on the same hardware as Kadi4Mat itself or on separate systems available via a network interface. For the storage of metadata, the persistence module makes use of the relational database management system [[PostgreSQL]]<ref name="PostegreSQL">{{cite web |url=https://www.postgresql.org/ |title=PostgreSQL: The World's Most Advanced Open Source Relational Database |publisher=PostgreSQL Global Development Group |accessdate=30 September 2020}}</ref>, while the regular file system stores the actual data. Additionally, the software Elasticsearch<ref name="Elasticsearch">{{cite web |url=https://www.elastic.co/elasticsearch/ |title=Elasticsearch: The Heart of the Free and Open Elastic Stack |publisher=Elasticsearch B.V. |accessdate=02 June 2020}}</ref> is used to index all metadata that needs to be efficiently searchable. The aforementioned process manager<ref name="ZschummeIAM21">{{cite web |url=https://zenodo.org/record/4442553 |title=IAM-CMS/process-manager (Version 0.1.0) |author=Zschumme, P. |work=Zenodo |date=15 January 2021 |doi=10.5281/zenodo.4442553}}</ref>, which is currently implemented as a command-line application, manages the execution of workflows by delegating each execution task to an available process engine.<ref name="ZschummeIAMEng21">{{cite web |url=https://zenodo.org/record/4442563 |title=IAM-CMS/process-engine (Version 0.1.0) |author=Zschumme, P. |work=Zenodo |date=15 January 2021 |doi=10.5281/zenodo.4442563}}</ref> While the current implementation of the process engine primarily uses the local file system of the machine on which it runs, users can add steps to their workflows to synchronize data with the repository as needed. To increase performance with multiple parallel requests for workflow execution, the requests can be distributed to process engines running on additional servers. By wrapping the process manager with a simple HTTP API, for example, its interface can easily be used over a network. A message broker is used to decouple longer-running or periodically executed background tasks from the rest of the application by delegating them to one or more background worker processes. Apart from locally managed user accounts or an LDAP system, Shibboleth<ref name="WalkerShibb17">{{cite web |url=https://spaces.at.internet2.edu/display/TI/TI.66.1 |title=Shibboleth Architecture Protocols and Profiles |author=Walker, D.; Cantor, S.; Carmody, S.; et al. |work=Internet2 Confluence |date=17 November 2017 |doi=10.26869/TI.66.1}}</ref> can be used for authentication as well. From a technical point of view, Shibboleth is not a single system but the interaction of several components, which together enable a distributed authentication procedure. Depending on the type of authentication, user attributes or group affiliations could also be used for authorization purposes in the future.
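The decoupling of background tasks via a message broker can be illustrated with the standard library alone. In this sketch, an in-process `queue.Queue` stands in for the broker and threads stand in for the background worker processes; a real deployment would use a separate broker service and separate processes, and the function name `run_in_background` is hypothetical.

```python
import queue
import threading


def run_in_background(jobs, num_workers=2):
    """Delegate (name, func, args) jobs to worker threads through a queue.

    The queue stands in for a message broker; a web request handler would
    normally return right after enqueuing instead of waiting for the workers.
    """
    tasks = queue.Queue()
    results = {}
    lock = threading.Lock()

    def worker():
        while True:
            job = tasks.get()
            if job is None:  # sentinel: no more work for this worker
                break
            name, func, args = job
            value = func(*args)  # exceptions are not handled in this sketch
            with lock:
                results[name] = value

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for thread in threads:
        thread.start()
    for job in jobs:
        tasks.put(job)
    for _ in threads:
        tasks.put(None)  # one sentinel per worker, queued after all jobs
    for thread in threads:
        thread.join()  # only so the results can be inspected here
    return results
```

Because the queue is first-in, first-out and the sentinels are enqueued last, every job is picked up before any worker shuts down.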

Another component shown in Figure 3 involves the plugins. These can be used to customize or extend the basic functionality of certain procedures or actions without having to modify, or know in detail, the corresponding implementation. Unlike the tools in a workflow, plugins make use of predefined hooks to add their custom functionality. Currently, such a plugin has to be installed centrally by the system administrator for all users of a Kadi4Mat instance; the possibility of using individual plugins on the user level is also being evaluated.
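The idea of predefined hooks can be sketched in a few lines of Python: the core calls a named hook at a fixed point without knowing which plugins exist, and each installed plugin contributes its own function. The registry, the decorator, and the hook name `record_created` are hypothetical; dedicated libraries such as pluggy implement this pattern more completely, and the sketch does not describe how Kadi4Mat's own plugin system is built.

```python
from collections import defaultdict

# Registry mapping a predefined hook name to the plugin functions attached to it.
_hooks = defaultdict(list)


def hookimpl(hook_name):
    """Decorator a plugin uses to attach a function to a predefined hook."""
    def register(func):
        _hooks[hook_name].append(func)
        return func
    return register


def call_hook(hook_name, **kwargs):
    """Called by the core at a fixed point; collects every plugin's result."""
    return [func(**kwargs) for func in _hooks.get(hook_name, [])]


# A hypothetical plugin, installed centrally by the administrator.
@hookimpl("record_created")
def announce_record(record_title):
    return f"new record: {record_title}"
```

A hook without any registered plugin simply yields an empty list, so the core code path stays the same whether or not plugins are installed.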

==Results==

The current functionality of Kadi4Mat can be used either via the graphical user interface, with a browser, or via the HTTP API, with a suitable client. On top of the API, a Python library is being developed, which makes it especially easy to interact with the different functionalities.<ref name="SchoofIAM20">{{cite web |url=https://zenodo.org/record/4088276 |title=IAM-CMS/kadi-apy: Kadi4Mat API Library (Version 0.4.1) |author=Schoof, E.; Brandt, N. |work=Zenodo |date=02 February 2021 |doi=10.5281/zenodo.4507865}}</ref> Besides being usable in Python code, the library offers a command-line interface, enabling integration with other programming or scripting languages.
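A programmatic client ultimately issues authenticated HTTP requests against the API, which the Python library wraps. The following standard-library sketch only prepares such a request; the `/api/` path layout, the Bearer-token header, and the host name `kadi.example.org` are assumptions for illustration, not a documented description of the Kadi4Mat API or of the library's interface.

```python
import json
import urllib.parse
import urllib.request


def build_api_request(host, token, path, params=None):
    """Prepare an authenticated GET request against a Kadi4Mat-style HTTP API.

    The '/api/' prefix and the Bearer-token scheme are assumptions of this sketch.
    """
    url = host.rstrip("/") + "/api/" + path.lstrip("/")
    if params:
        url += "?" + urllib.parse.urlencode(params)
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", f"Bearer {token}")
    request.add_header("Accept", "application/json")
    return request


def fetch_json(request):
    # Sends the request; requires a reachable instance and a valid token.
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Separating request construction from sending keeps the first part testable offline; a command-line wrapper around the same two steps is what makes such an API usable from other scripting languages.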

In the following, the most important features of Kadi4Mat are explained, based on its graphical user interface. The focus of the features implemented so far is on the repository component, the topics of structured data management and data exchange in particular, as well as on the workflows, which are a central part of the ELN’s functionality. After logging in to Kadi4Mat, it is possible to create different types of resources. The most important type of resource are the so-called records, which can link arbitrary data with descriptive metadata and serve as basic components that can be used in workflows and future data publications. In principle, a record can be used for all kinds of data, including data from simulations or experiments, and it can be linked to other records of related data sets, e.g., to the descriptions of the software and hardware devices used. | |||

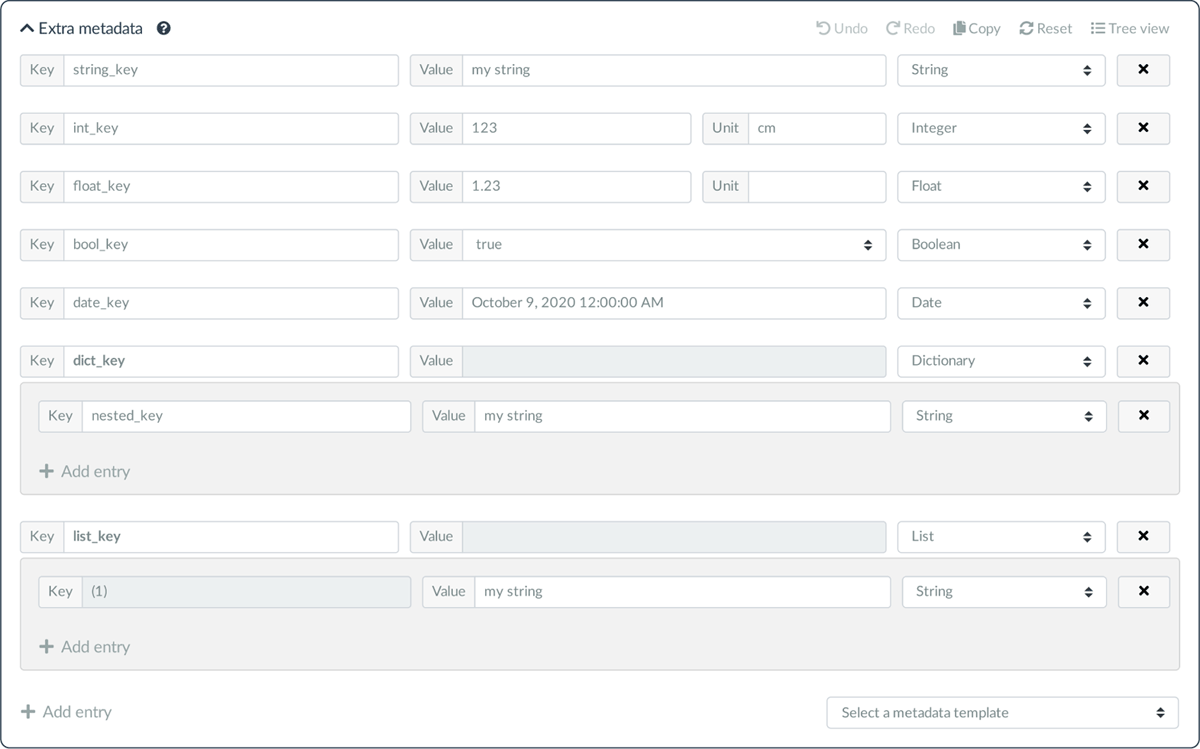

The metadata of a record includes both basic metadata, such as title or description, and domain-specific metadata, which can be specified generically in the form of key/value pairs. The latter can be defined using a special editor, as shown in Figure 4. With the help of such metadata, subject- and application-specific records can be described. This is particularly relevant in an interdisciplinary research field such as materials science, where using a fixed schema would be impracticable due to the heterogeneity of the data formats and the corresponding metadata. The value of each metadata entry can be of different types, such as simple character strings or numeric types like integers and floating point numbers. Numeric values can also be provided with an arbitrary unit. Furthermore, nested types, for example in the form of lists, can be used to represent metadata structures of almost any complexity. The input of such structures can be simplified by templates, which are specified in advance and can be combined as desired. While templates currently offer the same possibilities as the actual metadata, further validation functionality is planned, such as specifying a selection of valid values for certain metadata keys. Wherever possible, automatically recorded metadata, such as the creator of the record or the creation date, is also available in each record. The actual data of a record can be uploaded by users and is currently stored on a file system accessible by Kadi4Mat. Any number of files can be uploaded for each record. This is helpful, for example, when dealing with a series of several hundred images of a simulated microstructure that all share the same metadata.
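The generic key/value metadata described above can be represented as nested dictionaries. The following sketch assumes a hypothetical entry layout with `key`, `type`, `value`, and an optional `unit`, where `dict` and `list` entries hold lists of child entries; it validates types recursively and only allows units on numeric values. It illustrates the structure only and does not reproduce Kadi4Mat's actual format or its planned template validation.

```python
# Hypothetical entry layout: {"key", "type", "value", optional "unit"}.
SIMPLE_TYPES = {"str": (str,), "int": (int,), "float": (int, float), "bool": (bool,)}
NUMERIC_TYPES = {"int", "float"}


def validate_entry(entry, in_list=False):
    """Return error messages for one (possibly nested) generic metadata entry."""
    errors = []
    key = entry.get("key")
    label = key if key else "<list item>"
    if not key and not in_list:
        errors.append("entry without key")
    kind, value = entry.get("type"), entry.get("value")
    if kind in ("dict", "list"):
        if not isinstance(value, list):
            return errors + [f"{label}: nested value must be a list of entries"]
        for child in value:
            # Items of a list need no key; items of a dict do.
            errors.extend(validate_entry(child, in_list=(kind == "list")))
    elif kind in SIMPLE_TYPES:
        # bool is a subclass of int in Python, so reject it for numeric types.
        is_bool = isinstance(value, bool) and kind in NUMERIC_TYPES
        if is_bool or not isinstance(value, SIMPLE_TYPES[kind]):
            errors.append(f"{label}: value {value!r} is not of type {kind}")
    else:
        errors.append(f"{label}: unknown type {kind!r}")
    if "unit" in entry and kind not in NUMERIC_TYPES:
        errors.append(f"{label}: units are only allowed for numeric values")
    return errors


sample = {"key": "sample", "type": "dict", "value": [
    {"key": "temperature", "type": "float", "value": 293.15, "unit": "K"},
    {"key": "phases", "type": "list", "value": [
        {"type": "str", "value": "alpha"},
        {"type": "str", "value": "beta"},
    ]},
]}
```

`validate_entry(sample)` returns an empty list, while an entry such as `{"key": "grains", "type": "int", "value": "many"}` is reported as having the wrong type. A template could be modeled as such an entry tree with empty values.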

[[File:Fig4 Brandt DataSciJourn21 20-1.png|900px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="900px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 4.''' Screenshot of the generic metadata editor, showing the different types of metadata entries currently possible. The last two examples of type dictionary and list contain nested metadata entries. In the upper right corner, a menu is displayed that allows performing various actions, one of which switches to a tree-based overview of the metadata. The ability to select metadata templates is shown in the lower right corner.</blockquote>

|-

|}

|}

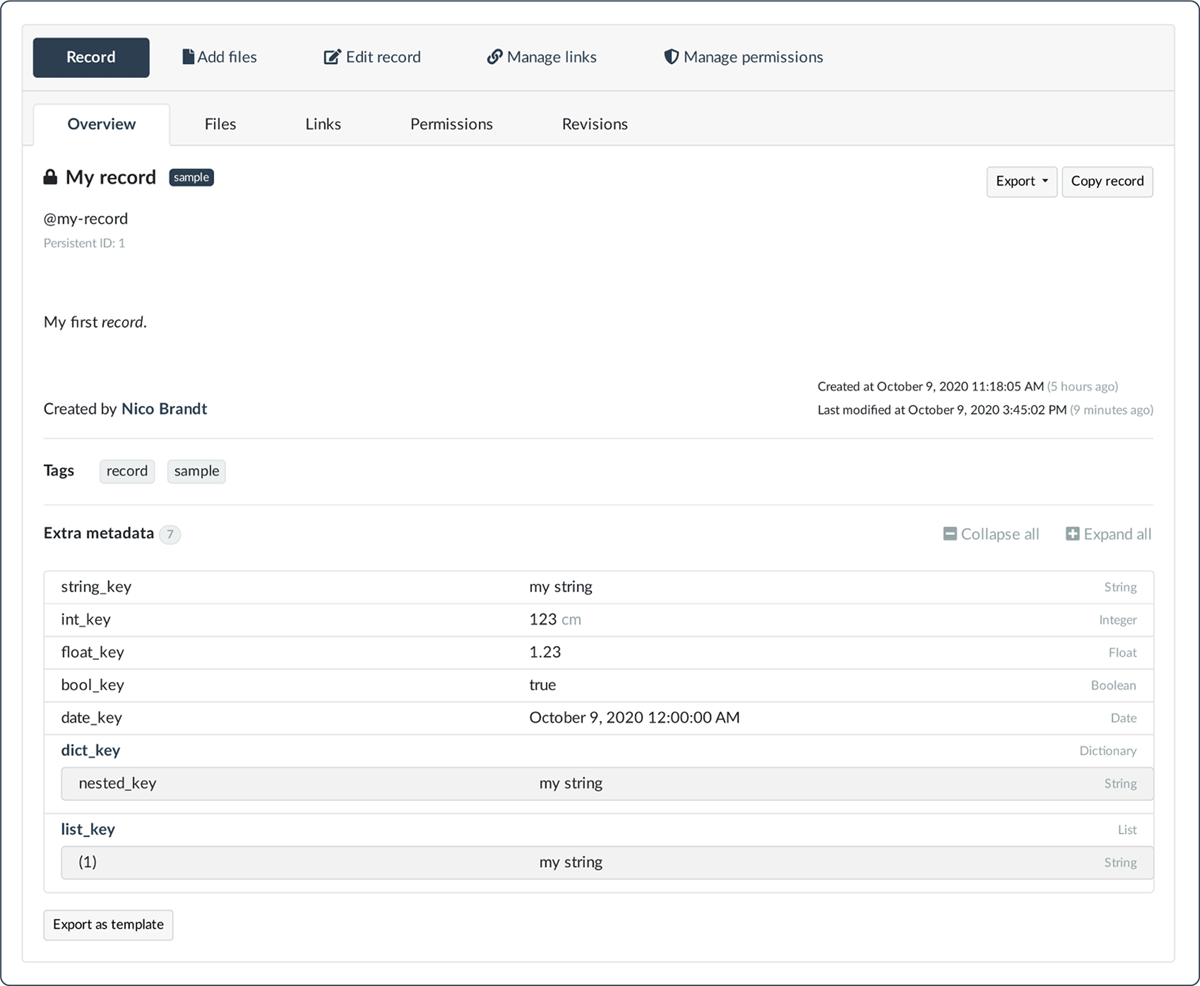

The created record can be viewed on an overview page that displays all metadata and linked files. Some common file formats include a preview that is directly integrated into the web browser, such as image files, PDFs, archives, or textual data. Furthermore, the access rights of the record are displayed on its overview page. Currently, two levels of visibility can be set when creating a record: public and private visibility. While public records can be viewed by every logged-in user—i.e., read rights are granted implicitly to each user—private records can initially only be viewed by their creator. Only the creator of a record can perform further actions, such as editing the metadata or deleting the entire record. Figure 5 shows the overview page of a record, including its metadata and the menu to perform the previously mentioned actions. | |||
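The default access rules stated above can be written as two small predicates. This is a hypothetical sketch: the class and function names are illustrative, anonymous visitors are simply denied, and additional roles granted to other users are not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RecordAccess:
    creator: str
    visibility: str  # "public" or "private"


def can_read(record: RecordAccess, user: Optional[str]) -> bool:
    # Public records: read rights are implicit for every logged-in user.
    # Private records: initially readable only by the creator.
    if user is None:  # not logged in
        return False
    return record.visibility == "public" or user == record.creator


def can_modify(record: RecordAccess, user: Optional[str]) -> bool:
    # Editing the metadata or deleting the record is reserved for the creator.
    return user is not None and user == record.creator
```

Keeping read and modify checks separate mirrors the distinction between implicit read rights and the actions reserved for the creator.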

[[File:Fig5 Brandt DataSciJourn21 20-1.png|900px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="900px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 5.''' Screenshot of a record overview page. The basic metadata is shown, followed by the generic metadata entries (shown as extra metadata). The menu on the top allows various actions to be performed on the current record. The tabs below the menu are used to switch to other views that display the files and other resources associated with the current record, as well as access permissions and a history of metadata revisions.</blockquote>

|-

|}

|}

In order to grant different access rights to other users, even within private records, different roles can be defined for any user in a separate view. Currently, the roles are static, which means that they can be selected from a predefined list and are each linked to the corresponding fine-grained permissions. These fine-grained permissions lay the groundwork for custom roles, or for linking certain actions to different user attributes. In addition to roles for individual users, roles can also be defined for groups. Groups are simple groupings of several users which, similar to records, can be created and managed by the users themselves. The same roles that can be defined for individual users can be assigned to groups as well, and each member of a group is automatically granted the corresponding access rights.
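Static roles that bundle fine-grained permissions, combined with group roles that apply to every member, can be sketched as a simple set union. The role names and permission strings below are hypothetical and not taken from Kadi4Mat.

```python
# Hypothetical static roles, each bundling fine-grained permissions.
ROLES = {
    "member": {"read"},
    "editor": {"read", "update"},
    "admin": {"read", "update", "delete", "permissions"},
}


def effective_permissions(user, user_roles, group_roles, memberships):
    """Union of permissions from the user's own role and from their groups' roles.

    user_roles:  {user: role} for one resource
    group_roles: {group: role} for the same resource
    memberships: {group: set of users}
    """
    permissions = set(ROLES.get(user_roles.get(user), set()))
    for group, role in group_roles.items():
        if user in memberships.get(group, set()):
            permissions |= ROLES[role]  # every member inherits the group's role
    return permissions
```

Checking an action then reduces to a membership test such as `"update" in effective_permissions(...)`, which is also what would allow custom roles to be defined later as new permission sets.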

Finally, the overview page of a record also shows the resources linked to it, in particular the so-called collections. Collections represent simple, logical groupings of multiple records and can thus contribute to a better organization of resources. In terms of an ontology, collections can be regarded as classes, while records inside a collection represent concrete instances of such a class. Like records and groups, collections can be created and managed by users. Records can also be linked to other records. Each record link represents a separate resource, which in turn can contain certain metadata. The ability to specify generic metadata and such resource links already enables a basic ontology-like structure. This structure can be further improved in the future, e.g., by using different types of links with varying semantics and by allowing collections to be nested.
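The resulting structure of collections and record links can be sketched with a few data classes. The class names, the free-text link `name`, and the helper functions are hypothetical; they only illustrate that links are resources of their own carrying metadata, while collections group records.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class RecordLink:
    """A link between two records; it is a resource of its own with metadata."""
    source: str
    target: str
    name: str  # free-text label; links do not yet carry typed semantics
    metadata: Dict[str, str] = field(default_factory=dict)


@dataclass
class Collection:
    """A logical grouping of records, loosely a 'class' whose records are instances."""
    identifier: str
    records: List[str] = field(default_factory=list)


def linked_targets(links, source, name=None):
    """Identifiers of records linked from `source`, optionally filtered by link name."""
    return [link.target for link in links
            if link.source == source and (name is None or link.name == name)]


def collections_of(collections, record_id):
    """Identifiers of all collections that contain the given record."""
    return [c.identifier for c in collections if record_id in c.records]
```

Typed links, as mentioned for the future, could be modeled by replacing the free-text `name` with a controlled vocabulary.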

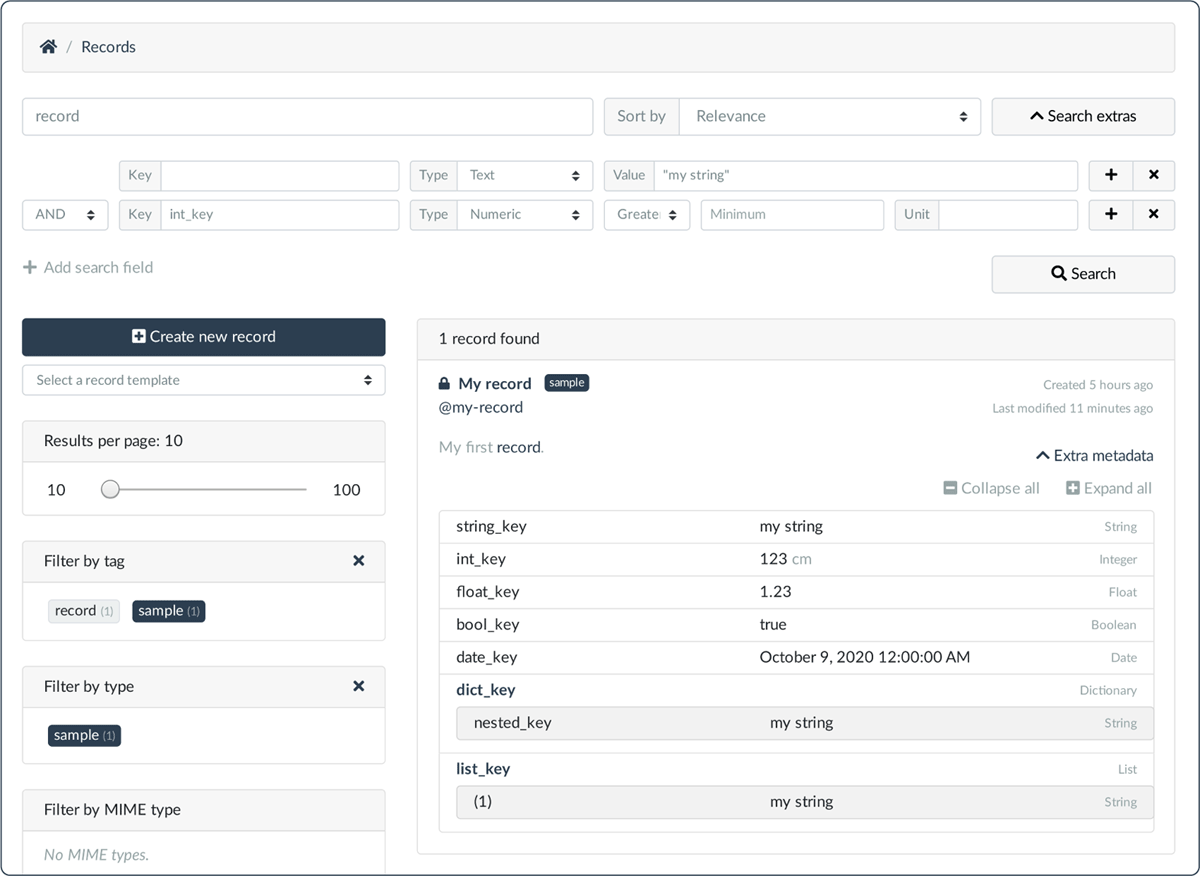

To be able to find different resources efficiently, especially records, a search function is included in Kadi4Mat. This allows searching in the basic metadata of resources and in the generic metadata of records via keywords or full text search. The values of nested generic metadata entries are flattened before they are indexed in ElasticSearch. This way, a common search mapping can be defined for all kinds of generic metadata. The search results can be sorted and filtered in various ways, for example, by using different user-defined tags or data formats, in the case of records. Figure 6 shows an example search of records, with the corresponding results. | |||
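Flattening nested generic metadata before indexing can be sketched as a recursive walk that produces dotted keys. The entry layout (`key`, `type`, `value`), the use of list indices as keys, and the grouping of integers and floats into a `numeric` search type are assumptions for illustration; the actual Elasticsearch mapping used by Kadi4Mat is not described here.

```python
# Hypothetical grouping of searchable types, e.g., integers and floats as "numeric".
SEARCH_TYPES = {"int": "numeric", "float": "numeric"}


def flatten_extras(entries, prefix=""):
    """Flatten nested generic metadata into (dotted key, search type, value) tuples.

    List items without a key are addressed by their index, so one common
    search mapping can cover entries of any nesting depth. Units are ignored.
    """
    flat = []
    for index, entry in enumerate(entries):
        key = entry.get("key", str(index))
        path = f"{prefix}.{key}" if prefix else key
        if entry["type"] in ("dict", "list"):
            flat.extend(flatten_extras(entry["value"], path))
        else:
            kind = SEARCH_TYPES.get(entry["type"], entry["type"])
            flat.append((path, kind, entry["value"]))
    return flat
```

For example, a `dict` entry `sample` containing a float `temperature` and a list `phases` of two strings flattens to `("sample.temperature", "numeric", ...)`, `("sample.phases.0", "str", ...)`, and `("sample.phases.1", "str", ...)`, each of which fits the same key/type/value search mapping.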

[[File:Fig6 Brandt DataSciJourn21 20-1.png|900px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="900px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 6.''' Screenshot of the search functionality of records with the corresponding search results. In addition to providing a simple query for searching the basic metadata of a record, the generic metadata can also be searched by specifying desired keys, types, or values. The searchable types are derived from the actual types of the generic metadata entries, e.g., integers and floating point numbers are grouped together as numeric type. Various other options are offered for filtering and sorting the search results.</blockquote>

|-

|}

|}

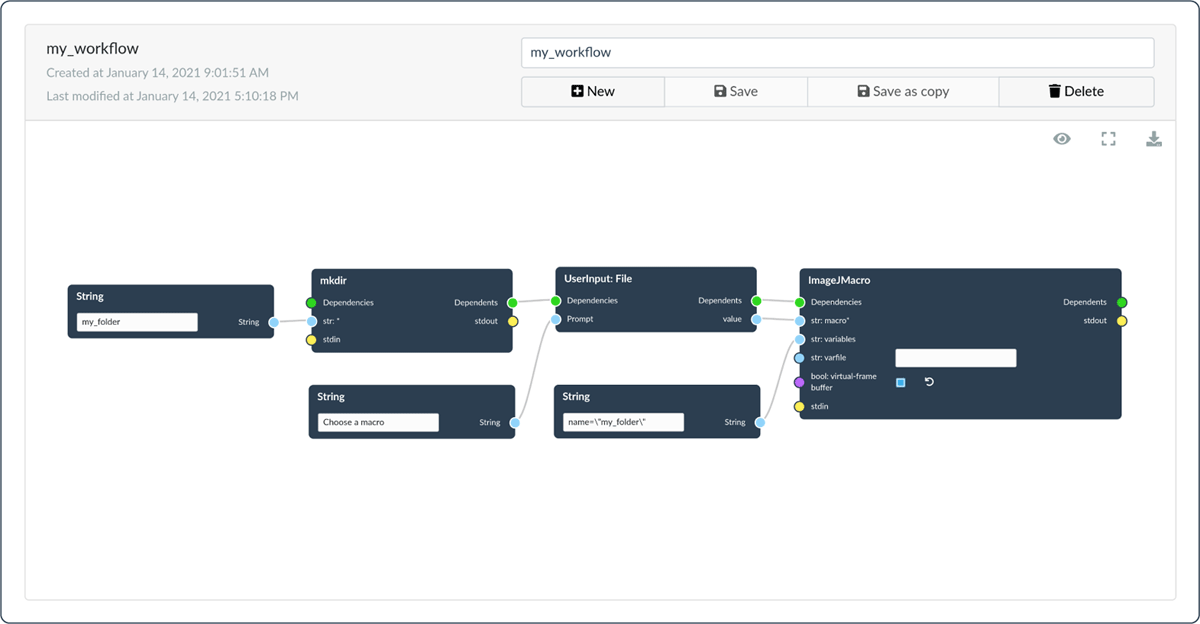

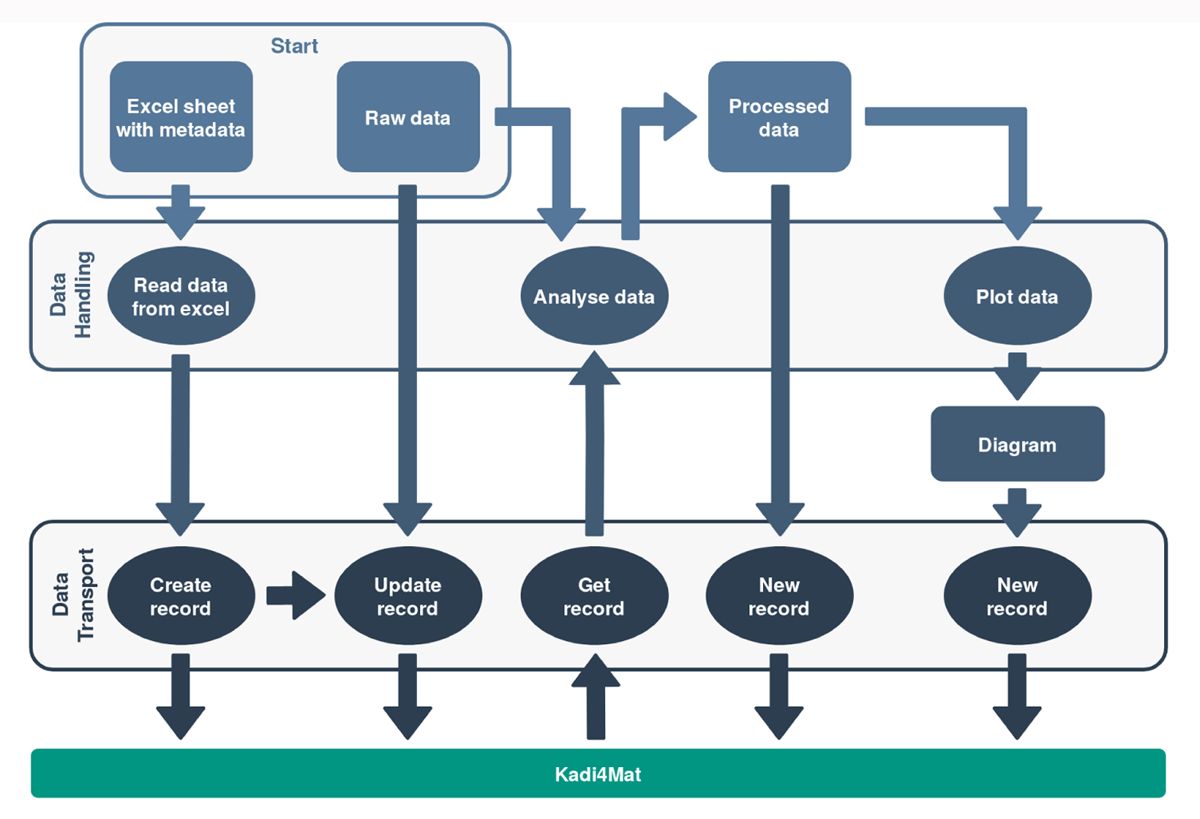

While the execution of workflows, via the web interfaces, and the ability to add user-defined tools are still under development, it is possible to define a workflow using a graphical node editor, running in the web browser. Figure 7 shows a simple example workflow created with this editor. A selection of predefined nodes can be combined and parameterized, while the resulting workflow can be downloaded. A custom JSON-based format is currently used to store the representation of a workflow. This format contains all the information for the node editor to correctly display the workflow and to derive a functional workflow representation for execution. The downloaded workflow file can be executed directly on a local workstation by using the command line interface of the process manager. All tools to actually run such a workflow need to be installed beforehand. A selection of tools is provided for various tasks<ref name="ZschummeIAMNodes21">{{cite web |url=https://zenodo.org/record/4094719 |title=IAM-CMS/workflow-nodes (Version 0.1.0) |author=Zschumme, P. |work=Zenodo |date=16 October 2020 |doi=10.5281/zenodo.4094719}}</ref>, including connecting to a Kadi4Mat instance by using a suitable wrapper on top of the aforementioned API library. Several common use cases have already been implemented, including the task of extracting metadata from Excel spreadsheets, often used to replace an actual ELN system, and importing it into Kadi4Mat. An overview of such a workflow is shown in Figure 8. Developments are also underway for data science applications, especially in the field of machine learning. The combination of the ELN and the repository fits particularly well with the requirements of such applications, which typically require lots of high-quality input data to function well. | |||
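Deriving an executable order from a stored workflow representation amounts to a topological sort over node dependencies. The JSON document below is made up in the spirit of Figure 7; it is not Kadi4Mat's actual workflow format, and treating every node input as a `depends_on` edge is a simplification of this sketch.

```python
import json
from graphlib import TopologicalSorter  # Python 3.9+

# A made-up workflow document; Kadi4Mat's actual JSON format is not shown here.
WORKFLOW_JSON = """
{
  "nodes": [
    {"id": "in1", "type": "String", "value": "results"},
    {"id": "in2", "type": "UserInput: File"},
    {"id": "mkdir", "type": "tool", "command": "mkdir", "depends_on": ["in1"]},
    {"id": "macro", "type": "tool", "command": "ImageJMacro", "depends_on": ["in2", "mkdir"]}
  ]
}
"""


def execution_order(document):
    """Derive a valid order of execution from the nodes' dependencies."""
    nodes = json.loads(document)["nodes"]
    graph = {node["id"]: set(node.get("depends_on", [])) for node in nodes}
    return list(TopologicalSorter(graph).static_order())
```

Any order returned places `mkdir` after `in1` and `macro` last; independent nodes such as the two inputs may appear in either order, which is what would allow them to run in parallel. A cyclic definition raises `graphlib.CycleError` instead of producing an order.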

[[File:Fig7 Brandt DataSciJourn21 20-1.png|900px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="900px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 7.''' Screenshot of a workflow created with the web-based node editor. Several String input nodes are shown, as well as a special node that prompts the user to enter a file (UserInput: File). The two tools ''mkdir'' and ''ImageJMacro'' are used to create a new directory and to execute an ImageJ<ref name="SchindelinTheImage15">{{cite journal |title=The ImageJ ecosystem: An open platform for biomedical image analysis |journal=Molecular Reproduction & Development |author=Schindelin, J.; Rueden, C.T.; Hiner, M.C. et al. |volume=82 |issue=7–8 |pages=518–29 |year=2015 |doi=10.1002/mrd.22489}}</ref> macro file, respectively. The latter uses the input file the user was asked for. Except for the input nodes, all nodes are connected via an explicit dependency.</blockquote>

|-

|}

|}

[[File:Fig8 Brandt DataSciJourn21 20-1.png|800px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="800px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 8.''' Overview of an exemplary workflow using Kadi4Mat. The starting point is raw data and corresponding metadata stored in an Excel spreadsheet. The tools used in this workflow are divided into tools for data handling and tools for data transport, the latter referring to the Kadi4Mat integration. In a first conversion step, the metadata are transformed into a format readable by the API of Kadi4Mat and linked to the raw data by creating a new record. The raw data is further analyzed using the metadata stored in Kadi4Mat. Finally, the result of the analysis is plotted and both data sets are uploaded to Kadi4Mat as records. All records can be linked to each other in a further step, either as part of the workflow or separately.</blockquote>

|-

|}

|}
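The first conversion step of the spreadsheet workflow, turning rows of metadata into generic metadata entries, can be sketched as follows. To stay within the Python standard library, the sketch assumes the spreadsheet has been exported to CSV with hypothetical `key`, `value`, and `unit` columns; reading `.xlsx` files directly would require an additional library. The entry layout (`key`, `type`, `value`, optional `unit`) is likewise an assumption, and uploading the result through the API is not shown.

```python
import csv
import io


def infer_entry(key, raw, unit=""):
    """Turn one spreadsheet row into a generic metadata entry with an inferred type."""
    for kind, cast in (("int", int), ("float", float)):
        try:
            value = cast(raw)
        except ValueError:
            continue
        entry = {"key": key, "type": kind, "value": value}
        if unit:
            entry["unit"] = unit  # units are only attached to numeric values
        return entry
    return {"key": key, "type": "str", "value": raw}


def rows_to_extras(csv_text):
    """Convert CSV text with key/value/unit columns into a list of entries."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [infer_entry(row["key"], row["value"].strip(), (row.get("unit") or "").strip())
            for row in reader]
```

Trying `int` before `float` keeps whole numbers such as counts as integers, while values like `25.0` become floats and non-numeric cells fall back to strings.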

==Conclusion==

The development and current functionality of the research data infrastructure Kadi4Mat have been presented. The objective of this infrastructure is to combine the features of an ELN and a repository in such a way that researchers are supported throughout the whole research process. The ongoing development aims to cover the heterogeneous use cases of materials science disciplines. For this purpose, flexible metadata schemas, workflows, and tools are especially important, as is the use of custom installations and instances. The basic functionality of the repository component is largely covered by the features already implemented and can be used via a graphical as well as a programmatic interface. This includes, above all, uploading, managing, and exchanging data, as well as the associated metadata. The latter can be defined with a flexible metadata editor to accommodate the needs of different users and workgroups. A search function enables efficient retrieval of the data. The essential infrastructure for workflows is implemented as a central part of the ELN component. Simple workflows can be defined with an initial version of the web-based node editor and executed locally using the provided tools and the process manager’s command-line interface. Both main components are being improved continuously.

Various other features that have not yet been mentioned as part of the concept are planned or already in the conception stage. These include the optional connection of several Kadi4Mat instances, more direct, low-level access to data, and the integration of an app store for the central administration of tools and plugins.

The development of Kadi4Mat largely follows a bottom-up approach. Instead of developing concepts in advance to cover as many use cases as possible, a basic technical infrastructure is established first. On this basis, further steps are evaluated in exchange with interested users and by implementing best-practice examples. Due to the heterogeneous nature of materials science, most features are kept very generic. The concrete structuring of the data storage, the metadata, and the workflows is largely left to the users. As a positive side effect, the research data infrastructure can be extended to other disciplines in the future.

==Acknowledgements==

This work is supported by the Federal Ministry of Education and Research (BMBF) in the projects FestBatt (project number 03XP0174E) and as part of the Excellence Strategy of the German Federal and State Governments, by the German Research Foundation (DFG) in the projects POLiS (project number 390874152) and SuLMaSS (project number 391128822) and by the Ministry of Science, Research and Art Baden-Württemberg in the project MoMaF – Science Data Center, with funds from the state digitization strategy digital@bw (project number 57). The authors are also grateful for the editorial support of Leon Geisen.

===Competing interests===

The authors have no competing interests to declare.

==References==

Latest revision as of 20:11, 24 February 2021

| Full article title | Kadi4Mat: A research data infrastructure for materials science |
|---|---|
| Journal | Data Science Journal |
| Author(s) | Brandt, Nico; Griem, Lars; Herrmann, Christoph; Schoof, Ephraim; Tosato, Giovanna; Zhao, Yinghan; Zschumme, Philipp; Selzer, Michael |
| Author affiliation(s) | Karlsruhe Institute of Technology, Karlsruhe University of Applied Sciences, Helmholtz Institute Ulm |
| Primary contact | Email: nico dot brandt at kit dot edu |
| Year published | 2021 |
| Volume and issue | 20(1) |
| Article # | 8 |
| DOI | 10.5334/dsj-2021-008 |
| ISSN | 1683-1470 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://datascience.codata.org/articles/10.5334/dsj-2021-008/ |
| Download | https://datascience.codata.org/articles/10.5334/dsj-2021-008/galley/1048/download/ (PDF) |

Abstract

The concepts and current developments of a research data infrastructure for materials science are presented, extending and combining the features of an electronic laboratory notebook (ELN) and a repository. The objective of this infrastructure is to incorporate the possibility of structured data storage and data exchange with documented and reproducible data analysis and visualization, which finally leads to the publication of the data. This way, researchers can be supported throughout the entire research process. The software is being developed as a web-based and desktop-based system, offering both a graphical user interface (GUI) and a programmatic interface. The focus of the development is on the integration of technologies and systems based on both established as well as new concepts. Due to the heterogeneous nature of materials science data, the current features are kept mostly generic, and the structuring of the data is largely left to the users. As a result, an extension of the research data infrastructure to other disciplines is possible in the future. The source code of the project is publicly available under a permissive Apache 2.0 license.

Keywords: research data management, electronic laboratory notebook, repository, open source, materials science

Introduction

In the engineering sciences, the handling of digital research data plays an increasingly important role in all fields of application.[1] This is especially due to the growing amount of data obtained from experiments and simulations.[2] The extraction of knowledge from these data is referred to as a data-driven, fourth paradigm of science, filed under the keyword "data science."[3] This is particularly true in materials science, as the research and understanding of new materials are becoming more and more complex.[4] Without suitable analysis methods, the ever-growing amount of data will no longer be manageable. In order to perform appropriate data analyses smoothly, the structured storage of research data and associated metadata is an important aspect. Specifically, uniform research data management is needed, which is made possible by appropriate infrastructures such as research data repositories. In addition to uniform data storage, such systems can help to overcome inter-institutional hurdles in data exchange, compare theoretical and experimental data, and provide reproducible workflows for data analysis. Furthermore, linking the data with persistent identifiers enables other researchers to directly reference them in their work.

Repositories for the storage and internal or public exchange of research data are becoming increasingly widespread. In particular, the publication of such data, either on its own or as a supplement to a text publication, is increasingly encouraged or sometimes even required.[5] To find a suitable repository, services such as re3data.org[6] or FAIRsharing[7] are available. These services also make it possible to find subject-specific repositories for materials science data. Two well-known examples are the Materials Project[8] and the NOMAD Repository.[9] Indexed repositories are usually hosted centrally or institutionally and are mostly used for the publication of data. However, some of the underlying systems can also be installed by the user, e.g., for internal use within individual research groups. Such an installation also allows full control over stored data as well as internal data exchange, if this function is not already part of the repository. In this respect, open-source systems are particularly important, as they provide independence from vendors and open up the possibility of modifying the existing functionality or adding features, sometimes via built-in plugin systems. Examples of such systems are CKAN[10], Dataverse[11], DSpace[12], and Invenio[13], the latter being the basis of Zenodo.[14] The listed repositories are all generic and represent only a selection of the existing open-source systems.[15]

In addition to repositories, a second type of system increasingly used in experiment-oriented research areas is the electronic laboratory notebook (ELN).[16] Nowadays, the functionality of ELNs goes far beyond the simple replacement of paper-based laboratory notebooks and can also include aspects such as data analysis, as seen, for example, in Galaxy[17] or Jupyter Notebook.[18] Both systems focus primarily on providing accessible and reproducible computational research data. However, the boundary between unstructured and structured data is increasingly blurred, the latter traditionally being found only in laboratory information management systems (LIMS).[19][20][21] Most existing ELNs are domain-specific and limited to research disciplines such as biology or chemistry.[21] To the authors' knowledge, no system specifically tailored to materials science exists. Open-source ELNs are also available, such as eLabFTW[22], sciNote[23], or Chemotion.[24] Compared to repositories, however, the selection of ELNs is smaller. Furthermore, only the first two of these systems are generic.

Thus, generic research data systems and software are available for both ELNs and repositories, which could, in principle, also be used in materials science. The listed open-source solutions are of particular relevance, as they can be adapted to different needs and are generally suitable for use in a custom installation within single research groups. However, both aspects can be a considerable hurdle, especially for smaller groups. Due to a lack of resources, structured research data management and making data available for subsequent use are therefore particularly difficult for such groups.[25] What is still missing is a system that can be deployed and used both centrally and decentrally, as well as internally and publicly, without major obstacles. The system should support researchers throughout the entire research process, starting with the generation and extraction of raw data, through the structured storage, exchange, and analysis of the data, up to the final publication of the corresponding results. In this way, the features of the ELN and the repository are combined, creating a virtual research environment[26] that accelerates the generation of innovations by facilitating collaboration among researchers. In an interdisciplinary field like materials science, there is a special need to model the very heterogeneous workflows of researchers.[4]

For this purpose, the research data infrastructure Kadi4Mat (Karlsruhe Data Infrastructure for Materials Sciences) is being developed at the Institute for Applied Materials (IAM-CMS) of the Karlsruhe Institute of Technology (KIT). The aim of the software is to combine structured data storage with documented and reproducible workflows for data analysis and visualization tasks, incorporating new concepts alongside established technologies and existing solutions. The development of the software takes the FAIR Guiding Principles[27] for scientific data management into account. Instances of the data infrastructure have already been deployed and demonstrate how structured data storage and data exchange are made possible.[28] Furthermore, the source code of the project is publicly available under a permissive Apache 2.0 license.[29]

Concepts

Kadi4Mat is logically divided into two components, an ELN and a repository, which have access to various tools and technical infrastructures. The components can be used by web- and desktop-based applications via uniform interfaces. Both a graphical and a programmatic interface are provided, using machine-readable formats and various exchange protocols. Figure 1 presents a conceptual overview of the infrastructure of Kadi4Mat.


Electronic laboratory notebook

Workflows are of particular importance in the ELN component. A "workflow" is a generic concept that describes a well-defined sequence of sequential or parallel steps, which are processed as automatically as possible. This can include the execution of an analysis tool or the control of, and data retrieval from, an experimental device. Since such steps are highly user- and application-specific, they must be implemented as flexibly as possible to accommodate this heterogeneity. The types of tools shown in the second layer of Figure 1 are used within the workflows to implement the actual functionality of the various steps. These can be roughly divided into analysis, visualization, transformation, and transportation tasks. To keep the application of these tools as generic as possible, a combination of provided and user-defined tools is used. From a user's perspective, it must be easy to provide such tools, while each tool must be executed in a secure and functional environment. This is especially true for existing tools, e.g., a simple MATLAB[30] script, which require certain dependencies to be executed and must be equipped with a suitable interface to be used within a workflow. Depending on their functionality, the tools must in turn access various technical infrastructures. In addition to the repository and computing infrastructure, direct access to devices is also important for more complex data analyses. The automation of an experimenter's typical workflow is only fully possible if the data and metadata created by devices can be captured. However, such an integration is not trivial due to a heterogeneous device landscape, as well as proprietary data formats and interfaces.[31][32] In Kadi4Mat, it should also be possible to use individual tools separately, i.e., outside a workflow, where appropriate. For example, when using the web-based interface, a visualization tool for a custom data format may be used to generate a preview of a datum that can be displayed directly in a web browser.
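To illustrate what "equipping an existing tool with a suitable interface" can mean in practice, the following is a minimal sketch, assuming a hypothetical `Tool` class that wraps a command-line program, declares its parameters with types, validates them, and runs the program in a separate process. The class name, fields, and the `--name value` argument convention are illustrative assumptions, not Kadi4Mat's actual tool interface.

```python
import subprocess
from dataclasses import dataclass, field


@dataclass
class Tool:
    """Hypothetical wrapper that gives an existing command-line tool a uniform interface."""

    name: str
    command: list[str]  # base command, e.g. an interpreter followed by a script
    parameters: dict[str, type] = field(default_factory=dict)  # declared names and types

    def build_args(self, **kwargs) -> list[str]:
        # Reject parameters the tool does not declare.
        unknown = set(kwargs) - set(self.parameters)
        if unknown:
            raise ValueError(f"unknown parameters for {self.name}: {sorted(unknown)}")
        args = list(self.command)
        for key, expected in self.parameters.items():
            if key not in kwargs:
                raise ValueError(f"missing parameter for {self.name}: {key}")
            value = kwargs[key]
            if not isinstance(value, expected):
                raise TypeError(f"{key} must be {expected.__name__}")
            # Assumed convention: every parameter is passed as "--name value".
            args += [f"--{key}", str(value)]
        return args

    def run(self, **kwargs) -> str:
        # Execute the tool in its own process and return its standard output;
        # check=True raises CalledProcessError if the tool exits with an error.
        result = subprocess.run(
            self.build_args(**kwargs), capture_output=True, text=True, check=True
        )
        return result.stdout
```

Because the same object can be called directly or by a workflow engine, this shape also covers the stand-alone use of individual tools mentioned above. A real deployment would additionally need an isolated execution environment for the tool's dependencies, which this sketch does not attempt.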

Figure 2 shows the current concept for the integration of workflows in Kadi4Mat. The different steps of a workflow can be defined with a graphical node editor. Either a web-based or a desktop-based version of such an editor can be used, the latter running as an ordinary application on a local workstation. With the help of such an editor, the different steps or tools to be executed are defined, linked, and, most importantly, parameterized. The execution of a workflow can be started via an external component called the "Process Manager." This component in turn manages several process engines, which take care of executing the workflows. The process engines may differ in their implementation and functionality. A simple process engine, for example, could be limited to executing the different tasks sequentially, while another could execute independent tasks in parallel. All engines process the required steps based on the information stored in the workflow. With appropriate transport tools, the data and metadata required for each step, as well as the resulting output, can be imported from or exported to Kadi4Mat using the existing interfaces of the research data infrastructure. Similar tools make it possible to use other external data sources and, with them, to handle large amounts of data via suitable exchange protocols. Locally stored data can also be used when a workflow runs on a local workstation.
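The difference between a sequential and a parallel process engine can be sketched as follows. This is a hedged illustration, not the Process Manager's actual implementation: a workflow is assumed to be a directed acyclic graph stored in a hypothetical `Workflow` mapping from step name to a function and the names of the steps it depends on, and both engines use the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to respect those dependencies.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical workflow representation: step name -> (function, names of its dependencies).
# Each function receives the outputs of its dependencies as keyword arguments.
Workflow = dict[str, tuple[Callable[..., object], list[str]]]


def run_sequential(workflow: Workflow) -> dict[str, object]:
    """Simple engine: execute one step at a time in a valid dependency order."""
    graph = {name: deps for name, (_, deps) in workflow.items()}
    results: dict[str, object] = {}
    for name in TopologicalSorter(graph).static_order():
        func, deps = workflow[name]
        results[name] = func(**{d: results[d] for d in deps})
    return results


def run_parallel(workflow: Workflow, max_workers: int = 4) -> dict[str, object]:
    """Parallel engine: run every step whose dependencies are satisfied concurrently."""
    graph = {name: deps for name, (_, deps) in workflow.items()}
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the workflow contains a cycle
    results: dict[str, object] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        pending = {}
        while sorter.is_active():
            # Submit all steps that have become ready; their inputs are already in `results`.
            for name in sorter.get_ready():
                func, deps = workflow[name]
                pending[pool.submit(func, **{d: results[d] for d in deps})] = name
            # Wait until at least one running step finishes, then release its dependents.
            done, _ = wait(pending, return_when=FIRST_COMPLETED)
            for future in done:
                name = pending.pop(future)
                results[name] = future.result()
                sorter.done(name)
    return results
```

For a workflow in which `total` and `count` both depend only on `load`, the parallel engine may execute those two steps at the same time, whereas the sequential engine runs them one after the other; both produce the same results. Threads are chosen here for simplicity; CPU-heavy steps would more likely run in separate processes or on external computing infrastructure.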


Since the reproducibility of the performed steps is a key objective of the workflows, all meaningful information and metadata can be logged along the way. The logging needs to be flexible in order to accommodate different individual or organizational needs and is therefore part of the workflow itself. Workflows can also be shared with other users, for example, via Kadi4Mat. Manual steps may require interaction during the execution of a workflow, in which case the system must prompt the user. In summary, the ELN component thus points in a different direction than classic ELNs, with the emphasis on automating the steps performed. This aspect in particular is similar to systems such as Galaxy[17], which focuses on computational biology, or Taverna[33], a dedicated workflow management system. Nevertheless, some typical features of classic ELNs, such as the inclusion of handwritten notes, are also considered in the ELN component.
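Configurable logging as part of the workflow itself could look roughly like the sketch below. It assumes a hypothetical `logged_step` wrapper whose `fields` argument selects what is recorded for each step (its inputs, a SHA-256 hash of its output, and/or its duration), so that the level of detail can be chosen per workflow. The field names and record layout are illustrative assumptions, not Kadi4Mat's log format.

```python
import hashlib
import json
import time
from typing import Any, Callable


def logged_step(
    name: str, func: Callable[..., Any], log: list[dict], fields: set[str]
) -> Callable[..., Any]:
    """Wrap a workflow step so that each call appends a provenance record to `log`.

    `fields` selects what is recorded ("inputs", "output_sha256", "duration_s");
    this selection mechanism is a hypothetical design for configurable logging.
    """

    def wrapper(**kwargs: Any) -> Any:
        start = time.perf_counter()
        output = func(**kwargs)
        record: dict[str, Any] = {"step": name}
        if "inputs" in fields:
            record["inputs"] = dict(kwargs)
        if "output_sha256" in fields:
            # Hash a canonical JSON form of the output so reruns can be compared.
            encoded = json.dumps(output, sort_keys=True, default=str).encode("utf-8")
            record["output_sha256"] = hashlib.sha256(encoded).hexdigest()
        if "duration_s" in fields:
            record["duration_s"] = time.perf_counter() - start
        log.append(record)
        return output

    return wrapper
```

For example, wrapping a scaling step with `fields={"inputs", "output_sha256"}` and calling it with `values=[1, 2], factor=3` returns `[3, 6]` and appends one record containing the step name, its inputs, and a 64-character output hash. Comparing such hashes between two runs is one simple way to check whether a shared workflow reproduced the same result.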

Repository

In the repository component, data management, especially structured data storage and exchange, is regarded as the central element. An important aspect is the enrichment of data with descriptive metadata, which is required for its description, analysis, or search. Many repositories, especially those focused on publishing research data, use the metadata schema provided by DataCite[34] or are heavily based on it. This schema is widely supported and enables the direct publication of data via the corresponding DataCite service. At the same time, its descriptive power is limited for use cases that go beyond data publication. Comparatively few subject-specific schemas are available for engineering and materials science; two examples are EngMeta[35] and NOMAD Meta Info.[36] The first schema was created a priori and aims to provide a generic description of computer-aided engineering data, while the second was created a posteriori, using existing computing inputs and outputs from the database of the NOMAD repository.
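As a concrete example of what a publication-oriented schema requires, the DataCite Metadata Schema (version 4.x) defines six mandatory properties: Identifier, Creator, Title, Publisher, PublicationYear, and ResourceType. The sketch below checks a metadata record for them before a publication is attempted. The flat dictionary and its key names are simplified assumptions, not the official DataCite XML or JSON serialization.

```python
# Mandatory properties of the DataCite Metadata Schema (4.x), written with
# simplified, hypothetical key names rather than the official serialization.
DATACITE_MANDATORY = (
    "identifier",
    "creators",
    "titles",
    "publisher",
    "publicationYear",
    "resourceType",
)


def missing_datacite_properties(record: dict) -> list[str]:
    """Return the mandatory properties that are absent or empty in `record`."""
    return [key for key in DATACITE_MANDATORY if record.get(key) in (None, "", [], {})]
```

The check says nothing about domain-specific content such as processing conditions or measurement parameters; these have no dedicated mandatory property, which reflects the limited descriptive power discussed above for use cases beyond publication.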

The second approach is pursued in a similar way in Kadi4Mat. Instead of a fixed metadata schema, the concrete structure is largely determined by the users themselves and is thus oriented towards their specific needs. To help establish common metadata vocabularies, a mechanism for creating templates is provided. Templates can impose restrictions and validations on certain metadata. They are user-defined and can be shared within workgroups or projects, facilitating the establishment of metadata standards. Nevertheless, individual generic metadata fields, such as the title or description of a data set, can be static. For different use cases, such as data analysis, publishing, or interoperability with other systems, additional conversions must be provided. These are necessary not only because of differing data formats, but also to map the vocabularies of different schemas onto each other. Such converted metadata can either represent a subset of existing schemas or require additional fields, such as a license for the re-use of published data. In the long run, the objective in Kadi4Mat is to offer well-defined structures and semantics by making use of ontologies. In the field of materials science, there are ongoing developments in this respect, such as the European Materials Modelling Ontology.[37] However, a bottom-up procedure is considered a more flexible solution, with the objective of generating an ontology from existing metadata and the relationships between different data sets. Such a two-pronged approach aims to be functional in the short term while remaining extensible in the long term[38], although it depends heavily on how users manage their data and metadata with the options available.
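The idea of user-defined templates that restrict and validate otherwise free-form metadata can be sketched as follows. The `FieldRule` class, its constraint options (type, required, numeric bounds, allowed values), and the `validate` function are hypothetical illustrations of the concept, not Kadi4Mat's template format.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class FieldRule:
    """Hypothetical template constraint for one user-defined metadata key."""

    kind: type
    required: bool = False
    minimum: Optional[float] = None
    maximum: Optional[float] = None
    options: Optional[tuple] = None  # allowed values, if the key is restricted


def validate(metadata: dict[str, Any], template: dict[str, FieldRule]) -> list[str]:
    """Check free-form metadata against a shared template.

    Keys not mentioned in the template remain allowed, so users can still add
    their own metadata beyond what the template prescribes.
    """
    errors = []
    for key, rule in template.items():
        if key not in metadata:
            if rule.required:
                errors.append(f"{key}: required")
            continue
        value = metadata[key]
        if not isinstance(value, rule.kind):
            errors.append(f"{key}: expected {rule.kind.__name__}")
            continue
        if rule.minimum is not None and value < rule.minimum:
            errors.append(f"{key}: below {rule.minimum}")
        if rule.maximum is not None and value > rule.maximum:
            errors.append(f"{key}: above {rule.maximum}")
        if rule.options is not None and value not in rule.options:
            errors.append(f"{key}: not one of {rule.options}")
    return errors
```

For instance, a group might share a template that requires a non-negative `temperature_K` and restricts `atmosphere` to `("air", "argon", "vacuum")`; a record with `atmosphere="helium"` and no temperature would then yield `["temperature_K: required", "atmosphere: not one of ('air', 'argon', 'vacuum')"]`. Keeping unknown keys valid is a deliberate choice here, so that templates guide rather than replace the user-determined structure.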

In addition to the metadata, the actual data must be managed as well. Here, one can distinguish between data managed directly by Kadi4Mat and linked data. In the simplest form, the former resides on a file system accessible by the repository, which gives Kadi4Mat full control over the data. However, this requires a copy of each datum to be made available in Kadi4Mat, which makes it less suitable for very large amounts of data. The same applies to data analyses that are to be carried out on external computing infrastructures and must access the data for this purpose. Linked data, on the other hand, can be located on external storage, e.g., high-performance computing infrastructures. This also makes it possible to integrate existing infrastructures and repositories. In these cases, Kadi4Mat can simply offer a view on top of such infrastructures or a more direct integration, depending on the concrete system in question.
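The trade-off between managed and linked data can be made concrete with a small sketch. It assumes two hypothetical reference types: `ManagedFile`, created by copying a file into storage the repository controls (here content-addressed by its SHA-256 hash, an illustrative design choice), and `LinkedFile`, which only keeps a URI pointing to external storage. Neither type reflects Kadi4Mat's actual data model.

```python
import hashlib
import shutil
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class ManagedFile:
    """A datum copied into storage controlled by the repository."""

    path: Path
    sha256: str


@dataclass(frozen=True)
class LinkedFile:
    """A datum that stays on external storage; only a reference is kept."""

    uri: str


def add_managed(source: Path, storage_dir: Path) -> ManagedFile:
    # Hash the file in chunks so large files need not fit into memory.
    digest = hashlib.sha256()
    with source.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    storage_dir.mkdir(parents=True, exist_ok=True)
    target = storage_dir / digest.hexdigest()
    # Copying gives full control over the datum but duplicates it, which is
    # why this option is less suitable for very large amounts of data.
    shutil.copyfile(source, target)
    return ManagedFile(target, digest.hexdigest())


def add_linked(uri: str) -> LinkedFile:
    # Nothing is copied; the external system remains responsible for the data.
    return LinkedFile(uri)
```

A linked reference such as `add_linked("https://hpc.example.org/data/run42.h5")` (a placeholder URI) costs nothing to create, but the repository can then only offer a view on the data, whereas a managed copy can be verified against its stored hash at any time.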

A further point to be addressed within the repository is the publication of data and metadata, including templates and workflows, which require persistent identifiers to be referenceable. Many existing repositories and systems already specialize in exactly this use case and offer infrastructures for the long-term archiving of large amounts of data. Thus, integrating suitable external systems is to be considered for this task in particular. From Kadi4Mat's point of view, only certain basic requirements have to be ensured to enable the publishing of data. These include the assignment of a unique identifier within the system, the provision of the metadata and licenses necessary for a publication, and a basic form of user-guided quality control. The repository component thus also goes in a different direction than classic repositories. Within a typical scientific workflow, it focuses primarily on all steps that take place between the initial data acquisition and the publishing of data. The component is therefore best described as a community repository that manages warm data (i.e., unpublished data that needs further analysis) and enables data exchange within specific communities, e.g., within a research group or project.
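The three basic requirements named above (a unique internal identifier, metadata and a license, and user-guided quality control) can be expressed as a simple readiness check before a record is handed to an external publishing service. In this sketch, the `Record` fields, the choice of `creator` and `description` as required metadata, and the `reviewed_by` confirmation flag are all hypothetical.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Record:
    """Hypothetical record as it might exist before publication."""

    title: str
    license: Optional[str] = None  # e.g. an SPDX identifier such as "CC-BY-4.0"
    metadata: dict = field(default_factory=dict)
    reviewed_by: Optional[str] = None  # set once a user confirms the quality check
    # Unique identifier within the system (not a persistent identifier such as a DOI).
    identifier: str = field(default_factory=lambda: str(uuid.uuid4()))


def publication_blockers(
    record: Record, required_metadata: tuple[str, ...] = ("creator", "description")
) -> list[str]:
    """List the basic requirements a record still misses before it can be published."""
    blockers = []
    if not record.license:
        blockers.append("license missing")
    for key in required_metadata:
        if not record.metadata.get(key):
            blockers.append(f"metadata '{key}' missing")
    if record.reviewed_by is None:
        blockers.append("quality check not confirmed")
    return blockers
```

An empty list would signal that the record can be passed on; assigning the persistent identifier and long-term archiving are then left to the integrated external system, in line with the division of labor described above.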

Implementation

Kadi4Mat is built as a web-based application that employs a classic client-server architecture. A graphical front end is provided for use with a normal web browser as the client, while the server is responsible for the back end and the integration of external systems. Figure 3 shows a high-level overview of the implementation. The front end is based on the classic web technologies JavaScript, HTML, and CSS. In particular, the client-side JavaScript web framework Vue.js[39] is used. This framework is especially suitable for creating single-page applications (SPA), but it can also be used for individual sections of more classic applications, to incrementally add complex and dynamic user interface components to certain pages. Kadi4Mat mainly uses Vue.js in the latter way, the benefits being a clear separation between the data and the presentation layer, as well as easier re-use of user interface components. This approach is combined with server-side rendering. Due to the technologies and standards employed, use of the front end is currently limited to recent versions of modern web browsers such as Firefox, Chrome, or Edge.


In the back end, the framework Flask[40] is used for the web component. Flask is implemented in Python and is compatible with the Web Server Gateway Interface (WSGI), a common standard that specifies an interface between web servers and Python applications. As a so-called microframework, Flask itself is limited to basic features. This means that most functionality unrelated to the web component has to be added through custom code or suitable libraries. At the same time, it offers more freedom in the concrete choice of technologies. This is in direct contrast to web frameworks such as Django[41], which provide a lot of functionality out of the box. The web component itself is responsible for handling client requests to specific endpoints and dispatching them to the appropriate Python functions. Currently, either HTML or JSON is returned, depending on the endpoint. JSON is used as part of an HTTP application programming interface (API) that enables internal and external programmatic data exchange. This API is based on the representational state transfer (REST) paradigm.[42] Support for other exchange formats could also become relevant in the future, particularly for interoperability, e.g., via OAI-PMH.[43] Especially for handling larger amounts of data, exchange protocols other than HTTP are being considered.
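To show the WSGI interface that Flask builds on, and how endpoints are dispatched to Python functions that return either HTML or JSON, here is a minimal sketch using only the Python standard library rather than Flask. The endpoint paths `/records/<id>` and `/api/records/<id>`, the in-memory `RECORDS` data, and the prefix-based routing are hypothetical and do not correspond to Kadi4Mat's actual routes.

```python
import json
from html import escape

# Hypothetical in-memory data standing in for the repository's database.
RECORDS = {"1": {"id": "1", "title": "Tensile test <series A>"}}


def record_page(record: dict) -> tuple[str, str]:
    # HTML endpoint, as used by the graphical front end; titles are escaped.
    return "text/html; charset=utf-8", f"<h1>{escape(record['title'])}</h1>"


def record_api(record: dict) -> tuple[str, str]:
    # JSON endpoint of the HTTP API for programmatic access.
    return "application/json", json.dumps(record)


# Path prefix -> handler function (a simplistic stand-in for a framework's router).
ROUTES = {"/records/": record_page, "/api/records/": record_api}


def app(environ, start_response):
    """A WSGI application: a callable taking the request environ and start_response."""
    path = environ.get("PATH_INFO", "")
    for prefix, handler in ROUTES.items():
        if path.startswith(prefix):
            record = RECORDS.get(path[len(prefix):])
            if record is None:
                break
            content_type, body = handler(record)
            start_response("200 OK", [("Content-Type", content_type)])
            return [body.encode("utf-8")]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"not found"]
```

The same callable could be served locally with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` or by any other WSGI server. A framework like Flask provides the routing, request parsing, and response handling that are written out by hand here.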