
Latest revision as of 18:59, 11 April 2024

| Full article title | Ten simple rules for managing laboratory information |
|---|---|
| Journal | PLoS Computational Biology |
| Author(s) | Berezin, Casey-Tyler; Aguilera, Luis U.; Billerbeck, Sonja; Bourne, Philip E.; Densmore, Douglas; Freemont, Paul; Gorochowski, Thomas E.; Hernandez, Sarah I.; Hillson, Nathan J.; King, Connor R.; Köpke, Michael; Ma, Shuyi; Miller, Katie M.; Moon, Tae Seok; Moore, Jason H.; Munsky, Brian; Myers, Chris J.; Nicholas, Dequina A.; Peccoud, Samuel J.; Zhou, Wen; Peccoud, Jean |
| Author affiliation(s) | Colorado State University, University of Groningen, University of Virginia, Boston University, Imperial College, University of Bristol, Lawrence Berkeley National Laboratory, US Department of Energy Agile BioFoundry, US Department of Energy Joint BioEnergy Institute, LanzaTech, University of Washington Medicine, Washington University in St. Louis, Cedars-Sinai Medical Center, University of Colorado Boulder, University of California Irvine - Irvine |
| Primary contact | Email: jean dot peccoud at colostate dot edu |
| Editors | Markel, Scott |
| Year published | 2023 |
| Volume and issue | 19(12) |
| Article # | e1011652 |
| DOI | 10.1371/journal.pcbi.1011652 |
| ISSN | 1553-7358 |
| Distribution license | Creative Commons CC0 1.0 Universal |
| Website | https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1011652 |
| Download | https://journals.plos.org/ploscompbiol/article/file?id=10.1371/journal.pcbi.1011652&type=printable (PDF) |

Abstract

Information is the cornerstone of research, from experimental data/metadata and computational processes to complex inventories of reagents and equipment. These 10 simple rules discuss best practices for leveraging laboratory information management systems (LIMS) to transform this large information load into useful scientific findings.

Keywords: laboratory information management, laboratory management, laboratory information management systems, LIMS, computational biology, mathematical modeling, transdisciplinary research

Introduction

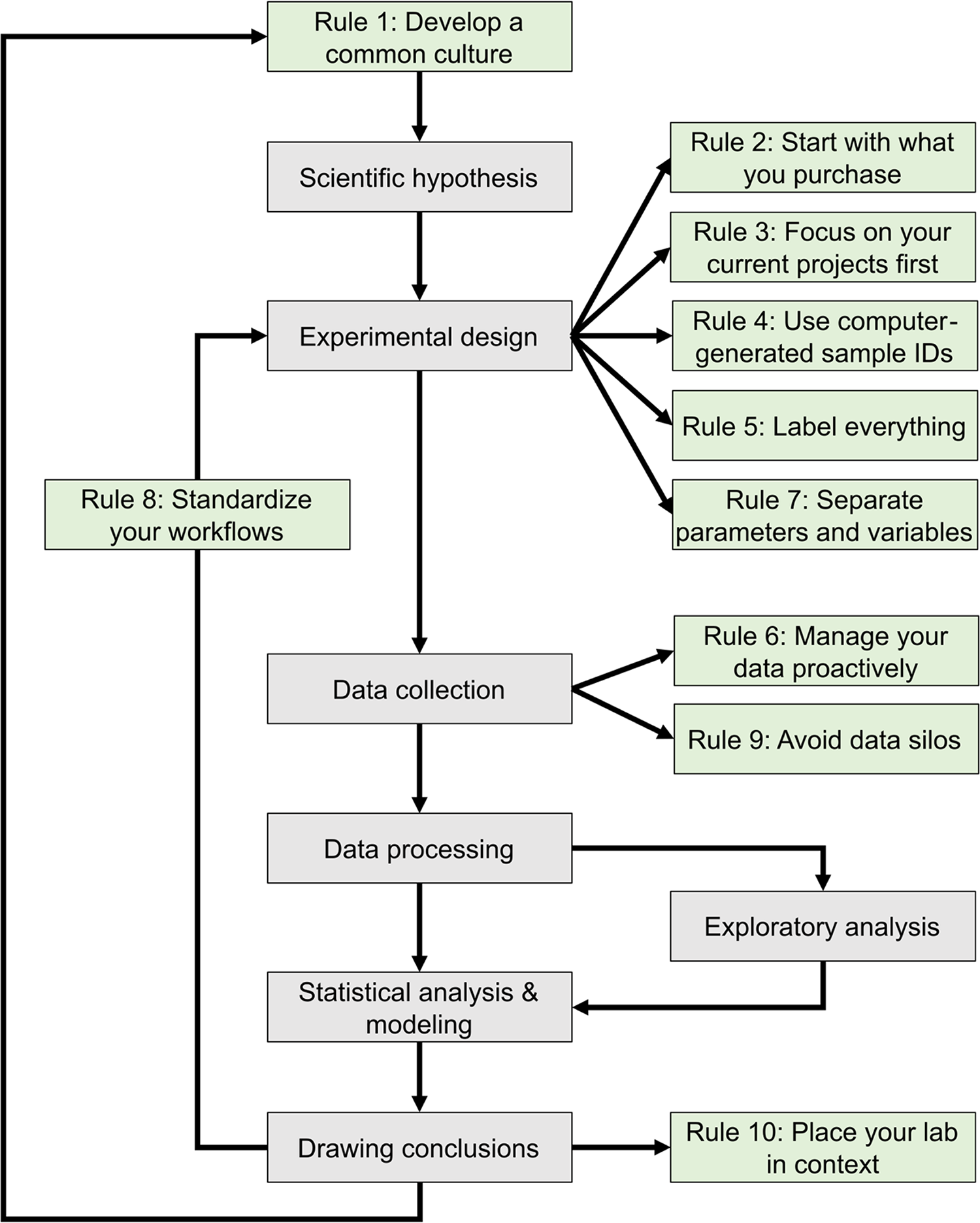

The development of mathematical models that can predict the properties of biological systems is the holy grail of computational biology.[1][2] Such models can be used to test biological hypotheses[3], quantify the risk of developing diseases[3], guide the development of biomanufactured products[4], engineer new systems meeting user-defined specifications, and much more.[4][5] Irrespective of a model’s application and the conceptual framework used to build it, the modeling process proceeds through a common iterative workflow. A model is first evaluated by fitting its parameters such that its behavior matches experimental data. Models that fit previous observations are then further validated by comparing the model predictions with the results of new observations that are outside the scope of the initial dataset (Fig. 1).
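The fit-then-validate loop can be made concrete with a minimal sketch. It assumes the simplest possible model: a straight line, y = a·x + b, fitted by ordinary least squares. The model form, the function names (`fit_line`, `rmse`) and the numbers are illustrative only and do not come from the article.

```python
from math import sqrt


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = a*x + b by ordinary least squares and return (a, b)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def rmse(model: tuple[float, float], xs: list[float], ys: list[float]) -> float:
    """Root-mean-square error of the model's predictions against observations."""
    a, b = model
    return sqrt(sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


# Step 1: fit the parameters to the initial dataset (x between 0 and 3).
model = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
# Step 2: validate against new observations outside that range (x = 5 and 6).
error = rmse(model, [5.0, 6.0], [11.0, 13.0])
```

A large validation error would send the modeler back to revise the model or to request new experiments. That is the point at which well-organized experimental data becomes essential.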


Historically, the collection of experimental data and the development of mathematical models were performed by different scientific communities.[6] Computational biologists had little control over the nature and quality of the data they could access. With the emergence of systems biology and synthetic biology, the boundary between experimental and computational biology has become increasingly blurred.[6] Many laboratories and junior scientists now have expertise in both producing and analyzing large volumes of digital data due to high-throughput workflows and an ever-expanding collection of digital instruments.[7] In this context, it is critically important to properly organize the exponentially growing volumes of experimental data to ensure they can support the development of models that can guide the next round of experiments.[8]

We are a group of scientists representing a broad range of scientific specialties, from clinical research to industrial biotechnology. Collectively, we have expertise in experimental biology, data science, and mathematical modeling. Some of us work in academia, while others work in industry. We have all faced the challenges of keeping track of laboratory operations to produce high-quality data suitable for analysis. We have experience using a variety of tools, including spreadsheets, open-source software, homegrown databases, and commercial solutions to manage our data. Irreproducible experiments, projects that failed to meet their goals, datasets we collected but never managed to analyze, and freezers full of unusable samples have taught us the hard way lessons that have led to these 10 simple rules for managing laboratory information.

This journal has published several sets of rules regarding best practices in overall research design[9][10], as well as the computational parts of research workflows, including data management[11][12][13] and software development practices.[14][15][16] The purpose of these 10 rules (Fig. 1) is to guide the development and configuration of laboratory information management systems (LIMS). LIMS typically offer electronic laboratory notebook (ELN), inventory, workflow planning, and data management features, allowing users to connect data production and data analysis to ensure that useful information can be extracted from experimental data and increase reproducibility.[17][18] These rules can also be used to develop training programs and lab management policies. Although we all agree that applying these rules increases the value of the data we produce in our laboratories, we also acknowledge that enforcing them is challenging. It relies on the successful integration of effective software tools, training programs, lab management policies, and the will to abide by these policies. Each lab must find the most effective way to adopt these rules to suit their unique environment.

Rule 1: Develop a common culture

Data-driven research projects generally require contributions from multiple stakeholders with complementary expertise. The success of these projects depends on entire teams developing a common vision of the projects' objectives and the approaches to be used.[19][20][21] Interdisciplinary teams, in particular, must establish a common language, as well as mutual expectations for experimental and publication timelines.[19] Unless the team develops a common culture, one stakeholder group can drive the project and impose its vision on the other groups. Although interdisciplinary (i.e., wet-lab and computational) training is becoming more common in academia, it is not unusual for experimentalists to regard data analysis as a technique they can acquire simply by hiring a student with computer programming skills. In a corporate environment, research informatics is often part of the information technology group whose mission is to support scientists who drive the research agenda. In both situations, the research agenda is driven by stakeholders who are unlikely to produce the most usable datasets because they lack sufficient understanding of data modeling.[20] Perhaps less frequently, there is also the situation where the research agenda is driven by people with expertise in data analysis. Because they may not appreciate the subtleties of experimental methods, they may find it difficult to engage experimentalists in collaborations aimed at testing their models.[20] Alternatively, their research may be limited to the analysis of disparate sets of previously published datasets.[19] Thus, interdisciplinary collaboration is key to maximizing the insights you gain from your data.

The development of a common culture, within a single laboratory or across interdisciplinary research teams, must begin with a thorough onboarding process for each member regarding general lab procedures, research goals, and individual responsibilities and expectations.[21][22] Because implementing a LIMS requires perseverance from users, a major determinant of its success is whether end-users are involved in the culture development process.[17][23] When the input and suggestions of end-users are considered, they are more likely to engage with the LIMS and apply good practices to it on a daily basis.[23] The long-term success of research endeavors then requires continued training and reevaluation of project goals and success (Fig. 1).[19][21]

These 10 simple rules apply to transdisciplinary teams that have developed a common culture allowing experimentalists to gain a basic understanding of the modeling process and modelers to have some familiarity with the experimental processes generating the data they will analyze.[19] Teams that lack a common vision of data-driven research are encouraged to work toward acquiring this common vision through frequent communication and mutual goal setting.[19][20] Discussing these 10 simple rules in group meetings may aid in initiating this process.

Rule 2: Start with what you purchase

All the data produced by your lab are derived from things you have purchased, including supplies (consumables), equipment, and contract manufactured reagents, such as oligonucleotides or synthetic genes. In many cases, data (and metadata) on items in your inventory may be just as important as experimentally derived data, and as such, should be managed according to the FAIR (findable, accessible, interoperable, and reusable) principles.[24] Assembling an inventory of supplies and equipment with their associated locations can be handled in a few weeks by junior personnel without major interruption of laboratory operations, although establishing a thorough inventory may be more difficult and time-consuming for smaller labs with fewer members. Nevertheless, managing your lab inventory provides an immediate return on investment by positively impacting laboratory operations in several ways. People can quickly find the supplies and equipment they need to work, supplies are ordered with appropriate advance notice to minimize work stoppage, and data variation is reduced due to standardized supplies and the ability to track lot numbers easily (Fig. 1).[17][25][26]
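The following sketch shows what an inventory record might hold so that people can find supplies and trace lot numbers. It assumes a plain in-memory list rather than any particular LIMS. The `InventoryItem` fields, the `find` and `lots_in_use` helpers, and the vendor and catalog values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class InventoryItem:
    """One purchased item: what it is, where it is stored, and which lot it came from."""
    name: str
    vendor: str
    catalog_number: str
    lot_number: str
    location: str  # free-text storage location (illustrative)


def find(inventory: list[InventoryItem], query: str) -> list[InventoryItem]:
    """Case-insensitive substring search on item names."""
    q = query.lower()
    return [item for item in inventory if q in item.name.lower()]


def lots_in_use(inventory: list[InventoryItem], catalog_number: str) -> set[str]:
    """All lot numbers on hand for one product, to help trace lot-to-lot variation."""
    return {item.lot_number for item in inventory if item.catalog_number == catalog_number}


inventory = [
    InventoryItem("Taq polymerase", "VendorA", "CAT-001", "LOT-17", "Freezer 2 / Shelf B"),
    InventoryItem("Taq polymerase", "VendorA", "CAT-001", "LOT-19", "Freezer 2 / Shelf B"),
    InventoryItem("Agarose", "VendorB", "CAT-042", "LOT-03", "Cabinet 1"),
]
hits = find(inventory, "taq")
```

Recording the lot number on each item means that a shift in results can later be checked against a change of reagent lot.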

Many labs still use Excel to keep track of inventory despite the existence of several more sophisticated databases and LIMS (e.g., Benchling, Quartzy, GenoFAB, LabWare, LabVantage, and TeselaGen).[25] Unlike a static document, these systems can support real-time inventory tracking, increasing the findability and accessibility of inventory data. While some systems are specialized for certain types of inventories (e.g., animal colonies or frozen reagents), others are capable of tracking any type of reagent or item imaginable.[25] When deciding which items to track, there are three main considerations: expiration, maintenance, and ease of access.

Most labs manage their supplies through periodic cleanups, during which they sort through freezers, chemical cabinets, and other storage areas; review their contents; and dispose of supplies that are past their expiration date or are no longer useful. By actively tracking expiration dates and reagent use in a LIMS, you can reduce the frequency of such cleanups, since the LIMS will alert users when expiration dates are approaching or when supplies are running low. This prevents costly items from being wasted because they expired or were forgotten. Furthermore, the cost of products can be tracked and used to inform which experiments are performed.
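The kind of check a LIMS runs to raise expiration and low-stock alerts can be sketched in a few lines. The 30-day warning window, the per-item reorder level and the `Supply`/`alerts` names are assumptions made for illustration. A real system would send notifications rather than return strings.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Supply:
    name: str
    expires: date
    quantity: float
    reorder_level: float  # alert when the quantity falls to or below this value


def alerts(supplies: list[Supply], today: date, warn_days: int = 30) -> list[str]:
    """Return messages for expired, soon-to-expire, and low-stock supplies."""
    horizon = today + timedelta(days=warn_days)
    messages = []
    for s in supplies:
        if s.expires < today:
            messages.append(f"EXPIRED: {s.name} (expired {s.expires.isoformat()})")
        elif s.expires <= horizon:
            messages.append(f"EXPIRING SOON: {s.name} (expires {s.expires.isoformat()})")
        if s.quantity <= s.reorder_level:
            messages.append(f"LOW STOCK: {s.name} ({s.quantity} left)")
    return messages


today = date(2024, 4, 11)
stock = [
    Supply("Ethanol 70%", date(2024, 3, 1), 2.0, 1.0),
    Supply("dNTP mix", date(2024, 4, 30), 0.5, 1.0),
    Supply("PBS", date(2025, 1, 1), 10.0, 2.0),
]
report = alerts(stock, today)
```

Here the ethanol is flagged as expired. The dNTP mix is flagged twice, because it is both close to expiry and below its reorder level.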

LIMS can also support the use and servicing of key laboratory equipment. User manuals, service dates, warranties, and other identifying information can be attached directly to the equipment record, which allows for timely service and maintenance. Adding equipment to the inventory can also prevent accidental losses in shared spaces, where it is easy for people to borrow equipment without returning it. The label attached to the equipment (see Rule 5, later) acts as an indication of ownership that limits the risk of confusion when nearly identical pieces of equipment are owned by neighboring laboratories. As the laboratory inventory should focus on larger, more expensive equipment and supplies, inexpensive and easily obtained items (e.g., office supplies) may not need to be inventoried. An additional benefit of inventory management in a LIMS is the ability to create a record connecting specific equipment and supplies to specific people and projects, which can be used to detect potential sources of technical bias and variability (see Rules 4 and 5, later).
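An equipment record of this kind can be sketched as a small class. It carries a service schedule and warranty date, and it keeps a usage log linking the instrument to people and projects. The field names, the fixed service interval and the example values are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Equipment:
    asset_id: str
    name: str
    last_service: date
    service_interval_days: int
    warranty_until: date
    manual_url: str = ""  # link to the attached user manual (placeholder)
    usage_log: list[tuple[date, str, str]] = field(default_factory=list)  # (day, user, project)

    def next_service(self) -> date:
        return self.last_service + timedelta(days=self.service_interval_days)

    def service_due(self, today: date) -> bool:
        return today >= self.next_service()

    def under_warranty(self, today: date) -> bool:
        return today <= self.warranty_until

    def record_use(self, day: date, user: str, project: str) -> None:
        self.usage_log.append((day, user, project))

    def users_for_project(self, project: str) -> set[str]:
        """Who used this instrument for a project; useful when hunting for technical bias."""
        return {user for _, user, p in self.usage_log if p == project}


centrifuge = Equipment("EQ-0007", "Benchtop centrifuge", date(2024, 1, 1), 90, date(2026, 1, 1))
centrifuge.record_use(date(2024, 4, 2), "alice", "project-x")
centrifuge.record_use(date(2024, 4, 3), "bob", "project-y")
centrifuge.record_use(date(2024, 4, 5), "carol", "project-x")
```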

Rule 3: Focus on your current projects first

After establishing an inventory of supplies and equipment, it is natural to consider using a similar approach with the samples that have accumulated over the years in freezers or other storage locations. This can be overwhelming because the number of samples will be orders of magnitude larger than the number of supplies. In addition, documenting them is likely to require more effort than simply retrieving product documentation from a vendor’s catalog.

Allocating limited resources to making an inventory of samples generated by past projects may not benefit current and future projects. A more practical approach is to prioritize tracking samples generated by ongoing projects and document samples generated by past projects on an as-needed basis.

Inventory your samples before you generate them

It is a common mistake to create sample records well after they were produced in the lab. The risks of this retroactive approach to recordkeeping include information loss, as well as selective recordkeeping, in which only some samples are deemed important enough to document while most temporary samples are not, even though they may provide critical information. (This mistake can be compounded in situations where regulatory requirements demand data integrity, including the contemporaneous recording of data.[27])

A more proactive approach avoids these pitfalls. When somebody walks into a lab to start an experiment, the samples that this experiment will generate should already be known. It is therefore possible to create the computer records corresponding to these samples before initiating the laboratory processes that generate the physical samples. The creation of a sample record can thus be seen as part of the experiment planning process (Fig. 1). This makes it possible to print, in advance, the labels that will be used to track samples at different stages of the process (see Rule 5, later).
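Planning samples before bench work can be sketched as follows. Each expected sample gets a record and an ID up front, so the labels can be printed before the experiment begins. The module-level counter stands in for a database sequence. That counter, the `S000001` ID format and the `plan_experiment` helper are assumptions for illustration.

```python
import itertools
from dataclasses import dataclass

_next_number = itertools.count(1)  # stand-in for a database sequence (assumption)


@dataclass
class SampleRecord:
    sample_id: str
    experiment: str
    description: str
    status: str = "to do"  # the physical sample does not exist yet


def plan_experiment(experiment: str, descriptions: list[str]) -> list[SampleRecord]:
    """Create one record per expected sample before any bench work starts."""
    return [
        SampleRecord(f"S{next(_next_number):06d}", experiment, description)
        for description in descriptions
    ]


planned = plan_experiment("PCR screen", ["template mix", "PCR product 1", "PCR product 2"])
labels = [f"{r.sample_id} {r.description}" for r in planned]  # ready to print in advance
```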

It may also be useful to assign statuses to samples as they progress through different stages of their life cycle, such as “to do,” “in progress,” “completed,” or “canceled,” to differentiate active work in progress from the backlog and from samples generated by previous experiments. As the experimental process moves forward, data can be continually appended to the sample record. For example, the field capturing the concentration of a solution would be filled in after the solution has been prepared. Thus, the success or failure of experiments can be easily documented and used to inform the next round of experiments.
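The status life cycle can be sketched as a small state machine. Measurements are appended to the record as the work progresses. The allowed transitions (for example, no reopening of a completed sample) and the rule that recorded values are never overwritten are design assumptions, not requirements from the article.

```python
from dataclasses import dataclass, field

# Allowed status transitions (an assumption; adapt to your own workflow).
TRANSITIONS = {
    "to do": {"in progress", "canceled"},
    "in progress": {"completed", "canceled"},
    "completed": set(),
    "canceled": set(),
}


@dataclass
class Sample:
    sample_id: str
    status: str = "to do"
    data: dict[str, object] = field(default_factory=dict)

    def advance(self, new_status: str) -> None:
        """Move to a new status, rejecting transitions the workflow does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot go from {self.status!r} to {new_status!r}")
        self.status = new_status

    def record(self, key: str, value: object) -> None:
        """Append a measurement; values already recorded are never overwritten."""
        if key in self.data:
            raise ValueError(f"{key!r} already recorded for {self.sample_id}")
        self.data[key] = value


s = Sample("S000042")
s.advance("in progress")
s.record("concentration_ng_per_ul", 48.5)  # filled in only once the solution is prepared
s.advance("completed")
```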

Develop sample retention policies

It is always unpleasant to have to throw away poorly documented samples. The best strategy to avoid this outcome is to develop policies to discard only samples that will not be used in the future, a process rendered more objective and straightforward with adequate documentation. Properly structured workflows (see Rule 8, later) should define criteria for which samples should be kept and for how long. All lab members should be trained in these policies to ensure consistency, and policies should be revisited as new research operating procedures are initiated.

It can be tempting to keep every tube or plate that still contains something as a backup. This conservative strategy generates clutter, confusion, and reproducibility issues, especially in the absence of documentation. While it makes sense to keep some intermediates during the execution of a complex experimental workflow, the successful completion of the experiment should trigger the elimination of intermediates that have lost their purpose, have a limited shelf life, and/or are not reusable. While the workflow is still running, samples deemed critical backups should be stored in a separate location from the working sample to minimize the likelihood of losing both in case of electrical failure, fire, etc. Using clear labels (see Rules 4 and 5, later) and storing intermediate samples in dedicated storage locations can help enforce sample disposition policies.
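Two disposition checks can be sketched from these criteria. The first flags, after successful completion, intermediates that are not reusable or have a limited shelf life. The second flags backups stored in the same place as the working sample they protect. The role names and boolean flags are hypothetical simplifications of a real retention policy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StoredSample:
    sample_id: str
    role: str  # "working", "intermediate", or "backup" (illustrative categories)
    location: str
    reusable: bool = True
    limited_shelf_life: bool = False
    backup_of: Optional[str] = None  # ID of the working sample this one backs up


def disposal_candidates(samples: list[StoredSample], experiment_completed: bool) -> list[str]:
    """After successful completion, flag intermediates that have no further use."""
    if not experiment_completed:
        return []  # keep intermediates while the workflow is still running
    return [
        s.sample_id
        for s in samples
        if s.role == "intermediate" and (not s.reusable or s.limited_shelf_life)
    ]


def colocated_backups(samples: list[StoredSample]) -> list[str]:
    """Backups kept in the same place as their working sample (one failure could destroy both)."""
    location_of = {s.sample_id: s.location for s in samples}
    return [
        s.sample_id
        for s in samples
        if s.backup_of is not None and location_of.get(s.backup_of) == s.location
    ]


samples = [
    StoredSample("S1", "working", "Freezer A"),
    StoredSample("S2", "intermediate", "Freezer A", reusable=False),
    StoredSample("S3", "intermediate", "Freezer A"),
    StoredSample("S4", "backup", "Freezer A", backup_of="S1"),
    StoredSample("S5", "backup", "Freezer B", backup_of="S1"),
]
```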

Rule 4: Use computer-generated sample identification numbers

Generating sample names is probably not the best use of scientists’ creativity. Many labs still rely on manually generated sample names that may look something like “JP PCR 8/23 4.” Manually generated sample names are time-consuming to generate, difficult to interpret, and often contain insufficient information. Therefore, they should not be the primary identifier used to track samples.

Instead, computer-generated sample identification numbers (sample IDs) should be used as the primary ID, as they overcome these limitations. Rather than describing the sample, a computer-generated sample ID provides a link between a physical sample and a database entry that contains more information associated with the sample. The sample ID is the only piece of information that needs to be printed on the sample label (see Rule 5, later) because it allows researchers to retrieve all the sample information from a database. A sample tracking system should rely on both computer-readable and human-readable sample IDs.
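In the following sketch the ID is an opaque key and the descriptive details live in the database. It uses Python's built-in `sqlite3` with an in-memory database. Using `uuid4` for the ID is an assumption; many LIMS use sequential numbers instead. The table layout and helper names are hypothetical.

```python
import sqlite3
import uuid

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE samples ("
    "sample_id TEXT PRIMARY KEY, sample_type TEXT, creator TEXT, created TEXT)"
)


def register_sample(sample_type: str, creator: str, created: str) -> str:
    """Generate an opaque ID; the descriptive data go into the database, not the name."""
    sample_id = uuid.uuid4().hex  # 32 hex characters; PRIMARY KEY rejects any duplicate
    conn.execute(
        "INSERT INTO samples VALUES (?, ?, ?, ?)",
        (sample_id, sample_type, creator, created),
    )
    return sample_id


def lookup(sample_id: str):
    """What scanning a label amounts to: resolve the ID to the full record (or None)."""
    return conn.execute(
        "SELECT sample_type, creator, created FROM samples WHERE sample_id = ?",
        (sample_id,),
    ).fetchone()


sid = register_sample("PCR product", "JP", "2023-08-23")
```

Compare this with a handwritten name such as “JP PCR 8/23 4”. The same facts are stored as separate, queryable fields, and the ID itself carries no meaning that could go stale.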

Computer-readable IDs

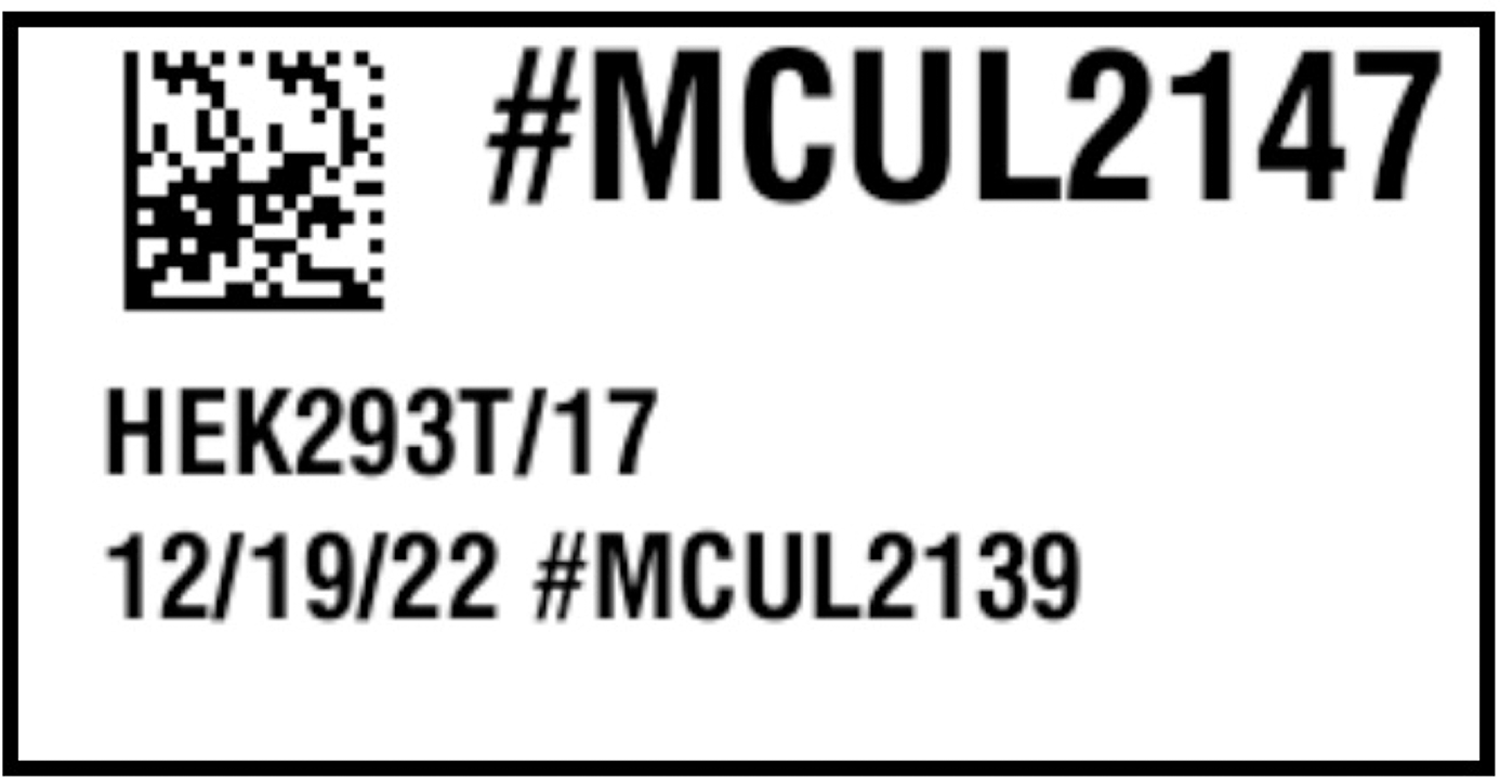

Since the primary purpose of a sample ID is to provide a link between a physical sample and the computer record that describes the sample, it saves time to rely on sample IDs that can be scanned by a reader or even a smartphone (Fig. 2).[28][29] Barcodes use special fonts to print data in a format that can be easily read by an optical sensor.[30] There are also newer alternatives for tagging samples, such as quick response (QR) codes, Data Matrix codes, or radio-frequency identification (RFID).[31][32] QR codes and Data Matrix codes are 2D barcodes that are cheaper to generate than RFID tags and store more data than traditional barcodes.[28] Whatever the technology, the tag encodes a key that points to a database record.


Uniqueness is the most important property of the data encoded in barcodes, and the use of unique and persistent identifiers is a critical component of the findability of your (meta)data.[24] Several vendors now offer products with 2D barcodes printed on the side or bottom of the tube. It is common for such products, as well as lab-made reagents, to be split across multiple tubes or moved from one tube to another. In these cases, each “new” sample should have its own unique barcode. A barcoding system can therefore facilitate the accurate identification of “parent” samples (e.g., a stock solution with ID X) and the unique “child” samples derived from them (e.g., aliquots of the stock solution with IDs Y and Z).
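Splitting a parent into barcoded children can be sketched using the X, Y and Z example above. The registry refuses any barcode already in use and records each child's parent, so the lineage can be walked back to the stock. The in-memory `TubeRegistry` and its methods are hypothetical stand-ins for a LIMS table.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tube:
    barcode: str
    contents: str
    volume_ul: float
    parent: Optional[str] = None  # barcode of the tube this one was split from


class TubeRegistry:
    """In-memory stand-in for the table that maps barcodes to tubes."""

    def __init__(self) -> None:
        self._tubes: dict[str, Tube] = {}

    def register(self, tube: Tube) -> None:
        if tube.barcode in self._tubes:
            raise ValueError(f"barcode {tube.barcode} is already in use")
        self._tubes[tube.barcode] = tube

    def get(self, barcode: str) -> Tube:
        return self._tubes[barcode]

    def aliquot(self, parent_barcode: str, children: dict[str, float]) -> list[Tube]:
        """Split a parent tube; `children` maps each new tube's barcode to its volume (µL)."""
        parent = self._tubes[parent_barcode]
        clashes = [b for b in children if b in self._tubes]
        if clashes:  # validate everything before changing any state
            raise ValueError(f"barcodes already in use: {clashes}")
        total = sum(children.values())
        if total > parent.volume_ul:
            raise ValueError("not enough volume in the parent tube")
        created = [Tube(b, parent.contents, v, parent=parent_barcode) for b, v in children.items()]
        for child in created:
            self.register(child)
        parent.volume_ul -= total
        return created

    def lineage(self, barcode: str) -> list[str]:
        """Barcodes from this tube back to the original stock."""
        chain = [barcode]
        while (parent := self._tubes[chain[-1]].parent) is not None:
            chain.append(parent)
        return chain


registry = TubeRegistry()
registry.register(Tube("X", "1 M NaCl stock", 1000.0))
aliquots = registry.aliquot("X", {"Y": 250.0, "Z": 250.0})
```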

Human-readable IDs

While computer-readable IDs should be the main ID used when tracking a sample or supply, laboratory personnel sometimes need a secondary sample ID that they can read without any equipment or while performing manual operations (e.g., handling samples).

To make an identifier readable by humans, it is best to keep the ID short and use its structure to provide contextual information. For example, a prefix can help interpret the ID: #CHEM1234 would naturally be interpreted as a chemical and #MCUL2147 as a mammalian culture (Fig. 2).
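The following generator hands out short, prefixed human-readable IDs with one counter per sample type. The CHEM and MCUL prefixes follow the examples in the text. The PLAS prefix, the four-digit padding and the `HumanIdGenerator` class are assumptions for illustration.

```python
# Prefix per sample type; CHEM and MCUL follow the text, PLAS is hypothetical.
PREFIXES = {"chemical": "CHEM", "mammalian culture": "MCUL", "plasmid": "PLAS"}


class HumanIdGenerator:
    """Hands out short prefixed IDs such as CHEM0001, one counter per prefix."""

    def __init__(self, prefixes: dict[str, str]) -> None:
        self._prefixes = prefixes
        self._counters: dict[str, int] = {}

    def next_id(self, sample_type: str) -> str:
        prefix = self._prefixes[sample_type]  # KeyError for an unknown sample type
        number = self._counters.get(prefix, 0) + 1
        self._counters[prefix] = number
        return f"{prefix}{number:04d}"  # zero-padded to four digits


generator = HumanIdGenerator(PREFIXES)
ids = [
    generator.next_id("chemical"),
    generator.next_id("chemical"),
    generator.next_id("mammalian culture"),
]
```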

Since these identifiers do not need to map to a unique database entry, human-readable IDs do not have the same uniqueness requirements as computer-readable IDs. For example, it may be acceptable to allow two labs using the same software environment to use the same human-readable ID, because this ID will only be seen in the context of a single lab. The software system should maintain the integrity of the relationships between the human-readable ID and the computer-readable ID by preventing users from editing these identifiers.
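Scoped human-readable IDs can be sketched as follows. Barcodes must be unique across the whole system, whereas a human-readable ID only needs to be unique within one lab. A frozen dataclass stops either identifier from being edited after creation. The `IdPair`/`IdRegistry` names and the example barcodes are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: identifiers cannot be edited once created
class IdPair:
    lab: str
    human_id: str
    barcode: str  # globally unique, computer-readable


class IdRegistry:
    def __init__(self) -> None:
        self._by_barcode: dict[str, IdPair] = {}
        self._by_lab_human: dict[tuple[str, str], IdPair] = {}

    def add(self, lab: str, human_id: str, barcode: str) -> IdPair:
        if barcode in self._by_barcode:
            raise ValueError("barcodes must be unique across the whole system")
        if (lab, human_id) in self._by_lab_human:
            raise ValueError("human-readable IDs must be unique within a lab")
        pair = IdPair(lab, human_id, barcode)
        self._by_barcode[barcode] = pair
        self._by_lab_human[(lab, human_id)] = pair
        return pair

    def resolve(self, lab: str, human_id: str) -> str:
        """Translate a human-readable ID, read in one lab's context, into its barcode."""
        return self._by_lab_human[(lab, human_id)].barcode


ids = IdRegistry()
a = ids.add("Lab A", "CHEM0001", "7f3c9a")
b = ids.add("Lab B", "CHEM0001", "b81e02")  # same human ID, different lab: allowed
```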

Rule 5: Label everything

Print labels to identify supplies, equipment, samples, storage locations, and any other physical objects used in your lab. Many labs rely extensively on handwritten markings, which create numerous problems.[17] Only a limited amount of information can be written on small sample containers, and, like manually generated sample names, handwritten labels can be difficult to read or interpret.

Some labels are self-contained. For example, shipping labels include all the information necessary to deliver a package. However, in a laboratory environment, a sample label must not only identify a physical sample but also establish a connection to a record describing the sample and the data associated with it (Fig. 2).

Content of a label

Only two pieces of information are necessary on a label: a computer-readable sample ID printed as a barcode and a human-readable sample ID to make it easier for the researcher to work with the sample. If there is enough space to print more information on the label, your research needs should inform your label design. Ensure you have sufficient space to meet regulatory labeling requirements (e.g., biosafety requirements, hazards) and, if desired, to include information such as the sample type, sample creator, date (e.g., of generation or expiration), or related samples (e.g., parent/child samples).

Label printing technology

Putting in place a labeling solution requires the integration of several elements, but once configured, proper use of label printing technologies makes it much faster and easier to print labels than to label tubes manually.

There are many types of label printers on the market today, and most are compatible with the Zebra Programming Language (ZPL) standard.[33] Labeling laboratory samples can be challenging due to harsh environmental conditions: exposure to liquid nitrogen or other chemicals, as well as ultra-low or high temperatures, will require specialized labels. For labeling plastic items, thermal transfer will print the most durable labels, especially if used with resin ribbon instead of wax, while inkjet printers can print durable labels for use on paper.[34][35][36] Furthermore, laboratory samples can be generated in a broad range of sizes, so labels should be adapted to the size of the object they are attached to. A high-resolution printer (300 dpi or greater) will make it possible to print small labels that will be easy to read by humans and scanners. Finally, select permanent or removable labels based on the application. Reusable items should be labeled with removable labels, whereas single-use containers are best labeled with permanent labels.
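Because most label printers accept ZPL, a label can be generated as plain text. The sketch below shows a minimal ZPL II label carrying the two essential elements: a 2D barcode holding the computer-readable ID and a text line holding the human-readable ID. It assumes a Data Matrix symbol (`^BX`) rather than a QR code, and the positions and font sizes are illustrative printer-dot values that would need tuning for real label stock. The `zpl_label` function is a hypothetical helper.

```python
def zpl_label(computer_id: str, human_id: str, extra: str = "") -> str:
    """Return ZPL for a small label: Data Matrix barcode plus human-readable text.

    Positions (^FO x,y) and font sizes are in printer dots and are illustrative
    only; tune them for your label stock and printer resolution.
    """
    for value in (computer_id, human_id, extra):
        # ^ and ~ are ZPL command prefixes; reject them rather than escape them.
        if "^" in value or "~" in value:
            raise ValueError(f"unsupported character in label field: {value!r}")

    lines = [
        "^XA",                                     # start of label format
        f"^FO20,20^BXN,4,200^FD{computer_id}^FS",  # Data Matrix barcode, ECC 200
        f"^FO120,20^A0N,28,28^FD{human_id}^FS",    # human-readable ID
    ]
    if extra:
        lines.append(f"^FO120,55^A0N,20,20^FD{extra}^FS")  # optional extra line
    lines.append("^XZ")                            # end of label format
    return "\n".join(lines)


print(zpl_label("3f2a9c1e-7b1d-4c55-9a0e-1d2b3c4d5e6f", "CHEM1234", "Exp. 2025-01-31"))
```

Many networked label printers accept raw ZPL sent over TCP (commonly port 9100), but the transport and supported commands vary by model, so check the printer's documentation.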

Label printing software applications can take data from a database or a spreadsheet and map different columns to the fields of label templates, helping to standardize your workflows (see Rule 8, later). They also support different formats and barcode standards. Of course, the label printing software needs to be compatible with the label printer. When selecting a barcode scanner, consider whether it supports the barcode standards that will be used in your label, as well as the size and shape of the barcodes it can scan. Inexpensive barcode scanners will have difficulty reading small barcodes printed on curved tubes with limited background, whereas professional scanners with high-performance imagers will effectively scan more challenging labels. When used, barcode scanners transmit a unique series of characters to the computer. How these characters are then used depends on the software application in which the barcode is read. Some applications will simply capture the content of the barcode. Other applications will process barcoded data in real-time to retrieve the content of the corresponding records.
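Both ends of this process can be sketched in a few lines: mapping spreadsheet columns to template fields, and turning the characters a scanner sends into a record lookup. This assumes the scanner is configured to emulate a keyboard and append a carriage return or newline, which is a common setup but not universal. The template, column names, and helper functions are hypothetical.

```python
import csv
import io
from typing import Dict, List, Optional

# Hypothetical label template and column-to-field mapping; adapt to your sheet.
TEMPLATE = "{human_id} | {sample_type} | exp. {expires}"
COLUMN_MAP = {"Sample ID": "human_id", "Type": "sample_type", "Expiry": "expires"}


def rows_to_labels(csv_text: str) -> List[str]:
    """Fill the label template from each spreadsheet row, one label per row."""
    labels = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        fields = {field: row[column] for column, field in COLUMN_MAP.items()}
        labels.append(TEMPLATE.format(**fields))
    return labels


def handle_scan(raw: str, records: Dict[str, dict]) -> Optional[dict]:
    """Resolve the characters sent by a scanner to the matching record, if any."""
    barcode = raw.strip()  # drop the trailing Enter (CR/LF) many scanners append
    return records.get(barcode)


sheet = "Sample ID,Type,Expiry\nCHEM1234,buffer,2025-01-31\n"
print(rows_to_labels(sheet))  # ['CHEM1234 | buffer | exp. 2025-01-31']
print(handle_scan("CHEM1234\r\n", {"CHEM1234": {"type": "buffer"}}))  # {'type': 'buffer'}
```

The first function corresponds to software that simply fills templates; the second corresponds to applications that process barcodes in real time to retrieve the matching record.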

Rule 6: Manage your data proactively

Many funding agencies now require investigators to include a data management and sharing plan with their research proposals[24][37][38], and journals have data sharing policies that authors need to uphold. However, the way many authors share their data indicates a poor understanding of data management.[39][40] Data should not be managed only when the results of a project are published; management should begin before data collection starts.[41] Properly managed data will guide project execution by facilitating analysis as data are collected (Fig. 1). Projects that do not organize their data will face difficulties during analysis or, worse, a loss of critical information that will negatively impact progress.

Use databases to organize your data

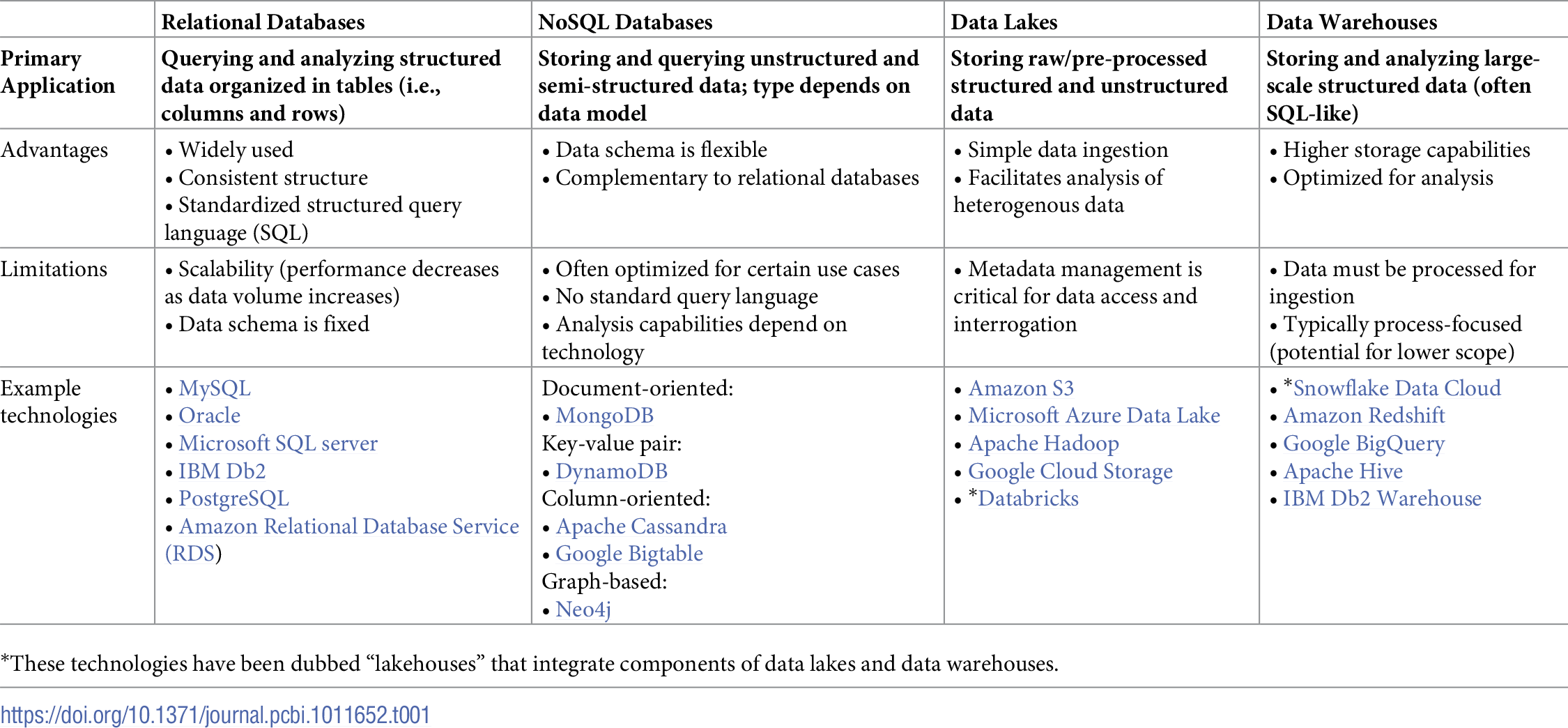

It can be tempting to track data files only through notebook entries or to dump them in a shared drive (more in Rule 9). This simple strategy makes it very difficult to query data that may be spread across multiple files or runs, especially because much of the contextual information must be captured in file names and directory structures using conventions that are difficult to enforce. Today, most data are produced by computer-controlled instruments that export tabular data (i.e., rows and columns) that can easily be imported into relational databases. Data stored in relational databases (e.g., MySQL) are typically explored using Structured Query Language (SQL) and can be easily analyzed using a variety of statistical methods (Table 1). There are also no-code and low-code options, such as the Open Science Framework[42], AirTable, and ClickUp, which can also be used to track lab processes, develop standardized workflows, manage teams, etc.
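The sketch below shows why a database beats file names and folders once data span several runs. It uses Python's built-in `sqlite3` module as a lightweight stand-in for a server database such as MySQL; the SQL would be very similar. The `plate_reads` table, its columns, and the values are hypothetical. A single query summarizes every sample across all runs, with no need to open individual files.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory database for the example
conn.execute(
    """CREATE TABLE plate_reads (
           run_id TEXT, sample_id TEXT, wavelength_nm INTEGER, absorbance REAL)"""
)
# Rows as an instrument export might provide them (hypothetical values).
rows = [
    ("run1", "S1", 600, 0.42), ("run1", "S2", 600, 0.55),
    ("run2", "S1", 600, 0.47), ("run2", "S2", 600, 0.51),
]
conn.executemany("INSERT INTO plate_reads VALUES (?, ?, ?, ?)", rows)

# One query spans all runs: mean absorbance per sample at 600 nm.
query = """SELECT sample_id, COUNT(*) AS n_runs, AVG(absorbance) AS mean_abs
           FROM plate_reads
           WHERE wavelength_nm = 600
           GROUP BY sample_id
           ORDER BY sample_id"""
results = conn.execute(query).fetchall()
for sample_id, n_runs, mean_abs in results:
    print(sample_id, n_runs, round(mean_abs, 3))
```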


In the age of big data applications enabled by cloud computing infrastructures, there are more ways than ever to organize data. Today, NoSQL (not only SQL) databases[43][44][45], data lakes[46][47][48], and data warehouses[49][50] provide additional avenues to manage complex sets of data that may be difficult to manage in relational databases (Table 1). All these data management frameworks make it possible to query and analyze data, depending on the size, type, and structure of your data as well as your analysis goals. NoSQL databases can be used to store and query data that are unstructured or otherwise not compatible with relational databases. Different NoSQL databases implement different data models (e.g., document, key-value, or graph models), so the right choice depends on your needs (Table 1). Data lakes are primarily used for storing large-scale data with any structure. It is easy to input data into a data lake, but metadata management is critical for organizing, accessing, and interrogating the data. Data warehouses are best suited for storing and analyzing large-scale structured data. They are often SQL-like and are sometimes optimized for specific analytical workflows. These technologies are constantly evolving, and the overlap between them is growing, as captured in the idea of “lakehouses” such as Databricks and Snowflake Data Cloud (Table 1).
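To illustrate why metadata management matters in a data lake, here is a deliberately tiny sketch: raw files land in the lake as-is, and a catalog of metadata is what makes them findable later. The `MetadataCatalog` class, the paths, and the metadata keys are hypothetical. Production data lakes rely on dedicated catalog services rather than an in-memory list.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CatalogEntry:
    path: str                                        # where the raw file lives
    metadata: Dict[str, str] = field(default_factory=dict)


class MetadataCatalog:
    """Toy catalog: files are stored untouched, metadata makes them queryable."""

    def __init__(self) -> None:
        self.entries: List[CatalogEntry] = []

    def register(self, path: str, **metadata: str) -> None:
        self.entries.append(CatalogEntry(path, metadata))

    def find(self, **criteria: str) -> List[str]:
        """Return paths whose metadata match every given key/value pair."""
        return [
            e.path for e in self.entries
            if all(e.metadata.get(k) == v for k, v in criteria.items())
        ]


catalog = MetadataCatalog()
catalog.register("lake/raw/2024-03-01/plate7.csv", instrument="plate_reader", project="P12")
catalog.register("lake/raw/2024-03-02/run42.fastq.gz", instrument="sequencer", project="P12")
catalog.register("lake/raw/2024-03-05/plate9.csv", instrument="plate_reader", project="P15")
print(catalog.find(project="P12", instrument="plate_reader"))
# ['lake/raw/2024-03-01/plate7.csv']
```

Without the `register` step, the third file would be just another name in a directory listing, which is exactly the situation described for shared drives.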

When choosing a data management system, labs must consider the trade-off between the cost of the service and the accessibility of the data (i.e., storage in a data lake may be cheaper than in a data warehouse, but retrieving/accessing the data may be more time-consuming or costly).[51] Many companies offer application programming interfaces (APIs) to connect their instruments and/or software to databases. In addition, new domain-specific databases continue to be developed.[52] If necessary, it is also possible to develop your own databases for particular instruments or file types.[53] Nevertheless, when uploading your data to a database, it is recommended to import them as interoperable nonproprietary file types (e.g., .csv instead of .xls for tabular data; .gb GenBank flat file instead of .clc Qiagen CLC Sequence Viewer format[54] for gene annotation data; see Rule 4 or Hart et al.[51] for more), so that the data can still be accessed if the software that produced them becomes unavailable for any reason, and to facilitate data sharing using tools such as git (see Rule 10, later).[14][24]
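Exporting tabular results to a nonproprietary format takes only the standard library. This minimal sketch, with hypothetical column names and values, writes records to CSV text that virtually any tool (spreadsheets, R, databases, git diffs) can read.

```python
import csv
import io
from typing import Dict, List


def records_to_csv(records: List[Dict[str, object]], fieldnames: List[str]) -> str:
    """Serialize tabular records to CSV text readable by almost any tool."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


measurements = [
    {"sample_id": "S1", "od600": 0.42},
    {"sample_id": "S2", "od600": 0.55},
]
text = records_to_csv(measurements, ["sample_id", "od600"])
print(text)
```

When writing to disk instead of a string, the `csv` module documentation recommends opening the file with `newline=""` so that line endings are not doubled on some platforms.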

Link data to protocols

One of the benefits of data organization is the possibility of capturing critical metadata describing how the data were produced. Many labs have spent years refining protocols to be used in different experiments. Many of these protocols have minor variations that can significantly alter the outcome of an experiment. If these variants are not properly organized, they can cause major reproducibility issues and become another uncontrolled source of technical variation. By linking protocol versions to the associated data that they produced (ideally all the samples generated throughout the experiment), it is possible to use this metadata to inform data reproducibility and analysis efforts.
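A minimal sketch of linking datasets to the exact protocol version that produced them. The `ProtocolVersion` and `Dataset` classes are hypothetical. The content hash is an added assumption: it makes it possible to detect if the text of a supposedly fixed version was edited in place.

```python
import hashlib
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ProtocolVersion:
    name: str
    version: int
    text: str

    @property
    def checksum(self) -> str:
        """Short content hash, so silent edits to a 'version' are detectable."""
        return hashlib.sha256(self.text.encode("utf-8")).hexdigest()[:12]


@dataclass(frozen=True)
class Dataset:
    dataset_id: str
    sample_ids: Tuple[str, ...]
    protocol: ProtocolVersion  # the exact protocol version that produced the data


v1 = ProtocolVersion("plasmid miniprep", 1, "Elute DNA in 50 uL water.")
v2 = ProtocolVersion("plasmid miniprep", 2, "Elute DNA in 30 uL water.")
datasets = [Dataset("D1", ("S1", "S2"), v1), Dataset("D2", ("S3",), v2)]

# Which datasets came from version 2 of the protocol?
from_v2 = [d.dataset_id for d in datasets if d.protocol == v2]
print(from_v2, v2.checksum)
```

With the link in place, a minor change such as elution volume becomes a recorded factor that analysis can account for, rather than hidden technical variation.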

Capture context in notebook entries

Organizing data in databases and capturing essential metadata describing the data production process can greatly simplify the process of documenting research projects in laboratory notebooks.[55] Instead of needing to include copies of the protocols and the raw data produced by the experiment, the notebook entry can focus on the context, purpose, and results of the experiment. In the case of ELNs (e.g., SciNote, LabArchives, and eLabJournal), entries can benefit from providing links to previous notebook entries, the experimental and analytical protocols used, and the datasets produced by the workflows. ELNs also bring additional benefits like portability, standardized templates, improved sharing, and improved reproducibility. Finally, notebook entries should include the interpretation of the data as well as a conclusion pointing to the next experiment. The presence of this rich metadata and detailed provenance is critical to ensuring the FAIR principles are being met and your experiments are reproducible.[24]

Rule 7: Separate parameters and variables

Not all the data associated with an experiment are the same. Some data are controlled by the operator (i.e., parameters), whereas other data are observed and measured (i.e., variables). It is necessary to establish a clear distinction between set parameters and observed variables to improve reproducibility and analysis.

When parameters are not clearly identified, lab personnel may be tempted to change parameter values every time they perform experiments, which will increase the variability of observations. If, instead, parameter values are clearly identified and defined, then the variance of the observations produced by this set of parameters should be smaller than the variance of the observations produced using different parameter values.

Separating and recording the parameters and variables associated with an experiment makes it possible to build statistical models that compare the observed variables associated with different parameter values.[41][56] It also enables researchers to identify and account for both the underlying biological factors of interest (e.g., strain, treatment) and the technical and random (noise) sources of variation (e.g., batch effects) in an experiment.[56]
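The sketch below shows the basic idea of keeping parameters and variables in separate parts of each record, then comparing observed variables across parameter values. It is a descriptive summary (per-group mean and sample variance), not a full statistical model; a real analysis would typically fit something like an ANOVA or a mixed-effects model that can also absorb batch effects. The record layout and values are hypothetical.

```python
import statistics
from collections import defaultdict
from typing import Dict, List, Tuple

# Each record keeps operator-set parameters apart from measured variables.
records: List[dict] = [
    {"parameters": {"temperature_c": 30}, "variables": {"od600": 0.80}},
    {"parameters": {"temperature_c": 30}, "variables": {"od600": 0.84}},
    {"parameters": {"temperature_c": 37}, "variables": {"od600": 1.10}},
    {"parameters": {"temperature_c": 37}, "variables": {"od600": 1.02}},
]


def summarize(records: List[dict], parameter: str, variable: str) -> Dict[object, Tuple[float, float]]:
    """Group a measured variable by a parameter's value; return (mean, variance) per group."""
    groups: Dict[object, List[float]] = defaultdict(list)
    for r in records:
        groups[r["parameters"][parameter]].append(r["variables"][variable])
    # statistics.variance is the sample variance and needs at least two points per group.
    return {
        value: (statistics.mean(obs), statistics.variance(obs))
        for value, obs in sorted(groups.items())
    }


summary = summarize(records, "temperature_c", "od600")
for temp, (mean, var) in summary.items():
    print(f"{temp} C: mean={mean:.3f}, variance={var:.5f}")
```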

Utilizing metadata files is a convenient way of reducing variability caused by parameter value changes. A metadata file should include all the parameters needed to perform the same experiment with the same equipment. In an experimental workflow, pairing a metadata file with the quantified dataset is fundamental to reproducing the same experiment later.[51][55][57] Additionally, metadata files allow the user to assess whether multiple experiments were performed using the same parameters.
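One lightweight way to pair a metadata file with a dataset is a JSON "sidecar" saved next to the data file. This sketch assumes that naming convention (`run1.csv` gets `run1.meta.json`); the helper functions and parameter names are hypothetical. The comparison function answers the question from the text: were two experiments run with the same parameters, and if not, which ones differ?

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, Tuple


def write_metadata(data_path: Path, parameters: dict) -> Path:
    """Write a sidecar metadata file next to a data file (run1.csv -> run1.meta.json)."""
    meta_path = data_path.parent / (data_path.stem + ".meta.json")
    payload = {"data_file": data_path.name, "parameters": parameters}
    meta_path.write_text(json.dumps(payload, indent=2, sort_keys=True), encoding="utf-8")
    return meta_path


def parameter_differences(meta_a: Path, meta_b: Path) -> Dict[str, Tuple[object, object]]:
    """Return {parameter: (value_a, value_b)} for every parameter that differs."""
    a = json.loads(meta_a.read_text(encoding="utf-8"))["parameters"]
    b = json.loads(meta_b.read_text(encoding="utf-8"))["parameters"]
    return {k: (a.get(k), b.get(k)) for k in sorted(a.keys() | b.keys()) if a.get(k) != b.get(k)}


with tempfile.TemporaryDirectory() as tmp:
    run1 = write_metadata(Path(tmp) / "run1.csv", {"temperature_c": 37, "incubation_min": 30})
    run2 = write_metadata(Path(tmp) / "run2.csv", {"temperature_c": 37, "incubation_min": 45})
    differences = parameter_differences(run1, run2)

print(differences)  # {'incubation_min': (30, 45)}
```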

Rule 8: Standardize your workflows

Track your parameters from beginning to end

Experimental parameters have a direct influence on observations. However, some factors may have indirect effects on observations or affect observations further downstream in a pipeline. For example, the parameters of a DNA purification process may indirectly influence the quality of sequencing data derived from the extracted DNA.

To uncover such indirect effects, it is necessary to capture the sequence of operations in workflows. For the above example, this would include the DNA extraction, preparation of the sequencing library, and the sequencing run itself. When dealing with such workflows, it is not possible to use a single sample ID as the key connecting different datasets as in Rule 4. The workflow involves multiple samples (i.e., the biological sample or tissue, the extracted DNA, the sequencing library) that each have their own identifier. Comprehensive inventory and data management systems will allow you to track the sample lineage and flows of data produced at different stages of an experimental process.
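A minimal sketch of lineage tracking for the DNA sequencing example, assuming each item records a single parent and the parameters of the step that created it. The IDs, step names, and parameters are hypothetical. Walking the parent links from a sequencing dataset recovers the extraction parameters that may have indirectly affected it. Real workflows that pool or merge samples need a graph with multiple parents per item, and this simple walk assumes there are no cycles.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical workflow records: each item links to its parent and stores the
# parameters of the operation that produced it.
ITEMS: Dict[str, dict] = {
    "TIS-001": {"parent": None, "step": "tissue collection",
                "params": {"tissue": "leaf"}},
    "DNA-014": {"parent": "TIS-001", "step": "DNA extraction",
                "params": {"kit_lot": "L123", "elution_ul": 50}},
    "LIB-007": {"parent": "DNA-014", "step": "library preparation",
                "params": {"pcr_cycles": 8}},
    "SEQ-003": {"parent": "LIB-007", "step": "sequencing run",
                "params": {"read_length": 150}},
}


def lineage(item_id: str, items: Dict[str, dict]) -> List[Tuple[str, str, dict]]:
    """Walk parent links from an item back to its origin, returned oldest first."""
    chain = []
    current: Optional[str] = item_id
    while current is not None:
        record = items[current]
        chain.append((current, record["step"], record["params"]))
        current = record["parent"]
    return list(reversed(chain))


for item_id, step, params in lineage("SEQ-003", ITEMS):
    print(f"{item_id:8} {step:20} {params}")
```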

Recording experimental parameters and workflows is especially critical when performing new experiments, since they are likely to change over time. As they are finalized, this information can be used to develop both standardized templates for documenting your workflow, as well as metrics for defining the success of each experiment, which can help you to optimize your experimental design and data collection efforts (Fig. 1).

Document your data processing pipeline

After the experimental data are collected, it is important to document the different steps used to process and analyze the data, such as whether the data were normalized or whether extreme values were excluded from the analyses. The use of ELNs and LIMS can facilitate standardized documentation: creating templates for experimental and analysis protocols can ensure that all the necessary information is collected, thereby improving reproducibility and publication efforts.[55][58]
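Processing decisions like these can be recorded automatically as the pipeline runs. In this minimal sketch, each step appends its name and parameters to a log that can be saved next to the processed data. The `ProcessingLog` class, the step functions, and the 0 to 10 range filter are hypothetical.

```python
import json
from datetime import datetime, timezone
from typing import List


class ProcessingLog:
    """Append-only record of processing steps and the parameters they used."""

    def __init__(self) -> None:
        self.steps: List[dict] = []

    def record(self, step: str, **params) -> None:
        self.steps.append({
            "step": step,
            "params": params,
            "utc_time": datetime.now(timezone.utc).isoformat(),
        })


def drop_out_of_range(values: List[float], log: ProcessingLog, low: float, high: float) -> List[float]:
    """Exclude values outside [low, high] and log how many were removed."""
    kept = [v for v in values if low <= v <= high]
    log.record("drop_out_of_range", low=low, high=high, n_removed=len(values) - len(kept))
    return kept


def normalize_to_total(values: List[float], log: ProcessingLog) -> List[float]:
    """Scale values so they sum to 1 and log the total used."""
    total = sum(values)
    log.record("normalize_to_total", total=total)
    return [v / total for v in values]


log = ProcessingLog()
cleaned = drop_out_of_range([2.0, 3.0, 5.0, 90.0], log, low=0.0, high=10.0)
normalized = normalize_to_total(cleaned, log)
print(json.dumps(log.steps, indent=2))  # store this alongside the processed data
```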

Similarly, thorough source code documentation is necessary to disseminate your data and ensure that other groups can reproduce your analyses. There are many resources on good coding and software engineering practices[14][15][16][59], so we only touch on a few important points. Developing a “computational narrative” by writing comments alongside your code or using interfaces that allow for markdown (e.g., Jupyter Notebook, R Markdown) can make code more understandable.[60][61][62] Additionally, using syntax conventions and giving meaningful names to your code variables increases readability (e.g., use "average_mass = 10" instead of "am = 10"). Furthermore, documenting the libraries or software used and their versions is necessary to achieve reproducibility. Finally, implementing a version control system, such as git, protects the provenance of your work and enables collaboration.[63]
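Library and software versions can be captured from within the analysis itself. This is a minimal sketch using only the standard library (`platform` and `importlib.metadata`, available in Python 3.8 and later). The package names queried are only examples. Tools such as `pip freeze` or a conda environment export serve the same purpose at the environment level.

```python
import json
import platform
from importlib import metadata
from typing import Dict, List, Optional


def environment_report(packages: List[str]) -> Dict[str, object]:
    """Collect interpreter, OS, and package versions for a reproducibility record."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": versions,
    }


print(json.dumps(environment_report(["numpy", "pandas"]), indent=2))
```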

Rule 9: Avoid data silos

Depending on your workflows, you may collect information from different instruments or use several databases to store and interact with different types of data. Care must be taken to prevent any of these databases from becoming data silos: segregated groups of data that restrict collaboration and make it difficult to capture insights resulting from data obtained by multiple instruments.[47][49][64] Data lakes and data warehouses are good solutions for integrating data silos.[47][49][64]

Data silos not only stymie research efforts but also raise significant security issues when the silo is the sole storage location. Keeping your data management plan up-to-date with your current needs and using the right databases for those needs can prevent this issue (see prior Rule 6). Regardless, it is crucial to back up your data in multiple places in case a file is corrupted, a service becomes unavailable, etc. Optimally, your data should always be saved in three different locations: two on-site and one in the cloud.[51] Of course, precautions should always be taken to ensure the privacy and security of your data online and in the cloud.[65][66]
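Backups only help if the copies are intact. A common way to check this is to compare cryptographic checksums. This minimal sketch hashes the original file and each copy with SHA-256. The two local folders simulate a second on-site location and a cloud location; a real check against cloud storage would use the provider's own tools.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large data files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_copies(original: Path, copies: List[Path]) -> Dict[str, bool]:
    """Report, for each backup location, whether an identical copy exists."""
    expected = sha256_of(original)
    return {str(c): c.exists() and sha256_of(c) == expected for c in copies}


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    original = root / "run1.csv"
    original.write_text("sample_id,od600\nS1,0.42\n")
    (root / "onsite2").mkdir()
    (root / "cloud").mkdir()  # stands in for a cloud location in this sketch
    shutil.copy(original, root / "onsite2" / "run1.csv")
    (root / "cloud" / "run1.csv").write_text("sample_id,od600\nS1,0.24\n")  # corrupted copy
    status = verify_copies(original, [root / "onsite2" / "run1.csv", root / "cloud" / "run1.csv"])

print(list(status.values()))  # [True, False]
```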

Never delete data

As projects develop and data accumulates, it may be tempting to delete data that no longer seems relevant. Data may also be lost as computers are replaced, research personnel leave, and storage limits are reached. Poorly managed data can be easily lost simply because it is unorganized and difficult to query. However, while data collection remains expensive, data storage continues to get cheaper, so there is little excuse for losing or deleting data today. The exception may be intermediary data that is generated by reproducible data processing pipelines, which can be easily regenerated if and when necessary. Most data files can also be compressed to combat limitations on storage capacity.
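Lossless compression is a simple way to stretch storage without deleting anything. This sketch uses Python's built-in `gzip` module on synthetic, highly repetitive tabular text. Real compression ratios depend on the data, and files that are already compressed (e.g., `.fastq.gz` or most image formats) will shrink little further.

```python
import gzip

# Synthetic, highly repetitive tabular text; real compression ratios will vary.
rows = "".join(f"S{i},{(i % 10) / 10:.2f}\n" for i in range(10_000))
raw = ("sample_id,od600\n" + rows).encode("utf-8")

compressed = gzip.compress(raw)
restored = gzip.decompress(compressed)  # lossless: identical bytes come back

print(f"{len(raw)} bytes -> {len(compressed)} bytes")
```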

Properly organized data is a treasure trove of information waiting to be discovered. By using computer-generated sample IDs (see prior Rule 4) and data lakes/warehouses (see prior Rule 6) to link data collected on different instruments, it is possible to extract and synthesize more information than originally intended in the project design. Data produced by different projects using common workflows (see prior Rule 8) can be analyzed to improve workflow performance. Data from failed experiments can be used to troubleshoot a problem affecting multiple projects.
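The key that makes such linking possible is the shared, computer-generated sample ID. This minimal sketch performs an outer join of results from two hypothetical instruments on that ID and then selects the samples measured by both. It assumes that column names do not collide across instruments; otherwise they should be namespaced (e.g., prefixed with the instrument name) before merging.

```python
from typing import Dict

# Hypothetical per-instrument results, each keyed by the same computer-generated sample ID.
plate_reader = {"S1": {"od600": 0.42}, "S2": {"od600": 0.55}}
sequencer = {"S1": {"reads_millions": 12.1}, "S3": {"reads_millions": 9.8}}


def outer_join(*sources: Dict[str, dict]) -> Dict[str, dict]:
    """Merge per-instrument tables on sample ID, keeping samples seen by any source.

    Assumes column names are distinct across sources; a repeated name would be
    overwritten by the later source.
    """
    merged: Dict[str, dict] = {}
    for source in sources:
        for sample_id, values in source.items():
            merged.setdefault(sample_id, {}).update(values)
    return merged


combined = outer_join(plate_reader, sequencer)
both = sorted(s for s, v in combined.items() if all(k in v for k in ("od600", "reads_millions")))
print(both)  # ['S1']
```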

Rule 10: Place your lab in context

Once you have developed a common culture (Rule 1), inventoried your laboratory (Rules 2 and 3), labeled your samples and equipment with computer-generated IDs (Rules 4 and 5), standardized your parameters and workflows (Rules 7 and 8), and backed up your data in several databases (Rules 6 and 9), what comes next?

Track processes occurring outside the lab

Laboratory operations and the data they produce are increasingly dependent on operations that take place outside of the lab. For example, the results of a polymerase chain reaction (PCR) assay will be affected by the primer design algorithm and the values of its parameters. They will also be affected by the quality of the primers manufactured by a specialized service provider. Even though primer design and primer synthesis do not take place in the lab, they are an integral part of the process of generating PCR data. They should therefore be captured in data flows (see prior Rule 8). Furthermore, the software and computational steps used to design experiments and analyze the data they produce must also be properly recorded, so that as many factors as possible that may affect the data produced in the lab can be identified.

Increase the accessibility of your work

There are several ways to place your lab in the greater scientific context and increase reproducibility. As discussed, using standardized, non-proprietary file types can increase ease of access within a lab and across groups.[14][51] You may also choose to make your data and source code public in an online repository to comply with journal requirements, increase transparency, or allow access to your data by other groups.[67] In addition, data exchange standards, such as the Synthetic Biology Open Language[68][69], increase the accessibility and reproducibility of your work.

Practice makes perfect

Whereas traditional data management methods can restrict your analyses to limited subsets of data, centralized information management systems (encompassing relational and NoSQL databases, metadata, sample tracking, etc.) facilitate the analysis of previously disparate datasets. Given the increasing availability and decreasing cost of information management systems, it is now possible for labs to produce, document, and track a seemingly endless number of samples and volume of data, and to use these to inform their research directions in previously impossible ways. When establishing your LIMS, or incorporating new experiments, it is better to capture more data than less. As you standardize your workflows (see prior Rule 8), you should be able to establish clear metrics defining the success of an experiment and to scale the amount of data you collect as needed.

While there are plenty of existing ELN and LIMS options to choose from (see Rule 6 and Rule 2, respectively), none are a turnkey solution. All data management systems require configuration and optimization for an individual lab. Each option has its own benefits and limitations, which your group must weigh. Coupled with the need to store your data with multiple backup options, thoughtful management practices are necessary to make any of these technologies work for your lab. The 10 rules discussed here should provide both a starting point and a continuing resource in the development of your LIMS. Remember that developing a LIMS is not a one-time event; all lab members must contribute to the maintenance of the LIMS and document their supplies, samples, and experiments in a timely manner. Although it might be an overwhelming process to begin with, careful data management will quickly benefit the data, users, and lab through saved time, standardized practices, and more powerful insights.[17][18]

Conclusions

Imparting a strong organizational structure to your lab information can ultimately save you both time and money if properly maintained. We present these 10 rules to help you build a strong foundation in managing your lab information so that you may avoid the costly and frustrating mistakes we have made over the years. By implementing these 10 rules, you should see some immediate benefits of your newfound structure, perhaps in the form of extra fridge space or fewer delays waiting for a reagent you did not realize was exhausted. In time, you will gain deep insights into your workflows and more easily analyze and report your data. The goal of these rules is also to spur conversation about lab management systems both between and within labs, as there is no one-size-fits-all solution for lab management. While these rules provide a great starting point, how to manage lab information must remain the subject of constant dialogue. The lab needs to discuss what is working and what is not working to assess and adjust the system to meet the needs of the lab. This dialogue must also be extended to all new members of the lab, as many of these organizational steps may not be intuitive. It is critical to train new members extensively and to ensure that they are integrated into the lab’s common culture, or else you risk falling back into bad practices. If properly trained, lab members will propagate and benefit from the organizational structure of the lab.

Acknowledgements

Funding

J.P., S.H., C.K., K.M., and C.-T.B. are supported by the National Science Foundation (award #2123367) and the National Institutes of Health (R01GM147816, T32GM132057). T.S.M. is supported by the Defense Advanced Research Projects Agency (N660012324032), the Office of Naval Research (N00014-21-1-2206), the U.S. Environmental Protection Agency (84020501), the National Institutes of Health (R01 AT009741), and the National Science Foundation (MCB-2001743 and EF-2222403). C.J.M. is supported by the National Science Foundation (MCB-2231864) and the National Institute of Standards and Technology (70NANB21H103). D.A.N. is supported by the National Institutes of Health (R00HD098330 and DP2AI171121). B.M. and L.U.A. are supported by the National Institutes of Health (R35 GM124747). T.E.G. was supported by a Royal Society University Research Fellowship grant UF160357 and a Turing Fellowship from The Alan Turing Institute under the EPSRC grant EP/N510129/1. W.Z. is supported by the National Institutes of Health (R01GM144961) and the National Science Foundation (IOS1922701).

This work was part of the Agile BioFoundry supported by the U.S. Department of Energy, Energy Efficiency and Renewable Energy, Bioenergy Technologies Office, and was part of the DOE Joint BioEnergy Institute supported by the U.S. Department of Energy, Office of Science, Office of Biological and Environmental Research, through contract DE-AC02-05CH11231 between Lawrence Berkeley National Laboratory and the U.S. Department of Energy.

The views and opinions of the authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof. Neither the United States Government nor any agency thereof, nor any of their employees, makes any warranty, expressed or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed or represents that its use would not infringe privately owned rights. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a nonexclusive, paid-up, irrevocable, worldwide license to publish or reproduce the published form of this manuscript, or allow others to do so, for United States Government purposes. The Department of Energy will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests

I have read the journal’s policy, and the authors of this manuscript have the following competing interests: J.P., S.P., and K.M. have a financial interest in GenoFAB, Inc. M.K. is an employee of LanzaTech. N.J.H. has a financial interest in TeselaGen Biotechnology, Inc. and Ansa Biotechnologies, Inc. GenoFAB, Inc. and TeselaGen Biotechnology, Inc. provide research information management systems. These companies may benefit or be perceived as benefiting from this publication.

References

- ↑ Noble, Denis (1 June 2002). "The rise of computational biology" (in en). Nature Reviews Molecular Cell Biology 3 (6): 459–463. doi:10.1038/nrm810. ISSN 1471-0072. https://www.nature.com/articles/nrm810.

- ↑ Sapoval, Nicolae; Aghazadeh, Amirali; Nute, Michael G.; Antunes, Dinler A.; Balaji, Advait; Baraniuk, Richard; Barberan, C. J.; Dannenfelser, Ruth et al. (1 April 2022). "Current progress and open challenges for applying deep learning across the biosciences" (in en). Nature Communications 13 (1): 1728. doi:10.1038/s41467-022-29268-7. ISSN 2041-1723. PMC PMC8976012. PMID 35365602. https://www.nature.com/articles/s41467-022-29268-7.

- ↑ 3.0 3.1 Chuang, Han-Yu; Hofree, Matan; Ideker, Trey (10 November 2010). "A Decade of Systems Biology" (in en). Annual Review of Cell and Developmental Biology 26 (1): 721–744. doi:10.1146/annurev-cellbio-100109-104122. ISSN 1081-0706. PMC PMC3371392. PMID 20604711. https://www.annualreviews.org/doi/10.1146/annurev-cellbio-100109-104122.

- ↑ 4.0 4.1 Chen, Chun; Le, Huong; Goudar, Chetan T. (1 March 2016). "Integration of systems biology in cell line and process development for biopharmaceutical manufacturing" (in en). Biochemical Engineering Journal 107: 11–17. doi:10.1016/j.bej.2015.11.013. https://linkinghub.elsevier.com/retrieve/pii/S1369703X1530111X.

- ↑ Yue, Rongting; Dutta, Abhishek (3 October 2022). "Computational systems biology in disease modeling and control, review and perspectives" (in en). npj Systems Biology and Applications 8 (1): 37. doi:10.1038/s41540-022-00247-4. ISSN 2056-7189. PMC PMC9528884. PMID 36192551. https://www.nature.com/articles/s41540-022-00247-4.

- ↑ 6.0 6.1 Markowetz, Florian (9 March 2017). "All biology is computational biology" (in en). PLOS Biology 15 (3): e2002050. doi:10.1371/journal.pbio.2002050. ISSN 1545-7885. PMC PMC5344307. PMID 28278152. https://dx.plos.org/10.1371/journal.pbio.2002050.

- ↑ Ghosh, Samik; Matsuoka, Yukiko; Asai, Yoshiyuki; Hsin, Kun-Yi; Kitano, Hiroaki (1 December 2011). "Software for systems biology: from tools to integrated platforms" (in en). Nature Reviews Genetics 12 (12): 821–832. doi:10.1038/nrg3096. ISSN 1471-0056. https://www.nature.com/articles/nrg3096.

- ↑ El Karoui, Meriem; Hoyos-Flight, Monica; Fletcher, Liz (7 August 2019). "Future Trends in Synthetic Biology—A Report". Frontiers in Bioengineering and Biotechnology 7: 175. doi:10.3389/fbioe.2019.00175. ISSN 2296-4185. PMC PMC6692427. PMID 31448268. https://www.frontiersin.org/article/10.3389/fbioe.2019.00175/full.

- ↑ Osborne, James M.; Bernabeu, Miguel O.; Bruna, Maria; Calderhead, Ben; Cooper, Jonathan; Dalchau, Neil; Dunn, Sara-Jane; Fletcher, Alexander G. et al. (27 March 2014). Bourne, Philip E., ed. "Ten Simple Rules for Effective Computational Research" (in en). PLoS Computational Biology 10 (3): e1003506. doi:10.1371/journal.pcbi.1003506. ISSN 1553-7358. PMC PMC3967918. PMID 24675742. https://dx.plos.org/10.1371/journal.pcbi.1003506.

- ↑ Sandve, Geir Kjetil; Nekrutenko, Anton; Taylor, James; Hovig, Eivind (24 October 2013). Bourne, Philip E., ed. "Ten Simple Rules for Reproducible Computational Research" (in en). PLoS Computational Biology 9 (10): e1003285. doi:10.1371/journal.pcbi.1003285. ISSN 1553-7358. PMC PMC3812051. PMID 24204232. https://dx.plos.org/10.1371/journal.pcbi.1003285.

- ↑ Goodman, Alyssa; Pepe, Alberto; Blocker, Alexander W.; Borgman, Christine L.; Cranmer, Kyle; Crosas, Merce; Di Stefano, Rosanne; Gil, Yolanda et al. (24 April 2014). Bourne, Philip E., ed. "Ten Simple Rules for the Care and Feeding of Scientific Data" (in en). PLoS Computational Biology 10 (4): e1003542. doi:10.1371/journal.pcbi.1003542. ISSN 1553-7358. PMC PMC3998871. PMID 24763340. https://dx.plos.org/10.1371/journal.pcbi.1003542.

- ↑ Kazic, Toni (20 October 2015). "Ten Simple Rules for Experiments’ Provenance" (in en). PLOS Computational Biology 11 (10): e1004384. doi:10.1371/journal.pcbi.1004384. ISSN 1553-7358. PMC PMC4619002. PMID 26485673. https://dx.plos.org/10.1371/journal.pcbi.1004384.

- ↑ Zook, Matthew; Barocas, Solon; boyd, danah; Crawford, Kate; Keller, Emily; Gangadharan, Seeta Peña; Goodman, Alyssa; Hollander, Rachelle et al. (30 March 2017). Lewitter, Fran, ed. "Ten simple rules for responsible big data research" (in en). PLOS Computational Biology 13 (3): e1005399. doi:10.1371/journal.pcbi.1005399. ISSN 1553-7358. PMC PMC5373508. PMID 28358831. https://dx.plos.org/10.1371/journal.pcbi.1005399.

- ↑ 14.0 14.1 14.2 14.3 List, Markus; Ebert, Peter; Albrecht, Felipe (5 January 2017). Markel, Scott, ed. "Ten Simple Rules for Developing Usable Software in Computational Biology" (in en). PLOS Computational Biology 13 (1): e1005265. doi:10.1371/journal.pcbi.1005265. ISSN 1553-7358. PMC PMC5215831. PMID 28056032. https://dx.plos.org/10.1371/journal.pcbi.1005265.

- ↑ 15.0 15.1 Prlić, Andreas; Procter, James B. (6 December 2012). "Ten Simple Rules for the Open Development of Scientific Software" (in en). PLoS Computational Biology 8 (12): e1002802. doi:10.1371/journal.pcbi.1002802. ISSN 1553-7358. PMC PMC3516539. PMID 23236269. https://dx.plos.org/10.1371/journal.pcbi.1002802.

- ↑ 16.0 16.1 Taschuk, Morgan; Wilson, Greg (13 April 2017). "Ten simple rules for making research software more robust" (in en). PLOS Computational Biology 13 (4): e1005412. doi:10.1371/journal.pcbi.1005412. ISSN 1553-7358. PMC PMC5390961. PMID 28407023. https://dx.plos.org/10.1371/journal.pcbi.1005412.

- ↑ 17.0 17.1 17.2 17.3 17.4 Myneni, Sahiti; Patel, Vimla L. (1 June 2010). "Organization of biomedical data for collaborative scientific research: A research information management system" (in en). International Journal of Information Management 30 (3): 256–264. doi:10.1016/j.ijinfomgt.2009.09.005. PMC PMC2882303. PMID 20543892. https://linkinghub.elsevier.com/retrieve/pii/S0268401209001182.

- ↑ 18.0 18.1 Prasad, Poonam J.; Bodhe, G.L. (1 August 2012). "Trends in laboratory information management system" (in en). Chemometrics and Intelligent Laboratory Systems 118: 187–192. doi:10.1016/j.chemolab.2012.07.001. https://linkinghub.elsevier.com/retrieve/pii/S0169743912001438.

- ↑ 19.0 19.1 19.2 19.3 19.4 19.5 Knapp, Bernhard; Bardenet, Rémi; Bernabeu, Miguel O.; Bordas, Rafel; Bruna, Maria; Calderhead, Ben; Cooper, Jonathan; Fletcher, Alexander G. et al. (30 April 2015). "Ten Simple Rules for a Successful Cross-Disciplinary Collaboration" (in en). PLOS Computational Biology 11 (4): e1004214. doi:10.1371/journal.pcbi.1004214. ISSN 1553-7358. PMC PMC4415777. PMID 25928184. https://dx.plos.org/10.1371/journal.pcbi.1004214.

- ↑ 20.0 20.1 20.2 20.3 MacLeod, Miles (1 February 2018). "What makes interdisciplinarity difficult? Some consequences of domain specificity in interdisciplinary practice" (in en). Synthese 195 (2): 697–720. doi:10.1007/s11229-016-1236-4. ISSN 0039-7857. http://link.springer.com/10.1007/s11229-016-1236-4.

- ↑ 21.0 21.1 21.2 Vicens, Quentin; Bourne, Philip E. (2007). "Ten Simple Rules for a Successful Collaboration" (in en). PLoS Computational Biology 3 (3): e44. doi:10.1371/journal.pcbi.0030044. ISSN 1553-734X. PMC PMC1847992. PMID 17397252. https://dx.plos.org/10.1371/journal.pcbi.0030044.

- ↑ Andreev, Andrey; Komatsu, Valerie; Almiron, Paula; Rose, Kasey; Hughes, Alexandria; Lee, Maurice Y (3 May 2022). "Welcome to the lab" (in en). eLife 11: e79627. doi:10.7554/eLife.79627. ISSN 2050-084X. PMC PMC9064289. PMID 35503004. https://elifesciences.org/articles/79627.

- ↑ 23.0 23.1 Rasmussen, Lynn; Maddox, Clinton B.; Harten, Bill; White, E. Lucile (1 December 2007). "A Successful LIMS Implementation: Case Study at Southern Research Institute" (in en). JALA: Journal of the Association for Laboratory Automation 12 (6): 384–390. doi:10.1016/j.jala.2007.08.002. ISSN 1535-5535. http://journals.sagepub.com/doi/10.1016/j.jala.2007.08.002.

- ↑ 24.0 24.1 24.2 24.3 24.4 Wilkinson, Mark D.; Dumontier, Michel; Aalbersberg, IJsbrand Jan; Appleton, Gabrielle; Axton, Myles; Baak, Arie; Blomberg, Niklas; Boiten, Jan-Willem et al. (15 March 2016). "The FAIR Guiding Principles for scientific data management and stewardship" (in en). Scientific Data 3 (1): 160018. doi:10.1038/sdata.2016.18. ISSN 2052-4463. PMC PMC4792175. PMID 26978244. https://www.nature.com/articles/sdata201618.

- ↑ 25.0 25.1 25.2 Perkel, Jeffrey M. (6 August 2015). "Lab-inventory management: Time to take stock" (in en). Nature 524 (7563): 125–126. doi:10.1038/524125a. ISSN 0028-0836. https://www.nature.com/articles/524125a.

- ↑ Foster, Barbara L. (1 September 2005). "The Chemical Inventory Management System in academia" (in en). Chemical Health & Safety 12 (5): 21–25. doi:10.1016/j.chs.2005.01.019. ISSN 1074-9098. https://pubs.acs.org/doi/10.1016/j.chs.2005.01.019.

- ↑ "What the FDA Guidance on Data Integrity Means for Your Lab". Our Insights. Astrix, Inc. 11 May 2022. https://astrixinc.com/blog/fda-guidance-for-data-integrity/. Retrieved 18 March 2024.

- ↑ 28.0 28.1 Sivakami, N. (2018). "Comparative Study of Barcode, QR-code and RFID System in Library Environment". International Journal of Academic Research in Library & Information Science 1 (1): 1–5. https://science.eurekajournals.com/index.php/IJARLIS/article/view/12.

- ↑ Tiwari, Sumit (1 December 2016). "An Introduction to QR Code Technology". 2016 International Conference on Information Technology (ICIT) (Bhubaneswar, India: IEEE): 39–44. doi:10.1109/ICIT.2016.021. ISBN 978-1-5090-3584-7. http://ieeexplore.ieee.org/document/7966807/.

- ↑ Copp, Adam J.; Kennedy, Theodore A.; Muehlbauer, Jeffrey D. (1 July 2014). "Barcodes Are a Useful Tool for Labeling and Tracking Ecological Samples" (in en). The Bulletin of the Ecological Society of America 95 (3): 293–300. doi:10.1890/0012-9623-95.3.293. ISSN 0012-9623. https://esajournals.onlinelibrary.wiley.com/doi/10.1890/0012-9623-95.3.293.

- ↑ Shukran, M A M; Ishak, M S; Abdullah, M N (1 August 2017). "Enhancing Chemical Inventory Management in Laboratory through a Mobile-Based QR Code Tag". IOP Conference Series: Materials Science and Engineering 226: 012093. doi:10.1088/1757-899X/226/1/012093. ISSN 1757-8981. https://iopscience.iop.org/article/10.1088/1757-899X/226/1/012093.

- ↑ Wahab, M.H.A.; Kadir, H.A.; Tukiran, Z. et al. (2010). "Web-based laboratory equipment monitoring system using RFID". 2010 International Conference on Intelligent and Advanced Systems. doi:10.1109/icias.2010.5716177. https://ieeexplore.ieee.org/document/5716177/.

- ↑ "Zebra Programming Language (ZPL)". Zebra Developers. https://developer.zebra.com/products/printers/zpl. Retrieved 30 September 2023.

- ↑ Beiner, G.G. (1 January 2020). "Labels for Eternity: Testing Printed Labels for use in Wet Collections" (in en). Collection Forum 34 (1): 101–113. doi:10.14351/0831-4985-34.1.101. ISSN 0831-4985. https://meridian.allenpress.com/collection-forum/article/34/1/101/471436/Labels-for-Eternity-Testing-Printed-Labels-for-use.

- ↑ "Label Printers - Thermal Transfer Label Printers and How They Work". Cableorganizer.com, LLC. https://www.cableorganizer.com/categories/cable-identification/label-printers/. Retrieved 30 September 2023.

- ↑ "How to Select the Right Thermal Transfer Ribbon Type". Technicode, Inc. 2 March 2022. https://technicodelabels.com/blog/how-to-select-thermal-transfer-ribbon-type/. Retrieved 30 September 2023.

- ↑ Gonzales, Sara; Carson, Matthew B.; Holmes, Kristi (3 August 2022). Markel, Scott. ed. "Ten simple rules for maximizing the recommendations of the NIH data management and sharing plan" (in en). PLOS Computational Biology 18 (8): e1010397. doi:10.1371/journal.pcbi.1010397. ISSN 1553-7358. PMC PMC9348704. PMID 35921268. https://dx.plos.org/10.1371/journal.pcbi.1010397.

- ↑ Michener, William K. (22 October 2015). Bourne, Philip E. ed. "Ten Simple Rules for Creating a Good Data Management Plan" (in en). PLOS Computational Biology 11 (10): e1004525. doi:10.1371/journal.pcbi.1004525. ISSN 1553-7358. PMC PMC4619636. PMID 26492633. https://dx.plos.org/10.1371/journal.pcbi.1004525.

- ↑ Peccoud, Jean (30 September 2021). "Data sharing policies: share well and you shall be rewarded" (in en). Synthetic Biology 6 (1): ysab028. doi:10.1093/synbio/ysab028. ISSN 2397-7000. PMC PMC8482415. PMID 34604538. https://academic.oup.com/synbio/article/doi/10.1093/synbio/ysab028/6366307.

- ↑ Christensen, Garret; Dafoe, Allan; Miguel, Edward; Moore, Don A.; Rose, Andrew K. (18 December 2019). Naudet, Florian. ed. "A study of the impact of data sharing on article citations using journal policies as a natural experiment" (in en). PLOS ONE 14 (12): e0225883. doi:10.1371/journal.pone.0225883. ISSN 1932-6203. PMC PMC6919593. PMID 31851689. https://dx.plos.org/10.1371/journal.pone.0225883.

- ↑ 41.0 41.1 Kass, Robert E.; Caffo, Brian S.; Davidian, Marie; Meng, Xiao-Li; Yu, Bin; Reid, Nancy (9 June 2016). Lewitter, Fran. ed. "Ten Simple Rules for Effective Statistical Practice" (in en). PLOS Computational Biology 12 (6): e1004961. doi:10.1371/journal.pcbi.1004961. ISSN 1553-7358. PMC PMC4900655. PMID 27281180. https://dx.plos.org/10.1371/journal.pcbi.1004961.

- ↑ Foster, Erin D.; Deardorff, Ariel (4 April 2017). "Open Science Framework (OSF)". Journal of the Medical Library Association 105 (2). doi:10.5195/jmla.2017.88. ISSN 1558-9439. PMC PMC5370619. http://jmla.pitt.edu/ojs/jmla/article/view/88.

- ↑ Nayak, A.; Poriya, A.; Poojary, D. (2013). "Type Of NOSQL Databases And Its Comparison With Relational Databases". International Journal of Applied Information Systems 5 (4): 16–19. https://www.ijais.org/archives/volume5/number4/434-0888/.

- ↑ Pokorný, Jaroslav (1 November 2019). "Integration of Relational and NoSQL Databases" (in en). Vietnam Journal of Computer Science 06 (04): 389–405. doi:10.1142/S2196888819500210. ISSN 2196-8888. https://www.worldscientific.com/doi/abs/10.1142/S2196888819500210.

- ↑ Sahatqija, Kosovare; Ajdari, Jaumin; Zenuni, Xhemal; Raufi, Bujar; Ismaili, Florije (1 May 2018). "Comparison between relational and NOSQL databases". 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO) (Opatija: IEEE): 0216–0221. doi:10.23919/MIPRO.2018.8400041. ISBN 978-953-233-095-3. https://ieeexplore.ieee.org/document/8400041/.

- ↑ Nargesian, Fatemeh; Zhu, Erkang; Miller, Renée J.; Pu, Ken Q.; Arocena, Patricia C. (1 August 2019). "Data lake management: challenges and opportunities" (in en). Proceedings of the VLDB Endowment 12 (12): 1986–1989. doi:10.14778/3352063.3352116. ISSN 2150-8097. https://dl.acm.org/doi/10.14778/3352063.3352116.

- ↑ 47.0 47.1 47.2 Sawadogo, Pegdwendé; Darmont, Jérôme (1 February 2021). "On data lake architectures and metadata management" (in en). Journal of Intelligent Information Systems 56 (1): 97–120. doi:10.1007/s10844-020-00608-7. ISSN 0925-9902. https://link.springer.com/10.1007/s10844-020-00608-7.

- ↑ Giebler, Corinna; Gröger, Christoph; Hoos, Eva; Schwarz, Holger; Mitschang, Bernhard (2019), Ordonez, Carlos; Song, Il-Yeol; Anderst-Kotsis, Gabriele et al., eds., "Leveraging the Data Lake: Current State and Challenges" (in en), Big Data Analytics and Knowledge Discovery (Cham: Springer International Publishing) 11708: 179–188, doi:10.1007/978-3-030-27520-4_13, ISBN 978-3-030-27519-8, https://link.springer.com/10.1007/978-3-030-27520-4_13. Retrieved 19 March 2024.

- ↑ 49.0 49.1 49.2 Nambiar, Athira; Mundra, Divyansh (7 November 2022). "An Overview of Data Warehouse and Data Lake in Modern Enterprise Data Management" (in en). Big Data and Cognitive Computing 6 (4): 132. doi:10.3390/bdcc6040132. ISSN 2504-2289. https://www.mdpi.com/2504-2289/6/4/132.

- ↑ Shijitha, R.; Karthigaikumar, P.; Stanly Paul, A. (2022). "Data Warehouse Design for Big Data in Academia" (in en). Computers, Materials & Continua 71 (1): 979–992. doi:10.32604/cmc.2022.016676. ISSN 1546-2226. https://www.techscience.com/cmc/v71n1/45356.

- ↑ 51.0 51.1 51.2 51.3 51.4 Hart, Edmund M.; Barmby, Pauline; LeBauer, David; Michonneau, François; Mount, Sarah; Mulrooney, Patrick; Poisot, Timothée; Woo, Kara H. et al. (20 October 2016). Markel, Scott. ed. "Ten Simple Rules for Digital Data Storage" (in en). PLOS Computational Biology 12 (10): e1005097. doi:10.1371/journal.pcbi.1005097. ISSN 1553-7358. PMC PMC5072699. PMID 27764088. https://dx.plos.org/10.1371/journal.pcbi.1005097.

- ↑ Rigden, Daniel J; Fernández, Xosé M (7 January 2022). "The 2022 Nucleic Acids Research database issue and the online molecular biology database collection" (in en). Nucleic Acids Research 50 (D1): D1–D10. doi:10.1093/nar/gkab1195. ISSN 0305-1048. PMC PMC8728296. PMID 34986604. https://academic.oup.com/nar/article/50/D1/D1/6495890.

- ↑ Helmy, Mohamed; Crits-Christoph, Alexander; Bader, Gary D. (10 November 2016). "Ten Simple Rules for Developing Public Biological Databases" (in en). PLOS Computational Biology 12 (11): e1005128. doi:10.1371/journal.pcbi.1005128. ISSN 1553-7358. PMC PMC5104318. PMID 27832061. https://dx.plos.org/10.1371/journal.pcbi.1005128.

- ↑ "Introduction to CLC Sequence Viewer". QIAGEN N.V. 1 June 2018. https://resources.qiagenbioinformatics.com/manuals/clcsequenceviewer/current/index.php?manual=Introduction_CLC_Sequence_Viewer.html. Retrieved 29 September 2023.

- ↑ 55.0 55.1 55.2 Nussbeck, Sara Y; Weil, Philipp; Menzel, Julia; Marzec, Bartlomiej; Lorberg, Kai; Schwappach, Blanche (1 June 2014). "The laboratory notebook in the 21st century: The electronic laboratory notebook would enhance good scientific practice and increase research productivity" (in en). EMBO reports 15 (6): 631–634. doi:10.15252/embr.201338358. ISSN 1469-221X. PMC PMC4197872. PMID 24833749. https://www.embopress.org/doi/10.15252/embr.201338358.

- ↑ 56.0 56.1 Altman, Naomi; Krzywinski, Martin (1 January 2015). "Sources of variation" (in en). Nature Methods 12 (1): 5–6. doi:10.1038/nmeth.3224. ISSN 1548-7091. https://www.nature.com/articles/nmeth.3224.

- ↑ White, Ethan; Baldridge, Elita; Brym, Zachary; Locey, Kenneth; McGlinn, Daniel; Supp, Sarah (2013). "Nine simple ways to make it easier to (re)use your data". Ideas in Ecology and Evolution 6 (2). doi:10.4033/iee.2013.6b.6.f. https://ojs.library.queensu.ca/index.php/IEE/article/view/4608.

- ↑ Dunie, Matt (7 November 2017). Lawlor, Bonnie. ed. "The importance of research data management: The value of electronic laboratory notebooks in the management of data integrity and data availability". Information Services & Use 37 (3): 355–359. doi:10.3233/ISU-170843. https://www.medra.org/servlet/aliasResolver?alias=iospress&doi=10.3233/ISU-170843.

- ↑ Lee, Benjamin D. (20 December 2018). Markel, Scott. ed. "Ten simple rules for documenting scientific software" (in en). PLOS Computational Biology 14 (12): e1006561. doi:10.1371/journal.pcbi.1006561. ISSN 1553-7358. PMC PMC6301674. PMID 30571677. https://dx.plos.org/10.1371/journal.pcbi.1006561.

- ↑ Baumer, Benjamin; Udwin, Dana (1 May 2015). "R Markdown" (in en). WIREs Computational Statistics 7 (3): 167–177. doi:10.1002/wics.1348. ISSN 1939-5108. https://wires.onlinelibrary.wiley.com/doi/10.1002/wics.1348.

- ↑ Kluyver, Thomas; Ragan-Kelley, Benjamin; Pérez, Fernando; Granger, Brian; Bussonnier, Matthias; Frederic, Jonathan; Kelley, Kyle et al. (2016). "Jupyter Notebooks – a publishing format for reproducible computational workflows". Positioning and Power in Academic Publishing: Players, Agents and Agendas: 87–90. doi:10.3233/978-1-61499-649-1-87. https://ebooks.iospress.nl/doi/10.3233/978-1-61499-649-1-87.

- ↑ Rule, Adam; Birmingham, Amanda; Zuniga, Cristal; Altintas, Ilkay; Huang, Shih-Cheng; Knight, Rob; Moshiri, Niema; Nguyen, Mai H. et al. (25 July 2019). Lewitter, Fran. ed. "Ten simple rules for writing and sharing computational analyses in Jupyter Notebooks" (in en). PLOS Computational Biology 15 (7): e1007007. doi:10.1371/journal.pcbi.1007007. ISSN 1553-7358. PMC PMC6657818. PMID 31344036. https://dx.plos.org/10.1371/journal.pcbi.1007007.

- ↑ Perez-Riverol, Yasset; Gatto, Laurent; Wang, Rui; Sachsenberg, Timo; Uszkoreit, Julian; Leprevost, Felipe da Veiga; Fufezan, Christian; Ternent, Tobias et al. (14 July 2016). Markel, Scott. ed. "Ten Simple Rules for Taking Advantage of Git and GitHub" (in en). PLOS Computational Biology 12 (7): e1004947. doi:10.1371/journal.pcbi.1004947. ISSN 1553-7358. PMC PMC4945047. PMID 27415786. https://dx.plos.org/10.1371/journal.pcbi.1004947.

- ↑ 64.0 64.1 Patel, Jayesh (29 June 2019). "Bridging Data Silos Using Big Data Integration". International Journal of Database Management Systems 11 (3): 01–06. doi:10.5121/ijdms.2019.11301. http://aircconline.com/ijdms/V11N3/11319ijdms01.pdf.

- ↑ Abadi, D.J. (March 2009). "Data Management in the Cloud: Limitations and Opportunities". Bulletin of the Technical Committee on Data Engineering 32 (1): 3–12. http://sites.computer.org/debull/A09mar/A09MAR-CD.pdf.

- ↑ Tabrizchi, Hamed; Kuchaki Rafsanjani, Marjan (1 December 2020). "A survey on security challenges in cloud computing: issues, threats, and solutions" (in en). The Journal of Supercomputing 76 (12): 9493–9532. doi:10.1007/s11227-020-03213-1. ISSN 0920-8542. http://link.springer.com/10.1007/s11227-020-03213-1.

- ↑ Boland, Mary Regina; Karczewski, Konrad J.; Tatonetti, Nicholas P. (19 January 2017). "Ten Simple Rules to Enable Multi-site Collaborations through Data Sharing" (in en). PLOS Computational Biology 13 (1): e1005278. doi:10.1371/journal.pcbi.1005278. ISSN 1553-7358. PMC PMC5245793. PMID 28103227. https://dx.plos.org/10.1371/journal.pcbi.1005278.

- ↑ Baig, Hasan; Fontanarrosa, Pedro; Kulkarni, Vishwesh; McLaughlin, James Alastair; Vaidyanathan, Prashant; Bartley, Bryan; Beal, Jacob; Crowther, Matthew et al. (24 August 2020). "Synthetic biology open language (SBOL) version 3.0.0" (in en). Journal of Integrative Bioinformatics 17 (2-3): 20200017. doi:10.1515/jib-2020-0017. ISSN 1613-4516. PMC PMC7756618. PMID 32589605. https://www.degruyter.com/document/doi/10.1515/jib-2020-0017/html.