Difference between revisions of "LII:A Science Student's Guide to Laboratory Informatics"

Shawndouglas (talk | contribs) (Created stub. Saving and adding more.) |

Shawndouglas (talk | contribs) m (Text replacement - "\[\[Accelrys, Inc.(.*)" to "[[Vendor:Accelrys, Inc.$1") |


| (14 intermediate revisions by the same user not shown) | |||

| Line 6: | Line 6: | ||

'''Publication date''': November 2023

==Introduction==

| Line 19: | Line 12: | ||

The purpose of this guide is to provide a student with a look at the [[Informatics (academic field)|informatics]] landscape in ''industrial labs''. The guide has two goals:

#Provide a framework to help the reader understand what they need to know to be both comfortable and effective in an industrial setting, giving them a starting point for learning about the product classes and technologies; and,
#Give an instructor an outline of a survey course should they want to pursue teaching this type of material.

This guide is not intended to provide a textbook-scale level of discussion. It's an annotated map of the laboratory portion of a technological world, identifying critical points of interest and how they relate to one another, while making recommendations for the reader to learn more. Its intent is that in one document you can appreciate what the technologies are, and if you hear their names, you'll be able to understand the technologies' higher-level positioning and function. The details, which are continually developing, will be referenced elsewhere.

| Line 35: | Line 28: | ||

Outside of regulations and standards, even company policy can drive the desire for timely, high-quality analytical results. Corporate guidelines for lab operations ensure that laboratory data and information is well-managed and supportable. Consider a lawsuit brought by a consumer about product quality. If the company can't demonstrate that the data supporting product quality is on solid ground, it may be liable for significant damages. Seeking to avoid this, the company puts into place enforceable policy and procedures (P&P) and may even put into place a [[quality management system]] (QMS).

As noted, labs are production operations. There is more leeway in research, but service labs (e.g., analytical, physical properties, quality control, and contract testing) are heavily production-oriented, so some refer to the work as "scientific manufacturing" or "scientific production work" because of the heavy reliance on [[Laboratory automation|automation]]. That dependence on automation has led to the adoption of systems such as [[laboratory information management system]]s (LIMS), [[electronic laboratory notebook]]s (ELN), [[scientific data management system]]s (SDMS), instrument data systems (IDS), and robotics to organize and manage the work, and produce results. Some aspects of research, where large volumes of [[Sample (material)|sample]] processing are essential, have the same issues. Yet realistically, how immersed are today's student scientists in the realities of these systems and their use outside of academia? Are they being taught sufficiently about these and other electronic systems that are increasingly finding their way into the modern industrial laboratory?

==Operational models for research and service laboratories== | ==Operational models for research and service laboratories== | ||

Before we get too deeply into laboratory informatics concepts, we need to describe the setting where informatics tools are used. Otherwise, the tools won’t make sense. Scientific work, particularly laboratory work, is process-driven at several levels. Organizational processes describe how a business works and how the various departments relate to each other. Laboratories have processes operating at different levels; one may describe how the lab functions and carries out its intended purpose, and others detail how experimental procedures are carried out. Some of these processes—accounting, for example—are largely, with a few exceptions, the same across organizations in differing industries. Others depend on the industry and are the basis for requiring industry experience before hiring people at the mid- and upper levels. Still other activities, such as research, depend on the particular mission of a lab within an organization. A given company may have several different research laboratories directed at different areas of work with only the word “research” and some broad generalizations about what is in common. However, their internal methods of operation can vary widely. | |||

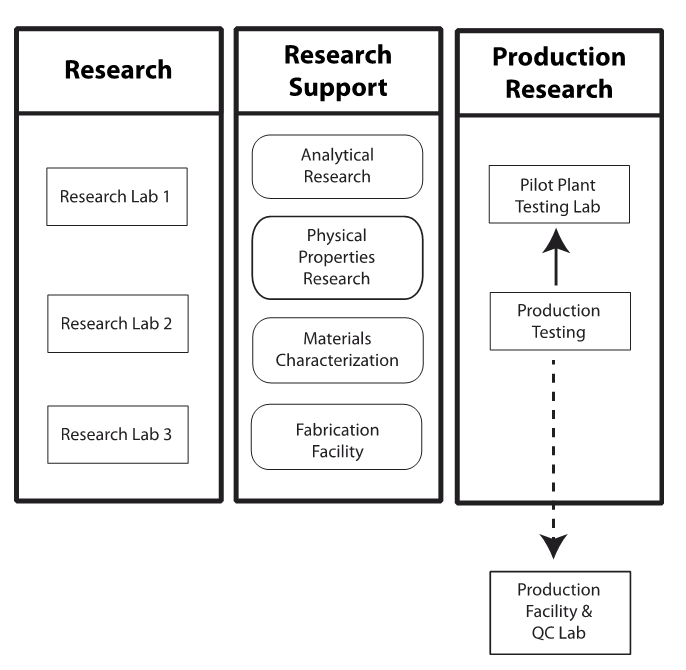

We’ll begin by looking at the working environment. Figure 1 shows the functions we need to consider. That model is based on the author’s experience; however, it fits many applied research groups in different industries whose work is intended to lead to new and improved products. The names of the labs may change to include [[microbiology]], [[toxicology]], electronics, [[Forensic science|forensics]], etc., depending on the industry, but the functional behavior will be similar. | |||

[[File:Fig1 Liscouski SciStudGuideLabInfo23.png|686px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="686px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' An organizational structure of an R&D group.</blockquote> | |||

|- | |||

|} | |||

|} | |||

The labs I was working in supported R&D in polymers and pharmaceuticals because that's what the overarching company had broad interest in. The research labs (left column of Figure 1) were focused on those projects. The other facilities consisted of: | |||

*Analytical research: This lab had four functions: routine chemical analysis in support of the research labs, new method development to support both research and production [[quality control]] (QC), non-routine analytical work to address special projects, and process monitoring that tested the accuracy of the production QC labs (several production facilities were making different products). | |||

*Physical properties research: Similar in function to the analytical lab, this lab measured the physical properties of polymers instead of performing chemical analysis. | |||

*Materials characterization: This group worked with research and special projects looking at the composition of polymers and their properties such as rheology, molecular weight distribution, and other characteristics. | |||

*Fabrication: The fabrication facility processed experimental polymers into blends, films, and other components that could be further tested in the physical properties lab. | |||

Once an experimental material reached a stage where it was ready for scale-up development, it entered the pilot plant, where production processes were designed and tested to see if the material could be made in larger quantities and still retain its desirable properties (i.e., effectively produced to scale). A dedicated testing lab supported the pilot plant to do raw materials, in-process, and post-production testing. If a product met its goals, it was moved to a production facility for larger-scale testing and eventually commercial production. | |||

===Intra-lab workflows=== | |||

Let’s look at each of these support lab groups more closely and examine how their [[workflow]]s relate. | |||

====Analytical research==== | |||

The workflows in this lab fell into two categories: routine testing (i.e., the service lab model) and research. In the routine testing portion, samples could come from the research labs, production facilities, and the pilot plant testing lab. The research work could come from salespeople (e.g., “We found this in a sample of a competitive product, what is it?”, “Our customer asked us to analyze this,” etc.), customer support trying to solve customer issues, and researchers developing test methods to support research. The methods used for analysis could come from various sources depending on the industry, e.g., standards organizations such as ASTM International (formerly the American Society for Testing and Materials, but their scope expanded over time), peer-reviewed academic journals, vendors, and intra-organizational sources.

====Physical properties research==== | |||

The work here was predominately routine testing (i.e., the service lab model). Although samples could come from a variety of sources, as with analytical research, the test methods were standardized and came from groups like ASTM, and in some cases the customers of the company's products. Standardized procedures were used to compare results to testing by other organizations, including potential customers. Labs like this are today found in a variety of industries, including pharmaceuticals, where the lab might be responsible for tablet uniformity testing, among other things. | |||

====Materials characterization==== | |||

As noted, this lab performed work that fell between the analytical and physical properties labs. While their test protocols were standardized within the labs, the nature of the materials they worked on involved individual considerations on how the analysis should be approached and the results interpreted. At one level, they were a service lab and followed that behavior. On another, the execution of testing required more than "just another sample" thinking. | |||

====Fabrication==== | |||

The fabrication facility processed materials from a variety of sources: evaluation samples from both the production facility and pilot plant, as well as competitive material evaluation from the research labs. They also did parts fabrication for testing in the physical properties lab. Some physical tests required plastic materials formed into special shapes; for example, tensile bars for tensile strength testing (test bars are stretched to see how they deform and eventually fail). The sample sizes they worked with ranged from a few pounds to thousands of pounds (e.g., film production).

The pilot plant testing lab did evaluations on scaled-up processing materials. They had to be located within the pilot plant for fast turn-around testing, including on-demand work and routine analysis. They also serviced process chromatographs for in-line testing. Their test procedures came from both the chemical and physical labs as they were responsible for a variety of tests on small samples; anything larger was sent to the analytical research labs. The pilot plant testing lab followed a service lab model. | |||

===On the service lab model and research in general=== | |||

The service lab model has been noted several times and is common in most industries. The details of sample types and testing will vary, but the operational behavior will be the same and will work like this: | |||

#Samples are submitted for testing. In many labs, this is done on paper forms listing sample type, testing to be done, whom to bill, and a description of the sample and any unique concerns or issues. In labs with a LIMS, this can be done online by lab personnel or the sample submitter.

#The work is logged in (electronically using a LIMS or manually for paper-based systems), and rush samples are brought to management's attention. Note that in the pilot plant test lab, everything is in a rush as the next steps in the plant’s work may depend upon the results. | |||

#Analysts generate worklists (whether electronic or paper, an ordered representation of sample or specimen locations and what analyses must be performed upon them by a specific instrument and/or analyst using specified procedures) and perform the required analysis, and results are recorded in the LIMS or [[laboratory notebook]]s. | |||

#The work is reviewed and approved for release and, in paper systems, recorded on the submission forms. | |||

#Reports are sent to whoever submitted the work electronically, or via the method the submitter requested. | |||

Work from non-routine samples may be logged in under “special projects,” though it may create the need for additional testing. | |||
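The five steps of the service lab model can be sketched in a few lines of Python. This is only an illustration of the sequence described above, not how any particular LIMS is built; the names <code>Sample</code>, <code>Status</code>, <code>advance</code>, and <code>build_worklist</code> are hypothetical, and the rule that rush samples go to the top of a worklist is an assumption made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    """The five steps of the service lab model, in order."""
    SUBMITTED = 1
    LOGGED_IN = 2
    ANALYZED = 3
    APPROVED = 4
    REPORTED = 5


@dataclass
class Sample:
    sample_id: str
    sample_type: str
    tests: list[str]
    submitter: str
    rush: bool = False
    status: Status = Status.SUBMITTED
    results: dict[str, float] = field(default_factory=dict)


def advance(sample: Sample, target: Status) -> None:
    """Move a sample to the next step; steps cannot be skipped."""
    if target.value != sample.status.value + 1:
        raise ValueError(f"cannot go from {sample.status.name} to {target.name}")
    # Assumed rule: analysis is complete only when every requested test has a result.
    if target is Status.ANALYZED and set(sample.results) != set(sample.tests):
        raise ValueError("every requested test needs a recorded result")
    sample.status = target


def build_worklist(samples: list[Sample], test: str) -> list[str]:
    """Ordered sample IDs awaiting one test, rush samples first (assumed policy)."""
    pending = [s for s in samples if s.status is Status.LOGGED_IN and test in s.tests]
    # sorted() is stable, so login order is kept within the rush and non-rush groups.
    return [s.sample_id for s in sorted(pending, key=lambda s: not s.rush)]
```

A sample that has been logged in cannot jump straight to <code>REPORTED</code>; it must pass through analysis and approval first, which mirrors the review step in the list above.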

There is no similar model for research work besides project descriptions, initial project outlines, etc. The nature of the work will change as the project progresses and more is learned. Recording results, observations, plans, etc., requires a flexible medium capable of maintaining notes, printouts, charts, and other forms of information. As a result, ELNs are modular systems consisting of a central application with the ability to link to a variety of functional modules such as graphics, statistics, molecular drawing, reaction databases, user-defined database structures to hold experimental data, and more. For additional details, see ''[[LII:The Application of Informatics to Scientific Work: Laboratory Informatics for Newbies|The Application of Informatics to Scientific Work: Laboratory Informatics for Newbies]]''.

====The role of laboratory informatics==== | |||

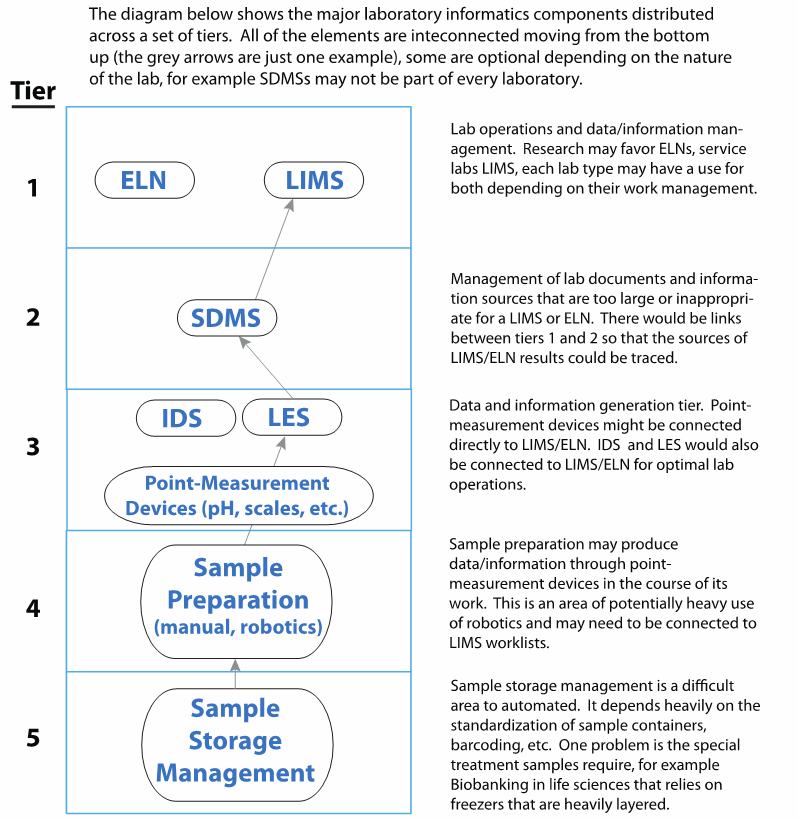

Lab informatics has several tiers of systems (Figure 2) that can be applied to lab work to make it more effective and efficient. | |||

[[File:Fig2 Liscouski SciStudGuideLabInfo23.png|700px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="700px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 2.''' Tiered structure of laboratory informatics.</blockquote> | |||

|- | |||

|} | |||

|} | |||

The top tier consists of ELNs and LIMS, while supporting those systems are SDMS, as well as [[laboratory execution system]]s (LES) and IDS (which are a combination of instruments and computer systems). Typical examples are [[chromatography data system]]s (CDS) connected to one or more [[Chromatography|chromatographs]], a [[Mass spectrometry|mass spectrometer]] connected to a dedicated computer, and almost any major instrument in an instrument-computer combination. CDSs are, at this point, unique in their ability to support multiple instruments. Sharing the same tier as LES and IDS are devices like pH meters, balances, and other devices with no databases associated with them; these instruments must be programmed to be used with upper-tier systems. Their data output can be manually entered into a LIMS, ELN, or LES, but in regulated labs, the input has to be verified by a second individual. Below that are mechanisms for sample preparation, management, and storage. Our initial concern will be with the top-tier systems.
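The second-person check on manually entered readings can be pictured with a small sketch. It assumes a hypothetical <code>ManualReading</code> record and is not taken from any specific LIMS, ELN, or LES; real systems implement this with user accounts and electronic signatures.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ManualReading:
    """A value typed in by hand from a device such as a pH meter or balance."""
    instrument: str
    value: float
    units: str
    entered_by: str
    verified_by: Optional[str] = None

    def verify(self, reviewer: str) -> None:
        # The verifier must be someone other than the person who entered the value.
        if reviewer == self.entered_by:
            raise ValueError("verification requires a second individual")
        self.verified_by = reviewer

    @property
    def releasable(self) -> bool:
        """True once a second individual has confirmed the entry."""
        return self.verified_by is not None
```

In this sketch, a reading entered by one analyst stays unreleasable until a different person calls <code>verify</code>.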

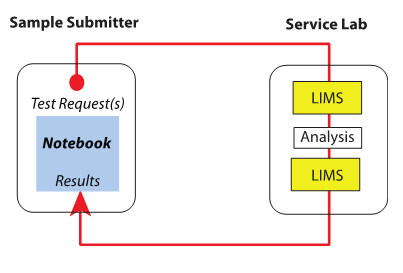

Next we look at the interactions involved in such workflows. The primary interaction between a service lab and someone requesting their services is shown in Figure 3. | |||

[[File:Fig3 Liscouski SciStudGuideLabInfo23.png|400px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="400px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 3.''' Sample request/results flow.</blockquote> | |||

|- | |||

|} | |||

|} | |||

Samples are submitted by the research group or other groups, and the request proceeds through the system as described above. The split between the LIMS in Figure 2 illustrates the separation between logging samples in, the analysis process, and using the LIMS as an administrative tool for completing the work request and returning results to the submitter. Also note that Figure 2 shows the classical assignment of informatics products to labs: LIMS in service labs and notebooks (usually ELNs) to research labs. However, that assignment is an oversimplification; the systems have broader usage in both types of laboratory workflows. In the research labs, work may generate a large amount of testing that has to be done quickly so that the next steps in the experiments can be determined. The demand may be great enough to swamp the service labs, and they wouldn't be able to provide the turn-around time needed for research. In these cases, a LIMS would be added to the research lab's range of informatics tools so that high-demand testing would be done within those labs. Other, less demanding testing would be submitted to the service labs. The research lab LIMS could be an entirely independent installation or work with the same core system as the service labs. The choice would depend on the locations of the labs, the need for instrument connections, how cooperative they are, and corporate politics. | |||

The analytical research and materials characterization labs in Figure 1 could justify an ELN based on research associated with method development work. In addition to providing a means of detailing the research needed to create a new procedure, the ELN would need access to a variety of databases and tools, including chemical reaction modeling, molecular structure representation, published methods retrieval, etc., as well as any corporate research databases that could exist in a large organization. | |||

The fabrication facility could use an ELN to record the progress of any non-routine work that was being done. Equipment operating conditions, problems encountered and solved, and the results of their processing would be noted. This could be coordinated with the pilot plant or product development group’s work. | |||

==Laboratory operations and their associated informatics tools== | |||

Laboratory operations and work can be divided into two levels: | |||

*Data and information generation: This is where lab procedures are executed; the informatics tools consist of LES, IDS, and support for automation and devices such as pH meters, scales, etc. | |||

*Data, information, and operations management: This involves the management of the data and information ultimately generated, as well as the operational processes that led up to the creation of that data and information. The informatics tools at this level consist of LIMS, ELN, and SDMS.

The following largely addresses the latter, recognizing that the execution of analytical procedures—both electronically and manually—results in the creation of data and information that must be properly managed. Data, information, and operations management (i.e., laboratory management) involves keeping track of everything that goes on in the lab, including: | |||

*Lab personnel records: The qualifications, personnel files (along with human resources), vacation schedules, education/training, etc. of the lab's personnel. | |||

*Equipment lists and maintenance: Records related to scheduled maintenance, repairs, calibration, qualification for use, and software upgrades, if appropriate. | |||

*General process-related documentation: All lab documents, reports, guidelines, sample records, problems and non-conformities, method descriptions, contacts with vendors, etc. | |||

*Sample-specific records: What samples need work, what is the scope of the work, results, associated reports, etc. | |||

*Inventory: Records related to what materials and equipment (including personal protective equipment) are on hand, but also where those materials and equipment are, their age (some materials such as prepared reagents have a limited useful lifetime; in other cases, materials may have a limited shelf-life), and any special handling instructions such as storage and disposal.

*Chemical and organism safety: Any special conditions needed for chemicals and organisms, their maintenance, condition, related records (e.g., material safety data sheets), etc. | |||

*Data governance efforts | |||

*Data integrity efforts | |||

*Regulatory compliance efforts: Documentation regarding laboratory effort to meet regulatory requirements or avoid regulatory issues, including preparation for regulatory audits.<ref name="LiscouskiHarnessing23">{{cite web |url=https://www.lablynx.com/wp-content/uploads/2023/06/Article-Harnessing-Informatics-for-Effective-Lab-Inspections-and-Audits.pdf |format=PDF |title=Harnessing Informatics for Effective Lab Inspections and Audits |author=Liscouski, J. |publisher=LabLynx, Inc |date=June 2023 |accessdate=17 November 2023}}</ref> | |||

Many of those laboratory operational aspects will be familiar to you, others less so. The last three bullets may be the least familiar to you. The primary point of lab operations is to produce data and information, which will then be used to support production and research operations. That data and information must be reliable and supportable. People have to trust that the analysis was performed correctly and that it can be relied upon to make decisions about product quality, to determine whether production operations are under control, or to take the next step in a research program. That’s where data governance, data integrity, and regulatory compliance come in.

===Data governance=== | |||

Data governance refers to the overall management of the availability, usability, integrity, and security of the data employed in an enterprise. It encompasses a set of processes, roles, policies, standards, and metrics that ensure the effective and efficient use of information in enabling an organization to achieve its goals. Its primary purpose is to ensure that data serves the organization's needs and that there's a framework in place to handle data-related issues. | |||

Data governance-related efforts have become more important over time, especially in how data and [[metadata]] are stored and made available. We see this importance come up with recent frameworks such as the FAIR principles, that ask that data and metadata be findable, accessible, interoperable, and reusable.<ref>{{Cite journal |last=Wilkinson |first=Mark D. |last2=Dumontier |first2=Michel |last3=Aalbersberg |first3=IJsbrand Jan |last4=Appleton |first4=Gabrielle |last5=Axton |first5=Myles |last6=Baak |first6=Arie |last7=Blomberg |first7=Niklas |last8=Boiten |first8=Jan-Willem |last9=da Silva Santos |first9=Luiz Bonino |last10=Bourne |first10=Philip E. |last11=Bouwman |first11=Jildau |date=2016-03-15 |title=The FAIR Guiding Principles for scientific data management and stewardship |url=https://www.nature.com/articles/sdata201618 |journal=Scientific Data |language=en |volume=3 |issue=1 |pages=160018 |doi=10.1038/sdata.2016.18 |issn=2052-4463 |pmc=PMC4792175 |pmid=26978244}}</ref> | |||

===Data integrity=== | |||

Data integrity refers to the accuracy and consistency of data over its lifecycle. It ensures that data remains unaltered and uncorrupted unless there's a specific need for a change. It’s focused more on the validity and reliability of data rather than the broader management processes. Its primary purpose is to ensure that data is recorded exactly as intended and, upon retrieval, remains the same as when it was stored.<ref name=":0">{{Cite book |last=Schmitt, S. |year=2016 |title=Assuring Data Integrity for Life Sciences |url=https://www.pda.org/bookstore/product-detail/3149-assuring-data-integrity-for-life-sciences |publisher=DHI Publishing, LLC |isbn=1-933722-97-5}}</ref> | |||

While data governance is a broader concept that deals with the overall management and strategy of data handling in an organization, data integrity is specifically about ensuring the accuracy and reliability of the data. Both are essential for making informed decisions based on the data and ensuring that the data can be trusted. | |||

The topic of data integrity has also become increasingly important.<ref name=":0" /> A framework called ALCOA+ (fully, ALCOA-CCEA) has been developed by the FDA to help define and guide work on data integrity. ALCOA+ asks that paper and electronic data and information be attributable, legible, contemporaneous, original, accurate, complete, consistent, enduring, and available, which all further drive data integrity initiatives in the lab and beyond.<ref name="QSCDataInt19">{{cite web |url=https://qscompliance.com/wp-content/uploads/2019/01/ALCOA-Principles.pdf |format=PDF |title=Data Integrity for the FDA Regulated Industry |publisher=Quality Systems Compliance, LLC |date=12 January 2019 |accessdate=17 November 2023}}</ref> For more on this topic, see Schmitt's ''Assuring Data Integrity for Life Sciences''.<ref name=":0" />
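To make the idea of data that "remains the same as when it was stored" more concrete, here is a minimal sketch in Python. It assumes a hypothetical result record that carries who recorded it and when (the attributable and contemporaneous parts of ALCOA+) along with a SHA-256 checksum of its contents. The function names are invented for illustration. A checksum of this kind only detects a change; it does not prevent one, and it is not a substitute for the audit trails and access controls of a real informatics system.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(record: dict) -> str:
    """SHA-256 over every field except the stored checksum itself."""
    payload = {k: v for k, v in record.items() if k != "checksum"}
    canonical = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def make_record(analyst: str, sample_id: str, test: str, value: float) -> dict:
    """Create a result record and seal it with a checksum."""
    record = {
        "analyst": analyst,                                      # attributable
        "recorded_at": datetime.now(timezone.utc).isoformat(),   # contemporaneous
        "sample_id": sample_id,
        "test": test,
        "value": value,
    }
    record["checksum"] = _digest(record)
    return record


def is_unaltered(record: dict) -> bool:
    """True if the record still matches the checksum computed when it was stored."""
    return record.get("checksum") == _digest(record)
```

If any field of a stored record is later edited without recomputing the checksum, <code>is_unaltered</code> returns <code>False</code>, which flags the record for review.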

===Regulatory compliance=== | |||

The purpose of placing regulations on laboratory operations varies, but the general idea across them all is to ensure that lab operations are safe, well-managed, and that laboratory data and information are reliable. These regulations cover personnel, their certifications/qualifications, equipment, reagents, safety procedures, and the validity of lab processes, among other things. This includes ensuring that instruments have been calibrated and are in good working order, and that all lab processes have been validated. Laboratory process validation in particular is an interesting aspect and one that causes confusion about what it means and who it applies to. | |||

In the late 1970s, when the original FDA guidelines for manufacturing and laboratory practices appeared, there was considerable concern about what “validation” meant and to what it was applied. The best description of the term is "documented proof that something (e.g., a process) works." The term “validation” is only applied to processes; equipment used during the process has to be "qualified for use." In short, if you are going to carry out a procedure, you have to have documented evidence that the procedure does what it is supposed to do and that the tools used in its execution are appropriate for the need they are to fill. If you are going to generate data and information, do it according to a proven process. This was initially applied to manufacturing and production, including testing and QC, but has since been more broadly used. There were questions about its application to research since the FDA didn’t have oversight over research labs, but those concerns have largely, in industrial circles, disappeared. There is one exception to the FDA's formal oversight in research, which has to do with clinical trials that are still part of the research and development (R&D) process. Product development and production involving human, animal, or food safety, for example, are still subject to regulatory review.

This all boils down to this: if you produce data and information, do it through a proven (or even standardized) methodology; how else can you trust the results?
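The distinction between a validated process and equipment that is qualified for use can be expressed as a simple pre-run check. The sketch below is an illustration only; the <code>Procedure</code> and <code>Instrument</code> records and the <code>ready_to_run</code> function are hypothetical, and an actual lab would draw this information from its equipment and method records.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Instrument:
    name: str
    qualified: bool          # equipment is "qualified for use"
    calibration_due: date    # date the next calibration is due


@dataclass
class Procedure:
    name: str
    validated: bool          # documented proof that the process works


def ready_to_run(procedure: Procedure, instruments: list[Instrument], today: date) -> list[str]:
    """Return the reasons a procedure should not be run; an empty list means go ahead."""
    problems = []
    if not procedure.validated:
        problems.append(f"procedure '{procedure.name}' is not validated")
    for inst in instruments:
        if not inst.qualified:
            problems.append(f"{inst.name} is not qualified for use")
        if inst.calibration_due < today:
            problems.append(f"{inst.name} calibration is overdue")
    return problems
```

Returning a list of reasons, rather than a simple yes or no, leaves a record of why work was held up, which is the kind of documentation an auditor would expect to see.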

Sources for regulations and guidelines affecting laboratories include: | |||

*'''FDA''': This includes [[21 CFR Part 11]] of the U.S. Code of Federal Regulations, which helps ensure security, integrity, and confidentiality of electronic records.<ref name="CFR21_11">{{cite web |url=https://www.ecfr.gov/current/title-21/chapter-I/subchapter-A/part-11 |title=21 CFR Part 11 Electronic Records; Electronic Signatures |work=Code of Federal Regulations |publisher=National Archives |date=11 November 2023 |accessdate=17 November 2023}}</ref>

*'''EPA''': This includes 40 CFR Part 792 on good laboratory practice<ref name="CFR40_792">{{cite web |url=https://www.ecfr.gov/current/title-40/chapter-I/subchapter-R/part-792 |title=40 CFR Part 792 Good Laboratory Practice Standards |work=Code of Federal Regulations |publisher=National Archives |date=15 November 2023 |accessdate=17 November 2023}}</ref> | |||

*'''[[Centers for Medicare and Medicaid Services]] (CMS)''': This includes 42 CFR Part 493 on laboratory requirements for complying with the [[Clinical Laboratory Improvement Amendments]] (CLIA)<ref name="CFR42_493">{{cite web |url=https://www.ecfr.gov/current/title-42/chapter-IV/subchapter-G/part-493 |title=42 CFR Part 493 Laboratory Requirements |work=Code of Federal Regulations |publisher=National Archives |date=13 November 2023 |accessdate=17 November 2023}}</ref> | |||

*'''International Society for Pharmaceutical Engineering (ISPE)''': This includes their work on [[Good Automated Manufacturing Practice]] (GAMP) guidance<ref name="ISPEWhatIsGAMP">{{cite web |url=https://ispe.org/initiatives/regulatory/what-gamp |title=What is GAMP? |publisher=International Society for Pharmaceutical Engineering |date=2023 |accessdate=17 November 2023}}</ref> | |||

*'''American Association for Laboratory Accreditation (A2LA)''': This includes guidelines and accreditation requirements that labs must adhere to as part of accrediting to a particular standard. This includes standards like the [[ISO 9000]] family of standards and [[ISO/IEC 17025]]. The ISO 9000 family is a set of five QMS standards that help organizations ensure that they meet customer and other stakeholder needs within statutory and regulatory requirements related to a product or service. ISO/IEC 17025 ''General requirements for the competence of testing and calibration laboratories'' is the primary standard used by testing and calibration laboratories, and there are special technology considerations to be made when complying. (See ''[[LII:LIMS Selection Guide for ISO/IEC 17025 Laboratories|LIMS Selection Guide for ISO/IEC 17025 Laboratories]]'' for more on this topic.) In most countries, ISO/IEC 17025 is the standard for which most labs must hold accreditation to be deemed technically competent. | |||

A comment should be made about the interplay of regulations, standards, and guidelines. The difference between regulations and standards is that regulations have the law to support their enforcement.<ref name="PankoninReg21">{{cite web |url=https://www.aem.org/news/regulations-vs-standards-clearing-up-the-confusion |title=Regulations vs. Standards: Clearing up the Confusion |author=Pankonin, M. |publisher=Association of Equipment Manufacturers |date=27 May 2021 |accessdate=17 November 2023}}</ref> Standards are consensus agreements that companies, industries, and associations use to define “best practices.” Guidelines are proposed standards of organizational behavior that may or may not eventually become regulations. The FDA regulations, for example, began as guidelines, underwent discussion and modification, and eventually became regulations under 21 CFR Part 11. For a more nuanced discussion in one particular lab-related area, materials science, see ''[[LIMS Q&A:What standards and regulations affect a materials testing laboratory?|What standards and regulations affect a materials testing laboratory?]]''.

===Meeting the needs of data governance, data integrity, and regulatory compliance=== | |||

Data governance, data integrity, and regulatory compliance are, to an extent, laboratory cultural issues. Your organization has to instill practices that contribute to meeting the requirements. Informatics tools can provide the means for executing the tasks that meet those needs, but meeting them is first and foremost a personnel consideration.

The modern lab essentially has four ways of meeting those needs: | |||

*Paper-based record systems using forms and laboratory notebooks; | |||

*Spreadsheet software; | |||

*LIMS; and | |||

*ELN. | |||

''[[LII:The Application of Informatics to Scientific Work: Laboratory Informatics for Newbies|The Application of Informatics to Scientific Work: Laboratory Informatics for Newbies]]'' addresses these four ways in detail, but we'll briefly discuss these points further here. | |||

Systems like LIMS, [[laboratory information system]]s (LIS), ELN, and LES can assist a lab in meeting regulatory requirements, standards, and guidelines by providing tools to meet enforcement requirements. For example, one common requirement is the need to provide an [[audit trail]] for data and information. (We'll discuss that in more detail later.) While the four product classes noted have audit trails built in, efforts to build a sample tracking system in a spreadsheet often do not have that capability as it would be difficult to implement. Beyond that, the original LES as envisioned by Velquest was intended to provide documented proof that a lab procedure was properly executed by qualified personnel, with qualified equipment and reagents, and documentation for all steps followed along with collected data and information. This again was designed to meet the expectations for a well-run lab. | |||

====Paper-based systems==== | |||

Paper-based systems were once all we had, and they could adequately deal with all of the issues noted as they existed up to the early 1970s. Then, the demands of lab operations and growing regulatory compliance at the end of that decade created a need for better tools. Compliance with laboratory practices depended on the organization’s enforcement. Enforcement failures led to the development of a formal, enforced regulatory program.

The structure of lab operations changed with the increasing availability of electronic data capture; having measurements in electronic form made them easier to work with if you had the systems in place to do so. Paper-based systems didn't lend themselves to that. You had to write results down on paper, and in order to later use those results you had to copy them to other documents or re-enter them into a program. In many cases, paper was all people could afford, but that was a false economy, as computer-based systems saved considerable time and effort relative to their cost. Electronic systems also afforded lab personnel a wider range of data analysis options, yielding more comprehensive work.

Word processors represent one useful step up from paper-based systems, but word processors lack some of the flexibility of paper. Another drawback is ensuring compliance with regulations and guidelines. Since everything can be edited, an external mechanism has to be used to sign and witness entries. One possible workaround is to print off each day's work and have that signed. However, that simply inherits the problems with managing paper and makes audit trails difficult. | |||

An audit trail is a tool for keeping track of changes to data and information. People make mistakes, something changes, and an entry in a notebook or electronic system has to be corrected or updated. In the laboratory environment, you can't simply erase something or change an entry. Regulatory compliance, organizational guidelines, and data integrity requirements prevent that, which is part of the reason that entries have to be in ink to detect alterations. Changes are made in paper notebooks by lightly crossing out the old data (it still has to be readable), writing the updated information, noting the date and time of the change, why the change was made, and having the new entry signed and witnessed. That process acts as an audit trail, ensuring that results aren’t improperly altered. With paper-based systems, following this practice is voluntary, so it should be enforced at the organizational level. Electronic laboratory systems do this as part of their design.
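The correction process described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular LIMS or ELN implements its audit trail; the class names, field names, and the analyst identifiers are all hypothetical. The point is that a correction appends a new entry (old value, new value, reason, who, witness, when) rather than overwriting the original.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    """One immutable line in the audit trail (hypothetical structure)."""
    field_name: str
    old_value: str
    new_value: str
    reason: str
    changed_by: str
    witnessed_by: str
    timestamp: str


@dataclass
class NotebookRecord:
    """A record whose corrections are appended to a trail, never erased."""
    values: dict
    trail: list = field(default_factory=list)

    def correct(self, field_name, new_value, reason, changed_by, witnessed_by):
        # A correction without a stated reason is rejected outright.
        if not reason:
            raise ValueError("a correction must state why it was made")
        entry = AuditEntry(
            field_name=field_name,
            old_value=self.values[field_name],  # the old value stays readable
            new_value=new_value,
            reason=reason,
            changed_by=changed_by,
            witnessed_by=witnessed_by,
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
        self.trail.append(entry)
        self.values[field_name] = new_value
        return entry


record = NotebookRecord(values={"pH": "7.1"})
record.correct("pH", "7.4", "transcription error", "analyst_a", "analyst_b")
```

After the call, `record.values` holds the corrected value while `record.trail` still shows what was there before, who changed it, and why, which is the electronic analog of the crossed-out, signed, and witnessed notebook entry.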

Table 1 examines the pros and cons of using a paper-based system in the laboratory. | |||

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | |||

|- | |||

| colspan="2" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 1.''' The pros and cons of using a paper-based system in the laboratory | |||

|- | |||

! style="padding-left:10px; padding-right:10px;" |Pros | |||

! style="padding-left:10px; padding-right:10px;" |Cons | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |• Low cost<br />• Easily transportable<br />• No power required<br />• Built-in backup copies<br />• High level of flexibility (writing, drawing, charts, etc.)<br />• Signature block for author/witness | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |• May be difficult to read<br />• Easily damaged, destroyed<br />• No/limited loss prevention<br />• Manual searches<br />• Data/information must be copied to be used<br />• Difficult to integrate with other users' data<br />• High cost of use, everything is manual<br />• Audit practices are manual and either self or organizationally enforced

|- | |||

|} | |||

|} | |||

====Spreadsheet software==== | |||

Spreadsheets have benefited many applications that need easy-to-produce calculation and database systems for home, office, and administrative work. The word "laboratory" doesn't appear in that sentence because of the demands for data integrity in laboratory applications. Spreadsheet applications are easy to produce for calculations, graphs, etc. Their ease of use and openness to undocumented modifications make them a poor choice for routine calculations. They're attractive because of their simplicity of use and inherent power, but they prove inappropriate for their lack of controls over editing, lack of audit trails, etc. If you need a routine calculation package, it should be done according to standard software development guidelines. One major drawback is the difficulty of validating a spreadsheet, instituting controls, and ensuring that the scripting hasn’t been tampered with. Spreadsheets don't accommodate any of those requirements; spreadsheets are tools, and the applications need to be built. Purpose-built laboratory database systems such as LIMS, on the other hand, are much better equipped to handle those issues. | |||
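One small piece of the controls mentioned above, detecting whether an approved calculation file has been altered, can be sketched with a cryptographic hash. This is only an illustration under stated assumptions: the file name is hypothetical, a plain text file stands in for a real workbook, and a fingerprint check alone does not amount to validation or an audit trail.

```python
import hashlib
import tempfile
from pathlib import Path


def file_fingerprint(path):
    """Return the SHA-256 digest of a file's bytes as a hex string."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_unchanged(path, approved_fingerprint):
    """True only if the file still matches the fingerprint recorded at approval."""
    return file_fingerprint(path) == approved_fingerprint


# Demonstration with a throwaway file standing in for an approved worksheet.
with tempfile.TemporaryDirectory() as folder:
    worksheet = Path(folder) / "assay_calc.csv"  # hypothetical file name
    worksheet.write_text("sample,absorbance\nS1,0.412\n")
    approved = file_fingerprint(worksheet)       # recorded when approved
    unchanged_before_edit = is_unchanged(worksheet, approved)
    worksheet.write_text("sample,absorbance\nS1,0.512\n")  # undocumented edit
    unchanged_after_edit = is_unchanged(worksheet, approved)
```

The check tells you that something changed, not what changed, who changed it, or why. Those questions are the ones a purpose-built system answers.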

Some have turned to developing "spreadsheet-based LIMS." This type of project development requires a formal software development effort; it's not just building a spreadsheet and populating it with data and formulas. Current industrial lab operations are subject to corporate and regulatory guidelines, and violating them can have serious repercussions, including shutting down the lab or production facility. Software development requires documentation, user requirement documents, design documents, user manuals, and materials to support the software. What looks like a fun project may become a significant part of your work. This impacts the cost factor; the development and support costs of a spreadsheet-based LIMS may occupy your entire time. | |||

Performance is another matter. Spreadsheet systems typically allow a single user at a time. They don't have the underlying database management support needed for simultaneous multi-user operations. This is one reason spreadsheet implementations give way to replacements with LIMS in industrial labs. | |||

For more on the topic of transitioning from paper to spreadsheets to LIMS, see the article "Improving Lab Systems: From Paper to Spreadsheets to LIMS."<ref name="LiscouskiImproving22">{{cite web |url=https://www.lablynx.com/wp-content/uploads/2023/03/Improving-Lab-Systems-From-Paper-to-Spreadsheets-to-LIMS.pdf |format=PDF |title=Improving Lab Systems: From Paper to Spreadsheets to LIMS |author=Liscouski, J. |publisher=LabLynx, Inc |date=April 2022 |accessdate=17 November 2023}}</ref> This article will be helpful to you in considering a direction for laboratory informatics development and planning, describing the strengths and weaknesses of different approaches. It also contains references to regulatory compliance documents on the use of spreadsheets. | |||

====LIMS and ELN==== | |||

The commercial software market has evaluated the needs of research and service laboratory operations and developed software specifically designed to address those points. LIMS was designed to meet the requirements of service laboratories such as QC testing, contract testing, analytical lab, and similar groups. The important item is not the name of the lab but its operational characteristics—the processes used—as described earlier. The same software systems could easily be at home in a research lab that conducts a lot of routine testing on samples. If the lab has sets of samples and needs to keep track of testing, generate worklists, and report results on a continuing basis, a LIMS may well be appropriate for its work. It is designed for a highly structured operational environment as described earlier.

ELNs are useful in an operational environment that is less structured, whose needs and direction may change and whose data storage and analysis requirements are more fluid. That is usually in labs designated as "research," but not exclusively. For example, an analytical lab doing method development could use an ELN to support that work. | |||

Both LIMS and ELNs are “top tier” levels of software. LIMS may be subordinate to an ELN in a research lab whose work includes routine testing. Functionality in the "Needs" list that is not part of the products (functionality is determined by the vendor, usually with customer input) can be supplied by offerings from third-party vendors. Those can consist of applications that can be linked to the LIMS and ELN, or be completely independent products. | |||

Table 2 describes characteristics of LIMS and ELN. | |||

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="70%" | |||

|- | |||

| colspan="2" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 2.''' Characteristics of LIMS and ELN | |||

|- | |||

! style="padding-left:10px; padding-right:10px;" |LIMS | |||

! style="padding-left:10px; padding-right:10px;" |ELN | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |• LIMS has a well-defined database structure and the software components to work with it and support its underlying processes.<br /> | |||

• It is purpose-built to manage sample logins, sample tracking, results entry, results tracking, reporting, etc. These comprise its core functionality.<br /> | |||

• In addition, LIMS may include instrument maintenance databases, trend analysis, inventory, personnel records, etc. | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |• ELNs have modules that can be organized to support projects and manage data according to the research process used in that lab's work.<br />

• Some ELNs, at the vendor's discretion, will contain graphics modules, inventory modules, and links to third-party applications such as reaction databases, molecular modeling, and statistical analysis.

|- | |||

|} | |||

|} | |||

====Supporting tiers of software==== | |||

There are limits to what a vendor can or wants to include in their products. The more functionality, the higher the cost to the customer, and the more complex the support issue becomes. One of those areas is data storage. | |||

The results storage in LIMS is usually limited to sets of numerical values or alphanumeric strings, e.g., the color of something. Those results are often based on the analysis of larger data files from instruments, images, or other sources, files too large to be accommodated within a LIMS. That problem was solved in at least two ways: the creation of the SDMS and IDS. From the standpoint of data files, both have some elements in common, though IDSs are more limited. | |||

The SDMS acts as a large file cabinet, where different types of materials can be put into it—images, charts, scanned documents, data files, etc.—and then be referenced by the LIMS or ELN. The results sit in LIMS or ELN, and supporting information is linked to the results in the SDMS. That keeps the LIMS and ELN databases easier to structure while still supporting the lab's needs to manage large files from a variety of sources and formats.
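The division of labor described above, with small results in the LIMS and large supporting files in the SDMS, can be sketched as two record types joined by an identifier. The class names, fields, and identifiers below are hypothetical and chosen only for illustration; real products define their own schemas.

```python
from dataclasses import dataclass, field


@dataclass
class SdmsDocument:
    """A large supporting file held by the SDMS (hypothetical fields)."""
    doc_id: str
    kind: str       # e.g., chromatogram, image, scanned document
    location: str   # where the file itself is kept


@dataclass
class LimsResult:
    """A compact result held by the LIMS, pointing at its supporting files."""
    sample_id: str
    test: str
    value: float
    units: str
    supporting_doc_ids: list = field(default_factory=list)


class Sdms:
    """In-memory stand-in for the 'file cabinet'."""

    def __init__(self):
        self._documents = {}

    def store(self, doc):
        self._documents[doc.doc_id] = doc
        return doc.doc_id

    def fetch(self, doc_id):
        return self._documents[doc_id]


sdms = Sdms()
doc_id = sdms.store(
    SdmsDocument("DOC-0001", "chromatogram", "archive/2023/run_17.raw")
)
result = LimsResult("S-1001", "assay", 98.7, "%", [doc_id])

# Following the link from the result back to its supporting information.
supporting = [sdms.fetch(d) for d in result.supporting_doc_ids]
```

The LIMS record stays small and uniformly structured because it stores only the reference, not the raw file.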

That same facility is available through an IDS, but only for the devices that the IDS is connected to, e.g., chromatographs, spectrometers, etc. In addition to that function, IDSs support instrumental data collection, analysis, and reporting. They can also be linked to LIMS and ELNs by electronically receiving worklists and then electronically sending the results back, thus avoiding the need for entering the data manually. | |||
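The worklist round trip described above can be sketched as follows. This is a simplified illustration under stated assumptions: the dictionaries stand in for whatever message format a given LIMS and IDS actually exchange, and the `measure` function and its readings are made-up stand-ins for an instrument run.

```python
def run_worklist(worklist, measure):
    """IDS side: process each worklist entry and package the results."""
    results = []
    for entry in worklist:
        value = measure(entry["sample_id"], entry["test"])
        results.append(
            {"sample_id": entry["sample_id"], "test": entry["test"], "value": value}
        )
    return results


def post_results(lims_table, results):
    """LIMS side: file each returned value against its sample and test."""
    for item in results:
        lims_table[(item["sample_id"], item["test"])] = item["value"]


# Made-up readings standing in for instrument output.
fake_readings = {("S-1001", "caffeine"): 12.3, ("S-1002", "caffeine"): 11.8}


def measure(sample_id, test):
    return fake_readings[(sample_id, test)]


worklist = [
    {"sample_id": "S-1001", "test": "caffeine"},
    {"sample_id": "S-1002", "test": "caffeine"},
]
lims_table = {}
post_results(lims_table, run_worklist(worklist, measure))
```

No value is typed in by hand at any point, which removes the manual transcription step.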

The LES is also an aid to the laboratory, ensuring that laboratory methods and procedures are carried out correctly, with documented support for each step in the method. It can be seen as a quasi-automation system, except instead of automation hardware doing the work, the laboratorian is doing it, guided step-by-step by strict adherence to the method as embedded in software. The problem that this type of system, originally styled as an “electronic laboratory notebook” by Velquest Inc. (later purchased by [[Vendor:Accelrys, Inc.|Accelrys]]), was meant to solve was ensuring that there was documented evidence that every step in a lab procedure was properly carried out by qualified personnel, using calibrated/maintained instruments and current reagents, with all data electronically captured and potentially linked to LIMS or other systems. That system would provide bullet-proof support for data and information generated by procedures in regulated environments. That in turn means that the data/information produced is reliable and can stand up to challenges.

Implementations of LES have ranged from stand-alone applications that linked to LIMS, ELNs, and SDMS, to programmable components of LIMS and ELNs. Instead of having a stand-alone framework to implement an LES, as with Velquest's product, embedded systems provide a scripting (i.e., programming by the user) facility within a LIMS that has access to the entire LIMS database, avoiding the need to interface two products. That embedded facility put a lot of pressure on the programmer to properly design, test, and validate each procedure in an isolated structure, separate from the active LIMS so that programming errors didn’t compromise the integrity of the database. Note: procedure execution in stand-alone systems requires validation as well. | |||

As for the IDS, instrument vendors of the late 1960s explored the benefits of connecting laboratory instruments to computer systems to see what could be gained from that combination. One problem they wanted to address was the handling of the large volumes of data that instruments produced. At that time, analog instrument output was often recorded on chart paper, and a single sample through a chromatograph might be recorded on a chart that was one or more feet long. A day’s work generated a lot of paper that had to be evaluated manually, which proved time-consuming and labor-intensive. Vendors have since successfully developed computer-instrument combinations that automatically transform the instrument's analog output to a digital format, and then store and process that data to produce useful information (results), eliminating the manual effort and making labs more cost-effective in the process. This was the first step in instrument automation; the user was still required to introduce the sample into the device. The second step solved that problem in many instances through the creation of automatic samplers that moved the samples into position for analysis. This includes auto-injectors, flow-through cells, and other autosamplers, depending on the analytical technique. | |||

Autosamplers have ranged from devices that used a syringe to take a portion of a sample from a vial and inject it into the instrument, to systems that would carry out some sample preparation tasks and then inject the sample<ref name="Agilent7693A">{{cite web |url=https://www.agilent.com/en/video/7693a-video |title=7693A Automated Liquid Sampler - Video |publisher=Agilent Technologies, Inc |accessdate=17 November 2023}}</ref>, to pneumatic tube-based systems<ref name="BaytekTT">{{cite web |url=https://www.baytekinternational.com/products/turbotube |title=TurboTube for Baytek LIMS software |publisher=Baytek International |accessdate=17 November 2023}}</ref> that would bring vials from a central holding area, inject the sample and then return the sample to holding, where it would be available for further work or disposal. | |||

The key to all this was the IDS computer and its programming that coordinated all these activities, acquired the data, and then processed it. Life was good as long as human intelligence evaluated the results, looked for anomalies, and took remedial action where needed. For a more detailed discussion of this subject please see ''[[LII:Notes on Instrument Data Systems|Notes on Instrument Data Systems]]''. | |||

The instrument data system took us a long way toward an automated laboratory, but there were still some major hurdles to cross, including sample preparation, sample storage management, and systems integration. While much progress has been made, there is a lot more work to do.

==Getting work done quickly and at a low cost== | |||

Productivity is one of the driving factors in laboratory work, as in any production environment. What is the most efficient and effective way to accomplish high-quality work at the lowest possible cost? That may not sound like "science," but it is about doing science in an industrial world, in both research and service labs. That need drives software development, new systems, better instrumentation, and investments in robotics and informatics technologies. | |||

That need was felt most acutely in the clinical chemistry market in the 1980s. In that field, the cost of testing was an annual contractual agreement between the lab and its clients. If costs increased during that contract year, it impacted the lab's income, profits, and ability to function. Through an industry-wide effort, the labs agreed to pursue the concept of total laboratory automation (TLA). That solution involved the labs working with vendors and associations to create a set of communications standards and standardized testing protocols that would enable the clinical chemistry labs to contain costs and greatly increase their level of operational effectiveness. The communications protocols led to the ability to integrate instrumentation and computer systems, streamlining operations and data transfer. The standardized test protocols allowed vendors to develop custom instrumentation tailored to those protocols, and to have personnel educated in their use.<ref name="LeichtleTotal20">{{cite web |url=https://www.aacc.org/cln/articles/2020/december/total-laboratory-automation-samples-on-track |title=Total Laboratory Automation— Samples on Track |work=Clinical Laboratory News |author=Leichtle, A.B. |publisher=Association for Diagnostics & Laboratory Medicine |date=01 December 2020 |accessdate=17 November 2023}}</ref><ref name="LeichtleTotal21">{{cite web |url=https://www.aacc.org/cln/articles/2021/january/total-lab-automation-what-matters-most |title=Total Lab Automation: What Matters Most |work=Clinical Laboratory News |author=Leichtle, A.B. |publisher=Association for Diagnostics & Laboratory Medicine |date=01 January 2021 |accessdate=17 November 2023}}</ref> | |||

The traditional way of improving productivity in industrial processes, whether on the production line or in the laboratory, is through automation. This is discussed in detail in ''[[LII:Elements of Laboratory Technology Management|Elements of Laboratory Technology Management]]'' and ''[[LII:Considerations in the Automation of Laboratory Procedures|Considerations in the Automation of Laboratory Procedures]]''. However, we'll elaborate more here.

Connecting an IDS to a LIMS, SDMS, or ELN is one form of automation, in the sense that information about samples and work to be done can travel automatically between a LIMS and an IDS and be processed, with the results getting returned without human intervention. However, making such automation successful requires several conditions:

*Proven, validated procedures and methods; | |||

*A proven, validated automated version of those methods (the method doesn’t have to be re-validated, but the implementation of the method does<ref>{{Cite journal |last=Webster |first=G |last2=Kott |first2=L |last3=Maloney |first3=T |date=2005-06 |title=Considerations When Implementing Automated Methods into GxP Laboratories |url=http://journals.sagepub.com/doi/10.1016/j.jala.2005.03.003 |journal=Journal of the Association for Laboratory Automation |language=en |volume=10 |issue=3 |pages=182–191 |doi=10.1016/j.jala.2005.03.003}}</ref>); | |||

*A clear economic justification for the automation, including sufficient work to be done to make the automation of the process worthwhile; and, | |||

*Standardization in communications and equipment where possible (this is one of the key success factors in clinical chemistry’s TLA program, and the reason for the success in automation and equipment development in processes using microplates). | |||

Robotics is often one of the first things people think of when automation is suggested. It is also one of the more difficult to engineer because of the need for expertise in electromechanical equipment, software development, and interfacing between control systems and the robotic components themselves. If automation is a consideration, then the user should look first to commercial products. Vendors are looking for any opportunity to provide tools for the laboratory market. Many options have already been exploited or are under development. Present your needs to vendors and see what their reaction is. As noted earlier, user needs drove the development of autosamplers, which are essentially robots. Aside from purpose-built robotic add-ons to laboratory instrumentation, a common approach is user-designed robotics. We'll cover more on the subject of user-built systems below, but the bottom line is that they are usually more expensive to design, build, and maintain than the original project plan allows for. | |||

Robotics has a useful role in sample preparation. That requires careful consideration of the source of the samples and their destination. The most successful applications of robotics are in cases where the samples are in a standardized container, and the results of the preparation are similarly standardized. As noted above, this is one reason why sample processing using microplates has been so successful. The standard format of the plates with a fixed set of geometries for sample well placement means that the position of samples is predictable, and equipment can be designed to take advantage of that. | |||

Sample preparation with non-standard containers requires specialized engineering to make adaptations for variations. Early robotics used a variety of grippers to grasp test tubes, flasks, bottles, etc., but they proved unworkable for long-term applications, often requiring frequent adjustments.

Sample storage management is another area where robotics has a potential role but is constrained by the lack of standardization in sample containers, a point that varies widely by industry. In some cases, samples, particularly those that originate with consumers (e.g., water testing), can come in a variety of containers and they have to be handled manually to organize them in a form that a sample inventory system can manage. Life sciences applications can have standardized formats for samples, but in cases such as [[biobanking]], retrieval issues can arise because the samples may be stored in freezers with multiple levels, making them difficult to access. | |||

A basic sample storage management system would be linked to a LIMS, have an inventory of samples with locations, appropriate environmental controls, a barcode system to make labels machine-readable, and if robotics were considered, be organized so that a robot could have access to all materials without disrupting others. It would also have to interface cleanly with the sample preparation and sample disposal functions. | |||
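The inventory portion of such a system can be sketched as a lookup from barcode to storage location, with a link back to the LIMS sample record. This is a minimal sketch with hypothetical class and field names; environmental monitoring, robotics access, and the interfaces to sample preparation and disposal are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoredSample:
    barcode: str          # machine-readable label
    lims_sample_id: str   # link back to the LIMS record
    freezer: str
    shelf: int
    position: str


class SampleInventory:
    """Tracks which sample sits in which slot; one sample per slot."""

    def __init__(self):
        self._by_barcode = {}
        self._occupied_slots = {}

    def check_in(self, sample):
        slot = (sample.freezer, sample.shelf, sample.position)
        if sample.barcode in self._by_barcode:
            raise ValueError(f"barcode {sample.barcode} is already stored")
        if slot in self._occupied_slots:
            raise ValueError(f"slot {slot} is already occupied")
        self._by_barcode[sample.barcode] = sample
        self._occupied_slots[slot] = sample.barcode

    def locate(self, barcode):
        sample = self._by_barcode[barcode]
        return (sample.freezer, sample.shelf, sample.position)

    def check_out(self, barcode):
        sample = self._by_barcode.pop(barcode)
        del self._occupied_slots[(sample.freezer, sample.shelf, sample.position)]
        return sample


inventory = SampleInventory()
inventory.check_in(StoredSample("BC000123", "S-1001", "FRZ-02", 3, "A4"))
location = inventory.locate("BC000123")
```

Refusing to place two samples in one slot is the kind of constraint that makes the stored positions predictable enough for automated retrieval.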

==Artificial intelligence applications in the lab== | |||

[[Artificial intelligence]] (AI) in the lab is both an easy and difficult subject to write about. It's easy because anything written will be out-of-date as it is produced, and it's difficult because we really have no idea where things are going, and what we think of as an advanced AI now will be superseded next month, and probably has been in classified intelligence circles. With that said, there are still some useful things to say about the subject that would benefit you. | |||

We need to be very cautious about the application of AI to lab work, if nothing else, because you are signing your name to lab results, not the AI. If something is challenged, or found to be a problem, you are responsible and accountable for the work. This is particularly problematic for AI usage since we can’t trace back to the cause of the problem. In traditional programming, we have the code and can find the source of a programming problem. There is no traceback with AI systems as they currently exist. Was there a coding issue? Was there a training issue? Was the training data set biased? | |||

The application of computing technology to lab work has placed layers of computing between the scientist and the actual measurements made in the lab. Each layer holds the potential for problems to develop, and assumptions to be made, which the analyst may not be aware of. Put a sample into the instrument, and the computer produces a report. Between the analog output of the instrument—the instrument's actual measurement—and what the analyst sees we have:

*The analog-to-digital converter (ADC), which reads the signal and converts it to a digital value (there are different types of ADC, some better suited to some applications than others);

*Noise filtering, which may precede the ADC or exist in the form of digital signal processing; | |||

*Software that converts the raw data to a more usable form, ranging from a simple pass-through (nothing needed), to peak detection, baseline correction, overlapping peak resolution, peak size calculations, etc.; | |||

*Quantitative analysis, based on the previous points for samples and reference materials; | |||

*Monitoring, checking for anomalies and segregation of problem samples; and | |||

*Whatever capabilities an AI provides. | |||

None of this is a problem as long as the analyst is aware of what is going on and has taken steps to ensure that none of those actions adversely affect the results of the analysis. This is the direction that laboratory computing is taking, and it provides a useful basis for laboratory automation systems that will reduce the cost of doing work, and free busy personnel to do other work. AI is another potentially useful layer for streamlining the work you are signing your name to. Similar concerns would also apply to automated sample preparation.
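To make the layering concrete, the first few layers listed above can be sketched as a toy processing chain. Everything here is an illustrative assumption: the 12-bit resolution, the three-point moving average, the minimum-value baseline, and the sample trace are stand-ins, and real instrument data systems use far more sophisticated algorithms. Each function is a place where a choice is made on the analyst's behalf.

```python
def quantize(signal, full_scale=1.0, bits=12):
    """Mimic an ADC: map each analog value in [0, full_scale] to an integer count."""
    levels = 2 ** bits - 1
    return [
        round(max(0.0, min(v, full_scale)) / full_scale * levels) for v in signal
    ]


def moving_average(values, window=3):
    """Simple digital noise filter; the window shrinks at the edges."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        segment = values[max(0, i - half): i + half + 1]
        smoothed.append(sum(segment) / len(segment))
    return smoothed


def subtract_baseline(values):
    """Crude baseline correction: subtract the lowest value in the trace."""
    base = min(values)
    return [v - base for v in values]


def peak_area(values, dt=1.0):
    """Trapezoidal integration of the corrected trace."""
    return sum((a + b) / 2 * dt for a, b in zip(values, values[1:]))


# Made-up analog trace containing a single peak.
analog = [0.10, 0.10, 0.12, 0.30, 0.60, 0.30, 0.12, 0.10, 0.10]
counts = quantize(analog)
filtered = moving_average(counts)
corrected = subtract_baseline(filtered)
area = peak_area(corrected)
```

Changing the window size or the baseline rule changes `area`, even though the sample and the instrument are the same. That is why the analyst needs to know what each layer is doing before signing off on the number at the end.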

There is a long list of applications that could benefit from AI technologies, and they are being explored and exploited. The question of what those applications are was posed to ChatGPT-4 (the prompt was "What are some good examples of successful applications of artificial intelligence applied to laboratory work?"), and it responded as such: | |||

<blockquote>Certainly! Artificial Intelligence (AI) and Machine Learning (ML) have made significant inroads into laboratory work across various scientific disciplines. Here are some successful applications: | |||

1. Automated Image Analysis: | |||

:- Pathology: Automated detection and classification of tumors in histopathological slides. | |||

:- Microbiology: Automated identification and counting of bacterial colonies or reading antibiotic susceptibility tests.

:- Cell Biology: Analyzing cell morphology, cell counting, or detecting cellular events in microscopy images.

2. Predictive Analytics:

:- Drug Discovery: Predicting drug interactions and potential drug candidates by analyzing chemical structures.

:- Genomics: Predicting potential disease genes or understanding gene function.

3. Laboratory Automation and Robotics:

:- Sample Handling: Robots equipped with AI can adaptively handle and process samples.

:- Optimization: AI can optimize experimental conditions such as reaction times, temperatures, or concentrations.

4. Data Mining:

:- Literature Analysis: AI can scan vast amounts of published research to identify trends, potential collaborations, or overlooked conclusions.

:- High-throughput Screening: Analyzing and identifying compounds with desired properties from large libraries.

5. Spectroscopy and Chromatography:

:- Analyzing complex spectra or chromatograms, distinguishing minute differences or predicting material properties based on spectral data. (https://cen.acs.org/physical-chemistry/computational-chemistry/Computers-learning-analyze-chemists-spectra/101/i7)

6. Predictive Maintenance:

:- Predicting when equipment needs maintenance or identifying early signs of malfunction, ensuring that lab operations run smoothly.

7. Natural Language Processing (NLP):

:- Automated transcription of lab notes or voice commands.

:- Extracting structured data from unstructured lab reports.

8. Proteomics & Metabolomics:

:- Analyzing complex data sets to identify biomarkers or metabolic pathways.

:- Mass spectrometry data interpretation and peptide identification.

9. Clinical Decision Support:

:- Assisting doctors in diagnosing diseases based on lab results, medical history, and other data sources.

10. Environment Monitoring:

:- Detecting pollutants or pathogens in environmental samples using pattern recognition.

11. Simulation & Modeling:

:- Computational chemistry: Predicting molecular behaviors or drug-target interactions.

:- Systems biology: Modeling complex biological systems or pathways.

12. Quality Control & Assurance:

:- Detecting anomalies or outliers in routine lab measurements.

:- Predicting and ensuring the consistency of lab results over time.

13. Biobanking:

:- Optimizing storage and retrieval of biological samples using pattern recognition and predictive analytics.

14. Genetic Analysis and Personalized Medicine:

:- Predicting disease susceptibility or drug response based on genetic data.

15. 3D Bioprinting:

:- Using AI to optimize the design and production of 3D-printed tissues or organs.</blockquote>

These are just a few of the many applications of AI in laboratory settings. As technology advances, it's likely that AI will become an even more integral part of laboratory work, driving efficiencies and enabling new discoveries.

While that is an interesting list, more specifics would be useful. ChatGPT-4 received the follow-up question, "Can you provide reference for those examples?"

<blockquote>Of course! While I can't directly link you to the references, I can mention landmark papers, well-known projects, or general topics that you can further search to access the original sources. Here's a non-exhaustive list based on the applications mentioned:

1. Automated Image Analysis:

:- Esteva, A., et al. (2017). Dermatologist-level classification of skin cancer with deep neural networks. ''Nature''. (''https://www.nature.com/articles/nature21056'')

:- Coudray, N., et al. (2018). Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. ''Nature Medicine''.

2. Predictive Analytics:

:- Wallach, I., et al. (2015). AtomNet: A deep convolutional neural network for bioactivity prediction in structure-based drug discovery. ''arXiv'' preprint.

3. Laboratory Automation and Robotics:

:- Gil, Y., et al. (2018). Toward the Geoscience Paper of the Future: Best Practices for Documenting and Sharing Research from Data to Software to Provenance. ''Earth and Space Science''. (''https://agupubs.onlinelibrary.wiley.com/doi/10.1002/2015EA000136'')

4. Data Mining:

:- Jensen, L. J., et al. (2006). STRING 8—a global view on proteins and their functional interactions in 630 organisms. ''Nucleic Acids Research''.

5. Spectroscopy and Chromatography:

:- Pasolli, E., et al. (2016). Machine learning meta-analysis of large metagenomic datasets: tools and biological insights. ''PLoS Computational Biology''. (''https://www.semanticscholar.org/paper/Machine-Learning-Meta-analysis-of-Large-Metagenomic-Pasolli-Truong/b067701cf2a2d54ecde7b4e9c25b843d787b66c2'')

6. Natural Language Processing (NLP):

:- Jensen, L. J., et al. (2006). Literature mining for the biologist: from information retrieval to biological discovery. ''Nature Reviews Genetics''.

7. Proteomics & Metabolomics:

:- Smith, C. A., et al. (2006). XCMS: Processing mass spectrometry data for metabolite profiling using nonlinear peak alignment, matching, and identification. ''Analytical Chemistry''.

8. Clinical Decision Support:

:- Rajkomar, A., et al. (2018). Scalable and accurate deep learning with electronic health records. ''NPJ Digital Medicine''. (''https://pubmed.ncbi.nlm.nih.gov/31304302/'')

9. Environment Monitoring:

:- Aksenov, A. A., et al. (2014). Global survey of the oxygen isotope composition of bioavailable soil water. ''Earth and Planetary Science Letters''.

10. Simulation & Modeling:

:- Duvenaud, D., et al. (2015). Convolutional networks on graphs for learning molecular fingerprints. ''Advances in Neural Information Processing Systems''.

11. Quality Control & Assurance:

:- Hinton, G. E., et al. (2012). Improving neural networks by preventing co-adaptation of feature detectors. ''arXiv'' preprint. (''https://arxiv.org/abs/1207.0580'')

12. Genetic Analysis and Personalized Medicine:

:- LeCun, Y., et al. (2015). Deep learning. ''Nature''.

These references are based on well-accepted papers and findings up to my last training cut-off in January 2022. You might want to search for these papers or topics in databases like Google Scholar, PubMed, or academic journals for full access and more recent works on the subject.</blockquote>

The URLs in italics are ones that have been verified.

This latter list is consistent with material that has been found in advertising and the literature. For example, Mettler Toledo has been advertising an AI system for its thermal analysis products.<ref name="MTAIWizard">{{cite web |url=https://www.mt.com/us/en/home/library/know-how/lab-analytical-instruments/ai-wizard.html |title=AIWizard – Artificial Intelligence for Thermal Analysis |publisher=Mettler Toledo |accessdate=17 November 2023}}</ref> Articles are also available describing the automated analysis of spectra.<ref>{{Cite journal |last=Jung |first=Guwon |last2=Jung |first2=Son Gyo |last3=Cole |first3=Jacqueline M. |date=2023 |title=Automatic materials characterization from infrared spectra using convolutional neural networks |url=http://xlink.rsc.org/?DOI=D2SC05892H |journal=Chemical Science |language=en |volume=14 |issue=13 |pages=3600–3609 |doi=10.1039/D2SC05892H |issn=2041-6520 |pmc=PMC10055241 |pmid=37006683}}</ref> There is even an AI-prompted interview with ChatGPT about how it sees applications to spectroscopy.<ref name="WorkmanAnInt23">{{cite web |url=https://www.spectroscopyonline.com/view/an-interview-with-ai-about-its-potential-role-in-vibrational-and-atomic-spectroscopy |title=An Interview with AI About Its Potential Role in Vibrational and Atomic Spectroscopy |author=Workman Jr., J. |work=Spectroscopy Online |date=17 May 2023 |accessdate=17 November 2023}}</ref>

The approach Mettler takes with thermal analysis is interesting. It presents the results as a suggested analysis of the data and makes it easy for the analyst to adjust settings if they disagree with that result.<ref name="MTAIWizard" /> This means that the analyst has to examine each result and, after review, accepts responsibility for the work by either making adjustments or accepting things as they are. This requires an experienced analyst. The problems begin if we skip that step in pursuit of fully automated facilities, are in a rush to release results, or put the tools in the hands of inexperienced personnel.
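The review step described above can be illustrated with a minimal sketch. It is not Mettler's software or any vendor's API; the names <code>SuggestedResult</code>, <code>accept</code>, <code>adjust</code>, and <code>release</code> are hypothetical and chosen only for illustration. The assumption is simply that an AI-suggested value should not leave the lab until a named analyst has either accepted or adjusted it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SuggestedResult:
    """An AI-suggested evaluation awaiting analyst review (hypothetical structure)."""
    sample_id: str
    suggested_value: float
    final_value: Optional[float] = None
    reviewed_by: Optional[str] = None
    adjusted: bool = False

    def accept(self, analyst: str) -> None:
        """The analyst agrees with the suggestion and takes responsibility for it."""
        self.final_value = self.suggested_value
        self.reviewed_by = analyst

    def adjust(self, analyst: str, corrected_value: float) -> None:
        """The analyst disagrees, records a corrected value, and takes responsibility."""
        self.final_value = corrected_value
        self.reviewed_by = analyst
        self.adjusted = True


def release(result: SuggestedResult) -> dict:
    """Release a result only if a named analyst has reviewed it."""
    if result.reviewed_by is None:
        raise PermissionError(f"{result.sample_id}: no analyst review on record")
    return {
        "sample_id": result.sample_id,
        "value": result.final_value,
        "reviewed_by": result.reviewed_by,
        "adjusted": result.adjusted,
    }
```

In this sketch, calling <code>release</code> on an unreviewed result raises an error instead of passing the AI's suggestion through, which is the safeguard that is lost when the review step is skipped.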

AI offers a great deal of potential benefit to laboratory work, something marketers are going to exploit to attract more customers. Product offerings need to be viewed skeptically, as supposed benefits may be less than promised or not as fully tested as needed. Unlike the improper word substitutions that spelling checkers consistently make, AI-generated errors in data analysis are dangerous because they may go undetected.

==Systems development: Tinkering vs. engineering==

One common practice in laboratory work, particularly in research, is modifying equipment or creating new configurations of equipment and instruments to get work done. That same thought pattern often extends to software development; components such as spreadsheets, compilers, database systems, and so on are common parts of laboratory computer systems. Many people include programming as part of their list of skills. That can lead to the development of special purpose software to solve issues in data handling and analysis.

That activity in an industrial lab is potentially problematic. Organizations have controls over what software development is permitted so that organizational security isn’t compromised and development activities do not create problems with organizational or regulatory requirements and guidelines.

If a need for software develops, there are recognized processes for defining, implementing, and validating those projects. One of the best-known in laboratory science comes from ISPE's GAMP guidelines.<ref name="ISPEWhatIsGAMP" /> There is also a discussion of the methodology in ''[[LII:Considerations in the Automation of Laboratory Procedures|Considerations in the Automation of Laboratory Procedures]]''.

The development process begins with a “needs analysis” that describes why the project is being undertaken and what it is supposed to accomplish, along with the benefits of doing it. That is followed by a user-requirements document that has to be agreed upon before the project begins. Once that is done, one or more prototype systems can be developed, giving you a chance to explore different options for development, project requirements, etc. That exploration forms the basis of a design specification for the development of the actual project (whereupon the prototype is scrapped). At this point, the rest of the GAMP process is followed through the completion of the project. The end result is a proven, working, documented system that can be relied upon (based on evidence) to work and be supported. If changes are needed, the backup documentation is there to support that work.

This is an engineering approach to systems development. The resulting system may be software, a sample preparation process, or the implementation of an automated test method. The approach is needed to ensure that things work and that, if the developer is no longer available, the project can still be used, supported, modified, etc., as needed. The organization's investment is protected, the data and information produced can be supported and treated as reliable, and all guidelines and regulations are being met.

==In closing...==

The purpose of this document is to give the student a high-level overview of the purpose and use of the major informatics systems that are commonly used in industrial research and service laboratories. This is an active area of development, with new products and platforms being released annually, usually around major conferences. The links included are starting points for exploring the material in greater depth.

==About the author==

Initially educated as a chemist, author Joe Liscouski (joe dot liscouski at gmail dot com) is an experienced laboratory automation/computing professional with over forty years of experience in the field, including the design and development of automation systems (both custom and commercial systems), LIMS, robotics and data interchange standards. He also consults on the use of computing in laboratory work. He has held symposia on validation and presented technical material and short courses on laboratory automation and computing in the U.S., Europe, and Japan. He has worked/consulted in pharmaceutical, biotech, polymer, medical, and government laboratories. His current work centers on working with companies to establish planning programs for lab systems, developing effective support groups, and helping people with the application of automation and information technologies in research and quality control environments.

==References==

<!---Place all category tags here-->

[[Category:LII:Guides, white papers, and other publications|Science Student's Guide to Laboratory Informatics]]

Latest revision as of 16:17, 29 March 2024

Title: A Science Student's Guide to Laboratory Informatics

Author for citation: Joe Liscouski, with editorial modifications by Shawn Douglas

License for content: Creative Commons Attribution-ShareAlike 4.0 International

Publication date: November 2023

Introduction

An undergraduate science education aims to teach people what they need to know to pursue a particular scientific discipline; it emphasizes foundational elements of the discipline. In most cases in current science education, the time allotted to teaching a scientific discipline is often insufficient to address the existing and growing knowledge base and deal with the multidisciplinary aspects of executing laboratory work in both industrial and academic settings; the focus is primarily on educational topics. Yet employers in science-based industries want to hire people "ready to work," leaving a significant gap between the goals of science education and the background needed to be productive in the workplace. One example of this gap is found in the lack of emphasis on laboratory informatics in laboratory-adjacent scientific endeavors.

The purpose of this guide is to provide a student with a look at the informatics landscape in industrial labs. The guide has two goals:

- Provide a framework to help the reader understand what they need to know to be both comfortable and effective in an industrial setting, giving them a starting point for learning about the product classes and technologies; and,

- Give an instructor an outline of a survey course should they want to pursue teaching this type of material.

This guide is not intended to provide a textbook-scale level of discussion. It's an annotated map of the laboratory portion of a technological world, identifying critical points of interest and how they relate to one another, while making recommendations for the reader to learn more. Its intent is that in one document you can appreciate what the technologies are, and if you hear their names, you'll be able to understand the technologies' higher-level positioning and function. The details, which are continually developing, will be referenced elsewhere.

Note that this guide references LIMSforum.com on multiple occasions. LIMSforum is an educational forum for laboratory informatics that will ask you to sign in for access to its contents. There is no charge for accessing or using any of the materials; the log-in is for security purposes. Sign-in to LIMSforum can be done with a variety of existing social media accounts, or you can create a new LIMSforum account.