Difference between revisions of "LII:Directions in Laboratory Systems: One Person's Perspective"


'''Publication date''': November 2021

==Introduction==

The purpose of this work is to provide one person's perspective on planning for the use of computer systems in the [[laboratory]], and with it a means of developing a direction for the future. Rather than concentrating on “science first, support systems second,” it reverses that order, recommending the construction of a solid support structure before populating the lab with systems and processes that produce knowledge, information, and data (K/I/D).

===Intended audience=== | |||

This material is intended for those working in laboratories of all types. The biggest benefit will come to those working in startup labs since they have a clean slate to work with, as well as those freshly entering into scientific work as it will help them understand the roles of various systems. Those working in existing labs will also benefit by seeing a different perspective than they may be used to, giving them an alternative path for evaluating their current structure and how they might adjust it to improve operations. | |||

However, all labs in a given industry can benefit from this guide since one of its key points is the development of industry-wide guidelines for solving technology management and planning issues, improving personnel development, and more effectively addressing common projects in automation, instrument communications, and vendor relationships (resulting in lower costs and higher success rates). This would also provide a basis for evaluating new technologies (reducing risks to early adopters) and fostering product development with the necessary product requirements in a particular industry.

===About the content=== | |||

This material follows in the footsteps of more than 15 years of writing and presentation on the topic. That writing and presentation—[[Book:LIMSjournal - Laboratory Technology Special Edition|compiled here]]—includes: | |||

*[[LII:Are You a Laboratory Automation Engineer?|''Are You a Laboratory Automation Engineer?'' (2006)]] | |||

*[[LII:Elements of Laboratory Technology Management|''Elements of Laboratory Technology Management'' (2014)]] | |||

*[[LII:A Guide for Management: Successfully Applying Laboratory Systems to Your Organization's Work|''A Guide for Management: Successfully Applying Laboratory Systems to Your Organization's Work'' (2018)]] | |||

*[[LII:Laboratory Technology Management & Planning|''Laboratory Technology Management & Planning'' (2019)]] | |||

*[[LII:Notes on Instrument Data Systems|''Notes on Instrument Data Systems'' (2020)]] | |||

*[[LII:Laboratory Technology Planning and Management: The Practice of Laboratory Systems Engineering|''Laboratory Technology Planning and Management: The Practice of Laboratory Systems Engineering'' (2020)]] | |||

*[[LII:Considerations in the Automation of Laboratory Procedures|''Considerations in the Automation of Laboratory Procedures'' (2021)]] | |||

*[[LII:The Application of Informatics to Scientific Work: Laboratory Informatics for Newbies|''The Application of Informatics to Scientific Work: Laboratory Informatics for Newbies'' (2021)]] | |||

While that material covers some of the “where do we go from here” discussions, I want to bring a lot of it together in one spot so that we can see what the entire picture looks like, while still leaving some of the details to the titles above. Admittedly, there have been some changes in thinking over time from what was presented in those pieces. For example, the concept of "laboratory automation engineering" has morphed into "laboratory systems engineering," given that in the past 15 years the scope of laboratory automation and computing has broadened significantly. Additionally, references to "scientific manufacturing" are now replaced with "scientific production," since laboratories tend to produce ideas, knowledge, results, information, and data, not tangible widgets. And as the state of laboratories continues to dynamically evolve, there will likely come more changes. | |||

Of special note are the 2019 ''Laboratory Technology Management & Planning'' webinars, which provide additional useful background for what is covered in this guide.

==Looking forward and back: Where do we begin?== | |||

===The "laboratory of the future" and laboratory systems engineering=== | |||

The “laboratory of the future” (LOF) makes for an interesting playground of concepts. People's view of the LOF is often colored by their commercial and research interests. Does the future mean tomorrow, next month, six years, or twenty years from now? In reality, it means all of those time spans coupled with the length of a person's tenure in the lab, and the legacy they want to leave behind. | |||

However, with those varied time spans we’ll need flexibility and adaptability for [[Information management|managing data]] and [[information]] while also preserving access and utility for the products of lab work, and that requires organization and planning. Laboratory equipment will change and storage media and data formats will evolve. The instrumentation used to collect data and information will change, and so will the computers and software applications that manage that data and information. Every resource that has been expended in executing lab work has been to develop knowledge, information, and data (K/I/D). How are you going to meet that management challenge and retain the expected return on investment (ROI)? Answering it will be one of the hallmarks of the LOF. It will require a deliberate plan that touches on every aspect of lab work: people, equipment and systems choices, and relationships with vendors and information technology support groups. Some points reside within the lab while others require coordination with corporate groups, particularly when we address long-term storage, ease of access, and security (both physical and electronic).


Modern laboratory work is a merger of science and information technology. Some of the information technology is built into instruments and equipment; the remainder supports those devices or helps manage operations. That technology needs to be understood, planned, and engineered into smoothly functioning systems if labs are to function at a high level of performance.

Given all that, how do we prepare for the LOF, whatever that future turns out to be? One step is the development of “laboratory systems engineering” as a means of bringing structure and discipline to the use of [[Informatics (academic field)|informatics]], robotics, and [[Laboratory automation|automation]] in lab systems.

But who is the laboratory systems engineer (LSE)? The LSE is someone able to understand and be conversant in both the laboratory science and IT worlds, relating them to each other to the benefit of lab operation effectiveness while guiding IT in performing their roles. A fully dedicated LSE will understand a number of important principles: | |||

#Knowledge, information, and data should always be protected, available, and usable. | |||

#[[Data integrity]] is paramount. | |||

#Systems and their underlying components should be supportable, meaning they are proven to meet users' needs (validated), capable of being modified without causing conflicts with results produced by previous versions, documented, upgradable (without major disruption to lab operations), and able to survive upgrades in connected systems. | |||

#Systems should be integrated into lab operations and not exist as isolated entities, unless there are overriding concerns. | |||

#Systems should be portable, meaning they are able to be relocated and installed where appropriate, and not restricted to a specific combination of hardware and/or software that can’t be duplicated. | |||

#There should be a smooth, reproducible (bi-directional, if appropriate), error-free (including error detection and correction) flow of results, from data generation to the point of use or need. | |||
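
The error-detection part of the last principle can be illustrated with a minimal Python sketch. It is not taken from any particular product: the function names and the message layout are assumptions made for illustration, and it only detects corruption (correction here would mean asking the sender to retransmit).

```python
import hashlib
import json


def package_result(result: dict) -> dict:
    """Wrap a result with a SHA-256 digest of its canonical JSON form."""
    # Sorting keys and fixing separators makes the serialized text reproducible.
    payload = json.dumps(result, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return {"payload": payload, "sha256": digest}


def verify_result(package: dict) -> dict:
    """Return the decoded result, or raise ValueError if the payload changed."""
    actual = hashlib.sha256(package["payload"].encode("utf-8")).hexdigest()
    if actual != package["sha256"]:
        raise ValueError("result failed integrity check; request retransmission")
    return json.loads(package["payload"])
```

The sending system would call <code>package_result</code> before transfer and the receiving system would call <code>verify_result</code> before storing anything, so a damaged result is rejected rather than silently recorded.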

===But how did we get here?=== | |||

The primary purpose of laboratory work is developing and carrying out scientific methods and experiments, which are used to answer questions. We don’t want to lose sight of that, or the skill needed to do that work. Initially the work was done manually, which inevitably limited the amount of data and information that could be produced, and in turn the rate at which new knowledge could be developed and distributed. | |||

However, the introduction of electronic instruments changed that, and the problem shifted from data and information production to data and information utilization (including the development of new knowledge), distribution, and management. That’s where we are today. | |||

Science plays a role in the production of data and information, as well as the development of knowledge. In between we have the tools used in data and information collection, storage, [[Data analysis|analysis]], etc. That’s what we’ll be talking about in this document. The equipment and concepts we’re concerned with here are the tools used to assist in conducting that work and working with the results. They are enablers and amplifiers of lab processes. As in almost any application, the right tools used well are an asset. | |||

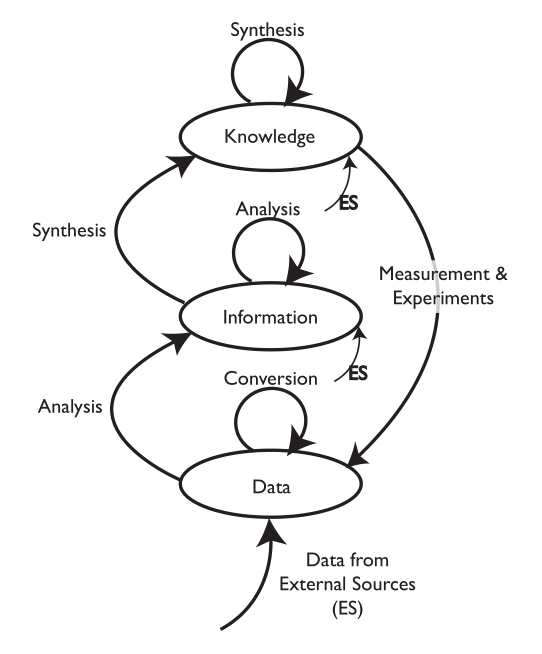

[[File:Fig1 Liscouski DirectLabSysOnePerPersp21.png|400px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="400px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' Databases for knowledge, information, and data (K/I/D) are represented as ovals, and the processes acting on them as arrows.<ref>{{Cite book |last=Liscouski, J. |year=2015 |title=Computerized Systems in the Modern Laboratory: A Practical Guide |publisher=PDA/DHI |pages=432 |asin=B010EWO06S |isbn=978-1933722863}}</ref></blockquote> | |||

|- | |||

|} | |||

|} | |||

One problem with the distinction between science and informatics tools is that lab personnel understand the science, but they largely don't understand the intricacies of information and computing technologies that comprise the tools they use to facilitate their work. Laboratory personnel are educated in a variety of scientific disciplines, each having its own body of knowledge and practices, each requiring specialization. Shouldn't the same apply to the expertise needed to address the “tools”? On one hand we have [[Chromatography|chromatographers]], [[Spectroscopy|spectroscopists]], physical chemists, toxicologists, etc., and on the other roboticists, database experts, network specialists, and so on. In today's reality these are not IT specialists but rather LSEs who understand how to apply the IT tools to lab work with all the nuances and details needed to be successful. | |||

===Moving forward=== | |||

If we are going to advance laboratory science and its practice, we need the right complement of experienced people. Moving forward to address laboratory- and organization-wide productivity needs a different perspective; rather than ask how we improve things at the bench, ask how we improve the processes and organization. | |||

This guide is about long-term planning for lab automation and K/I/D management. The material in this guide is structured in sections, and each section starts with a summary, so you can read the summary and decide if you want more detail. However, before you toss it on the “later” pile, read the next few bits and then decide what to do. | |||

Long-term planning is essential to organizational success. The longer you put it off, the more expensive it will be, the longer it will take to do it, and the more entrenched behaviors you'll have to overcome. Additionally, you won’t be in a position to take advantage of the developing results. | |||

It’s time to get past the politics and the inertia and move forward. Someone has to take the lead on this, full-time or part-time, depending on the size of your organization (i.e., if there's more than one lab, know that it affects all of them). Leadership should be from the lab side, not the IT side, as IT people may not have the backgrounds needed and may view everything through their organization's priorities. (However, their support will be necessary for a successful outcome.)

The work conducted on the lab bench produces data and information. That is the start of realizing the benefits from research and testing work. The rest depends upon your ability to work with that data and information, which in turn depends on how well your data systems are organized and managed. This culminates in maximizing benefit at the least cost, i.e., ROI. It’s important to you, and it’s important to your organization. | |||

Planning has to be done on at least four levels:

#Industry-wide (e.g., biotech, mining, electronics, cosmetics, food and beverage, plastics, etc.) | |||

#Within your organization | |||

#Within your lab | |||

#Within your lab processes | |||

One important aspect of this planning process, particularly at the top, industry-wide level, is the specification of a framework to coordinate product, process (methods), or standards research and development at the lower levels. This industry-wide framework is ideally not a “this is what you must do” but rather a common structure that can be adapted to make the work easier. It also serves as a basis for approaching vendors for products and product modifications that will benefit those in your industry, giving those vendors confidence that the requests have a broader market appeal. If an industry-wide approach isn’t feasible, then larger companies may group together to provide the needed leadership. Note, however, that this should not be perceived as an industry/company vs. vendor effort; rather, this is an industry/company working with vendors. The idea of a large group effort is to demonstrate a consensus viewpoint and to show vendors that their development efforts won’t be in vain.

The development of this framework, among other things, should cover: | |||

*Informatics | |||

*Communications (networking, instrument control and data, informatics control and data, etc.) | |||

*Physical security (including power) | |||

*Data integrity and security | |||

*[[Cybersecurity]] | |||

*The FAIR principles (the findability, accessibility, interoperability, and reusability of data<ref name="WilkinsonTheFAIR16">{{cite journal |title=The FAIR Guiding Principles for scientific data management and stewardship |journal=Scientific Data |author=Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J. et al. |volume=3 |pages=160018 |year=2016 |doi=10.1038/sdata.2016.18 |pmid=26978244 |pmc=PMC4792175}}</ref>) | |||

*The application of [[Cloud computing|cloud]] and [[virtualization]] technologies | |||

*Long-term usable access to lab information in databases without vendor controls (i.e., the impact of [[software as a service]] and other software subscription models) | |||

*Bi-directional [[Data exchange|data interchange]] between archived instrument data in standardized formats and vendor software, requiring tamper-proof formats | |||

*Instrument design for automation | |||

*Sample storage management | |||

*Guidance for automation | |||

*Education for lab management and lab personnel | |||

*The conversion of manual methods to semi- or fully automated systems | |||

These topics affect both a lab's science personnel and its LSEs. While some topics will be of more interest to the engineers than the scientists, both groups have a stake in the results, as do any IT groups. | |||

As digital systems become more entrenched in scientific work, we may need to restructure our thinking from “lab bench” and “informatics” to “data and information sources” and “digital tools for working, organizing, and managing those elements." Data and information sources can extend to third-party labs and other published material. We have to move from digital systems causing incremental improvements (today’s approach), to a true revolutionary restructuring of how science is conducted. | |||

==Laboratory computing== | |||

'''Key point''': ''Laboratory systems are planned, designed, and engineered. They are not simply a collection of components. Laboratory computing is a transformational technology, one which has yet to fully emerge, in large part because those who work in laboratories with computing aren’t educated enough about it to take full advantage of it.''

Laboratory computing has always been viewed as an "add-on" to traditional laboratory work. These add-ons have the potential to improve our work, make it faster, and make it more productive (see Appendix 1 for more details). | |||

The common point-of-view in the discussion of lab computing has been focused on the laboratory scientist or manager, with IT providing a supporting role. That isn’t the only viewpoint available to us, however. Another viewpoint is from that of the laboratory systems engineer (LSE), who focuses on data and information flow. This latter viewpoint should compel us to reconsider the role of computing in the laboratory and the higher level needs of laboratory operations. | |||

Why is this important? Data and information generation may represent the end of the lab bench process, but it’s just the beginning of its use in broader scientific work. The ability to take advantage of those elements in the scope of manufacturing and corporate research and development (R&D) is where the real value is realized. That requires planning for storage, access, and utilization over the long term. | |||

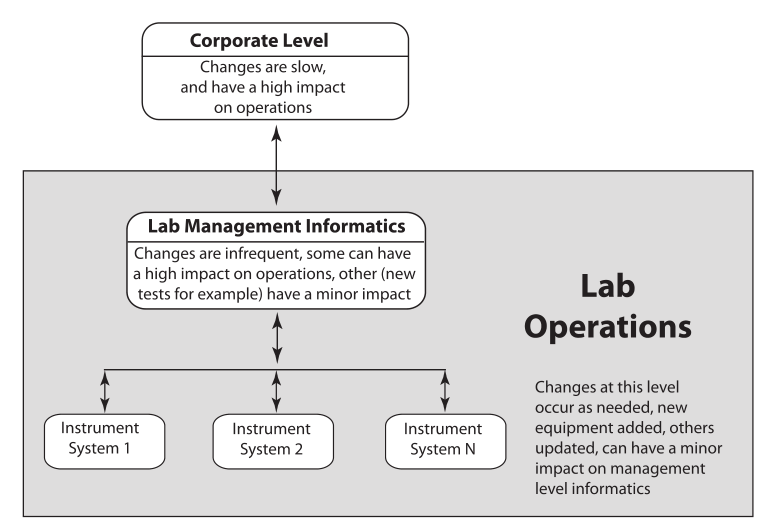

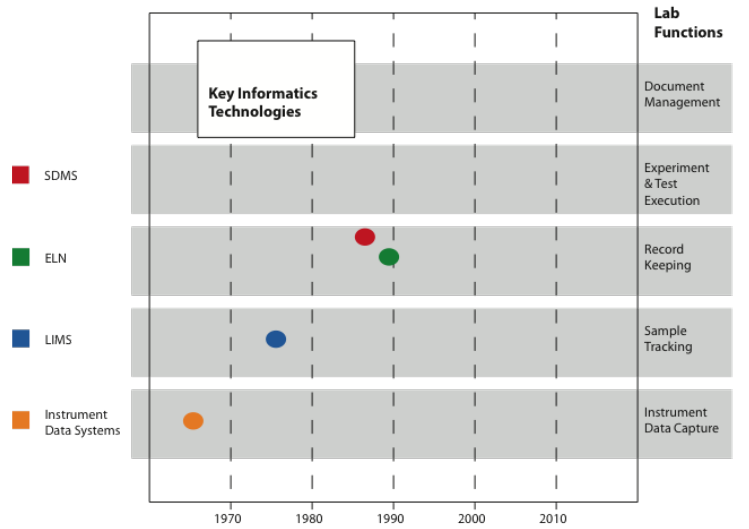

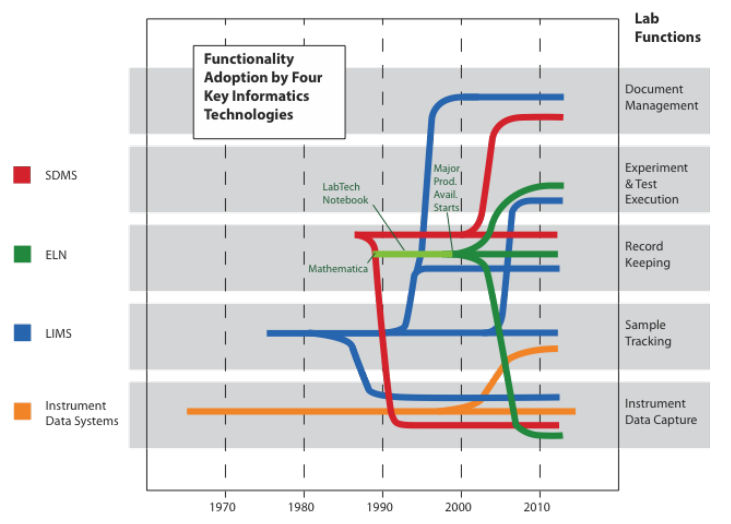

The problem with the traditional point-of-view (i.e., instrumentation first, with computing in a supporting role) is that the data and information landscape is built supporting the portion of lab work that is the most likely to change (Figure 2). You wind up building an information architecture to meet the requirements of diverse data structures instead of making that architecture part of the product purchase criteria. Systems are installed as needs develop, not as part of a pre-planned information architecture. | |||

[[File:Fig2 Liscouski DirectLabSysOnePerPersp21.png|650px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="650px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 2.''' Hierarchy of lab systems, noting frequency of change</blockquote> | |||

|- | |||

|} | |||

|} | |||

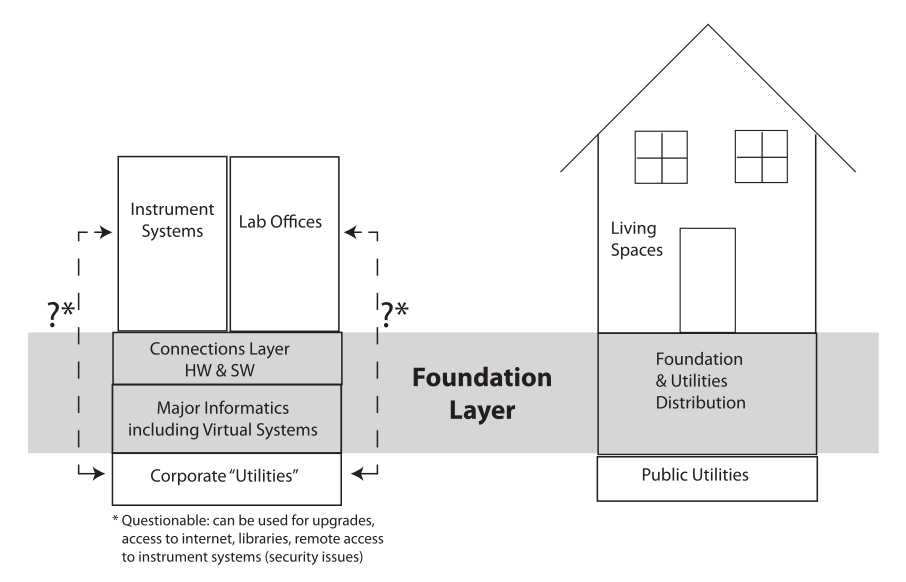

Designing an informatics architecture has some things in common with building a house. You create the foundation first, a supporting structure that everything sits on. Adding the framework sets up the primary living space, which can be modified as needed without disturbing the foundation (Figure 3). If you built the living space first and then wanted to install a foundation, you’d have a mess to deal with. | |||

[[File:Fig3 Liscouski DirectLabSysOnePerPersp21.png|700px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="700px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 3.''' Comparison of foundation and living/working space levels in an organization</blockquote> | |||

|- | |||

|} | |||

|} | |||

The same holds true with [[laboratory informatics]]. Set the foundation—the [[laboratory information management system]] (LIMS), [[electronic laboratory notebook]] (ELN), [[scientific data management system]] (SDMS), etc.—first, then add the data and information generation systems. That gives you a common set of requirements for making connections and can clarify some issues in product selection and systems integration. It may seem backwards if your focus is on data and information production, but as soon as you realize that you have to organize and manage those products, the benefits will be clear. | |||

You might wonder how you go about setting up a LIMS, ELN, etc. before the instrumentation is set. However, it isn’t that much of a problem. You know why your lab is there and what kind of work you plan to do. That will guide you in setting up the foundation. The details of tests can be added as needed. Most of that depends on having your people educated in what the systems are and how to use them.

Our comparison between a building and information systems does bring up some additional points. A building's access to utilities runs through control points; water and electricity don’t come in from the public supply to each room but run through central control points that include a distribution system with safety and management features. We need the same thing in labs when it comes to network access. In our current society, access to private information for profit is a fact of life. While there are desirable features of lab systems available through network access (remote checking, access to libraries, updates, etc.), they should be controlled so that those with malicious intent are prevented access, and data and information are protected. Should instrument systems and office computers have access to corporate and external networks? That’s your decision and revolves around how you want your lab run, as well as other applicable corporate policies. | |||

The connections layer in Figure 3 is where devices connect to each other and the major informatics layer. This layer includes two functions: basic networking capability and application-to-application transfer. Take for example moving pH measurements to a LIMS or ELN; this is where things can get very messy. You need to define what that is and what the standards are to ensure a well-managed system (more on that when we look at industry-wide guidelines). | |||
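
To make the pH example concrete, here is a minimal Python sketch of what an agreed application-to-application message might look like. Everything in it is an assumption made for illustration: the <code>PhMeasurement</code> record, its field names, and the 0 to 14 plausibility check are hypothetical and do not represent any vendor's or standards body's format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PhMeasurement:
    """Hypothetical record that an instrument and a LIMS/ELN both agree on."""
    sample_id: str
    instrument_id: str
    ph: float
    temperature_c: float
    measured_at: str  # ISO 8601 timestamp, assumed to be UTC

    def __post_init__(self):
        # Assumed plausibility range; adjust to the method actually in use.
        if not 0.0 <= self.ph <= 14.0:
            raise ValueError(f"pH {self.ph} is outside the accepted range")


def to_message(measurement: PhMeasurement) -> str:
    """Serialize the record to JSON text for transfer."""
    return json.dumps(asdict(measurement), sort_keys=True)


def from_message(text: str) -> PhMeasurement:
    """Rebuild and re-validate the record on the receiving side."""
    # Missing or unexpected fields raise TypeError; bad values raise ValueError.
    return PhMeasurement(**json.loads(text))
```

The point of the sketch is that both ends share one definition of the record, so a reading that is malformed or implausible is rejected at the connection layer instead of being cleaned up later by hand.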

To complete the analogy, people do move the living space of a house from one foundation to another, often making for an interesting experience. Similarly, it’s also possible to change the informatics foundation from one product set to another. It means exporting the contents of the database(s) to a product-independent format and then importing into the new system. If you think this is something that might be in your future, make the ability to engage in that process part of the product selection criteria. Like moving a house, it isn’t going to be fun.<ref name="FishOvercoming05">{{cite journal |title=Overcoming the Challenges of a LIMS Migration |journal=Research & Development |author=Fish, M.; Minicuci, D. |volume=47 |issue=2 |year=2005 |url=http://apps.thermoscientific.com/media/SID/Informatics/PDF/Article-Overcoming-the-Challanges.pdf |format=PDF}}</ref><ref name="FishOvercoming13">{{cite web |url=https://www.scientistlive.com/content/overcoming-daunting-business-challenges-lims-migration |title=Overcoming daunting business challenges of a LIMS migration |author=Fish, M.; Minicuci, D. |work=Scientist Live |date=01 April 2013 |accessdate=17 November 2021}}</ref><ref name="FreeLIMSWhat18">{{cite web |url=https://freelims.org/blog/legacy-data-migration-to-lims.html |title=Overcoming the Challenges of Legacy Data Migration |work=FreeLIMS.org |publisher=CloudLIMS.com, LLC |date=29 June 2018 |accessdate=17 November 2021}}</ref> The same holds true for ELN and SDMS. | |||
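
The export-then-import step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: SQLite stands in for both the old and new products, CSV stands in for the product-independent format, and the function names are hypothetical. It also assumes the table name is trusted input and that the destination table already exists with matching columns.

```python
import csv
import io
import sqlite3


def export_table(conn: sqlite3.Connection, table: str) -> str:
    """Dump every row of `table` to CSV text, with a header row of column names."""
    cursor = conn.execute(f"SELECT * FROM {table}")  # table name assumed trusted
    columns = [description[0] for description in cursor.description]
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(columns)
    writer.writerows(cursor)
    return buffer.getvalue()


def import_table(conn: sqlite3.Connection, table: str, csv_text: str) -> int:
    """Load CSV text from export_table into an existing table; return the row count."""
    reader = csv.reader(io.StringIO(csv_text))
    columns = next(reader)
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    rows = list(reader)
    conn.executemany(sql, rows)
    return len(rows)
```

Comparing the returned row count (and a sample of values) against the source system is a simple first check that nothing was lost in the move; an actual migration would need considerably more verification than this.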

==How automation affects people's work in the lab== | |||

'''Key point''': ''There are two basic ways lab personnel can approach computing: it’s a black box that they don’t understand but is used as part of their work, or they are fully aware of the equipment's capabilities and limitations and know how to use it to its fullest benefit.'' | |||

While lab personnel may be fully educated in the science behind their work, the role of computing (from pH meters to multi-instrument data systems) may be viewed with a lack of understanding. That is a significant problem because they are responsible for the results that those systems produce, and they may not be aware of what happens to the signals from the instruments, where the limitations lie, and what can turn a well-executed procedure into junk because an instrument or computer setting wasn’t properly evaluated and used.

In reality, automation has both a technical impact on their work and an impact on themselves. These are outlined below. | |||

Technical impact on work: | |||

*It can make routine work easier and more productive, reducing costs and improving ROI (more on that below). | |||

*It can allow work to be performed that might otherwise be too expensive to entertain. There are techniques such as high-throughput screening and statistical experimental design that are useful in laboratory work but might be avoided because the effort of generating the needed data is too labor-intensive and time-consuming. Automated systems can relieve that problem and produce the volumes of data those techniques require. | |||

*It can improve accuracy and reproducibility. Automated systems, properly designed and implemented, are inherently more reproducible than a corresponding manual system. | |||

*It can increase safety by limiting people's exposure to hazardous situations and materials. | |||

*It can also be a financial hole if proper planning and engineering aren’t applied to a project. “Scope creep,” changes in direction, and changes in project requirements and personnel are key reasons that projects are delayed or fail.

Impact on the personnel themselves: | |||

*It can increase technical specialization, potentially improving work opportunities and people's job satisfaction. Having people move into a new technology area gives them an opportunity to grow both personally and professionally. | |||

*Full automation of a process can cause some jobs to end, or at least change them significantly (more on that below). | |||

*It can elevate routine work to more significant supervisory roles. | |||

Most of these impacts are straightforward to understand, but several require further elaboration. | |||

===It can make routine work easier and more productive, reducing costs and improving ROI=== | |||

This sounds like a standard marketing pitch; is there any evidence to support it? In the 1980s, clinical chemistry labs were faced with a problem: the cost for their services was set on an annual basis without any adjustments permitted for rising costs during that period. If costs rose, income dropped; the more testing they did the worse the problem became. They addressed this problem as a community, and that was a key factor in their success. Clinical chemistry labs do testing on materials taken from people and animals and run standardized tests. This is the kind of environment that automation was created for, and they, as a community, embarked on a total laboratory automation (TLA) program. That program had a number of factors: education, standardization of equipment (the tests were standardized so the vendors knew exactly what they needed in equipment capabilities), and the development of instrument and computer communications protocols that enabled the transfer of data and information between devices (application to application). | |||

Organizations such as the American Society for Clinical Laboratory Science (ASCLS) and the American Association for Clinical Chemistry (AACC), as well as many others, provide members with education and industry-wide support. Other examples include the Clinical and Laboratory Standards Institute (CLSI), a non-profit organization that develops standards for laboratory automation and informatics (e.g., AUTO03-A2 on laboratory automation<ref name="CLSIAUTO03">{{cite web |url=https://clsi.org/standards/products/automation-and-informatics/documents/auto03/ |title=AUTO03 Laboratory Automation: Communications With Automated Clinical Laboratory Systems, Instruments, Devices, and Information Systems, 2nd Edition |publisher=Clinical and Laboratory Standards Institute |date=30 September 2009 |accessdate=17 November 2021}}</ref>), and [[Health Level 7|Health Level Seven, Inc.]], a non-profit organization that provides software standards for [[Data integration|data interoperability]].

Given that broad, industry-wide effort to address automation issues, the initial response was as follows<ref name="SarkoziTheEff03">{{cite journal |title=The effects of total laboratory automation on the management of a clinical chemistry laboratory. Retrospective analysis of 36 years |journal=Clinica Chimica Acta |author=Sarkozi, L.; Simson, E.; Ramanathan, L. |volume=329 |issue=1–2 |pages=89–94 |year=2003 |doi=10.1016/S0009-8981(03)00020-2}}</ref>: | |||

*Between 1965 and 2000, the Consumer Price Index increased by a factor of 5.5 in the United States. | |||

*During the same 36 years, at Mount Sinai Medical Center's chemistry department, the productivity (indicated as the number of reported test results/employee/year) increased from 10,600 to 104,558 (9.3-fold). | |||

*When expressed in constant 1965 dollars, the total cost per test decreased from $0.79 to $0.15. | |||
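
As a quick worked check on the cost figures above, the following Python sketch computes the percentage drop in cost per test and restates both costs in year-2000 dollars. It assumes that multiplying by the 5.5 CPI factor is an acceptable way to convert constant 1965 dollars; the constant and function names are illustrative only.

```python
CPI_FACTOR_1965_TO_2000 = 5.5  # Consumer Price Index increase quoted above
COST_PER_TEST_START = 0.79     # cost per test at the start, constant 1965 dollars
COST_PER_TEST_END = 0.15       # cost per test at the end, constant 1965 dollars


def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is lower than `before`."""
    return (before - after) / before * 100.0


def to_2000_dollars(amount_1965: float) -> float:
    """Restate a constant-1965-dollar amount in year-2000 dollars."""
    return amount_1965 * CPI_FACTOR_1965_TO_2000
```

With the quoted numbers, <code>percent_reduction</code> gives a drop of roughly 81%, and <code>to_2000_dollars</code> puts the two costs at about $4.35 and about $0.83 per test in year-2000 dollars.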

In addition, the following data (Tables 1 and 2) from Dr. Michael Bissell of Ohio State University provide further insight into the resulting potential increase in labor productivity by implementing TLA in the lab<ref name="BissellTotal14">{{cite web |url=https://www.youtube.com/watch?v=RdwFZyYE_4Q |title=Total Laboratory Automation - Michael Bissell, MD, Ph.D |work=YouTube |publisher=University of Washington |date=15 July 2014 |accessdate=17 November 2021}}</ref>:

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | |||

|- | |||

| colspan="4" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 1.''' Overall productivity of labor<br /> <br />FTE = Full-time equivalent; TLA = Total laboratory automation | |||

|- | |||

! style="padding-left:10px; padding-right:10px;" |Ratio | |||

! style="padding-left:10px; padding-right:10px;" |Pre-TLA | |||

! style="padding-left:10px; padding-right:10px;" |Post-Phase 1 | |||

! style="padding-left:10px; padding-right:10px;" |Change | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Tests/FTE

| style="background-color:white; padding-left:10px; padding-right:10px;" |50,813 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |64,039 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" | +27% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Tests/Tech FTE | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |80,058 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |89,120 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" | +11% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Tests/Paid hour | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |20.8 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |52.9 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" | +24% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Tests/Worked hour | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |24.4 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |30.8 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" | +26% | |||

|- | |||

|} | |||

|} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | |||

|- | |||

| colspan="4" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 2.''' Productivity of labor-processing area<br /> <br />FTE = Full-time equivalent; TLA = Total laboratory automation | |||

|- | |||

! style="padding-left:10px; padding-right:10px;" |Ratio | |||

! style="padding-left:10px; padding-right:10px;" |Pre-TLA | |||

! style="padding-left:10px; padding-right:10px;" |Post-Phase 1 | |||

! style="padding-left:10px; padding-right:10px;" |Change | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Specimens/Processing FTE | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |39,899 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |68,708 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" | +72% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Specimens/Processing paid hour | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |19.1 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |33.0 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" | +73% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Requests/Processing paid hour | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |12.7 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |21.5 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" | +69% | |||

|- | |||

|} | |||

|} | |||
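As a quick arithmetic check, the "Change" column can be recomputed from the pre-TLA and post-Phase 1 values. The short sketch below does this for the three rows of Table 2; the variable and function names are illustrative only, and the rounding to whole percentages is an assumption about how the published figures were prepared.

```python
# Pre-TLA and post-Phase 1 values copied from Table 2.
TABLE_2 = {
    "Specimens/Processing FTE": (39_899, 68_708),
    "Specimens/Processing paid hour": (19.1, 33.0),
    "Requests/Processing paid hour": (12.7, 21.5),
}


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed in percent."""
    return (after - before) / before * 100.0


# Round to whole percentages, as the table does.
changes = {
    name: round(percent_change(before, after))
    for name, (before, after) in TABLE_2.items()
}

for name, change in changes.items():
    print(f"{name}: {change:+d}%")
```

Under that rounding assumption, the recomputed values agree with the percentages listed in Table 2.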

TLA offers several benefits:

*Improved sample throughput | |||

*Cost reduction | |||

*Less variability in the data | |||

*Reduced and more predictable consumption of materials | |||

*Improved use of people's talents | |||

While this data is almost 20 years old, it illustrates the impact of a change from a manual system to an automated lab environment. It also gives us an idea of what might be expected if industry- or community-wide automation programs were developed.

Clinical laboratories are not unique in the potential to organize industry-wide standardized aspects of technology and work, and provide education. The same can be done anywhere as long as the ability to perform a particular test procedure isn’t viewed as a unique competitive advantage. The emerging ''[[Cannabis]]'' testing industry represents one such opportunity, among others. | |||

The clinical industry has provided a template for the development of laboratory systems and automation. The list of instruments that meet the clinical communications standard continues to grow (e.g., Waters' MassLynx LIMS Interface<ref name="WatersMassLynx16">{{cite web |url=https://www.waters.com/webassets/cms/library/docs/720005731en%20Masslynx%20LIMS%20Interface.pdf |format=PDF |title=MassLynx LIMS Interface |publisher=Waters Corporation |date=November 2016 |accessdate=17 November 2021}}</ref>). There is nothing unique about the communications standards that prevents them from being used as a basis for development in other industries, aside from the data dictionary. As such, we need to move from an every-lab-for-itself approach to lab systems development toward a more cooperative and synergistic model.

===Automation can cause some jobs to end, or at least change them significantly=== | |||

One of the problems that managers and the people under them get concerned about is change. No matter how beneficial the change is for the organization, it raises people’s anxiety levels and can affect their job performance unless they are prepared for it. In that context, questions and concerns staff may have in relation to automating aspects of a job include: | |||

*How are these changes going to affect my job and my income? Will it cause me to lose my job? That’s about as basic as it gets, and it can impact people at any organizational level. | |||

*Bringing in new technologies and products means learning new things, and that opens up the possibility that people may fail or not be as effective as they are currently. It can reshuffle the pecking order. | |||

*The process of introducing new equipment, procedures, etc. is going to disrupt the lab's [[workflow]]. The changes may be procedural, structural, or both; how are you going to deal with those issues?

Two past examples will highlight different approaches. In the first, a multi-instrument automation system was being introduced. Management told the lab personnel what was going to happen and why, and that they would be part of the final acceptance process. If they weren’t happy, the system would be modified to meet their needs. The system was installed, software was written to meet their needs, instruments were connected, and the system was tested. Everyone was satisfied except one technician, and that proved to be a major roadblock to putting the system into service. The matter was discussed with the lab manager, who didn’t see the problem; as soon as the system was up and running, that technician would be given a new set of responsibilities, something she was interested in. But no one told her that. As she saw it, once the system came on line she was out of a job. (One of the primary methods used in that lab's work was chromatography, with the instrument output recorded on chart paper. Most measurements were done using peak height, but peak area was used for some critical analyses. Those exacting measurements, made with a planimeter, were her responsibility and, as she saw it, her unique contribution to the lab's work. The instrument system replaced the need for this work. The other technicians and chemists had no problem adapting to the data system.) However, a short discussion between her and the lab manager alleviated the concerns.

The second example was handled quite differently, and concerned the implementation of a lab’s first LIMS. The people in the lab knew something was going on but not the details. Individual meetings were held with each member of the lab team to discuss what was being considered and to learn of their impressions and concerns (these sessions were held with an outside consultant, and the results were reported to management in summary form). Once that was completed, the project started, with the lab manager holding a meeting of all lab personnel and IT, describing what was going to be done, why, how the project was going to proceed, and the role of those working in the lab in determining product requirements and product selection. The concerns raised in the individual sessions were addressed up-front, and staff all understood that no one was going to lose their job or suffer a pay cut. Yes, some jobs would change, and where appropriate that was discussed with each individual. There was an educational course about what a LIMS was, its role in the lab's work, and how it would improve the lab’s operations. When those sessions were completed, the lab’s personnel looked forward to the project. They were part of the project and the process, rather than having it done without their participation. In short, instead of it happening to them, it happened with them as willing participants.

People's attitudes about automation systems and being willing participants in their development can make a big difference in a project's success or failure. You don’t want people to feel that the incoming system and the questions surrounding it are threatening, or seen as something that is potentially going to end their employment. They may not freely participate or they may leave when you need them the most. | |||

All of this may seem daunting for a lab to take on by itself. Large companies may have the resources to handle it, but we need more than a large company to do this right; we need an industry-wide effort. | |||

==Development of industry-wide guidelines== | |||

'''Key point''': ''The discussion above about clinical labs illustrates what can be accomplished when an industry group focuses on a technology problem. We need to extend that thinking—and action—to a broader range of industries individually. The benefits of an industry-wide approach to addressing technology and education issues include:'' | |||

*''providing a wider range of inputs to solving problems;'' | |||

*''providing a critical marketing mass to lobby vendors to create products that fit customers’ needs in a particular industry;'' | |||

*''developing an organized educational program with emphasis on that industry's requirements;'' | |||

*''giving labs (startup and existing) a well-structured reference point to guide them (not dictate) in making technology decisions;'' | |||

*''reducing the cost of automation, with improved support; and'' | |||

*''where competitive issues aren’t a factor, enabling industry-funded R&D technology development and implementation for production or manufacturing quality, process control (integration of online quality information), and process management.'' | |||

The proposal: each industry group should define a set of guidelines to assist labs in setting up an information infrastructure. For the most part, large sections would end up similar across multiple industries, as there isn’t that much behavioral distinction between some industry sets. The real separation would come in two places: the data dictionaries (data descriptions) and the nature of the testing and automation to implement that testing. Where there is a clear competitive edge to a test or its execution, each company may choose to go it alone, but that still leaves a lot of room for cooperation in method development, addressing both the basic science and its automated implementations, particularly where ASTM, USP, etc. methods are employed. | |||

The benefits of this proposal are noted in the key point above. However, the three most significant ones are arguably: | |||

*the development of an organized education program with an emphasis on industry requirements; | |||

*the development of a robust communications protocol for application-to-application transfers; and, | |||

*the ability to lobby vendors from an industry-wide basis for product development, modification, and support. | |||

In the "Looking forward and back" section earlier in this guide, we showed a bulleted list of considerations for the development of such a guideline-based framework. What follows is a more organized version of those points, separated into three sections, which all need to be addressed in any industry-based framework. For the purposes of this guide, we'll focus primarily on Section C: "Issues that need concerted attention." Section A on background and IT support, and Section B on lab-specific background information, aren't discussed as they are addressed elsewhere, particularly in the previous works referenced in the Introduction. | |||

:'''A.''' General background and routine IT support | |||

::1. Physical security (including power) | |||

::2. Cybersecurity | |||

::3. Cloud and virtualization technologies | |||

:'''B.''' Lab-specific background information | |||

::1. Informatics (could be an industry-specific version of ''ASTM E1578 - 18 Standard Guide for Laboratory Informatics''<ref name="ASTME1578_18">{{cite web |url=https://www.astm.org/Standards/E1578.htm |title=ASTM E1578 - 18 Standard Guide for Laboratory Informatics |publisher=ASTM International |date=2018 |accessdate=17 November 2021}}</ref>) | |||

::2. Sample storage management and organization (see Appendix 1, section 1.4 of this guide) | |||

::3. Guidance for automation | |||

::4. Data integrity and security (see S. Schmitt's ''Assuring Data Integrity for Life Sciences''<ref>{{Cite book |editor-last=Schmitt, S. |title=Assuring Data Integrity for Life Sciences |url=https://www.dhibooks.com/assuring-data-integrity-for-life-sciences |publisher=DHI Publishing |pages=385 |isbn=9781933722979}}</ref>, which has broader application outside the life sciences)

::5. The FAIR principles (the findability, accessibility, interoperability, and reusability of data; see Wilkinson ''et al.'' 2016<ref name="WilkinsonTheFAIR16" />) | |||

:'''C.''' Issues that need concerted attention:

::1. Education for lab management and lab personnel | |||

::2. The conversion of manual methods to semi- or full-automated methods (see [[LII:Considerations in the Automation of Laboratory Procedures|''Considerations in the Automation of Laboratory Procedures'']] for more) | |||

::3. Long-term usable access to lab information in databases without vendor controls (i.e., the impact of [[software as a service]] and other software subscription models) | |||

::4. Archived instrument data in standardized formats and standardized vendor software (requires tamper-proof formats) | |||

::5. Instrument design for automation (Most instruments and their support software are dual-use, i.e., they work as stand-alone devices via front panels, or through software controls. While this is a useful selling tool, whether for manual or automated use, it means the device is larger, more complex, and more expensive than an automation-only device that uses software [e.g., a smartphone or computer] for everything. Instruments and devices designed for automation should be more compact and permit more efficient automation systems.)

::6. Communications (networking, instrument control and data, informatics control and data, etc.) | |||

We'll now go on to expand upon items C-1, C-3, C-4, and C-6. | |||

===C-1. Education for lab management and lab personnel=== | |||

Laboratory work has become a merger of two disciplines: science and information technology (including robotics). Without the first, nothing happens; without the second, work will proceed but at a slower, more costly pace. There are different levels of education requirements. For those working at the lab bench, not only do they need to understand the science, but also how the instrumentation and supporting computer systems (if any, including those embedded in the instrument) make and transform measurements into results. “The computer did it” is not a satisfactory answer to “how did you get that result,” nor is “magic,” or maintaining willful ignorance of exactly how the instrument measurements are taken. Laboratorians are responsible and accountable for the results, and they should be able to explain the process of how they were derived, including how settings on the instrument and computer software affect that work. Lab managers should understand the technologies at the functional level and how the systems interact with each other. They are accountable for the overall integrity of the systems and the data and information they contain. IT personnel should understand how lab systems differ from office applications and why business-as-usual for managing office applications doesn’t work in the lab environment. The computer systems are there to support the instruments, and any changes that may affect that relationship should be initiated with care; the instrument vendor’s support for computer systems upgrades is essential.

As such, we need a new personnel position, that of the laboratory systems engineer or LSE, to provide support for the informatics architecture. This isn’t simply an IT person; it should be someone who is fluent in both the science and the information technology applied to lab work. (See [[LII:Laboratory Technology Planning and Management: The Practice of Laboratory Systems Engineering|''Laboratory Technology Planning and Management: The Practice of Laboratory Systems Engineering'']] for more on this topic.) | |||

===C-3. Long-term usable access to lab information in databases without vendor controls=== | |||

The data and information your lab produces, with the assistance of instrumentation and instrument data systems, is yours, and no one should put limits on your ability to work with it. There is a problem with modern software design: most lab data and information can only be viewed through applications software. In some cases, the files may be used with several applications, but often it is the vendor's proprietary formats that limit access. In those instances, you have to maintain licenses for that software for as long as you need access to the data and information, even if the original application has been replaced by something else. This can happen for a variety of reasons: | |||

*Better technology is available from another vendor | |||

*A vendor sold part of its operations to another organization | |||

*Organizations merge | |||

*Completion of a consulting or research contract requires all data to be sent to the contracting organization | |||

All of these, and others, are reasons for maintaining multiple versions of similar datasets that people need access to yet don’t want to maintain licenses for into the future, even though they still must consider meeting regulatory (FDA, EPA, ISO, etc.) requirements. | |||

All of this revolves around your ability to gain value from your data and information without having to pay for its access. The vendors don’t want to give their software away for free either. What we need is something like the relationship between Adobe Acrobat Reader and the Adobe Acrobat application software. The latter gives you the ability to create, modify, and comment on documents, while the former allows you to view them. The Reader gives anyone the ability to view the contents, just not alter them. We need a “reader” application for instrument data collected and processed by an instrument data system. We need to be able to view the reports, raw data, etc. and export the data in a useful format, everything short of acquiring and processing new data. This gives you the ability to work with your intellectual property and allows it to be viewed by regulatory agencies if that becomes necessary, without incurring unnecessary costs or depriving the vendor of justifiable income.

This has become increasingly important as vendors have shifted to a subscription model for software licenses in place of one-time payments with additional charges for voluntary upgrades. One example from another realm illustrates the point. My wife keeps all of her recipes in an application on her iPad. One day she looked for a recipe and received a message that read, roughly, “No access unless you upgrade the app <not free>.” As it turned out, a Google search recommended re-installing the current app instead. It worked, but she upgraded anyhow, just to be safe. It’s your content, but who “owns” it if the software vendor can impose controls on how it is used? | |||

As more of our work depends on software, we find ourselves in a constant upgrade loop of new hardware, new operating systems, and new applications just to maintain the status quo. We need more control over what happens to our data. Industry-wide guidelines backed by the buying power of an industry could create vendor policies that would mitigate that. Earlier in this document we noted that change in the industry will continue for a long time, with hardware and software evolving in ways we can’t imagine. Hardware changes (anyone remember floppy disks?) inevitably make almost everything obsolete, so how do we protect our access to data and information? Floppy disks were the go-to media 40 years ago, and since then cassette tapes, magnetic tape, Zip drives, CD-ROMs, DVDs, Syquest drives, and other types of storage media have come and gone. Networked systems, at the moment, are the only consistent and reliable means of exchange and storage as datasets increase in size.

One point we have to take into account is that versions of applications will only function on certain versions of operating systems and databases. All three elements are going to evolve, and at some point we’ll have to deal with “old” generations while new ones are coming online. One good answer to that is emulation. Some software systems, like VMware, Inc.'s VMware, allow packages of operating systems, databases, and applications to operate on computers regardless of their age, with each collection residing in a “container”; we can have several generations of those containers residing on a computer and execute them at will, as if they were still running on the original hardware. If you are looking for the data for a particular sample, go to the container that covers its time period and access it. Emulation packages are powerful tools; using them you can even run Atari 2600 games on a Windows or OS X system.
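The lookup described above, finding the archived environment that covers a sample's time period, can be sketched in a few lines. This is only an illustration under stated assumptions: the environment names, the date ranges, and the <code>environment_for</code> helper are all hypothetical, and a real lab would draw this inventory from its own records.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ArchivedEnvironment:
    """One preserved bundle of operating system, database, and application."""
    name: str          # hypothetical label for the preserved image
    in_service: date   # first day the environment produced data
    retired: date      # last day the environment produced data


# Hypothetical inventory; real entries would come from the lab's records.
ENVIRONMENTS = [
    ArchivedEnvironment("cds-v1-image", date(2005, 1, 1), date(2011, 6, 30)),
    ArchivedEnvironment("cds-v2-image", date(2011, 7, 1), date(2018, 12, 31)),
    ArchivedEnvironment("cds-v3-image", date(2019, 1, 1), date(2025, 12, 31)),
]


def environment_for(sample_date: date) -> Optional[ArchivedEnvironment]:
    """Return the archived environment whose service period covers the date."""
    for env in ENVIRONMENTS:
        if env.in_service <= sample_date <= env.retired:
            return env
    return None  # no preserved environment covers this date


match = environment_for(date(2012, 3, 15))
print(match.name if match else "no archived environment found")
```

Keeping such an inventory alongside the archived images is one way to make sure that someone looking for old results knows which generation of software to start.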

Addressing this issue is generally bigger than what a basic laboratory-based organization can handle, involving policy making and support from information technology support groups and corporate legal and financial management. The policies have to take into account physical locations of servers, support, financing, regulatory support groups, and international laws, even if your company isn’t a multinational (third-party contracting organizations may be). Given this complexity, and the fact that most companies in a given industry will be affected, industry-wide guidelines would be useful. | |||

===C-4. Archived instrument data in standardized formats and standardized vendor software=== | |||

This has been an area of interest for over 25 years, beginning with the Analytical Instrument Association's work that resulted in a set of ASTM Standard Guides: | |||

*''ASTM E2078 - 00(2016) Standard Guide for Analytical Data Interchange Protocol for Mass Spectrometric Data''<ref name="ASTMEE2078_16">{{cite web |url=https://www.astm.org/Standards/E2078.htm |title=ASTM E2078 - 00(2016) Standard Guide for Analytical Data Interchange Protocol for Mass Spectrometric Data |publisher=ASTM International |date=2016 |accessdate=17 November 2021}}</ref> | |||

*''ASTM E1948 - 98(2014) Standard Guide for Analytical Data Interchange Protocol for Chromatographic Data''<ref name="ASTME1948_14">{{cite web |url=https://www.astm.org/Standards/E1948.htm |title=ASTM E1948 - 98(2014) Standard Guide for Analytical Data Interchange Protocol for Chromatographic Data |publisher=ASTM International |date=2014 |accessdate=17 November 2021}}</ref> | |||

MilliporeSigma continues to invest in solutions based on the Analytical Information Markup Language (AnIML) standard (an outgrowth of work done at NIST).<ref name="MerckMilli19">{{cite web |url=https://www.emdgroup.com/en/news/bssn-software-06-08-2019.html |title=MilliporeSigma Acquires BSSN Software to Accelerate Customers’ Digital Transformation in the Lab |publisher=Merck KGaA |date=06 August 2019 |accessdate=17 November 2021}}</ref> There have also been a variety of standards programs, all of which have a goal of moving instrument data into a neutral data format that is free of proprietary interests, allowing it to be used and analyzed as the analyst needs (e.g., JCAMP-DX<ref>{{Cite journal |last=McDonald |first=Robert S. |last2=Wilks |first2=Paul A. |date=1988-01 |title=JCAMP-DX: A Standard Form for Exchange of Infrared Spectra in Computer Readable Form |url=http://journals.sagepub.com/doi/10.1366/0003702884428734 |journal=Applied Spectroscopy |language=en |volume=42 |issue=1 |pages=151–162 |doi=10.1366/0003702884428734 |issn=0003-7028}}</ref> and GAML<ref name="GAML">{{cite web |url=http://www.gaml.org/default.asp |title=Welcome to GAML.org |work=GAML.org |date=22 June 2007 |accessdate=17 November 2021}}</ref>).

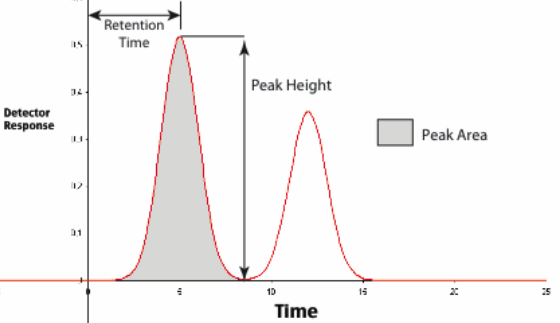

Data interchange standards can help address issues in two aspects of data analysis: qualitative and quantitative work. In qualitative applications, the exported data can be imported into other packages that provide facilities not found in the original data acquisition system. Examining an infrared spectrum or [[Nuclear magnetic resonance spectroscopy|nuclear magnetic resonance]] (NMR) scan depends upon peak amplitude, shape, and positions to provide useful information, and some software (including user-developed software) may provide facilities that the original data acquisition system didn’t, or it might be a matter of having a file to send to someone for review or inclusion in another project.

Quantitative analysis is a different matter. Techniques such as chromatography, [[inductively coupled plasma mass spectrometry]] (ICP-MS), and [[atomic absorption spectroscopy]], among others, rely on the measurement of peak characteristics or single wavelength absorbance measurements in comparison with measurements made on standards. A single chromatogram is useless (for quantitative work) unless the standards are available. (However, it may be useful for qualitative work if retention time references to appropriate known materials are available.) If you are going to export the data for any of the techniques noted, and others as well, you need the full collection of standards and samples for the data to be of any value.

Yet there is a problem with some, if not all, of these programs: they trust the integrity of the analyst to use the data honestly. It is possible for people to use these exported formats in ways that circumvent current data integrity practices and falsify results.

There are good reasons to want vendor-neutral data formats: data sets can be examined by user-developed software, in a form where the analysis is not limited by a vendor's product design. Such formats also hold the potential for separating data acquisition from [[data analysis]] as long as all pertinent data and information (e.g., standards and [[Sample (material)|samples]]) are held together in a package that cannot be altered without detection. It may be that something akin to [[blockchain]] technology could be used to register and manage access to datasets on a company-by-company basis (each company having its own registry that would become part of the lab's data architecture).
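One simple way to make alteration of a package detectable is to record a cryptographic digest of every file in it, plus a digest of the whole manifest. The sketch below uses Python's standard <code>hashlib</code> module with SHA-256; the function names and the manifest layout are hypothetical, and this is not a blockchain or a digital signature. It only detects changes if the manifest itself is stored somewhere the analyst cannot rewrite, such as the company registry suggested above.

```python
import hashlib
import json
from typing import Dict


def seal_package(files: Dict[str, bytes]) -> dict:
    """Build a manifest: one SHA-256 digest per file plus an overall digest."""
    entries = {
        name: hashlib.sha256(data).hexdigest()
        for name, data in sorted(files.items())
    }
    # The overall digest covers every file name and every file digest.
    canonical = json.dumps(entries, sort_keys=True).encode("utf-8")
    return {
        "files": entries,
        "package_digest": hashlib.sha256(canonical).hexdigest(),
    }


def verify_package(files: Dict[str, bytes], manifest: dict) -> bool:
    """True only if the files still produce exactly the recorded manifest."""
    return seal_package(files) == manifest


# Hypothetical package: standards and samples kept together.
package = {
    "standard_01.csv": b"time,signal\n0.0,0.01\n0.1,0.95\n",
    "sample_001.csv": b"time,signal\n0.0,0.02\n0.1,0.47\n",
}
manifest = seal_package(package)
print(verify_package(package, manifest))
```

Changing, adding, or removing any file in the package causes <code>verify_package</code> to return <code>False</code> against the original manifest.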

These standard formats are potentially very useful to labs, and were created by people with a passion for doing something useful and beneficial to the practice of science, sometimes at their own expense. This is another area where a coordinated industry-wide statement of requirements and support would lead to some significant advancements in systems development, and enhanced capability for those practicing instrumental science.

If these capabilities are important to you, then addressing that need has to be part of an industry-wide conversation and consensus to provide the marketing support to have the work done.

===C-6. Communications=== | |||

Most computers and electronic devices in the laboratory have communications capability built in: RS-232 (or similar), digital I/O, Ethernet port, Bluetooth, Wi-Fi, IEEE-488, etc. What these connection types do is provide the potential for communications, and the realization of that potential depends on message formatting, structure of the contents, and moving beyond proprietary interests to those expressed by an industry-wide community. There are two types of devices that need to be addressed: computer systems that service instruments (instrument data systems), and devices that don’t require an external computer to function (pH meters, balances, etc.) that may have Ethernet ports, serial ASCII, digital I/O, or IEEE-488 connections. In either of these cases, the typical situation is one in which the vendor has determined a communications structure and the user has to adapt their systems to it, often using custom programming to parse messages and take action.

The user community needs to determine its needs and make them known using community-wide buying power as justification for asking for vendor development efforts. As noted earlier, this is not an adversarial situation, but rather a maturation of industry-wide communities working with vendors; the “buying power” comments simply give the vendors the confidence that their development efforts won’t be in vain.

In both cases we need application-to-application message structures that meet several needs. They should be able to handle test method identifications, so that the receiving application knows what the message is addressing, as well as whether the content is a list of samples to be processed or samples that have already been processed, with results. Additionally, the worklist message should ideally contain, in attribute-value pairs, the number of samples, with a list of sample IDs to be processed. As for the completed work message, the attribute-value pairs would also ideally contain the number of samples processed, the sample IDs, and the results of the analysis (which could be one or more elements depending on the procedure). Of course, there may be other elements required of these message structures; these were just examples. Ultimately, the final framework could be implemented in a format similar to that used in HTML files: plain text that is easily read and machine- and operating-system-independent.

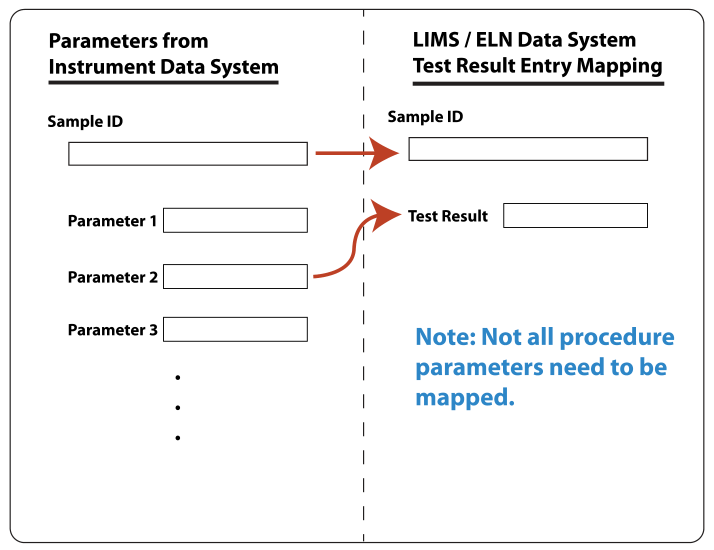

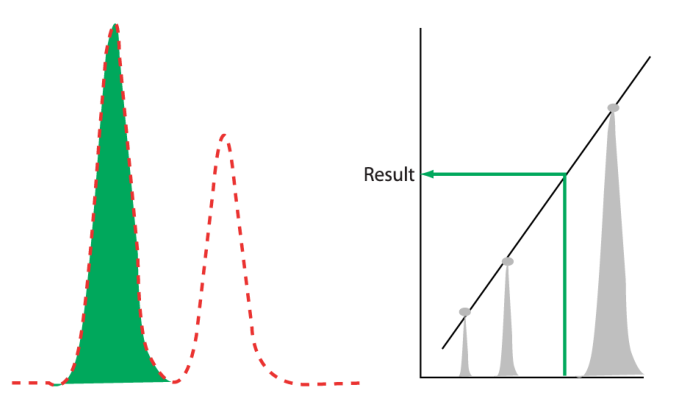
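To make the attribute-value idea concrete, the following sketch builds and parses a plain-text message carrying a test method identification, a message type, a sample count, and one line per sample. The attribute names (<code>message_type</code>, <code>test_method</code>, <code>sample_count</code>, <code>sample_id</code>, <code>result</code>), the <code>=</code> and <code>;</code> separators, and both function names are assumptions made for illustration; they are not taken from any existing standard, and values containing those separator characters are not handled.

```python
from typing import Dict, List, Optional


def build_message(kind: str, method_id: str, sample_ids: List[str],
                  results: Optional[Dict[str, str]] = None) -> str:
    """Format a worklist (no results) or completed-work (with results) message."""
    lines = [
        f"message_type={kind}",
        f"test_method={method_id}",
        f"sample_count={len(sample_ids)}",
    ]
    for sample_id in sample_ids:
        if results is None:
            lines.append(f"sample_id={sample_id}")
        else:
            lines.append(f"sample_id={sample_id};result={results[sample_id]}")
    return "\n".join(lines)


def parse_message(text: str) -> dict:
    """Parse a message back into header attributes and a list of samples."""
    message: dict = {"samples": []}
    for line in text.splitlines():
        if line.startswith("sample_id="):
            pairs = dict(pair.split("=", 1) for pair in line.split(";"))
            message["samples"].append(pairs)
        else:
            key, value = line.split("=", 1)
            message[key] = value
    # The declared count lets the receiver detect a truncated message.
    if int(message["sample_count"]) != len(message["samples"]):
        raise ValueError("sample_count does not match the number of sample lines")
    return message


worklist = build_message("worklist", "PH-01", ["S1", "S2"])
completed = build_message("completed_work", "PH-01", ["S1", "S2"],
                          {"S1": "7.02", "S2": "6.88"})
print(worklist)
print(parse_message(completed))
```

Because the message is plain text, it can be read by a person, logged as-is, and parsed on any operating system without vendor libraries.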

Mapping the message content to the database system structure (LIMS or ELN) could be done using a simple built-in application (within the LIMS or ELN) that would graphically display the received message content on one side of a window, with the database’s available fields on the other side. The two sides would then graphically be mapped to one another, as shown in Figure 4 below. (Note: Since the format of the messages is standardized, we wouldn’t need a separate mapping function to accommodate different vendors.)

[[File:Fig4 Liscouski DirectLabSysOnePerPersp21.png|600px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="600px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 4.''' Mapping IDS message contents to database system</blockquote> | |||

|- | |||

|} | |||

|} | |||
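Behind a graphical mapping tool like the one in Figure 4, the stored result could be as simple as a lookup table from message attributes to database fields. The Python sketch below assumes a completed-work message that has already been parsed into a dictionary; the database field names (<code>TEST_CODE</code>, <code>SAMPLE_NO</code>, <code>RESULT_VALUE</code>) are invented for illustration and do not come from any particular LIMS or ELN.

```python
# Hypothetical mapping from message attributes (left side of the window)
# to database fields (right side of the window).
FIELD_MAP = {
    "test_method": "TEST_CODE",
    "sample_id": "SAMPLE_NO",
    "result": "RESULT_VALUE",
}


def map_to_records(message, field_map):
    """Turn one completed-work message into one database record per sample."""
    if len(message["sample_ids"]) != len(message["results"]):
        raise ValueError("each sample ID needs exactly one result")
    records = []
    for sample_id, result in zip(message["sample_ids"], message["results"]):
        source = {
            "test_method": message["test_method"],
            "sample_id": sample_id,
            "result": result,
        }
        # Rename each message attribute to its mapped database field.
        records.append({field_map[attr]: value for attr, value in source.items()})
    return records


message = {
    "test_method": "PH-001",
    "sample_ids": ["S-1001", "S-1002"],
    "results": [7.02, 6.95],
}
records = map_to_records(message, FIELD_MAP)
```

Because the message format is standardized, the same mapping table would apply no matter which vendor's instrument data system produced the message.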

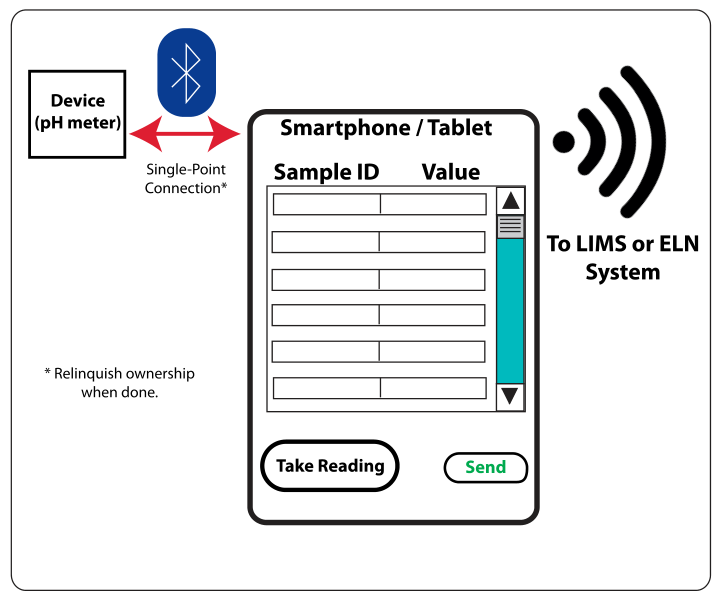

The second case (devices like pH meters, etc.) is a little more interesting since the devices don’t have the same facilities available as a computer system provides. However, in the consumer marketplace, this is well-trod ground: a smartphone or tablet serves as both the user interface and the translation mechanism between small, fixed-function devices and more extensive application platforms. The only non-standard element is a single-point Bluetooth connection, as shown in Figure 5.

[[File:Fig5 Liscouski DirectLabSysOnePerPersp21.png|600px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="600px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 5.''' Using a smartphone or tablet as an interface to a LIMS or ELN. The procedure takes a series of pH measurements for a series of samples.</blockquote> | |||

|- | |||

|} | |||

|} | |||

A single-point Bluetooth connection is used to exchange information between the measuring device and the smartphone or tablet. There are multi-point connection devices (the smartphone, for example), but we want to restrict the device to avoid confusion about who is in control of the measuring unit. A setup screen (not shown) would set up the worklist and populate the sample IDs. The “Take Reading” button would read the device and enter the value into the corresponding sample position, taking the samples in order, and enable the next reading until all samples had been read. “Send” would transmit the formatted message to the LIMS or ELN. Depending on the nature of the device and the procedure being used, the application can take any form; this is just a simple example that takes pH measurements for a series of samples. In essence, the device-smartphone combination becomes an instrument data system, and the database setup would be the same as described above.
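The workflow just described (set up the worklist, take readings in order, then send) can be sketched in a few lines of Python. Everything here is hypothetical: the <code>ReadingSession</code> class, the method names, and the stand-in meter are illustrations only, and a real application would replace <code>read_device</code> with an actual Bluetooth read from the instrument.

```python
class ReadingSession:
    """Collects one reading per sample, in worklist order."""

    def __init__(self, test_method, sample_ids, read_device):
        self.test_method = test_method
        self.sample_ids = list(sample_ids)   # populated by the setup screen
        self.read_device = read_device       # callable returning one reading
        self.readings = []

    def is_complete(self):
        return len(self.readings) == len(self.sample_ids)

    def take_reading(self):
        """Equivalent of pressing 'Take Reading' for the next sample."""
        if self.is_complete():
            raise RuntimeError("all samples have already been read")
        sample_id = self.sample_ids[len(self.readings)]
        value = self.read_device()
        self.readings.append(value)
        return sample_id, value

    def send(self):
        """Equivalent of pressing 'Send': build the completed-work message."""
        if not self.is_complete():
            raise RuntimeError("cannot send until every sample has a reading")
        return {
            "message_type": "completed_work",
            "test_method": self.test_method,
            "sample_count": len(self.sample_ids),
            "sample_ids": self.sample_ids,
            "results": self.readings,
        }


def make_fake_meter(values):
    """Stand-in for the pH meter; returns canned values one at a time."""
    iterator = iter(values)
    return lambda: next(iterator)


session = ReadingSession("PH-001", ["S-1001", "S-1002"], make_fake_meter([7.02, 6.95]))
while not session.is_complete():
    session.take_reading()
message = session.send()
```

Keeping the readings in a list that grows in worklist order is what ties each value to the correct sample position, and refusing to send an incomplete session keeps a partial message from reaching the LIMS or ELN.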

The bottom line is this: standardized message formats can greatly simplify the interfacing of instrument data systems, LIMS, ELNs, and other laboratory informatics applications. The clinical chemistry community created communications standards that would permit meaningful messages to be sent between an instrument data system and database system structured so that either end of the link would recognize the message and be able to extract and use the message content without the custom programming common to most labs today. There is no reason why the same thing can’t be done in any other industry; it may even be possible to adapt the clinical chemistry protocols. The data dictionary (list of test names and attributes) would have to be adjusted, but that is a minor point that can be handled on an industry-by-industry basis and be incorporated as part of a system installation. | |||

What is needed is people coming together as a group and organizing and defining the effort. How important is it to you to streamline the effort in getting systems up and running without custom programming, to work toward a plug-and-play capability that we encounter in consumer systems (an environment where vendors know easy integration is a must or their products won’t sell)? | |||

====Additional note on Bluetooth-enabled instruments==== | |||

The addition of Bluetooth to a device can result in much more compact systems, making the footprint smaller and reducing the cost of the unit. By using a smartphone to replace the front panel controls, the programming can become much more sophisticated. Imagine a Bluetooth-enabled pH meter and a separate Bluetooth-enabled, electronically controlled titrator. That combination would permit optimized{{Efn|Where the amount of titrant added is adjusted based on the response to the previous addition. This should yield faster titrations with increased accuracy.}} delivery of titrant, making the process faster and more accurate, while also providing a graphical display of the resulting titration curve, putting the full data processing capability of the smartphone and its communications at the service of the experiment. Think about what the addition of a simple clock did for thermostats: it opened the door to programmable thermostats and better controls. What would smartphones controlling simple devices like balances, pH meters, titrators, etc. facilitate?
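To make the idea of optimized titrant delivery concrete, here is a minimal Python sketch in which the size of each addition is adjusted based on the pH change caused by the previous one. It is only an illustration under stated assumptions: the pH response is simulated with an idealized S-shaped curve (<code>simulated_ph</code>), and the step sizes, the <code>target_delta</code> threshold, and the halve-or-double rule are arbitrary choices, not a validated titration algorithm.

```python
import math


def simulated_ph(volume_ml):
    """Idealized titration curve with its steepest point at 25 mL (assumption)."""
    return 7.0 + 4.0 * math.tanh((volume_ml - 25.0) / 1.5)


def adaptive_titration(read_ph, max_volume=40.0, start_step=2.0,
                       min_step=0.05, max_step=2.0, target_delta=0.3):
    """Add titrant in steps that shrink when pH changes quickly and grow when it doesn't."""
    volume = 0.0
    step = start_step
    points = [(volume, read_ph(volume))]
    while volume < max_volume:
        volume = min(volume + step, max_volume)
        ph = read_ph(volume)
        delta = abs(ph - points[-1][1])
        points.append((volume, ph))
        if delta > target_delta:
            step = max(step / 2, min_step)      # response was large: slow down
        elif delta < target_delta / 2:
            step = min(step * 2, max_step)      # response was small: speed up
    return points


def estimate_endpoint(points):
    """Return the midpoint of the interval with the steepest pH change."""
    best_slope, best_volume = 0.0, points[0][0]
    for (v0, p0), (v1, p1) in zip(points, points[1:]):
        slope = abs(p1 - p0) / (v1 - v0)
        if slope > best_slope:
            best_slope, best_volume = slope, (v0 + v1) / 2
    return best_volume


points = adaptive_titration(simulated_ph)
endpoint = estimate_endpoint(points)
```

In a real system, <code>read_ph</code> would wrap the Bluetooth pH meter and each loop iteration would also command the titrator to deliver the step. The list of points is exactly what the smartphone would plot as the titration curve.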

==Laboratory work and scientific production== | |||

'''Key point''': ''Laboratory work is a collection of processes and procedures that have to be carried out for research to progress, or to support product manufacturing and production processes. In order to meet “productivity” goals, get enough work done to make progress, or provide a good ROI, we need labs to transition from manual to automated (partial or full) execution as much as possible. We also need to ensure and demonstrate process stability, so that lab work itself doesn’t create variability and unreliable results.''

===Different kinds of laboratory work===

There are different kinds of laboratory work. Some consist of a series of experiments or observations that may not be repeated, or are repeated with expected or unplanned variations. Other experiments may be repeated over and over either without variation or with controlled variations based on gained experience. It is that second set that concerns this writing, because repetition provides a basis and a reason for automation. | |||

Today we are used to automation in lab work, enacted in the form of computers and robotics ranging from mechanical arms to auto-samplers, auto-titration systems, and more. Some of the technology development we've discussed began many decades before, and there's utility in looking back that far to note how technologies have developed. However, while there are definitely forms of automation and robotics available today, they are not always interconnected, integrated, or compatible with each other; they were produced as products either without an underlying integration framework, or with one that was limited to a few cooperating vendors. This can be problematic. | |||

There are two major elements to repetitive laboratory methods: the underlying science and how the procedures are executed. At these methods’ core, however, is the underlying scientific method, be it a chemical, biological, or physical sequence. We automate processes, not things. We don’t, for example, automate an instrument, but rather the process of using it to accomplish something. If you are automating the use of a telescope to obtain the spectra of a series of stars, you build a control algorithm to look at each star in a list, have the instrument position itself properly, record the data using sensors, process it, and then move on to the next star on the list. (You would also build in error detection with messages like "there isn’t any star there," "cover on telescope," and "it isn’t dark yet," along with relevant correction routines.) The control algorithm is the automation, while the telescope and sensors are just tools being used.
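The telescope example can be written down as a short control loop. The Python sketch below is illustrative only: <code>SimulatedTelescope</code> stands in for real hardware, its method names are invented, and "processing" is reduced to normalizing the recorded counts. It also reports the errors rather than correcting them. The point is that the loop in <code>observe_stars</code> is the automation, while the telescope object is just a tool it uses.

```python
class SimulatedTelescope:
    """Stand-in for real hardware; every attribute and method here is hypothetical."""

    def __init__(self, sky, cover_on=False, is_dark=True):
        self.sky = sky              # star name -> raw spectrum (list of counts)
        self.cover_on = cover_on
        self.is_dark = is_dark
        self.target = None

    def check(self, star):
        """Return an error message, or None if the observation can proceed."""
        if self.cover_on:
            return "cover on telescope"
        if not self.is_dark:
            return "it isn't dark yet"
        if star not in self.sky:
            return "there isn't any star there"
        return None

    def point_at(self, star):
        self.target = star

    def record_spectrum(self):
        return list(self.sky[self.target])


def process(raw):
    """Normalize a raw spectrum to its peak value."""
    peak = max(raw)
    if peak == 0:
        return list(raw)
    return [count / peak for count in raw]


def observe_stars(star_list, telescope):
    """The control algorithm: check, position, record, process, move on."""
    results, problems = {}, []
    for star in star_list:
        error = telescope.check(star)
        if error:
            problems.append((star, error))  # a real system would try a correction routine
            continue
        telescope.point_at(star)
        results[star] = process(telescope.record_spectrum())
    return results, problems


sky = {"Vega": [2, 4, 8], "Sirius": [5, 10, 5]}
results, problems = observe_stars(["Vega", "Nowhere", "Sirius"], SimulatedTelescope(sky))
```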

When building a repetitive laboratory method, the method should be completely described and include aspects such as: | |||

*the underlying science | |||

*a complete list of equipment and materials when implemented as a manual process | |||

*possible interferences | |||

*critical facets of the method | |||

*variables that have to be controlled and their operating ranges (e.g., temperature, pH of solutions, etc.) | |||

*special characteristics of instrumentation | |||

*safety considerations | |||

*recommended sources of equipment and sources to be avoided | |||

*software considerations such as possible products, desirable characteristics, and things to be avoided | |||

*potentially dangerous, hazardous situations | |||

*an end-to-end walkthrough of the methods used | |||

Of course, the method has to be validated. You need to have complete confidence in its ability to produce the results necessary given the input into the process. At this point, the scientific aspects of the work are complete and finished. They may be revisited if problems arise during process automation, but no further changes should be entertained unless you are willing to absorb additional costs and alterations to the schedule. This is a serious matter; one of the leading causes of project failures is “scope creep,” which occurs when incremental changes are made to a process while it is under development. This results in the project becoming a moving target, with seemingly minor changes able to cause a major disruption in the project's design. | |||

===Scientific production=== | |||

At this point, we have a proven procedure that we need to execute repeatedly on a set of inputs (samples). We aren’t carrying out "experiments"; that word suggests that something may be changing in the nature of the procedure, and at this point it shouldn’t. Changes to the underlying process invite a host of problems and may forfeit any chance at a successful automation effort. | |||

However, somewhere in the (distant) future something in the process is likely to change. A new piece of technology may be introduced, equipment (including software) may need to be upgraded, or the timing of a step may need to be adjusted. That’s life. How that change is made is very important. Before it is implemented, the process has to be in place long enough to establish that it works reliably, that it produces useful results, and that there is a history of running the same reference sample(s) over and over again in the mix of samples to show that the process is under control with acceptable variability in results (i.e., statistical process control). In manufacturing and production language, this is referred to as "evolutionary operations" (EVOP). But we can pull it all together under the heading “scientific production.”{{Efn|In prior writings, the term “scientific manufacturing” was used. The problem with that term is that we’re not making products but instead producing results. Plus “manufacturing results” has some negative connotations.}} | |||

If your reaction to the previous paragraph is “this is a science lab not a commercial production operation,” you’re getting close to the point. This is a production operation (commercial or not, it depends on the type of lab, contract testing, and other factors) going on within a science lab. It’s just a matter of scale. | |||

===Demonstrating process stability: The standard sample program=== | |||

One of the characteristics of a successfully implemented stable process is consistency of the results with the same set of inputs. Basically, if everything is working properly, the same set of samples introduced at the beginning should yield the same results time after time, regardless of whether the implementation is manual or automated. A standard sample program (Std.SP) is a means of demonstrating the stability and operational integrity of a process; it can also tell you if a process is getting out of control. Introduced early in my career, the Std.SP was used to show the consistency of results between analysts in a lab, and to compare the performance of our lab and the quality control labs in the production facilities. The samples were submitted and marked like any similar sample and became part of the workflow. The lab managers maintained control charts of the lab's and each individual's performance on the samples. The combined results would show whether the lab and those working there had things under control or if problems were developing. Those problems might be with an individual, a change in equipment performance, or a change in incoming raw materials used in the analysis.

The Std.SP was the answer to the typical “how do you know you can trust these results?” question, which often came up when a research program or production line was having issues. This becomes more important when automation is used and the sampling and testing throughput increases. If an automated system is having issues, you are just producing bad data faster. The Std.SP is a high-level test of the system's performance. A deviation of the results trend line or a widening of the variability are indications that something is wrong, which should lead to a detailed evaluation of the system to account for the deviations. A troubleshooting guide should be part of the original method description (containing aspects such as “if something starts going wrong, here’s where to look for problems” or “the test method is particularly sensitive to …”, etc.) and notes made during the implementation process. | |||
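A control chart for a reference sample can be reduced to a small calculation. The Python sketch below assumes a set of baseline results collected while the process was known to be working, derives a center line and three-standard-deviation control limits from them, and flags later results that fall outside those limits. The numbers are made up for illustration, and the three-sigma rule is only one of several rules used in statistical process control; it will not, by itself, catch a slow drift or a gradual widening of variability.

```python
import statistics


def control_limits(baseline, sigmas=3.0):
    """Return (lower limit, center line, upper limit) from baseline results."""
    center = statistics.mean(baseline)
    spread = statistics.stdev(baseline)   # sample standard deviation
    return center - sigmas * spread, center, center + sigmas * spread


def out_of_control(results, lower, upper):
    """Return the positions of results that fall outside the control limits."""
    return [i for i, value in enumerate(results) if value < lower or value > upper]


# Illustrative reference-sample results; not real data.
baseline = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.1, 9.9]
lower, center, upper = control_limits(baseline)

new_results = [10.1, 9.9, 10.6, 10.0]
flagged = out_of_control(new_results, lower, upper)
```

Here the third new result (10.6) lies above the upper limit of roughly 10.39, so it would be flagged for the kind of detailed evaluation described above.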

Reference samples are another matter, however. They have to be stable over a long enough period of use to establish a meaningful trend. If the reference samples are stable over a long period of time, you may only need two or three so that the method can be evaluated over different ranges (you may have a higher variability at a lower range than a higher one). If the reference samples are not stable, then their useful lifetime needs to be established and older ones swapped out periodically. There should be some overlap between samples near the end of their useful life and the introduction of new ones so that laboratorians can differentiate between system variation and changes in reference samples. You may need to use more reference samples in these situations. | |||

This inevitably becomes a lot of work, and if your lab utilizes many procedures, it can be time-consuming to manage a Std.SP. But before you decide it is too much work, ask yourself how valuable it is to have a solid, documented answer to the “how do you know you can trust these results?” question. Having a validated process or method is a start, but that only holds at the start of a method’s implementation. If all of this is new to you, find someone who understands process engineering and statistical process control and learn more from them.

==Where is the future of lab automation going to take us?== | |||