Difference between revisions of "Main Page/Featured article of the week/2016"

Shawndouglas (talk | contribs) (Added last week's article of the week.) |

Shawndouglas (talk | contribs) m (Text replacement - "\[\[Cerner Corporation(.*)" to "[[Vendor:Cerner Corporation$1") |

||

| (35 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

{{ombox | {{ombox | ||

| type = notice | | type = notice | ||

| text = If you're looking for the | | text = If you're looking for other "Article of the Week" archives: [[Main Page/Featured article of the week/2014|2014]] - [[Main Page/Featured article of the week/2015|2015]] - 2016 - [[Main Page/Featured article of the week/2017|2017]] - [[Main Page/Featured article of the week/2018|2018]] - [[Main Page/Featured article of the week/2019|2019]] - [[Main Page/Featured article of the week/2020|2020]] - [[Main Page/Featured article of the week/2021|2021]] - [[Main Page/Featured article of the week/2022|2022]] - [[Main Page/Featured article of the week/2023|2023]] - [[Main Page/Featured article of the week/2024|2024]] | ||

}} | }} | ||

| Line 17: | Line 17: | ||

<!-- Below this line begin pasting previous news --> | <!-- Below this line begin pasting previous news --> | ||

<h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: June 27–July 3:</h2> | <h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: December 19–25:</h2> | ||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Mackert JournalOfMedIntRes2016 18-10.png|240px]]</div> | |||

'''"[[Journal:Health literacy and health information technology adoption: The potential for a new digital divide|Health literacy and health information technology adoption: The potential for a new digital divide]]"''' | |||

Approximately one-half of American adults exhibit low health literacy and thus struggle to find and use [[Health informatics|health information]]. Low health literacy is associated with negative outcomes, including overall poorer health. [[Health information technology]] (HIT) makes health information available directly to patients through electronic tools including [[patient portal]]s, wearable technology, and mobile apps. The direct availability of this [[information]] to patients, however, may be complicated by misunderstanding of HIT privacy and information sharing. The purpose of this study was to determine whether health literacy is associated with patients’ use of four types of HIT tools: fitness apps, nutrition apps, activity trackers, and patient portals. ('''[[Journal:Health literacy and health information technology adoption: The potential for a new digital divide|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: December 12–18:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig2 Lam BMCBioinformatics2016 17.gif|240px]]</div> | |||

'''"[[Journal:VennDiagramWeb: A web application for the generation of highly customizable Venn and Euler diagrams|VennDiagramWeb: A web application for the generation of highly customizable Venn and Euler diagrams]]"''' | |||

Visualization of data generated by high-throughput, high-dimensionality experiments is rapidly becoming a rate-limiting step in [[Computational informatics|computational biology]]. There is an ongoing need to quickly develop high-quality visualizations that can be easily customized or incorporated into automated pipelines. This often requires an interface for manual plot modification, rapid cycles of tweaking visualization parameters, and the generation of graphics code. To facilitate this process for the generation of highly-customizable, high-resolution Venn and Euler diagrams, we introduce ''VennDiagramWeb'': a web application for the widely used VennDiagram R package. ('''[[Journal:VennDiagramWeb: A web application for the generation of highly customizable Venn and Euler diagrams|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: December 5–11:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig2 Bekker JofCheminformatics2016 8-1.gif|240px]]</div> | |||

'''"[[Journal:Molmil: A molecular viewer for the PDB and beyond|Molmil: A molecular viewer for the PDB and beyond]]"''' | |||

Molecular viewers are a vital tool for our understanding of protein structures and functions. The shift from regular desktop platforms such as Windows, Mac OSX and Linux to mobile platforms such as iOS and Android in the last half-decade, however, prevents traditional online molecular viewers such as PDBj’s previously developed jV and the popular Jmol from running on these new platforms as these platforms do not support Java Applets. For mobile platforms a native application (i.e., an application specifically designed and optimized for each of these platforms) can be created and distributed via their respective application stores. However, with new platforms on the horizon, or already available, in addition to the already established desktop platforms, it would be a tedious and inefficient job to make a molecular viewer available on all platforms, current and future. ('''[[Journal:Molmil: A molecular viewer for the PDB and beyond|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: November 28–December 4:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Gates JofResearchNIST2015 120.jpg|240px]]</div> | |||

'''"[[Journal:Smart electronic laboratory notebooks for the NIST research environment|Smart electronic laboratory notebooks for the NIST research environment]]"''' | |||

[[Laboratory notebook]]s have been a staple of scientific research for centuries for organizing and documenting ideas and experiments. Modern [[Laboratory|laboratories]] are increasingly reliant on electronic data collection and analysis, so it seems inevitable that the digital revolution should come to the ordinary laboratory notebook. The most important aspect of this transition is to make the shift as comfortable and intuitive as possible, so that the creative process that is the hallmark of scientific investigation and engineering achievement is maintained, and ideally enhanced. The smart [[electronic laboratory notebook]]s described in this paper represent a paradigm shift from the old pen and paper style notebooks and provide a host of powerful operational and documentation capabilities in an intuitive format that is available anywhere at any time. ('''[[Journal:Smart electronic laboratory notebooks for the NIST research environment|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: November 21–27:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Goldberg mBio2015 6-6.jpg|240px]]</div> | |||

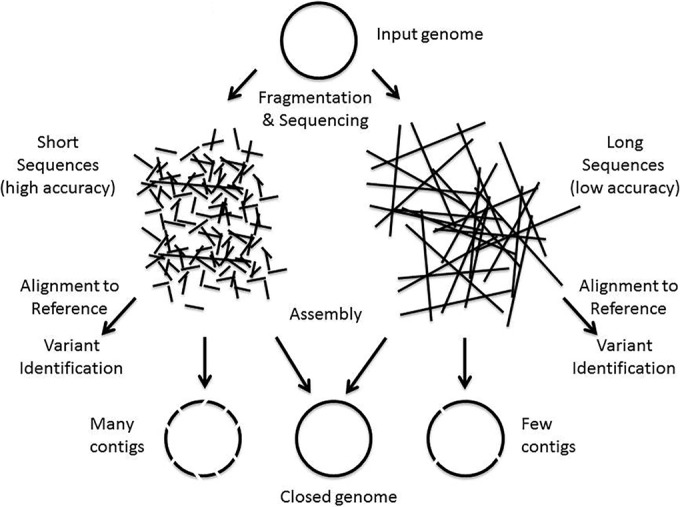

'''"[[Journal:Making the leap from research laboratory to clinic: Challenges and opportunities for next-generation sequencing in infectious disease diagnostics|Making the leap from research laboratory to clinic: Challenges and opportunities for next-generation sequencing in infectious disease diagnostics]]"''' | |||

Next-generation DNA sequencing (NGS) has progressed enormously over the past decade, transforming [[Genomics|genomic]] analysis and opening up many new opportunities for applications in clinical microbiology [[Laboratory|laboratories]]. The impact of NGS on microbiology has been revolutionary, with new microbial genomic sequences being generated daily, leading to the development of large databases of genomes and gene sequences. The ability to analyze microbial communities without culturing organisms has created the ever-growing field of metagenomics and microbiome analysis and has generated significant new insights into the relation between host and microbe. The medical literature contains many examples of how this new technology can be used for infectious disease diagnostics and pathogen analysis. The implementation of NGS in medical practice has been a slow process due to various challenges such as clinical trials, lack of applicable regulatory guidelines, and the adaptation of the technology to the clinical environment. In April 2015, the American Academy of Microbiology (AAM) convened a colloquium to begin to define these issues, and in this document, we present some of the concepts that were generated from these discussions. ('''[[Journal:Making the leap from research laboratory to clinic: Challenges and opportunities for next-generation sequencing in infectious disease diagnostics|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: November 14–20:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Aronson JournalOfPersMed2016 6-1.png|240px]]</div> | |||

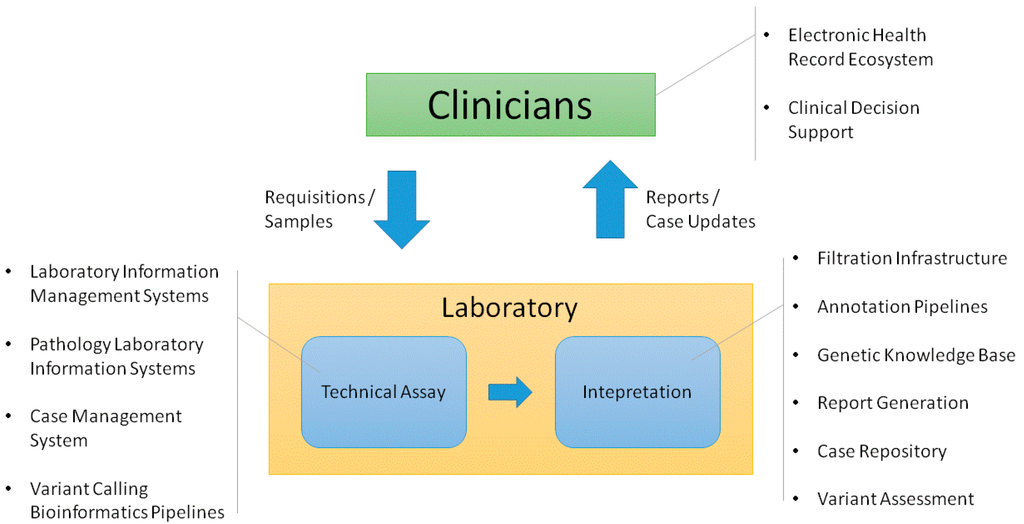

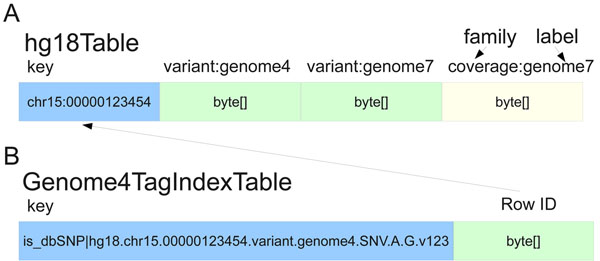

'''"[[Journal:Information technology support for clinical genetic testing within an academic medical center|Information technology support for clinical genetic testing within an academic medical center]]"''' | |||

Academic medical centers require many interconnected systems to fully support genetic testing processes. We provide an overview of the end-to-end support that has been established surrounding a genetic testing laboratory within our environment, including both [[laboratory]] and clinician-facing infrastructure. We explain key functions that we have found useful in the supporting systems. We also consider ways that this infrastructure could be enhanced to enable deeper assessment of genetic test results in both the laboratory and clinic. ('''[[Journal:Information technology support for clinical genetic testing within an academic medical center|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: November 7–13:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Hong Database2016 2016.jpg|240px]]</div> | |||

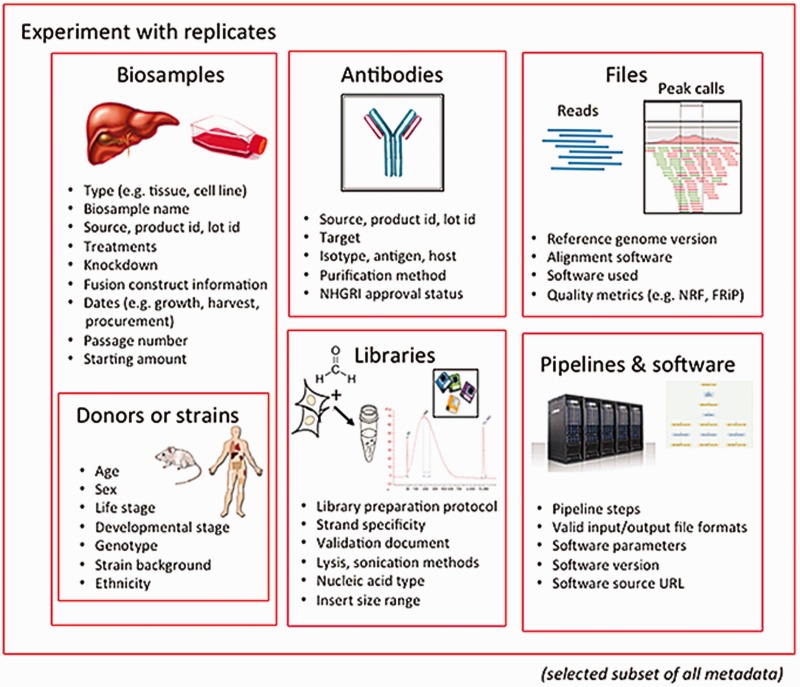

'''"[[Journal:Principles of metadata organization at the ENCODE data coordination center|Principles of metadata organization at the ENCODE data coordination center]]"''' | |||

The Encyclopedia of DNA Elements (ENCODE) Data Coordinating Center (DCC) is responsible for organizing, describing and providing access to the diverse data generated by the ENCODE project. The description of these data, known as metadata, includes the biological sample used as input, the protocols and assays performed on these samples, the data files generated from the results and the computational methods used to analyze the data. Here, we outline the principles and philosophy used to define the ENCODE metadata in order to create a metadata standard that can be applied to diverse assays and multiple genomic projects. In addition, we present how the data are validated and used by the ENCODE DCC in creating the ENCODE Portal. ('''[[Journal:Principles of metadata organization at the ENCODE data coordination center|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: October 31–November 6:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Bianchi FrontinGenetics2016 7.jpg|240px]]</div> | |||

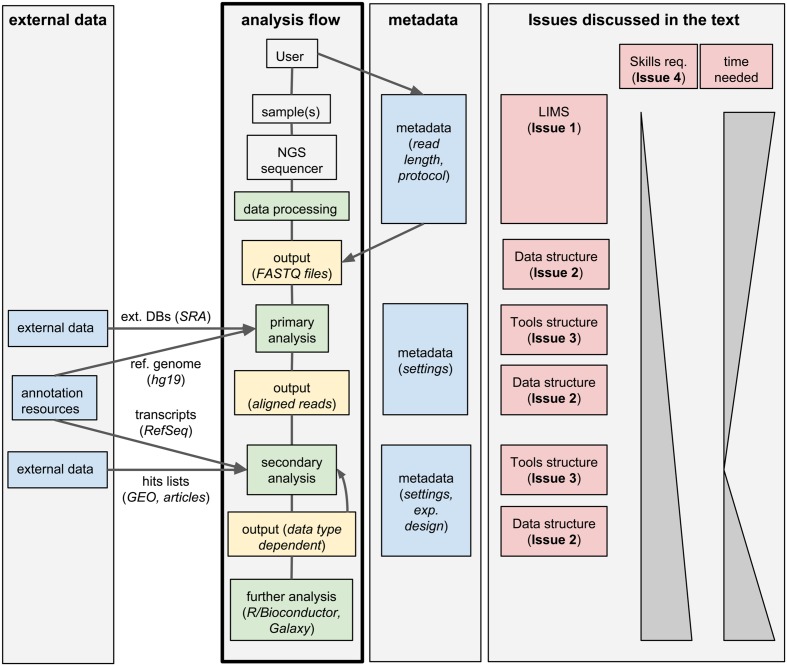

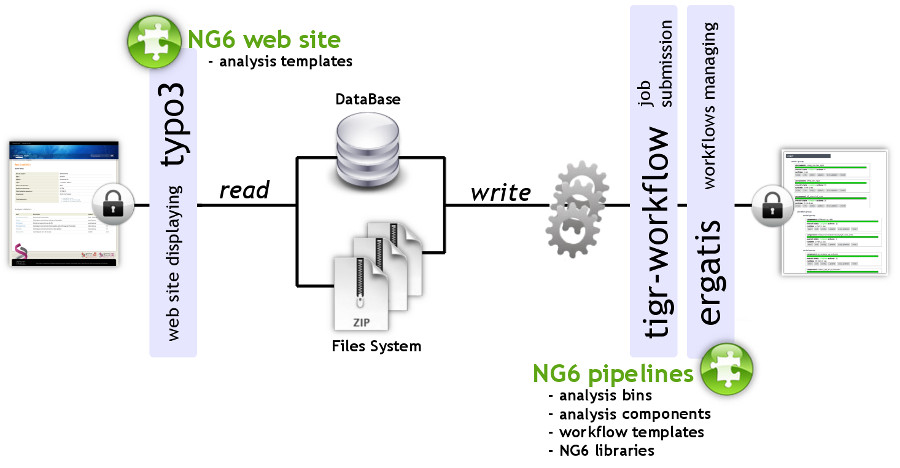

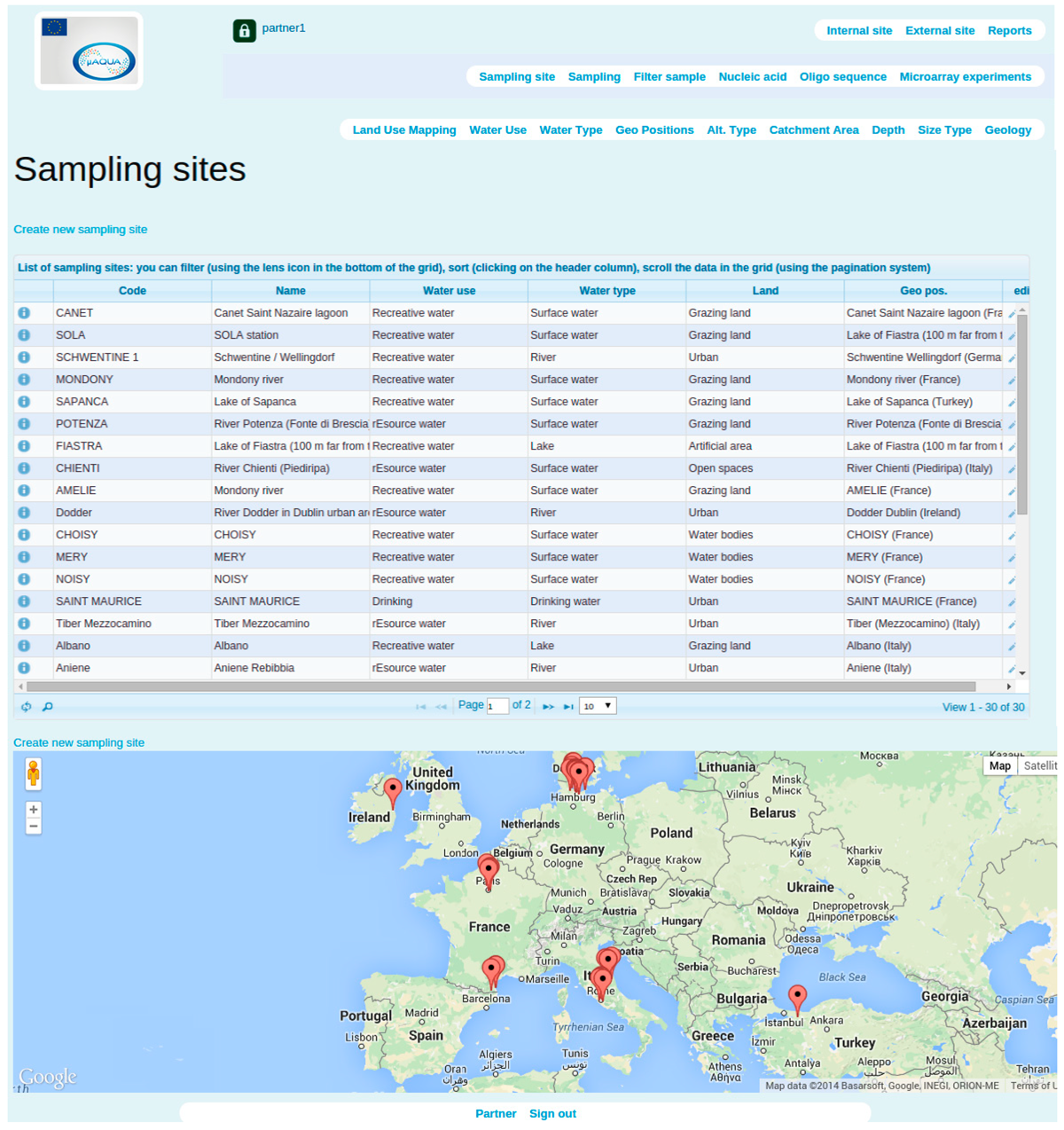

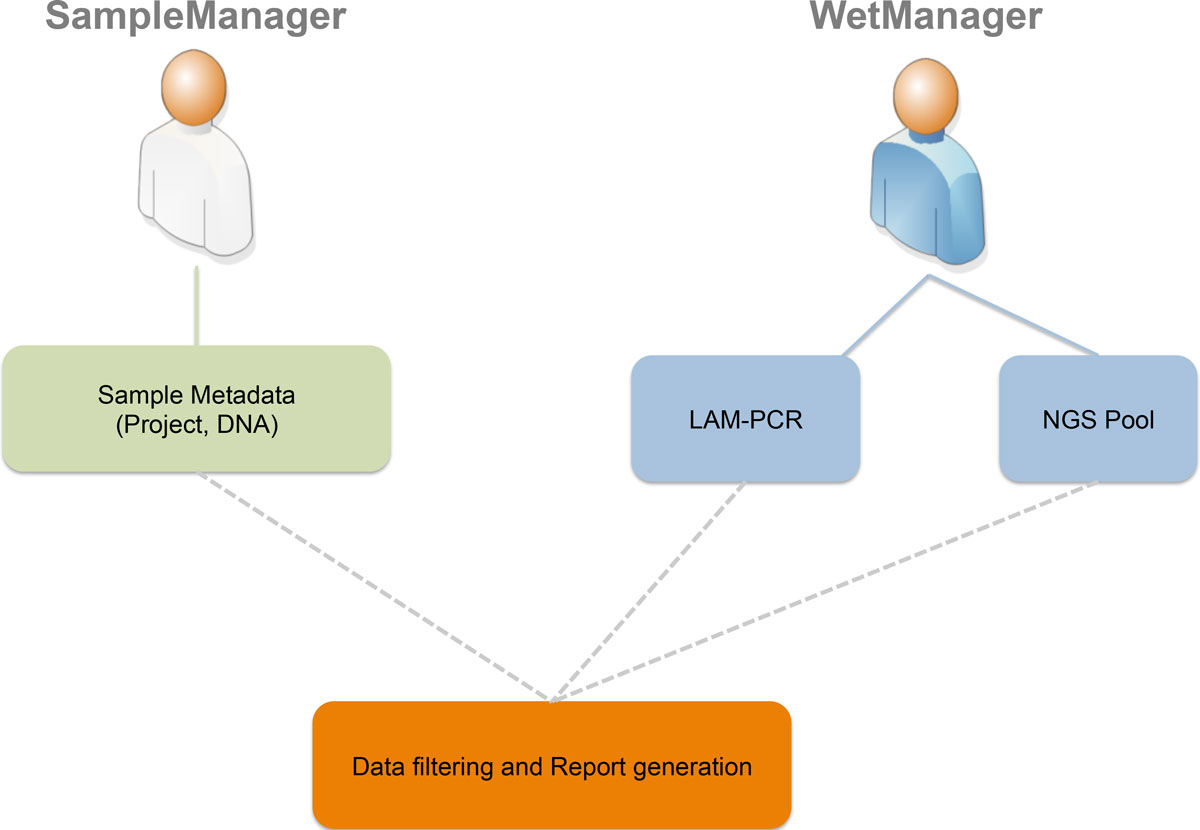

'''"[[Journal:Integrated systems for NGS data management and analysis: Open issues and available solutions|Integrated systems for NGS data management and analysis: Open issues and available solutions]]"''' | |||

Next-generation sequencing (NGS) technologies have deeply changed our understanding of cellular processes by delivering an astonishing amount of data at affordable prices; nowadays, many biology [[laboratory|laboratories]] have already accumulated a large number of sequenced samples. However, managing and analyzing these data poses new challenges, which may easily be underestimated by research groups devoid of IT and quantitative skills. In this perspective, we identify five issues that should be carefully addressed by research groups approaching NGS technologies. In particular, the five key issues to be considered concern: (1) adopting a [[laboratory information management system]] (LIMS) and safeguarding the resulting raw data structure in downstream analyses; (2) monitoring the flow of the data and standardizing input and output directories and file names, even when multiple analysis protocols are used on the same data; (3) ensuring complete traceability of the analysis performed; (4) enabling non-experienced users to run analyses through a graphical user interface (GUI) acting as a front-end for the pipelines; (5) relying on standard metadata to annotate the datasets, and when possible using controlled vocabularies, ideally derived from biomedical ontologies. ('''[[Journal:Integrated systems for NGS data management and analysis: Open issues and available solutions|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: October 24–30:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

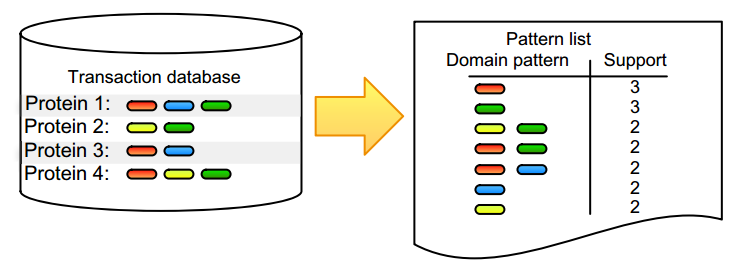

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Naulaerts BioAndBioInsights2016 10.png|240px]]</div> | |||

'''"[[Journal:Practical approaches for mining frequent patterns in molecular datasets|Practical approaches for mining frequent patterns in molecular datasets]]"''' | |||

Pattern detection is an inherent task in the analysis and interpretation of complex and continuously accumulating biological data. Numerous [[wikipedia:Sequential pattern mining|itemset mining]] algorithms have been developed in the last decade to efficiently detect specific pattern classes in data. Although many of these have proven their value for addressing bioinformatics problems, several factors still keep promising algorithms from gaining popularity in the life science community. Many of these issues stem from the low user-friendliness of these tools and the complexity of their output, which is often large, static, and consequently hard to interpret. Here, we apply three software implementations on common [[bioinformatics]] problems and illustrate some of the advantages and disadvantages of each, as well as inherent pitfalls of biological data mining. Frequent itemset mining exists in many different flavors, and users should choose their software based on their research question, programming proficiency, and the added value of extra features. ('''[[Journal:Practical approaches for mining frequent patterns in molecular datasets|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: October 17–23:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

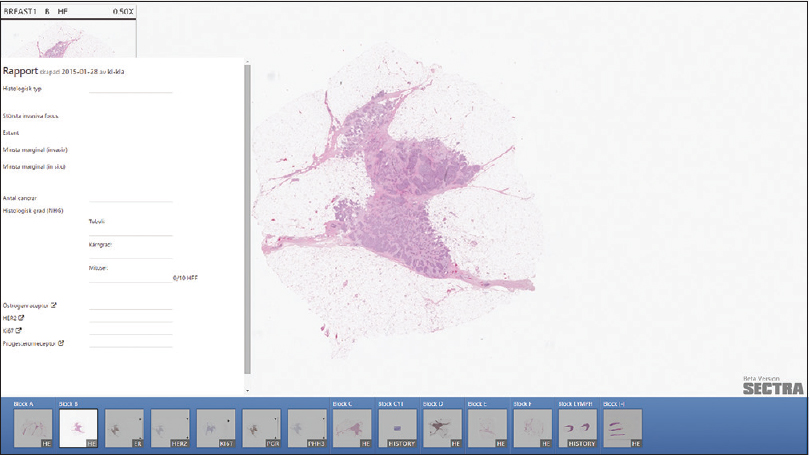

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Cervin JofPathInfo2016 7.jpg|240px]]</div> | |||

'''"[[Journal:Improving the creation and reporting of structured findings during digital pathology review|Improving the creation and reporting of structured findings during digital pathology review]]"''' | |||

Today, pathology reporting consists of many separate tasks, carried out by multiple people. Common tasks include dictation during case review, transcription, verification of the transcription, report distribution, and reporting the key findings to follow-up registries. Introduction of digital workstations makes it possible to remove some of these tasks and simplify others. This study describes the work presented at the Nordic Symposium on Digital Pathology 2015, in Linköping, Sweden. | |||

We explored the possibility of having a digital tool that simplifies image review by assisting note-taking, and with minimal extra effort, populates a structured report. Thus, our prototype sees reporting as an activity interleaved with image review rather than a separate final step. We created an interface to collect, sort, and display findings for the most common reporting needs, such as tumor size, grading, and scoring. ('''[[Journal:Improving the creation and reporting of structured findings during digital pathology review|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: October 10–16:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

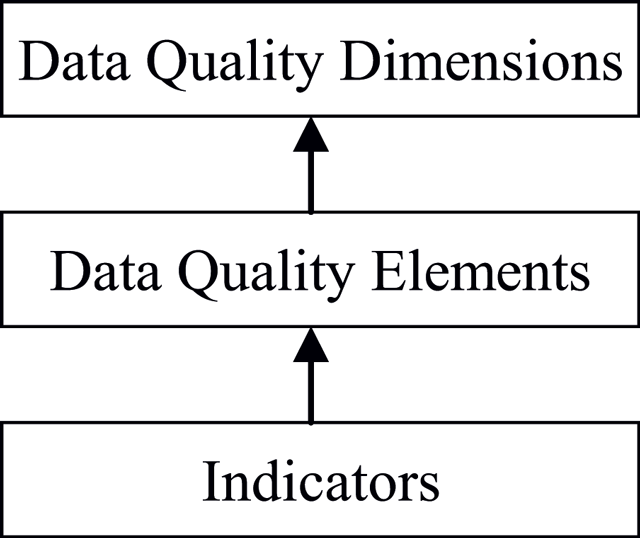

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Cai DataScienceJournal2015 14.png|240px]]</div> | |||

'''"[[Journal:The challenges of data quality and data quality assessment in the big data era|The challenges of data quality and data quality assessment in the big data era]]"''' | |||

High-quality data are the precondition for analyzing and using big data and for guaranteeing the value of the data. Currently, comprehensive analysis and research of quality standards and quality assessment methods for big data are lacking. First, this paper summarizes reviews of data quality research. Second, this paper analyzes the data characteristics of the big data environment, presents quality challenges faced by big data, and formulates a hierarchical data quality framework from the perspective of data users. This framework consists of big data quality dimensions, quality characteristics, and quality indexes. Finally, on the basis of this framework, this paper constructs a dynamic assessment process for data quality. This process has good expansibility and adaptability and can meet the needs of big data quality assessment. The research results enrich the theoretical scope of big data and lay a solid foundation for the future by establishing an assessment model and studying evaluation algorithms. ('''[[Journal:The challenges of data quality and data quality assessment in the big data era|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: October 3–9:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

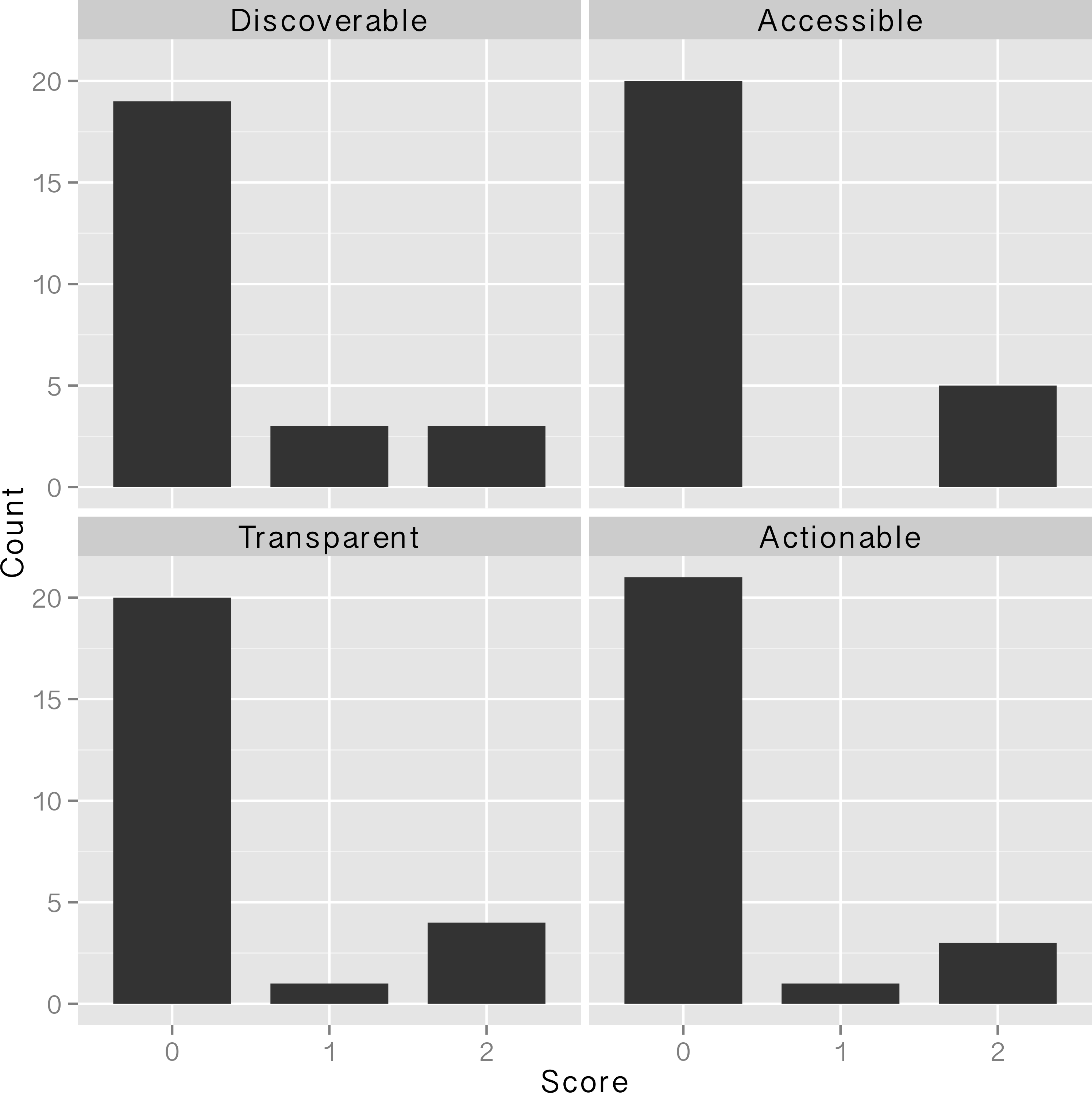

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Journal.pone.0147942.g002.PNG|240px]]</div> | |||

'''"[[Journal:Water, water, everywhere: Defining and assessing data sharing in academia|Water, water, everywhere: Defining and assessing data sharing in academia]]"''' | |||

Sharing of research data has begun to gain traction in many areas of the sciences in the past few years because of changing expectations from the scientific community, funding agencies, and academic journals. National Science Foundation (NSF) requirements for a data management plan (DMP) went into effect in 2011, with the intent of facilitating the dissemination and sharing of research results. Many projects that were funded during 2011 and 2012 should now have implemented the elements of the data management plans required for their grant proposals. In this paper we define "data sharing" and present a protocol for assessing whether data have been shared and how effective the sharing was. We then evaluate the data sharing practices of researchers funded by the NSF at Oregon State University in two ways: by attempting to discover project-level research data using the associated DMP as a starting point, and by examining data sharing associated with journal articles that acknowledge NSF support. Sharing at both the project level and the journal article level was not carried out in the majority of cases, and when sharing was accomplished, the shared data were often of questionable usability due to access, documentation, and formatting issues. We close the article by offering recommendations for how data producers, journal publishers, data repositories, and funding agencies can facilitate the process of sharing data in a meaningful way. ('''[[Journal:Water, water, everywhere: Defining and assessing data sharing in academia|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: September 26–October 2:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig2 Maier MammalianGenome2015 26-9.gif|240px]]</div> | |||

'''"[[Journal:Principles and application of LIMS in mouse clinics|Principles and application of LIMS in mouse clinics]]"''' | |||

Large-scale systemic mouse phenotyping, as performed by mouse clinics for more than a decade, requires thousands of mice from a multitude of different mutant lines to be bred, individually tracked and subjected to phenotyping procedures according to a standardised schedule. All these efforts are typically organised in overlapping projects, running in parallel. In terms of logistics, data capture, [[data analysis]], result visualisation and reporting, new challenges have emerged from such projects. These challenges could hardly be met with traditional methods such as pen and paper colony management, spreadsheet-based data management and manual data analysis. Hence, different [[laboratory information management system]]s (LIMS) have been developed in mouse clinics to facilitate or even enable mouse and data management in the described order of magnitude. This review shows that general principles of LIMS can be empirically deduced from LIMS used by different mouse clinics, although these have evolved differently. Supported by LIMS descriptions and lessons learned from seven mouse clinics, this review also shows that the unique LIMS environment in a particular facility strongly influences strategic LIMS decisions and LIMS development. As a major conclusion, this review states that there is no universal LIMS for the mouse research domain that fits all requirements. Still, empirically deduced general LIMS principles can serve as a master decision support template, which is provided as a hands-on tool for mouse research facilities looking for a LIMS. ('''[[Journal:Principles and application of LIMS in mouse clinics|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: September 19–25:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Hussain AppliedCompInfo2016.jpg|240px]]</div> | |||

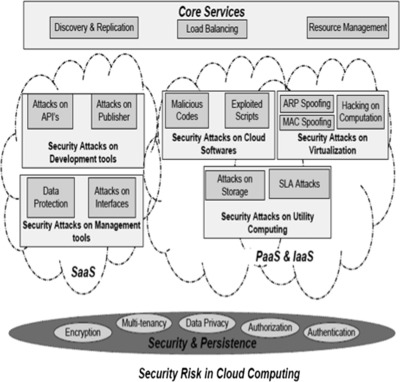

'''"[[Journal:Multilevel classification of security concerns in cloud computing|Multilevel classification of security concerns in cloud computing]]"''' | |||

Threats jeopardize some basic security requirements in a cloud. These threats generally constitute privacy breach, data leakage and unauthorized data access at different cloud layers. This paper presents a novel multilevel classification model of different security attacks across different cloud services at each layer. It also identifies attack types and risk levels associated with different cloud services at these layers. The risks are ranked as low, medium and high. The intensity of these risk levels depends upon the position of cloud layers. The attacks get more severe for lower layers where infrastructure and platform are involved. The intensity of these risk levels is also associated with security requirements of data encryption, multi-tenancy, data privacy, authentication and authorization for different cloud services. The multilevel classification model leads to the provision of dynamic security contract for each cloud layer that dynamically decides about security requirements for cloud consumer and provider. ('''[[Journal:Multilevel classification of security concerns in cloud computing|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: September 12–18:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Norton JofeScienceLibrarianship2016 5 1.png|240px]]</div> | |||

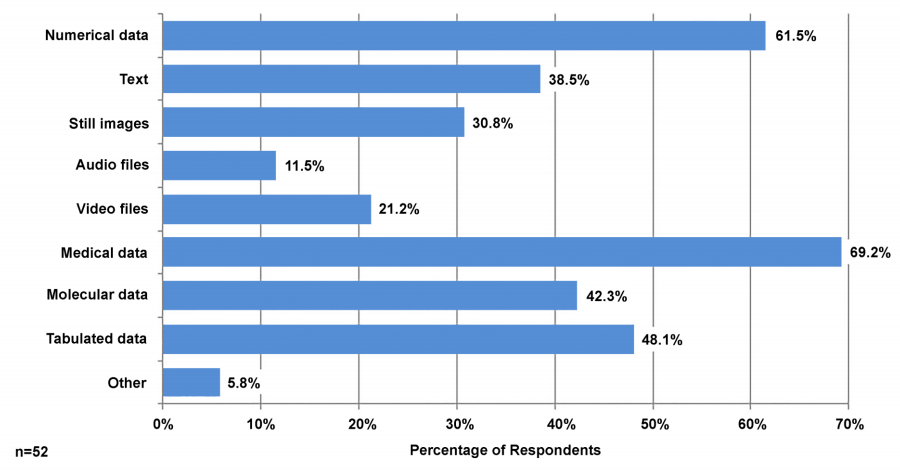

'''"[[Journal:Assessment of and response to data needs of clinical and translational science researchers and beyond|Assessment of and response to data needs of clinical and translational science researchers and beyond]]"''' | |||

As universities and libraries grapple with data management and “big data,” the need for data management solutions across disciplines is particularly relevant in clinical and [[Translational research|translational science research]], which is designed to traverse disciplinary and institutional boundaries. At the University of Florida Health Science Center Library, a team of librarians undertook an assessment of the research data management needs of clinical and translational science (CTS) researchers, including an online assessment and follow-up one-on-one interviews. | |||

The 20-question online assessment was distributed to all investigators affiliated with UF’s Clinical and Translational Science Institute (CTSI) and 59 investigators responded. Follow-up in-depth interviews were conducted with nine faculty and staff members. | |||

Results indicate that UF’s CTS researchers have diverse data management needs that are often specific to their discipline or current research project and span the data lifecycle. A common theme in responses was the need for consistent data management training, particularly for graduate students; this led to localized training within the Health Science Center and CTSI, as well as campus-wide training. ('''[[Journal:Assessment of and response to data needs of clinical and translational science researchers and beyond|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: September 5–11:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Hatakeyama BMCBioinformatics2016 17.gif|240px]]</div> | |||

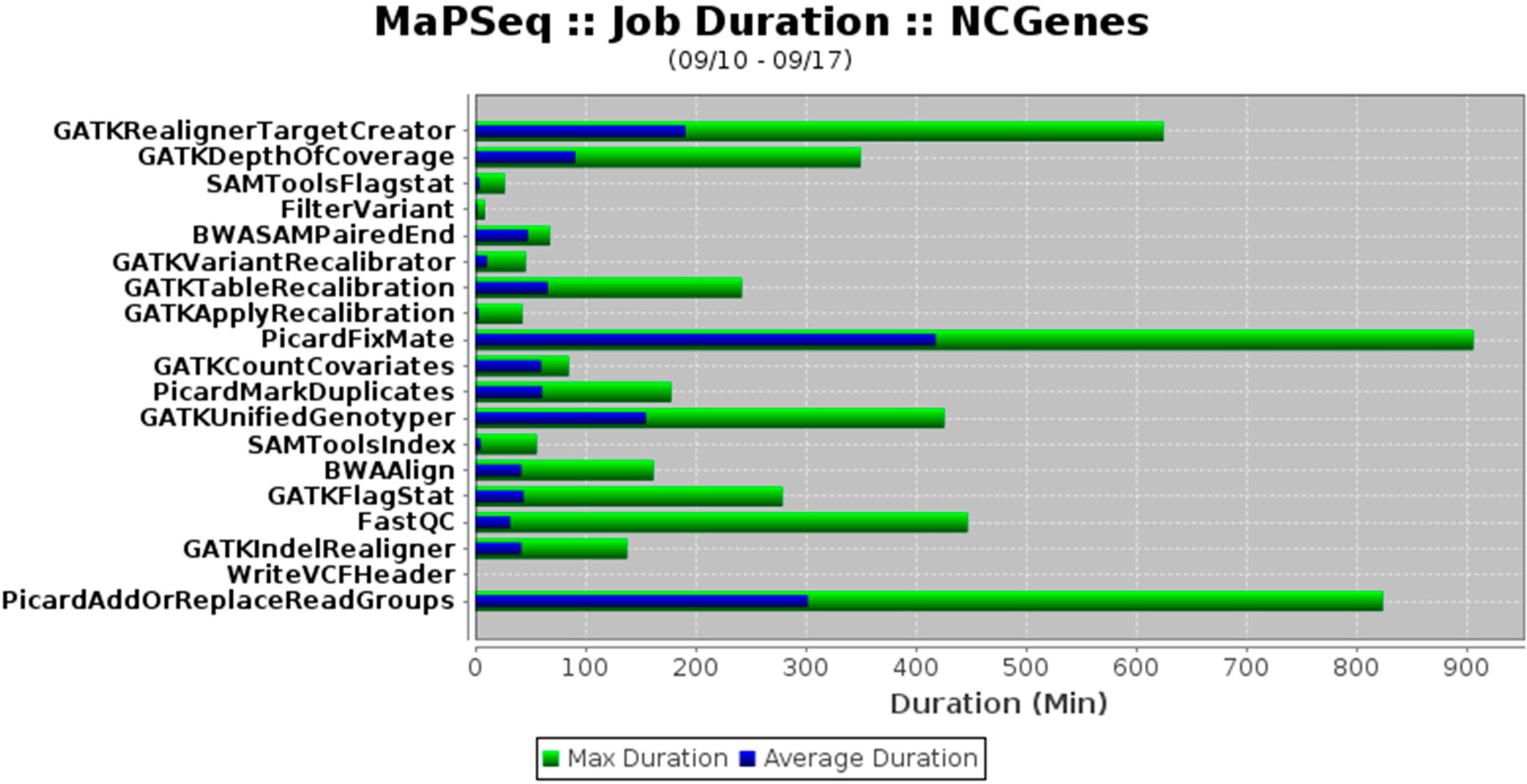

'''"[[Journal:SUSHI: An exquisite recipe for fully documented, reproducible and reusable NGS data analysis|SUSHI: An exquisite recipe for fully documented, reproducible and reusable NGS data analysis]]"''' | |||

Next generation sequencing (NGS) produces massive datasets consisting of billions of reads and up to thousands of samples. Subsequent [[Bioinformatics|bioinformatic]] analysis is typically done with the help of open-source tools, where each application performs a single step towards the final result. This situation leaves the bioinformaticians with the tasks of combining the tools, managing the data files and meta-information, documenting the analysis, and ensuring reproducibility. | |||

We present SUSHI, an agile data analysis framework that relieves bioinformaticians from the administrative challenges of their data analysis. SUSHI lets users build reproducible data analysis workflows from individual applications and manages the input data, the parameters, meta-information with user-driven semantics, and the job scripts. As distinguishing features, SUSHI provides an expert command line interface as well as a convenient web interface to run bioinformatics tools. SUSHI datasets are self-contained and self-documented on the file system. This makes them fully reproducible and ready to be shared. With the associated meta-information being formatted as plain text tables, the datasets can be readily further analyzed and interpreted outside SUSHI. ('''[[Journal:SUSHI: An exquisite recipe for fully documented, reproducible and reusable NGS data analysis|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: August 29–September 4:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig14 Baker BiodiversityDataJournal2014 2.JPG|240px]]</div> | |||

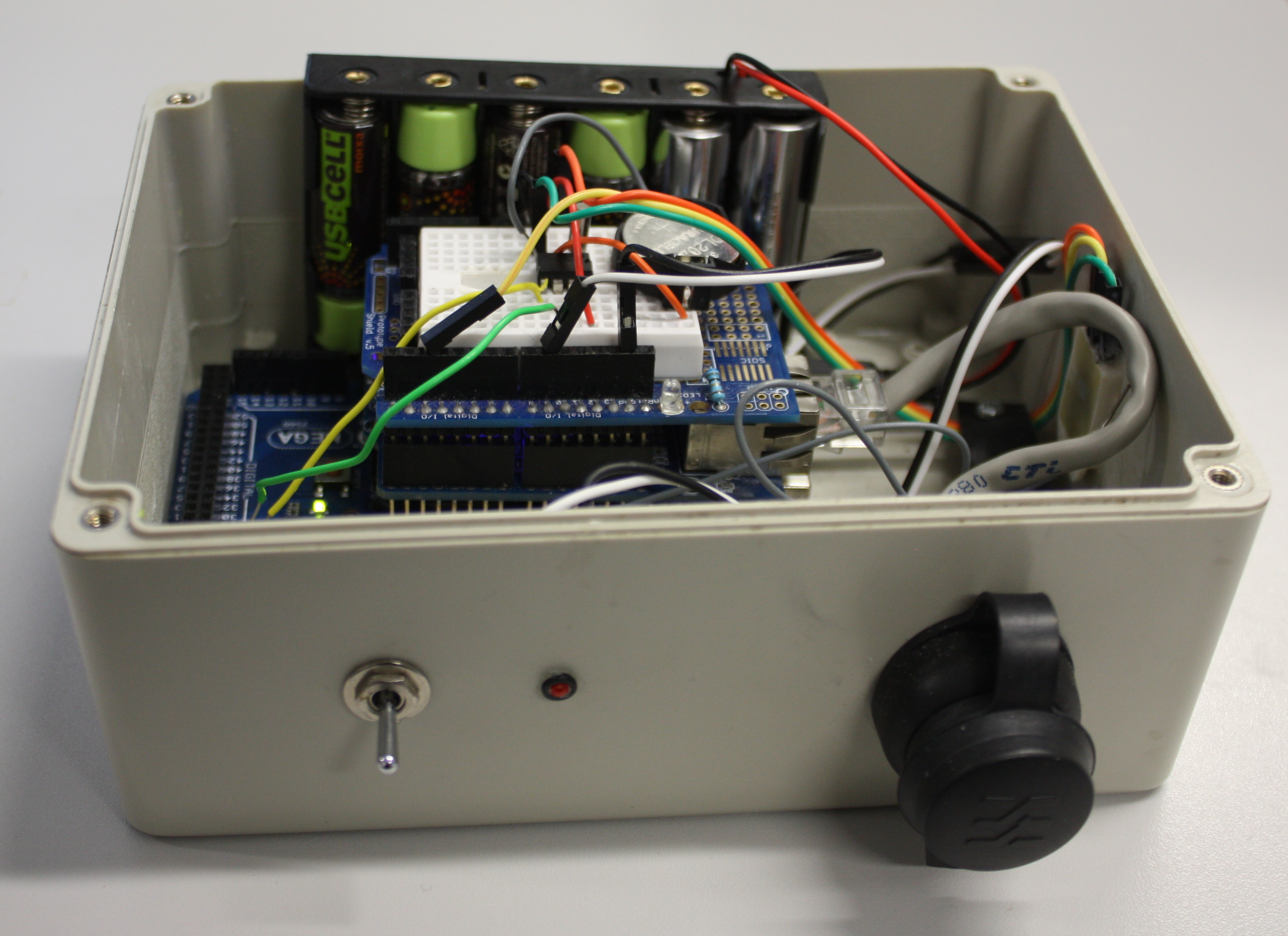

'''"[[Journal:Open source data logger for low-cost environmental monitoring|Open source data logger for low-cost environmental monitoring]]"''' | |||

The increasing transformation of biodiversity into a data-intensive science has seen numerous independent systems linked and aggregated into the current landscape of [[biodiversity informatics]]. This paper outlines how we can move forward with this program, incorporating real-time environmental monitoring into our methodology using low-power and low-cost computing platforms. | |||

Low-power and cheap computational projects such as Arduino and Raspberry Pi have brought the use of small computers and micro-controllers to the masses, and their use in fields related to biodiversity science is increasing (e.g., Hirafuji shows the use of Arduino in agriculture). There is a large amount of potential in using automated tools for monitoring environments and identifying species based on these emerging hardware platforms, but to be truly useful we must integrate the data they generate with our existing systems. This paper describes the construction of an open-source environmental data logger based on the Arduino platform and its integration with the web content management system [[Drupal]], which is used as the basis for Scratchpads among other biodiversity tools. ('''[[Journal:Open source data logger for low-cost environmental monitoring|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: August 22–28:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig3 Eivazzadeh JMIRMedInformatics2016 4-2.png|240px]]</div> | |||

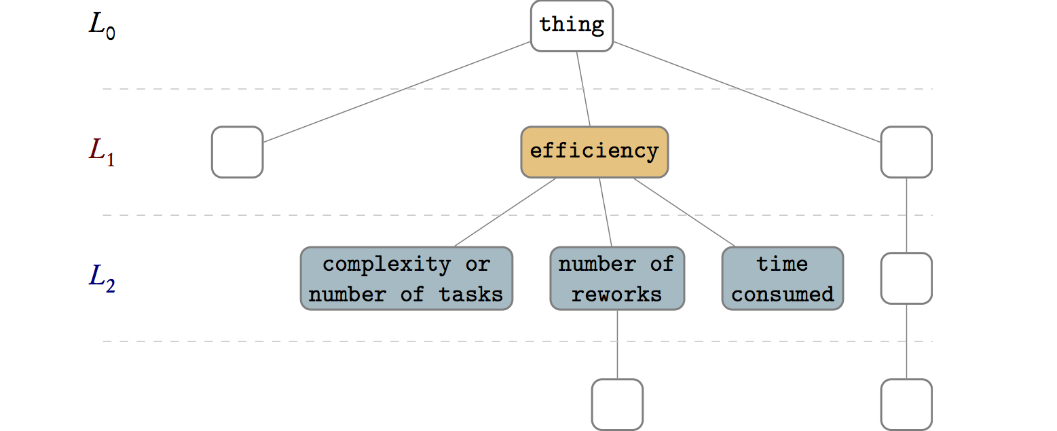

'''"[[Journal:Evaluating health information systems using ontologies|Evaluating health information systems using ontologies]]"''' | |||

There are several frameworks that attempt to address the challenges of evaluation of [[Health information technology|health information systems]] by offering models, methods, and guidelines about what to evaluate, how to evaluate, and how to report the evaluation results. Model-based evaluation frameworks usually suggest universally applicable evaluation aspects but do not consider case-specific aspects. On the other hand, evaluation frameworks that are case-specific, by eliciting user requirements, limit their output to the evaluation aspects suggested by the users in the early phases of system development. In addition, these case-specific approaches extract different sets of evaluation aspects from each case, making it challenging to collectively compare, unify, or aggregate the evaluation of a set of heterogeneous health information systems. | |||

The aim of this paper is to find a method capable of suggesting evaluation aspects for a set of one or more health information systems — whether similar or heterogeneous — by organizing, unifying, and aggregating the quality attributes extracted from those systems and from an external evaluation framework. ('''[[Journal:Evaluating health information systems using ontologies|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: August 15–21:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Garza BMCBioinformatics2016 17.gif|240px]]</div> | |||

'''"[[Journal:From the desktop to the grid: Scalable bioinformatics via workflow conversion|From the desktop to the grid: Scalable bioinformatics via workflow conversion]]"''' | |||

Reproducibility is one of the tenets of the [[scientific method]]. Scientific experiments often comprise complex data flows, selection of adequate parameters, and analysis and visualization of intermediate and end results. Breaking down the complexity of such experiments into the joint collaboration of small, repeatable, well defined tasks, each with well defined inputs, parameters, and outputs, offers the immediate benefit of identifying bottlenecks and pinpointing sections which could benefit from parallelization, among others. [[Workflow]]s rest upon the notion of splitting complex work into the joint effort of several manageable tasks. | |||

There are several engines that give users the ability to design and execute workflows. Each engine was created to address certain problems of a specific community, therefore each one has its advantages and shortcomings. Furthermore, not all features of all workflow engines are royalty-free — an aspect that could potentially drive away members of the scientific community. ('''[[Journal:From the desktop to the grid: Scalable bioinformatics via workflow conversion|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: August 8–14:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig0.5 Alperin JofCheminformatics2016 8.gif|240px]]</div> | |||

'''"[[Journal:Terminology spectrum analysis of natural-language chemical documents: Term-like phrases retrieval routine|Terminology spectrum analysis of natural-language chemical documents: Term-like phrases retrieval routine]]"''' | |||

This study seeks to develop, test and assess a methodology for automatic extraction of a complete set of ‘term-like phrases’ and to create a terminology spectrum from a collection of natural language PDF documents in the field of chemistry. The definition of ‘term-like phrases’ is one or more consecutive words and/or alphanumeric string combinations with unchanged spelling which convey specific scientific meanings. A terminology spectrum for a natural language document is an indexed list of tagged entities including: recognized general scientific concepts, terms linked to existing thesauri, names of chemical substances/reactions and term-like phrases. The retrieval routine is based on n-gram textual analysis with a sequential execution of various ‘accept and reject’ rules that take into account morphological and structural [[information]]. | |||

The assessment of the retrieval process, expressed quantitatively with a precision (P), recall (R) and F1-measure, which are calculated manually from a limited set of documents (the full set of text abstracts belonging to five EuropaCat events were processed) by professional chemical scientists, has proved the effectiveness of the developed approach. ('''[[Journal:Terminology spectrum analysis of natural-language chemical documents: Term-like phrases retrieval routine|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: August 1–7:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:ALegalFrame1.png|240px]]</div> | |||

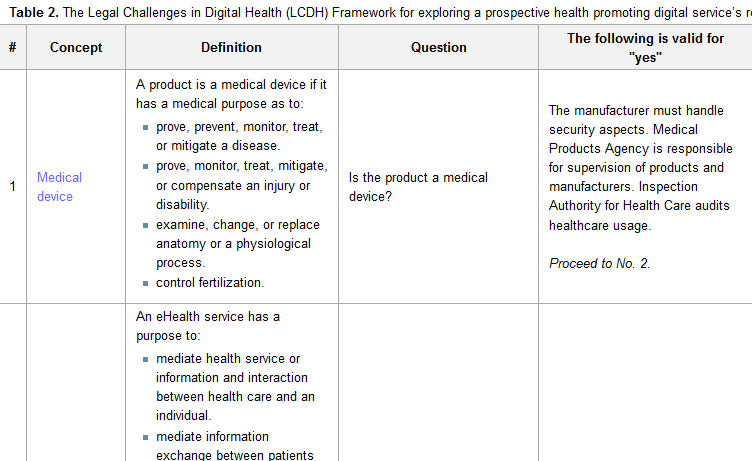

'''"[[Journal:A legal framework to support development and assessment of digital health services|A legal framework to support development and assessment of digital health services]]"''' | |||

Digital health services empower people to track, manage, and improve their own health and quality of life while delivering a more personalized and precise health care, at a lower cost and with higher efficiency and availability. Essential for the use of digital health services is that the treatment of any personal data is compatible with the Patient Data Act, Personal Data Act, and other applicable privacy laws. | |||

The aim of this study was to develop a framework for legal challenges to support designers in development and assessment of digital health services. A purposive sampling, together with snowball recruitment, was used to identify stakeholders and information sources for organizing, extending, and prioritizing the different concepts, actors, and regulations in relation to digital health and health-promoting digital systems. The data were collected through structured interviewing and iteration, and three different cases were used for face validation of the framework. A framework for assessing the legal challenges in developing digital health services (Legal Challenges in Digital Health [LCDH] Framework) was created and consists of six key questions to be used to evaluate a digital health service according to current legislation. ('''[[Journal:A legal framework to support development and assessment of digital health services|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: July 25–31:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Ashish FrontInNeuroinformatics2016 9.jpg|240px]]</div> | |||

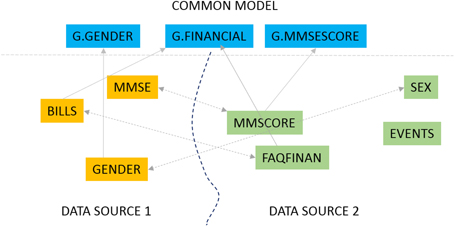

'''"[[Journal:The GAAIN Entity Mapper: An active-learning system for medical data mapping|The GAAIN Entity Mapper: An active-learning system for medical data mapping]]"''' | |||

This work is focused on mapping biomedical datasets to a common representation, as an integral part of data harmonization for integrated biomedical data access and sharing. We present GEM, an intelligent software assistant for automated data mapping across different datasets or from a dataset to a common data model. The GEM system automates data mapping by providing precise suggestions for data element mappings. It leverages the detailed metadata about elements in associated dataset documentation such as data dictionaries that are typically available with biomedical datasets. It employs unsupervised text mining techniques to determine similarity between data elements and also employs machine-learning classifiers to identify element matches. It further provides an active-learning capability where the process of training the GEM system is optimized. Our experimental evaluations show that the GEM system provides highly accurate data mappings (over 90 percent accuracy) for real datasets of thousands of data elements each, in the Alzheimer's disease research domain. ('''[[Journal:The GAAIN Entity Mapper: An active-learning system for medical data mapping|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: July 18–24:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Easton OJofPubHlthInfo2015 7-3.jpg|240px]]</div> | |||

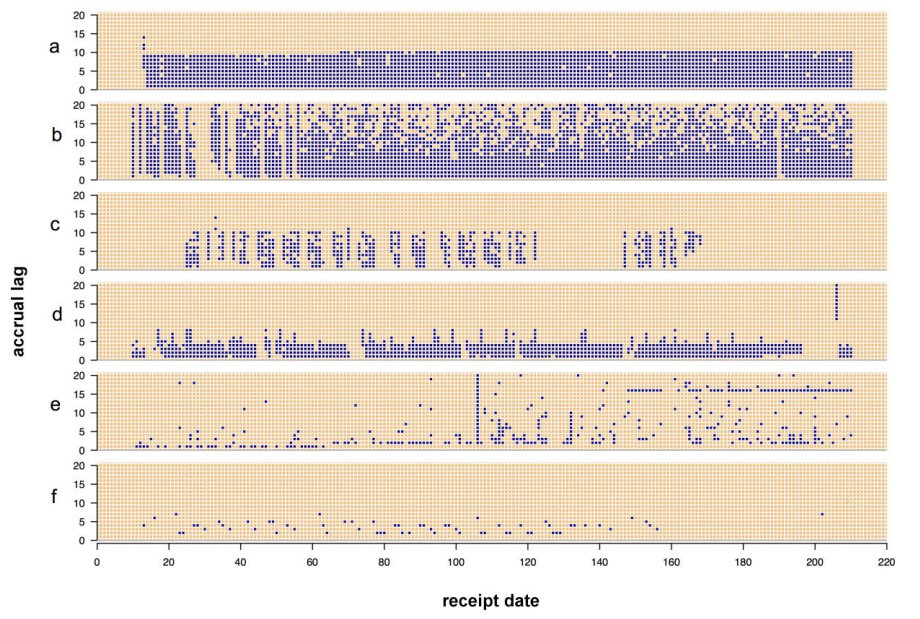

'''"[[Journal:Visualizing the quality of partially accruing data for use in decision making|Visualizing the quality of partially accruing data for use in decision making]]"''' | |||

Secondary use of clinical health data for near real-time [[Public health laboratory|public health surveillance]] presents challenges surrounding its utility due to data quality issues. Data used for real-time surveillance must be timely, accurate and complete if it is to be useful; if incomplete data are used for surveillance, understanding the structure of the incompleteness is necessary. Such data are commonly aggregated due to privacy concerns. The Distribute project was a near real-time influenza-like-illness (ILI) surveillance system that relied on aggregated secondary clinical health data. The goal of this work is to disseminate the data quality tools developed to gain insight into the data quality problems associated with these data. These tools apply in general to any system where aggregate data are accrued over time and were created through the end-user-as-developer paradigm. Each tool was developed during the exploratory analysis to gain insight into structural aspects of data quality. ('''[[Journal:Visualizing the quality of partially accruing data for use in decision making|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: July 11–17:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Guo JofPathInformatics2016 7.jpg|240px]]</div> | |||

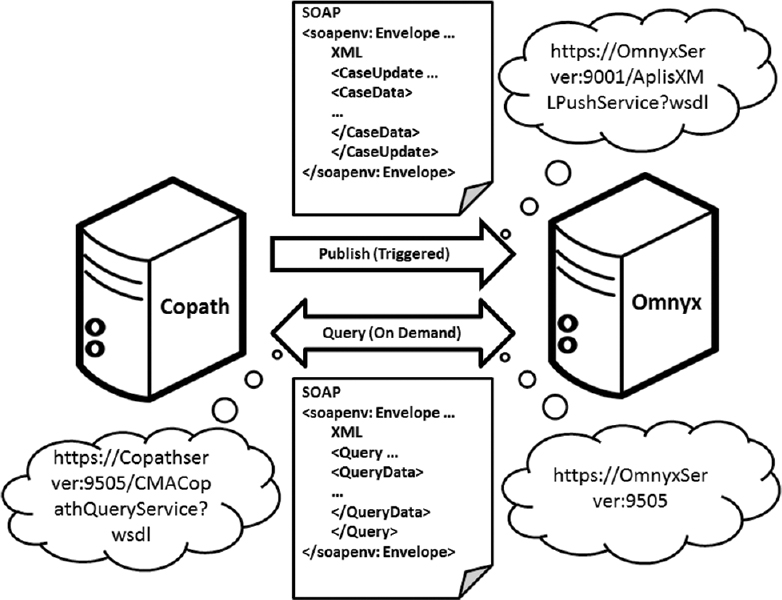

'''"[[Journal:Digital pathology and anatomic pathology laboratory information system integration to support digital pathology sign-out|Digital pathology and anatomic pathology laboratory information system integration to support digital pathology sign-out]]"''' | |||

The adoption of digital pathology offers benefits over labor-intensive, time-consuming, and error-prone manual processes. However, because most workflow and [[laboratory]] transactions are centered around the anatomical pathology laboratory information system (APLIS), adoption of digital pathology ideally requires integration with the APLIS. A digital pathology system (DPS) integrated with the APLIS was recently implemented at our institution for diagnostic use. We demonstrate how such integration supports digital workflow to sign-out anatomical pathology cases. | |||

Workflow begins when pathology cases get accessioned into the APLIS ([[Vendor:Cerner Corporation|CoPathPlus]]). Glass slides from these cases are then digitized (Omnyx VL120 scanner) and automatically uploaded into the DPS (Omnyx; Integrated Digital Pathology (IDP) software v.1.3). The APLIS transmits case data to the DPS via a publishing web service. The DPS associates scanned images with the correct case using barcode labels on slides and information received from the APLIS. When pathologists remotely open a case in the DPS, additional information (e.g. gross pathology details, prior cases) gets retrieved from the APLIS through a query web service. ('''[[Journal:Digital pathology and anatomic pathology laboratory information system integration to support digital pathology sign-out|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: July 4–10:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | |||

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Reisman EBioinformatics2016 12.jpg|240px]]</div> | |||

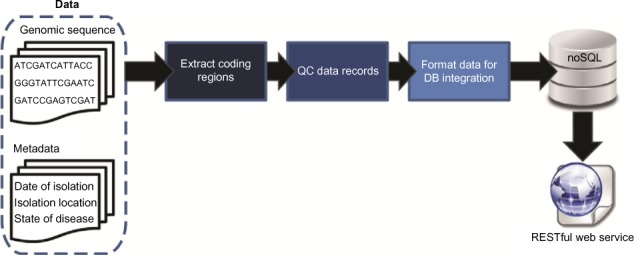

'''"[[Journal:A polyglot approach to bioinformatics data integration: A phylogenetic analysis of HIV-1|A polyglot approach to bioinformatics data integration: A phylogenetic analysis of HIV-1]]"''' | |||

As [[sequencing]] technologies continue to drop in price and increase in throughput, new challenges emerge for the management and accessibility of genomic sequence data. We have developed a pipeline for facilitating the storage, retrieval, and subsequent analysis of molecular data, integrating both sequence and metadata. Taking a polyglot approach involving multiple languages, libraries, and persistence mechanisms, sequence data can be aggregated from publicly available and local repositories. Data are exposed in the form of a RESTful web service, formatted for easy querying, and retrieved for downstream analyses. As a proof of concept, we have developed a resource for annotated HIV-1 sequences. Phylogenetic analyses were conducted for >6,000 HIV-1 sequences revealing spatial and temporal factors influence the evolution of the individual genes uniquely. Nevertheless, signatures of origin can be extrapolated even despite increased globalization. The approach developed here can easily be customized for any species of interest. ('''[[Journal:A polyglot approach to bioinformatics data integration: A phylogenetic analysis of HIV-1|Full article...]]''')<br /> | |||

</div> | |||

|- | |||

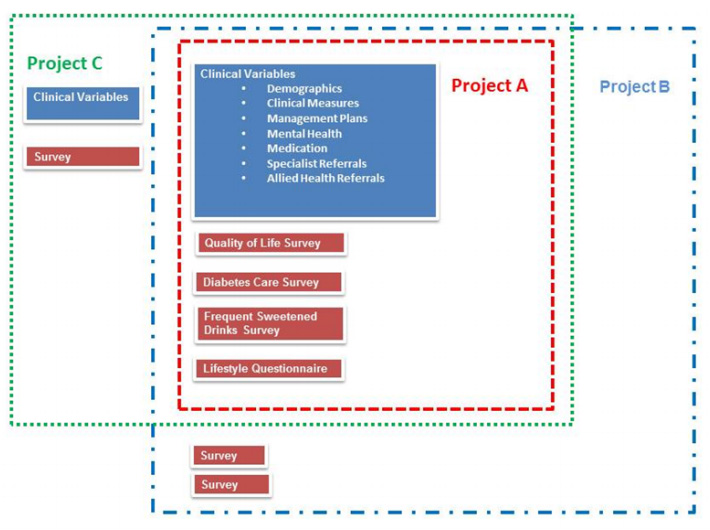

|<br /><h2 style="font-size:105%; font-weight:bold; text-align:left; color:#000; padding:0.2em 0.4em; width:50%;">Featured article of the week: June 27–July 3:</h2> | |||

<div style="padding:0.4em 1em 0.3em 1em;"> | <div style="padding:0.4em 1em 0.3em 1em;"> | ||

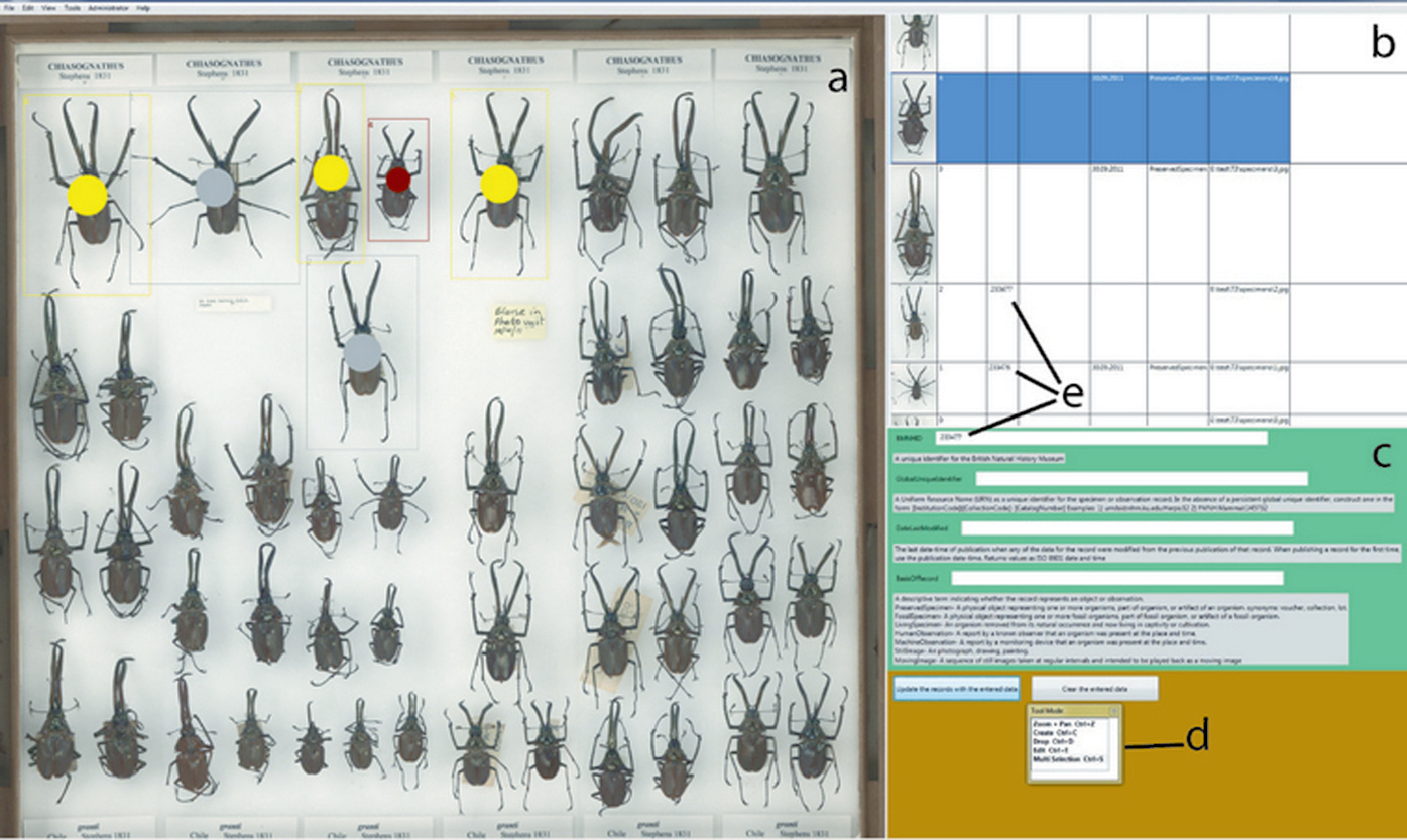

<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig4 Rodriguez BMCBioinformatics2016 17.gif|240px]]</div> | <div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig4 Rodriguez BMCBioinformatics2016 17.gif|240px]]</div> | ||

| Line 61: | Line 281: | ||

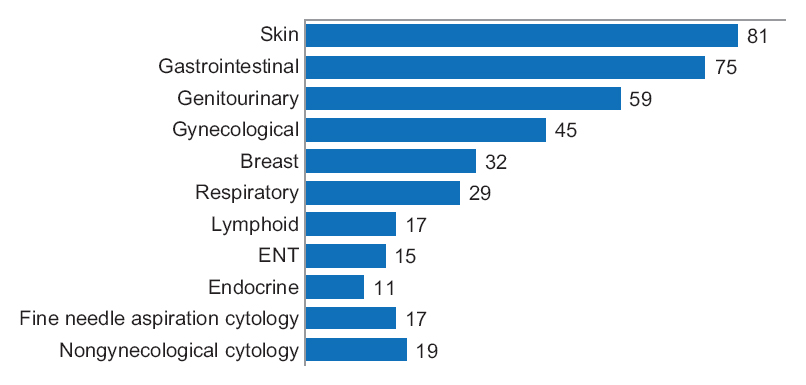

One senior staff pathologist reported 400 consecutive cases in histology, nongynecological, and fine needle aspiration cytology (20 sessions, 20 cases/session), over 4 weeks. Complex, difficult, and rare cases were excluded from the study to reduce the bias. A primary diagnosis was digital, followed by traditional microscopy, six months later, with only request forms available for both. Microscopic slides were scanned at ×20, digital images accessed through the fully integrated [[laboratory information management system]] (LIMS) and viewed in the image viewer on double 23” displays. ('''[[Journal:Diagnostic time in digital pathology: A comparative study on 400 cases|Full article...]]''')<br /> | One senior staff pathologist reported 400 consecutive cases in histology, nongynecological, and fine needle aspiration cytology (20 sessions, 20 cases/session), over 4 weeks. Complex, difficult, and rare cases were excluded from the study to reduce the bias. A primary diagnosis was digital, followed by traditional microscopy, six months later, with only request forms available for both. Microscopic slides were scanned at ×20, digital images accessed through the fully integrated [[laboratory information management system]] (LIMS) and viewed in the image viewer on double 23” displays. ('''[[Journal:Diagnostic time in digital pathology: A comparative study on 400 cases|Full article...]]''')<br /> | ||

</div> | </div> | ||

|- | |- | ||

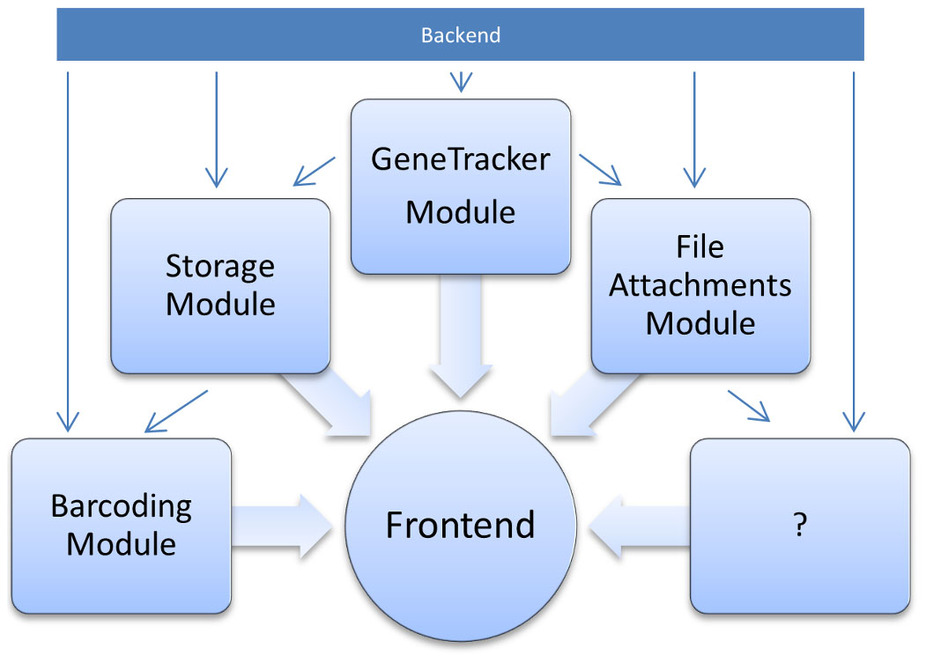

| Line 236: | Line 448: | ||

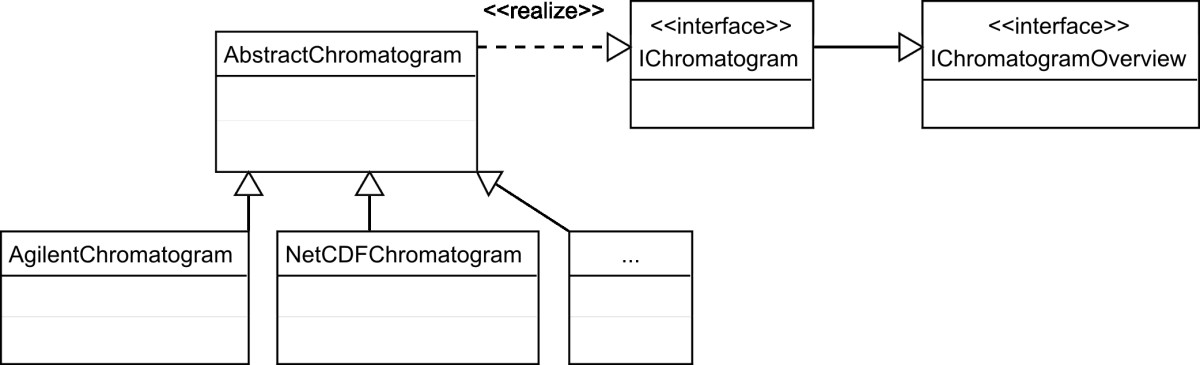

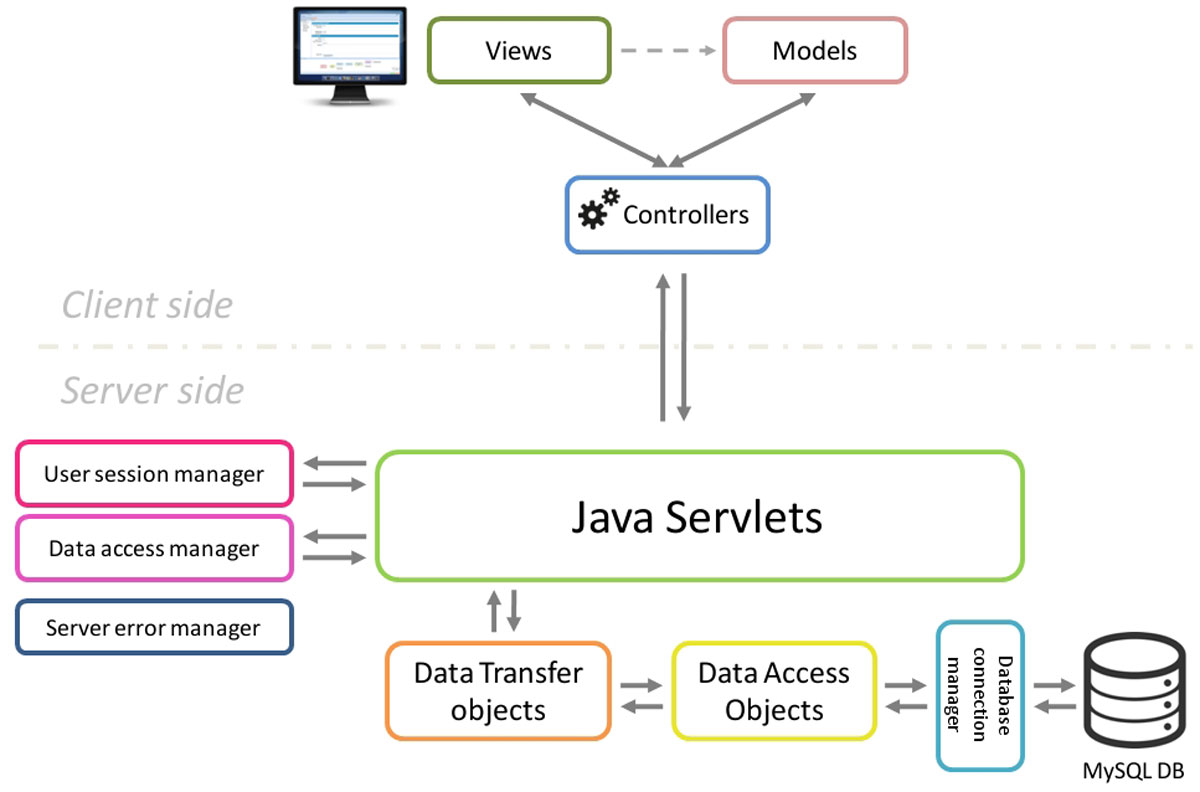

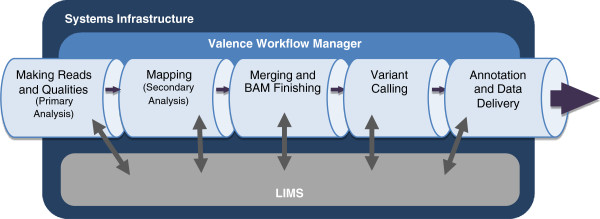

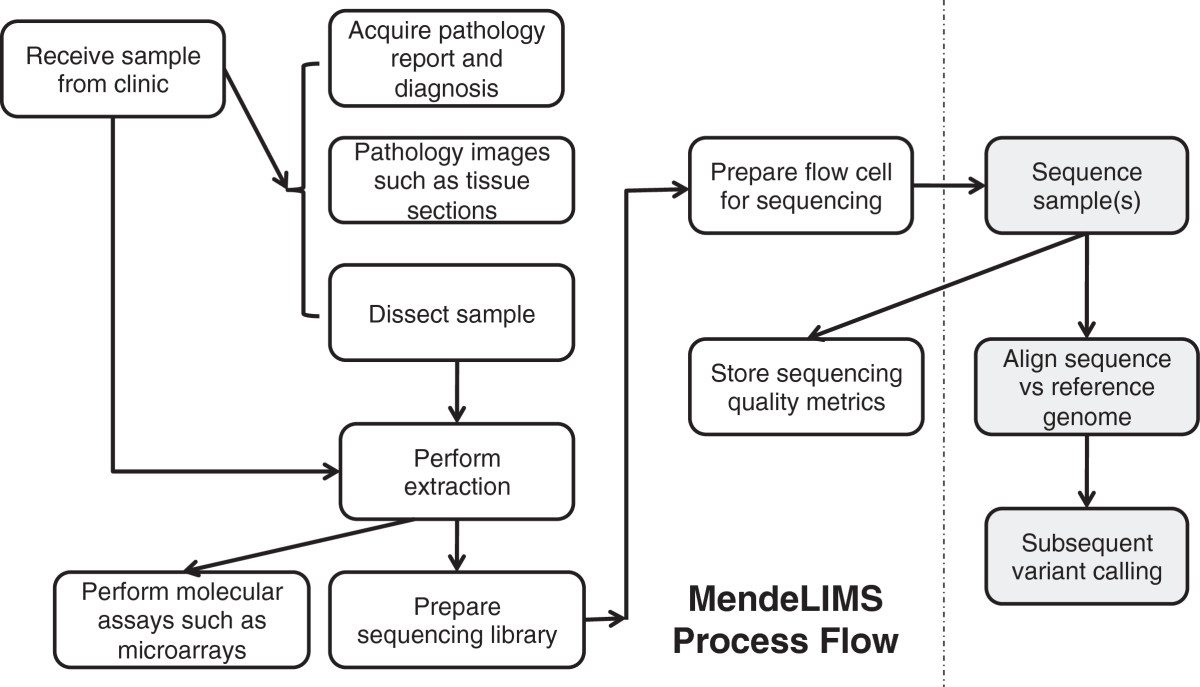

Large clinical genomics studies using next generation DNA sequencing require the ability to select and track samples from a large population of patients through many experimental steps. With the number of clinical genome sequencing studies increasing, it is critical to maintain adequate [[laboratory information management system]]s to manage the thousands of patient samples that are subject to this type of genetic analysis. | Large clinical genomics studies using next generation DNA sequencing require the ability to select and track samples from a large population of patients through many experimental steps. With the number of clinical genome sequencing studies increasing, it is critical to maintain adequate [[laboratory information management system]]s to manage the thousands of patient samples that are subject to this type of genetic analysis. | ||

To meet the needs of clinical population studies using genome sequencing, we developed a web-based laboratory information management system (LIMS) with a flexible configuration that is adaptable to continuously evolving experimental protocols of next generation DNA sequencing technologies. Our system is referred to as [[Stanford University School of Medicine#MendeLIMS|MendeLIMS]], is easily implemented with open source tools and is also highly configurable and extensible. MendeLIMS has been invaluable in the management of our clinical genome sequencing studies. ('''[[Journal:MendeLIMS: A web-based laboratory information management system for clinical genome sequencing|Full article...]]''')<br /> | To meet the needs of clinical population studies using genome sequencing, we developed a web-based laboratory information management system (LIMS) with a flexible configuration that is adaptable to continuously evolving experimental protocols of next generation DNA sequencing technologies. Our system is referred to as [[Vendor:Stanford University School of Medicine#MendeLIMS|MendeLIMS]], is easily implemented with open source tools and is also highly configurable and extensible. MendeLIMS has been invaluable in the management of our clinical genome sequencing studies. ('''[[Journal:MendeLIMS: A web-based laboratory information management system for clinical genome sequencing|Full article...]]''')<br /> | ||

</div> | </div> | ||

|- | |- | ||

Latest revision as of 22:16, 4 April 2024

If you're looking for other "Article of the Week" archives: 2014 - 2015 - 2016 - 2017 - 2018 - 2019 - 2020 - 2021 - 2022 - 2023 - 2024 |

Featured article of the week archive - 2016

Welcome to the LIMSwiki 2016 archive for the Featured Article of the Week.

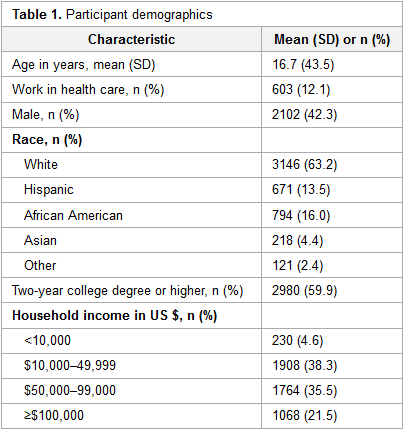

Featured article of the week: December 19–25:

"Health literacy and health information technology adoption: The potential for a new digital divide"

Approximately one-half of American adults exhibit low health literacy and thus struggle to find and use health information. Low health literacy is associated with negative outcomes, including overall poorer health. Health information technology (HIT) makes health information available directly to patients through electronic tools including patient portals, wearable technology, and mobile apps. The direct availability of this information to patients, however, may be complicated by misunderstanding of HIT privacy and information sharing. The purpose of this study was to determine whether health literacy is associated with patients’ use of four types of HIT tools: fitness and nutrition apps, activity trackers, and patient portals. (Full article...)

|