Featured article of the week: July 24–30:

"Understanding cybersecurity frameworks and information security standards: A review and comprehensive overview"

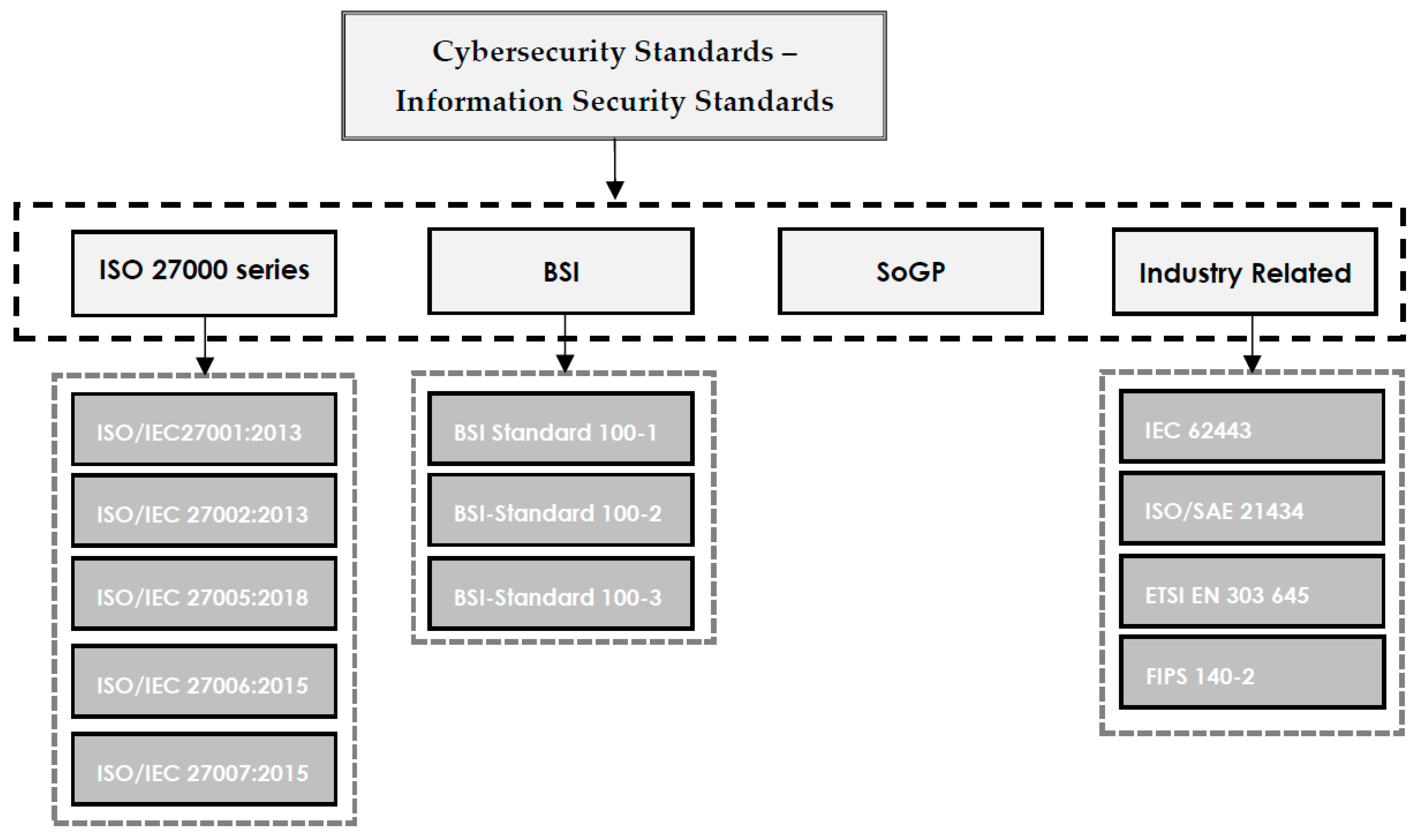

Businesses are reliant on data to survive in the competitive market, and data is constantly in danger of loss or theft. Loss of valuable data leads to negative consequences for both individuals and organizations. Cybersecurity is the process of protecting sensitive data from damage or theft. To successfully achieve the objectives of implementing cybersecurity at different levels, a range of procedures and standards should be followed. Cybersecurity standards determine the requirements that an organization should follow to achieve cybersecurity objectives and minimize the impact of cybercrimes. Cybersecurity standards demonstrate whether an information management system can meet security requirements through a range of best practices and procedures. A range of standards has been established by various organizations to be employed in information management systems of different sizes and types ... (Full article...)

Featured article of the week: July 17–23:

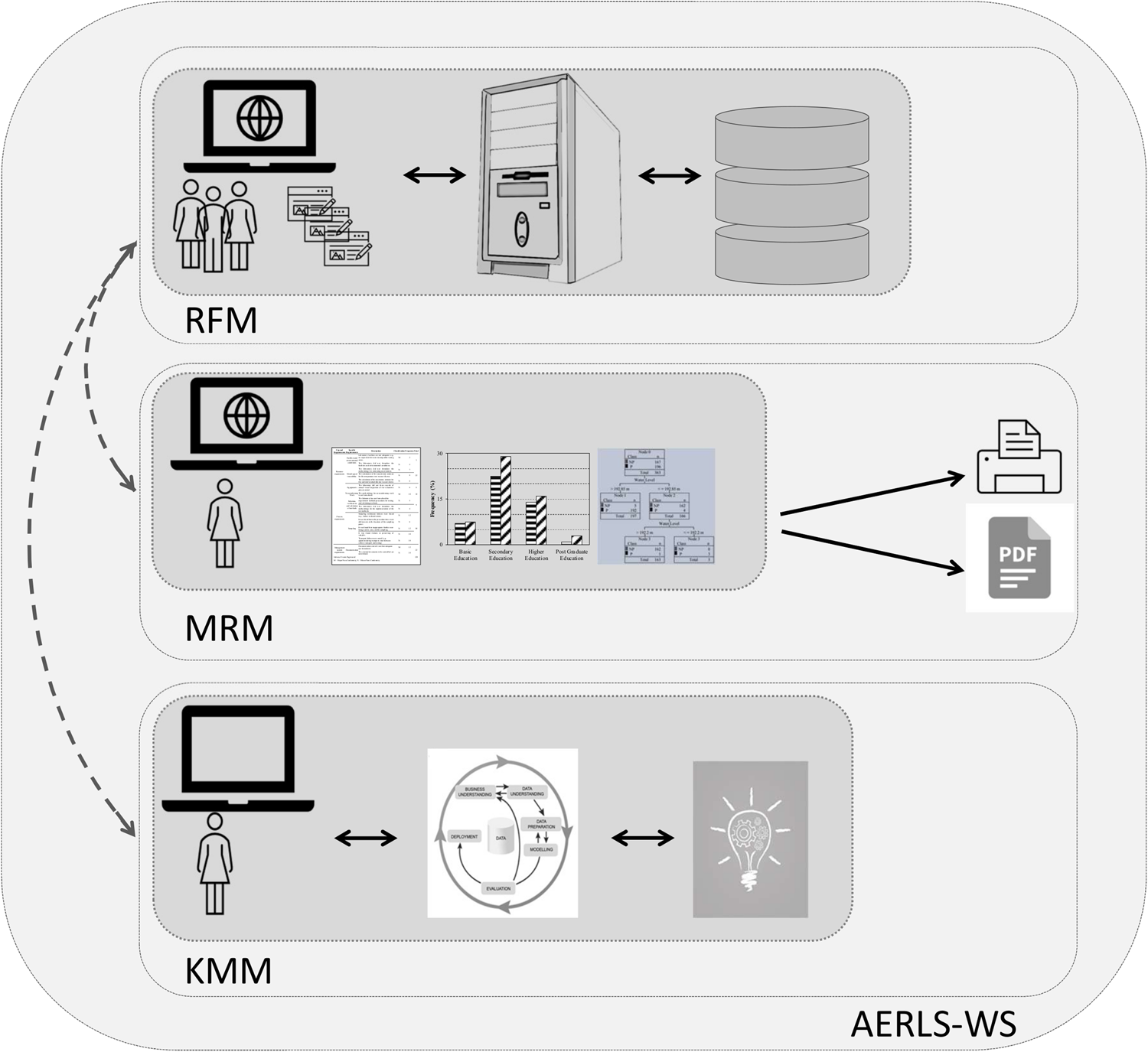

"Bridging data management platforms and visualization tools to enable ad-hoc and smart analytics in life sciences"

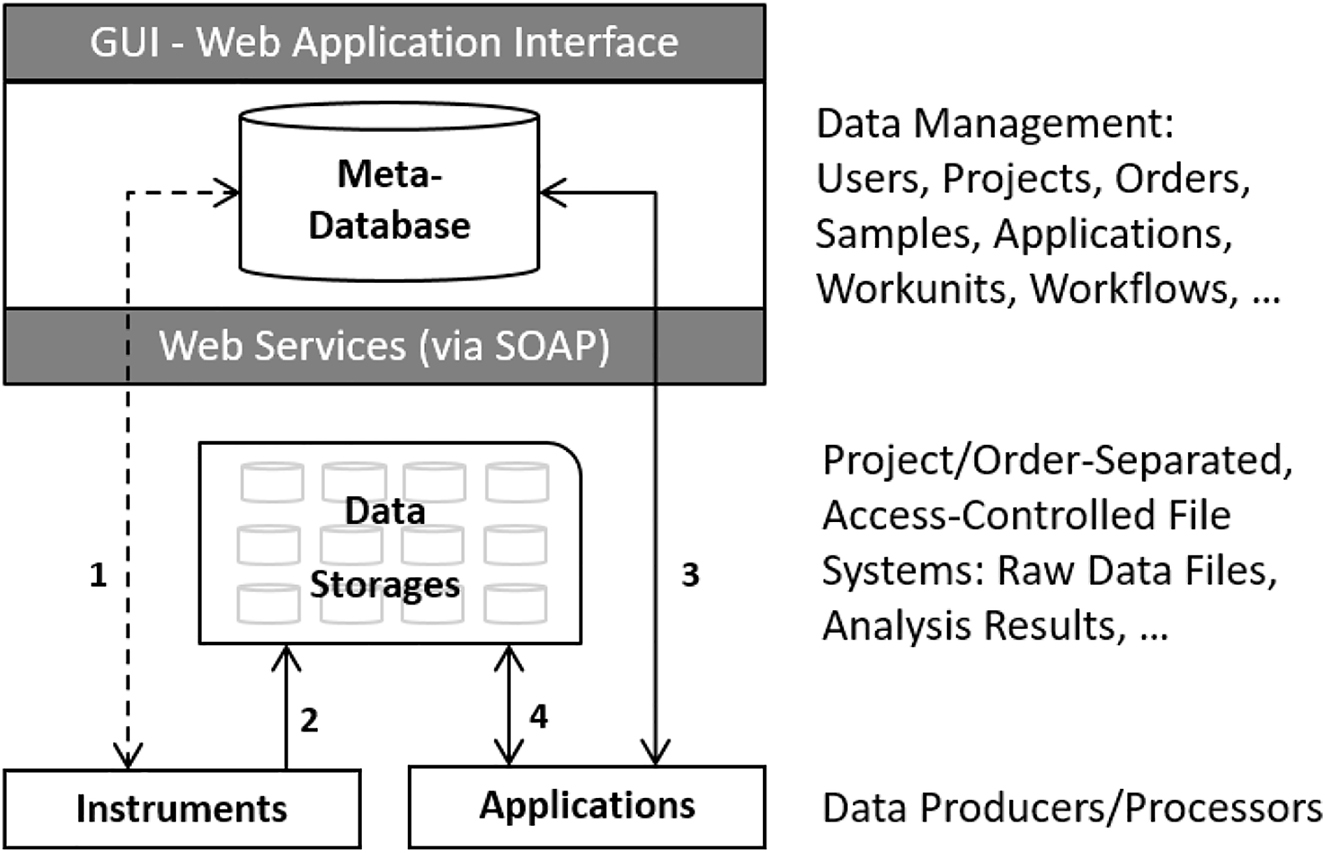

Core facilities, which share centralized research resources across institutions and organizations, have to offer technologies that best serve the needs of their users and provide them a competitive advantage in research. They have to set up and maintain tens to hundreds of instruments, which produce large amounts of data and serve thousands of active projects and customers. Particular emphasis has to be given to the reproducibility of the results. Increasingly, the entire process—from building the research hypothesis, conducting the experiments, and taking the measurements, through to data exploration and analysis—is solely driven by very few experts in various scientific fields ... (Full article...)


Featured article of the week: July 10–16:

"Digitalization of calibration data management in the pharmaceutical industry using a multitenant platform"

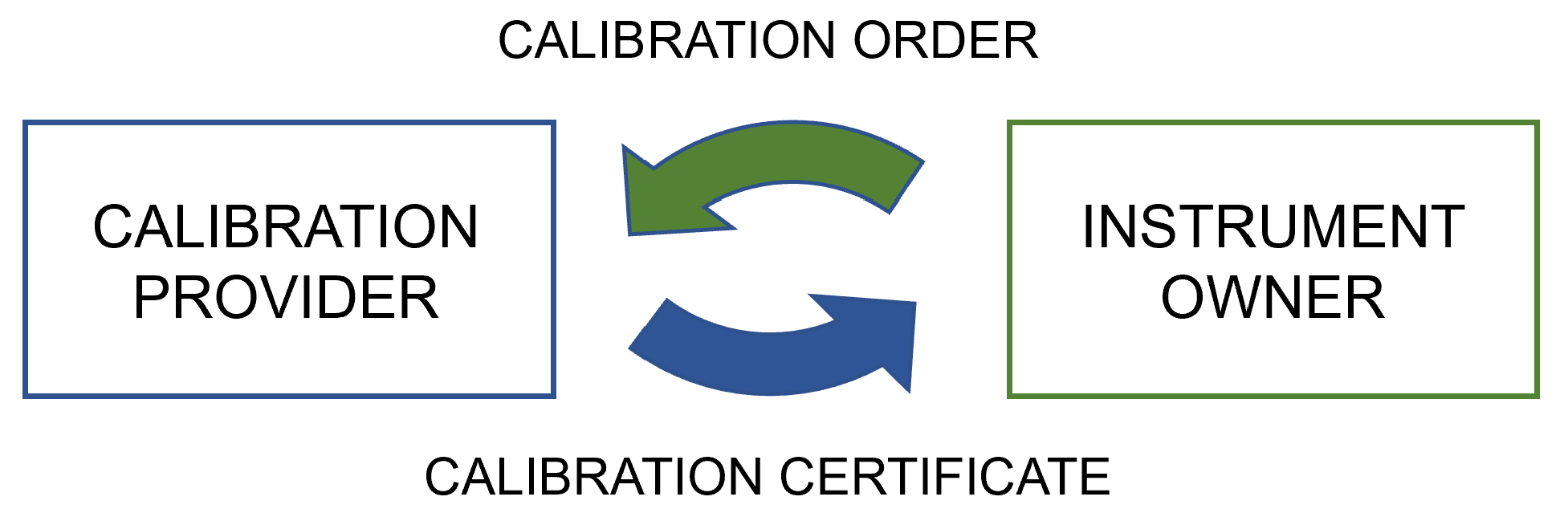

The global quality infrastructure (QI) has been established and is maintained to ensure the safety of products and services for their users. One of the cornerstones of the QI is metrology, i.e., the science of measurement, as quality management systems (QMS) commonly rely on measurements for evaluating quality. For this reason, calibration procedures and management of the data related to them are of the utmost importance for quality management in the process industry, made a particularly high priority by regulatory authorities. To overcome the relatively low level of digitalization in metrology, machine-interpretable data formats such as digital calibration certificates (DCC) are being developed ... (Full article...)


Featured article of the week: July 03–09:

"Introductory evidence on data management and practice systems of forensic autopsies in sudden and unnatural deaths: A scoping review"

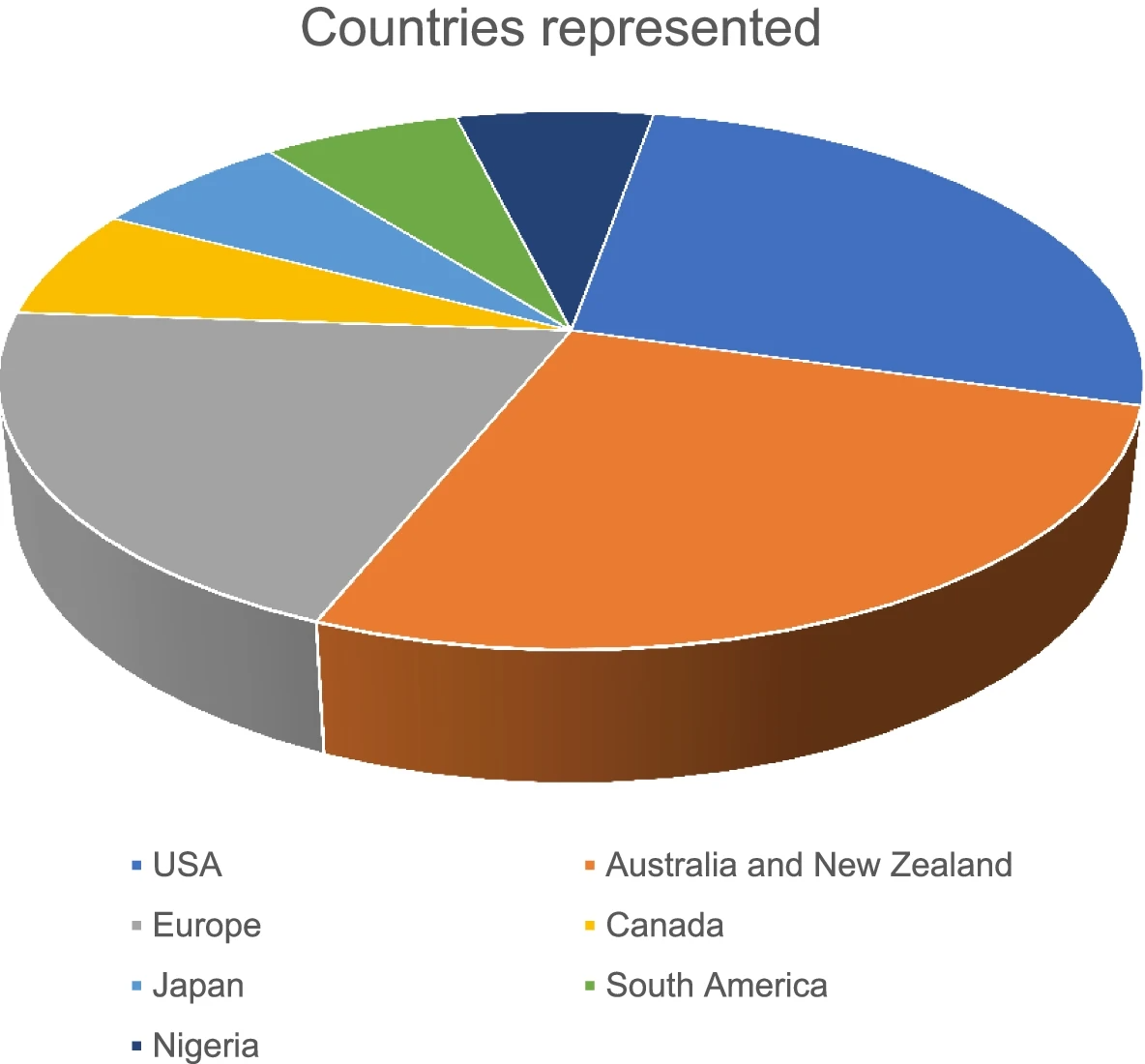

The investigation into sudden unexpected and unnatural deaths supports criminal justice, aids in litigation, and provides important information for public health, including surveillance, epidemiology, and prevention programs. The use of mortality data to convey trends can inform policy development and resource allocations. Hence, data practices and data management systems in forensic medicine are critical. This study scoped literature and described the body of knowledge on data management and practice systems in forensic medicine. Five steps of the methodological framework of Arksey and O’Malley guided this scoping review. A combination of keywords, Boolean terms, and medical subject headings was used to search PubMed, EBSCOhost (CINAHL with full text and Health Sources), Cochrane Library, Scopus, Web of Science, Science Direct, WorldCat, and Google Scholar for peer-reviewed papers in English ... (Full article...)


Featured article of the week: June 26–July 02:

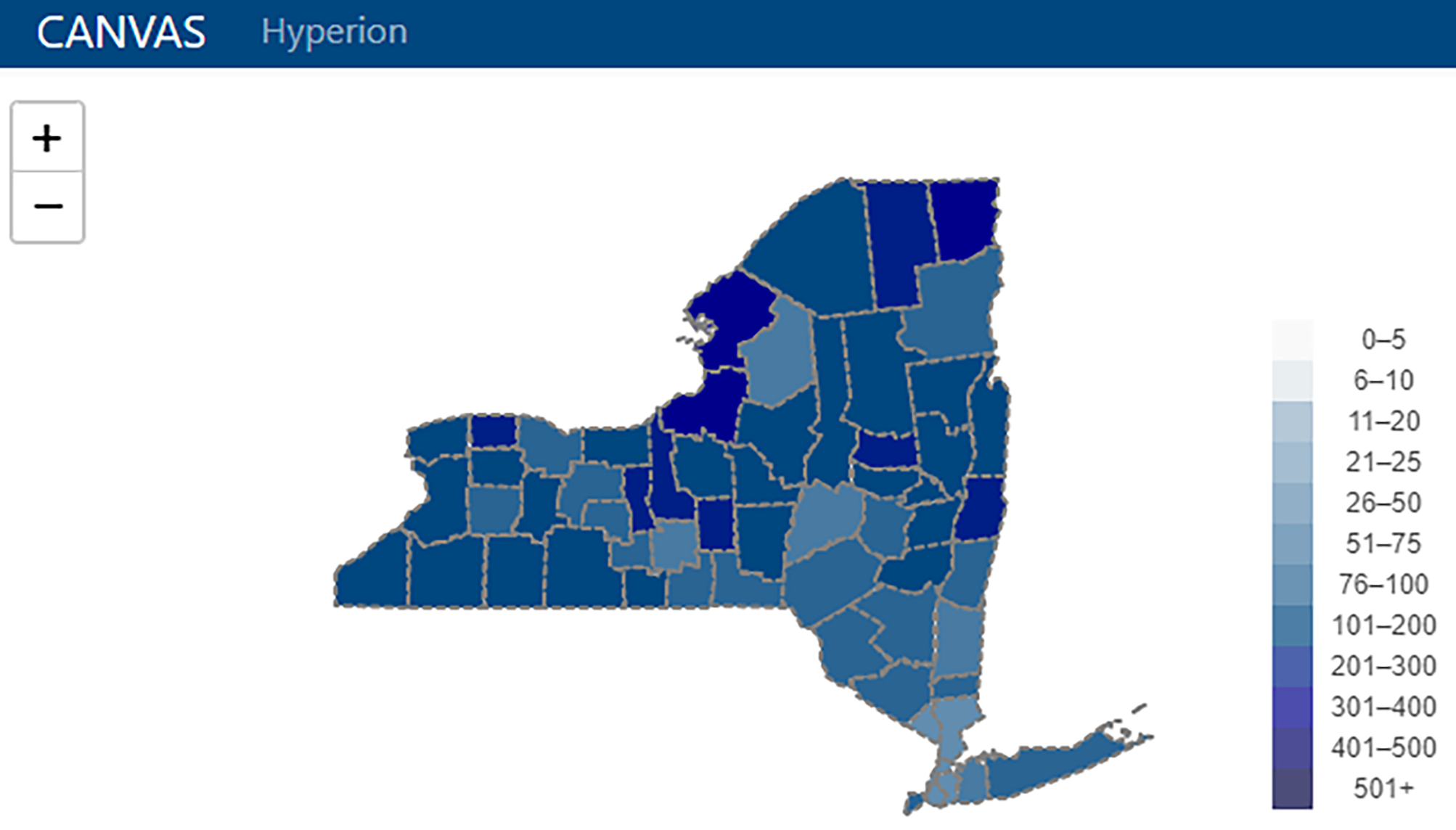

"From months to minutes: Creating Hyperion, a novel data management system expediting data insights for oncology research and patient care"

Ensuring timely access to accurate data is critical for the functioning of a cancer center. Despite overlapping data needs, data are often fragmented and sequestered across multiple systems (such as the electronic health record [EHR], state and federal registries, and research databases), creating high barriers to data access for clinicians, researchers, administrators, quality officers, and patients. The creation of integrated data systems also faces technical, leadership, cost, and human resource barriers, among others. The University of Rochester's James P. Wilmot Cancer Institute (WCI) hired a small team of individuals with both technical and clinical expertise to develop a custom data management software platform, Hyperion, addressing five challenges: lowering the skill level required to maintain the system, reducing costs, allowing users to access data autonomously, optimizing data security and utilization, and shifting technological team structure to encourage rapid innovation ... (Full article...)


Featured article of the week: June 19–25:

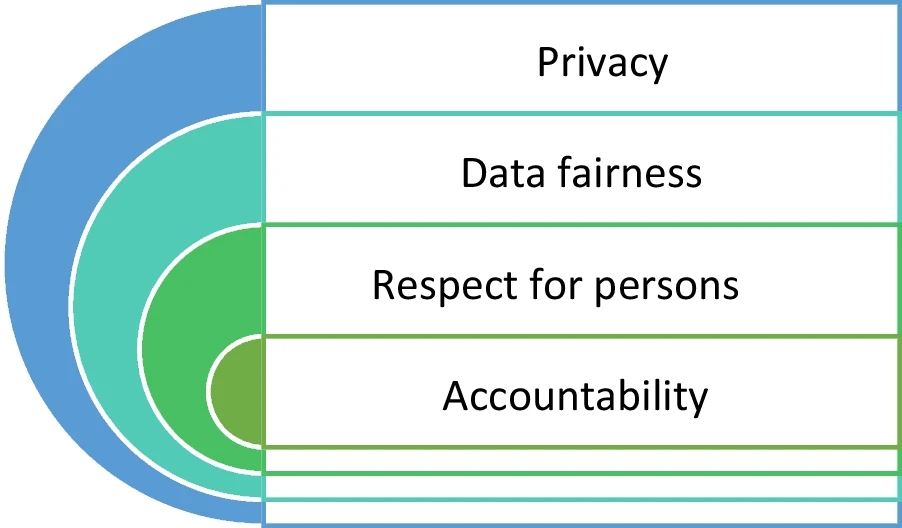

"Health data privacy through homomorphic encryption and distributed ledger computing: An ethical-legal qualitative expert assessment study"

Increasingly, hospitals and research institutes are developing technical solutions for sharing patient data in a privacy-preserving manner. Two of these technical solutions are homomorphic encryption and distributed ledger technology. Homomorphic encryption allows computations to be performed on data without this data ever being decrypted. Therefore, homomorphic encryption represents a potential solution for conducting feasibility studies on cohorts of sensitive patient data stored in distributed locations. Distributed ledger technology provides a permanent record on all transfers and processing of patient data, allowing data custodians to audit access. A significant portion of the current literature has examined how these technologies might comply with data protection and research ethics frameworks ... (Full article...)
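The idea of computing on data that is never decrypted can be illustrated with a toy example. The sketch below uses the Paillier cryptosystem, which is an assumption made here for illustration: the abstract does not name a scheme, and Paillier is only additively homomorphic rather than fully homomorphic. The primes are tiny and the function names (`keygen`, `encrypt`, `decrypt`, `add_encrypted`) are hypothetical, so this is not suitable for real patient data.

```python
import math
import secrets


def keygen(p=61, q=53):
    """Build a toy Paillier key pair from two small primes (illustration only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse of lam mod n (Python 3.8+)
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt integer m (0 <= m < n) under public key n, using g = n + 1."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random value in 1..n-1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, priv, c):
    """Recover the plaintext from ciphertext c with the private key."""
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_encrypted(n, c1, c2):
    """Multiply ciphertexts, which adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)


# Two sites each encrypt a local patient count; the sum is computed
# on ciphertexts and only the key holder can read the total.
n, priv = keygen()
c_total = add_encrypted(n, encrypt(n, 120), encrypt(n, 45))
total = decrypt(n, priv, c_total)  # 165
```

In this sketch, the party that adds the ciphertexts never sees 120 or 45, which is the property that makes such schemes interesting for feasibility counts across distributed cohorts.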


Featured article of the week: June 12–18:

"Avoidance of operational sampling errors in drinking water analysis"

The internal audits carried out in the first half of 2019 in Portuguese water laboratories as part of quality accreditation in accordance with ISO/IEC 17025:2017 showed a high frequency of adverse events in connection with sampling. These faults can be a consequence of a wide range of causes, and in some cases, the information about them can be insufficient or unclear. Considering that sampling has a major influence on the quality of the analytical results provided by water laboratories, this work presents a system for reporting and learning from adverse events. Its aim is to record nonconformities, errors, and adverse events, making possible automatic data analysis to better ensure continuous improvement in operational sampling. The system is based on the Eindhoven Classification Model and enables automatic data analysis and reporting to identify the main causes of failure ... (Full article...)


Featured article of the week: June 05–11:

"ISO/IEC 17025: History and introduction of concepts"

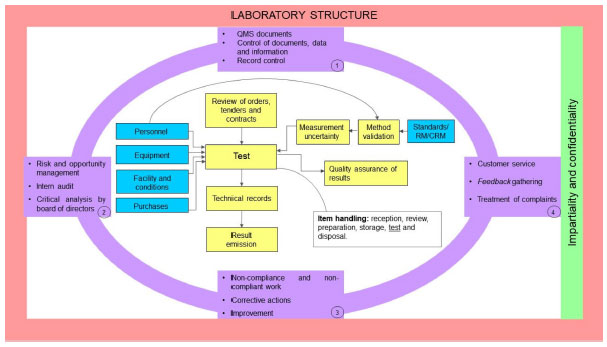

Quality is an increasingly present concept nowadays, and meeting the needs of customers who buy and use products and hire services becomes essential. For laboratories, the concept is applied not only to the reliability and traceability of the results produced, but it also presents itself in meeting the customer’s needs and providing confidence when signing agreements in international trade. The concept of quality in a laboratory can be realized through the development and implementation of a quality management system (QMS). To this end, the normative, internationally accepted document ISO/IEC 17025 aims at instructing the development and implementation of a management system, which ideally proves the technical capacity of testing and calibration laboratories and guides the generation of reliable results ... (Full article...)


Featured article of the week: May 29–June 04:

"Practical considerations for laboratories: Implementing a holistic quality management system"

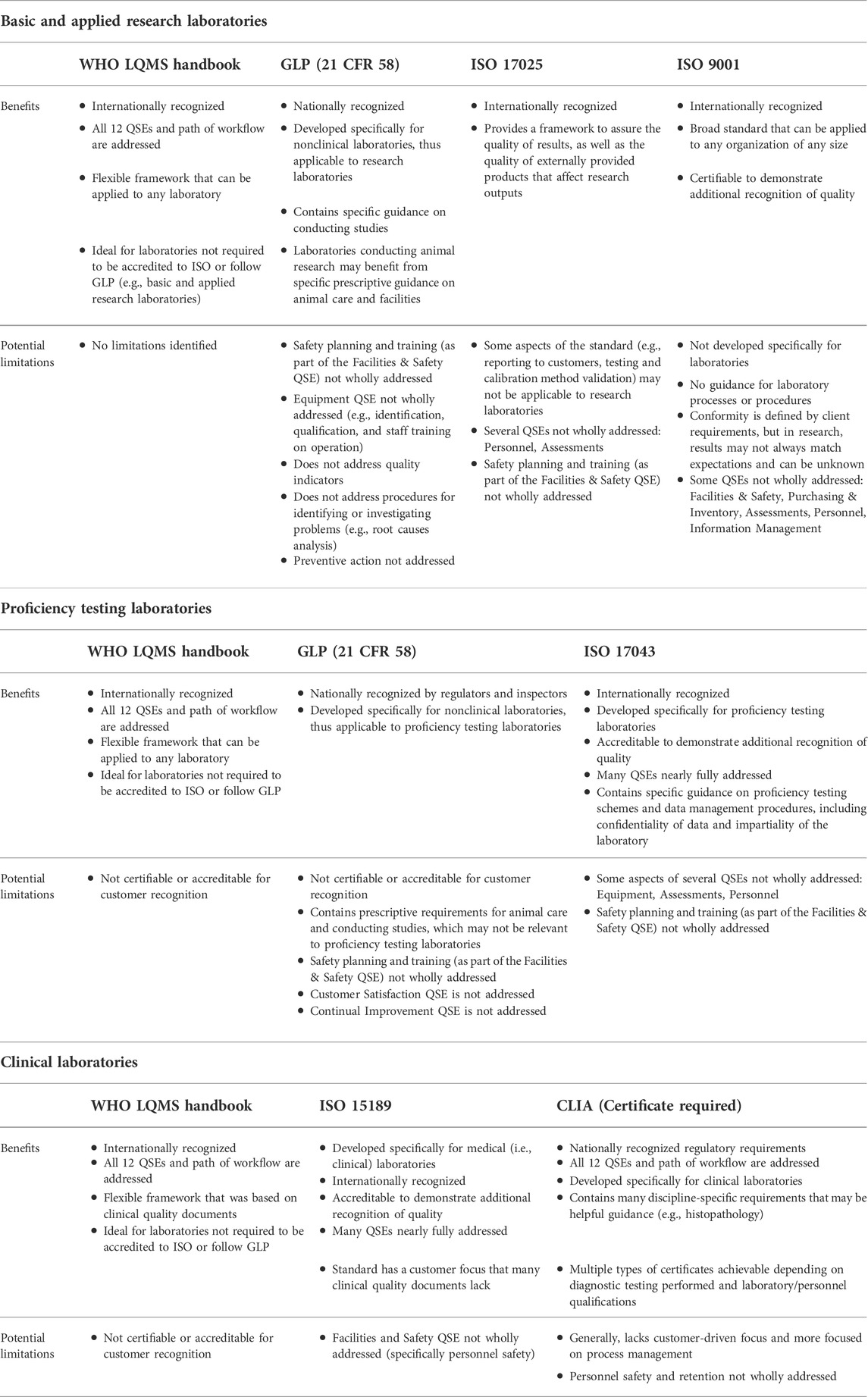

A quality management system (QMS) is an essential element for the effective operation of research, clinical, testing, or production/manufacturing laboratories. As technology continues to rapidly advance and new challenges arise, laboratories worldwide have responded with innovation and process changes to meet the continued demand. It is critical for laboratories to maintain a robust QMS that accommodates laboratory activities (e.g., basic and applied research; regulatory, clinical, or proficiency testing), records management, and a path for continuous improvement to ensure that results and data are reliable, accurate, timely, and reproducible. A robust, suitable QMS provides a framework to address gaps and risks throughout the laboratory's workflow that could potentially lead to a critical error, thus compromising the integrity and credibility of the institution. While there are many QMS frameworks (e.g., a model such as a consensus standard, guideline, or regulation) that may apply to laboratories, ensuring that the appropriate framework is adopted based on the type of work performed and that key implementation steps are taken is important for the long-term success of the QMS and for the advancement of science ... (Full article...)


Featured article of the week: May 22–28:

"Precision nutrition: Maintaining scientific integrity while realizing market potential"

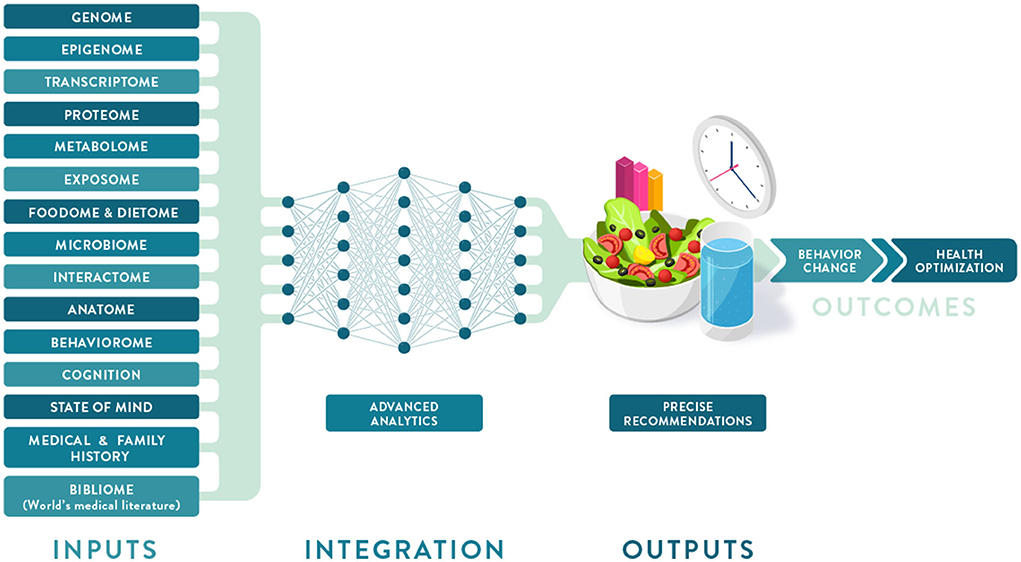

Precision nutrition (PN) is an approach to developing comprehensive and dynamic nutritional recommendations based on individual variables, including genetics, microbiome, metabolic profile, health status, physical activity, dietary pattern, and food environment, as well as socioeconomic and psychosocial characteristics. PN can help answer the question “what should I eat to be healthy?”, recognizing that what is healthful for one individual may not be the same for another, and understanding that health and responses to diet change over time. The growth of the PN market has been driven by increasing consumer interest in individualized products and services coupled with advances in technology, analytics, and omic sciences. However, important concerns are evident regarding the adequacy of scientific substantiation supporting claims for current products and services. An additional limitation to accessing PN is the current cost of diagnostic tests and wearable devices ... (Full article...)


Featured article of the week: May 15–21:

"Construction of control charts to help in the stability and reliability of results in an accredited water quality control laboratory"

Overall, water quality analysis laboratories must produce stable results, especially laboratories accredited under ISO/IEC 17025. Accredited parameters should be strictly reliable. Using control charts to ascertain divergences between results is thus very useful. The present work applied a methodology of analysis of results through control charts to accurately monitor the results for a wastewater treatment plant. The parameters analyzed were pH, biological oxygen demand for five days (BOD5), chemical oxygen demand (COD), total suspended solids (TSS), and total phosphorus (TP). The stability of the results was analyzed from the control charts and 30 analyses performed in the last 12 months. From the results, it was possible to observe whether the results were stable, according to the rehabilitation factor, which cannot exceed WN = 1.00, and the efficiency of removal of pollutants, which remained above 70% for all parameters ... (Full article...)
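As a rough illustration of how a control chart flags divergent results, the sketch below computes generic Shewhart-style limits (center line plus or minus three sample standard deviations) from a baseline and reports new values that fall outside them. This is an assumption for illustration, not the methodology of the article, which the abstract does not detail; the pH values and the function names are made up.

```python
from statistics import mean, stdev


def control_limits(baseline, k=3.0):
    """Return (lower, center, upper) limits from baseline results."""
    center = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation
    return center - k * spread, center, center + k * spread


def out_of_control(values, lower, upper):
    """List (index, value) pairs that fall outside the control limits."""
    return [(i, v) for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical pH results: a stable baseline, then four new measurements.
ph_baseline = [7.1, 7.3, 7.2, 7.0, 7.2, 7.1, 7.3, 7.2]
lower, center, upper = control_limits(ph_baseline)
flagged = out_of_control([7.2, 7.1, 8.4, 7.0], lower, upper)
```

Here only the third new measurement (8.4) lies outside the limits, so it would be the one investigated.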


Featured article of the week: May 08–14:

"Application of informatics in cancer research and clinical practice: Opportunities and challenges"

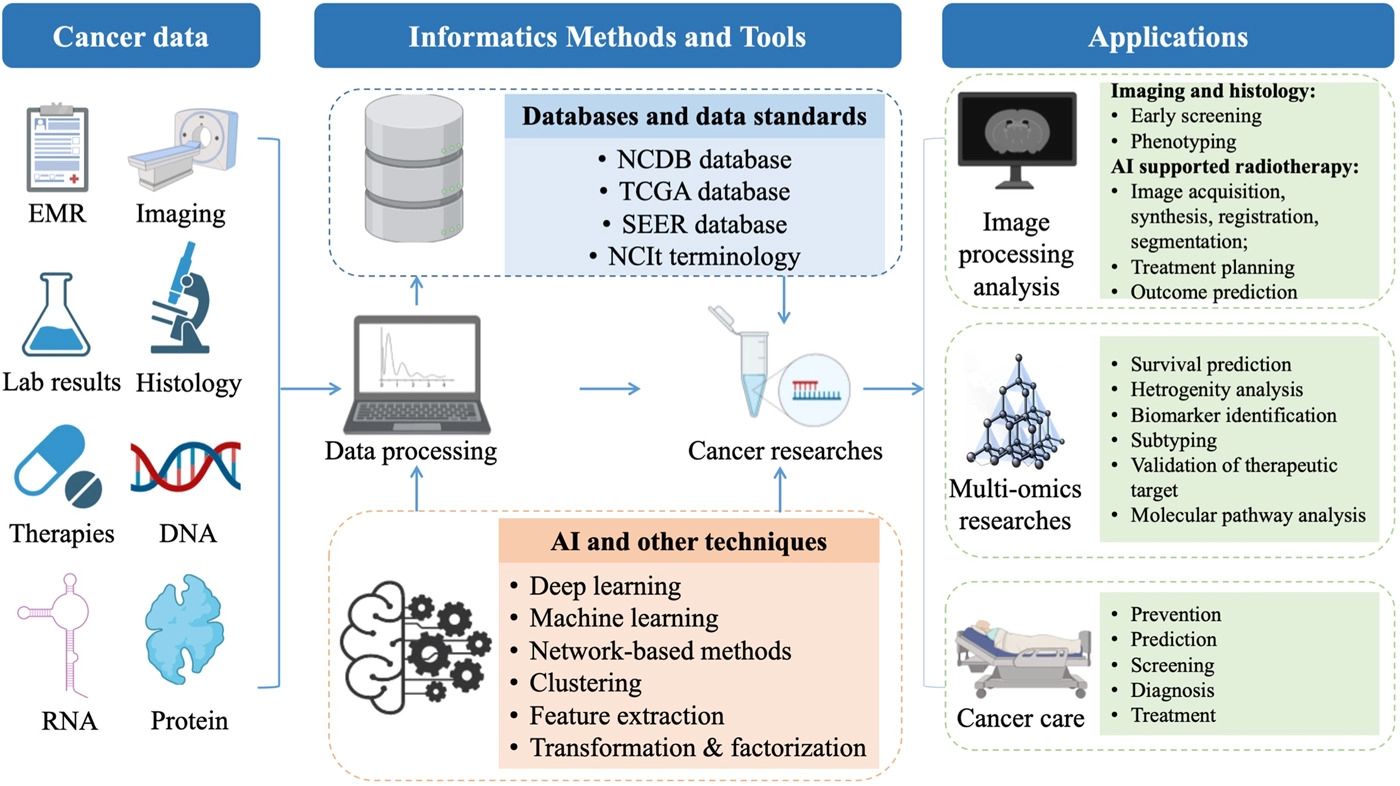

Cancer informatics has significantly progressed in the big data era. We summarize the application of informatics approaches to the cancer domain from both the informatics perspective (e.g., data management and data science) and the clinical perspective (e.g., cancer screening, risk assessment, diagnosis, treatment, and prognosis). We discuss various informatics methods and tools that are widely applied in cancer research and practices, such as cancer databases, data standards, terminologies, high-throughput omics data mining, machine learning algorithms, artificial intelligence imaging, and intelligent radiation ... (Full article...)


Featured article of the week: May 01–07:

"Recommendations for achieving interoperable and shareable medical data in the USA"

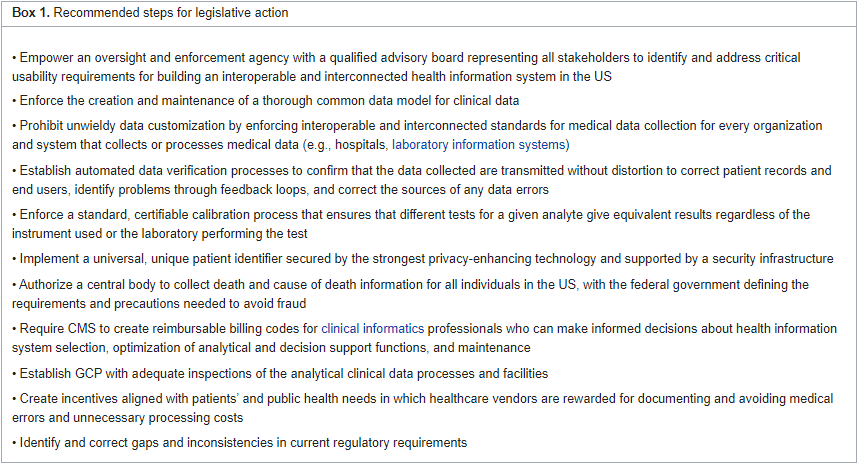

Easy access to large quantities of accurate health data is required to understand medical and scientific information in real time; evaluate public health measures before, during, and after times of crisis; and prevent medical errors. Introducing a system in the United States of America that allows for efficient access to such health data and ensures auditability of data facts, while avoiding data silos, will require fundamental changes in current practices. Here, we recommend the implementation of standardized data collection and transmission systems, universal identifiers for individual patients and end users, a reference standard infrastructure to support calibration and integration of laboratory results from equivalent tests, and modernized working practices. Requiring comprehensive and binding standards, rather than incentivizing voluntary and often piecemeal efforts for data exchange, will allow us to achieve the analytical information environment that patients need ... (Full article...)


Featured article of the week: April 24–30:

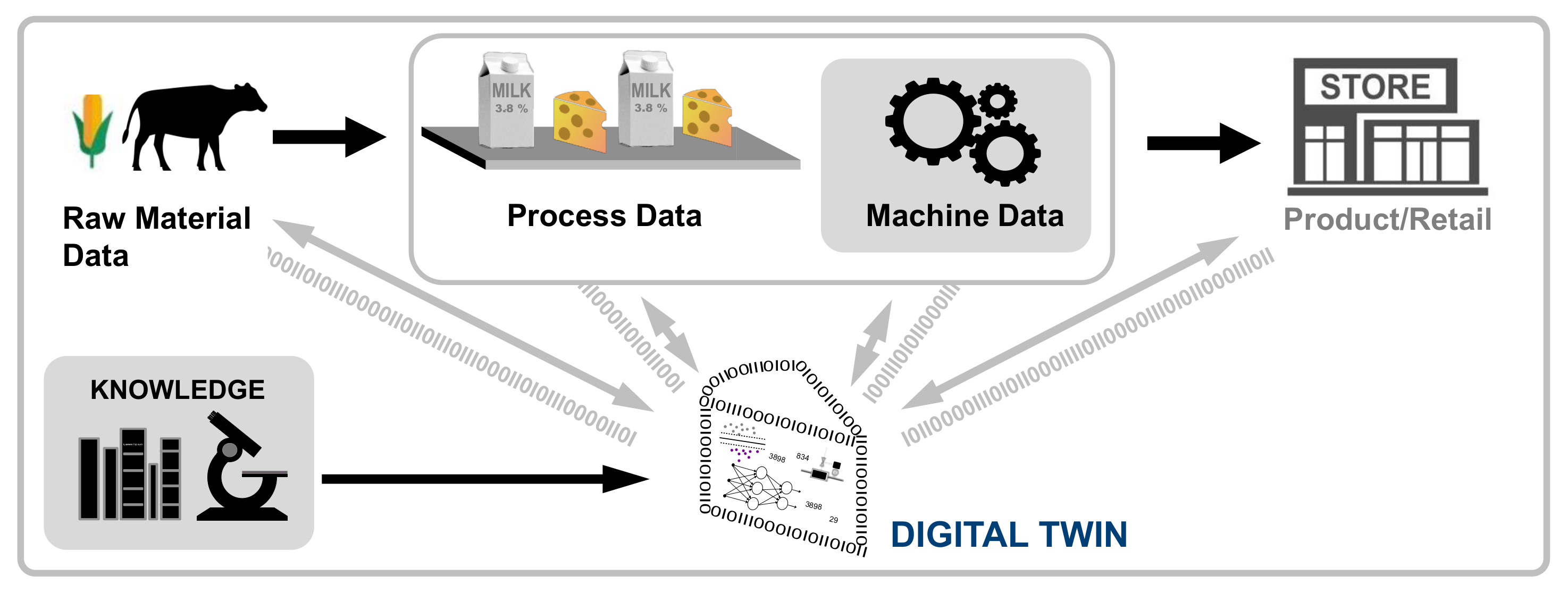

"Can a byte improve our bite? An analysis of digital twins in the food industry"

The food industry faces many challenges, including the need to feed a growing population, manage food loss and waste, and improve inefficient production systems. To cope with those challenges, digital twins (digital representations of physical entities created by integrating real-time and real-world data) seem to be a promising approach. This paper aims to provide an overview of digital twin applications in the food industry and analyze their challenges and potentials. First, a literature review is executed to examine digital twin applications in the food supply chain. The applications found are classified according to a taxonomy, and key elements to implement digital twins are identified. Further, the challenges and potentials of digital twin applications in the food industry are discussed. This survey reveals that applications of digital twins mainly target the production (i.e., agriculture) or food processing stages ... (Full article...)


Featured article of the week: April 17–23:

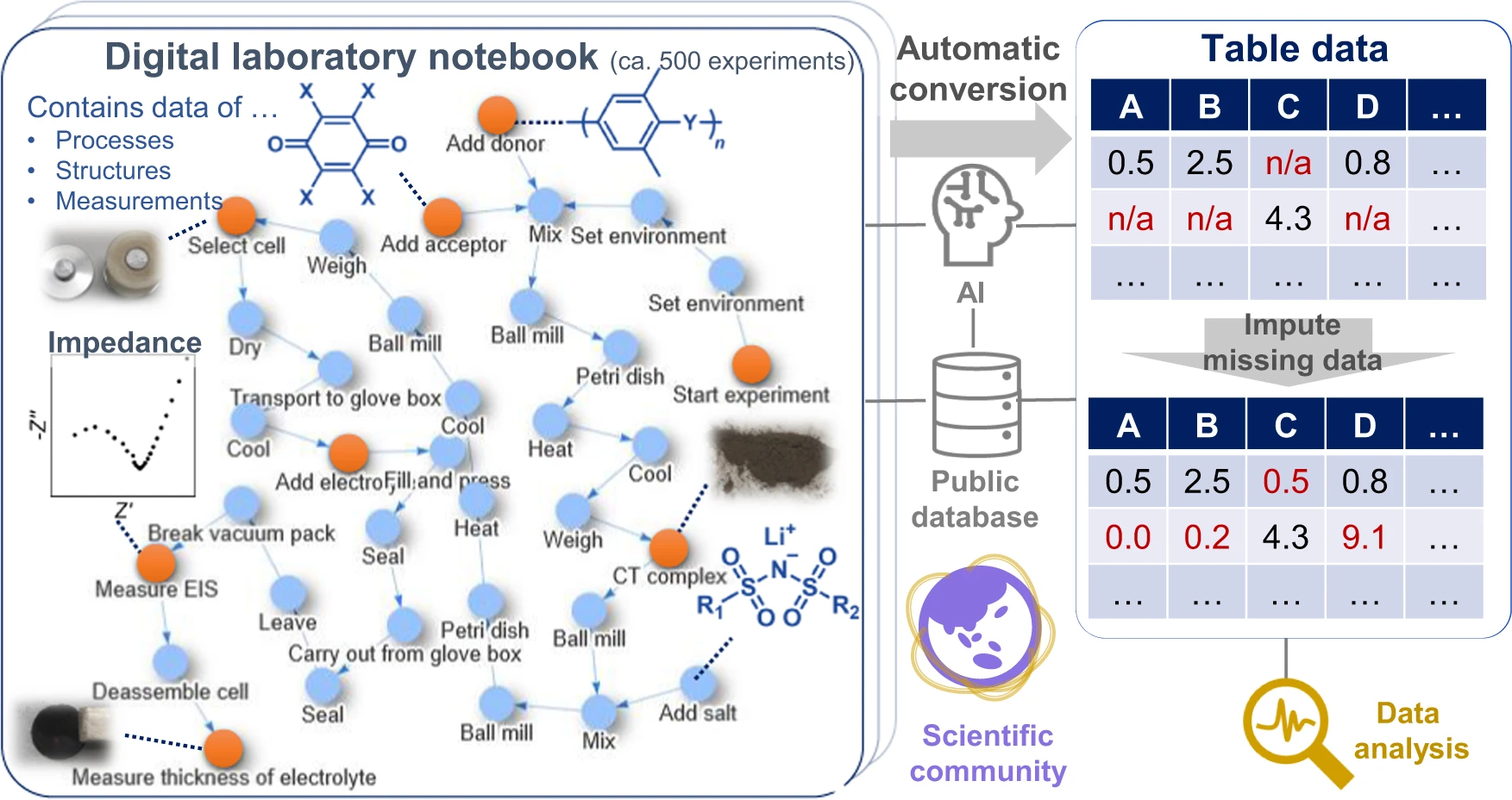

"Exploration of organic superionic glassy conductors by process and materials informatics with lossless graph database"

Data-driven material exploration is a ground-breaking research style; however, daily experimental results are difficult to record, analyze, and share. We report a data platform that losslessly describes the relationships of structures, properties, and processes as graphs in electronic laboratory notebooks (ELNs). As a model project, organic superionic glassy conductors were explored by recording over 500 different experiments. Automated data analysis revealed the essential factors for a remarkable room-temperature ionic conductivity of 10⁻⁴ to 10⁻³ S cm⁻¹ and a Li⁺ transference number of around 0.8. In contrast to previous materials research, everyone can access all the experimental results (including graphs, raw measurement data, and data processing systems) at a public repository. Direct data sharing will improve scientific communication and accelerate integration of material knowledge ... (Full article...)


Featured article of the week: April 10–16:

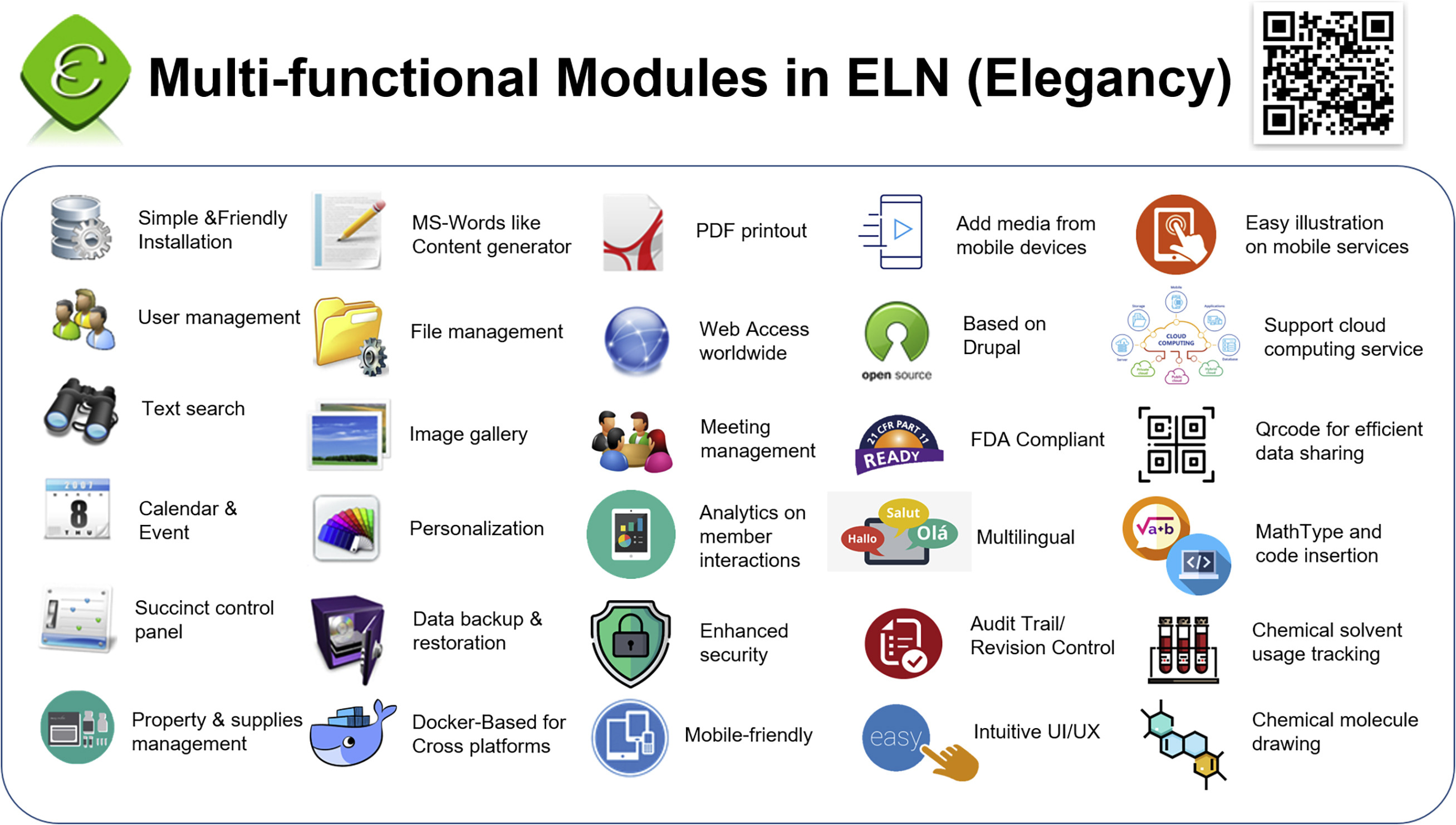

"Elegancy: Digitizing the wisdom from laboratories to the cloud with free no-code platform"

One of the top priorities in any laboratory is archiving experimental data in the most secure, efficient, and error-free way. This is especially important in chemical and biological research, where experiment records are more likely to be damaged. In addition, the transfer of experiment results from paper to electronic devices is time-consuming and redundant. Therefore, we introduce an open-source no-code electronic laboratory notebook (ELN), Elegancy, a cloud-based/standalone web service distributed as a Docker image. Elegancy fits all laboratories but is specially equipped with several features benefitting biochemical laboratories. It can be accessed via various web browsers, allowing researchers to upload photos or audio recordings directly from their mobile devices. Elegancy also contains a meeting arrangement module, audit/revision control, and laboratory supply management system. We believe Elegancy could help the scientific research community gather evidence, share information, reorganize knowledge, and digitize laboratory work with greater ease and security ... (Full article...)


Featured article of the week: April 03–09:

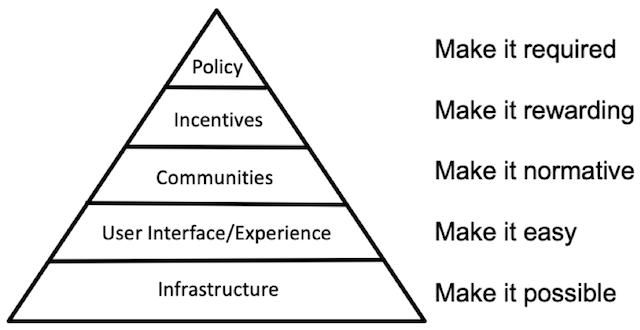

"Implementing an institution-wide electronic laboratory notebook initiative"

To strengthen institutional research data management practices, the Indiana University School of Medicine (IUSM) licensed an electronic laboratory notebook (ELN) to improve the organization, security, and shareability of information and data generated by the school’s researchers. The Ruth Lilly Medical Library led implementation on behalf of the IUSM’s Office of Research Affairs. This article describes the pilot and full-scale implementation of an ELN at IUSM. The initial pilot of the ELN in late 2018 involved 15 research labs, with access expanded in 2019 to all academic medical school constituents ... (Full article...)


Featured article of the week: March 27–April 02:

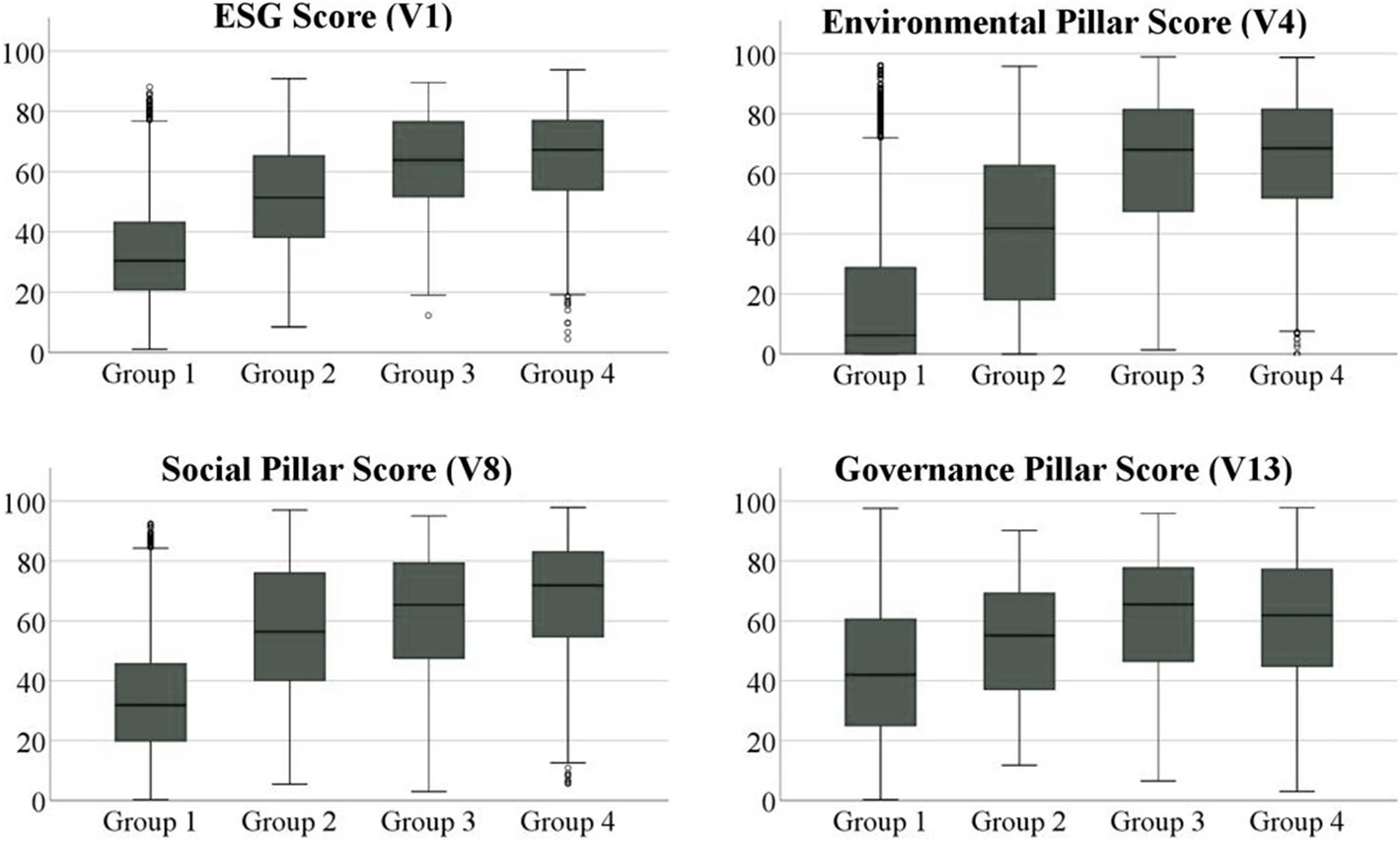

"Quality and environmental management systems as business tools to enhance ESG performance: A cross-regional empirical study"

The growing societal and political focus on sustainability at the global level is pressuring companies to enhance their environmental, social, and governance (ESG) performance to satisfy respective stakeholder needs and ensure sustained business success. With a data sample of 4,292 companies from Europe, East Asia, and North America, this work aims to prove through a cross-regional empirical study that quality management systems (QMSs) and environmental management systems (EMSs) represent powerful business tools to achieve this enhanced ESG performance. Descriptive and cluster analyses reveal that firms with QMSs and/or EMSs achieve statistically significantly higher ESG scores than companies without such management systems. Furthermore, the results indicate that operating both types of management systems simultaneously increases performance in the environmental and social pillars even further, while the governance dimension appears to be affected mainly by the adoption of EMSs alone ... (Full article...)


Featured article of the week: March 20–26:

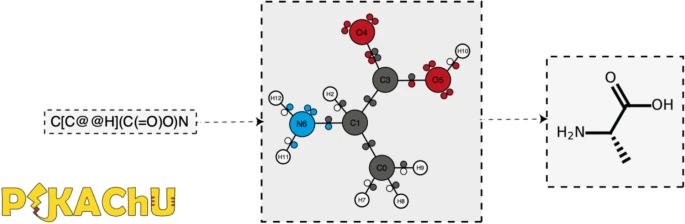

"PIKAChU: A Python-based informatics kit for analyzing chemical units"

As efforts to computationally describe and simulate the biochemical world become more commonplace, computer programs that are capable of in silico chemistry play an increasingly important role in biochemical research. While such programs exist, they are often dependency-heavy, difficult to navigate, or not written in Python, the programming language of choice for bioinformaticians. Here, we introduce PIKAChU (Python-based Informatics Kit for Analysing CHemical Units), a cheminformatics toolbox with few dependencies implemented in Python. PIKAChU builds comprehensive molecular graphs from simplified molecular-input line-entry system (SMILES) strings, which allow for easy downstream analysis and visualization of molecules. ... (Full article...)


Featured article of the week: March 13–19:

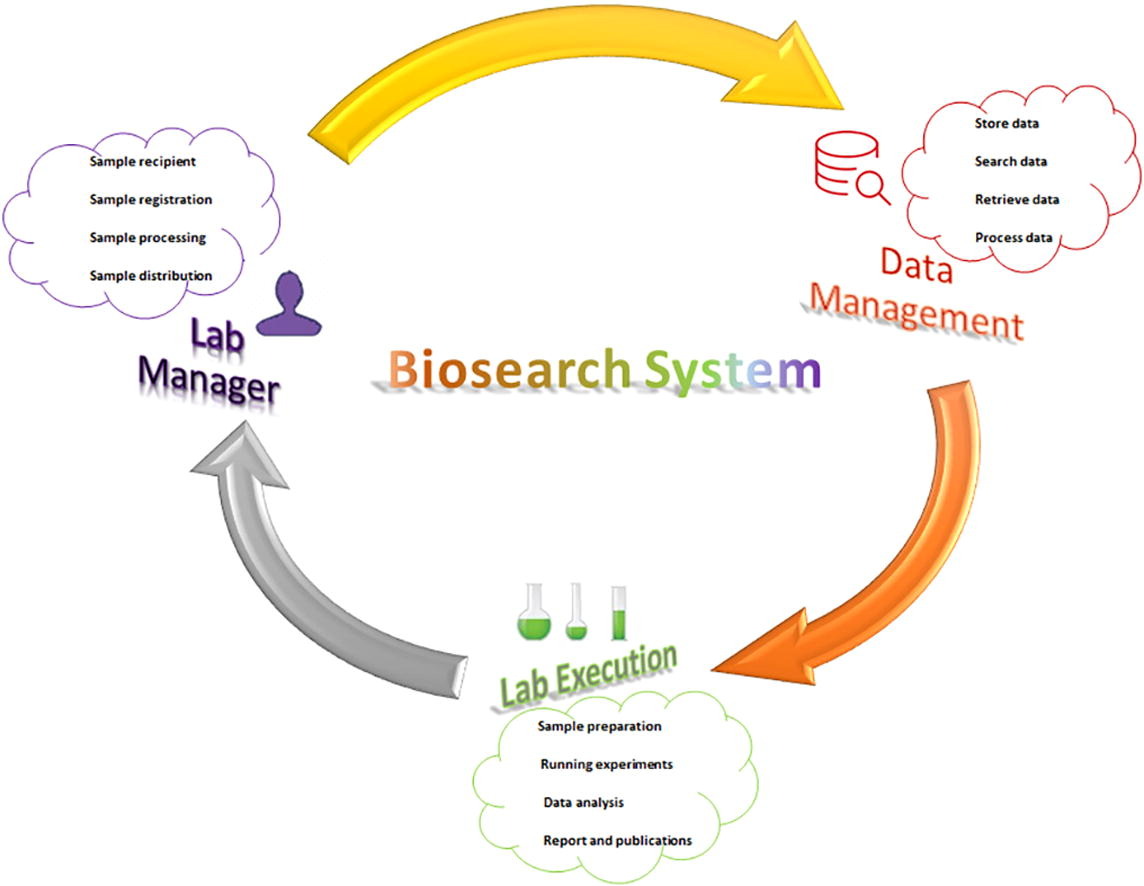

"Development of Biosearch System for biobank management and storage of disease-associated genetic information"

Databases and software are important to manage modern high-throughput laboratories and store clinical and genomic information for quality assurance. Commercial software is expensive, with proprietary code issues, while academic versions have adaptation issues. Our aim was to develop an adaptable in-house software system that can store specimen- and disease-associated genetic information in biobanks to facilitate translational research. A prototype was designed per the research requirements, and computational tools were used to develop the software under three tiers, using Visual Basic and ASP.net for the presentation tier, SQL Server for the data tier, and Ajax and JavaScript for the business tier. We retrieved specimens from the biobank using this software and performed microarray-based transcriptomic analysis to detect differentially expressed genes (DEGs) ... (Full article...)


Featured article of the week: March 06–12:

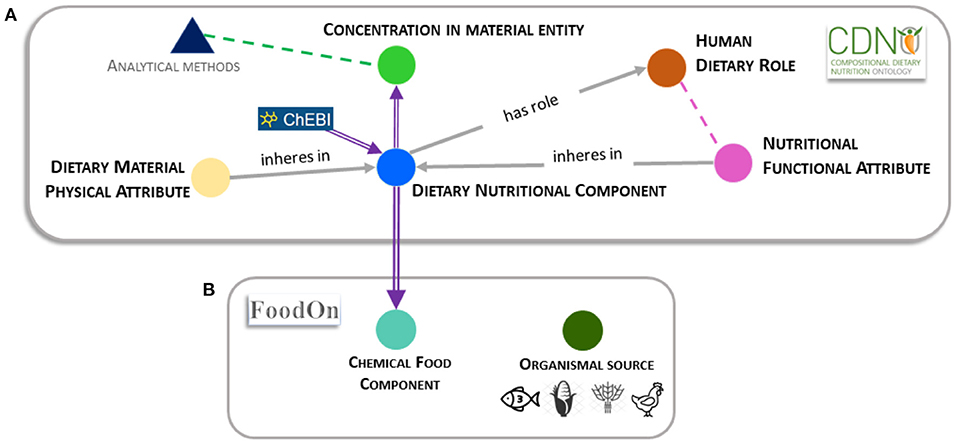

"Establishing a common nutritional vocabulary: From food production to diet"

Informed policy and decision-making for food systems, nutritional security, and global health would benefit from standardization and comparison of food composition data, spanning production to consumption. To address this challenge, we present a formal controlled vocabulary of terms, definitions, and relationships within the Compositional Dietary Nutrition Ontology (CDNO) that enables description of nutritional attributes for material entities contributing to the human diet. We demonstrate how ongoing community development of CDNO classes can harmonize trans-disciplinary approaches for describing nutritional components from food production to diet ... (Full article...)


Featured article of the week: February 28–March 05:

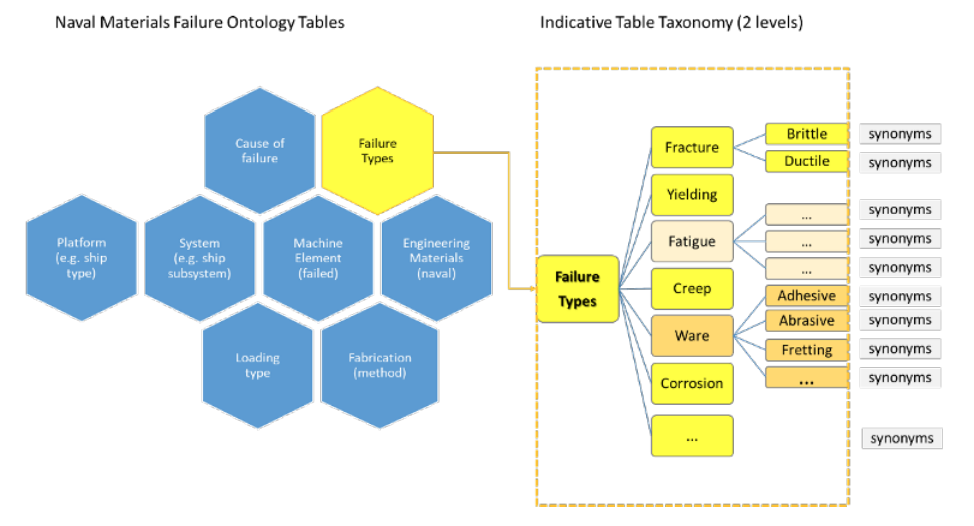

"Designing a knowledge management system for naval materials failures"

Implemented materials fail from time to time, requiring failure analysis. This type of scientific analysis expands into forensic engineering for it aims not only to identify individual and symptomatic reasons for failure, but also to assess and understand repetitive failure patterns, which could be related to underlying material faults, design mistakes, or maintenance omissions. Significant information can be gained and studied from carefully documenting and managing the data that comes from failure analysis of materials, including in the naval industry. The NAVMAT research project, presented herein, attempts an interdisciplinary approach to materials informatics by integrating materials engineering and informatics under a platform of knowledge management. Our approach utilizes a focused, common-cause failure analysis methodology for the naval and marine environment. The platform's design is dedicated to the effective recording, efficient indexing, and easy and accurate retrieval of relevant information, including the associated history of maintenance and secure operation concerning failure incidents of marine materials, components, and systems in an organizational fleet. ... (Full article...)


Featured article of the week: February 20–27:

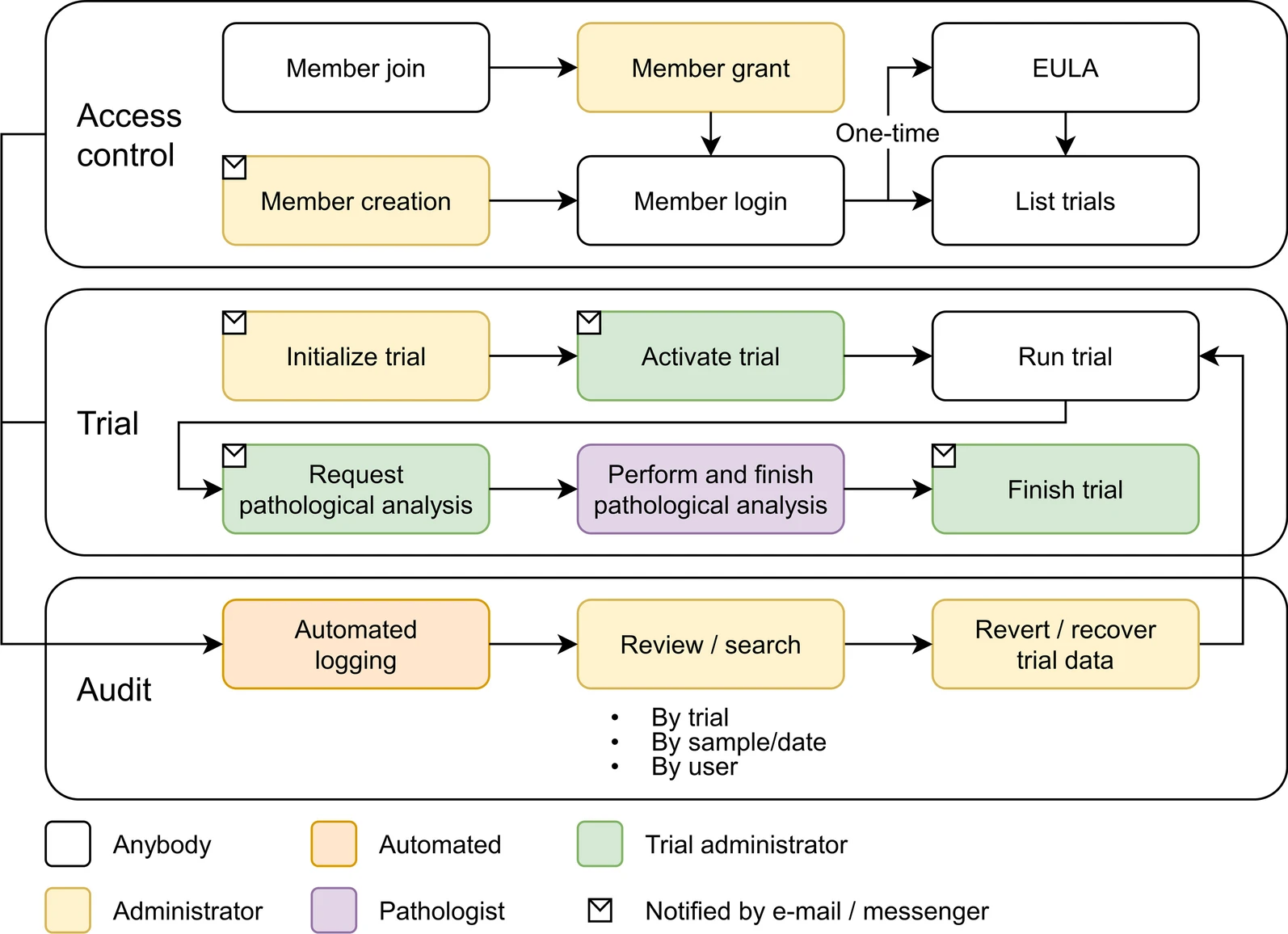

"Laboratory information management system for COVID-19 non-clinical efficacy trial data"

As the number of large-scale research studies involving multiple organizations producing data has steadily increased, an integrated system for a common interoperable data format is needed. For example, in response to the coronavirus disease 2019 (COVID-19) pandemic, a number of global efforts are underway to develop vaccines and therapeutics. We are therefore observing an explosion in the proliferation of COVID-19 data, and interoperability is in high demand among the multiple institutions participating simultaneously in COVID-19 pandemic research. In this study, a laboratory information management system (LIMS) has been adopted to systematically manage, via web interface, various COVID-19 non-clinical trial data (including mortality, clinical signs, body weight, body temperature, organ weights, viral titer [viral replication and viral RNA], and multi-organ histopathology) from multiple institutions ... (Full article...)


Featured article of the week: February 13–19:

"Improving data quality in clinical research informatics tools"

Maintaining data quality is a fundamental requirement for any successful and long-term data management project. Providing high-quality, reliable, and statistically sound data is a primary goal for clinical research informatics. In addition, effective data governance and management are essential to ensuring accurate data counts, reports, and validation. As a crucial step of the clinical research process, it is important to establish and maintain organization-wide standards for data quality management to ensure consistency across all systems designed primarily for cohort identification ... (Full article...)


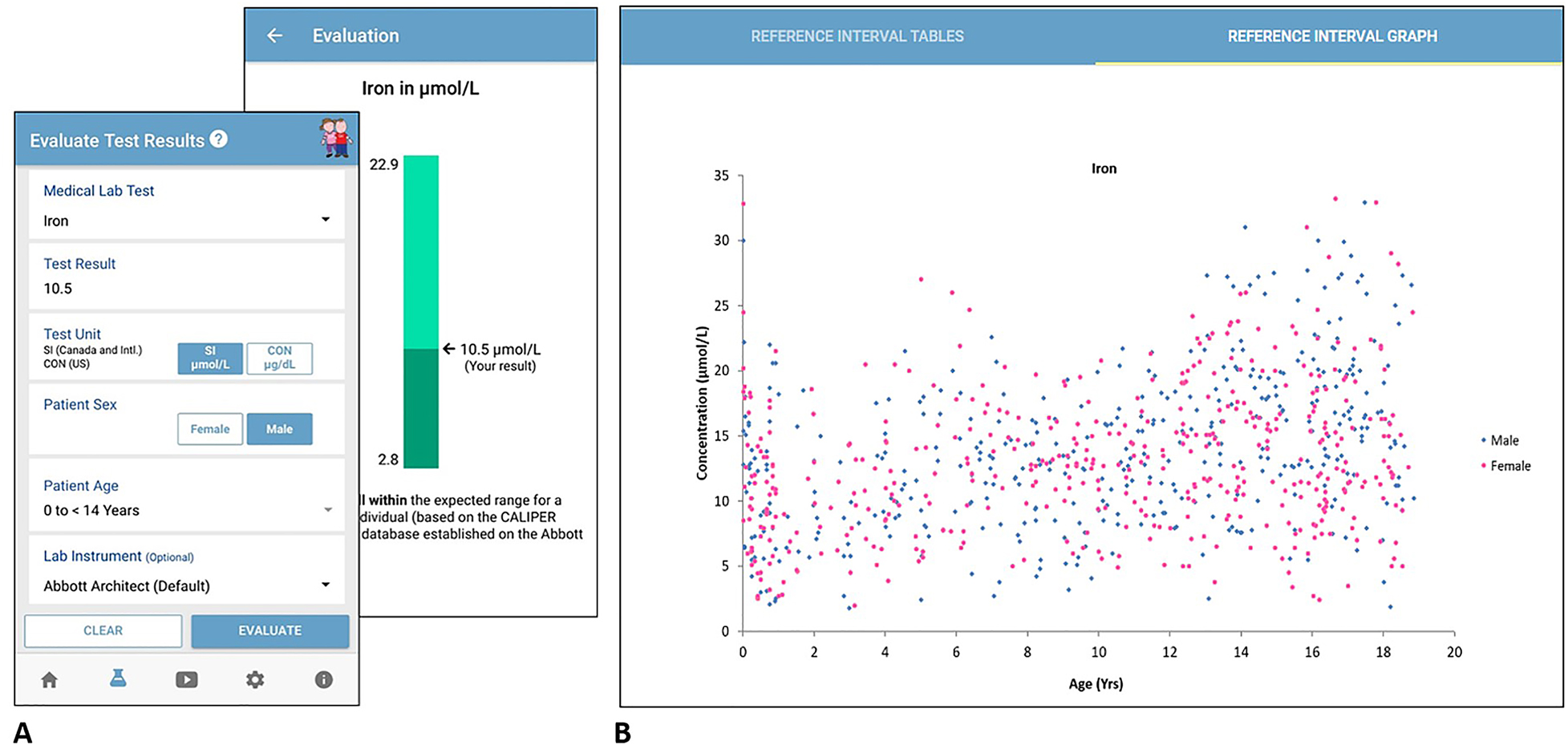

Featured article of the week: February 6–12:

"Electronic tools in clinical laboratory diagnostics: Key examples, limitations, and value in laboratory medicine"

Electronic tools in clinical laboratory diagnostics can assist laboratory professionals, clinicians, and patients in medical diagnostic management and laboratory test interpretation. With increasing implementation of electronic health records (EHRs) and laboratory information systems (LIS) worldwide, there is increasing demand for well-designed and evidence-based electronic resources. Both complex data-driven and simple interpretative electronic healthcare tools are currently available to improve the integration of clinical and laboratory information towards a more patient-centered approach to medicine. Several studies have reported positive clinical impact of electronic healthcare tool implementation in clinical laboratory diagnostics, including in the management of neonatal bilirubinemia, cardiac disease, and nutritional status ... (Full article...)


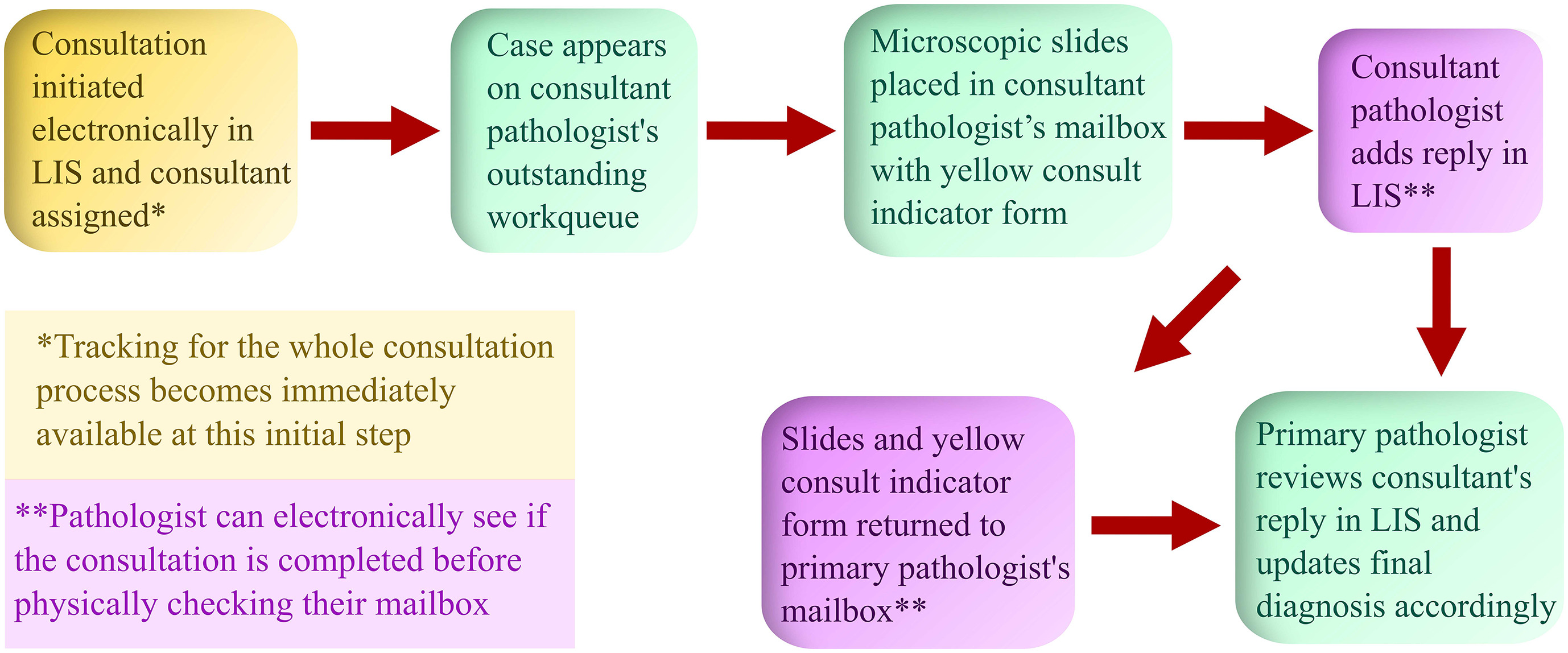

Featured article of the week: January 30–February 5:

"Anatomic pathology quality assurance: Developing an LIS-based tracking and documentation module for intradepartmental consultations"

An electronic intradepartmental consultation system for anatomic pathology (AP) was conceived and developed in the laboratory information system (LIS) of University of Iowa Hospitals and Clinics in 2019. Previously, all surgical pathology intradepartmental consultative activities were initiated and documented with paper forms, which were circulated with the pertinent microscopic slides and were eventually filed. In this study, we discuss the implementation and utilization of an electronic intradepartmental AP consultation system. Workflows and procedures were developed to organize intradepartmental surgical pathology consultations from the beginning to the end point of the consultative activities entirely using a paperless system that resided in the LIS ... (Full article...)


Featured article of the week: January 23–29:

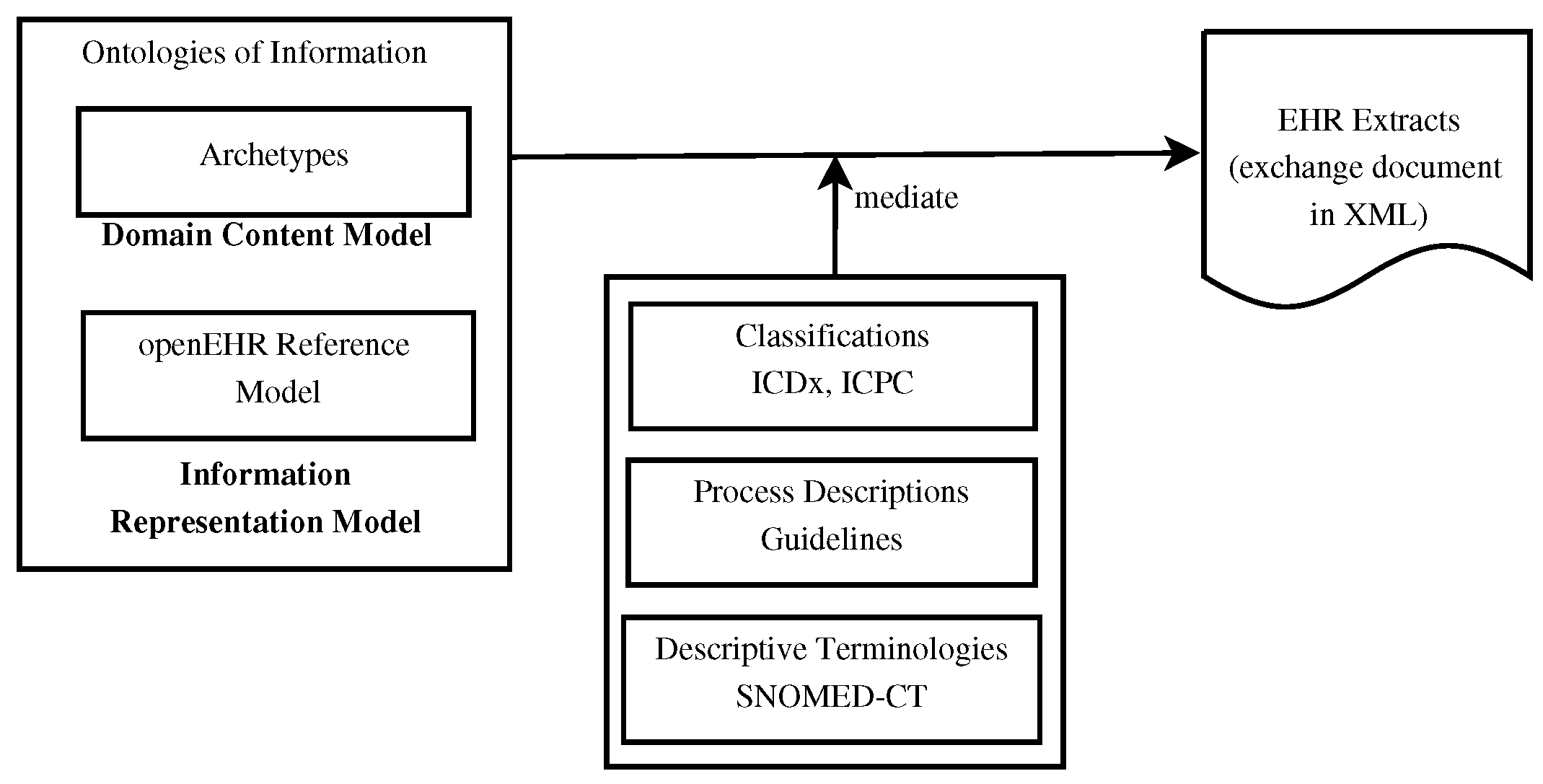

"Using knowledge graph structures for semantic interoperability in electronic health records data exchanges"

Information sharing across medical institutions is restricted to information exchange between specific partners. The lifelong electronic health record (EHR) structure and content require standardization efforts. Existing standards such as openEHR, Health Level 7 (HL7), and ISO/EN 13606 aim to achieve data independence along with semantic interoperability. This study aims to discover knowledge representation to achieve semantic health data exchange. openEHR and ISO/EN 13606 use archetype-based technology for semantic interoperability. The HL7 Clinical Document Architecture is on its way to adopting this through HL7 templates. Archetypes are the basis for knowledge-based systems, as these are means to define clinical knowledge. The paper examines a set of formalisms for the suitability of describing, representing, and reasoning about archetypes ... (Full article...)


Featured article of the week: January 16–22:

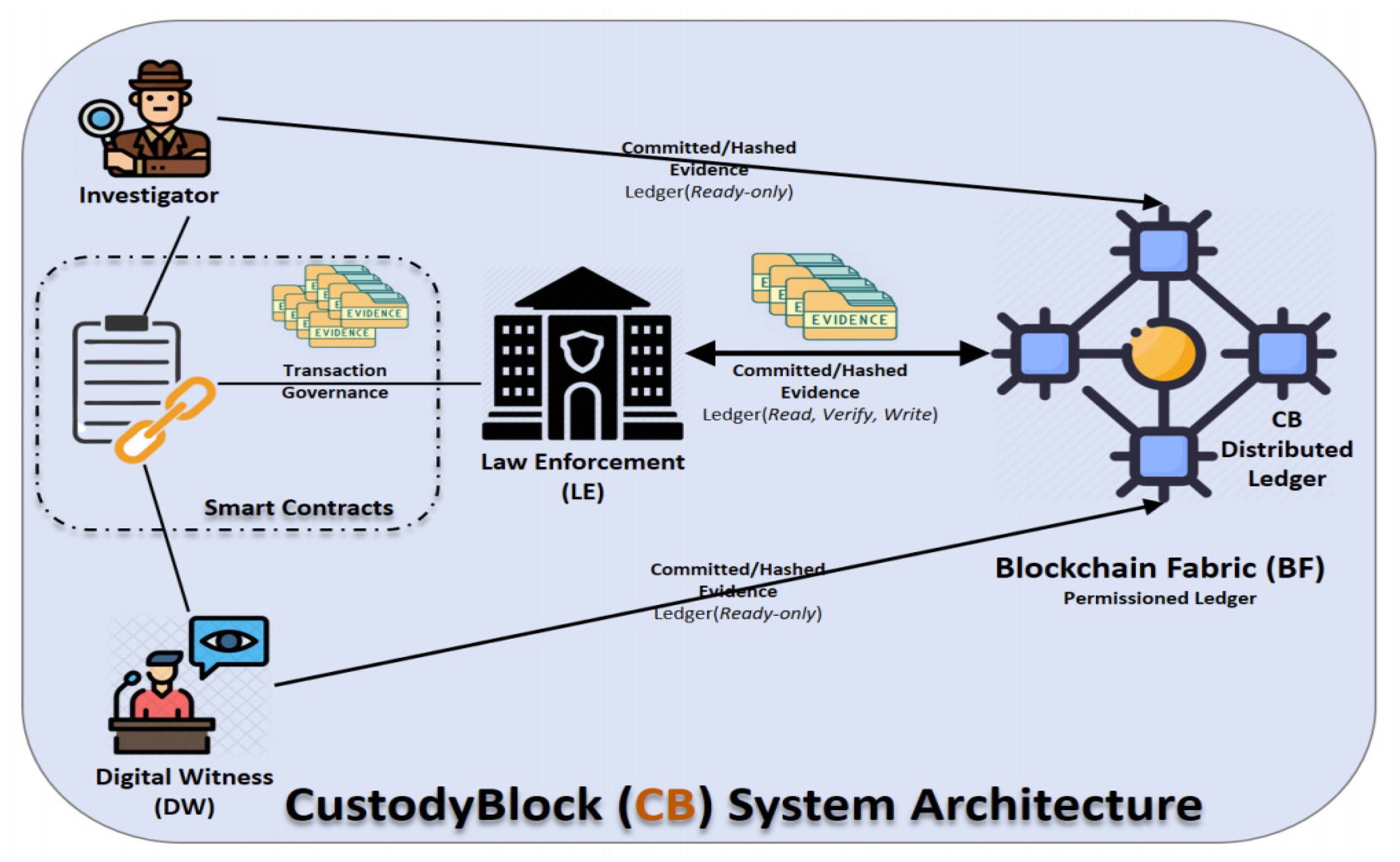

"CustodyBlock: A distributed chain of custody evidence framework"

With the increasing number of cybercrimes, the digital forensics team has no choice but to implement more robust and resilient evidence-handling mechanisms. The capturing of digital evidence, which is a tangible and probative piece of information that can be presented in court and used in trial, is challenging due to its volatility and the possible effects of improper handling procedures. When computer systems get compromised, digital forensics comes into play to analyze, discover, extract, and preserve all relevant evidence. Therefore, it is imperative to maintain efficient evidence management to guarantee the credibility and admissibility of digital evidence in a court of law. A critical component of this process is to utilize an adequate chain of custody (CoC) approach to preserve the evidence in its original state from compromise and/or contamination ... (Full article...)


Featured article of the week: January 09–15:

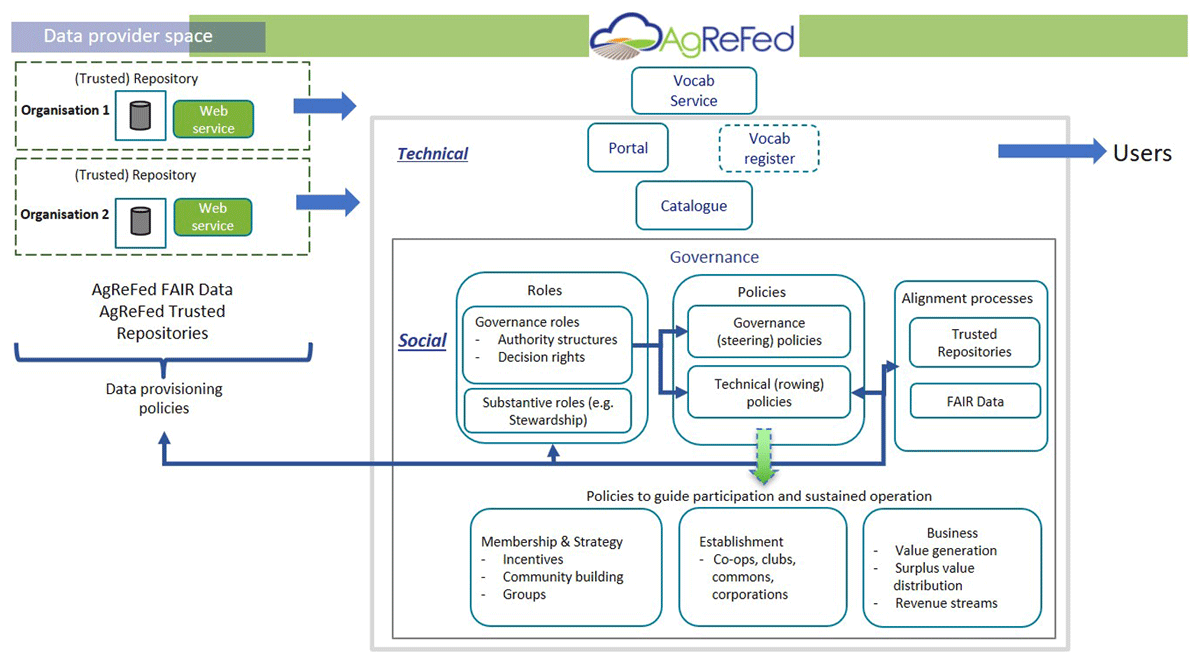

"Development and governance of FAIR thresholds for a data federation"

The FAIR (findable, accessible, interoperable, and re-usable) principles and practice recommendations provide high-level guidance and recommendations that are not research-domain specific in nature. There remains a gap in practice at the data provider and domain scientist level, demonstrating how the FAIR principles can be applied beyond a set of generalist guidelines to meet the needs of a specific domain community. We present our insights developing FAIR thresholds in a domain-specific context for self-governance by a community (in this case, agricultural research). "Minimum thresholds" for FAIR data are required to align expectations for data delivered from providers’ distributed data stores through a community-governed federation (the Agricultural Research Federation, AgReFed) ... (Full article...)


Featured article of the week: January 02–08:

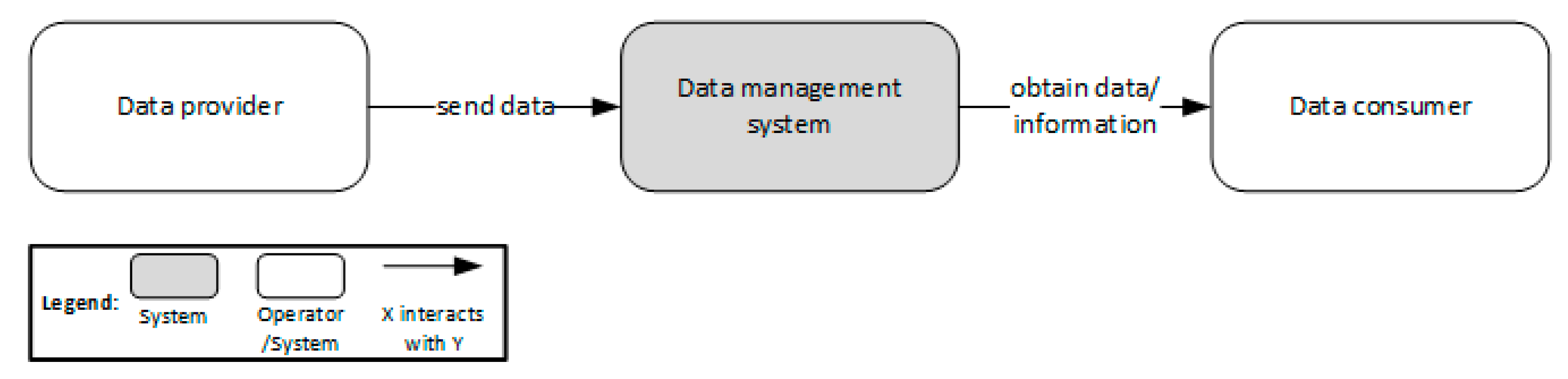

"Design of a data management reference architecture for sustainable agriculture"

Effective and efficient data management is crucial for smart farming and precision agriculture. To realize operational efficiency, full automation, and high productivity in agricultural systems, different kinds of data are collected from operational systems using different sensors, stored in different systems, and processed using advanced techniques, such as machine learning and deep learning. Due to the complexity of data management operations, a data management reference architecture is required. While there are different initiatives to design data management reference architectures, a data management reference architecture for sustainable agriculture is missing. In this study, we follow domain scoping, domain modeling, and reference architecture design stages to design the reference architecture for sustainable agriculture. Four case studies were performed to demonstrate the applicability of the reference architecture. This study shows that the proposed data management reference architecture is practical and effective for sustainable agriculture ... (Full article...)
